NVIDIA Turing & Ampere CUDA & OptiX Rendering Performance

Ahead of 2021, we wanted to take one last look at rendering performance with the current range of NVIDIA GPUs and the renderers that target them. Those include some staples: Arnold, KeyShot, Octane, Redshift, and V-Ray, with a little Blender on the side. Many have also been tested with RTX on and off, so read on to see how well each of these eleven GPUs fares.

As 2020 comes to a close, we can reflect on an interesting year for GPUs, even though the vast majority of the action came in the final four months of the year. When NVIDIA launched its Ampere generation in September, we saw incredible performance from the get-go, with clear advantages over Turing.

Following that launch article, we greeted both the RTX 3090 and RTX 3070 launches the same way. Then came a number of updates: to Windows, to some of the renderers we test with, and to our test platform itself. We recently moved from our Intel Core X rig to an AMD Ryzen Threadripper one for workstation GPU testing, partly because the Core X machine is aging, and partly because the thought of using a 32-core CPU for our heterogeneous testing has been pretty tempting. Not to mention, only AMD platforms currently offer PCIe 4.0 support. For those wondering why we didn’t go with the 64-core Threadripper, it was so that we could keep clock speeds high for workloads that don’t scale well with high core counts.

Since our look at rendering performance with the RTX 3070 in October, we’ve moved to the new test rig, so all of the GPUs were retested. The switch also coincided with upgrades to KeyShot 10 and Blender 2.91. On the topic of Blender, we’re going to take a modest look at its performance here; if you want a much more in-depth look, check out our dedicated article.

Note that this article caters to those who use a Windows renderer developed exclusively for NVIDIA GPUs, as there are (unfortunately) many that lack support for Radeon. We currently believe that Arnold, KeyShot, and V-Ray won’t support Radeon for quite some time (if at all), but we’re hopeful to see Radeon-supported Octane and Redshift for Windows in the new year, as both currently offer betas for macOS.

For a refresher on NVIDIA’s current lineup, reference the table below:

NVIDIA’s GeForce Gaming GPU Lineup

| GPU | Cores | Base MHz | Peak FP32 | Memory | Bandwidth | TDP | SRP |
|---|---|---|---|---|---|---|---|
| RTX 3090 | 10,496 | 1,400 | 35.6 TFLOPS | 24GB GDDR6X | 936 GB/s | 350W | $1,499 |
| RTX 3080 | 8,704 | 1,440 | 29.7 TFLOPS | 10GB GDDR6X | 760 GB/s | 320W | $699 |
| RTX 3070 | 5,888 | 1,500 | 20.4 TFLOPS | 8GB GDDR6 | 448 GB/s | 220W | $499 |
| RTX 3060 Ti | 4,864 | 1,410 | 16.2 TFLOPS | 8GB GDDR6 | 448 GB/s | 200W | $399 |
| TITAN RTX | 4,608 | 1,350 | 16.3 TFLOPS | 24GB GDDR6 | 672 GB/s | 280W | $2,499 |
| RTX 2080 Ti | 4,352 | 1,350 | 13.4 TFLOPS | 11GB GDDR6 | 616 GB/s | 250W | $1,199 |
| RTX 2080 S | 3,072 | 1,650 | 11.1 TFLOPS | 8GB GDDR6 | 496 GB/s | 250W | $699 |
| RTX 2070 S | 2,560 | 1,605 | 9.1 TFLOPS | 8GB GDDR6 | 448 GB/s | 215W | $499 |
| RTX 2060 S | 2,176 | 1,470 | 7.2 TFLOPS | 8GB GDDR6 | 448 GB/s | 175W | $399 |
| RTX 2060 | 1,920 | 1,365 | 6.4 TFLOPS | 6GB GDDR6 | 336 GB/s | 160W | $299 |
| GTX 1660 Ti | 1,536 | 1,500 | 5.5 TFLOPS | 6GB GDDR6 | 288 GB/s | 120W | $279 |
| GTX 1660 S | 1,408 | 1,530 | 5.0 TFLOPS | 6GB GDDR6 | 336 GB/s | 125W | $229 |
| GTX 1660 | 1,408 | 1,530 | 5.0 TFLOPS | 6GB GDDR5 | 192 GB/s | 120W | $219 |
| GTX 1650 S | 1,280 | 1,530 | 4.4 TFLOPS | 4GB GDDR6 | 192 GB/s | 100W | $159 |
| GTX 1650 | 896 | 1,485 | 3.0 TFLOPS | 4GB GDDR5 | 128 GB/s | 75W | $149 |

Notes: GTX 16 series, RTX 20 series, and TITAN RTX = Turing; RTX 30 series = Ampere. Peak FP32 figures are calculated at boost clock.
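The Peak FP32 and Bandwidth columns can be reproduced with simple arithmetic. The sketch below assumes one fused multiply-add (two FLOPs) per CUDA core per clock, and uses NVIDIA’s published boost clocks (1,695MHz for the RTX 3090, 1,665MHz for the RTX 3060 Ti), which are not listed in the table. The bus widths and memory data rates (384-bit at 19.5Gbps, 256-bit at 14Gbps) likewise come from NVIDIA’s spec sheets rather than this article, and the function names are hypothetical.

```python
def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Theoretical FP32 throughput, assuming one FMA (2 FLOPs) per core per clock."""
    flops_per_second = cuda_cores * 2 * boost_mhz * 1e6
    return flops_per_second / 1e12


def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width times per-pin data rate, converted to bytes."""
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # RTX 3090: 10,496 cores at a 1,695MHz boost clock; 384-bit bus at 19.5Gbps
    print(f"RTX 3090: {peak_fp32_tflops(10_496, 1_695):.1f} TFLOPS, "
          f"{memory_bandwidth_gbs(384, 19.5):.0f} GB/s")
    # RTX 3060 Ti: 4,864 cores at a 1,665MHz boost clock; 256-bit bus at 14Gbps
    print(f"RTX 3060 Ti: {peak_fp32_tflops(4_864, 1_665):.1f} TFLOPS, "
          f"{memory_bandwidth_gbs(256, 14):.0f} GB/s")
```

These are theoretical ceilings; actual rendering throughput also depends on the renderer, memory capacity, and whether RT cores are put to use.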

NVIDIA’s Turing architecture brought forth GeForce’s first RT and Tensor cores, and while supporting software took a while to arrive, we can honestly say the wait was worth it. Our first look at OptiX ray tracing acceleration in Blender made it clear that it could deliver jaw-dropping improvements. The fact that the likes of Autodesk made OptiX the default in its Arnold renderer is enough to tell you what vendors think of it.

What we’ve seen in previous articles, most recently our in-depth look at Blender 2.91, is that the biggest concern any creator will have with the latest crop of GPUs is memory capacity. With its 10GB of memory, the RTX 3080 is what we’d call the best go-to GPU if you can swing its price tag. While it offers only 2GB more than the RTX 3060 Ti and RTX 3070, that makes it a little more “future-proof”, if such a thing exists.

We’ve seen certain occasions where the extra frame buffer seemed to help the RTX 3080 pull even further ahead of the RTX 3070 than it would have with only 8GB. It would have been great if the RTX 3080 had shipped with 12GB, but what can you do? The rumor mill claims that higher-capacity versions of NVIDIA’s Ampere cards are coming, so we may have to revisit this performance look soon enough.

Here’s an overview of the workstation used in testing for this article:

Techgage Workstation Test System

| Component | Details |
|---|---|
| Processor | AMD Ryzen Threadripper 3970X (32-core; 3.7GHz) |
| Motherboard | ASUS Zenith II Extreme Alpha |
| Memory | Corsair Vengeance RGB Pro (CMW32GX4M4C3200C16) 4x8GB; DDR4-3200 16-18-18 |
| Graphics | NVIDIA RTX 3090 (24GB); NVIDIA RTX 3080 (10GB); NVIDIA RTX 3070 (8GB); NVIDIA RTX 3060 Ti (8GB); NVIDIA TITAN RTX (24GB); NVIDIA GeForce RTX 2080 Ti (11GB); NVIDIA GeForce RTX 2080 SUPER (8GB); NVIDIA GeForce RTX 2070 SUPER (8GB); NVIDIA GeForce RTX 2060 SUPER (8GB); NVIDIA GeForce RTX 2060 (6GB); NVIDIA GeForce GTX 1660 Ti (6GB). All tested with GeForce 457.09. |
| Audio | Onboard |
| Storage | AMD OS: Samsung 500GB SSD (SATA); NVIDIA OS: Samsung 500GB SSD (SATA) |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | NZXT H710i Mid-Tower |
| Cooling | NZXT Kraken X63 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 19042 (20H2) |


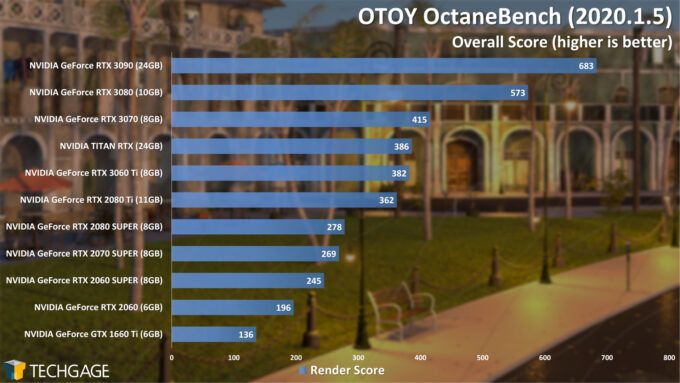

OTOY OctaneRender 2020

OTOY’s OctaneRender seems to exhibit the strongest GPU performance scaling across the collection we test with, and fortunately, that scaling carries over to OctaneBench. If you have an NVIDIA GPU, you can download and run the benchmark in a matter of minutes if you want to compare your performance to ours.

Ahead of the Ampere launch, NVIDIA said that the RTX 3070 would beat out the last-gen RTX 2080 Ti, and in many cases, that’s true. In gaming, the two cards can trade blows, but in rendering, Ampere cards show great strength even before RT cores get involved. All of the results in the above chart are with RTX on, with the exception of the 1660 Ti, which lacks dedicated RT cores.

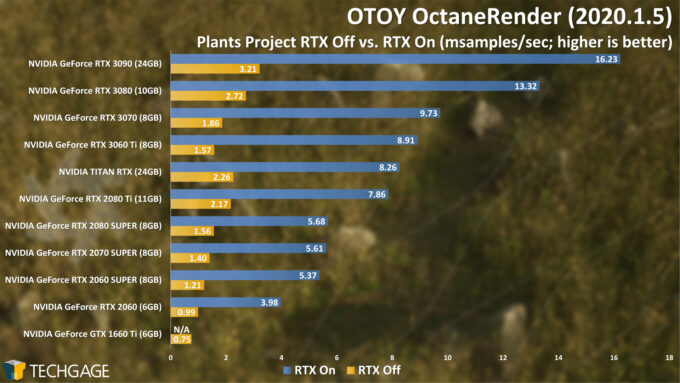

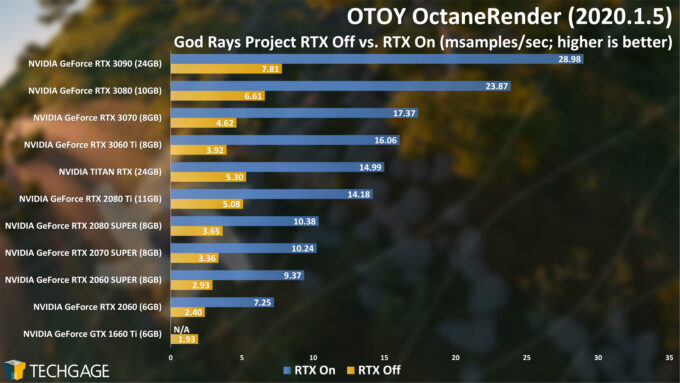

Let’s see how things transition to the real OctaneRender:

OTOY must really care about benchmarks, because even though OctaneBench serves its purpose well, the company still goes to the trouble of including a separate RTX Off / On test inside OctaneRender itself. This allows anyone to benchmark their own projects when upgrading from one GPU to another, to easily understand what kind of performance they’re gaining.

Overall, these OctaneRender tests scale quite well with OctaneBench, and so far, the performance delivered by NVIDIA’s latest GPUs will undoubtedly be alluring to anyone who owns anything less than a top-end GPU of the previous GeForce generation. It is quite something to see a $399 Ampere GeForce outperform the previous generation’s $999 Turing-based 2080 Ti.

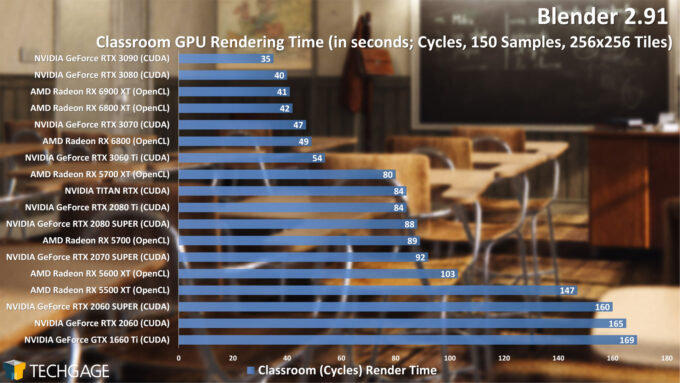

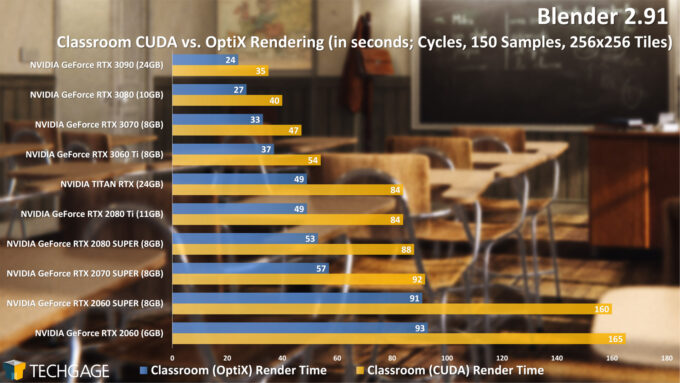

Blender 2.91

As mentioned earlier, we have a dedicated Blender 2.91 performance article if you want to explore broader results, all of which include AMD’s current crop of graphics cards (except for OptiX testing, naturally). In a basic CUDA and OpenCL rendering test, the Classroom project really highlights the improved ray tracing performance of AMD’s RDNA2-equipped GPUs, with the RX 6800 XT placing just behind the RTX 3080.

The Classroom project is an odd one, in that it always seems to favor Radeon more than we’d expect given how other projects scale. Even the Radeon VII would place extremely well in this chart, although we’d never suggest it as an ideal creator card today. Either way, what hurts AMD at this point is NVIDIA’s OptiX ray tracing acceleration, which we’ll see next:

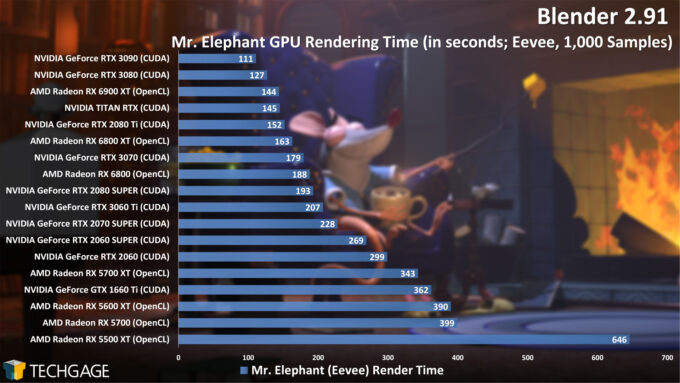

Any renderer that takes advantage of NVIDIA’s RT cores will make it obvious why it’s smart to use OptiX over the standard CUDA option, but that doesn’t mean the OptiX API will always offer feature parity with its CUDA equivalent. That said, we’re now at the point where it shouldn’t matter unless you are converting older projects. To AMD’s benefit, OptiX acceleration doesn’t apply to the Eevee render engine:

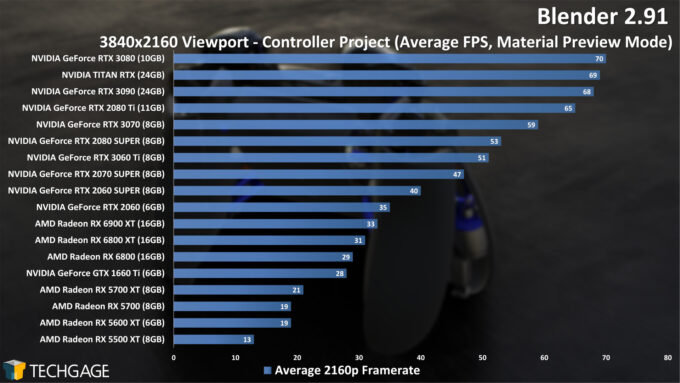

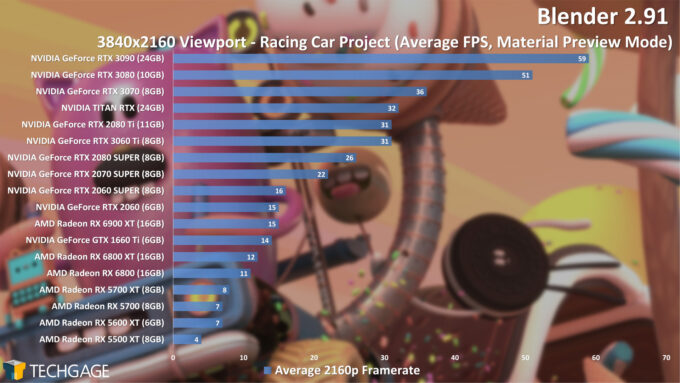

Even with AMD’s stronger Eevee performance, NVIDIA still comes out ahead in the dollar-for-dollar comparison, and to be honest, given the problems we found in Blender 2.91 with Radeon GPUs, we couldn’t possibly recommend anything other than GeForce right now. Because of performance issues we encountered on Radeon, the above-linked 2.91 deep-dive also explores the Wireframe viewport mode; here, we’ll just take a look at Material Preview mode:

In the Controller project, which is the simpler of the two tested, FPS scales reliably up until the top, where the fastest GPUs begin tripping over each other. This issue is more pronounced at lower resolutions, where the GPU isn’t stressed as hard and the CPU struggles to keep up. In the more complex Racing Car project, we see the expected scaling at the top end.
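A simplified way to picture this behavior is to treat each viewport frame as gated by whichever of the CPU or GPU takes longer. The sketch below uses hypothetical per-frame timings, not measurements from our testing, and deliberately ignores how Blender actually schedules its work; `viewport_fps` is an illustrative name.

```python
def viewport_fps(cpu_ms: float, gpu_ms: float) -> float:
    """FPS when CPU and GPU work overlap, so the slower stage sets the pace."""
    frame_ms = max(cpu_ms, gpu_ms)
    return 1000.0 / frame_ms


# Hypothetical timings: the CPU needs 8ms to prepare each frame.
CPU_MS = 8.0
for label, gpu_ms in [("slower GPU", 10.0), ("fast GPU", 5.0), ("fastest GPU", 4.0)]:
    print(f"{label}: {viewport_fps(CPU_MS, gpu_ms):.0f} FPS")
```

Once GPU time drops below CPU time, a faster GPU no longer raises the frame rate, which is why the top cards bunch together; a heavier scene pushes GPU time back above CPU time and restores the expected scaling.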

We should stress that while Radeon GPUs suffer badly in these tests, they perform better in 2.90, just not better than NVIDIA. Ultimately, NVIDIA will reign supreme in Blender any way you look at it, but AMD GPUs are capable of a lot more in 2.90. We’re not sure why the regression occurred, but this is the current reality of Blender if you keep up to date.

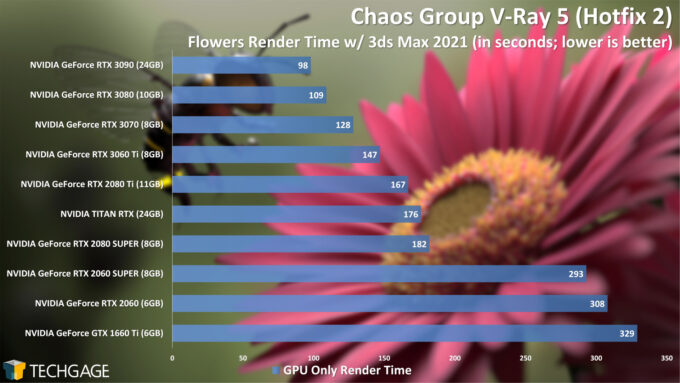

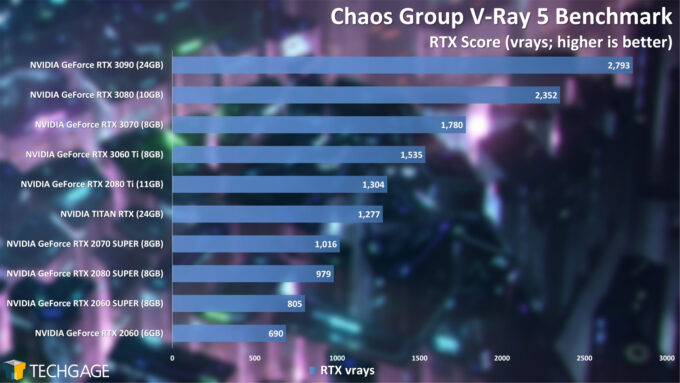

Chaos Group V-Ray 5

Note that due to performance anomalies with the RTX 2070 SUPER, we had to drop it from the above charts, but it will return in the future (our V-Ray license is in limbo, so we couldn’t simply retest). As we’ve seen a few times up to this point, NVIDIA’s latest crop of GPUs performs really strongly compared to the previous generation. Naturally, things only get better once OptiX is enabled:

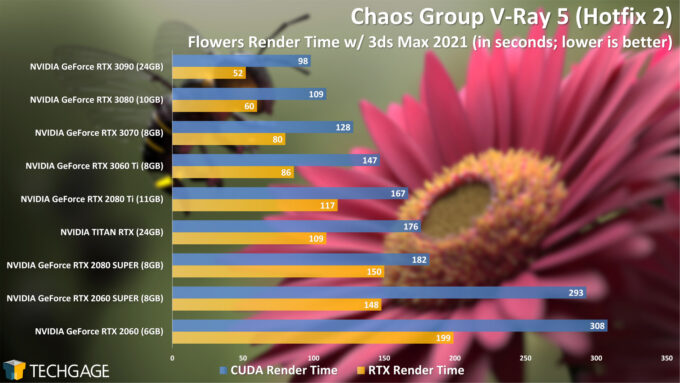

The gains with ray tracing acceleration are pretty incredible here, with the RTX 3090 dropping its render time from 98 to 52 seconds – roughly half. Even the last-gen GPUs fare extremely well here, with the 2060 SUPER also cutting its render time in half with OptiX (RTX) enabled. In most cases, OptiX makes it unnecessary to consider adding the CPU into the mix, but let’s check out that performance anyway:
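To put “roughly half” in more precise terms, the RTX 3090’s V-Ray render times of 98 seconds (CUDA) and 52 seconds (OptiX) can be expressed as a speedup factor and as a percentage of time saved. The helper names below are illustrative, not part of any V-Ray tooling.

```python
def speedup(baseline_s: float, accelerated_s: float) -> float:
    """How many times faster the accelerated run is than the baseline."""
    return baseline_s / accelerated_s


def percent_time_saved(baseline_s: float, accelerated_s: float) -> float:
    """Share of the baseline render time eliminated by acceleration."""
    return (baseline_s - accelerated_s) / baseline_s * 100


# RTX 3090 in V-Ray 5: CUDA vs. OptiX (RTX) render times quoted above
cuda_s, optix_s = 98, 52
print(f"{speedup(cuda_s, optix_s):.2f}x faster, "
      f"{percent_time_saved(cuda_s, optix_s):.1f}% less time")
```

For these two numbers, that works out to roughly a 1.88x speedup, or about 47% less render time.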

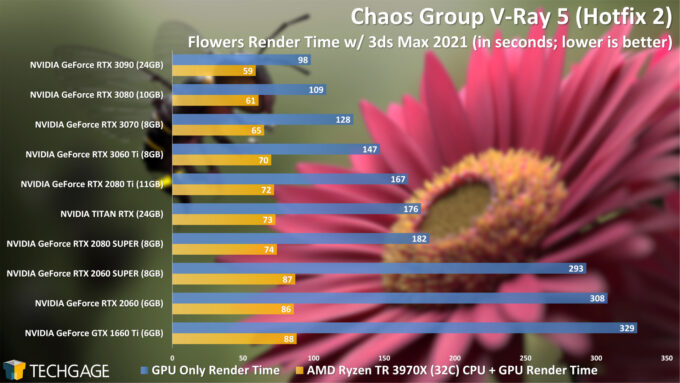

Bear in mind that our workstation PC is equipped with a 32-core processor, so the scaling seen here isn’t likely to match what you’ll see unless you happen to have the same CPU. This is an interesting chart, because the level of benefit really depends on the GPU you’re using. With OptiX, the RTX 3080 and RTX 3090 are faster on their own than when combined with the CPU using CUDA. The RTX 3070, on the other hand, is actually faster in heterogeneous mode than with OptiX.
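Why the benefit of heterogeneous rendering depends on the GPU can be sketched with an idealized throughput model: if each device renders a share of the image, their rates (renders per second) add, so the combined time is the reciprocal of the summed rates. This is a best case that ignores synchronization and scheduling overhead, and every timing below is hypothetical rather than taken from our charts.

```python
def combined_render_time(*device_times_s: float) -> float:
    """Ideal render time when devices split the work with no overhead.

    A device that finishes alone in t seconds renders at 1/t renders per
    second; when devices share a single render, their rates add.
    """
    total_rate = sum(1.0 / t for t in device_times_s)
    return 1.0 / total_rate


CPU_S = 200.0  # hypothetical time for the CPU to render the scene alone

# Fast GPU (hypothetical): OptiX alone (55s) beats the CUDA + CPU combination.
print(f"fast GPU:   CUDA+CPU {combined_render_time(100.0, CPU_S):.1f}s vs OptiX 55.0s")
# Slower GPU (hypothetical): CUDA + CPU beats OptiX alone (110s).
print(f"slower GPU: CUDA+CPU {combined_render_time(200.0, CPU_S):.1f}s vs OptiX 110.0s")
```

The faster the GPU, the smaller the share of work the CPU can take on, so a strong OptiX speedup on a top-end card can outweigh anything the CPU adds.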

Does any of this mean that you should ever choose a big CPU for V-Ray if you will have a big GPU? Not likely. If you need a many-core CPU for other purposes, that’s one thing, but adding a second GPU to the mix will show even greater performance gains than we see here. We even have examples of combining current and last-gen GPUs for V-Ray, in case you don’t want to get rid of your old GPU when upgrading to a new one.

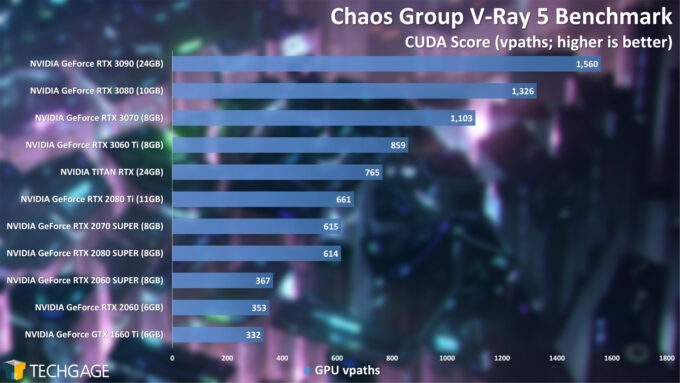

Since we published our last article like this one, Chaos Group has released an updated version of its standalone benchmark, based on the latest engine. That engine upgrade means RTX testing is now included:

While the CUDA and RTX scores are not comparable to one another (unlike in some other benchmarks, such as OctaneRender’s), we’ve seen proof in many of our other results of the gains that ray tracing acceleration can deliver. Unlike Arnold, which enables ray tracing acceleration by default, V-Ray requires you to switch to RTX in its options to take advantage of the speed boost.

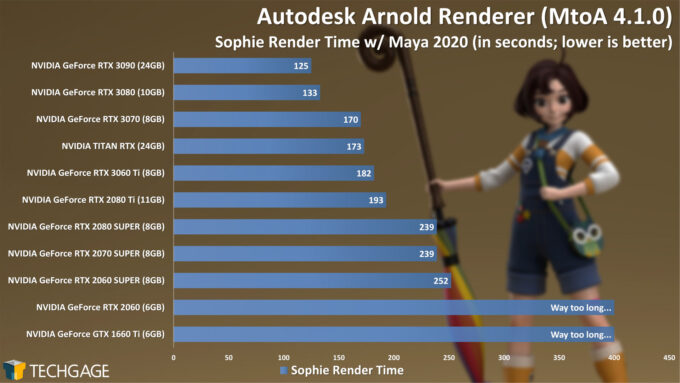

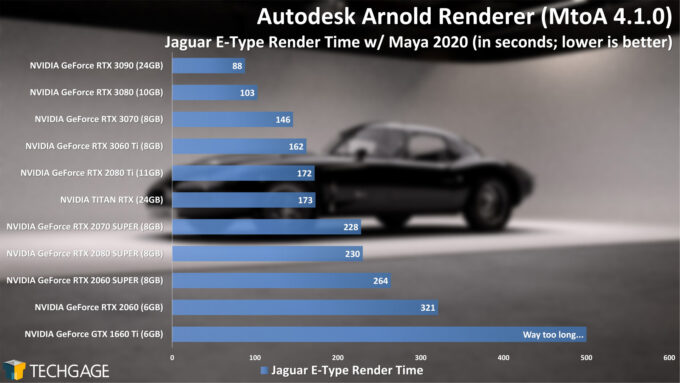

Autodesk Arnold 6

We’ve mentioned Arnold a couple of times in this article so far, so it’s about time we tackle GPU rendering performance with it, inside of Maya. As we’ve seen time and time again, GPUs with less than 8GB of memory are going to suffer in Arnold, at least based on the three projects we have in-house to test with (the two above are the only ones currently benchmarked). Interestingly, the RTX 2060 did manage to complete the E-Type render without fail, but the Sophie project bogs it down hard, along with the 1660 Ti.

At the top end of the stack, it’s nice to see that choosing the RTX 3080 over the RTX 3070 delivers a notable performance uplift. Any GPU up to the 2080 Ti will see a considerable performance improvement with the move to an Ampere GeForce, unless you’re moving from a 2080 Ti down to a 3060 Ti. Compared to that 2080 Ti, the RTX 3080 outperforms it greatly, and retails for much less this generation (though that’s hard to appreciate with prices inflated everywhere).

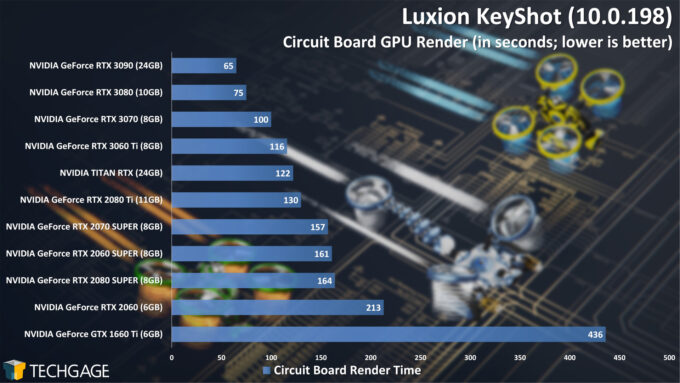

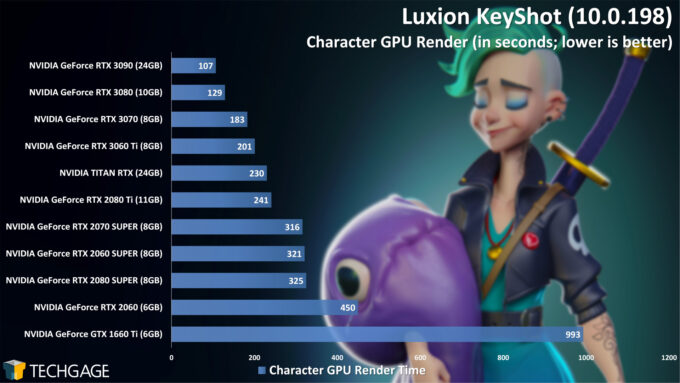

Luxion KeyShot 10

KeyShot is one of the software suites that received a major update since our last dedicated look at NVIDIA-bound renderers, so the numbers here cannot be compared to those of the past. From the get-go, we can see continued issues with lower-end cards, as anything with 6GB or less will begin to struggle in modern projects.

The fact that KeyShot can take advantage of RT acceleration is really obvious at the bottom of the chart, where the 1660 Ti falls flat on its face, taking more than twice as long as the RTX 2060 to render the project. If you look back at our CUDA-only test with the Blender Classroom project, you’ll see that those two GPUs performed similarly, but that’s far from the case here in KeyShot.

Again, NVIDIA’s top two GPUs do well to separate themselves from the rest of the pack here, while the likes of the RTX 3060 Ti and RTX 3070 deliver some seriously strong performance for the dollar.

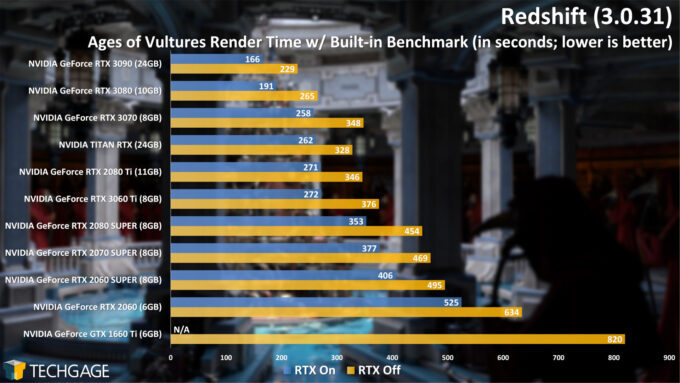

Maxon Redshift 3

We had a lot of fun with expiring licenses while putting this article together, so for this performance look at Redshift, we’re sticking to the most recent demo version to get our testing done. Fortunately, even the demo lets you test with RTX on and off, which is one of our favorite ways to look at rendering performance. Interestingly, the gains with RTX on are not quite as grand in Redshift as they are in other renderers, but that doesn’t change the fact that you’ll want to keep it enabled.

Similar to V-Ray, you need to manually enable RTX in Redshift’s options. We would expect RTX to become the default in more renderers next year as compatibility becomes less and less of an issue.

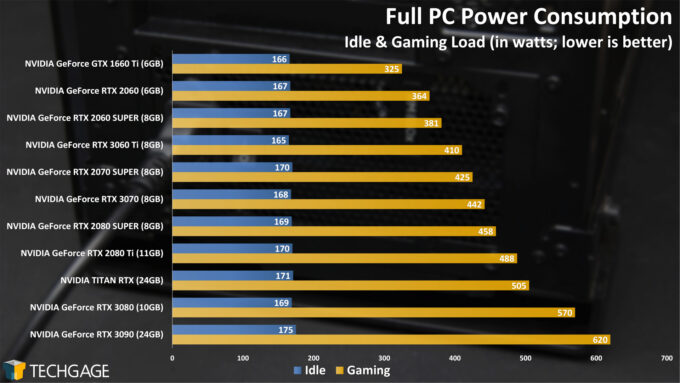

Power Consumption

Up until this point, we somehow hadn’t tested the power consumption of our Threadripper machine, at least at idle. In the move from our Intel Core X rig to this Ryzen Threadripper one, the idle wattage jumped quite a bit, from ~90W to 170W on average. Some Threadripper platforms seem to idle at much lower wattage, but no change we made to the auto-OC options in the EFI would make our idle draw budge much (we got it as low as 150W without enabling eco modes).

Nonetheless, while our idle results are much different than before, the scaling at peak operation hasn’t seemed to change much at all. The RTX 3080 and 3090 draw quite a bit more power than the rest of the lot, which would suck if not for the fact that the performance uptick justifies it.

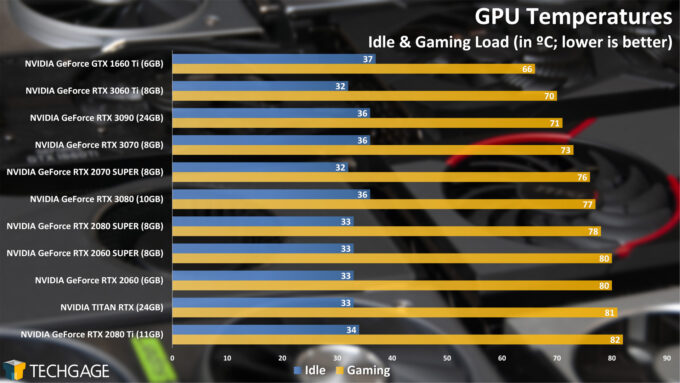

As for the temperatures, all of these GPUs aside from the 1660 Ti are based on “reference” designs, so if you have a vendor card that sports a beefier cooler, you’ll likely see improvement. NVIDIA’s latest coolers seem pretty efficient to us, though, especially compared to the previous-gen cards.

With these Ampere cards running so quietly, we’ve really noticed how often the Turing FE cards ramp their fans up during use, something we just don’t hear with the newer cards and coolers. Even though the RTX 3090 is the fastest card of NVIDIA’s bunch, its larger-than-life cooler keeps its peak temperature lower than even the RTX 3070’s.

Final Thoughts

Some conclusions are easier to write than others, and when NVIDIA’s latest-gen GPUs offer such a notable performance improvement over the previous-gen offerings, it makes summarizing what we just saw pretty simple. If you’re buying a new GPU for creator work that heavily involves rendering, then you’ll definitely want to seek out one of the new RTX 3000-series cards. Your biggest concern should be whether or not you can expect an 8GB frame buffer to be a limitation for your work anytime soon.

Because most renderers scale so well with multiple GPUs, you also have the option to pair your current GPU with another that you find at a good price, assuming that your current GPU is performant to begin with. The biggest problem right now is scarcity, making dual-GPU even less of an option whether you’re trying to buy an old or new GPU. If you don’t mind going the dissimilar GPU route, our article pairing the RTX 3080 with every last-gen GPU shows what you could expect to gain.

In a world where the pricing NVIDIA says is what you actually pay, both the $399 RTX 3060 Ti and $499 RTX 3070 strike us as a seriously good value for the performance they offer. The 3060 Ti, at $399, manages to beat out the 2080 Ti in many tests, and we can honestly say it’s not often we see a $400 GPU beat out a $1,000 GPU of last-gen quite like this. Naturally, the 8GB frame buffer would be the biggest concern there, but how much of a concern it actually is will be dictated by how memory-hungry your work is.
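One way to make this value comparison concrete is to normalize throughput by price. The sketch below uses the $399 and $999 prices quoted in this article, but the render times are hypothetical (not our measured results), chosen so the cheaper card is slightly faster, as it was in many of our tests. The function name is illustrative.

```python
def renders_per_hour_per_100_usd(render_time_s: float, price_usd: float) -> float:
    """Throughput per dollar: completed renders per hour for every $100 spent."""
    renders_per_hour = 3600.0 / render_time_s
    return renders_per_hour / price_usd * 100.0


# Hypothetical render times for the same scene; prices as quoted in this article.
cards = {"RTX 3060 Ti": (60.0, 399.0), "RTX 2080 Ti": (65.0, 999.0)}
for name, (seconds, price) in cards.items():
    value = renders_per_hour_per_100_usd(seconds, price)
    print(f"{name}: {value:.1f} renders/hour per $100")
```

When the two cards are this close in speed, the price gap dominates the result, which is why a $399 card edging out a $1,000 one is such a lopsided win.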

Right now, chances are good that you’ll be fine with 8GB for a while longer, but if you do have the means to upgrade to the $699 RTX 3080, you’d be gaining not just some extra memory, but a healthy amount of performance at the same time. As mentioned earlier in the article, NVIDIA is rumored to release a few more GeForces in the months ahead with different memory options, so the overall picture might change before the spring hits.

If you are left with a question unanswered, please leave a comment below!
