The Almost Titan: NVIDIA GeForce GTX 780 Review

When Titan released in February, it seemed likely that NVIDIA’s GeForce 700 series would be held off for some time. Well, with today’s launch of the company’s mini-Titan – ahem, GTX 780, we’ve been proven wrong. Compared to the GTX 680, it offers a lot of +50%s, but is it worth its $649 price tag?

Page 1 – Introduction

Around this time every year, a slew of action-packed movies hit the cinema and help make summer taste just a little bit sweeter. This year, it’s Fast & Furious 6 that’s on my radar. The speed… the fun, the hot women. Actually, that reminds me of something: a new top-end graphics card, like NVIDIA’s $649 GeForce GTX 780.

Is it fast? You bet. Is it fun? Of course. Does it get you hot women? In my limited experience, I can assuredly say that no, it does not. But for those lonely nights, there’s gaming, and gaming is better with a top-end graphics card.

You’ll have to excuse me – I’m a bit wired. I’ve been testing the GTX 780 and other select cards for the past week, and as usual, time hasn’t been kind. Mere days prior to receiving NVIDIA’s latest, our 30″ testing monitor perished, meaning our usual 2560×1600 testing was out. That’s a major issue when we’re dealing with ~$500 GPUs.

As a direct result, this article is structured a little differently than usual. Instead of benchmarking at our usual three resolutions of 1680×1050, 1920×1080 and 2560×1600, we’re using 1920×1080 and two multi-monitor resolutions: 4098×768 and 5760×1080. Multi-monitor testing is something we’ve wanted to implement for a while, but I was hoping to hold off on it for a couple more weeks. In the future, we’ll benchmark 4800×900 instead of 4098×768, as I realized during testing that it’s a more suitable resolution.

*takes another sip of coffee*

Back in February, NVIDIA released its GeForce GTX Titan, the monster $1,000 GPU based on the hardware that powers the massive Titan supercomputer. Because its design differed so much from NVIDIA’s 600 series, Titan seemed to effectively belong to the 700 series, despite its non-numerical name. While that’s not true as far as the official product line goes, the GTX 780 that NVIDIA is introducing today is built on the same GK110 GPU as Titan. In effect, then, the GTX 780 is Titan with fewer cores and half the GDDR5.

| NVIDIA GeForce Series | Cores | Core MHz | Memory | Mem MHz | Mem Bus | TDP |
|---|---|---|---|---|---|---|
| GeForce GTX Titan | 2688 | 837 | 6144MB | 6008 | 384-bit | 250W |
| GeForce GTX 780 | 2304 | 863 | 3072MB | 6008 | 384-bit | 250W |
| GeForce GTX 690 | 3072 | 915 | 2x 2048MB | 6008 | 256-bit | 300W |
| GeForce GTX 680 | 1536 | 1006 | 2048MB | 6008 | 256-bit | 195W |
| GeForce GTX 670 | 1344 | 915 | 2048MB | 6008 | 256-bit | 170W |
| GeForce GTX 660 Ti | 1344 | 915 | 2048MB | 6008 | 192-bit | 150W |
| GeForce GTX 660 | 960 | 980 | 2048MB | 6000 | 192-bit | 140W |
| GeForce GTX 650 Ti BOOST | 768 | 980 | 2048MB | 6008 | 192-bit | 134W |
| GeForce GTX 650 Ti | 768 | 925 | 1024MB | 5400 | 128-bit | 110W |
| GeForce GTX 650 | 384 | 1058 | 1024MB | 5000 | 128-bit | 64W |
| GeForce GT 640 | 384 | 900 | 2048MB | 5000 | 128-bit | 65W |
| GeForce GT 630 | 96 | 810 | 1024MB | 3200 | 128-bit | 65W |
| GeForce GT 620 | 96 | 700 | 1024MB | 1800 | 64-bit | 49W |
| GeForce GT 610 | 48 | 810 | 1024MB | 1800 | 64-bit | 29W |

Compared to the GTX 680, the GTX 780 has 50% more cores, 50% more memory, a 50% wider memory bus and a 1,000% better-looking GPU cooler. Another increase is one we all hate to see, but it had to happen: the TDP has been raised from 195W to 250W.
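
To double-check those percentages against the spec table, a few lines of Python are enough. This is just a sketch: the dictionary keys are names chosen for illustration, and the values are copied straight from the GTX 680 and GTX 780 rows above.

```python
# Spec values copied from the table above (GTX 680 vs. GTX 780).
GTX_680 = {"cores": 1536, "memory_mb": 2048, "bus_bits": 256, "tdp_w": 195}
GTX_780 = {"cores": 2304, "memory_mb": 3072, "bus_bits": 384, "tdp_w": 250}


def percent_increase(old: float, new: float) -> float:
    """Return the relative increase from old to new, in percent."""
    return (new - old) / old * 100.0


def compare(old: dict, new: dict) -> dict:
    """Percent increase (rounded to 0.1) for every spec both cards share."""
    return {key: round(percent_increase(old[key], new[key]), 1) for key in old}


if __name__ == "__main__":
    for spec, pct in compare(GTX_680, GTX_780).items():
        print(f"{spec:>10}: +{pct}%")
```

Cores, memory and bus width all land on exactly +50%, while the TDP rises by about 28%, a smaller jump than the hardware it feeds.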

Because of the tight embargo timing I’m facing at the moment (which isn’t NVIDIA’s fault, mind you), I’m going to speed through some of these GTX 700 features more than I’d like. In the near future, I’ll dedicate a quick article to GeForce Experience, as I believe it deserves one (and there are a couple of cool things coming).

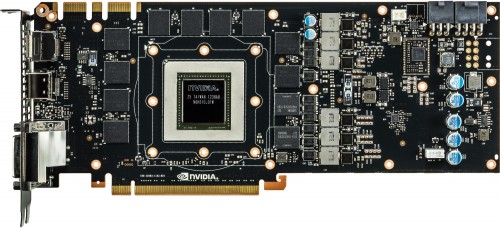

Fast, and heavy. No, that’s not plastic.

As seen in the above image, the GTX 780 uses the exact same GPU cooler found on Titan. While I’m indifferent to most coolers or GPU chassis in general, this one is about as badass as it gets. It’s designed to be as efficient as possible, keeping the GPU performing at its best without exceeding 80°C. Like Titan, the GTX 780 includes GPU Boost 2.0, which, unlike the original iteration, adjusts the GPU clock based on temperature rather than power draw. NVIDIA would likely agree that this design should have existed from the start, as heat is a killer of electronics. Out of the box, your GTX 780 should never exceed 80°C, as we’ll see in our temperature results later.
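
To make the temperature-target idea more concrete, here is a minimal sketch of a governor that nudges the core clock up while the GPU sits below 80°C and backs off once it reaches that target. This is a hypothetical illustration, not NVIDIA’s actual GPU Boost 2.0 logic: the 13 MHz step and the 1000 MHz ceiling are made-up values, and only the 80°C target and the 863 MHz base clock come from this article.

```python
# Hypothetical sketch of a temperature-targeted clock governor.
# This is NOT NVIDIA's GPU Boost 2.0 algorithm, only an illustration.
TEMP_TARGET_C = 80      # temperature target cited in the article
BASE_CLOCK_MHZ = 863    # GTX 780 base clock from the spec table
MAX_CLOCK_MHZ = 1000    # hypothetical ceiling, chosen for illustration
STEP_MHZ = 13           # hypothetical step size


def next_clock(current_mhz: int, temp_c: float) -> int:
    """Pick the next core clock given the current GPU temperature.

    Below the target, step the clock up; at or above it, step down.
    The result is clamped to the [BASE_CLOCK_MHZ, MAX_CLOCK_MHZ] range.
    """
    if temp_c < TEMP_TARGET_C:
        proposed = current_mhz + STEP_MHZ
    else:
        proposed = current_mhz - STEP_MHZ
    return max(BASE_CLOCK_MHZ, min(MAX_CLOCK_MHZ, proposed))


if __name__ == "__main__":
    clock = BASE_CLOCK_MHZ
    # Made-up temperature readings, purely for illustration.
    for temp in [65, 70, 74, 78, 81, 83, 79]:
        clock = next_clock(clock, temp)
        print(f"{temp} C -> {clock} MHz")
```

The point of keying off temperature is that the clock only climbs while there is thermal headroom, so the card settles around its target instead of running hotter just because its power budget allows it.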

More cores are nice, but what really sets the GTX 780 apart from the GTX 680 is its 384-bit memory bus (6 x 64-bit controllers), which gives games a bit more room to exercise, further helped by the increase in memory from 2GB to 3GB. While a memory bump seems minor, it’s actually rather important, and I think it should have happened earlier; AMD has led the way in this regard. Although I haven’t yet found proof of a game that needs more than 2GB of graphics memory (not even Metro 2033), there’s little doubt that we’ll reach that threshold soon.
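
The wider bus translates directly into bandwidth. As a rough sketch, theoretical peak bandwidth is the bus width in bytes multiplied by the memory’s effective transfer rate. The code assumes that the table’s “Mem MHz” column is the effective GDDR5 data rate, and it uses 1 GB = 10^9 bytes.

```python
# Peak bandwidth = (bus width in bytes) x (effective transfers per second).
# Assumes the table's "Mem MHz" figure is the effective GDDR5 data rate.
CONTROLLERS = 6
CONTROLLER_WIDTH_BITS = 64
gtx780_bus = CONTROLLERS * CONTROLLER_WIDTH_BITS  # six 64-bit controllers = 384-bit


def peak_bandwidth_gbs(bus_width_bits: int, effective_mhz: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = effective_mhz * 1e6
    return bytes_per_transfer * transfers_per_second / 1e9


gtx780 = peak_bandwidth_gbs(gtx780_bus, 6008)
gtx680 = peak_bandwidth_gbs(256, 6008)

if __name__ == "__main__":
    print(f"GTX 780: {gtx780:.1f} GB/s, GTX 680: {gtx680:.1f} GB/s, "
          f"ratio {gtx780 / gtx680:.2f}x")
```

With both cards running their memory at the same 6008 MHz, the bandwidth gain comes entirely from the bus: roughly 288 GB/s for the GTX 780 versus about 192 GB/s for the GTX 680.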

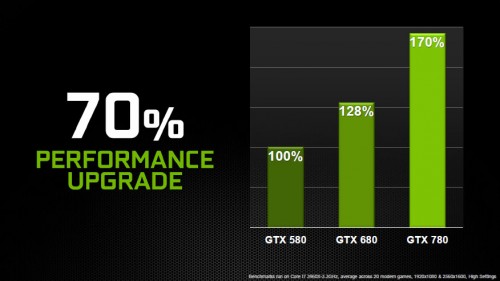

In the table above, NVIDIA showcases the performance improvements that its top-end GPUs have experienced since the GTX 580. Simply put, NVIDIA says that the GTX 780 is 70% more capable than the GTX 580, and 33% more capable than last year’s GTX 680.

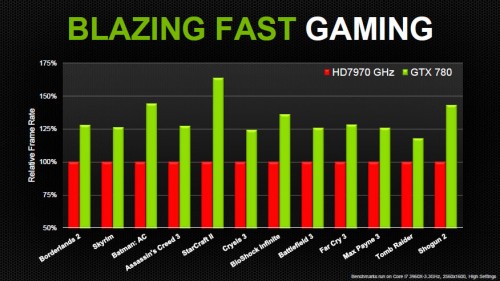

Compared to the HD 7970 GHz, NVIDIA’s figures show considerable gains as well.

Taking into consideration the games we use for our testing in this review, I’d say that these gains seem fairly realistic, though as we’ll see later, there will be some occasions where a game favors AMD’s GPU, allowing it to catch up to, or in one or two cases even surpass, the GTX 780.

Given that the GTX 780 is similar in design to Titan, there’s not much else to talk about, although it’s worth noting that NVIDIA continues to tout excellent scaling for multi-GPU users. In its testing, ~+55% is the minimum improvement you could expect (based on Batman: Arkham City), while +75% looks to be the norm (at least in the games NVIDIA tests… which are of course going to favor its cards).
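
Scaling figures like these are normally expressed as the multi-GPU frame rate relative to a single card. A minimal sketch, using made-up frame rates rather than NVIDIA’s actual results:

```python
def scaling_percent(single_fps: float, multi_fps: float) -> float:
    """Multi-GPU scaling: how much faster the multi-GPU setup is than one card, in percent."""
    if single_fps <= 0:
        raise ValueError("single-GPU frame rate must be positive")
    return (multi_fps / single_fps - 1.0) * 100.0


if __name__ == "__main__":
    # Hypothetical frame rates, chosen only to illustrate +55% and +75% scaling.
    print(f"{scaling_percent(40, 62):.0f}%")
    print(f"{scaling_percent(40, 70):.0f}%")
```

On this measure, +100% would be perfect scaling for two cards, so NVIDIA’s +75% norm means the second card contributes about three-quarters of its standalone performance.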

For those who like to see a card’s naughty bits, don’t let me be the one to deprive you:

As mentioned earlier, this article is all over the place because of our testing monitor’s demise and the extra time it cost us. I couldn’t test all of the games I wanted to (namely Far Cry 3, Crysis 3 and Hitman: Absolution), but in addition to our usual six, I was able to test Borderlands 2, Metro: Last Light and BioShock Infinite.

Each of the games tested for this article was run at 1080p for single-monitor testing, and at 4098×768 and 5760×1080 for multi-monitor testing. Although megapixel counts shouldn’t be compared directly between multi-monitor and single-monitor resolutions, 1080p clocks in at about 2 megapixels, 4098×768 at 3 megapixels, and 5760×1080 at 6 megapixels. Because AMD’s Catalyst Control Center doesn’t allow custom resolutions, I couldn’t include the HD 7970 GHz in our 4098×768 testing.
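
The megapixel figures are easy to reproduce. The sketch below treats each multi-monitor resolution as three panels side by side. The panel sizes of 1366×768 and 1600×900 are an assumption inferred from the totals (4098 = 3 × 1366, 4800 = 3 × 1600), not something stated above.

```python
# Resolutions discussed in this article. Multi-monitor widths are written as
# three panels side by side; the 1366x768 and 1600x900 panel sizes are an
# assumption inferred from the totals.
RESOLUTIONS = {
    "1920x1080 (single monitor)": (1920, 1080),
    "4098x768 (3 monitors)": (3 * 1366, 768),
    "4800x900 (3 monitors, planned)": (3 * 1600, 900),
    "5760x1080 (3 monitors)": (3 * 1920, 1080),
}


def megapixels(width: int, height: int) -> float:
    """Pixels per frame, in millions."""
    return width * height / 1_000_000


if __name__ == "__main__":
    base = megapixels(*RESOLUTIONS["1920x1080 (single monitor)"])
    for name, (w, h) in RESOLUTIONS.items():
        mp = megapixels(w, h)
        print(f"{name:<32} {mp:5.2f} MP  ({mp / base:.2f}x 1080p)")
```

By this count, 5760×1080 pushes exactly three times as many pixels per frame as 1080p, while 4098×768 comes in at roughly 1.5×. As noted above, raw pixel count is only a rough gauge of how demanding a multi-monitor setup will be.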

Since it’s been months since I last tested the GTX 680, I re-benchmarked it for this article using the current 320.14 beta GeForce drivers. But there’s a twist: I also left the old results using the 306.38 driver intact. This is meant to show the scaling that can come from driver improvements alone, which I’ll talk a bit about in our conclusion.

With that all tackled, let’s move on to our testing methodology and then get right into our results, starting with Battlefield 3.
