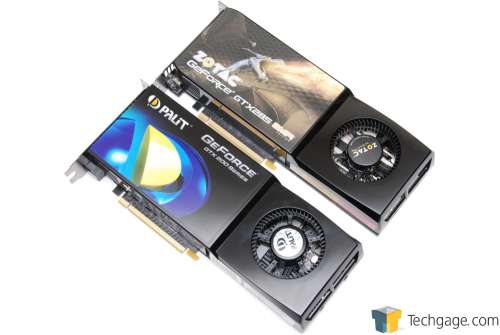

Zotac GeForce GTX 285 & GTX 295

When NVIDIA released their GTX 285 and 295 earlier this month, they successfully reclaimed the performance crown for both single and dual-GPU graphics cards. We’re finally putting both of these models through our grueling testing, in both single card and SLI configurations, to see just how much value can be had when compared to previous offerings.

Page 1 – Introduction, Closer Look

You may recall that last summer, ATI came out of nowhere with their HD 4000-series and impressed anyone who managed to get their hands on one. It also surprised the heck out of NVIDIA, who expected their GTX 200-series to reign supreme for more than just a month. Since ATI’s launch, we haven’t seen much from NVIDIA in the way of extreme competition, but that changed earlier this month with the launch of their single-GPU GTX 285 and dual-GPU, single-card GTX 295.

Although we had a preview for the GTX 295 prepared for posting earlier this month, we were forced to toss it in the trash bin due to a few simple reasons. The main reason was that I didn’t like how the numbers added up, and there seemed to be a few inconsistencies. I wasn’t sure whether or not this was due to NVIDIA’s pre-beta driver that we were using for testing, and I’m still unsure, but I wasn’t confident enough in our results to publish anything.

Once I returned from CES, I decided to start fresh. Rather than simply re-test the cards, I decided the time was right to change our testing machine completely and move to Core i7 as our new baseline. The main reason for making the move right then and there was simple: the ASUS Rampage II Extreme allows both SLI and CrossFireX configurations, and since we had two GTX 285s and two GTX 295s here, it seemed logical to complete all testing on the same motherboard.

It seemed like a great idea at the time, but I didn’t expect to see all of the complications that were awaiting me during testing. None of those were related to either of these GPUs, however, I’m happy to report. So while this article was meant to be posted two weeks ago, on the upside, we finally made our shift to making Core i7 the base for our GPU benchmarking machine, and we also have SLI results for both the GTX 285 and GTX 295 for you today.

Closer Look at NVIDIA’s GTX 285 & GTX 295

As I was mentioning above, before my whiny tangent, NVIDIA was looking to finally release some follow-up GPUs to their top-end GTX 280, first released last summer, and a release is exactly what we saw earlier this month. Although it might sound a bit odd to release two follow-up graphics cards for a single model, it’s the only way to look at things, given that both of the new cards are extremely powerful, and become NVIDIA’s highest-end offerings.

When we first got a glimpse of NVIDIA’s GTX 280 card last summer, we were blown away. Despite the fact that ATI followed up so quickly with their HD 4000-series, the GTX 280 was still drool-worthy in its own right, and although it didn’t look too appealing months after launch, it was still the fastest single-GPU card on the market, and remained as such until NVIDIA’s own follow-ups earlier this month.

The biggest problem on NVIDIA’s radar over the past half-year was ATI’s dual-GPU Radeon HD 4870 X2, a card so powerful that it simply left everything else in its dust. For us enthusiasts, the launch of that card was great, but what wasn’t great is that we knew it would take a while before NVIDIA could possibly respond to such a threat, given that their GTX 200 GPU core was far too large, even though it was built on a modest 65nm node.

Long story short, it took NVIDIA a while, but they were finally able to revise their chip and re-release it on a 55nm process. This allowed more clocking headroom (which is why the GTX 285 is clocked much higher than the GTX 280), lower power draw and improved temperatures at an equal clock. The other benefit was that, thanks to the die shrink, two GPUs could finally be enclosed inside a single graphics card, and as a result, the GTX 295 was born.

| Model | Core MHz | Shader MHz | Mem MHz | Memory | Memory Bus | Stream Proc. |
| --- | --- | --- | --- | --- | --- | --- |
| GTX 295 | 576 | 1242 | 1000 | 1792MB | 448-bit | 480 |
| GTX 285 | 648 | 1476 | 1242 | 1GB | 512-bit | 240 |
| GTX 280 | 602 | 1296 | 1107 | 1GB | 512-bit | 240 |
| GTX 260/216 | 576 | 1242 | 999 | 896MB | 448-bit | 216 |
| GTX 260 | 576 | 1242 | 999 | 896MB | 448-bit | 192 |
| 9800 GX2 | 600 | 1500 | 1000 | 1GB | 512-bit | 256 |
| 9800 GTX+ | 738 | 1836 | 1100 | 512MB | 256-bit | 128 |
| 9800 GTX | 675 | 1688 | 1100 | 512MB | 256-bit | 128 |
| 9800 GT | 600 | 1500 | 900 | 512MB | 256-bit | 112 |
| 9600 GT | 650 | 1625 | 900 | 512MB | 256-bit | 64 |
| 9600 GSO | 550 | 1375 | 800 | 384MB | 192-bit | 96 |

The major change on both of the new cards is the die shrink, but aside from that, the architecture remains the same. Thanks to the shrink, the GTX 285 enjoys clock boosts all-around, while retaining the same number of stream (or CUDA) processors. The die shrink alone could have delivered modest gains in performance, but with generously boosted clocks, the GTX 280 should be little competition.
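To put the memory clock boost in perspective, here is a rough sketch of how the table's figures translate to theoretical peak memory bandwidth. It assumes the memory transfers data twice per clock (double data rate), which is consistent with the 2484MHz effective figure quoted for the GTX 285's 1242MHz memory clock. The function name `bandwidth_gb_s` is my own, purely for illustration, and these are paper numbers rather than measured results.

```python
# Specs copied from the table above: (memory clock in MHz, bus width in bits).
SPECS = {
    "GTX 285": (1242, 512),
    "GTX 280": (1107, 512),
}

def bandwidth_gb_s(mem_mhz, bus_bits, transfers_per_clock=2):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10^9 bytes).

    Assumes double-data-rate memory, i.e. two transfers per clock.
    """
    bytes_per_transfer = bus_bits / 8
    return mem_mhz * 1e6 * transfers_per_clock * bytes_per_transfer / 1e9

for model, (mem_mhz, bus_bits) in SPECS.items():
    print(f"{model}: {bandwidth_gb_s(mem_mhz, bus_bits):.1f} GB/s")
```

Under those assumptions, the GTX 285 works out to roughly 159 GB/s against roughly 142 GB/s for the GTX 280, a gain that comes entirely from the memory clock since both cards share the same 512-bit bus.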

The GTX 295 is far more unusual, though, as it’s NVIDIA’s first dual-GPU card based on their GTX 200-series. Its uniqueness goes beyond that, because although you might expect it to essentially be two GTX 280s or GTX 285s put together, it’s more of a hybrid between the GTX 260/216 and GTX 280/285. Each of its GPUs runs at essentially the same frequencies as the GTX 260/216, but bumps its processor count up to match the GTX 280/285 (240 per GPU, for 480 in total). Interesting mix-matching, to say the least.

One interesting development I’ve noticed with technology is that while our products were becoming ever faster, they were also shrinking. Die shrinks played a role in this, but take a look now at the likes of Intel’s Core i7. Core 2 CPUs were modestly-sized, but then Core i7 comes along and almost doubles the volume. The same is going on with graphics cards. I thought the HD 4870 X2 was one heavy card, but the GTX 295 puts it to shame, likely thanks to its extra PCB and slightly larger GPUs.

NVIDIA’s GTX 295 & ATI’s HD 4870 X2

One thing’s for certain: when you hold a GTX 295 in your hand, you really feel like you have a well-designed product. It’s incredibly solid, and I’m fairly confident that dropping it on concrete wouldn’t do much harm (though I don’t suggest anyone put credence in anything I say). It’s easily the sturdiest card I’ve ever touched, but it’s also the heaviest.

Aside from its beefiness, the GTX 295 shares the same power connection configuration as the HD 4870 X2. To run one, you’ll need a power supply with an 8-pin PCI-E connector, along with the standard 6-pin. For a two-card configuration, as common sense would suggest, you’d want two of each.

NVIDIA’s GTX 295 & ATI’s HD 4870 X2

Most, if not all, launch GTX 295 cards will feature a reference cooler. Every single one of those will be identical, including the Zotac model we are using for our testing here. Deciding on which brand to go with will be a personal choice, and things like free games or warranties may matter most, so just keep an eye out. We’ll take a brief look at Zotac’s inclusions in a moment.

NVIDIA’s GTX 295 Face and Back

Like the GTX 295, most launch GTX 285s will also feature a reference cooler. I do believe that we’ll be seeing custom coolers on this card far sooner than on the GTX 295, however, since the latter is much more difficult to cool efficiently, and as a result, would require far more testing prior to release. The GTX 285, being a single-GPU card, will be much easier to apply a custom cooler to, but sadly, no current GTX 280 third-party offering will likely fit the GTX 285, due to the screw holes being placed differently.

NVIDIA’s GTX 285 (Top) & GTX 280 (Bottom)

As you can see in the above photos, we’ll be taking a look specifically at Zotac’s offerings today. Although both cards follow the reference design to a T, the GTX 285 is the company’s AMP! edition, which means it’s pre-overclocked. That card bumps its Core clock to 702MHz (from 648MHz), its Shader clock to 1512MHz (from 1476MHz) and its Memory clock to 2592MHz (from 2484MHz). Overall, these are all healthy boosts, and we can expect to see that reflected in our results.
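For a quick sense of scale, the AMP! edition's clock bumps can be expressed as percentages over reference. This is just a small sketch using the clocks quoted above; the helper name `percent_gain` is made up for illustration, and a clock increase won't necessarily translate into an equal performance increase.

```python
def percent_gain(stock_mhz, overclocked_mhz):
    """Return the clock increase over stock as a percentage."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100

# (reference clock, AMP! clock) in MHz, as quoted in the article.
AMP_CLOCKS = {
    "Core": (648, 702),
    "Shader": (1476, 1512),
    "Memory": (2484, 2592),
}

for name, (stock, amp) in AMP_CLOCKS.items():
    print(f"{name}: {stock} -> {amp} MHz (+{percent_gain(stock, amp):.1f}%)")
```

That works out to roughly 8.3% on the core, 2.4% on the shaders and 4.3% on the memory, so the core clock sees the largest bump of the three.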

The GTX 285 AMP! ships with a well-written quick-start guide, a driver installation CD, a copy of Futuremark’s 3DMark Vantage Advanced Edition, as well as a copy of the popular racer GRID. In addition, the respective power cable converter is also here (2x Molex to 1x PCI-E 6-Pin), along with an HDMI to DVI adapter and an audio cable, for HDCP-related content.

The GTX 295 card includes almost the same, except rather than an HDMI to DVI adapter, an actual HDMI cable is included. For those with a VGA monitor, a VGA to DVI adapter has also been included. This is all on top of the proper power converter cables (2x Molex to 1x PCI-E 6-Pin and also a 2x PCI-E 6-Pin to 1x PCI-E 8-Pin).

Overall, both the GTX 285 and GTX 295 are great-looking cards, but what matters is the performance. On the next page, we’ll take a brief look at our test system and testing methodology, which we highly recommend you check out if you haven’t before, as we do things a little differently than most. This is also our first GPU article based on our Core i7 test bed, and the first with a fresh selection of games, so it’s well worth a read. After that, we’ll get right to testing, beginning with Call of Duty: World at War.
