NVIDIA Announces PCIe-based Tesla P100, Expected To Ship By Year’s End

At its GPU Technology Conference held this past April, NVIDIA announced its most powerful GPU ever, the Tesla P100. We were told at that time that the card would push 5.3 TFLOPs of double-precision performance and 10.6 TFLOPs of single-precision. What most people (including me) didn’t realize at the time is that those specs were for the NVLink-specific version of the card. As it turns out, NVIDIA will also be offering a regular PCIe version, with a few specs scaled back slightly.

| | PCIe P100 | NVLink P100 |
|---|---|---|
| Double-precision | 4.7 TFLOPs | 5.3 TFLOPs |
| Single-precision | 9.3 TFLOPs | 10.6 TFLOPs |
| Half-precision | 18.7 TFLOPs | 21.2 TFLOPs |
| NVLink Bandwidth | N/A | 160 GB/s |
| PCIe Bandwidth | 32 GB/s | 32 GB/s |
| CoWoS HBM2 Capacity | 12GB / 16GB | 16GB |
| CoWoS HBM2 Bandwidth | 540GB/s / 720GB/s | 720GB/s |
| Page Migration Engine | Yes | Yes |
| ECC Memory | Yes | Yes |

While a bit slower, the PCIe version of the Tesla P100 would still be faster than the fastest desktop gaming GPU, the GeForce GTX 1080. That card boasts single-precision performance of 9 TFLOPs, and 257 GFLOPs of double-precision. That makes the PCIe-based P100 roughly 18x as fast as the GTX 1080 on the DP front, while the NVLink variant is over 20x as fast. Tesla has long been the series to look at for good DP performance, and nothing changes about that with the P100.
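For anyone who wants to check the double-precision comparison, here is a minimal Python sketch that works only from the peak figures quoted above. The variable and function names are ours, purely for illustration, and the numbers are theoretical peaks rather than measured results.

```python
# Peak double-precision throughput in GFLOPs, as quoted in this article.
GTX_1080_DP = 257.0
P100_DP = {"PCIe P100": 4700.0, "NVLink P100": 5300.0}


def speedup(card_gflops, baseline_gflops):
    """Return (times_as_fast, percent_faster) relative to the baseline."""
    ratio = card_gflops / baseline_gflops
    return ratio, (ratio - 1.0) * 100.0


results = {name: speedup(gflops, GTX_1080_DP) for name, gflops in P100_DP.items()}

for name, (ratio, pct) in results.items():
    print(f"{name}: {ratio:.1f}x as fast ({pct:.0f}% faster)")
# PCIe P100: 18.3x as fast (1729% faster)
# NVLink P100: 20.6x as fast (1962% faster)
```

Note the difference between "x as fast" and "% faster": a card that is 18.3x as fast is about 1,729% faster, not 1,830%.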

While the P100 isn’t expected to begin shipping until December, it might still become the first commercially available HBM2-equipped NVIDIA card on the market. That could change if the company decides to release an HBM2-equipped GeForce TITAN variant before then, but it’s far too early to take a guess at whether or not that could actually happen.

NVIDIA combines its P100 GPU and HBM2 memory with a CoWoS (chip on wafer on substrate) design that’s said to improve efficiency by up to 3x. The 12GB version of the PCIe-based Tesla P100 offers 540GB/s of memory bandwidth, while the 16GB variants of both cards boost that to 720GB/s. Both models also support ECC memory, as well as the Page Migration Engine, which aims to let developers focus on eking as much performance out of the GPU as possible rather than fussing over data management.
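The two memory configurations are easier to compare when bandwidth is normalized by capacity. The short Python sketch below uses only the capacity and bandwidth figures quoted above; the 4GB-per-stack figure is our assumption, not something NVIDIA states here, and the names are illustrative.

```python
# (capacity in GB, bandwidth in GB/s) as quoted for the Tesla P100.
CONFIGS = {"12GB": (12, 540), "16GB": (16, 720)}

# ASSUMPTION (not from the article): each HBM2 stack holds 4GB.
GB_PER_STACK = 4

summary = {}
for name, (capacity_gb, bandwidth_gbs) in CONFIGS.items():
    stacks = capacity_gb // GB_PER_STACK
    summary[name] = {
        "gbs_per_gb": bandwidth_gbs / capacity_gb,   # bandwidth per GB of memory
        "gbs_per_stack": bandwidth_gbs / stacks,     # only valid under the assumption
    }
    print(name, summary[name])
```

Both configurations come out at 45 GB/s per gigabyte, so the 12GB card’s lower bandwidth scales exactly with its smaller capacity. Under the 4GB-per-stack assumption, that is what you’d expect from a card with one fewer memory stack rather than slower memory.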

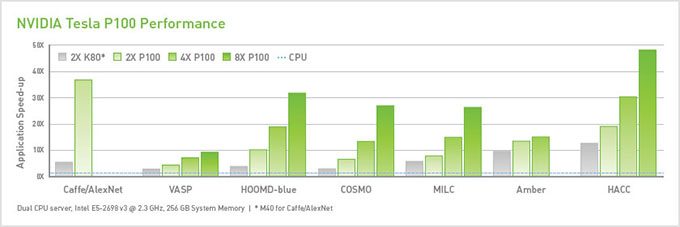

NVIDIA notes that the P100 natively supports over 410 GPU-accelerated applications, and takes care of 9 of the top 10 HPC applications (we’re not sure which one is missing). In the graph above, NVIDIA highlights the fact that the P100 isn’t only much faster than a CPU, it’s also much faster than previous-generation Teslas. A good example is the K80: 2x P100 proves up to roughly 36x faster than 2x K80. The same graph also shows that the P100 is designed for huge scaling, officially supporting up to 8 GPUs per server.

As mentioned earlier, NVIDIA expects this card to become available in Q4, which is likely the same time we’ll see the NVLink version achieve greater availability. As hinted at GTC in April, NVIDIA has already sent out P100s to various early adopters, but it will still be a little while before they begin shipping in huge numbers. Part of that could be because of the monstrous GPU used, but it’s more likely that HBM2 supply has even more to do with it.