NVIDIA’s Deep Learning Institute Aims To Train 100,000 Developers In 2017

Over the course of the last five years, deep learning has gone from a relatively unknown technology to a very important part of the future of computing. IDC’s research predicts that by 2020, a staggering 80% of applications built will implement deep learning in some way, a sign that we’re only at the cusp of its impact.

Put simply, deep learning is a branch of machine learning that uses many-layered artificial neural networks, loosely modeled on the brain, to learn from data. The goal is to piece together many different bits of information to deliver accurate answers to the user, achieve a higher rate of prediction success, and generally behave as an AI capable of solving complicated problems. Deep learning is being heavily used in finance, health, science, manufacturing, and even public safety (think autonomous vehicles).

At one of its GTCs a number of years ago, NVIDIA CEO Jen-Hsun Huang took to the stage to tell the world about the benefits of deep learning, and that message has been amplified at each GTC since. Deep learning is now a major focal point for NVIDIA (remember when it unveiled its second-gen TITAN X to a room full of researchers instead of press or fans?), because, as mentioned above, it’s quickly becoming a huge part of our lives. Have an Amazon Echo? You’re already benefiting from the fruits of deep learning.

At this year’s GTC, NVIDIA held a session on its Deep Learning Institute (DLI), which aims to train thousands of developers each year (100K is the goal for 2017) to make the best use of these technologies. What’s taught through DLI isn’t necessarily specific to NVIDIA’s hardware, but given the company’s focus on deep learning and its resources, its GPU product stack will be quite alluring to developers.

With deep learning accelerating, there’s a massive need for more developers, and that’s where DLI comes in. Across the globe, NVIDIA regularly hosts single-subject labs and more in-depth workshops to help get developers on the right track. Individual labs cost $30 apiece, while workshops can run into the thousands. NVIDIA says it charges only what it needs to, and that it roughly “breaks even”. Its real goal is to accelerate deep learning adoption, which should naturally boost the market success of its hardware. NVIDIA’s biggest DLI costs are tied to training and servers.

To give an idea of just how much demand there is for this kind of training, at a recent lab at IIT Bombay (pictured), some people lined up outside the doors 75 minutes in advance in the hope that seats at the sold-out event would free up.

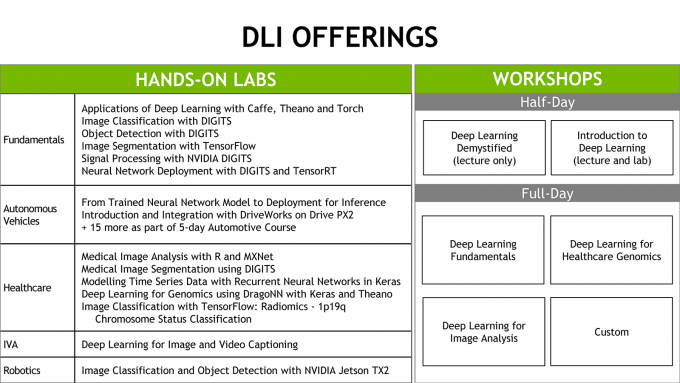

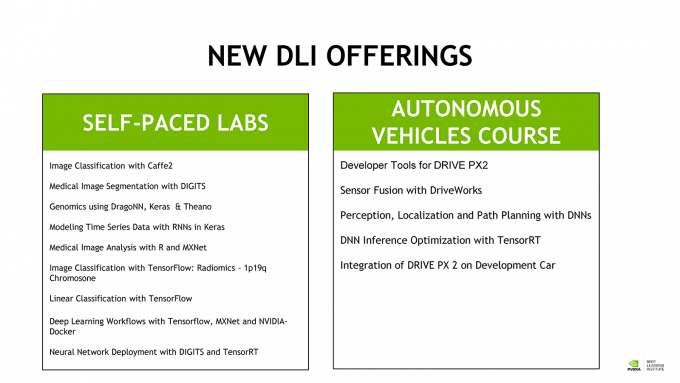

At the moment, DLI’s subjects revolve around five major deep learning frameworks: Google’s TensorFlow, Caffe2, Microsoft’s CNTK, MXNet, and PyTorch. Adobe, Alibaba Group, Temasek, IIT Bombay, and SAP are among DLI’s most notable customers, and NVIDIA hopes more organizations, large and small, will join in the future. For some subjects, NVIDIA also offers online courses taught by its trained instructors. Some subjects are far too complex to complete online, though, so don’t be surprised if yours doesn’t make the cut.

If you’re interested in learning more about NVIDIA’s Deep Learning Institute, the DLI website is your one-stop shop.