A Glimpse Into The Future Of GPUs: What We Took Away From NVIDIA’s GTC 2018

NVIDIA’s annual GPU Technology Conference took place last week in San Jose, California, and as always, the event has given us a lot to think about upon returning home. Some things stand out more than others, and those are what I’ll cover here: from GPUs and ray tracing to deep learning and artificial intelligence.

NVIDIA’s annual GPU Technology Conference, held in San Jose, California, near the company’s headquarters, is always a great time. It’s been getting better every single year, too, as long as you’re not a gamer hoping for a look at a new GeForce release (as the past few years have proven, GTC is not the place for that!).

As far as I’m aware, 2018’s event drew more attendees than ever, and it certainly felt like it. The keynote hall where Jensen Huang gave his much-anticipated annual outlook on GPU technology was packed from front to back.

The show floor was extremely busy as well. At this rate, the San Jose Convention Center won’t remain a suitable host forever, but that’s not a bad thing. If anything, it’s a testament to how much GPUs matter right now.

After any GTC, there are certain things that stand out more than the rest. This year was no exception, so for this quick article, I want to talk about (or maybe gush over) some of the cool technology featured at the event. Given our hardware focus, it’d be weird to start with anything other than a second look at the new Quadro GV100:

Quadro GV100 And DGX-2

Like the Tesla V100 and TITAN V, the GV100 sports 5,120 CUDA cores and 640 Tensor cores. Whereas the TITAN V’s memory bandwidth hovers around 650GB/s, the GV100 comes much closer to the Tesla V100’s 900GB/s, at 870GB/s. Performance-wise, the card is completely uncapped, delivering 29.6 TFLOPS half-precision, 14.8 TFLOPS single-precision, and 7.4 TFLOPS double-precision, in addition to 118.5 TFLOPS of deep-learning (Tensor) performance. Not bad for a 250W GPU.
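Those spec figures hang together neatly, and a quick calculation shows how. The sketch below rests on assumptions that aren’t from NVIDIA’s spec sheet: one fused multiply-add (FMA) counts as two FLOPs, each Volta Tensor core performs 64 FMAs per clock, and HBM2 runs at roughly 1.7Gbps per pin on a 4096-bit bus (four stacks) for the GV100 versus 3072 bits (three stacks) for the TITAN V. Under those assumptions, 14.8 TFLOPS implies a boost clock of about 1.45GHz, the Tensor figure lands within rounding of 118.5 TFLOPS, and the TITAN V’s lower bandwidth falls out of its missing fourth memory stack.

```python
# Back-of-the-envelope check of the Quadro GV100 numbers quoted above.
# Assumptions (not from the article): 1 FMA = 2 FLOPs, 64 FMAs per Tensor core
# per clock, and ~1.7 Gbps per pin for HBM2.

def implied_clock_ghz(cuda_cores: int, fp32_tflops: float) -> float:
    """Clock at which `cuda_cores` FMA units reach `fp32_tflops`."""
    return fp32_tflops * 1e12 / (cuda_cores * 2) / 1e9

def tensor_tflops(tensor_cores: int, clock_ghz: float, fma_per_clock: int = 64) -> float:
    """Peak Tensor throughput: cores x FMAs/clock x 2 FLOPs x clock."""
    return tensor_cores * fma_per_clock * 2 * clock_ghz * 1e9 / 1e12

def hbm2_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float = 1.7) -> float:
    """Peak bandwidth in GB/s: bytes per transfer x per-pin data rate."""
    return bus_width_bits / 8 * gbps_per_pin

clock = implied_clock_ghz(5120, 14.8)
print(f"Implied boost clock: {clock:.3f} GHz")                 # 1.445
print(f"FP16 / FP64: {14.8 * 2:.1f} / {14.8 / 2:.1f} TFLOPS")   # 29.6 / 7.4
print(f"Tensor: {tensor_tflops(640, clock):.1f} TFLOPS")        # 118.4 (quoted: 118.5)
print(f"GV100 (4096-bit): {hbm2_bandwidth_gbs(4096):.1f} GB/s")   # 870.4
print(f"TITAN V (3072-bit): {hbm2_bandwidth_gbs(3072):.1f} GB/s") # 652.8
```

FP16 and FP64 are simply 2x and 0.5x the FP32 rate here, which is what “completely uncapped” double precision looks like on paper.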

Because the GV100 is a Quadro, its workstation focus sets it apart from the Tesla V100 and TITAN V. I haven’t tested it myself, but based on the TITAN Xp, I’d wager the TITAN V would keep up with the Quadro just fine in many scenarios, but not all. In high-end CAD suites, for example, Quadro is going to deliver the best overall performance.

As impressive as that GPU is, it doesn’t hold a candle to another piece of hardware NVIDIA unveiled: the DGX-2. Using NVIDIA’s NVSwitch, this beast of a machine combines 16 GPUs into one. That’s “one” GPU with 81,920 CUDA cores and a completely reasonable 512GB of HBM2 memory. That memory is pooled, by the way, meaning it can be treated as a single block rather than cloned across GPUs as in traditional SLI/multi-GPU configurations.
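To make the pooled-versus-cloned distinction concrete, here’s a toy model. It assumes each of the DGX-2’s 16 GPUs carries 5,120 CUDA cores and 32GB of HBM2 (512GB divided by 16); the `Gpu` type and helper functions are purely illustrative, not an NVIDIA API.

```python
# Toy comparison of pooled vs. mirrored (SLI-style) GPU memory, using the
# DGX-2 figures above. Assumption: 32GB of HBM2 per GPU (512GB / 16).

from dataclasses import dataclass

@dataclass(frozen=True)
class Gpu:
    cuda_cores: int
    memory_gb: int

def total_cores(gpus) -> int:
    return sum(g.cuda_cores for g in gpus)

def usable_memory_gb(gpus, pooled: bool) -> int:
    """Pooled memory adds up; mirrored memory holds one copy per GPU,
    so the usable size is limited by the smallest GPU."""
    if pooled:
        return sum(g.memory_gb for g in gpus)
    return min(g.memory_gb for g in gpus)

dgx2 = [Gpu(cuda_cores=5120, memory_gb=32) for _ in range(16)]
print(total_cores(dgx2))                     # 81920
print(usable_memory_gb(dgx2, pooled=True))   # 512
print(usable_memory_gb(dgx2, pooled=False))  # 32
```

The mirrored case is why a traditional multi-GPU setup with 16 cards still behaves like it has one card’s worth of memory.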

The resulting unit isn’t just packed to the gills with modern technology; it’s also extremely heavy. At 350 lbs or thereabouts, the DGX-2 isn’t meant to be moved often. As the $399,000 price tag attests, this is a serious piece of hardware, but somehow, I found myself even more impressed with it once I could take a look up close.

In the shot above, you can see one of the DGX-2’s GPUs raised, alongside NVIDIA’s NVSwitch chips, and below, you can get a better idea of how everything comes together:

The DGX-2 features two system boards that hold 8 GPUs each, and once everything is closed up, nothing inside the rig is going to budge. This isn’t just a beefy piece of hardware; it’s a work of engineering art. Interestingly, despite housing 16 of NVIDIA’s highest-end GPUs, the DGX-2 air-cools every single one of them.

Autonomous Vehicles And Simulation

Days before GTC opened, the first pedestrian death involving an autonomous vehicle occurred, and it was inevitable that it would become a subject at the event. During his keynote, Jensen talked about how the company uses simulators to address the simple fact that autonomous vehicles can only spend so much time on real-world roads, and really, it’s not enough.

NVIDIA has talked about virtual robots and other simulations for a while, but this one hit home because of the recent accident. I can’t recall the exact figure used, but Jensen mentioned that it takes hundreds of thousands of hours of driving to encounter (or come close to) an accident. If that’s the case, most autonomous vehicles will drive for years without being truly tested. That seems great until something like last week’s accident happens: proof of how easily things can go wrong, and why more work needs to be done.

So, if a regular driver can muster 50,000 miles a year, it goes without saying that real roads alone won’t challenge software and hardware solutions like NVIDIA’s Drive nearly enough. We need to perfect these things to the best of our abilities, after all.

In simulation, NVIDIA has shown that you can test autonomous vehicles day and night, and before you assume that’s not as good as the real world, it’s arguably better. No one will lose their life in a simulator.
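Some rough arithmetic shows why real-world miles alone fall short. The inputs are hypothetical: a 30mph average speed, one critical event per 100,000 driving hours (the low end of Jensen’s “hundreds of thousands”), and 1,000 simulator instances running around the clock. Changing them shifts the result in degree, not direction.

```python
# Rough arithmetic behind the "not enough real-world time" argument.
# Hypothetical inputs: 30 mph average speed, one critical event per
# 100,000 driving hours, and 1,000 simulator instances running 24/7.

MILES_PER_YEAR = 50_000
AVG_SPEED_MPH = 30          # assumption
HOURS_PER_EVENT = 100_000   # assumption (low end of "hundreds of thousands")
SIM_INSTANCES = 1_000       # assumption

def real_world_years_per_event(miles_per_year, avg_speed_mph, hours_per_event):
    """Years one car must drive to log `hours_per_event` hours."""
    hours_per_year = miles_per_year / avg_speed_mph
    return hours_per_event / hours_per_year

def simulated_days_per_event(instances, hours_per_event):
    """Days a fleet of simulators needs, running 24 hours a day in parallel."""
    return hours_per_event / (instances * 24)

years = real_world_years_per_event(MILES_PER_YEAR, AVG_SPEED_MPH, HOURS_PER_EVENT)
days = simulated_days_per_event(SIM_INSTANCES, HOURS_PER_EVENT)
print(f"One real car: {years:.0f} years")        # 60 years
print(f"{SIM_INSTANCES} simulators: {days:.1f} days")  # 4.2 days
```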

This works because a real NVIDIA Drive system processes the data in the simulator exactly as it would in real life. As far as the Drive system is concerned, there’s literally no difference, because of how accurate the simulator is. NVIDIA can throw any event or situation it wants at it to test the outcome and see how the AI responds.
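This setup is generally called hardware-in-the-loop testing: the driving stack reads sensor data through one interface, so it can’t tell a simulator from a real camera. NVIDIA didn’t detail its interfaces, so the sketch below is hypothetical throughout; `Frame`, `SensorSource`, `ScriptedSimulator`, `drive_stack`, and the braking rule are stand-ins, not NVIDIA Drive APIs.

```python
# Hardware-in-the-loop in miniature: the driving stack consumes frames through
# one interface and cannot tell whether they came from a camera or a simulator.
# All names here are hypothetical, not NVIDIA Drive APIs.

from dataclasses import dataclass
from typing import Iterator, List, Protocol

@dataclass
class Frame:
    timestamp: float
    obstacle_distance_m: float  # stand-in for real sensor data

class SensorSource(Protocol):
    def frames(self) -> Iterator[Frame]: ...

class ScriptedSimulator:
    """Replays an injected scenario, e.g. a pedestrian stepping out ahead."""
    def __init__(self, distances):
        self.distances = distances

    def frames(self) -> Iterator[Frame]:
        for i, d in enumerate(self.distances):
            yield Frame(timestamp=i * 0.1, obstacle_distance_m=d)

def drive_stack(source: SensorSource, brake_threshold_m: float = 15.0) -> List[str]:
    """Toy policy: brake whenever an obstacle is closer than the threshold."""
    return ["BRAKE" if f.obstacle_distance_m < brake_threshold_m else "CRUISE"
            for f in source.frames()]

print(drive_stack(ScriptedSimulator([40.0, 25.0, 12.0, 8.0])))
# ['CRUISE', 'CRUISE', 'BRAKE', 'BRAKE']
```

A real camera driver would implement the same `frames()` method, which is the point: the stack under test never changes.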

Here’s another way simulation beats real life: the time of day can be controlled. You know that period of a few minutes each day when the sun seems to have it out for you? Imagine being able to test with such blinding light any time you wanted.
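Because the sun is just another simulator parameter, a test suite can sweep it deliberately. In this sketch, the glare rule (sun below 15 degrees and within 30 degrees of the direction the car is facing) is a hypothetical stand-in for a real camera model, and the heading and sweep values are made up.

```python
# Sweeping a simulated sun position to generate glare scenarios on demand.
# The glare rule below is a hypothetical stand-in, not a real sensor model.

from itertools import product

def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two compass bearings, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)

def is_glare(sun_elevation: float, sun_azimuth: float, heading: float) -> bool:
    """Low sun roughly in front of the vehicle."""
    return 0 < sun_elevation < 15 and angle_diff(sun_azimuth, heading) < 30

elevations = [5, 10, 30, 60]     # degrees above the horizon
azimuths = range(0, 360, 45)     # compass bearing of the sun
heading = 270                    # driving due west

glare_cases = [(el, az) for el, az in product(elevations, azimuths)
               if is_glare(el, az, heading)]
print(glare_cases)  # [(5, 270), (10, 270)]
```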

GPU tech isn’t just going to be used for autonomous driving, but for really… anything. That’s the beauty of artificial intelligence. Recognition is a huge part of this, and many examples were on show at GTC last week. Some of it may seem a little “Big Brother”, but in some cases the benefits are enormous. If your child gets lost in a crowd, for example, AI-assisted cameras could help figure out, quickly and easily, when and where they were last seen.
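Once a recognition model has tagged camera footage, the “when and where were they last seen” question becomes a simple query. The detection records, identifiers, and 0.8 confidence cutoff below are hypothetical; a real system would get them from a face or person re-identification model.

```python
# Answering "when and where was this person last seen?" from recognition output.
# Records and the confidence cutoff are hypothetical.

from dataclasses import dataclass
from typing import Optional

@dataclass
class Detection:
    camera: str
    timestamp: str      # ISO 8601, so string order matches time order
    person_id: str
    confidence: float

def last_seen(detections, person_id: str, min_conf: float = 0.8) -> Optional[Detection]:
    """Most recent sufficiently confident sighting, or None if there is none."""
    matches = [d for d in detections
               if d.person_id == person_id and d.confidence >= min_conf]
    return max(matches, key=lambda d: d.timestamp, default=None)

log = [
    Detection("gate-A", "2018-03-28T14:02:10", "child-17", 0.91),
    Detection("food-court", "2018-03-28T14:09:45", "child-17", 0.88),
    Detection("parking", "2018-03-28T14:11:03", "child-17", 0.42),  # too uncertain
]
hit = last_seen(log, "child-17")
print(hit.camera, hit.timestamp)  # food-court 2018-03-28T14:09:45
```

The confidence cutoff matters: trusting the low-confidence parking-lot match would send searchers to the wrong place.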

YITU had a big presence at this year’s GTC, with a huge focus on recognition technologies such as the one just mentioned. That includes facial recognition, medical diagnosis, and, as alluded to in the lost-child example, “smart city” applications. The potential here shouldn’t be understated; the amount of help AI could provide is incredible. It will take a while before AI truly permeates our lives, but GTC is filled to the brim with inspiring ideas that really can’t come to fruition soon enough.

Unfortunately, due to a packed schedule, I couldn’t meet with as many companies as I would have liked at GTC, and I definitely missed some neat tech demos as well. Here’s one I didn’t miss, though, and I’m glad I didn’t, because it’s proof that AI is going to have more of an impact than you probably realize.

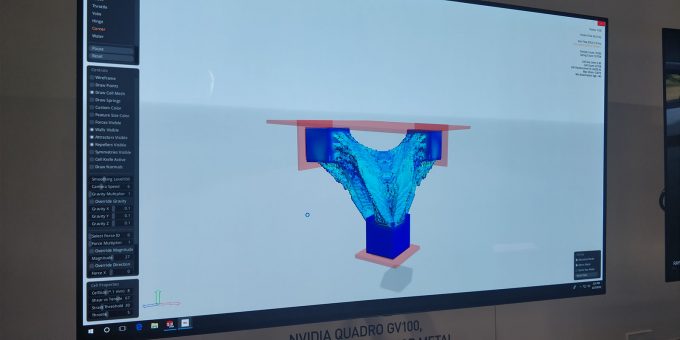

That shot might not look like much, but bear with me. What you’re looking at is a self-building (generative) model, a little like how our body heals itself, that will be printed out on a 3D metal printer. Say you need a new bracket for a shelf or, for something bigger, a bridge. With this software, you set your parameters and boundaries, and the GPU calculations get underway.

In its initial state, the only things you’d see of the model above are the blue blocks. All of the “cells” connecting them were generated automatically. The ultimate goal is to print a functional part that doesn’t waste material. As such, you may see some gaps in the end product, but that’s fine, because the software has certified its durability.
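The workflow of fixing supports and loads, then letting software grow only the connections it needs, can be illustrated with a deliberately crude toy. This sketch keeps just the grid cells on a shortest path from each load point to each support and leaves everything else empty. Real generative design tools run structural analysis (such as finite-element simulation) to decide where material goes, which this does not attempt; the grid size and coordinates are made up.

```python
# Toy "grow only what's needed" sketch: keep cells on shortest paths from each
# load to each support on a grid. Connectivity only; no structural analysis.

from collections import deque

def shortest_path(width, height, start, goals):
    """BFS on a 4-connected grid; returns cells from start to the nearest goal."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell in goals:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]  # reorder as start -> goal
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nxt[0] < width and 0 <= nxt[1] < height and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return []  # no goal reachable

def grow_structure(width, height, supports, loads):
    """Keep supports, loads, and one shortest connection from each load to each support."""
    kept = set(supports) | set(loads)
    for load in loads:
        for support in supports:
            kept.update(shortest_path(width, height, load, {support}))
    return kept

def render(width, height, kept, supports, loads):
    rows = []
    for y in range(height):
        rows.append("".join(
            "S" if (x, y) in supports else
            "L" if (x, y) in loads else
            "#" if (x, y) in kept else "."
            for x in range(width)))
    return "\n".join(rows)

W, H = 9, 5
supports = {(0, 0), (0, 4)}  # hypothetical wall mounts (the "blue blocks")
loads = {(8, 2)}             # hypothetical point where the shelf rests
kept = grow_structure(W, H, supports, loads)
print(render(W, H, kept, supports, loads))
print(f"{len(kept)} of {W * H} cells used")
```

The empty cells in the printout are the toy equivalent of the gaps in the printed part: material the design simply doesn’t need.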

Virtual Reality And Holodecks

Another popular subject at this year’s GTC was VR, which was to be expected. While VR might not seem to be on fire right now on the consumer and gaming side due to its higher cost of entry, it’s gaining traction very quickly on the enterprise side. Uses range from consumers wearing a headset at a car dealership to configure their perfect ride, to professionals using the same kind of system to design the car in the first place.

During GTC, I was able to give the new HTC Vive Pro a go in a Holodeck environment similar to the one seen above, where a Koenigsegg Regera (not that McLaren) awaited my design ineptitude. Sadly, I don’t have screenshots to share, but in this demo, I was able to change the color of the car, interact with absolutely any part of it, and my favorite part: use a lens that let me see the myriad components under the hood as I waved it through.

A close second “wow” moment was the “explode” view, which showed every single one of the car’s components floating in mid-air. At that moment, I realized I personally don’t have the capability to build my own Koenigsegg Regera from scratch. Bah.
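A simple way to produce an “explode” view is to push each part away from the assembly’s center along the line between them, scaled by a factor. We don’t know how NVIDIA’s Holodeck implements it; the part names and positions below are invented for illustration.

```python
# One common way to build an exploded view: move every part away from the
# assembly's centroid along the centroid-to-part vector, scaled by `factor`.
# Part names and positions are invented.

def centroid(points):
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))

def explode(parts, factor):
    """parts maps name -> (x, y, z); factor 0 leaves the assembly intact."""
    c = centroid(list(parts.values()))
    return {name: tuple(c[i] + (p[i] - c[i]) * (1 + factor) for i in range(3))
            for name, p in parts.items()}

parts = {
    "engine": (0.0, 0.0, 0.5),
    "left_door": (-1.0, 0.0, 0.5),
    "right_door": (1.0, 0.0, 0.5),
    "roof": (0.0, 0.0, 1.5),
}
print(explode(parts, factor=1.0))
# {'engine': (0.0, 0.0, 0.25), 'left_door': (-2.0, 0.0, 0.25),
#  'right_door': (2.0, 0.0, 0.25), 'roof': (0.0, 0.0, 2.25)}
```

Animating `factor` from 0 upward gives the familiar effect of a car coming apart in mid-air.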

I have tested other “for work” VR solutions in the past, including walking around a construction site and making note of issues or things that need to be changed. The sky’s the limit, and while VR isn’t as mainstream as it should be, it’s not going anywhere. Based on my initial taste, the best experience going forward is definitely going to be on the new Vive Pro.

Finally, another thing that grabbed me at GTC was the obsession (a good one) with ray tracing. NVIDIA has called ray tracing in gaming a “holy grail”, and that’s not just marketing. There’s a reason rendering ray-traced images takes so long: it’s extremely computationally intensive. Getting that kind of lighting accuracy in a game seems almost impossible, doesn’t it?

If you had asked me a decade ago whether I could see games featuring ray tracing in the next fifteen or twenty years, I don’t think I would have imagined it. But that was before I considered how far AI would come, or how it could be used to improve those very things. NVIDIA talked about its OptiX AI denoiser at SIGGRAPH last summer, a key ingredient in delivering usable real-time ray-traced scenes.
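Why a denoiser matters comes down to Monte Carlo sampling: a ray tracer estimates each pixel by averaging random light samples, and the error of that average shrinks only with the square root of the sample count, so halving the noise costs four times the rays. The toy below uses a uniform random number as a stand-in for a pixel’s light samples (true value 0.5); it illustrates the scaling only, not how OptiX works.

```python
# Monte Carlo noise vs. samples per pixel: RMS error falls roughly as 1/sqrt(N).
# The "pixel" is a made-up integrand (uniform on [0, 1), true mean 0.5).

import math
import random

def estimate_pixel(samples: int, rng: random.Random) -> float:
    """Stand-in for tracing `samples` random rays and averaging their light."""
    return sum(rng.random() for _ in range(samples)) / samples

def rms_error(samples: int, trials: int = 2000, seed: int = 1) -> float:
    """Root-mean-square error of the pixel estimate over many independent trials."""
    rng = random.Random(seed)
    sq_errs = [(estimate_pixel(samples, rng) - 0.5) ** 2 for _ in range(trials)]
    return math.sqrt(sum(sq_errs) / trials)

for spp in (1, 4, 16, 64):
    print(f"{spp:>3} samples/pixel -> RMS error {rms_error(spp):.4f}")
```

Each 4x jump in samples per pixel roughly halves the error, which is why cleaning up a low-sample image with a trained denoiser is so attractive when a frame has to be ready in milliseconds.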

I attended a session called The Future of GPU Ray Tracing, where eight companies of great stature in the community all voiced support for NVIDIA’s RTX and, I presume, the AI denoiser technology. Those include Blur Studio, Autodesk, Isotropix, OTOY, Epic Games, Redshift, Pixar, and Chaos Group (side note: read about our V-Ray 4.0 beta renderer tests). I would not be surprised to see the likes of Dassault Systèmes (CATIA, SolidWorks) and Siemens (NX) implement these technologies in time, as they already use NVIDIA technologies in their default renderers.

Final Thoughts

There’s never really a “Final Thoughts” with GTC, because like technology itself, it’s ever-evolving, and evolving fast on both fronts. I remember when GTC had a couple of thousand attendees; now it’s over 8,000. Each year, the halls are packed, and since the need for GPU tech is not going away, it stands to reason that the event will continue its upward trajectory.

None of that is to imply that there’s only one GTC, though. San Jose’s annual event is the flagship, but NVIDIA holds regional events all over the world, and if you’re in one of the host countries, you can expect a smaller but still very info-packed event.

Each year, there seems to be a theme that can be taken away from GTC, and this year, that’d probably be ray tracing. Beyond that, interest in autonomous vehicles and in the use of AI across many different fields hasn’t waned at all. It’s only getting stronger, and each GTC brings more proof of that, and of how AI can be used in ways we never considered before.

Based on my history with GTC, I imagine 2019’s event will be just as great. Fortunately, GTC isn’t the only event where GPU magic happens, so we’re sure to keep learning more about NVIDIA’s (and others’) GPU technologies throughout the year. Notably, SIGGRAPH 2018 takes place this August in Vancouver, British Columbia, and that’s likely when the industry will get its next massive wallop of GPU tech goodness.
