A Look at NVIDIA’s Kepler-based Tesla K-Series GPU Accelerators

We talked at length about the NVIDIA-infused Titan supercomputer already, but we haven’t been able to talk about the GPUs that help make the magic happen. In this article, we’re doing just that. We’ll see what the Tesla K-series of “GPU Accelerators” brings to the table, and also take a look at where CUDA usage stands today.

In our look at the Titan supercomputer, housed at the Oak Ridge National Laboratory, we mentioned that there were a couple of features and design choices that made it unique. First, the entire supercomputer is made up of nodes that each pair one CPU with one GPU, for a total of 18,688 of each. Second, the GPU chosen for the job was NVIDIA’s Tesla K20X, an up-to-this-point unreleased card. Given its capabilities, however, it has been long awaited.

With Titan having just become the world’s fastest supercomputer, it’s no wonder NVIDIA chose to promote it heavily. After all, that in turn promotes the Tesla GPU itself, and touts its capabilities. While Titan’s creators were blessed with the ability to purchase the GPUs in advance, the rest of the world has had to wait. Today, that wait is over, as NVIDIA is formally announcing the K-series, consisting of the K10, K20 and K20X.

Unlike NVIDIA’s other GPU launches, we didn’t receive a sample of one of these cards for review, and the reason boils down to the fact that this is not a typical GPU, nor are we set up to properly test one. Unlike gaming GPUs and those used for workstation purposes, the goal of Tesla GPUs is to compute complex math as fast as possible. Even if you had one, you wouldn’t be able to game with it. There are no video outputs, and likely no logic at all on the board to drive them even if they were there. Tesla is a brand that means business, designed for those who have some of the most serious jobs on earth.

For gaming, it’s not uncommon to see the latest generation of GPUs deliver up to a 25% performance boost, which for all intents and purposes is quite good. But that kind of boost pales in comparison to what’s been seen on the compute side of things, and the table below helps us to understand why.

| | Tesla K10 | Tesla K20 | Tesla K20X |
| --- | --- | --- | --- |
| Peak Double Precision FP | 0.19 TFLOPs | 1.17 TFLOPs | 1.31 TFLOPs |
| Peak Single Precision FP | 4.58 TFLOPs | 3.52 TFLOPs | 3.95 TFLOPs |
| Number of GPUs | 2 x GK104 | 1 x GK110 | 1 x GK110 |
| Number of CUDA Cores | 2 x 1536 | 2496 | 2688 |
| Clock Speed | 745 MHz | 705 MHz | 732 MHz |
| Memory Size Per Board | 8 GB | 5 GB | 6 GB |
| Memory Bandwidth (ECC Off) | 320 GB/s | 208 GB/s | 250 GB/s |
| Power Consumption | 250W | 225W | 235W |
| GPU Computing Applications | Seismic, image, signal processing, video analytics | CFD, CAE, financial computing, computational chemistry and physics, data analytics, satellite imaging, weather modeling | CFD, CAE, financial computing, computational chemistry and physics, data analytics, satellite imaging, weather modeling |
| Architecture Features | SMX | SMX, Dynamic Parallelism, Hyper-Q | SMX, Dynamic Parallelism, Hyper-Q |
| System | Servers | Servers and Workstations | Servers |
| Pricing | ??? | ??? | ??? |

The top-end last-gen Tesla offering was the M2090, a card that offered dream-worthy performance at the time of its launch. It delivered 1.33 TFLOPs of single-precision floating-point performance, and 0.66 TFLOPs of double-precision. In this single generation increase, we can see that the proper M2090 successor, the K20, boosts those values to 3.52 TFLOPs and 1.17 TFLOPs, respectively. This, all while decreasing the power consumption from 250W to 225W.
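To put that generational jump into numbers, here is a small Python sketch that uses only the peak figures quoted above for the M2090 and K20. The dictionary layout and the helper names (`gflops_per_watt`, `compare`) are our own, purely for illustration, and the results are paper ratios of peak ratings rather than measured performance.

```python
# Peak figures quoted in the article (TFLOPs and board power in watts).
M2090 = {"sp_tflops": 1.33, "dp_tflops": 0.66, "watts": 250}
K20 = {"sp_tflops": 3.52, "dp_tflops": 1.17, "watts": 225}


def gflops_per_watt(tflops, watts):
    """Convert a peak TFLOPs rating into GFLOPs per watt of board power."""
    return tflops * 1000.0 / watts


def compare(old, new, key):
    """Return (throughput ratio, perf-per-watt ratio) for one precision."""
    speedup = new[key] / old[key]
    efficiency_gain = (gflops_per_watt(new[key], new["watts"])
                       / gflops_per_watt(old[key], old["watts"]))
    return speedup, efficiency_gain


if __name__ == "__main__":
    for key in ("sp_tflops", "dp_tflops"):
        speedup, efficiency_gain = compare(M2090, K20, key)
        print(f"{key}: {speedup:.2f}x throughput, "
              f"{efficiency_gain:.2f}x per watt")
```

On these figures, the K20 works out to roughly 2.65x the single-precision and 1.77x the double-precision peak of the M2090, or about 2.94x and 1.97x once the lower power draw is factored in.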

For customers in need of even more performance from a single GPU, there’s the K20X, which features additional cores and higher clock speeds. For those working on projects that benefit more from single-precision performance, NVIDIA also offers the K10. This card features a dual-GPU design, and while it is just about useless for double-precision work, it boosts single-precision performance to 4.58 TFLOPs, 1.06 TFLOPs more than the K20. Because the K10 and K20X can both get hot, they’re designed strictly for server use, as a typical workstation chassis can’t provide the airflow such cards would need. Even the K20 would be pushing it, but a proper and well-thought-out airflow scheme can make it suitable.
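The peak numbers in the spec table can be roughly reproduced from the core counts and clock speeds alone. The sketch below is a back-of-the-envelope estimate, not NVIDIA's published method. It assumes each CUDA core retires one fused multiply-add (two floating-point operations) per clock, and that double precision runs at 1/3 of the single-precision rate on the K20 and K20X and at 1/24 on the K10. Neither assumption comes from the launch material, and the function and constant names are hypothetical.

```python
# Assumptions (not stated in the article): one fused multiply-add, i.e.
# 2 FLOPs, per CUDA core per clock; double precision at 1/3 of the
# single-precision rate on the K20/K20X and 1/24 on the K10.
FLOPS_PER_CORE_PER_CLOCK = 2

CARDS = {
    # name: (total CUDA cores, clock in MHz, assumed DP-to-SP ratio)
    "K10": (2 * 1536, 745, 1 / 24),
    "K20": (2496, 705, 1 / 3),
    "K20X": (2688, 732, 1 / 3),
}


def peak_tflops(cores, clock_mhz, dp_ratio):
    """Estimate (single, double) precision peak throughput in TFLOPs."""
    single = cores * clock_mhz * 1e6 * FLOPS_PER_CORE_PER_CLOCK / 1e12
    return single, single * dp_ratio


if __name__ == "__main__":
    for name, spec in CARDS.items():
        single, double = peak_tflops(*spec)
        print(f"{name}: {single:.2f} TFLOPs SP, {double:.2f} TFLOPs DP")
```

Under those assumptions the estimates land within about 0.02 TFLOPs of every figure in the table. This also shows where the K10's single-precision lead comes from: two GPUs give it more cores in total, while its much lower assumed double-precision ratio accounts for the 0.19 TFLOPs figure.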

For this launch, NVIDIA inundated us with information and fun facts, so we’re going to be storming through a lot of that here. It just about makes up for not being able to benchmark the card for ourselves!

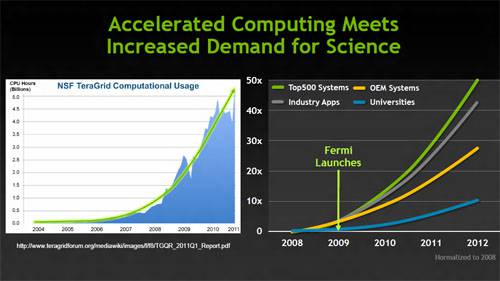

One of the first slides we were shown emphasizes the sheer amount of demand out there for scientific computing. When NVIDIA unveiled its Fermi architecture in 2009 (it reached the desktop in the GTX 400 series the following year), things were beginning to pick up fast. With that launch, we all began to see just how seriously NVIDIA was taking GPGPU computing, as the cards excelled in that area. It didn’t take too long before users of Folding@home were picking them up to maximize their output. As time has passed, pure GPGPU throughput has varied on the desktop side, but on the Tesla side, performance has increased dramatically.

According to the above slide, the demand for “accelerated computing” has increased 50-fold since 2008. While that might sound like an outrageous number, it doesn’t strike me as unreasonable. In 2008, GPGPU was in its infancy, and we were mostly unsure of what the future would bring. As GPU vendors pressed forward, NVIDIA especially, the future of GPU computing became super-clear. We’re at a point right now where GPU computing isn’t just viable, but necessary, and supercomputers such as Titan prove that.

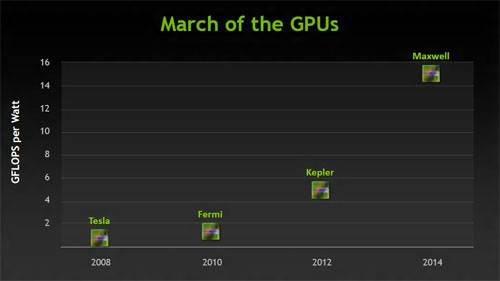

It’s easy to understand that GPU computing has gotten better over the past few years, but the following slide really puts things into perspective. It showcases how the performance-per-watt has changed since 2008, and the results are pretty staggering to say the least. With the current Kepler-based GPUs, GFLOPs per watt has increased by a factor of five. By 2015, NVIDIA wants to increase that to a factor of fifteen.

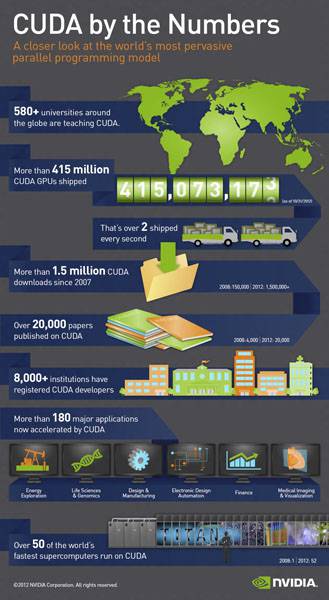

Let’s tackle some other interesting statistics that don’t have too much to do with the hardware itself. CUDA, NVIDIA’s extension to C and Fortran, is now being used in 8,000 institutions, with its SDK having been downloaded a total of 1.5 million times. To date, 415 million+ CUDA GPUs have been shipped. It boggles the mind. In terms of actual CUDA applications, those have increased in popularity as well, as you’d likely expect. From 2010, the total number of CUDA apps increased by 61% across top supercomputing fields.

To emphasize that some simulations can run more efficiently on CUDA GPUs than on traditional CPUs, NVIDIA gave the example of the materials science application WL-LSMS. With 2% of the lines of code being changed for CUDA’s sake, the throughput for a given simulation has been increased from 3.08 PFLOPs to 10+ PFLOPs. The 3.08 PFLOPs measurement was reached in 2011 with the help of Japan’s K supercomputer.

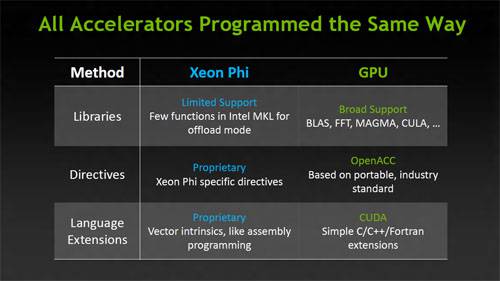

It wouldn’t be an NVIDIA GPGPU launch without some Intel-jabbing, so we have that thanks to Intel’s upcoming Xeon Phi. If that doesn’t sound too familiar, it helps to realize that it’s inspired by the work done with Larrabee, which never came to market. Years ago, when Larrabee still seemed to be a real product, we talked a bit about the differences between it and CUDA. In the end, without actually being a programmer, it’s hard to appreciate either side’s perspective on things, but even today, NVIDIA remains adamant about its CUDA architecture being superior.

Wrapping things up, NVIDIA provided a fun infographic that shows off some of the statistics we’ve talked about already, and adds in a couple more:

If you’re interested in reading more on NVIDIA’s latest Tesla cards, we’re making a couple of documents available in case they’re not easy to find after the launch:

- GPU Applications Catalog – 200KB

- Tesla K20 Launch Presentation – 3MB

- Tesla K10/K20 Datasheet – 200KB
