An In-depth Look At Blender 2.80 (Beta) Viewport & Rendering Performance

Blender’s upcoming 2.8 version represents one of the biggest shifts the software has ever seen, something its meager bump from version 2.79 hides well. To see where things stand today on the performance front, we’re using 30 GPUs to tackle the current beta with viewport testing, as well as Eevee and Cycles rendering.

Introduction

Get the latest GPU rendering benchmark results in our more up-to-date Blender 3.6 performance article.

Unlike web browser makers, which seem to be in a race to reach the highest version number possible (Firefox is at 65, whereas it was at 9 in 2011), Blender's developers have done things a little differently. To the layman, Blender 2.80 might seem like a minor update over 2.79, but in reality it's a major upgrade, both under the hood and on the surface.

This next Blender is such a big release that, a year ago, the developers sold "Flash Drive Rockets" to help fund travel for key team members to get together and better steer the 2.80 ship.

To scratch just the surface, 2.80 brings a new real-time physically based renderer called Eevee, many updates to the existing Cycles renderer, improved animation tools, performance boosts, and arguably a much more refined (and better-looking) UI.

Some aspects of 2.80's performance could change between now and the final release (whose date is still tentative); if that happens, we'll retest and update our results. The viewport is the most likely candidate for further fine-tuning, but we don't expect rendering results to change much before final, at least on the Cycles side.

| CPUs & GPUs Tested in Blender 2.80.44 (Beta) | Specs | Approx. Price |
|---|---|---|
| **CPUs** | | |
| AMD Ryzen Threadripper 2990WX | 32-core; 3.0 GHz | ~$1,729 |
| AMD Ryzen Threadripper 2970WX | 24-core; 3.0 GHz | ~$1,119 |
| AMD Ryzen Threadripper 2950X | 16-core; 3.5 GHz | ~$829 |
| AMD Ryzen Threadripper 2920X | 12-core; 3.5 GHz | ~$649 |
| AMD Ryzen 7 2700X | 8-core; 3.7 GHz | ~$299 |
| AMD Ryzen 5 2600X | 6-core; 3.6 GHz | ~$199 |
| AMD Ryzen 5 2400G | 4-core; 3.6 GHz | ~$140 |
| Intel Core i9-7980XE | 18-core; 2.6 GHz | ~$1,800 |
| Intel Core i9-9900K | 8-core; 3.6 GHz | ~$525 |
| **GPUs** | | |
| AMD Radeon VII | 16GB | ~$699 |
| AMD Radeon RX Vega 64 | 8GB | ~$449 |
| AMD Radeon RX Vega 56 | 8GB | ~$499 |
| AMD Radeon RX 590 | 8GB | ~$279 |
| AMD Radeon RX 580 | 8GB | ~$229 |
| AMD Radeon RX 570 | 8GB | ~$179 |
| AMD Radeon RX 550 | 2GB | ~$99 |
| AMD Radeon Pro WX 8200 | 8GB | ~$999 |
| AMD Radeon Pro WX 7100 | 8GB | ~$549 |
| AMD Radeon Pro WX 5100 | 8GB | ~$359 |
| AMD Radeon Pro WX 4100 | 4GB | ~$259 |
| AMD Radeon Pro WX 3100 | 4GB | ~$169 |
| NVIDIA TITAN Xp (x2) | 12GB | ~$1,200 |
| NVIDIA GeForce RTX 2080 Ti | 11GB | ~$1,200 |
| NVIDIA GeForce RTX 2080 | 8GB | ~$800 |
| NVIDIA GeForce RTX 2070 | 8GB | ~$499 |
| NVIDIA GeForce RTX 2060 | 6GB | ~$349 |
| NVIDIA GeForce GTX 1080 Ti | 11GB | ~$699 |
| NVIDIA GeForce GTX 1080 | 8GB | ~$499 |
| NVIDIA GeForce GTX 1070 Ti | 8GB | ~$449 |
| NVIDIA GeForce GTX 1070 | 8GB | ~$379 |
| NVIDIA GeForce GTX 1060 | 6GB | ~$299 |
| NVIDIA GeForce GTX 1050 Ti | 4GB | ~$139 |
| NVIDIA GeForce GTX 1050 | 2GB | ~$109 |
| NVIDIA GeForce GTX 1660 Ti | 6GB | ~$279 |
| NVIDIA Quadro RTX 4000 | 8GB | ~$899 |
| NVIDIA Quadro P6000 | 24GB | ~$4,300 |
| NVIDIA Quadro P5000 | 12GB | ~$1,249 |
| NVIDIA Quadro P4000 | 8GB | ~$749 |
| NVIDIA Quadro P2000 | 5GB | ~$425 |

All GPU-specific testing was conducted on our Intel Core i9-7980XE workstation.

The main reason we're including professional GPUs in our testing is that we've received questions about whether Blender has any pro-level enhancements. It definitely doesn't, but if you already own a current-gen ProViz card, you can use these results to see how it stacks up against other cards in a neutral design suite.

We recently upgraded our Blender testing script to include viewport testing, so in this article, we’ll take care of that, along with rendering performance in both Cycles and Eevee. There’s even a bit of heterogeneous rendering testing, and info on tile sizes, so… let’s get right to it!

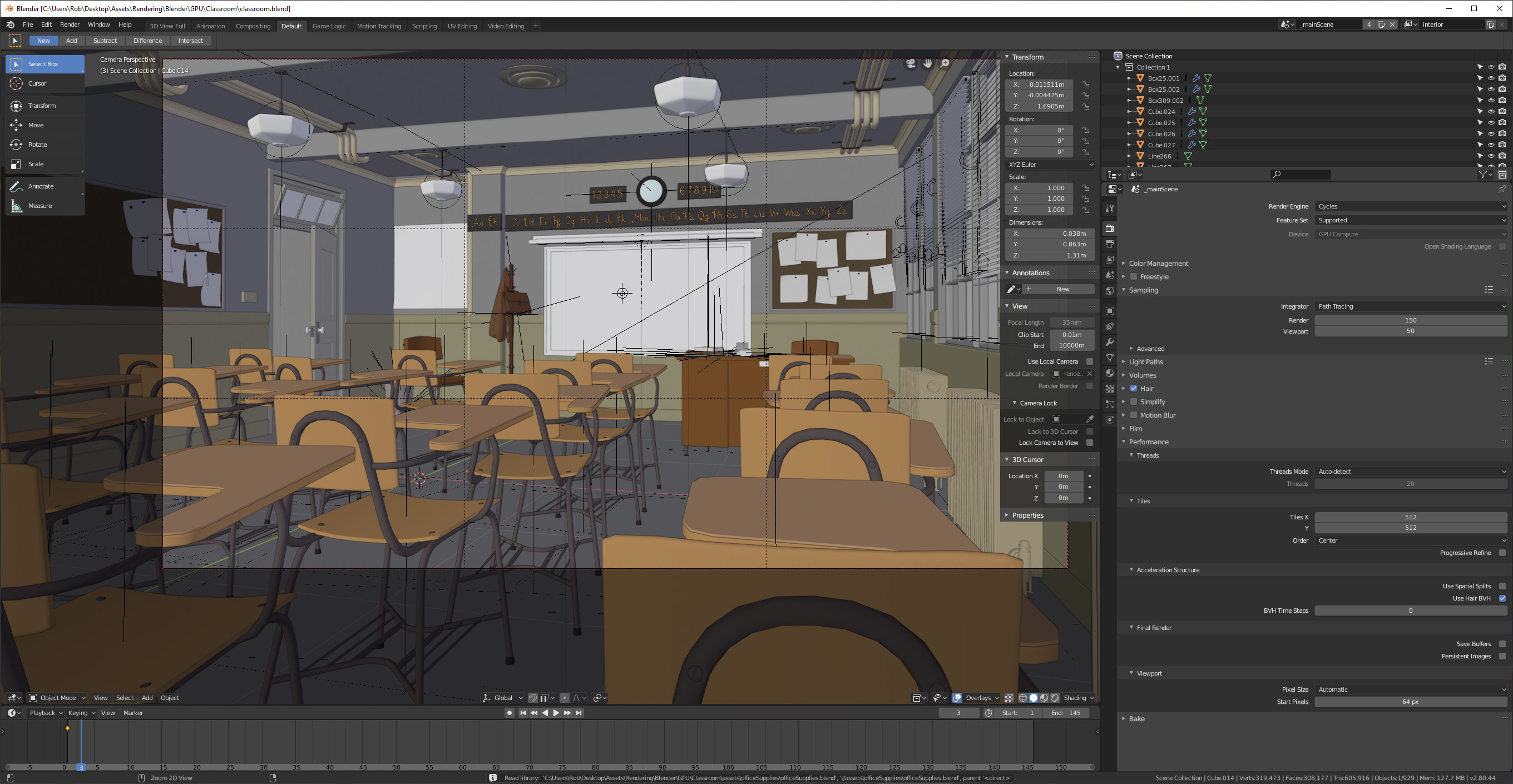

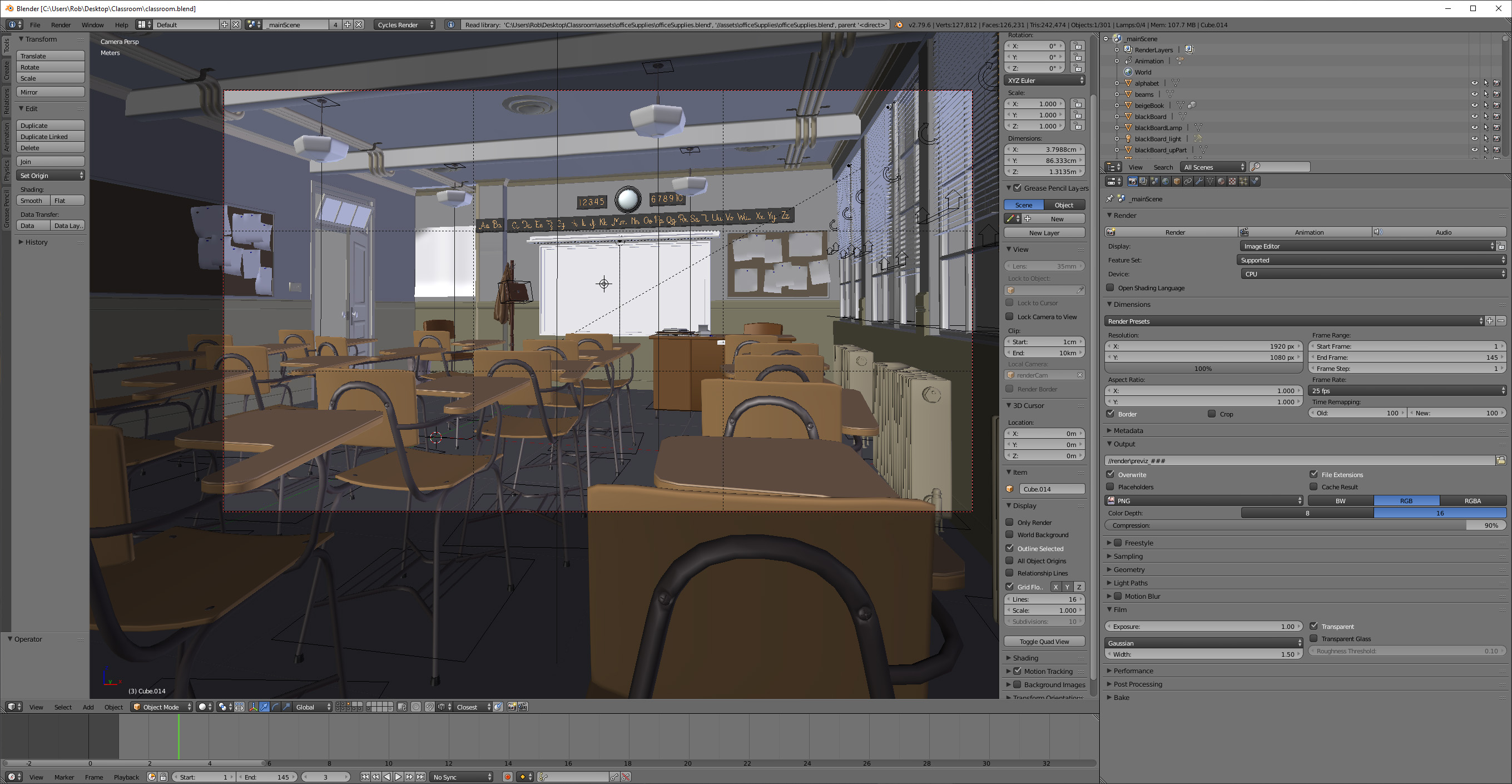

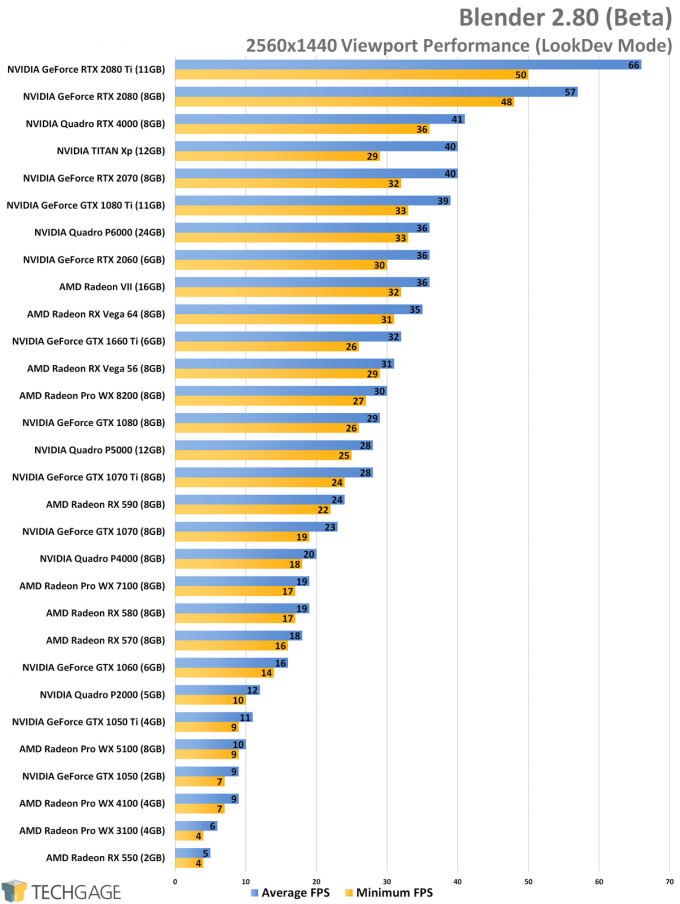

Viewport Performance

Blender's built-in viewport offers three rendering modes: Solid, Wireframe, and LookDev. We didn't test the first two, as they run so well on the vast majority of GPUs that the results would show no meaningful scaling from card to card. That said, if you want 60 FPS out of Wireframe in heavier projects, you'll want at least a "decent" GPU, which is to say a GeForce GTX 1060.

LookDev is the most demanding of the three modes, as it loads all of the scene's assets and lighting effects to help you judge whether your scene is heading in the right direction. It's generally too heavy for moment-to-moment work, but artists love it for previewing a scene before they continue working on it.

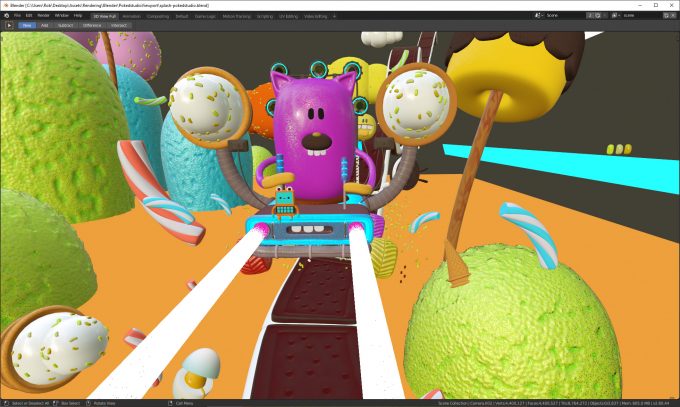

For our viewport testing, we use the "Racing Car" project found on the official demo files page. Since Solid and Wireframe results are of little use (though you still don't want a very low-end GPU), we stuck to LookDev for all of our testing, at 1080p, 1440p, and 4K.

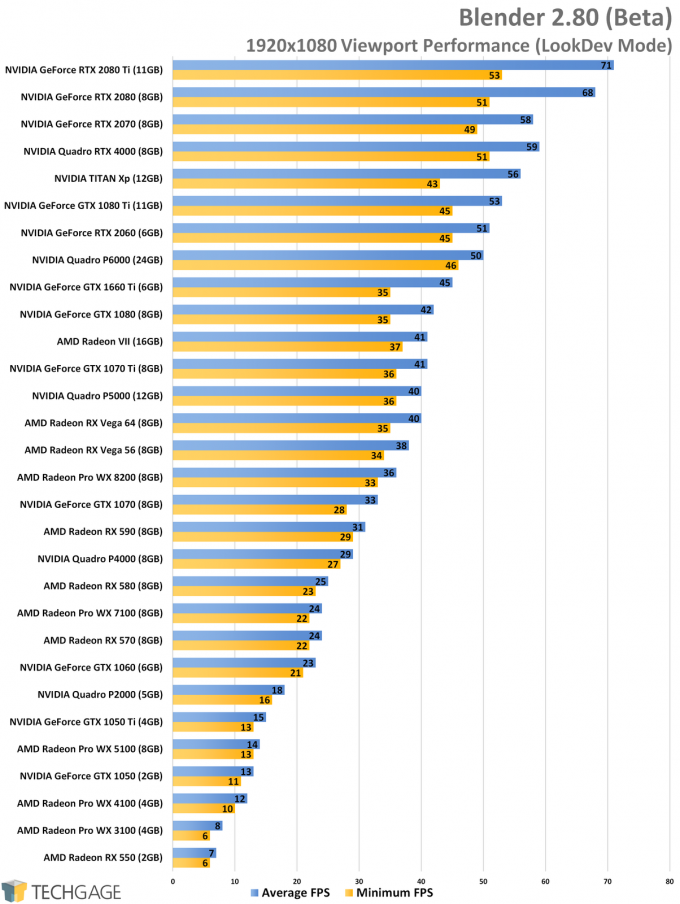

Right off the bat, we can see NVIDIA's Turing architecture make a statement at the top of the chart. The card that really stands out to us is the GTX 1660 Ti, which delivers quite a bit of performance relative to the top dogs at a $279 price point. The RTX 2060 likewise offers very strong performance, sitting right behind the GTX 1080 Ti.

Even at the arguably modest resolution of 1080p, LookDev bogs down lower-end GPUs considerably. That's largely fine if you don't plan on moving the camera, but chances are good that you will. While a viewport doesn't need 60 FPS the way actual gameplay does, you don't want it to behave like a slideshow, either. It can be literally headache-inducing.

How bad does the pain get at 1440p?

1440p has about 80% more pixels than 1080p, but the performance hit from moving up to it isn't especially large. The top-end RTX 2080 Ti loses just 5 FPS, while the GTX 1660 Ti once again proves an extremely strong contender at its price point.
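For reference, the pixel counts behind that comparison are easy to work out. This minimal sketch assumes the standard 16:9 resolutions of 1920×1080, 2560×1440, and 3840×2160 (the article doesn't state exact dimensions), and shows that 1440p carries roughly 78% more pixels than 1080p, while 4K carries four times as many.

```python
# Assumed standard 16:9 resolutions for the labels used in this article.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Total pixels per frame for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def percent_more_pixels(larger: str, smaller: str) -> float:
    """How many percent more pixels `larger` has than `smaller`."""
    return (pixel_count(larger) / pixel_count(smaller) - 1.0) * 100.0


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_count(name):,} pixels")
    print(f"1440p vs 1080p: +{percent_more_pixels('1440p', '1080p'):.1f}%")
    print(f"4K vs 1080p: +{percent_more_pixels('4K', '1080p'):.1f}%")
```

Running it prints 2,073,600, 3,686,400, and 8,294,400 pixels respectively, followed by +77.8% for 1440p and +300.0% for 4K, which puts the relatively mild 1440p hit in perspective.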

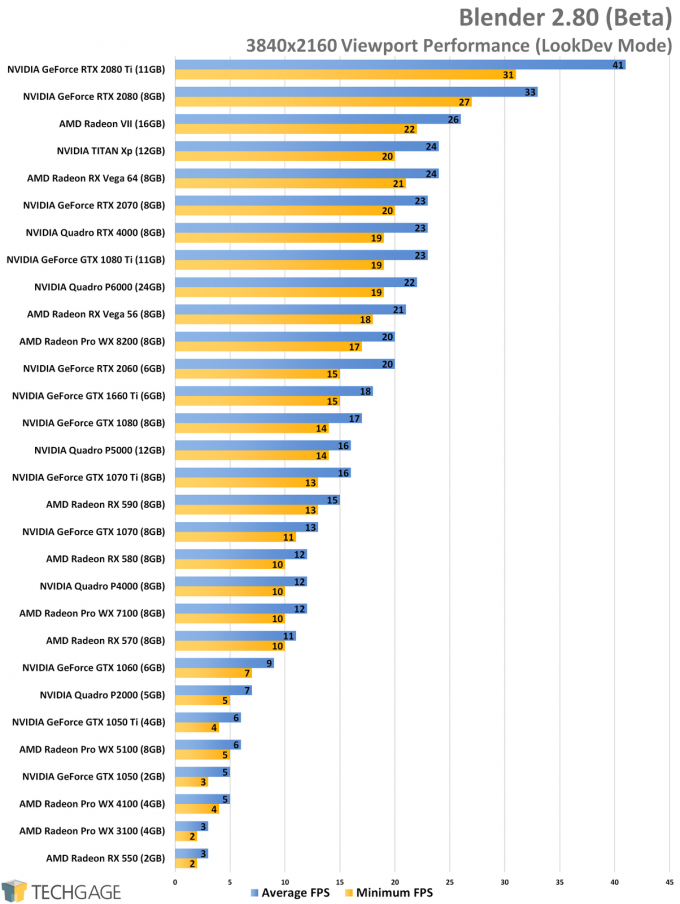

All of these GPUs are included here for completeness, but the lower-end models should honestly be avoided if you want anything close to a reasonable experience in LookDev. We'd wager you'd want at least 20 FPS, though 30 FPS is a whole lot better. The cards that can hit that at 4K belong to a special club, so let's take a look:

Whether for gaming or design, 4K is where the agony sets in. Only the RTX 2080 and RTX 2080 Ti managed to stay above 30 FPS at 4K, though anything from the WX 8200 up should prove satisfactory. We can't complain too much about lacking 4K performance when the market's $1,200 gaming card (the RTX 2080 Ti) barely moves past 40 FPS.

For most GPUs, 4K LookDev runs like a slideshow. Again, while you don't need 60 FPS in a viewport, you don't want the opposite problem of so few frames that rotating the camera becomes a stuttery, imprecise mess.
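Frame rates are easier to reason about as frame times: the delay between camera input and an updated image. This sketch converts the 20 and 30 FPS LookDev thresholds suggested earlier, plus the 60 FPS gaming target, into milliseconds per frame. The `lookdev_rating` labels are hypothetical shorthand for those thresholds, not anything Blender reports.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def lookdev_rating(fps: float) -> str:
    """Hypothetical label based on the 20/30 FPS thresholds suggested above."""
    if fps >= 30:
        return "comfortable"
    if fps >= 20:
        return "usable"
    return "slideshow"


if __name__ == "__main__":
    # 20 and 30 FPS are the LookDev thresholds; 60 FPS is shown for comparison.
    for fps in (20, 30, 60):
        print(f"{fps:>2} FPS -> {frame_time_ms(fps):.1f} ms per frame "
              f"({lookdev_rating(fps)})")
```

At 20 FPS each frame takes 50 ms, at 30 FPS about 33.3 ms, and at 60 FPS about 16.7 ms. Past the 50 ms mark, camera rotation starts to feel noticeably laggy.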

For both Wireframe and Solid, we'd recommend at least a GTX 1060. Beyond that GPU, frame rates from faster cards leapfrog one another rather than scaling on the high end the way they do in LookDev. In our tests, the GTX 1050 Ti hit 30 FPS in Wireframe at 4K, while the GTX 1060, the very next step up, hit 52 FPS. The GTX 1660 Ti hit 69 FPS, which effectively matches every GPU faster than it, since the mode simply doesn't scale further.
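Using only the three Wireframe results quoted above, the step-by-step gains can be computed directly. This is a minimal sketch over those three data points, with no other results assumed. It shows that the jump from the GTX 1050 Ti to the GTX 1060 (about +73%) is much larger than the jump from the GTX 1060 to the GTX 1660 Ti (about +33%), which is consistent with treating the GTX 1060 as the sensible minimum.

```python
# 4K Wireframe results quoted in the paragraph above, in FPS.
WIREFRAME_4K_FPS = [
    ("GeForce GTX 1050 Ti", 30),
    ("GeForce GTX 1060", 52),
    ("GeForce GTX 1660 Ti", 69),
]


def step_gains(results):
    """Yield (lower card, higher card, percent gain) for each consecutive pair."""
    for (low_name, low_fps), (high_name, high_fps) in zip(results, results[1:]):
        yield low_name, high_name, (high_fps / low_fps - 1.0) * 100.0


if __name__ == "__main__":
    for low, high, gain in step_gains(WIREFRAME_4K_FPS):
        print(f"{low} -> {high}: +{gain:.0f}%")
```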
