Blender 2.80 Viewport & Rendering Performance

With Blender 2.80 now launched, we’re taking a fresh look at performance across the latest hardware, including AMD’s latest Ryzen 3000-series CPUs and Navi GPUs, as well as NVIDIA SUPER cards. That includes both CPU and GPU testing with the Cycles renderer, GPU testing with Eevee, and viewport performance with LookDev.

Page 1 – Blender 2.80 CPU, GPU & Hybrid Rendering Performance

Get the latest GPU rendering benchmark results in our more up-to-date Blender 3.6 performance article.

Since Blender 2.80 came out of beta a couple of weeks ago, we’ve been wrapping up performance testing with the help of a couple dozen CPUs and GPUs. As with our look at beta performance a few months ago, we want to find out which hardware gets the job done the quickest, and also figure out which hardware offers the best value for the dollar overall.

Blender 2.80 might be a minor version bump over 2.79, but the reality is that this is such a massive update, it could have been called 3.0. From a performance perspective, what we care about for our testing is that the Cycles renderer has been given lots of polish, and the new GPU-focused Eevee renderer makes its debut. We will be testing both for our render tests here, in CPU-only, GPU-only, and hybrid tests.
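For readers who want to time renders on their own machine, here is a minimal sketch. It assumes a `blender` binary on the PATH and uses Blender's background-render command-line flags (`-b`, `-E`, `-f`). The helper names and the scene path are hypothetical, and this is not necessarily how the numbers in this article were gathered.

```python
import subprocess
import time


def build_render_command(blend_file, engine="CYCLES", frame=1, blender_bin="blender"):
    """Build a background-render command line for a single frame.

    -b runs Blender without its UI, -E picks the render engine, and
    -f renders the given frame. Argument order matters: the .blend
    file has to come before -E and -f.
    """
    return [blender_bin, "-b", blend_file, "-E", engine, "-f", str(frame)]


def time_render(blend_file, engine="CYCLES", frame=1):
    """Return wall-clock seconds for one render, including scene load time."""
    command = build_render_command(blend_file, engine, frame)
    start = time.perf_counter()
    subprocess.run(command, check=True, stdout=subprocess.DEVNULL)
    return time.perf_counter() - start
```

Calling `time_render("classroom.blend")` (a placeholder path) would time one Cycles frame, and passing `engine="BLENDER_EEVEE"` would switch renderers. The compute device is not chosen here; in this sketch it is whatever is saved in the scene and user preferences.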

Rendering is just part of the design equation, though. The vast majority of your time spent in Blender will involve staring at the viewport, the big box where all of the visual feedback takes place. The better the performance, the smoother models and scenes will spin. While Solid and Wireframe modes won’t prove too much of a problem for anyone, Blender 2.80’s new LookDev shading mode will make GPUs work hard. We’re taking a look at this performance on the next page.

Here’s the full list of hardware being tested for this article:

| CPUs Tested in Blender 2.80 | Cores | Clock | Price |
| --- | --- | --- | --- |
| AMD Ryzen Threadripper 2990WX | 32 | 3.0 GHz | $1,799 |
| AMD Ryzen Threadripper 2970WX | 24 | 3.0 GHz | $1,299 |
| AMD Ryzen Threadripper 2950X | 16 | 3.5 GHz | $899 |
| AMD Ryzen Threadripper 2920X | 12 | 3.5 GHz | $649 |
| AMD Ryzen 9 3900X | 12 | 3.8 GHz | $499 |
| AMD Ryzen 7 3700X | 8 | 3.6 GHz | $329 |
| AMD Ryzen 7 2700X | 8 | 3.7 GHz | $329 |
| AMD Ryzen 5 3600X | 6 | 3.8 GHz | $249 |
| AMD Ryzen 5 2600X | 6 | 3.6 GHz | $219 |
| AMD Ryzen 5 3400G | 4 | 3.7 GHz | $149 |
| Intel Core i9-9980XE | 18 | 3.0 GHz | $1,799 |
| Intel Core i9-7900X | 10 | 3.3 GHz | $999 |
| Intel Core i9-9900K | 8 | 3.6 GHz | $499 |

| GPUs Tested in Blender 2.80 | Memory | Price |
| --- | --- | --- |
| AMD Radeon VII | 16GB | $699 |
| AMD Radeon RX 5700 XT | 8GB | $399 |
| AMD Radeon RX 5700 | 8GB | $349 |
| AMD Radeon RX Vega 64 | 8GB | EOL |
| AMD Radeon RX 590 | 8GB | $279 |
| AMD Radeon Pro WX 8200 | 8GB | $999 |
| NVIDIA TITAN RTX | 24GB | $2,499 |
| NVIDIA TITAN Xp | 12GB | $1,200 |
| NVIDIA GeForce RTX 2080 Ti | 11GB | $1,199 |
| NVIDIA GeForce RTX 2080 SUPER | 8GB | $699 |
| NVIDIA GeForce RTX 2070 SUPER | 8GB | $499 |
| NVIDIA GeForce RTX 2060 SUPER | 8GB | $399 |
| NVIDIA GeForce RTX 2060 | 6GB | $349 |
| NVIDIA GeForce GTX 1080 Ti | 11GB | EOL |
| NVIDIA GeForce GTX 1660 Ti | 6GB | $279 |
| NVIDIA Quadro RTX 4000 | 8GB | $899 |

| Driver | Version |
| --- | --- |
| AMD Radeon | Adrenalin 19.7.5 |
| AMD Radeon Pro | Enterprise 19.Q2.1 |
| NVIDIA GeForce & TITAN | GeForce 431.56 |
| NVIDIA Quadro | Quadro 431.02 |

All GPU-specific testing was conducted on our Intel Core i9-9980XE workstation. All product links in this table are affiliated, and support the website.

Most major Blender releases come with a new scene that helps show off what the new version can do, and with 2.80 being as massive a release as it is, it truly warranted one. This time, the related project is called Spring, and its assets are available to download.

We had thought about adopting the scene in our testing, but the first 30 seconds or so of the render process is pre-processing that doesn’t use the CPU or GPU to any significant degree (it just bloats the end result time). Our current projects will still portray accurate scaling with either Cycles or Eevee, but it would have been nice to adopt the latest official project.

When we tackled 2.80 beta performance months ago, we used many more GPUs and CPUs than we have here. We’ve trimmed the list this time because many of those models ended up being noise, and weren’t very helpful. This is especially true when workstation and gaming cards are counterparts that overlap in performance (but not always in feature sets). For CPUs, we wanted to get the Intel Core i7-8700K in, but our Intel Z390 motherboard died before we could get to it.

If, after reading, you are still not sure about what hardware you should go with, please feel free to leave a comment. In time, we hope to add an animation-type test to our Blender testing, as well as NVIDIA RTX-related tests. RTX features were announced a couple of weeks ago at SIGGRAPH, but are not currently included in the stable build. We’ll explore testing that soon, but for now, it’s not relevant to the current Blender.

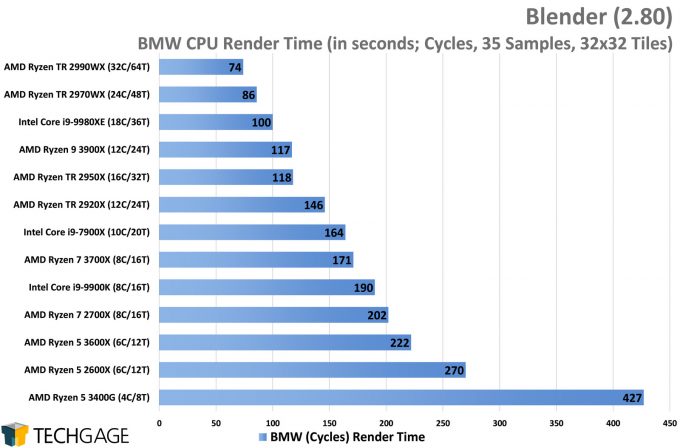

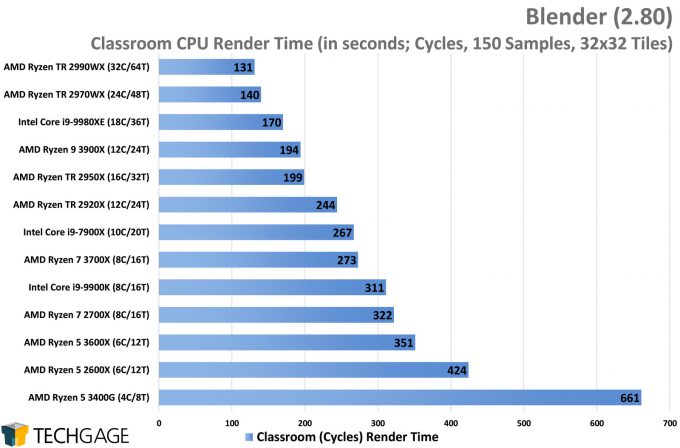

CPU Rendering

At least with our chosen projects, we’ve found that faster GPUs will prove more beneficial than faster CPUs, but having both be fast is going to produce even better rewards. If the only thing you ever do on your PC is work in Blender, you may not need to splurge on a high-end CPU; otherwise, you’ll want to take other workloads into consideration when choosing a new CPU.

What’s immediately clear from these results is that you don’t want to go too low-end when you’re doing creative work. On the flip side, it’s nice to see that Blender scales as well as it does across the entire stack. We’ve seen Threadripper give us the odd scaling issue in certain applications before (not as common today), but it scales Cycles beautifully. Just how beautifully?

At AMD’s EPYC Horizon event held in San Francisco last week, we spotted a Blender demo using 256 threads on an AMD server to render frames from the Spring project. It’s good to know that if you use Cycles, it’s going to take great advantage of your CPU.

Just as we wrap-up performance testing @blender_org 2.80 on up to 64-thread CPUs, an @AMDServer EPYC Rome demo using 256 threads (w/Cycles) comes along to tease us! It’s great that a 100% open-source and free 3D design suite has no problem using every single core you give it. pic.twitter.com/79o1qOJR2Z

— Techgage (@Techgage) August 7, 2019

We’re going to see later that a smaller CPU like the quad-core 3400G can allow the GPU to do good work, but ultimately, we’d never in our right minds recommend going with such a chip for creator work. We loosely consider eight cores to be the bare minimum nowadays, because workloads keep growing, and anyone who can’t take advantage of eight or more cores today will likely find that changing in the future. Even a bump from the quad-core to a six-core makes a huge difference.

Buuuut, as mentioned before, we’ve found GPUs to make a bigger performance impact than CPUs, when rendering the same exact scene using the same settings (save for tile size changes). So let’s jump into a look at that testing:

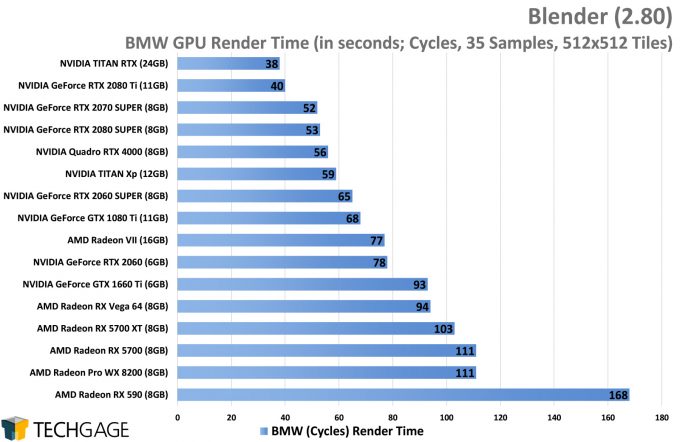

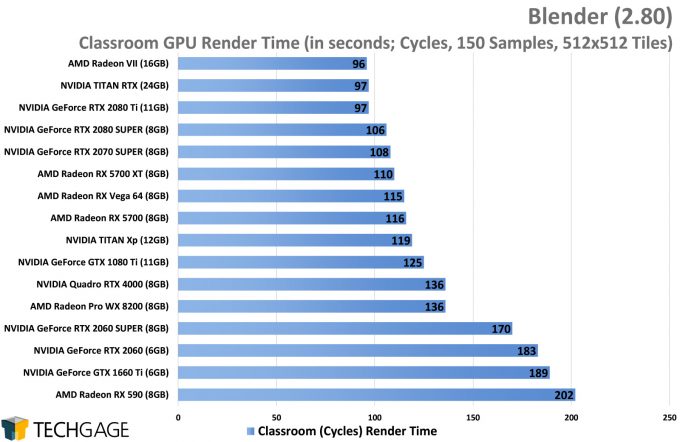

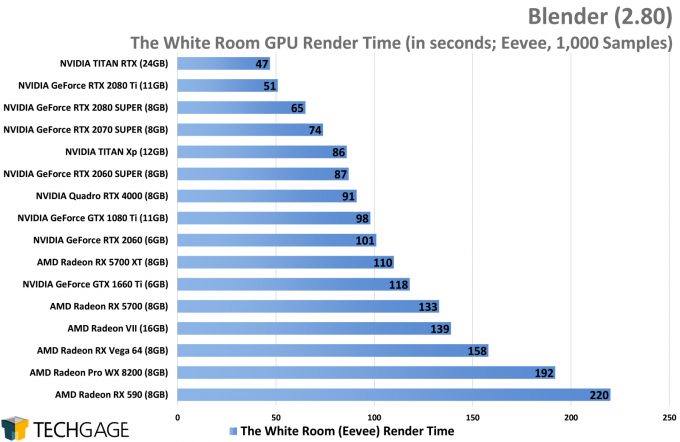

GPU Rendering

Let’s kick things off here with a focus on Cycles, using the same BMW and Classroom renders as in the CPU testing. While the end result might differ slightly due to subtle differences in render paths, we can see that a $349 RTX 2060 renders the BMW scene in the same amount of time as the $1,799 32-core Threadripper 2990WX. You might be able to see what we mean by the GPU being a bit more important than the CPU for this kind of rendering.

We need to be clear, though; we can’t say that this performance is going to be representative of every possible Blender scenario. It could be that in some cases, the CPU ends up mattering a lot more. But in our tests, and with these official Blender projects, we see the GPU as being more important. That said, thanks to the fact that Cycles can render to multiple devices at once, the CPU can still provide valuable work, as we’ll see in a few moments.

Overall, the GPU scaling isn’t as interesting as the CPU scaling, as there are more points where two models end up syncing up. The 2080S performing 1 second worse than the 2070S in the BMW scene is a byproduct of benchmark variance, and highlights that it doesn’t always pay to splurge extra for beefier hardware. In the Classroom scene, the 2080S manages to be 2 seconds faster than the 2070S, but is that worth the extra $200? Both have the same 8GB framebuffer, so it’d be hard to justify the bigger model if Blender is your bag.
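To put that trade-off in numbers, here is a rough sketch using only the list prices and the time differences quoted above. The function name is hypothetical, and a per-render figure like this ignores how many renders you actually run.

```python
def dollars_per_second_saved(price_delta, seconds_saved):
    """Extra dollars paid for each second shaved off a single render.

    Returns None when the pricier card is not actually faster.
    """
    if seconds_saved <= 0:
        return None
    return price_delta / seconds_saved


price_delta = 699 - 499  # RTX 2080 SUPER vs. RTX 2070 SUPER list prices

# Classroom: the 2080S was 2 seconds faster.
print(dollars_per_second_saved(price_delta, 2))   # 100.0
# BMW: the 2080S was 1 second slower, so there is nothing to pay for.
print(dollars_per_second_saved(price_delta, -1))  # None
```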

That is unless Eevee happens to grab you, because it shows slightly more interesting scaling:

Whereas the 2080S and 2070S sat close to each other in the Cycles test, the faster card pulls ahead here as expected, with both the 2080 Ti and TITAN RTX running far ahead of that. Throughout all of these results, it’s clear that NVIDIA’s latest Turing architecture brings upgrades that end up benefiting Blender, as its overall performance is hard to beat. Oddly, the Radeon VII performed amazingly in the Classroom test, but sat in the middle of the pack for the others.

Other models flip-flop their strengths like that also. Ultimately, we’d say the Classroom test is more representative of a proper project for Cycles, whereas BMW is more of an actual benchmark at this point. As for Eevee, that’s important for viewport performance, as well as animation – so if you are after the latter, you should pay particularly close attention to that performance.

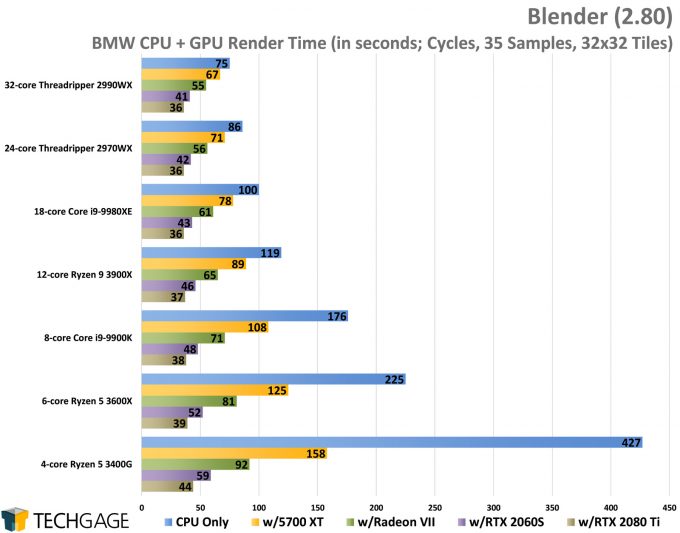

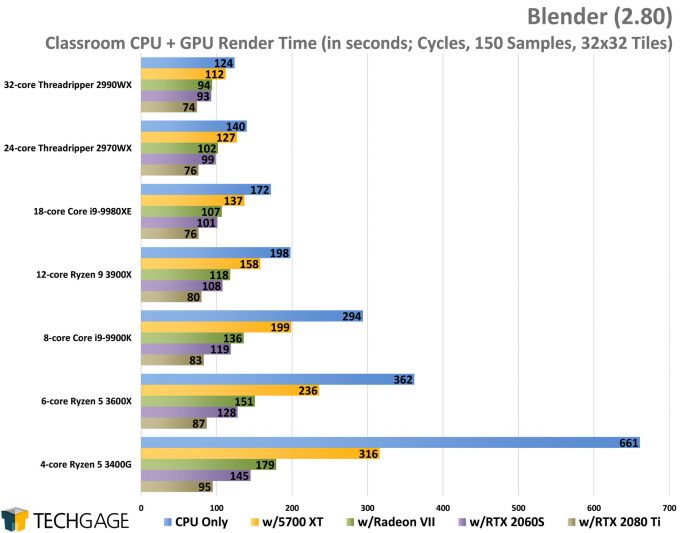

Heterogeneous Rendering

At the moment, Eevee’s rendering is done on the GPU, but Cycles is able to use both the CPU and GPU without any issues, whether you’re using CUDA or OpenCL. And, when you render that way, you can really see some huge speed-ups. For this testing, we paired seven CPUs with four GPUs each, to see if we could find a sweet-spot, or at worst, just see how things scale.

It’s immediately clear that a modest GPU will outperform even the biggest CPU in this chart, but when you combine their efforts, great gains can be seen. No one in their right mind is going to pair a 3400G with an RTX 2080 Ti, but it’s nice to see that the smaller CPU won’t be holding the GPU back.

The 3600X + RTX 2060S pairing, at a combined price of roughly $650, beats out the 3900X + Radeon VII, which has a combined price of roughly $1,200. Even with Blender, it pays to “know your workload” and hardware.
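Those combined prices come straight from the list prices in the hardware table. A quick sketch of the arithmetic follows; the dictionary and function names are illustrative only.

```python
# List prices in US dollars, taken from the hardware table in this article.
CPU_PRICES = {"Ryzen 5 3600X": 249, "Ryzen 9 3900X": 499}
GPU_PRICES = {"RTX 2060 SUPER": 399, "Radeon VII": 699}


def combo_price(cpu, gpu):
    """Combined list price of one CPU + GPU pairing."""
    return CPU_PRICES[cpu] + GPU_PRICES[gpu]


budget = combo_price("Ryzen 5 3600X", "RTX 2060 SUPER")
high_end = combo_price("Ryzen 9 3900X", "Radeon VII")
print(budget, high_end, high_end - budget)  # 648 1198 550
```

The cheaper pairing comes in about $550 lower while still finishing the hybrid render sooner in this testing.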

That all said, if money isn’t much of a concern to you, your top-of-the-line rig will still deliver notable advantages, but less so when your GPU is extremely fast. In this testing, the RTX 2080 Ti delivered the same hybrid result on the top three CPUs. Among all of these results, the 2060S ends up coming out looking like a bit of a champion.

It’s worth pointing out, though, that as scenes become more complex and require more memory to render, the focus will shift away from GPUs and back to CPUs. Once a scene exceeds the GPU’s framebuffer, Blender is forced to use the CPU, so keep that in mind as your project develops.

For those who are interested in how Blender does when NVIDIA’s OptiX render mode is enabled and uses RTX hardware for an extra boost in GPU render performance, you can check out our other article.
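As a rough way to reason about that limit, the sketch below checks which of a few tested cards could hold a scene of a given size. The memory sizes come from the hardware table. The 9GB scene size and the 1GB of headroom reserved for the display and driver are assumptions for illustration, not measured values.

```python
# Framebuffer sizes in GB, taken from the hardware table in this article.
GPU_VRAM_GB = {
    "Radeon VII": 16,
    "RTX 2080 Ti": 11,
    "RTX 2070 SUPER": 8,
    "GTX 1660 Ti": 6,
}


def cards_that_fit(scene_gb, headroom_gb=1.0):
    """Names of cards whose memory, minus assumed headroom, can hold the scene."""
    return sorted(
        name
        for name, vram_gb in GPU_VRAM_GB.items()
        if vram_gb - headroom_gb >= scene_gb
    )


# A hypothetical 9GB scene fits on only the two largest cards listed here.
print(cards_that_fit(9))  # ['RTX 2080 Ti', 'Radeon VII']
```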

With rendering performance all covered, we’ll tackle viewport performance next:
