
Blender 2.83: Best CPUs & GPUs For Rendering & Viewport

To greet the launch of the Blender 2.83 release, we loaded up our test rigs to generate fresh performance numbers. For rendering, we’re going to pore over CPU, GPU, hybrid CPU + GPU, and NVIDIA’s OptiX. For good measure, we’ll also look at viewport frame rates, and the impact of tile sizes with Cycles GPU rendering.

Page 1 – Rendering Performance (CPU, GPU, Hybrid & NVIDIA OptiX)

Get the latest GPU rendering benchmark results in our more up-to-date Blender 3.6 performance article.

With the launch of Blender 2.83, we’re reminded once again that time really flies. Before we know it, a year will have passed since 2.80 was released – and remember just how long that one took to land? While that release was a massive update over 2.79, the Blender Foundation hasn’t slowed down its development since. Each subsequent release has brought notable features, and 2.83 is no different.

Due to the amount of time it takes to generate in-depth performance data for Blender alone, across CPU and GPU, and the fact that performance doesn’t always change much from one release to another, we like to wait a few iterations before diving in. Well, with 2.80 having released last summer, we’re definitely due for a fresh set of results.

One of the most notable aspects of 2.83 is that it’s an LTS (long-term support) release. Many Blender users may eagerly update with each new release, but those for whom quick adoption is out of the question can relax, knowing that the LTS will be supported for up to two years. Any updates it receives will be limited to bug and security fixes. The next mainline release will be 2.90, due this August.

To get a full overview of the features added to Blender 2.83, we’d suggest looking through the release notes. The Blender Foundation does an amazing job of showing off new features, not to mention cluing you in to their existence in the first place.

Of note, 2.83 introduces OpenXR support and scene inspection for VR use – a major addition that will be built up with each new release. Grease Pencil has also been overhauled for 2D animation, while Eevee has gained a number of features, like high-quality normals and hair transparency. AI denoising has also gained another option, thanks to NVIDIA’s OptiX (RTX hardware required). This is all barely scratching the surface, so definitely check out the release notes.

For this article, we’re going to explore both rendering and viewport performance. On the rendering side, we’re evaluating CPU only, GPU only, CPU + GPU heterogeneous, and NVIDIA’s OptiX. For the viewport, we’ve swapped out our previous complex scene for a more modest one: a detailed game controller.

Here’s the full list of hardware being tested for this article:

CPUs & GPUs Tested in Blender 2.83

| CPU | Cores | Clock | Price |
| --- | --- | --- | --- |
| AMD Ryzen Threadripper 3990X | 64 | 2.9 GHz | $3,990 |
| AMD Ryzen Threadripper 3970X | 32 | 3.7 GHz | $1,999 |
| AMD Ryzen Threadripper 3960X | 24 | 3.8 GHz | $1,399 |
| AMD Ryzen 9 3950X | 16 | 3.5 GHz | $749 |
| AMD Ryzen 9 3900X | 12 | 3.8 GHz | $499 |
| AMD Ryzen 7 3700X | 8 | 3.6 GHz | $329 |
| AMD Ryzen 5 3600X | 6 | 3.8 GHz | $249 |
| AMD Ryzen 3 3300X | 4 | 3.8 GHz | $120 |
| AMD Ryzen 3 3100 | 4 | 3.6 GHz | $99 |
| Intel Core i9-10980XE | 18 | 3.0 GHz | $999 |
| Intel Core i9-10900K | 10 | 3.7 GHz | $499 |
| Intel Core i5-10600K | 6 | 3.8 GHz | $263 |
| Intel Core i9-9900K | 8 | 3.6 GHz | $499 |

| GPU | Memory | Price |
| --- | --- | --- |
| AMD Radeon VII | 16GB | EOL |
| AMD Radeon RX 5700 XT | 8GB | $399 |
| AMD Radeon RX 5600 XT | 6GB | $279 |
| AMD Radeon RX 5500 XT | 8GB | $199 |
| AMD Radeon RX Vega 64 | 8GB | EOL |
| AMD Radeon RX 590 | 8GB | $199 |
| NVIDIA TITAN RTX | 24GB | $2,499 |
| NVIDIA GeForce RTX 2080 Ti | 11GB | $1,199 |
| NVIDIA GeForce RTX 2080 SUPER | 8GB | $699 |
| NVIDIA GeForce RTX 2070 SUPER | 8GB | $499 |
| NVIDIA GeForce RTX 2060 SUPER | 8GB | $399 |
| NVIDIA GeForce RTX 2060 | 6GB | $349 |
| NVIDIA GeForce GTX 1660 Ti | 6GB | $279 |
| NVIDIA GeForce GTX 1660 SUPER | 6GB | $229 |
| NVIDIA GeForce GTX 1660 | 6GB | $219 |

- Motherboard chipset drivers were updated on each platform before testing.
- AMD CPUs: all chips were run with 4x 16GB Corsair Vengeance DDR4-3600.
- Intel CPUs: all chips were run with 4x 16GB Corsair Vengeance DDR4-3200.
- All GPU testing was done with our Intel Core i9-10980XE workstation.
- AMD Radeon driver: Adrenalin 20.5.1
- NVIDIA GeForce & TITAN driver: GeForce 446.14
- All product links in this table are affiliate links, and support the website.

We included some workstation graphics cards in our testing when we looked at Blender 2.80 last year, but we skipped them this time, since including them only adds noise – and not in the rendering sense. As an example, a Quadro RTX 6000 will perform little differently from a TITAN RTX, as both have the same core configuration. We’re not aware of any ProViz-specific Blender optimizations exclusive to AMD Radeon Pro or NVIDIA Quadro.

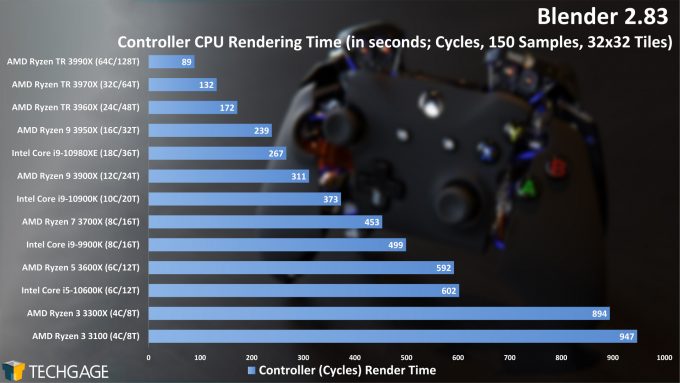

There are a couple of new projects ushered into this performance look, replacing some of the old ones. First up is a new Cycles test of a game controller, made by ftobler, that we found on Reddit last month. This project is open source, and would be a great way for new users to poke around and understand how an animated project comes together. The second is Mr. Elephant, a project designed by Glenn Melenhorst to accompany a children’s book he wrote, and updated for Eevee.

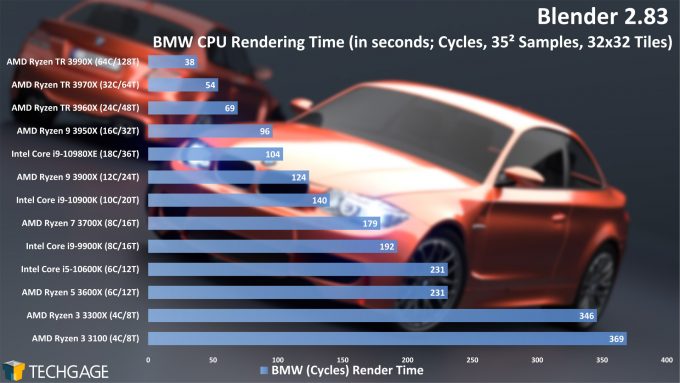

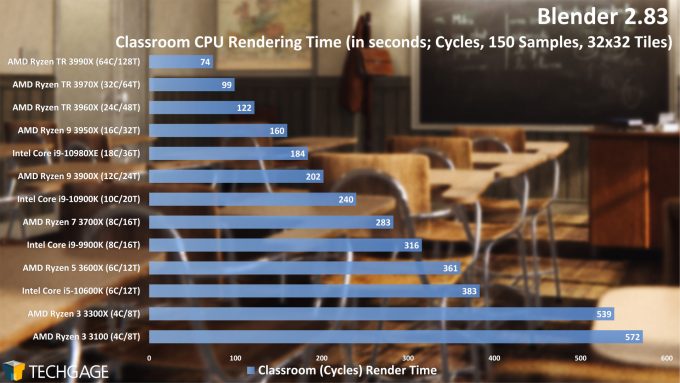

CPU Rendering

As we’ll see in some of the performance results ahead, rendering with only a CPU isn’t going to be the best choice for most people. If you happen to have a beast of a chip like AMD’s Ryzen Threadripper 3990X, with its 128 threads, then you’ll be able to topple a top-end graphics card like NVIDIA’s TITAN RTX. That’s without factoring in NVIDIA’s OptiX, which we’ll see numbers for soon.

For a typical Blender user, we wouldn’t necessarily recommend going with a high-end CPU unless you need one for other work. If you’re a money-is-no-object type of person, though, you’ll be happy to see some of the heterogeneous results coming up.

That being said, you don’t want to go too “low-end” when choosing a CPU, even if you primarily render to the GPU in Blender. There are a number of hard-to-test factors when it comes to CPU performance, such as physics simulations, which for the most part are single-threaded (although this is being addressed in the 2.90 release). We’d suggest a bare minimum of eight cores nowadays, to give yourself some breathing room. Blender probably isn’t the only thing you do on your workstation, so any CPU choice should also hinge on those other workloads. You can check out a full CPU performance look at many of these chips in our Threadripper 3990X evaluation.
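To put the eight-core floor in concrete terms, the CPUs from our test list can be filtered and sorted by price. This is a minimal Python sketch: the core counts and prices are copied from the hardware table, and `cpus_meeting_core_floor` is a hypothetical helper of our own, not part of any tool.

```python
# (model, cores, price in USD) as listed in the test-hardware table.
TESTED_CPUS = [
    ("AMD Ryzen Threadripper 3990X", 64, 3990),
    ("AMD Ryzen Threadripper 3970X", 32, 1999),
    ("AMD Ryzen Threadripper 3960X", 24, 1399),
    ("AMD Ryzen 9 3950X", 16, 749),
    ("AMD Ryzen 9 3900X", 12, 499),
    ("AMD Ryzen 7 3700X", 8, 329),
    ("AMD Ryzen 5 3600X", 6, 249),
    ("AMD Ryzen 3 3300X", 4, 120),
    ("AMD Ryzen 3 3100", 4, 99),
    ("Intel Core i9-10980XE", 18, 999),
    ("Intel Core i9-10900K", 10, 499),
    ("Intel Core i5-10600K", 6, 263),
    ("Intel Core i9-9900K", 8, 499),
]


def cpus_meeting_core_floor(cpus, min_cores=8):
    """Return (model, cores, price) for CPUs with at least min_cores cores.

    Results are sorted cheapest first; when two chips share a price,
    the one with more cores is listed first.
    """
    eligible = [cpu for cpu in cpus if cpu[1] >= min_cores]
    return sorted(eligible, key=lambda cpu: (cpu[2], -cpu[1]))


for model, cores, price in cpus_meeting_core_floor(TESTED_CPUS):
    print(f"{model}: {cores} cores, ${price:,}")
```

Core count is only one axis, of course; clock speed and your other workloads matter just as much, as noted above.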

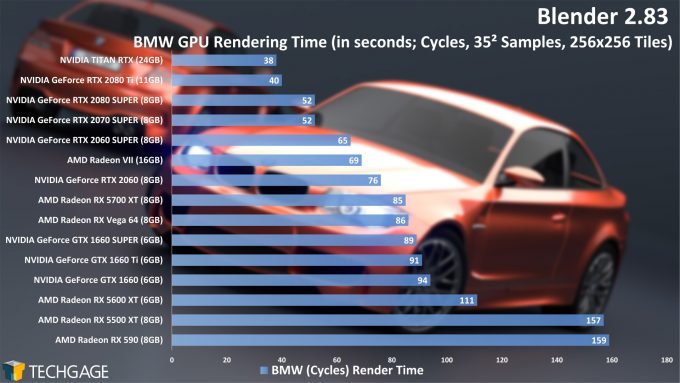

GPU (CUDA & OpenCL) Rendering

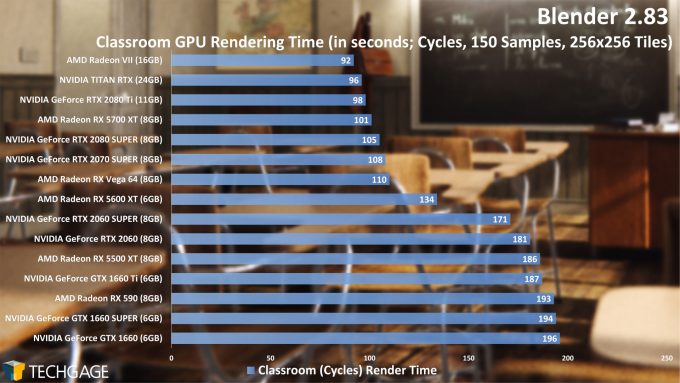

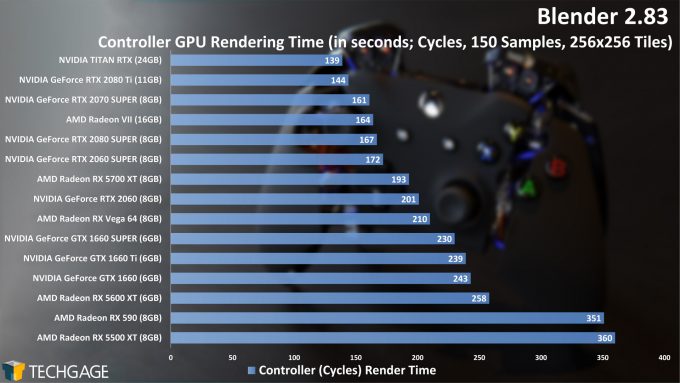

We’ve said it before, but it’s always interesting how differently GPUs (or CPUs) can scale from one project to the next. The BMW project is really old by today’s standards, but it’s such a simple, de facto standard test that we continue to use it to see how hardware behaves with a modest workload. There, NVIDIA seems to dominate, but AMD strikes back hard in the more complex Classroom scene. The Radeon VII might not be readily available, but it handles that Classroom project really well. It’s not the first time we’ve seen that scaling.

As for the Controller scene, it behaves somewhat like the BMW project, with the TITAN RTX comfortably sitting on top. There are some odd arrangements, though, such as the 2070 SUPER placing ahead of the 2080 SUPER, and that’s something we just have to shrug off with a c’est la vie. This isn’t the first instance where we’ve seen those two GPUs swap their expected order, but based on the Classroom and Mr. Elephant tests, odd scaling like that is rare in Blender.

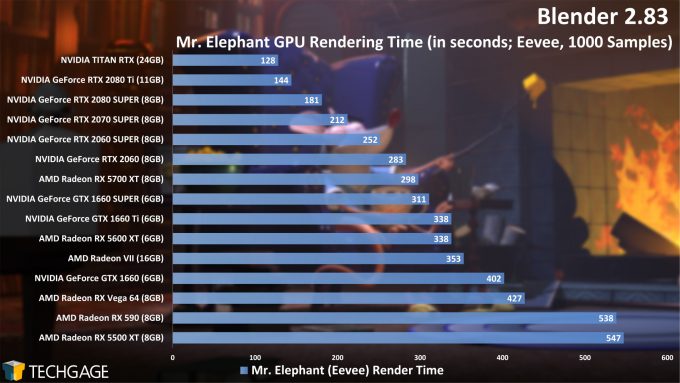

Speaking of Mr. Elephant, this Eevee brute-force test (1,000 samples is overkill) shows that NVIDIA has strengths here, which means your Eevee animations will finish rendering sooner on GeForce. Eevee can’t take advantage of NVIDIA’s OptiX (yet), but the Turing architecture clearly handles these types of workloads well to begin with, with even the lowbie 1660 SUPER placing just behind AMD’s 5700 XT.

It’s worth pointing out that Eevee is a rasterization engine, like those used by games, rather than a ray tracer, and it’s built around OpenGL 3.3. This makes the engine a bit out of date compared to OpenGL 4.5 and Vulkan, so performance is not ideal. There are plans to transition over to Vulkan, which could also open the door to ray tracing and additional performance improvements, but this is unfortunately not coming any time soon. The choice of OpenGL 3.3 is largely down to compatibility, macOS included, since Apple stopped advancing its OpenGL support in favor of its Metal API. AMD’s Radeon ProRender, a plugin and alternative render engine for Blender, will at least allow GPU rendering for Mac users because of its support for the Metal API.

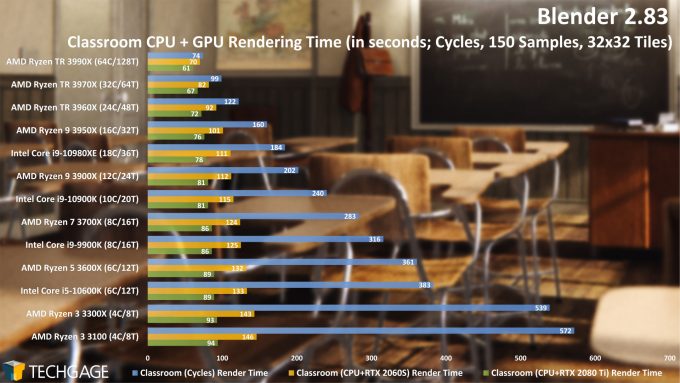

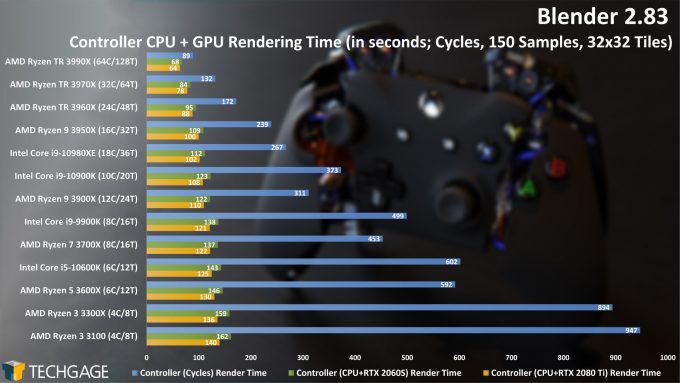

Heterogeneous Rendering

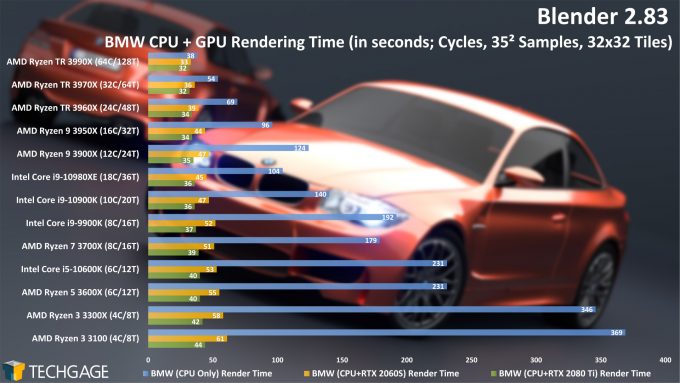

In previous Blender performance looks, we’ve included a hybrid rendering test with only one GPU, but we’ve always wondered how the scaling would look with a more modest option in the mix. Thus, we decided to test both the RTX 2060 SUPER and RTX 2080 Ti in these modes, to see if we could find the perfect blend of CPU and GPU.

It’s immediately clear that if you can render to both the CPU and GPU, you should do it – unless, maybe, you have an NVIDIA OptiX GPU that’s faster still. What we can ultimately glean here is that there’s little point in opting for a huge CPU if you have a competent GPU – but it’s good to see that you will in fact still see gains if you do decide to get a big CPU.
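The diminishing return from a bigger CPU follows from a simple, idealized throughput model: if each device renders at a constant rate (one frame per its solo render time) and the work is split perfectly, the combined time is 1 / (1/t_gpu + 1/t_cpu). The Python sketch below assumes exactly that, ignoring scheduling overhead, so real hybrid renders will typically land somewhat above it. The solo times used are hypothetical, not our measured results.

```python
def combined_render_time(*device_times):
    """Idealized time to finish a frame when devices share the work.

    Each device is assumed to render at a constant rate (1 / its solo
    time) with a perfect work split and no scheduling overhead, so this
    is a best-case estimate rather than a prediction of real results.
    """
    if not device_times or any(t <= 0 for t in device_times):
        raise ValueError("need at least one positive render time")
    total_rate = sum(1.0 / t for t in device_times)
    return 1.0 / total_rate


# Hypothetical solo render times in seconds (not benchmark data).
gpu_only = 60.0
modest_cpu = 300.0
big_cpu = 150.0  # a CPU twice as fast as modest_cpu

print(round(combined_render_time(gpu_only, modest_cpu), 1))  # 50.0
print(round(combined_render_time(gpu_only, big_cpu), 1))     # 42.9
```

In this toy case, doubling CPU throughput only trims the hybrid time by about seven seconds, because the GPU was already doing most of the work; the gain is real, just small.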

It’s neat to see even the low-end four-cores doing well once a GPU is added in, but as mentioned earlier, we wouldn’t ever recommend such parts for a serious workstation. You want some breathing room. Even if your CPU choice doesn’t impact Blender all that much, you need to consider all of your other workloads, not to mention general multi-tasking performance. You want a higher-frequency CPU for a snappier OS, and more cores for multi-threaded workloads, like video encoding, or even compression.

Radeon can do heterogeneous rendering as well, but we’ve never had luck with it in Cycles. In previous testing, we encountered a black-screen crash, and sadly, that didn’t change with our latest attempt. It’s worth noting that AMD’s Radeon ProRender supports heterogeneous rendering, but we do not have a project to test it with.
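For those who would rather script a hybrid setup than toggle devices by hand in Preferences, here is a minimal Python sketch. It assumes Blender 2.83’s Cycles add-on preferences object exposes `compute_device_type`, `get_devices()`, and a `devices` collection whose entries carry `type`, `name`, and `use`; verify these against the Python API docs for your Blender version. The helper `configure_cycles_devices` is our own hypothetical name, and whether a CPU entry appears in the device list depends on the backend and Blender version.

```python
def configure_cycles_devices(cycles_prefs, compute_type="CUDA", use_cpu=True):
    """Pick a Cycles compute backend and toggle devices for it.

    Assumption: cycles_prefs is the Cycles add-on preferences object,
    i.e. bpy.context.preferences.addons["cycles"].preferences, with
    compute_device_type, get_devices(), and a devices collection whose
    entries have .type, .name and .use attributes.
    Returns the names of the devices left enabled.
    """
    # Backend names as used by Cycles, e.g. "CUDA", "OPTIX" or "OPENCL".
    cycles_prefs.compute_device_type = compute_type
    # Refresh the device list so newly detected hardware shows up.
    cycles_prefs.get_devices()

    enabled = []
    for device in cycles_prefs.devices:
        if device.type == "CPU":
            # Enabling the CPU entry alongside the GPUs gives hybrid rendering.
            device.use = use_cpu
        else:
            # Only GPUs belonging to the selected backend are used.
            device.use = device.type == compute_type
        if device.use:
            enabled.append(device.name)
    return enabled
```

Inside Blender, calling `configure_cycles_devices(bpy.context.preferences.addons["cycles"].preferences, "CUDA", use_cpu=True)` and then setting `bpy.context.scene.cycles.device = "GPU"` should render with the CPU and GPU together; `use_cpu=False` reverts to GPU-only.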

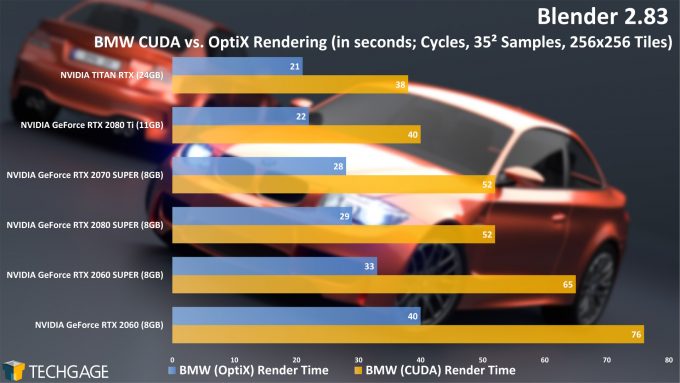

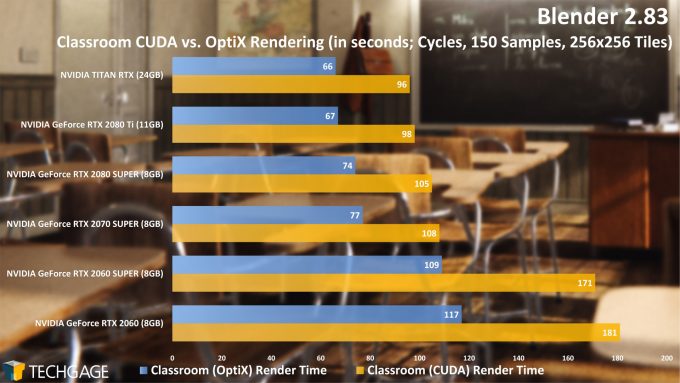

NVIDIA OptiX Rendering

When paired with NVIDIA’s RTX graphics cards and their dedicated ray tracing (RT) cores, the OptiX backend can dramatically improve Cycles rendering performance, as highlighted by the charts above. On its own, 38 seconds for the BMW project with the TITAN RTX is impressive, but enabling OptiX manages to shave that down further to 21 seconds. A smaller gain is seen in the Classroom test, yet it’s still a major one.
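To put the BMW numbers quoted above into perspective, the drop from 38 to 21 seconds can be expressed both as a speedup factor and as the share of render time saved. This is just arithmetic on those two figures; `speedup` is a hypothetical helper name.

```python
def speedup(before_s, after_s):
    """Return (speedup factor, percent of render time saved)."""
    factor = before_s / after_s
    saved_pct = (before_s - after_s) / before_s * 100
    return factor, saved_pct


# TITAN RTX, BMW project: 38 s without OptiX, 21 s with OptiX enabled.
factor, saved = speedup(38, 21)
print(f"{factor:.2f}x faster, {saved:.0f}% less time")  # 1.81x faster, 45% less time
```

In other words, OptiX makes that render roughly 1.8x faster, cutting its render time by a bit under half.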

It’s important to note that NVIDIA’s OptiX only works with Cycles, and while it could support Eevee in the future, our results above suggest that there might not be a ton to gain, as the Turing architecture already accelerates that workload quite a bit. But we’d love to be surprised.

At the moment, OptiX doesn’t work out of the box with every Cycles project you’ll come across. Interestingly, the Controller project we added to this suite won’t use OptiX correctly, as AO and bevel shaders are not yet supported (that will change). If you want to download official demo projects that work with OptiX, we’d suggest BMW, Classroom, or The Junk Shop.
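Since the Controller scene trips over OptiX because of its AO and bevel shaders, it can be worth checking a project before switching backends. The Python sketch below assumes Blender-style material objects (`name`, `use_nodes`, `node_tree.nodes`) and the node identifiers `ShaderNodeAmbientOcclusion`, `ShaderNodeBevel`, and `ShaderNodeGroup`; it walks each material’s node tree, including nested groups, and reports which of those two node types appear. `find_optix_blockers` is a hypothetical helper, and world and light node trees are not scanned.

```python
# Shader nodes that, per the text above, OptiX in Blender 2.83 doesn't support yet.
OPTIX_UNSUPPORTED = {"ShaderNodeAmbientOcclusion", "ShaderNodeBevel"}


def find_optix_blockers(materials):
    """Map material name -> sorted list of unsupported node types it uses.

    Assumes each material has .name, .use_nodes and .node_tree.nodes,
    each node has .bl_idname, and group nodes ("ShaderNodeGroup")
    expose their inner tree as .node_tree.
    """
    def scan(tree, seen):
        found = set()
        # Skip missing trees and groups already visited (avoids loops).
        if tree is None or id(tree) in seen:
            return found
        seen.add(id(tree))
        for node in tree.nodes:
            if node.bl_idname in OPTIX_UNSUPPORTED:
                found.add(node.bl_idname)
            elif node.bl_idname == "ShaderNodeGroup":
                found |= scan(getattr(node, "node_tree", None), seen)
        return found

    report = {}
    for mat in materials:
        if not mat.use_nodes:
            continue
        hits = scan(mat.node_tree, set())
        if hits:
            report[mat.name] = sorted(hits)
    return report
```

From Blender’s Python console, `find_optix_blockers(bpy.data.materials)` would list the offending materials. An empty result only means none of those two node types were found, not that OptiX is guaranteed to render the scene correctly.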

On the next page, we’ll explore tile size performance variations, as well as viewport frame rates and AI denoising.
