Mid-2021 GPU Rendering Performance: Arnold, Blender, KeyShot, LuxCoreRender, Octane, Radeon ProRender, Redshift & V-Ray

It’s been six months since we last took an in-depth look at GPU rendering performance, and with NVIDIA having just released two new GPUs, we felt now was a great time to get up to date. With AMD’s and NVIDIA’s current-gen stacks in hand, along with a few legends of the past, we’re going to investigate rendering performance in Blender, Octane, Redshift, V-Ray, and more.

Page 1 – Introduction; Blender, Radeon ProRender & LuxCoreRender Performance

It’s been quite a while since we last took a deep-dive look at GPU rendering performance, so with NVIDIA’s GeForce RTX 3080 Ti and 3070 Ti having just been released, now seems like a good time to get caught up. If you’re after gaming performance, we’ve already taken care of that, at both ultrawide and 4K resolutions.

This article includes rendering performance for eight renderers, three of which run on Radeon: Blender, Radeon ProRender (used within Blender), and LuxCoreRender. On the next page, we’ll tackle the CUDA/OptiX-only renderers: Arnold, KeyShot, Redshift, Octane, and V-Ray.

Professional GPUs such as NVIDIA’s Quadro (or the not-so-Quadro RTX A-series cards) and AMD’s Radeon Pro series are not included in these render-focused tests, since they perform similarly to their gaming counterparts in rendering. Pro cards stand apart in CAD modeling and viewport-heavy workloads, and a number of such tests can be found in another article.

We’re not aware of any plans for Arnold, KeyShot, or V-Ray to support Radeon, while Redshift and Octane currently support Radeon on Apple platforms only. Fortunately, both of those companies plan to support Radeon on Windows at some point, and when the trigger is pulled, it’s going to be a great day. It’s admittedly a little annoying to have to leave Radeon out of so many tests here, but in the words of Ray LaFleur, that’s just the way she goes.

For this article, we made a point of including robust generational performance information, so that you can see how your old GPU compares against the latest and greatest. In addition to the entire fleet of current-gen gaming GPUs, we’ve included the RTX 2080 Ti (Turing) and GTX 1080 Ti (Pascal) for NVIDIA, as well as the RX 5700 XT (RDNA), Radeon VII (Vega), and RX 590 (Polaris) for AMD. Each of those GPUs was the top-end part for its respective architecture (and generation).

Here’s AMD’s current-gen lineup:

AMD’s Radeon Creator & Gaming GPU Lineup

| GPU | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | Price |
|---|---|---|---|---|---|---|---|
| RX 6900 XT | 5,120 | 2,250 | 23 TFLOPS | 16GB GDDR6 | 512 GB/s | 300W | $999 |
| RX 6800 XT | 4,608 | 2,250 | 20.7 TFLOPS | 16GB GDDR6 | 512 GB/s | 300W | $649 |
| RX 6800 | 3,840 | 2,105 | 16.2 TFLOPS | 16GB GDDR6 | 512 GB/s | 250W | $579 |
| RX 6700 XT | 2,560 | 2,581 | 13.2 TFLOPS | 12GB GDDR6 | 384 GB/s | 230W | $479 |

Notes: Architecture: RX 6000 = RDNA2.
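The Peak FP32 column follows directly from the other spec columns: each shader core can complete one fused multiply-add (counted as two floating-point operations) per clock, so theoretical peak throughput is cores × boost clock × 2. A minimal Python sketch of that arithmetic, using the AMD figures transcribed from the table above (the dictionary and function names are illustrative, not from any tool):

```python
# (shader cores, boost clock in MHz), transcribed from the AMD lineup table
AMD_LINEUP = {
    "RX 6900 XT": (5120, 2250),
    "RX 6800 XT": (4608, 2250),
    "RX 6800": (3840, 2105),
    "RX 6700 XT": (2560, 2581),
}


def peak_fp32_tflops(cores: int, boost_mhz: int) -> float:
    """Theoretical peak FP32 throughput, counting one FMA per core per clock as two FLOPs."""
    flops = cores * boost_mhz * 1e6 * 2  # MHz -> Hz, then x2 for the FMA
    return flops / 1e12  # FLOPS -> TFLOPS


for name, (cores, mhz) in AMD_LINEUP.items():
    print(f"{name}: {peak_fp32_tflops(cores, mhz):.1f} TFLOPS")
```

Rounded to one decimal place, this reproduces the table’s 23, 20.7, 16.2, and 13.2 TFLOPS. Keep in mind this is a theoretical ceiling; it says nothing about RT core support or how well a given renderer uses the hardware.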

AMD doesn’t currently offer “low-end” parts for its RDNA2-based lineup, although due to current market conditions, the company hasn’t exactly suffered for it. The most recent release is the RX 6700 XT, starting at $479 SRP. As you’ll see in the table below, NVIDIA wins on the memory bandwidth front, but all of these current-gen Radeons offer more memory than the majority of NVIDIA’s current-gen GeForces.

And speaking of:

NVIDIA’s GeForce Creator & Gaming GPU Lineup

| GPU | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | SRP |
|---|---|---|---|---|---|---|---|
| RTX 3090 | 10,496 | 1,700 | 35.6 TFLOPS | 24GB GDDR6X | 936 GB/s | 350W | $1,499 |
| RTX 3080 Ti | 10,240 | 1,670 | 34.1 TFLOPS | 12GB GDDR6X | 912 GB/s | 350W | $1,199 |
| RTX 3080 | 8,704 | 1,710 | 29.7 TFLOPS | 10GB GDDR6X | 760 GB/s | 320W | $699 |
| RTX 3070 Ti | 6,144 | 1,770 | 21.7 TFLOPS | 8GB GDDR6X | 608 GB/s | 290W | $599 |
| RTX 3070 | 5,888 | 1,730 | 20.4 TFLOPS | 8GB GDDR6 | 448 GB/s | 220W | $499 |
| RTX 3060 Ti | 4,864 | 1,670 | 16.2 TFLOPS | 8GB GDDR6 | 448 GB/s | 200W | $399 |
| RTX 3060 | 3,584 | 1,780 | 12.7 TFLOPS | 12GB GDDR6 | 360 GB/s | 170W | $329 |

Notes: Architecture: RTX 3000 = Ampere.
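The memory and bandwidth comparison made just before this table can be checked against both lineups. The Python sketch below counts how many GeForces carry less memory than the smallest Radeon frame buffer, and compares each lineup’s median bandwidth. Values are transcribed from the two tables; the dictionary and function names are illustrative, and using the median as a summary is our own simplifying choice.

```python
import statistics

# (memory in GB, bandwidth in GB/s), transcribed from the two lineup tables
RADEON = {
    "RX 6900 XT": (16, 512),
    "RX 6800 XT": (16, 512),
    "RX 6800": (16, 512),
    "RX 6700 XT": (12, 384),
}
GEFORCE = {
    "RTX 3090": (24, 936),
    "RTX 3080 Ti": (12, 912),
    "RTX 3080": (10, 760),
    "RTX 3070 Ti": (8, 608),
    "RTX 3070": (8, 448),
    "RTX 3060 Ti": (8, 448),
    "RTX 3060": (12, 360),
}


def count_with_less_memory(threshold_gb: int, lineup: dict) -> int:
    """Count cards in `lineup` with strictly less memory than `threshold_gb`."""
    return sum(1 for memory_gb, _ in lineup.values() if memory_gb < threshold_gb)


smallest_radeon_gb = min(memory_gb for memory_gb, _ in RADEON.values())
fewer = count_with_less_memory(smallest_radeon_gb, GEFORCE)
print(f"{fewer} of {len(GEFORCE)} GeForces have less than {smallest_radeon_gb}GB")

radeon_median_bw = statistics.median([bw for _, bw in RADEON.values()])
geforce_median_bw = statistics.median([bw for _, bw in GEFORCE.values()])
print(f"Median bandwidth: Radeon {radeon_median_bw} GB/s, GeForce {geforce_median_bw} GB/s")
```

This reports 4 of 7 GeForces below 12GB, a majority (the comparison is strict, so the 12GB RTX 3080 Ti and RTX 3060 count as ties), and median bandwidths of 512 GB/s for Radeon versus 608 GB/s for GeForce. The median hides the low end, though: the RTX 3060’s 360 GB/s is below every Radeon listed.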

When it comes to “ultimate” creator cards, it’s hard to compete with NVIDIA’s GeForce RTX 3090. As we’ll see in multiple tests throughout this article, NVIDIA’s RT cores can make a big difference in rendering performance, and for that reason, AMD might actually be relieved that most of our tested renderers don’t support Radeon right now.

Because creator workloads generally thrive on lots of memory, 8GB should be considered the bare minimum: even if it isn’t limiting for you today, it probably will become so over the life of the card. That rationale might make the RTX 3060, with its 12GB frame buffer, look attractive, but the performance results will be the ultimate judge of that.

If you have a choice between a lower-end RTX 3000 series card and the RTX 2080 Ti with its 11GB frame buffer, you’re likely to benefit more from the latter, even though the Ampere generation boosts rendering performance further. Again, the many test results in this article will highlight this.

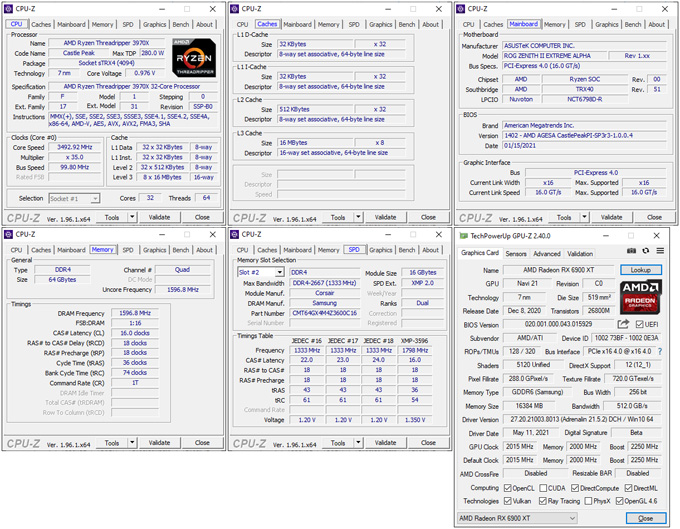

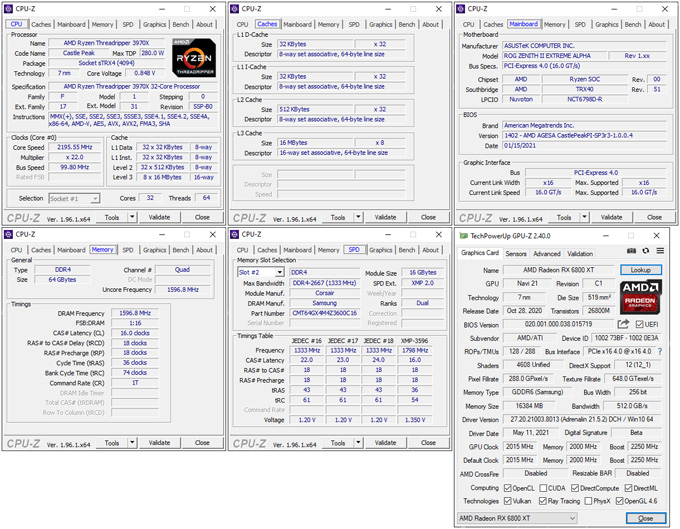

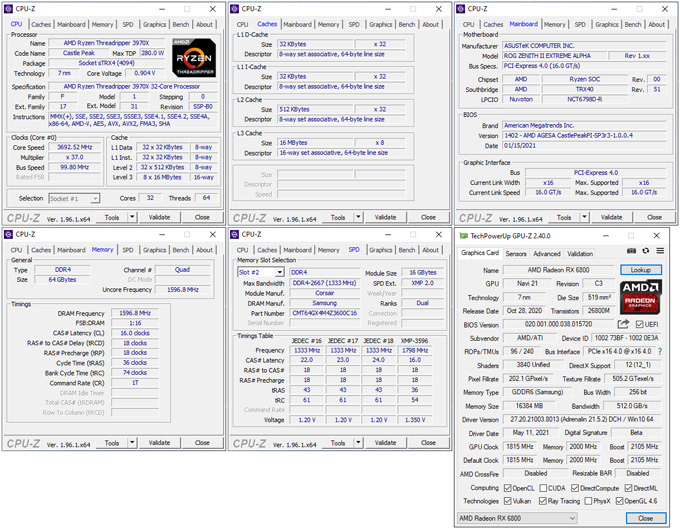

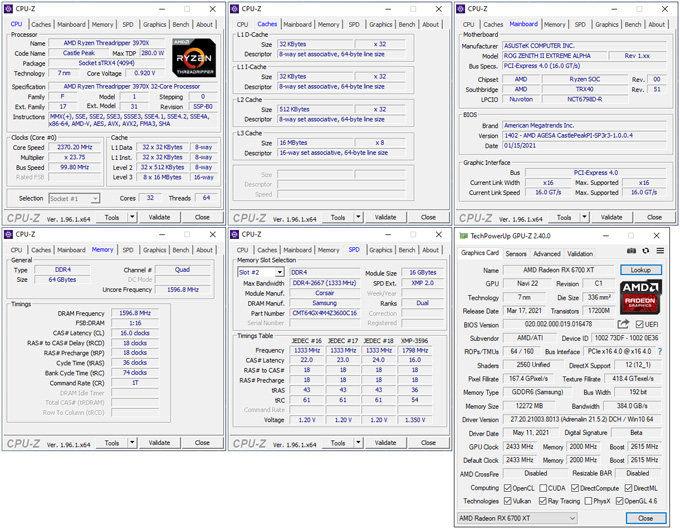

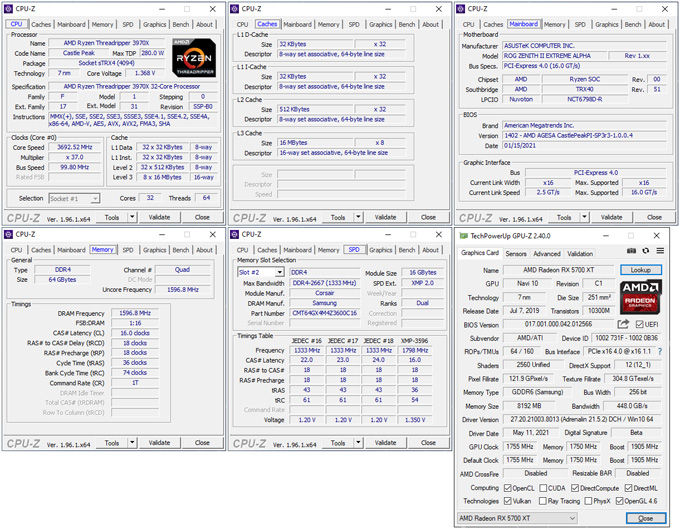

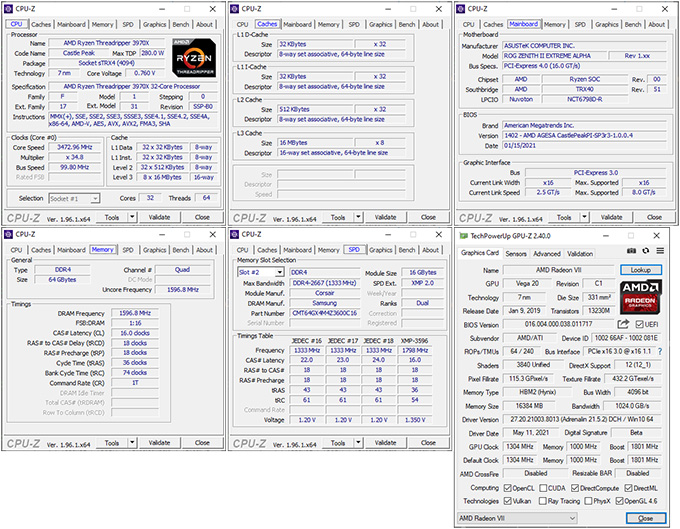

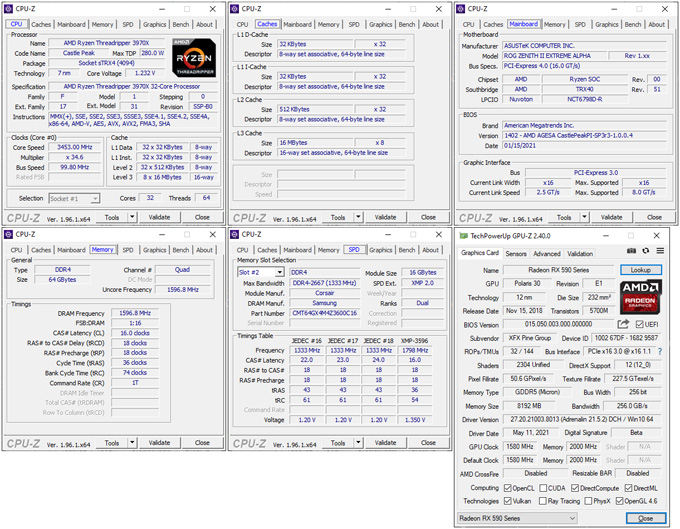

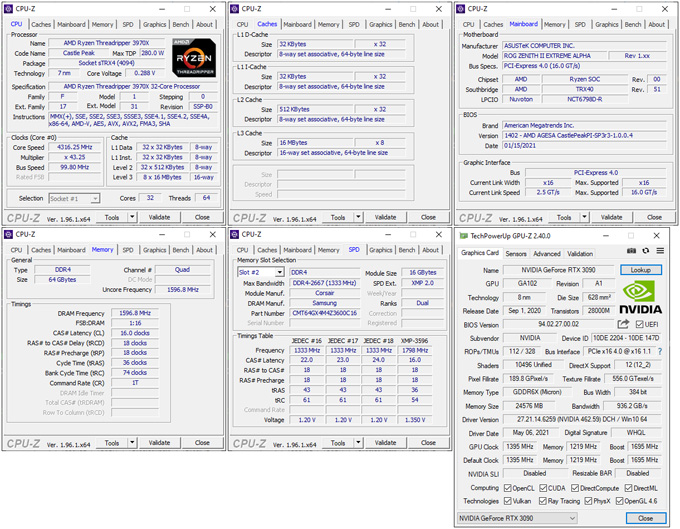

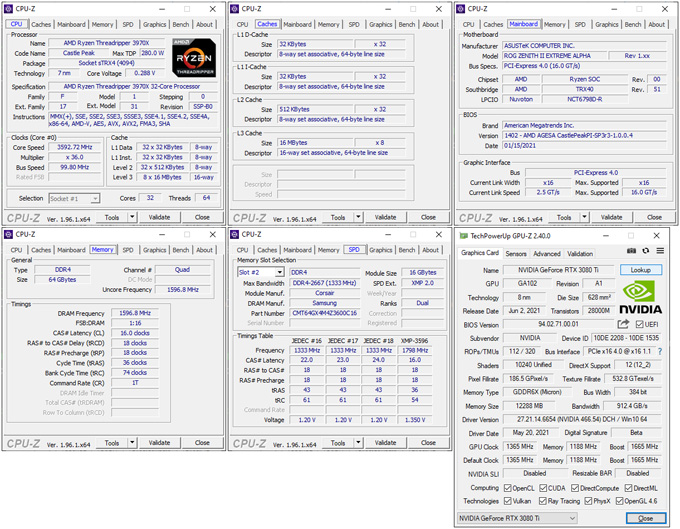

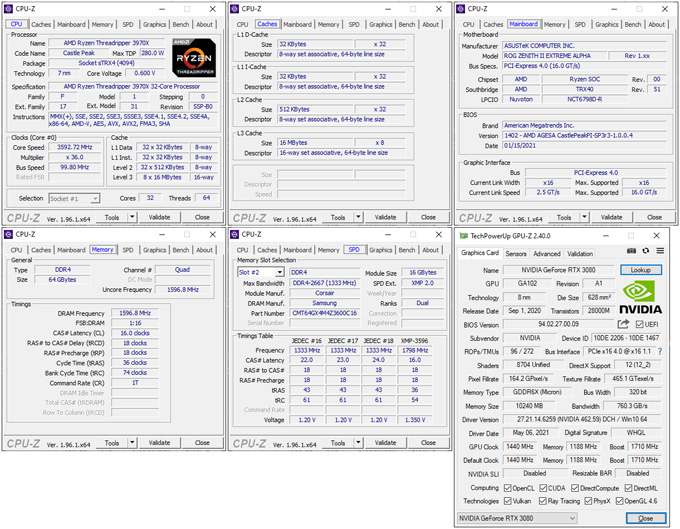

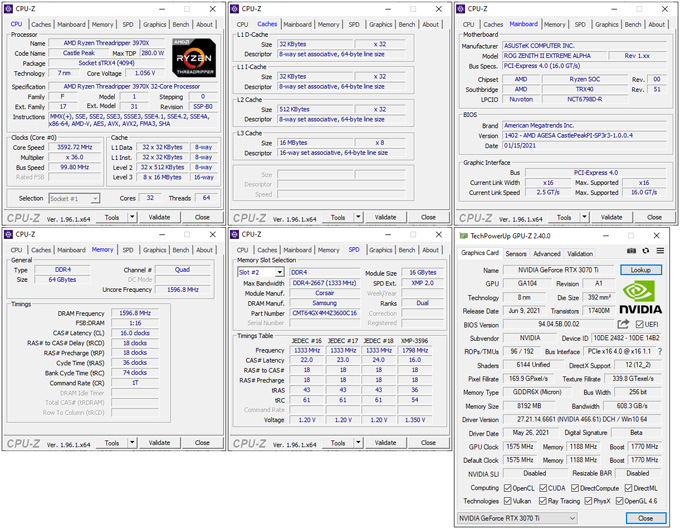

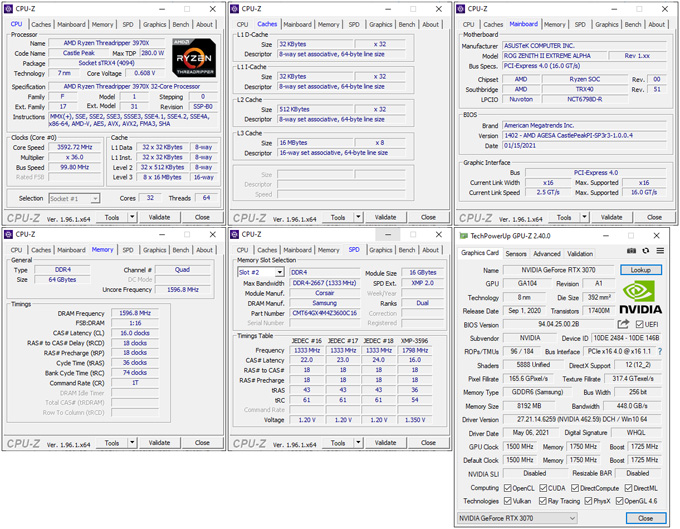

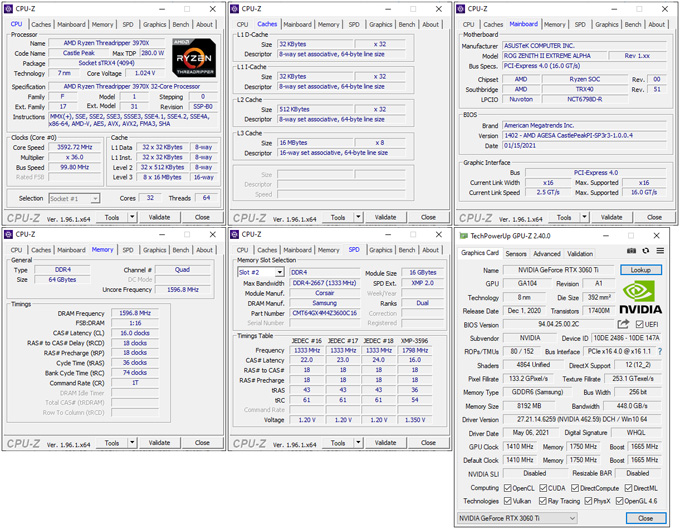

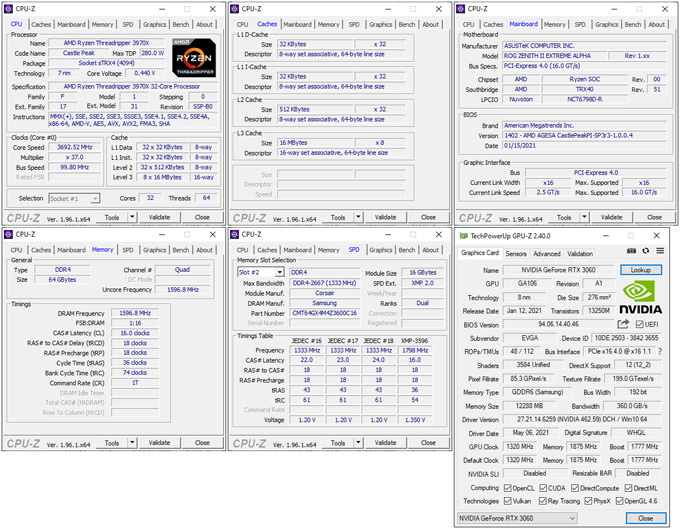

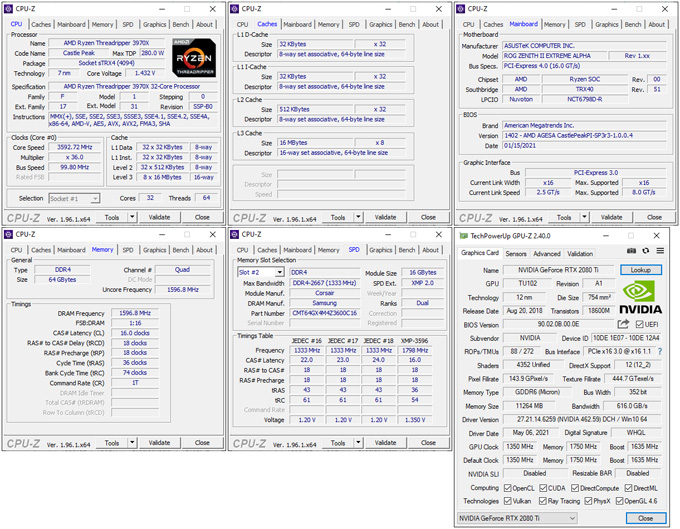

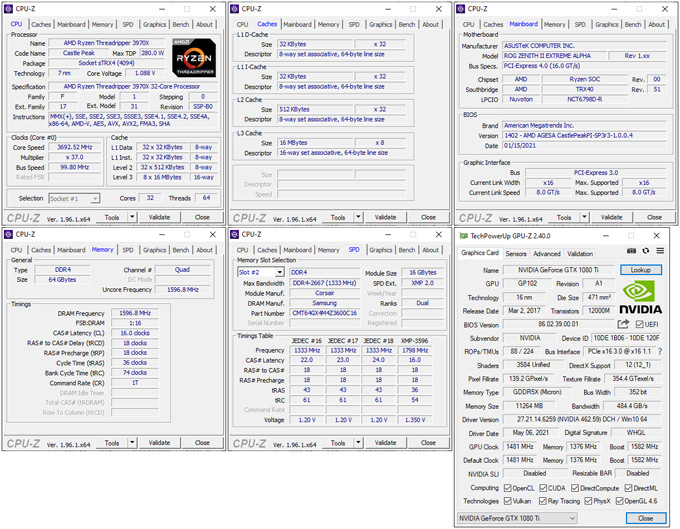

On that note, here’s an overview of the PC used in testing for this article:

Techgage Workstation Test System

| Component | Details |
|---|---|
| Processor | AMD Ryzen Threadripper 3970X (32-core; 3.7GHz) |
| Motherboard | ASUS Zenith II Extreme Alpha (EFI: 1402 01/15/2021) |
| Memory | Corsair Dominator (CMT64GX4M4Z4Z3600C16) 4x16GB; DDR4-3200 16-18-18 |
| AMD Graphics | AMD Radeon RX 6900 XT (16GB; Adrenalin 21.5.2)<br>AMD Radeon RX 6800 XT (16GB; Adrenalin 21.5.2)<br>AMD Radeon RX 6800 (16GB; Adrenalin 21.5.2)<br>AMD Radeon RX 6700 XT (12GB; Adrenalin 21.5.2)<br>AMD Radeon RX 5700 XT (8GB; Adrenalin 21.5.2)<br>AMD Radeon VII (16GB; Adrenalin 21.5.2)<br>AMD Radeon RX 590 (8GB; Adrenalin 21.5.2) |
| NVIDIA Graphics | NVIDIA GeForce RTX 3090 (24GB; GeForce 462.59)<br>NVIDIA GeForce RTX 3080 Ti (12GB; GeForce 466.54)<br>NVIDIA GeForce RTX 3080 (10GB; GeForce 462.59)<br>NVIDIA GeForce RTX 3070 Ti (8GB; GeForce 466.61)<br>NVIDIA GeForce RTX 3070 (8GB; GeForce 462.59)<br>NVIDIA GeForce RTX 3060 Ti (8GB; GeForce 462.59)<br>NVIDIA GeForce RTX 3060 (12GB; GeForce 462.59)<br>NVIDIA GeForce RTX 2080 Ti (11GB; GeForce 462.59)<br>NVIDIA GeForce GTX 1080 Ti (11GB; GeForce 462.59) |
| Audio | Onboard |
| Storage | AMD OS: Samsung 500GB SSD (SATA)<br>NVIDIA OS: Samsung 500GB SSD (SATA) |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | NZXT H710i Mid-Tower |
| Cooling | NZXT Kraken X63 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 19043.1023 (21H1) |


There’s one exception to the test platform above, resulting from our decision to include LuxCoreRender and Radeon ProRender after the other testing was completed. For some reason, LuxMark crashed every single time on our Threadripper rig, even on a fresh Windows install. So, rather than swap every GPU twice, we completed both the LuxCoreRender and RPR tests on our AMD Ryzen 9 5950X platform instead, which was equipped with the same memory configuration.

All of our testing is completed using the latest versions of Windows (10, 21H1), the AMD and NVIDIA graphics drivers, and the software being tested. There are two exceptions for the software: we can’t test LuxMark 4.0 alpha1, as we’ve yet to compile it successfully, and while a Radeon ProRender beta for Blender 2.93 is available, after testing it, we’d rather wait for the stable version. More on that later.

Here are some other general guidelines we follow:

- Disruptive services are disabled (e.g., Search, Cortana, User Account Control, Defender).

- Overlays and/or other extras are not installed with the graphics driver.

- Vsync is disabled at the driver level.

- OSes are never transplanted from one machine to another.

- We validate system configurations before kicking off any test run.

- Testing doesn’t begin until the PC is idle (keeps a steady minimum wattage).

- All tests are repeated until there is a high degree of confidence in the results.
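The last guideline doesn’t spell out a stopping rule. One hypothetical way to formalize “repeat until confident” is to keep running until the most recent few results agree within a small coefficient of variation (standard deviation divided by mean). The function name, the three-run window, the 1% threshold, and the run cap below are all assumptions chosen for illustration, in a minimal Python sketch:

```python
import statistics
from typing import Callable, List


def run_until_stable(benchmark: Callable[[], float], window: int = 3,
                     cv_threshold: float = 0.01, max_runs: int = 10) -> List[float]:
    """Repeat `benchmark` until its most recent results agree closely.

    Hypothetical stopping rule (an assumption, not a documented procedure):
    stop once the coefficient of variation (stdev / mean) of the last
    `window` results drops below `cv_threshold`, or after `max_runs` runs.
    `window` must be at least 2 so a standard deviation can be computed.
    """
    results: List[float] = []
    while len(results) < max_runs:
        results.append(benchmark())  # e.g. one render time, in seconds
        if len(results) >= window:
            recent = results[-window:]
            cv = statistics.stdev(recent) / statistics.mean(recent)
            if cv < cv_threshold:
                break
    return results


# Made-up render times (seconds) standing in for a real benchmark
simulated_times = iter([61.0, 58.2, 58.0, 58.1, 57.9])
runs = run_until_stable(lambda: next(simulated_times))
print(runs)  # stops after the fourth run
```

With these made-up times, the first three runs still vary by nearly 3% because of the slower opening run, so the loop continues; after the fourth run, the last three results agree within 1% and it stops. The `max_runs` cap keeps a noisy benchmark from looping forever.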

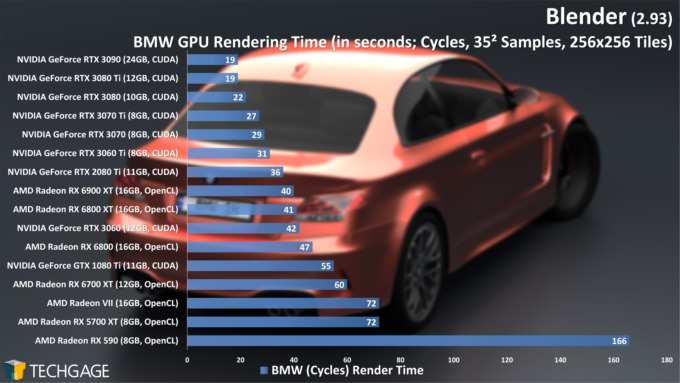

Blender 2.93

Considering that we have a dedicated Blender 2.93 performance deep-dive article planned, it feels odd to kick off this article with it. However, Blender has become a de facto point of reference for rendering, one that both AMD and NVIDIA rely upon heavily, so it makes good sense to start there.

Something that becomes immediately obvious from the results above is that the Radeon RX 590 really lacks performance compared to the rest of the lineup. Its performance is so much worse than the other cards at the bottom, in fact, that we wondered whether we should just drop it, since it makes the rest of the scaling look less impressive than it actually is. For the sake of painting a really clear picture of generational performance improvements, however, we decided to leave it in.

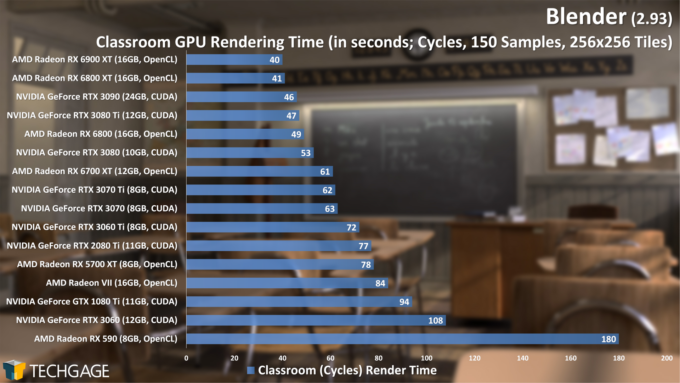

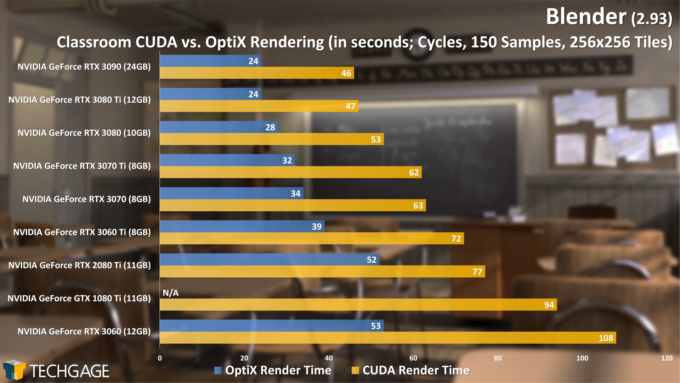

Both the BMW and Classroom projects are getting up there in age, but they’re still incredibly scalable and representative of current performance. They also behave quite differently from one another. In the simpler BMW test, NVIDIA GPUs reign supreme; in the Classroom test, AMD has historically performed really well, and this round is no exception. It’s quite something to see a GPU like the RX 6900 XT beat the RTX 3090 ever so slightly in the Classroom test, yet take twice as long in the BMW one. And that’s before OptiX is introduced. Here’s that angle:

Sticking with the Classroom scene, enabling OptiX effectively halves the time it takes to render a frame, which makes AMD’s strengths in the straightforward CUDA vs. OpenCL battle seem less impressive. While AMD’s RDNA2 improved Radeon’s ray tracing capabilities considerably, it still can’t match the boost provided by the RTX series’ dedicated ray tracing cores.
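Because these charts report render times (lower is better), it helps to be explicit about how a change in time translates into speed. A minimal Python sketch of the arithmetic, with made-up times rather than our measured results (the function names are illustrative): going from 120 to 60 seconds halves the render time, which is a 2× speedup and a 50% time saving.

```python
def speedup(baseline_seconds: float, new_seconds: float) -> float:
    """How many times faster the new configuration is; 2.0 means render time was halved."""
    return baseline_seconds / new_seconds


def time_saved(baseline_seconds: float, new_seconds: float) -> float:
    """Fraction of the baseline render time eliminated; 0.5 means 50% less time."""
    return 1.0 - new_seconds / baseline_seconds


# Hypothetical render times for one scene, CUDA vs. OptiX (illustrative only)
cuda_seconds, optix_seconds = 120.0, 60.0
print(f"{speedup(cuda_seconds, optix_seconds):.1f}x faster, "
      f"{time_saved(cuda_seconds, optix_seconds):.0%} less time")
```

Read the other way round, the same helper captures the BMW comparison above: a card that takes twice as long as another runs at 0.5× its speed.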

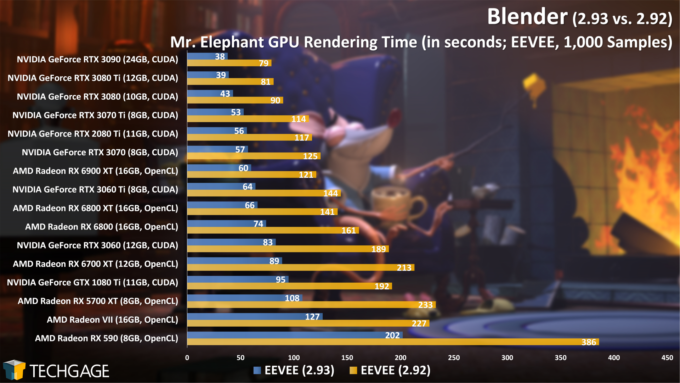

Fortunately for AMD, only Cycles currently benefits from RT cores. EEVEE doesn’t, so let’s check that out next:

After we compiled our Blender 2.93 EEVEE results, we immediately had to reinstall every single tested GPU and test 2.92 again with the same graphics driver. We knew that EEVEE performance improvements came with 2.93, but we didn’t realize they would amount to a literal halving of each GPU’s render times. Blender’s developers have worked some real magic here. The best part? This all comes in addition to refinements in the final render. Side-by-side, the differences between versions are very slight, but the nod goes to 2.93.

Both AMD and NVIDIA have gained handsomely here, although NVIDIA seems to come out with a very slight advantage overall. For example, with 2.92, the RTX 3070 placed just behind the RX 6900 XT, whereas it now manages to place ahead. So, even though EEVEE doesn’t use NVIDIA’s RT cores, NVIDIA still has the overall advantage. When EEVEE switches over to Vulkan at some point in the future, we’ll likely see another big reshuffle, since Vulkan ray tracing could bring yet more performance and add ray-traced elements to this raster-based engine.

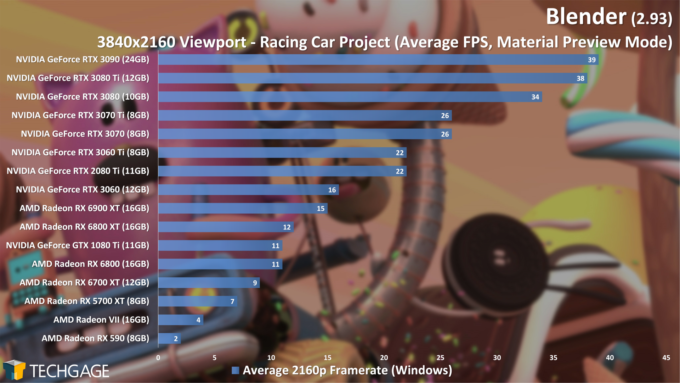

Rendering is just one part of the Blender equation; viewport performance also matters, so let’s check that out:

Using the complex Racing Car scene from Blender’s 2.77 release, we can see that performance hasn’t changed much from our 2.92 testing. Scaling is effectively the same, although some numbers changed by one or two FPS. Yet again, AMD’s GPUs fall to the bottom of the chart for reasons that aren’t clear, with even the lowly RTX 3060 edging out the RX 6900 XT.

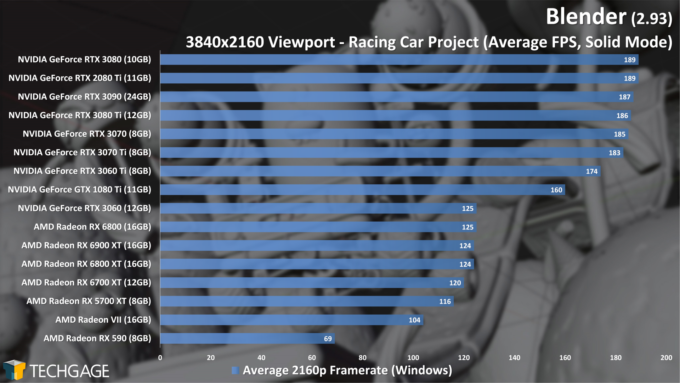

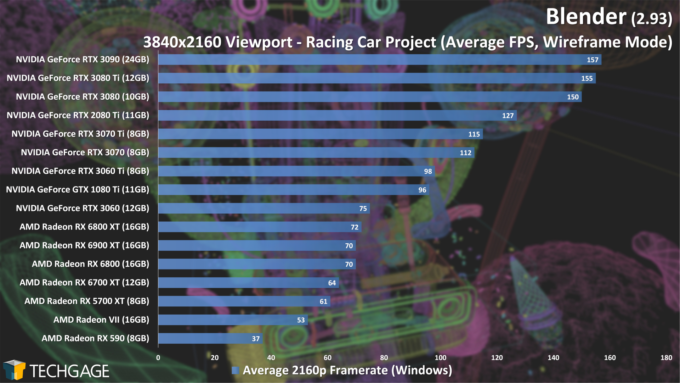

This particular scene is really complex, so we’d naturally expect it to be grueling on all GPUs, but we really do wish we saw better performance out of the Radeon camp this go-around. Once we post our fuller Blender 2.93 deep-dive, you’ll see that simpler scenes naturally run better, but it’s still hard to ignore the performance deltas between AMD and NVIDIA here. We can even see similar behavior in both the Solid and Wireframe modes:

Our test platform uses a 32-core AMD Ryzen Threadripper, and with simpler graphics workloads like those above, the top GPUs are largely going to look the same (especially at resolutions below 4K), since the CPU rather than the GPU becomes the limiting factor. Even still, we see a clear separation between AMD and NVIDIA. The RTX 3060 in particular noticeably lags behind the other GeForces (something we retested as a sanity check).

On that note, it’s time to use Blender once again for another kind of rendering test, one involving AMD’s own Radeon ProRender:

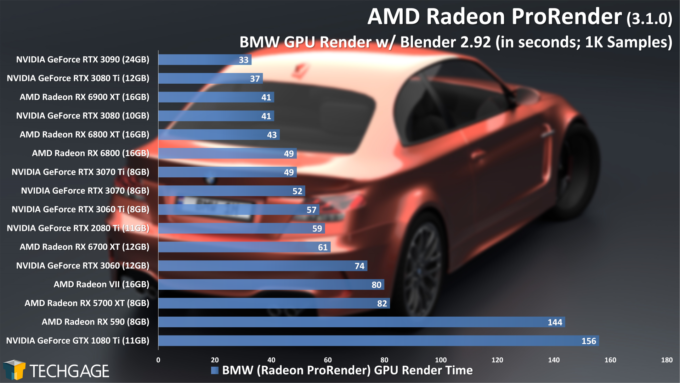

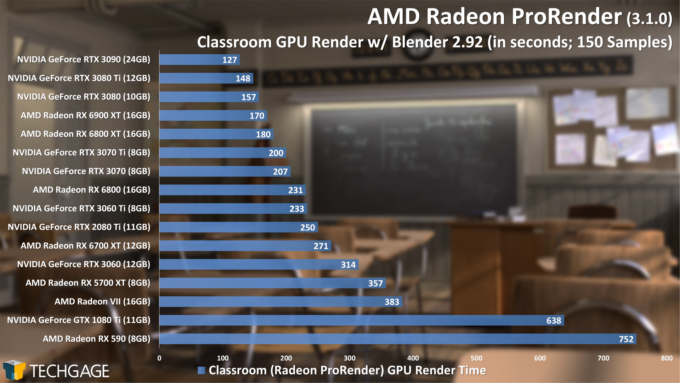

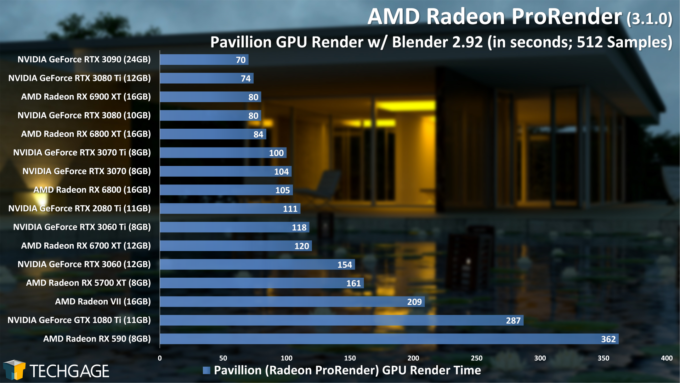

AMD Radeon ProRender 3.1

With Radeon ProRender, scaling across all three of these projects is pretty similar, with the older GPUs, including the GTX 1080 Ti, sinking well toward the bottom of the pile. Despite this being a Radeon-branded renderer, NVIDIA’s current-gen GPUs outpace AMD’s latest and greatest by a wee bit overall, although the RTX 3080 and RX 6900 XT could be considered evenly matched.

What’s interesting about these results is how differently the Classroom project scales in RPR vs. Cycles. AMD’s top-end GPUs soared to the top of the Cycles chart, but fail to topple NVIDIA the same way with ProRender.

As briefly mentioned earlier, the current stable version of Radeon ProRender for Blender doesn’t support 2.93, due to Python changes. There is a beta available, which we gave some hands-on testing. Performance was worse in almost every case, with the drop most significant in Classroom. The final render did look a little better in each case, although not to the degree you’d expect given the increased rendering times.

After testing a few GPUs with this beta, we decided to stick with the stable version and retest once the 2.93-compatible version goes final, since we expect it to bring performance improvements.

We will note that Radeon ProRender is a valuable tool if you’re an Apple user, since RPR can make use of the Metal API used by macOS, and will provide you with a GPU performance boost that you would otherwise have to get from a commercial render engine.

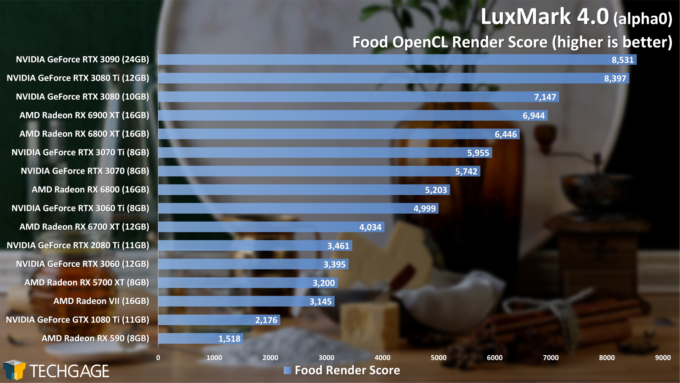

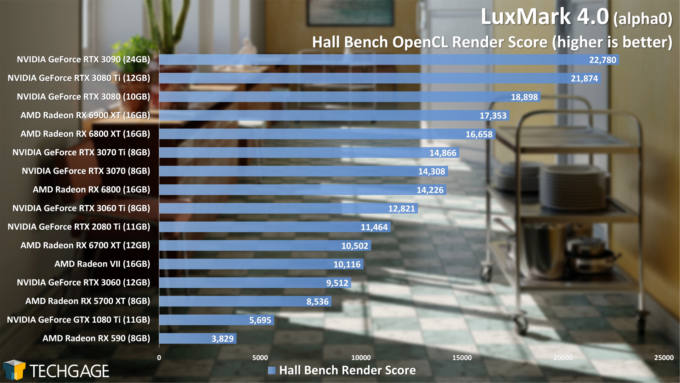

LuxCoreRender 2.2

The latest precompiled LuxMark binary is based on LuxCoreRender 2.2, and since 2.5 is effectively available now, a fully up-to-date LuxMark may show different results (and hopefully not crash every single time on our Threadripper machine). Nonetheless, we still see some great scaling here, with Radeons finally holding their own against the GeForces. Yet again, NVIDIA has an edge overall, but not a significant one.

This concludes our look at the three renderers we were able to run on both GeForce and Radeon GPUs. On the next page, we’ll dive into the CUDA/OptiX-only renderers, and for those who care about power consumption, we’ll tackle that as well.
