Accelerated Ray Tracing: Testing NVIDIA’s RTX In Blender 2.81 Alpha

NVIDIA’s RTX features may be a bit of a slow starter in games, but on the creative side, it’s a different story. We’re taking a look at Blender’s latest 2.81 alpha build to see NVIDIA’s OptiX render engine in action, leveraging the power of the RT cores to accelerate ray-traced workloads. The results are impressive.

Get the latest GPU rendering benchmark results in our more up-to-date Blender 3.6 performance article.

On the gaming front, NVIDIA’s real-time ray tracing solution, RTX, has had a lukewarm reception at best. While it can be visually impressive at times, the performance hit that comes with it can be off-putting.

On the creative front, the people who make the assets for games and movies are finding RTX to be a real game-changer. When you see the kind of performance benefits that RTX can achieve, it’s not hard to understand why so many are asking for support.

GPU rendering gave the industry one hell of a boost on its own, as did heterogeneous rendering, which combines CPUs and GPUs to render the same scene. NVIDIA’s RT cores are yet another boost on top of that, as you’ll see below.

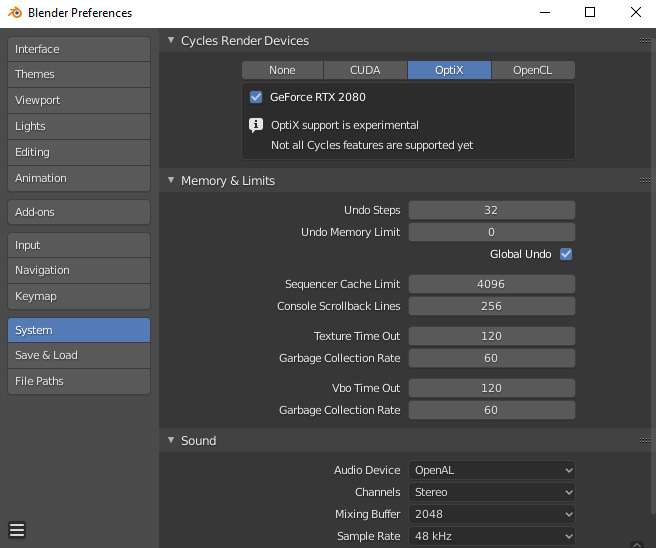

Setting Up Blender With OptiX

Blender announced back at SIGGRAPH 2019 that it was actively developing RTX support, or to be more accurate, OptiX support, as that includes a number of render pipeline improvements beyond just ray tracing. There is no firm date yet as to when the full OptiX feature suite will be integrated, but it might come with the Blender 2.81 release due in November.

Having said that, if you’re brave or impatient enough, you can test Blender’s current RTX support early by taking part in the alpha and compiling the latest version from source. While you don’t need to be a programmer to follow along, it’s not a completely straightforward process, as it involves installing and setting up a bunch of dev tools and SDKs.

Fortunately, the Blender Foundation has succinct, easy-to-follow guides on how to take part in the alpha program and how to integrate the RTX features. You’ll just need to install Visual Studio Community edition, Subversion, Git, CMake, and NVIDIA’s CUDA and OptiX SDKs, make some changes to the build files, and wait patiently for it to compile.

Alternatively, instead of compiling everything from scratch, you can simply download a daily build from the Blender Foundation’s experimental builds section, since OptiX support has now been integrated there (ironically, after we went through the process of compiling it manually).

You will need the latest NVIDIA driver, currently 436.30, to use OptiX; this means either the Game Ready or the Quadro driver. The Studio Ready (or Creative) driver is not up to date at this time, and won’t be detected by Blender as OptiX-compatible.
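If you script a pre-flight check on a render node, driver versions are safer compared numerically than as strings. The sketch below is a minimal example under that assumption; `parse_driver_version`, `meets_minimum`, and the hard-coded 436.30 minimum (taken from the text, and certain to change over time) are hypothetical, not part of Blender or NVIDIA’s tools.

```python
from __future__ import annotations

# Minimum driver version quoted in the text at the time of writing (will change).
MIN_OPTIX_DRIVER = "436.30"


def parse_driver_version(version: str) -> tuple[int, ...]:
    """Turn a dotted driver version such as '436.30' into (436, 30)."""
    return tuple(int(part) for part in version.strip().split("."))


def meets_minimum(installed: str, minimum: str = MIN_OPTIX_DRIVER) -> bool:
    """Compare versions as integer tuples. A plain string comparison would
    wrongly rank '1000.0' below '436.30', because '1' < '4'."""
    return parse_driver_version(installed) >= parse_driver_version(minimum)


if __name__ == "__main__":
    for version in ("431.60", "436.30", "441.12"):
        print(version, meets_minimum(version))
```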

| Techgage Workstation Test Systems | |
|---|---|
| Processors | AMD Ryzen Threadripper 1950X (16-core; 3.4 GHz), AMD Ryzen 9 3900X (12-core; 3.8 GHz) |
| Motherboards | AMD X399: Aorus Gaming 7, AMD X570: Aorus X570 MASTER |
| Memory | G.SKILL Flare X (F4-3200C14-8GFX) 4x8GB; DDR4-3200 14-14-14 |
| Graphics | NVIDIA TITAN RTX (24GB), NVIDIA TITAN Xp (12GB), NVIDIA GeForce RTX 2080 Ti (11GB), NVIDIA GeForce RTX 2080 SUPER (8GB), NVIDIA GeForce RTX 2080 (8GB), NVIDIA GeForce RTX 2070 SUPER (8GB), NVIDIA GeForce RTX 2060 SUPER (8GB), NVIDIA GeForce RTX 2060 (6GB), NVIDIA GeForce GTX 1080 Ti (11GB), NVIDIA Quadro RTX 4000 (8GB) |
| Et cetera | Windows 10 Pro build 18362 (1903) |
| Drivers | NVIDIA GeForce & TITAN: Game Ready 436.30, NVIDIA Quadro: Quadro 436.30 |

Alpha Testing & Limitations

Blender’s OptiX support is in alpha, and NVIDIA’s RTX is a new technology, so there are a number of pitfalls to be aware of. It should go without saying that you shouldn’t be using OptiX rendering in Cycles for production work at this time, but it does give you a sneak peek at what to expect.

The main issue you will come across is the lack of support for Cycles’ full rendering feature set, and some features that are supported may not work correctly. As we dive into things, we’ll point out the issues we ran into.

The biggest issue for some people will be the lack of Branched Path Tracing support. This posed a problem in one of our benchmarks, and we had to use Path Tracing with modified settings to get it to work. One feature currently missing, but expected in the future, is OptiX AI-based denoising using RTX’s Tensor cores (this will likely come as a plugin to be used as a compositing filter). Reading through some of the other build files, we also see mention of network rendering, so there’s plenty to be excited about.

Early versions of OptiX also showed a number of image quality (IQ) issues, especially related to Ambient Occlusion. Being mindful of this, we saved high-resolution images of the final CPU and OptiX renders for each benchmarked scene so we could compare them.

Since OptiX requires NVIDIA GPUs with RTX enabled, only Turing GPUs were used, from the RTX 2060 up to the TITAN RTX, with a Quadro RTX 4000 thrown in for good measure. Testing was done on two systems: an AMD Ryzen 9 3900X, used to gather data on GPU scaling (comparing GPU to GPU), and a Threadripper 1950X, used as a stand-in for a production machine and focused on full-system scaling with a single GPU across multiple tests (comparing CPU, GPU, CPU+GPU, and OptiX).
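As a small illustration of that selection, here is a sketch that filters the cards in our test-system table down to those with RT cores. The `Gpu` record and `optix_capable` helper are hypothetical; the only facts encoded are the VRAM sizes from the table and that the TITAN Xp and GTX 1080 Ti are Pascal cards without RT cores.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    vram_gb: int
    has_rt_cores: bool  # Turing RTX parts have RT cores; Pascal parts do not


# Cards from the Techgage test-system table.
TEST_GPUS = [
    Gpu("TITAN RTX", 24, True),
    Gpu("TITAN Xp", 12, False),
    Gpu("GeForce RTX 2080 Ti", 11, True),
    Gpu("GeForce RTX 2080 SUPER", 8, True),
    Gpu("GeForce RTX 2080", 8, True),
    Gpu("GeForce RTX 2070 SUPER", 8, True),
    Gpu("GeForce RTX 2060 SUPER", 8, True),
    Gpu("GeForce RTX 2060", 6, True),
    Gpu("GeForce GTX 1080 Ti", 11, False),
    Gpu("Quadro RTX 4000", 8, True),
]


def optix_capable(gpus: list[Gpu]) -> list[Gpu]:
    """Keep only cards with RT cores, which this OptiX alpha requires."""
    return [gpu for gpu in gpus if gpu.has_rt_cores]


if __name__ == "__main__":
    for gpu in optix_capable(TEST_GPUS):
        print(f"{gpu.name} ({gpu.vram_gb}GB)")
```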

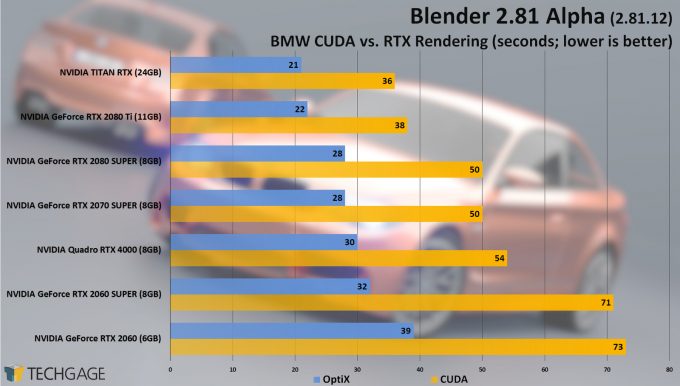

We’ll start off with GPU scaling in both BMW and Classroom renders.

Blender OptiX GPU Scaling

The difference should be pretty apparent. Overall, we are seeing render times cut in half on the BMW test, just by flicking a switch. These results come from simply switching from the standard NVIDIA GPU render mode using CUDA over to OptiX; no other modifications were made. Later on, we show how this scales with the rest of the system.

When the long-standing BMW Blender benchmark completes in 21 seconds on a single GPU, we’re left with a sad feeling that it’s not going to remain viable for much longer, at least in its default state. The near-identical scores for the RTX 2070 SUPER and 2080 SUPER were quite repeatable, with just half a second between them, which shows the limitations of such a quick render.
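To put that half-second gap in perspective, the sketch below expresses it as a fraction of the render time. The 21-second and 600-second inputs are illustrative (the exact 2070 SUPER and 2080 SUPER times aren’t given here), and `relative_gap` is a hypothetical helper.

```python
def relative_gap(a_seconds: float, b_seconds: float) -> float:
    """Difference between two render times, as a fraction of the faster one."""
    fast, slow = sorted((a_seconds, b_seconds))
    return (slow - fast) / fast


if __name__ == "__main__":
    # The same 0.5 s gap on a ~21 s render vs. a 10-minute (600 s) render.
    print(f"{relative_gap(21.0, 21.5):.1%}")    # 2.4%
    print(f"{relative_gap(600.0, 600.5):.2%}")  # 0.08%
```

On a very short benchmark, any run-to-run noise of a similar size can be enough to swap the order of two cards; longer renders dilute it.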

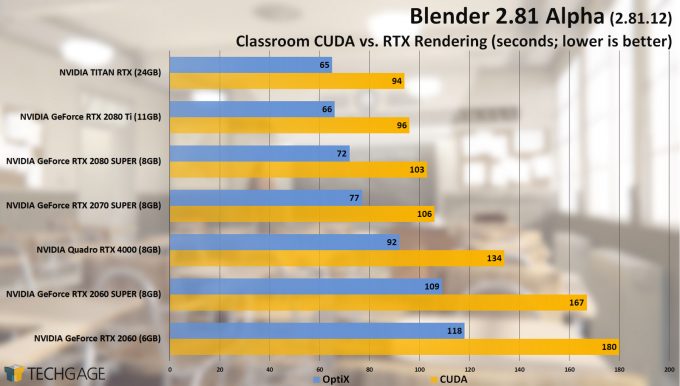

In a more complex scene such as the Classroom one, the performance delta is a little less dramatic, but still impressive. It’s hard to believe such a huge jump in performance, but then again, this is exactly what those RT cores were meant to do. GPU-based rendering over CPU was already a huge leap in performance, yet here we are again, with yet another leap.

Those two charts sum up GPU scaling with OptiX enabled and disabled. Now we turn our attention to the bigger picture, to show just how absurd rendering times can get when compared against the CPU and heterogeneous processing.

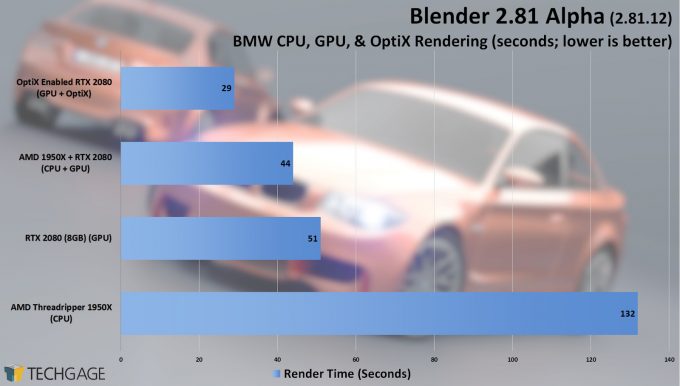

Blender OptiX System Performance

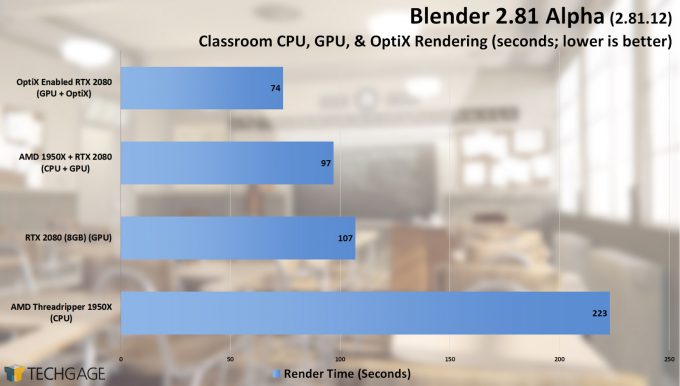

We have the same BMW and Classroom tests again, but this time we throw in AMD’s Threadripper 1950X, a 16-core, 32-thread workstation CPU, paired with 32GB of DDR4-3200 RAM, and use NVIDIA’s RTX 2080 as our baseline GPU.

The power of GPU rendering is quite well known at this point, as long as the workload fits within the GPU’s framebuffer. Comparing the RTX 2080 against an arguably very powerful CPU is a bit unfair, though, as the two are in completely different leagues. Even heterogeneous (mixed) rendering, using the CPU and GPU at the same time, doesn’t really improve things. Keep in mind, however, that these are very short projects.

We’re now going to move on to some more challenging renders: the Pavilion at night scene, Agent 327, and a three-year-old classic, Blenderman.

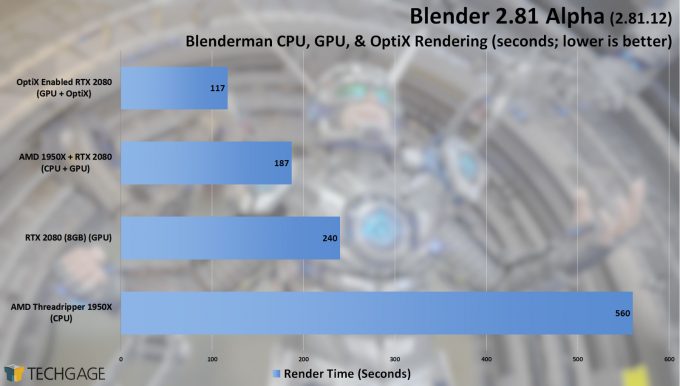

Blenderman is the next step up in complexity, and benefits a lot from switching from the CPU to a GPU. Better yet, mixed-mode rendering brings a measurable improvement, yet OptiX is still almost twice as fast as the CPU and GPU combined, with no perceptible loss in image quality.

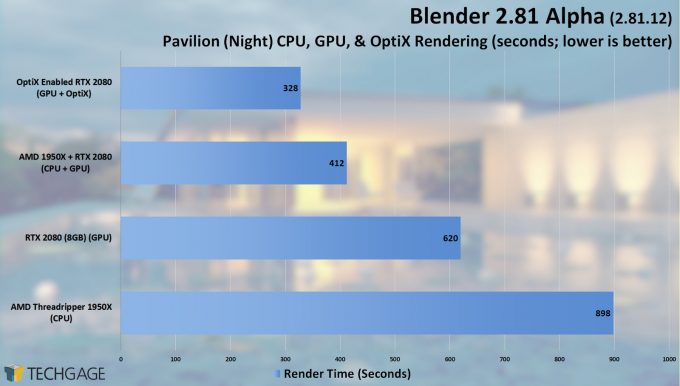

The normal Pavilion render was a little too quick for our initial tests, completing in just 15 seconds with OptiX (seriously), so we used a more demanding version of the scene, set at night. It uses 2,500 samples per pixel and is rather time-consuming even at a low resolution. On the CPU, the render takes 15 minutes; with the RTX 2080 using CUDA, it drops to 10 minutes. Mixed-mode rendering shows some really impressive gains here, so even if you don’t have an RTX GPU, mixed mode is worth a shot. But once OptiX is thrown into the mix, we’re down to just over 5 minutes: three times faster than the CPU, and twice as fast as CUDA rendering.
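The Pavilion figures can be checked with a little arithmetic. The sketch below uses the rounded times from the text (treating “just over 5 minutes” as 5) and adds an idealized bound for mixed-mode rendering that is our own assumption, not a measurement: if two devices split a frame with perfect load balancing and zero overhead, their rendering rates add, giving a combined time of `t_cpu * t_gpu / (t_cpu + t_gpu)`. Real mixed-mode times can only be slower than that bound. `speedup` and `ideal_mixed_time` are hypothetical helpers.

```python
# Pavilion-at-night render times in minutes, rounded as in the text.
CPU_MIN = 15.0    # Threadripper 1950X
CUDA_MIN = 10.0   # RTX 2080, CUDA
OPTIX_MIN = 5.0   # RTX 2080, OptiX ("just over 5 minutes")


def speedup(baseline: float, candidate: float) -> float:
    """How many times faster `candidate` is than `baseline`."""
    return baseline / candidate


def ideal_mixed_time(t_a: float, t_b: float) -> float:
    """Best case for two devices sharing one frame with perfect load balancing
    and no overhead: rates add (1/t_a + 1/t_b), so time is t_a*t_b/(t_a+t_b)."""
    return t_a * t_b / (t_a + t_b)


if __name__ == "__main__":
    print(f"OptiX vs CPU:   {speedup(CPU_MIN, OPTIX_MIN):.1f}x")
    print(f"OptiX vs CUDA:  {speedup(CUDA_MIN, OPTIX_MIN):.1f}x")
    print(f"Ideal CPU+CUDA: {ideal_mixed_time(CPU_MIN, CUDA_MIN):.1f} min")
```

Even under that perfect-scaling assumption, CPU plus CUDA comes out at about 6 minutes, so OptiX on the RTX 2080 alone should still come out ahead.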

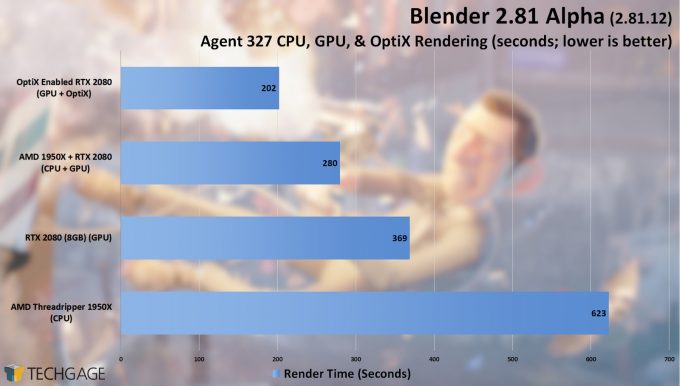

The Agent render was something we avoided for a while, partly because it was difficult to get working, and when it did work, it took ages to complete (ages for a benchmark, when you have 15 CPUs each taking 10-20 minutes apiece for a single test). With OptiX, a 10-minute render drops to just over 3 minutes… however, there’s a catch.
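For a sense of scale, the sketch below totals the CPU benchmark time implied by that aside, plus the rough OptiX speedup. The inputs are the rounded figures from the text; `total_cpu_minutes` is a hypothetical helper.

```python
def total_cpu_minutes(num_cpus: int, minutes_per_run: float) -> float:
    """Total wall-clock time to run one test once on every CPU, back to back."""
    return num_cpus * minutes_per_run


if __name__ == "__main__":
    low, high = total_cpu_minutes(15, 10), total_cpu_minutes(15, 20)
    print(f"One pass over 15 CPUs: {low / 60:.1f}-{high / 60:.1f} hours")
    # ~10 min down to "just over 3", so somewhat under 10 / 3 (about 3.3x) faster.
    print(f"OptiX speedup: under {10 / 3:.1f}x")
```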

Earlier on, we mentioned that OptiX doesn’t support Branched Path Tracing in Cycles (see also the addendum), and that’s what the Agent scene’s default configuration uses. There is no easy way to match the image quality of Branched rendering, so we made our best (yet futile) attempt with plain Path Tracing. We did manage to effectively match the render time, though, using a mix of high sample counts and increased max bounces, so results should still scale. To be clear, all renders were performed with Path Tracing only. We’ve included a full-size image of the Branched render to compare against.
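Why is Branched Path Tracing so hard to imitate? In Cycles’ Branched mode, each anti-aliasing (camera) sample fans out into several samples per shader component at the first hit, while plain Path Tracing continues each camera sample along a single path. The sketch below does that bookkeeping with hypothetical settings (not the Agent scene’s actual values); `branched_first_hit_rays` and `min_path_samples_to_match` are illustrative helpers, and the model ignores light sampling and later bounces.

```python
from __future__ import annotations

# Hypothetical Branched Path Tracing settings, not the Agent scene's configuration.
AA_SAMPLES = 16
COMPONENT_SAMPLES = {"diffuse": 4, "glossy": 2, "transmission": 1}


def branched_first_hit_rays(aa_samples: int, component_samples: dict[str, int]) -> int:
    """Continuation rays per pixel at the first hit: every AA sample spawns
    `n` rays for each shader component."""
    return aa_samples * sum(component_samples.values())


def min_path_samples_to_match(aa_samples: int, component_samples: dict[str, int],
                              component: str) -> int:
    """Lower bound on Path Tracing samples needed to give one component as many
    first-hit rays as Branched mode. Each path continues with a single ray, so
    even if every path picked this component, this many samples are required."""
    return aa_samples * component_samples[component]


if __name__ == "__main__":
    print("Branched camera rays per pixel:", AA_SAMPLES)
    print("Branched first-hit rays per pixel:",
          branched_first_hit_rays(AA_SAMPLES, COMPONENT_SAMPLES))
    print("Path samples needed just to match diffuse:",
          min_path_samples_to_match(AA_SAMPLES, COMPONENT_SAMPLES, "diffuse"))
```

With these example settings, matching diffuse alone already takes four times the camera samples of the Branched setup, while the other components still receive fewer rays, which helps explain why raising samples and bounces can match render time without matching image quality.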

OptiX Lost Its Spring?

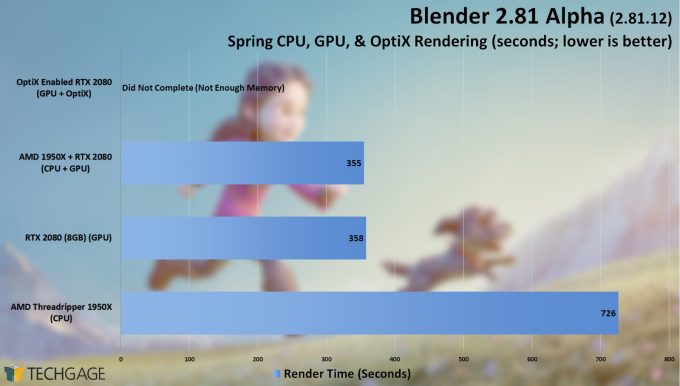

The last render on our list is the splash screen for the launch of Blender 2.80, named ‘Spring’. This is a deceptively complex scene because of the large amount of hair used, plus liberal use of BVH shaders. If there was a project to really stretch what OptiX could do, this was it.

Sadly, there were more than a few issues, most notably, memory.

Try as we might, we just couldn’t get Spring to render with the RTX 2080, apparently due to a lack of VRAM. This didn’t completely make sense, though, and may well be a bug: without OptiX, Spring renders fine on an 8GB framebuffer using CUDA. Issues arise only when OptiX is enabled. The scene doesn’t even start to render, but locks up while compiling the BVH shaders.

Out of curiosity, we tried Spring with a much beefier GPU, the TITAN RTX with its 24GB framebuffer, and it completed the OptiX render fine. Strangely, OptiX used less VRAM than the CUDA render (9GB vs 11GB) but took longer to complete (514 seconds compared to CUDA’s 400). Even mixed rendering showed little increase in performance.
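The TITAN RTX numbers are easier to compare as percentages. A minimal sketch using the figures from the text; `pct_change` is a hypothetical helper.

```python
# TITAN RTX, Spring scene: OptiX vs CUDA (figures from the text).
OPTIX_SECONDS, CUDA_SECONDS = 514, 400
OPTIX_VRAM_GB, CUDA_VRAM_GB = 9, 11


def pct_change(new: float, old: float) -> float:
    """Percentage change going from `old` to `new` (positive means larger)."""
    return (new - old) * 100 / old


if __name__ == "__main__":
    print(f"Render time, OptiX vs CUDA: {pct_change(OPTIX_SECONDS, CUDA_SECONDS):+.1f}%")  # +28.5%
    print(f"VRAM use, OptiX vs CUDA:    {pct_change(OPTIX_VRAM_GB, CUDA_VRAM_GB):+.1f}%")  # -18.2%
```

In other words, on this scene OptiX needed roughly 18% less memory but about 28.5% more time.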

Perhaps this is a bug in the current OptiX implementation, or maybe CUDA mode can use system memory as a buffer while OptiX cannot. In any case, the only way we could get Spring to render with the RTX 2080 was by disabling hair rendering, which invalidates the test compared to the other methods.

Note: We reached out to NVIDIA about this issue, and our observation appears to be accurate. CUDA can use system memory as a fallback, while OptiX currently cannot, and thus fails to render. This is being looked into.
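That explanation can be captured in a deliberately simplified model: a backend that can spill to system memory keeps going when a scene outgrows VRAM, and one that can’t simply fails. The sketch below is our illustration, not how Blender or OptiX actually allocate memory. `Backend` and `will_render` are hypothetical, the model ignores the slowdown that spilling causes, the 16GB of free system RAM is an assumed figure, and it assumes the peak footprints measured on the TITAN RTX (11GB for CUDA, 9GB for OptiX) carry over to the RTX 2080.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str
    host_memory_fallback: bool  # can it spill to system RAM when VRAM runs out?


CUDA = Backend("CUDA", host_memory_fallback=True)
OPTIX_ALPHA = Backend("OptiX (2.81 alpha)", host_memory_fallback=False)


def will_render(backend: Backend, scene_gb: float, vram_gb: float,
                free_system_ram_gb: float) -> bool:
    """Very rough go/no-go check on whether a scene's peak footprint fits."""
    if scene_gb <= vram_gb:
        return True  # everything fits on the card
    if backend.host_memory_fallback:
        # Overflow goes to system RAM: slower, but the render still completes.
        return scene_gb <= vram_gb + free_system_ram_gb
    return False  # no fallback, so the render fails


if __name__ == "__main__":
    # RTX 2080 (8GB) with an assumed 16GB of free system RAM.
    print("CUDA on RTX 2080:  ", will_render(CUDA, 11, 8, 16))
    print("OptiX on RTX 2080: ", will_render(OPTIX_ALPHA, 9, 8, 16))
    # TITAN RTX (24GB): the OptiX footprint fits entirely in VRAM.
    print("OptiX on TITAN RTX:", will_render(OPTIX_ALPHA, 9, 24, 16))
```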

Final Thoughts

Blender 2.81 has many more features coming, and it’s fantastic to see the design suite come so far, so quickly. Eevee, the raster-based rendering engine, will be getting a number of performance improvements, and Intel’s ML-based denoise filter will be released, along with NVIDIA’s own denoise filters.

Blender joins a number of 3D design suites that have integrated RTX features, including V-Ray, Octane, and Redshift, with more coming from the likes of Maxon and others. The price of entry is fairly steep, as it restricts users to RTX-enabled GPUs, the cheapest being the RTX 2060. It also puts pressure on AMD to come up with some kind of hardware-accelerated ray tracing, even if only in its Radeon Pro cards.

Perhaps we will see more of this when details are released about Microsoft’s and Sony’s upcoming consoles, both of which will use Navi-based GPUs and some kind of ray tracing feature (although this may end up being a software or shader technique).

The performance gains attributed to OptiX in the latest Blender release are undeniable, even at this early stage. However, everything we’ve seen today is still alpha, and may change. There are still some irregularities to sort out, and certain limitations to be aware of.

Complex scenes will need the much more expensive TITAN and Quadro cards, with their larger framebuffers, to make use of OptiX, but it’s not as if sticking with normal GPU rendering is slow, either.

Now if only NVIDIA could transfer the same excitement about RTX in the professional market to gamers.

Addendum:

While working on this project, we reached out to NVIDIA regarding specific features and issues we ran into, and received responses accordingly. Most of the issues we reported can be answered by looking at the OptiX feature checklist on Blender’s bug tracker.

The failed Spring OptiX render was due to a lack of support for shared system memory allocation, which is why normal CUDA-based rendering works while OptiX does not. This is being investigated.

Branched Path Tracing is unlikely to be enabled in Blender’s OptiX implementation; instead, Path Tracing will get its own list of extra features.

Mixed rendering mode between CPU and OptiX GPU is currently being worked on.

Network rendering in Cycles is still a work in progress, and is independent of OptiX. It will sit on top of the entire render stack and should be compatible with any rendering system.

Eevee will not support OptiX, since Eevee is a raster engine and OptiX is for ray tracing. However, there is talk of moving Eevee to Vulkan, which would allow NVIDIA to use its RTX extensions as it does in games.
