
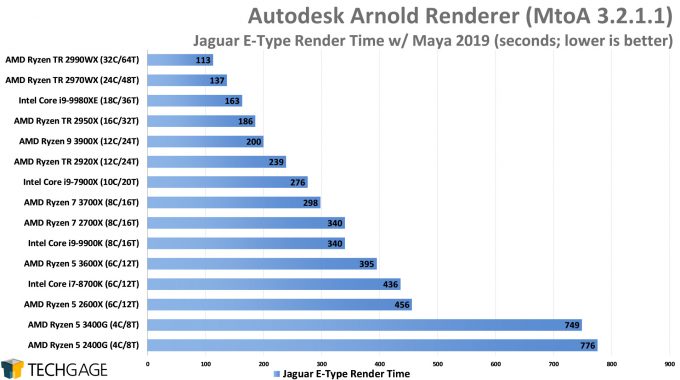

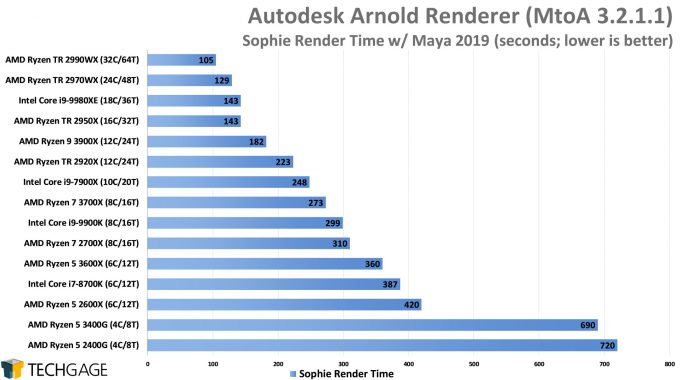

Autodesk Maya Viewport & Arnold Rendering Performance

Autodesk’s Maya is one of the industry’s most popular design suites, and likewise, its built-in Arnold is one of the most popular renderers. Both solutions are used for TV, movies, architecture, gaming, and even VFX. Join us as we explore both viewport and rendering performance with a wide range of CPUs and GPUs.

Since its introduction over twenty years ago, Maya has become a de facto standard 3D graphics application for the creation of TV shows and movies, visual effects, games, and even architecture. The rights to Maya were passed around in the beginning, but the software ultimately became an integral part of Autodesk’s M&E portfolio in 2005.

Maya is one of the best-supported design suites around, with many popular third-party vendors offering their support for it. That includes Chaos Group, with V-Ray; Maxon, with its (newly acquired) Redshift; OTOY, with Octane Render; and Pixar, with RenderMan – to name a few.

Another important name that needs to be on that list is Arnold, as it’s become synonymous with Maya despite Autodesk having acquired its original developer, Solid Angle, only in 2016. Anyone who owns a license for Maya owns a license for Arnold, and it comes preinstalled with the software. Autodesk’s Maya service pack updates don’t always include the latest Arnold, so if you want to always be up-to-date, you can fetch the newest release straight from the source.

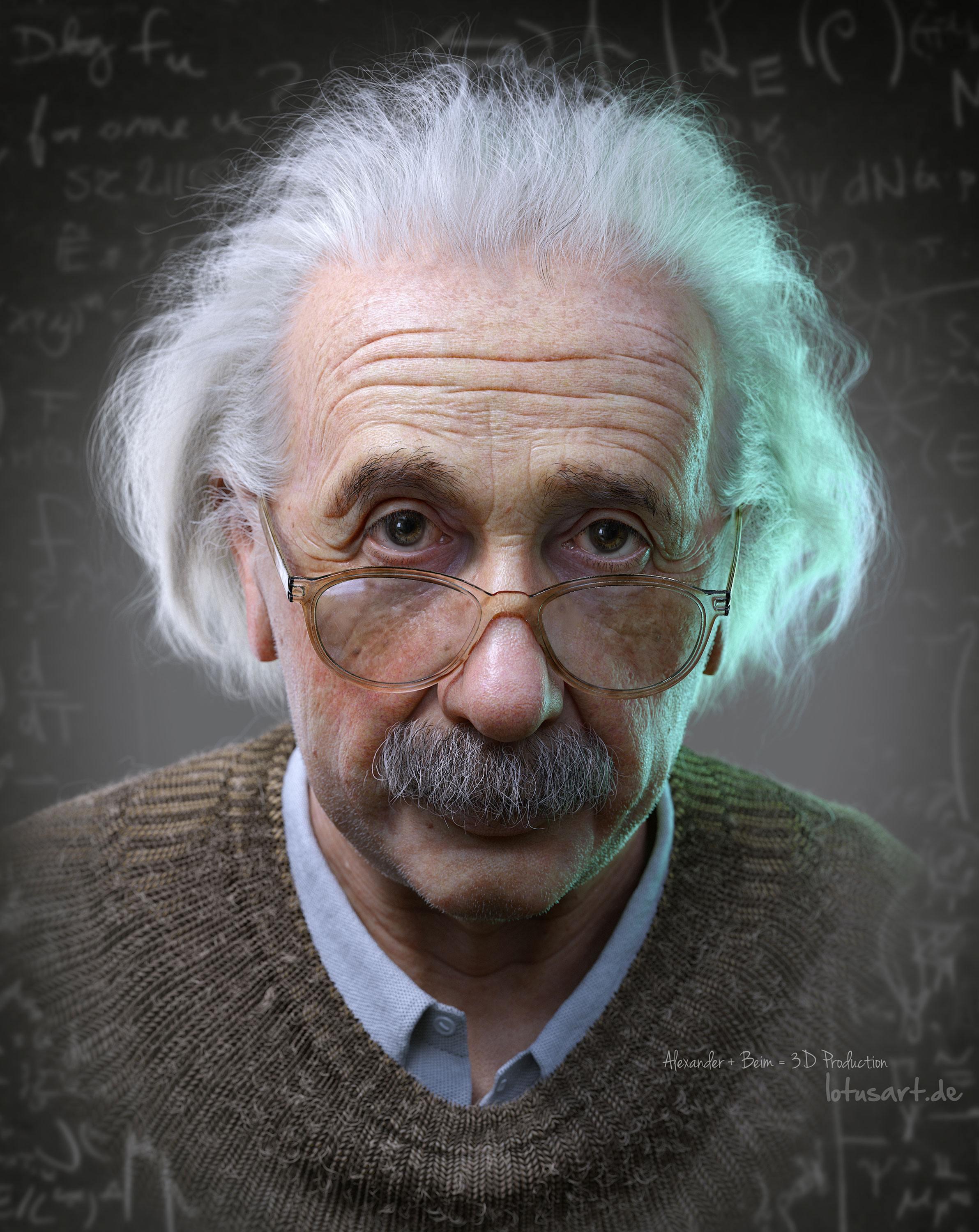

The slider below has an assortment of great art and animation created with Maya and Arnold. All of them were pulled from various articles found at Autodesk’s Life in 3D Maya page.

And here are some examples of Maya’s use in hit TV series Gotham and Game of Thrones:

We haven’t tested with Maya that much over the years, outside of using specific plugins inside of the suite (e.g., AMD’s Radeon ProRender). When we added Arnold to our test suite, however, Maya became the compelling choice for benchmarking. Users of other suites can use Arnold in Maxon’s Cinema 4D, SideFX’s Houdini, Foundry’s Katana, and Autodesk’s own 3ds Max.

Getting Down To Performance Testing

The idea for this article came about after we spotted a promoted ad on Twitter which claimed that AMD’s Radeon WX 7100 was faster than NVIDIA’s Quadro RTX 4000 in SPECapc Maya 2017. We went on to do our own testing, ultimately coming up with different results than AMD. We then figured that we may as well test a bunch of other GPUs to get a fuller look at overall performance.

SPECapc Maya 2017 is just one of SPEC’s benchmarks for the software. SPECviewperf 13, which you can easily run from home, utilizes application traces to mimic SPECapc’s Maya viewport performance. This test tends to scale a bit differently (and more interestingly) than SPECapc, so we’re including it for completeness.

The viewport is only part of the performance battle Maya users will face. Rendering is the other half of the equation, and since we’ve tested Arnold’s CPU and GPU renderers for recent hardware reviews, we’re compiling everything together here for easier consumption (while also adding some previously missing models in places).

Here’s the bulk of the hardware used for testing:

| Techgage Workstation Test Systems | |
| --- | --- |
| Processors | AMD Ryzen Threadripper 2990WX (32-core; 3.0 GHz)<br>AMD Ryzen Threadripper 2970WX (24-core; 3.0 GHz)<br>AMD Ryzen Threadripper 2950X (16-core; 3.5 GHz)<br>AMD Ryzen Threadripper 2920X (12-core; 3.5 GHz)<br>AMD Ryzen 9 3900X (12-core; 3.8 GHz)<br>AMD Ryzen 7 3700X (8-core; 3.6 GHz)<br>AMD Ryzen 7 2700X (8-core; 3.7 GHz)<br>AMD Ryzen 5 3600X (6-core; 3.8 GHz)<br>AMD Ryzen 5 2600X (6-core; 3.6 GHz)<br>AMD Ryzen 5 3400G (4-core; 3.7 GHz)<br>AMD Ryzen 5 2400G (4-core; 3.6 GHz)<br>Intel Core i9-9980XE (18-core; 3.0 GHz)<br>Intel Core i9-7900X (10-core; 3.3 GHz)<br>Intel Core i9-9900K (8-core; 3.6 GHz)<br>Intel Core i7-8700K (6-core; 4.2 GHz) |
| Motherboards | AMD X399: MSI MEG CREATION<br>AMD B450 (G APUs): Aorus B450 PRO WIFI<br>AMD X570: Aorus X570 MASTER<br>Intel Z390: ASUS ROG STRIX Z390-E GAMING<br>Intel X299: ASUS ROG STRIX X299-E GAMING |
| Memory | G.SKILL Flare X (F4-3200C14-8GFX) 4x8GB; DDR4-3200 14-14-14 |
| Graphics | AMD Radeon VII (16GB)<br>AMD Radeon RX Vega 64 (8GB)<br>AMD Radeon RX 590 (8GB)<br>AMD Radeon Pro WX 9100 (16GB)<br>AMD Radeon Pro WX 7100 (8GB)<br>AMD Radeon Pro WX 4100 (4GB)<br>NVIDIA TITAN RTX (24GB)<br>NVIDIA TITAN Xp (12GB)<br>NVIDIA GeForce RTX 2080 Ti (11GB)<br>NVIDIA GeForce RTX 2080 SUPER (8GB)<br>NVIDIA GeForce RTX 2070 SUPER (8GB)<br>NVIDIA GeForce RTX 2060 SUPER (8GB)<br>NVIDIA GeForce RTX 2060 (6GB)<br>NVIDIA GeForce GTX 1080 Ti (11GB)<br>NVIDIA GeForce GTX 1660 Ti (6GB)<br>NVIDIA Quadro RTX 4000 (8GB)<br>NVIDIA Quadro P2000 (5GB) |
| Et cetera | Windows 10 Pro build 18362 (1903) |
| Drivers | AMD Radeon: Adrenalin 19.9.2<br>AMD Radeon Pro: Enterprise 19.Q3<br>NVIDIA GeForce & TITAN: Studio 431.86<br>NVIDIA Quadro: Quadro 431.86 |

All viewport testing was conducted on an Intel Core i9-7900X 10-core test rig. All product links in this table are affiliated, and support the website.

The most appropriate driver for each graphics card was used for testing. For NVIDIA, that means Studio for GeForce and TITAN, and Quadro for the cards of the same name. We’ve covered before that Studio and GeForce drivers are comparable, so in reality, the “different” 431.86 drivers used for all of NVIDIA are the exact same driver, just wrapped in a different installer. We sanity checked performance with the newer 436-series NVIDIA driver, and performance remained unchanged.
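As a rough illustration of the driver selection described above, the mapping from card family to driver branch can be written as a small lookup. This is only a sketch: the driver names and versions come from our test-system table, while the `DRIVERS` dictionary and the `driver_for` helper are hypothetical names made up for this example, not part of any vendor tool.

```python
# Driver branch per card family, as listed in the test-system table.
# DRIVERS and driver_for are illustrative names, not a vendor API.
DRIVERS = {
    "Radeon Pro": "Enterprise 19.Q3",
    "Radeon": "Adrenalin 19.9.2",
    "Quadro": "Quadro 431.86",
    "GeForce": "Studio 431.86",
    "TITAN": "Studio 431.86",
}


def driver_for(card: str) -> str:
    """Return the driver branch used for a given card name."""
    # Check the longest family names first so that "Radeon Pro"
    # is matched before the more general "Radeon".
    for family in sorted(DRIVERS, key=len, reverse=True):
        if family in card:
            return DRIVERS[family]
    raise ValueError(f"no driver branch known for {card!r}")


if __name__ == "__main__":
    for card in ("AMD Radeon Pro WX 9100", "AMD Radeon VII",
                 "NVIDIA TITAN RTX", "NVIDIA Quadro RTX 4000"):
        print(f"{card}: {driver_for(card)}")
```

Matching the longest family name first is the one design choice worth noting, since every Radeon Pro card name also contains the word "Radeon".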

Maya Viewport Performance

The biggest performance concern for most Maya users will be with its viewport. Wireframe and simple shaded modes won’t usually be a huge problem for manipulation on mid-range systems, but once you begin enhancing the available modes, such as with anti-aliasing and texturing, the difficulty of hitting a suitable frame rate will increase.

SPECapc Maya 2017 is developed by SPEC, a consortium which creates a wide range of benchmarks for server and professional visualization use. We’ve tested with both SPECapc Maya and 3ds Max in the past, but not to the extent here. Overall scores are measured after taking many different individual test results into consideration, covering both basic and advanced viewport modes.

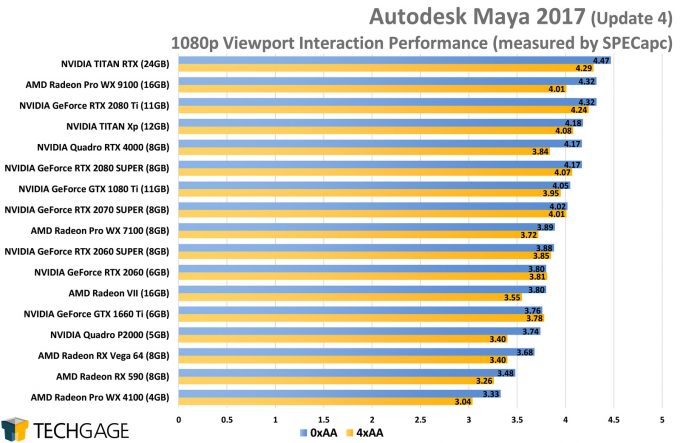

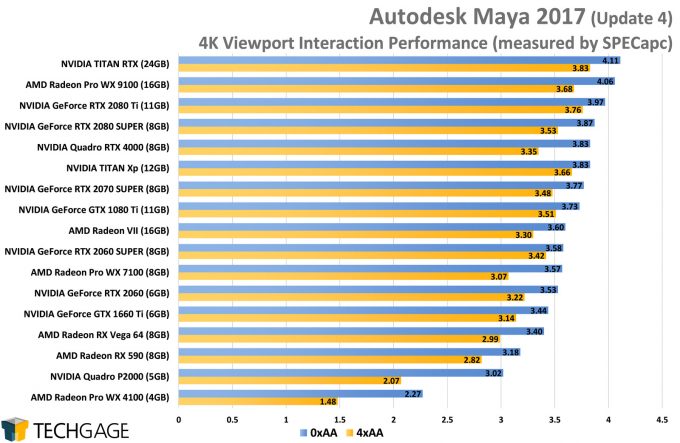

For this article, we’re focusing on the overall scores for viewport interaction. An animation and ‘GPGPU’ score are also provided in the software, but the animation scores don’t scale, and the GPGPU test has been deemed as not being useful right now. The overwhelming amount of viewport work most users will do is interactive, and fortunately, those results do work for us:

Note: AMD’s Radeon RX 5700 series is not included here because SPECapc hit an error at the end of its run and was unable to generate results.

In most performance tests that explicitly test the GPU, we generally see far more pronounced scaling than this. At face value, results like these can paint the picture that a TITAN RTX really isn’t that much better than a WX 4100, but this is one of the rare tests where the baseline is easy to hit, while improving performance beyond that point gets increasingly difficult.

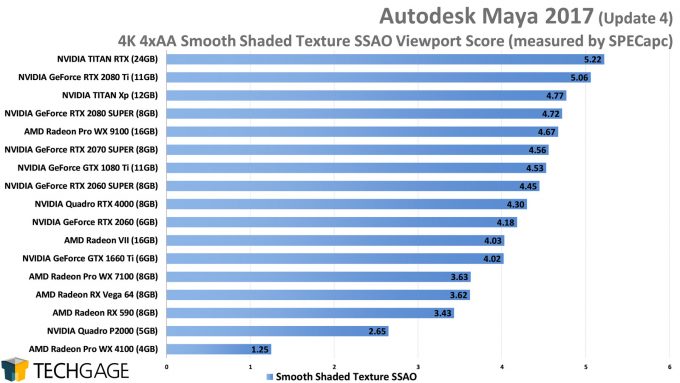

If these charts didn’t start at 0, the results would scale a lot more according to our expectations. Because we’re simply given a score here, rather than frame rate information, it’s hard to appreciate the real impact a bigger GPU will make. The one subtest that we felt showed decent scaling was for all the bells and whistles enabled. Let’s pull the results for 4K 4xAA specifically:

These results largely scale the same way as the overall interactive results, and would act as a good “worst case” for most current Maya work. NVIDIA’s top-end GPUs rule the roost, while AMD’s Radeon Pro WX 9100 puts up a good fight. With the overall interactive scores, Radeon Pro seems to bundle in optimizations that help push it ahead of similarly spec’d Radeon gaming cards. With this breakdown, that advantage almost seems to disappear.

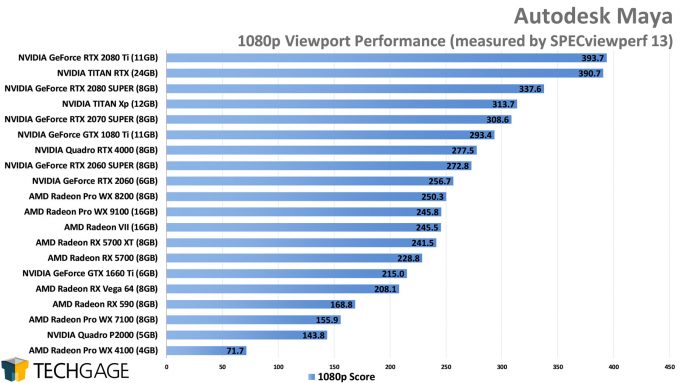

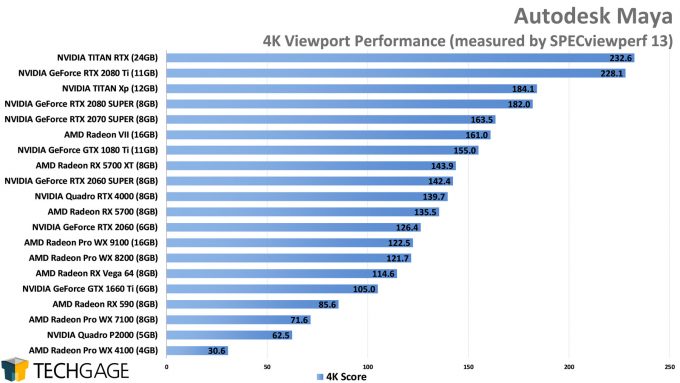

While SPECapc is far more thorough than SPECviewperf (SVP), the latter’s results seem to scale much better. In fact, we’d say it’s safe to call SPECviewperf a “3DMark of ProViz”, because just as AMD and NVIDIA optimize their drivers for 3DMark scores, they do the exact same thing for SPECviewperf. Ultimately, SVP’s representation of real-world, modern workloads isn’t completely accurate, but it’s a good enough gauge, and one that both AMD and NVIDIA put their weight behind. So, let’s check out those results:

There’s some shake-up with the scaling in SPECviewperf. Here, the Radeon Pro WX 9100 isn’t nearly as dominant, and actually falls behind the lower spec’d WX 8200. Unfortunately, our WX 8200 went into cardiac arrest after we did its SVP testing, and before we could test it in SPECapc, so it’s missing from that set.

Nonetheless, performance between the two top cards, TITAN RTX and RTX 2080 Ti, is a battle between only them. You could run these tests over and over, and each would win some, and lose some – that’s how close they are. Their biggest differences, then, would boil down to the frame buffer size improvement.

It’s not too hard to find a good sweet spot in this list. AMD’s RX 5700 and RX 5700 XT have made it to this chart, fighting nicely against NVIDIA’s RTX 2060 and RTX 2060 SUPER, with performance scaling well against their prices. One thing becomes clear, though, and that’s that frame buffer limits can wreak havoc when a project gets too big.

At this point in time, we’d never recommend opting for a GPU with less than 8GB of memory if you plan to create complex projects, and if cost restrictions come into play, you should probably opt for a gaming GPU with more memory over a professional card with less. NVIDIA announced 10-bit color OpenGL support for its Studio and GeForce drivers in recent months, so that previous concern is no more. We’re not sure exactly where AMD’s 10-bit color situation sits, and we’ve been unable to get answers in recent months. We’d imagine Radeon Pro drivers will enable 10-bit in Maya, but gaming Radeons may not, and we’re waiting to be told differently.
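To make the 8GB guideline concrete, here is a minimal sketch that filters the cards from our test-system table by memory capacity. The names and capacities are copied from that table; the `TESTED_GPUS` list, the `MIN_VRAM_GB` constant, and the `split_by_vram` helper are hypothetical names introduced purely for illustration.

```python
# (card, memory in GB) pairs copied from the Graphics row of the
# test-system table. All identifiers here are illustrative only.
TESTED_GPUS = [
    ("AMD Radeon VII", 16),
    ("AMD Radeon RX Vega 64", 8),
    ("AMD Radeon RX 590", 8),
    ("AMD Radeon Pro WX 9100", 16),
    ("AMD Radeon Pro WX 7100", 8),
    ("AMD Radeon Pro WX 4100", 4),
    ("NVIDIA TITAN RTX", 24),
    ("NVIDIA TITAN Xp", 12),
    ("NVIDIA GeForce RTX 2080 Ti", 11),
    ("NVIDIA GeForce RTX 2080 SUPER", 8),
    ("NVIDIA GeForce RTX 2070 SUPER", 8),
    ("NVIDIA GeForce RTX 2060 SUPER", 8),
    ("NVIDIA GeForce RTX 2060", 6),
    ("NVIDIA GeForce GTX 1080 Ti", 11),
    ("NVIDIA GeForce GTX 1660 Ti", 6),
    ("NVIDIA Quadro RTX 4000", 8),
    ("NVIDIA Quadro P2000", 5),
]

MIN_VRAM_GB = 8  # the floor suggested above for complex projects


def split_by_vram(gpus, min_gb=MIN_VRAM_GB):
    """Split cards into (meets_floor, below_floor) lists of names."""
    meets = [name for name, gb in gpus if gb >= min_gb]
    below = [name for name, gb in gpus if gb < min_gb]
    return meets, below


if __name__ == "__main__":
    meets, below = split_by_vram(TESTED_GPUS)
    print("Below the 8GB floor:", ", ".join(below))
```

Of the cards we tested, the ones that land below the floor are the Radeon Pro WX 4100, GeForce RTX 2060, GeForce GTX 1660 Ti, and Quadro P2000. Capacity is only one factor, of course, and this says nothing about how each card performs.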

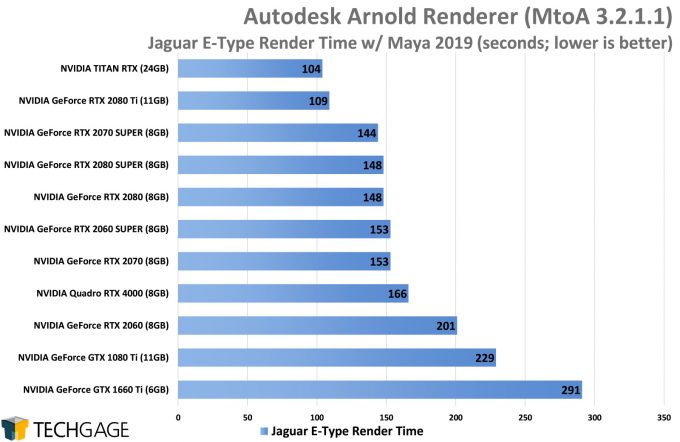

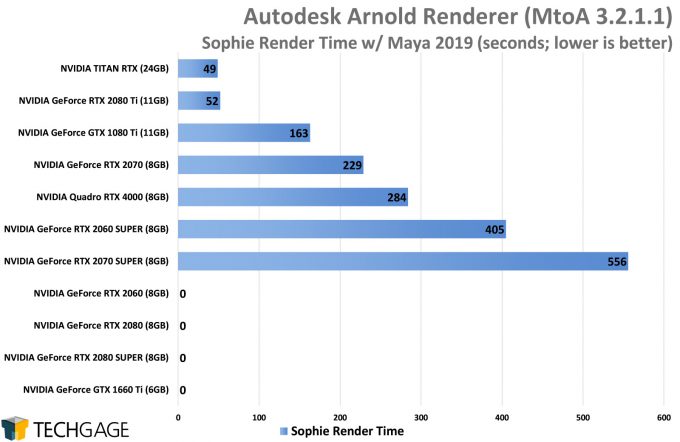

Arnold CPU & GPU Rendering

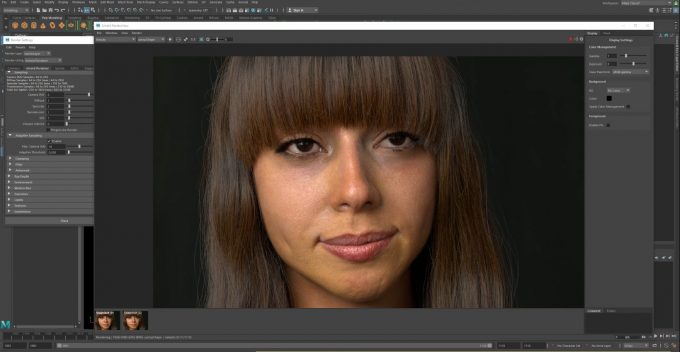

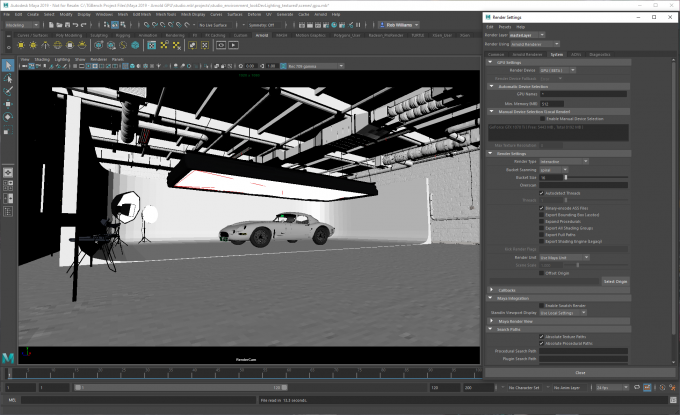

For our Arnold testing, we’re using a recent version of the plugin that hasn’t changed its performance characteristics between the time of our testing and the release of the most recent MtoA 3.3.0.1 version. The projects we’re testing with were supplied to us by Autodesk, helping us to take care of both an automotive and character render workload:

It only takes a quick glance to see trends in CPU rendering performance here. First and foremost, AMD’s Zen 2-based CPUs perform extremely well, with the current-gen 12-core Ryzen 9 3900X comfortably beating the last-gen 12-core Threadripper 2920X. Likewise, the 8-core 3700X leaps well ahead of the last-gen 2700X.

Overall, none of Intel’s chips manage to overtake an AMD chip with an equal core count, which is pretty impressive for AMD. With the 32-core 2990WX performing so well here, we’re really keen on seeing how a Zen 2 Threadripper variant will compare, or better still, one with even more cores. Likewise, Intel has more many-core CPUs en route, so this performance outlook will likely change up a bit in the months ahead.

Until earlier this year, Arnold had spent its entire life as a CPU renderer. With hugely parallel GPUs able to accelerate ray traced workloads so well, though, Arnold’s developers have been hearing user demands for graphics card support for ages. We were quick to jump on the first beta when it was released, and have since made it a permanent part of our suite. But alas, there are some caveats:

Arnold GPU doesn’t currently support the feature set of Arnold CPU 1:1, but it’s inching ever closer all the time. At last check, it’s missing some functionality with cameras and lights, and is missing support for some shaders and shapes, but most features seem to be supported. All of the color managers and operators are supported, and we wouldn’t be surprised if all of these categories were marked as complete come next year.

A second, and rather major, caveat is that Arnold GPU was born as an NVIDIA CUDA-based renderer, with future intent to extend to OptiX for ray tracing acceleration with RTX. That means that AMD GPUs are not supported, and we’re not currently sure of the company’s thoughts on changing that. It’s our hope that OpenCL, or another AMD-supporting API like Vulkan, will be adopted in the future.

Nonetheless, let’s check out the performance:

Our character render results are nonsensical, but they have been since we first tested the project out this past spring. We’re not sure why very specific GPU models suffer an odd bug that literally prohibits rendering of that particular project, but it’s likely due to how the renderer is allocating resources, triggered by very specific configurations. We saved a new version of the project with the most up-to-date Maya and Arnold, and retested these GPUs with the latest 436.* driver, and none of them fared any differently.

Clearly, the faster your GPU, the better your render time with Arnold. Yet again, the 2080 Ti and TITAN RTX rule at the peak, while the rest scales largely with what you pay for. That said, it does seem that Turing brings about great improvements gen-over-gen, especially if you compare the 1080 Ti to the RTX 2080 Ti.

Final Thoughts

With all of that performance information behind us, it should be easy to drum up some conclusions, right? Well, not really. As with all workloads, it pays to understand where hardware strengths lie, but with Maya, certain strengths may matter more than others depending on how you’re using the software.

SPECapc and SPECviewperf disagree a bit on viewport scaling, with the former in particular offering more than the expected number of anomalies with AMD GPUs. The Radeon Pro WX 9100 performed great with SPECapc, but didn’t fare quite as well with SPECviewperf. NVIDIA definitely rules the top of the charts, but we don’t think the higher-end current-gen Radeons would realistically fall too far behind, especially based on the more expected scaling seen with SPECviewperf.

For those wanting Maya to be a simple all-in-one solution, NVIDIA’s GPUs are going to be more attractive based on the fact that they hold nothing back in performance, and represent the only vendor that will be supported in Arnold GPU for quite some time (we have no idea if AMD support is planned).

AMD users wanting to use their GPU for both viewport and rendering in Maya will need to opt for a different renderer, such as AMD’s own Radeon ProRender. Maya is one of the two design suites (the other being Blender) for which the ProRender plugin is guaranteed to be updated on a regular basis, and the lack of AMD GPU rendering support in Arnold could be a good reason for that.

Overall, we’d say that an AMD CPU and NVIDIA GPU is the safest pairing for Maya, based on the value of the CPUs, and on the performance and Arnold support offered by the GPUs. Intel CPUs are just fine for rendering, and AMD’s GPUs are fine for viewport – but pairing a CPU like the Ryzen 9 3900X (~$500) and a GPU like the RTX 2060 SUPER (~$400) will deliver a well-rounded and well-supported Maya experience for the cost. Even the 8-core Ryzen 7 3700X (~$330) managed to really impress against the 8-core Core i9-9900K (~$500).
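For anyone who wants to weigh those pairings against a budget, the arithmetic can be sketched in a few lines. The prices are the approximate figures quoted in the paragraph above; the `CPUS` and `GPUS` dictionaries and the `pairings_within` helper are hypothetical names used only for this example.

```python
from itertools import product

# Approximate prices (USD) quoted in the paragraph above.
# CPUS, GPUS and pairings_within are illustrative names only.
CPUS = {
    "Ryzen 9 3900X": 500,
    "Ryzen 7 3700X": 330,
    "Core i9-9900K": 500,
}
GPUS = {
    "RTX 2060 SUPER": 400,
}


def pairings_within(budget):
    """Return (cpu, gpu, total) tuples at or under budget, cheapest first."""
    combos = [
        (cpu, gpu, cpu_price + gpu_price)
        for (cpu, cpu_price), (gpu, gpu_price)
        in product(CPUS.items(), GPUS.items())
        if cpu_price + gpu_price <= budget
    ]
    # sorted() is stable, so equal totals keep their listing order.
    return sorted(combos, key=lambda combo: combo[2])


if __name__ == "__main__":
    for cpu, gpu, total in pairings_within(1000):
        print(f"{cpu} + {gpu}: ~${total}")
```

With these figures, the 3700X pairing comes to roughly $730, while the 3900X and 9900K pairings both land at roughly $900. Adding further parts to either dictionary extends the comparison without changing the function.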

For those dealing with the heaviest of projects, both the 11GB RTX 2080 Ti (~$1,199) and 24GB TITAN RTX (~$2,499) are safe choices – if your wallet can handle it. On the CPU side, you can go with a really big CPU for fast rendering now, or opt for a mid-range one that will serve the purpose now, and then pass on its work to the GPU in the future. In time, we will be seeing heterogeneous rendering in Arnold with OptiX, but it’s impossible to make judgments (or decisions) based on that right now (if you want to see how OptiX performs, you can check our Blender article to see the performance that might be coming to Arnold). It’s hard enough to do that with the straight GPU renderer, given it’s still in beta.

The fact that Arnold GPU is in beta may have some wondering if the tag will ever be removed. What we know is that from the beginning, Arnold GPU has been planned to offer 1:1 render quality with the CPU renderer, and that’s a message Autodesk reiterated during presentations this year. Pixel compatibility is just part of the concern; thankfully, Autodesk plans for complete API support, as well.

See? It’s not that simple to sum things up. If you’re finding yourself still left with questions, feel free to leave them in the comments!
