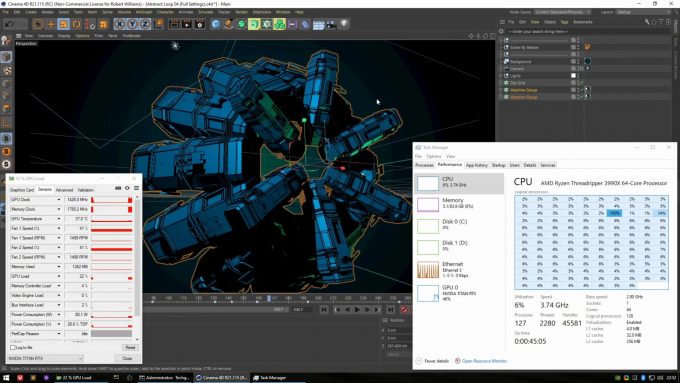

Which CPU Rules Them All? A Look At Performance In Maxon’s Cinema 4D R21

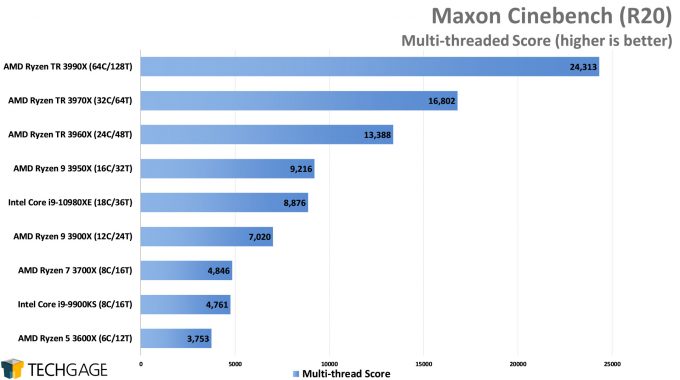

How well does that new CPU you’ve got an eye on accelerate your Cinema 4D workloads? We’re going to find that out with the help of static and animation renders, as well as some simple viewport tests. For good measure, we’ll toss Cinebench results in as well, to see how those agree with our real-world testing.

For about as long as Techgage has existed, we’ve been covering rendering performance in Maxon’s Cinema 4D. Cinebench is a well-known benchmark at this point, and because it both represents a useful workload and is easy to run, it’s long been a no-brainer inclusion in our content. In recent years, we added the real Cinema 4D suite to our testing to see how real-world results align with CB’s.

It was a different world when we posted our first Cinebench results way back in 2006. Those revolved around an Intel dual-core, the Pentium D 820, built on a 90nm process. Today, we have 64-core CPUs built on a 7nm process. The amount of performance you can get out of a single workstation PC nowadays is simply astonishing.

Cinema 4D’s default render engine is Physical, which is completely CPU-bound, and thus benefits greatly from high core counts. A secondary built-in renderer is AMD’s Radeon ProRender, which can render on the CPU but focuses primarily on the GPU. Whichever of these renderers you use will dictate which hardware you should prioritize in a new workstation. That is, unless you use a third-party option.

This article focuses on C4D’s Physical renderer, which uses Intel’s Embree as its backend. Those interested in CPU rendering performance for Arnold, Corona, KeyShot, or V-Ray should check out our AMD Ryzen Threadripper 3990X review, while those after GPU rendering performance for Arnold, Redshift, Octane, or V-Ray should check out our article from early January.

Performance Testing Cinema 4D R21

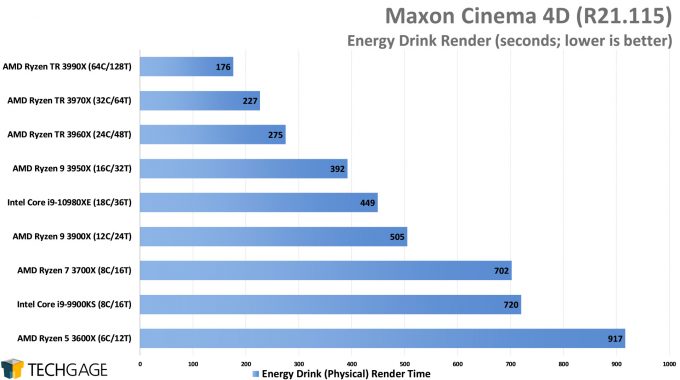

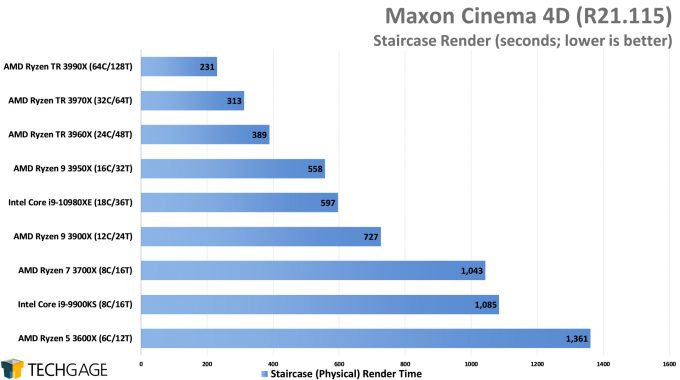

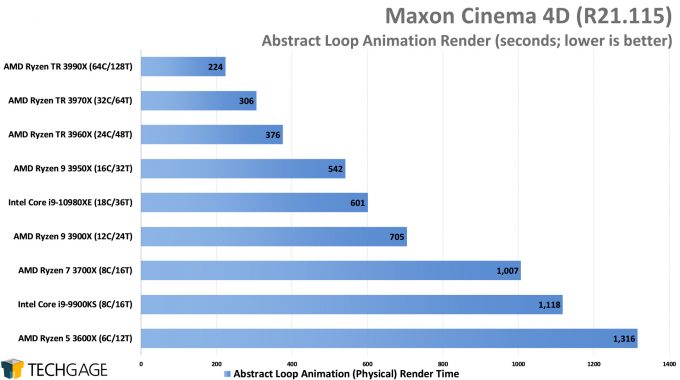

In this article, we’re including the same Cinebench results that we benchmarked for our Threadripper 3990X review, but we have since reinstalled each CPU to test C4D R21.115 with some fresh real-world projects. These include a render of an energy drink, such as for advertising use, and a bedroom render that makes us want to improve our own living spaces. We’ve also added an abstract art animation, although as we’ll see later, its scaling doesn’t differ much from single-frame rendering.

We’ll explain more about each test as we come to them. Here’s a quick look at our tested hardware:

Techgage Workstation Test System(s)

| Processor | Cores/Threads | Base Clock | Platform |
|---|---|---|---|
| AMD Ryzen Threadripper 3990X | 64C/128T | 2.9GHz | AMD TRX40 |
| AMD Ryzen Threadripper 3970X | 32C/64T | 3.7GHz | AMD TRX40 |
| AMD Ryzen Threadripper 3960X | 24C/48T | 3.8GHz | AMD TRX40 |
| AMD Ryzen 9 3950X | 16C/32T | 3.5GHz | AMD X570 |
| AMD Ryzen 9 3900X | 12C/24T | 3.8GHz | AMD X570 |
| AMD Ryzen 7 3700X | 8C/16T | 3.6GHz | AMD X570 |
| AMD Ryzen 5 3600X | 6C/12T | 3.8GHz | AMD X570 |
| Intel Core i9-10980XE | 18C/36T | 3.0GHz | Intel X299 |
| Intel Core i9-9900KS | 8C/16T | 4.0GHz | Intel Z390 |

| Platform | Motherboard | Cooling | Chassis |
|---|---|---|---|
| AMD TRX40 | ASUS ROG Zenith II Extreme | NZXT Kraken X62 | Cooler Master MasterCase H500P Mesh |
| AMD X570 | ASRock X570 Taichi | Corsair Hydro H115i PRO RGB | Fractal Design Define C |
| Intel Z390 | ASUS ROG STRIX Z390-E GAMING | Corsair Hydro H100i V2 | NZXT S340 Elite |
| Intel X299 | ASUS ROG STRIX X299-E GAMING | NZXT Kraken X62 | Corsair Carbide 600C |

| Shared Component | Configuration |
|---|---|
| Graphics | NVIDIA TITAN RTX |
| Memory | Corsair VENGEANCE (CMT64GX4M4Z3600C16) 4x16GB; DDR4-3600 16-18-18 |
| Et cetera | Windows 10 (1909) |


For details on our testing methodology, you can check out our other CPU reviews linked previously. The most important aspects are that motherboards are configured with MCE (MultiCore Enhancement) disabled and XMP enabled, and that each test is run at least twice, with additional runs to verify results if required, although run-to-run deltas are usually under 1%.
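As a rough illustration of the “run at least twice, re-run if needed” rule, the sketch below computes the spread between repeated render times and flags whether another run is warranted. The 1% threshold mirrors the typical delta mentioned above but is an assumption here, and the function names `run_delta` and `needs_rerun` are hypothetical; this is not our actual test tooling.

```python
def run_delta(times_s: list[float]) -> float:
    """Relative spread between the fastest and slowest run, as a fraction of the fastest."""
    if len(times_s) < 2:
        raise ValueError("need at least two runs to compare")
    fastest, slowest = min(times_s), max(times_s)
    return (slowest - fastest) / fastest


def needs_rerun(times_s: list[float], threshold: float = 0.01) -> bool:
    """Hypothetical rule: request another run when the spread exceeds `threshold` (1% assumed)."""
    return run_delta(times_s) > threshold


if __name__ == "__main__":
    print(needs_rerun([120.0, 120.6]))  # 0.5% spread -> False
    print(needs_rerun([120.0, 123.0]))  # 2.5% spread -> True
```

Measuring the spread against the fastest run keeps the check independent of how many runs were taken.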

While a GPU is listed here, it doesn’t matter much for any of the tests included in this article. As mentioned and linked above, we have other articles that tackle rendering in other engines, for both CPU and GPU. For now, let’s jump into out-of-the-box C4D rendering performance:

Rendering Performance

At a quick glance, it’s easy to surmise that all three of these renders scale the same way across the board. There are some exceptions where certain models inch a bit closer together in one render than in another, but overall, the scaling is clean. That’s a great thing, since it means you can be confident of actually taking advantage of the biggest CPUs regardless of your project design.
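One way to put a number on “clean” scaling is parallel scaling efficiency: the speedup of a bigger chip over a baseline, divided by the ratio of their core counts. The sketch below assumes render times in seconds; the function names and example inputs are hypothetical, not our measured results. This simple ratio ignores clock speed and architecture differences, so it is only meaningful between closely related chips, such as models within one Threadripper generation.

```python
def speedup(baseline_time_s: float, time_s: float) -> float:
    """How many times faster a chip finishes the same render than the baseline chip."""
    return baseline_time_s / time_s


def scaling_efficiency(baseline_time_s: float, baseline_cores: int,
                       time_s: float, cores: int) -> float:
    """Speedup divided by the core-count ratio; 1.0 means perfectly linear scaling."""
    return speedup(baseline_time_s, time_s) / (cores / baseline_cores)


if __name__ == "__main__":
    # Hypothetical inputs: an 8-core chip at 200 s vs. a 16-core chip at 110 s.
    print(round(speedup(200.0, 110.0), 2))                       # 1.82
    print(round(scaling_efficiency(200.0, 8, 110.0, 16), 2))     # 0.91
```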

Does Cinebench agree?

For the most part, Cinebench agrees with our real-world tests on where each CPU stacks up against its relative competition, but it’s clear that the standalone benchmark produces even more attractive performance deltas than our tests do. There may well be projects that align better with Cinebench’s scaling, but none of our three happens to match it.

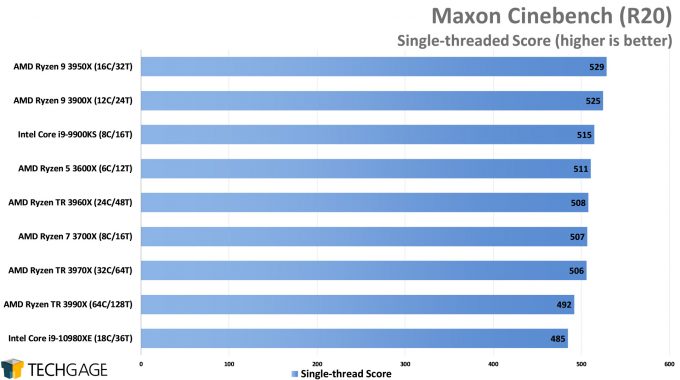

While no one is going to render with a single thread, Cinebench’s ST score has become nearly as closely watched as its MT score, because it lets us peer into clock speed and IPC advantages between chips. With CB R15, Intel usually took the lead over AMD, but with Zen 2 and CB R20, which utilizes AVX instructions, AMD manages to topple its competitor.
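Since a single-thread score is roughly the product of IPC and clock speed, dividing it by the clock a chip actually sustained during the run gives a crude per-clock (IPC) comparison. This is a back-of-the-envelope sketch: the function names and inputs are hypothetical, and real boost clocks fluctuate during a run, so treat the result as an approximation rather than a measured IPC figure.

```python
def score_per_ghz(st_score: float, sustained_clock_ghz: float) -> float:
    """Single-thread score normalized by clock speed: a rough per-clock (IPC) proxy."""
    if sustained_clock_ghz <= 0:
        raise ValueError("clock speed must be positive")
    return st_score / sustained_clock_ghz


def relative_ipc(chip_a: tuple[float, float], chip_b: tuple[float, float]) -> float:
    """Ratio of per-clock scores; above 1.0 means chip A does more work per clock than chip B."""
    return score_per_ghz(*chip_a) / score_per_ghz(*chip_b)


if __name__ == "__main__":
    # Hypothetical (score, sustained GHz) pairs, not measured results:
    # equal scores, but chip A gets there at a lower clock.
    a = (500.0, 4.5)
    b = (500.0, 5.0)
    print(round(relative_ipc(a, b), 3))  # 1.111
```

The example shows why a higher-clocked chip can still trail in single-thread scores: matching a score at a lower clock implies more work done per clock.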

Considering what we know about the 9900KS, such as the fact that it boosts to 5GHz on all cores, we were surprised not to see Intel take the lead here. But this is where things get interesting. Let’s jump into a look at viewport performance:

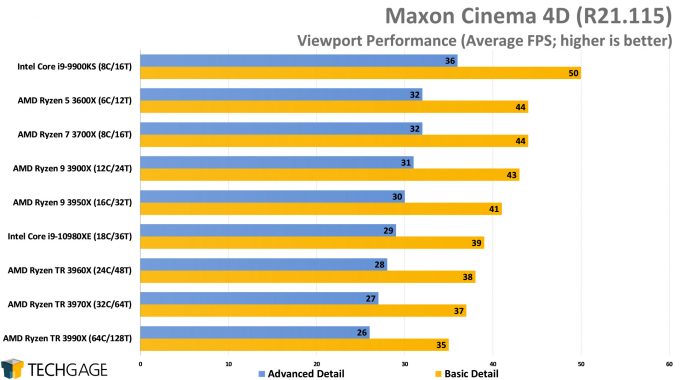

Viewport Performance

When we first started including rendering performance from the actual Cinema 4D suite on our site, we ultimately wanted to expand our testing to include animation and viewport performance. What we found during our in-depth testing for this article is that the viewport isn’t nearly as GPU-heavy as you might think, at least for the time being. Our scripted tests gave us the same frame rate on both a $350 and a $2,500 NVIDIA GPU.
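A scripted playback test ultimately boils down to timing frames. Assuming you have a list of per-frame draw times in milliseconds (how you capture them inside Cinema 4D is outside the scope of this sketch), the average frame rate and a “1% low” figure can be derived as below. The function names and the 1% low definition used here (the frame rate across the slowest 1% of frames) are assumptions, not a description of our actual test script.

```python
import math


def average_fps(frame_times_ms: list[float]) -> float:
    """Frames drawn divided by total elapsed time in seconds."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def one_percent_low_fps(frame_times_ms: list[float]) -> float:
    """Average FPS across the slowest 1% of frames (at least one frame)."""
    slowest = sorted(frame_times_ms, reverse=True)
    count = max(1, math.ceil(len(slowest) / 100))
    return average_fps(slowest[:count])


if __name__ == "__main__":
    # Hypothetical capture: 99 frames at ~16.7 ms (60 FPS) plus one 50 ms hitch.
    times = [1000.0 / 60.0] * 99 + [50.0]
    print(round(average_fps(times), 1))          # 58.8
    print(round(one_percent_low_fps(times), 1))  # 20.0
```

Reporting a low alongside the average helps separate a viewport that is uniformly slower from one that is mostly smooth with occasional hitches.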

When we monitored CPU usage in Task Manager during both manual viewport movement and animation playback, we found that either operation heavily utilized only a single thread. Because of this, high core count CPUs are not going to improve viewport performance, and since they tend to run at lower clock speeds, they could in fact hurt it. But “hurt” should be in quotes.
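Why extra cores can’t help a workload pinned to one thread is captured by Amdahl’s law: if only a fraction p of the work can run in parallel, the ideal speedup on n cores is 1 / ((1 - p) + p / n). The sketch below is a general model, not something we measured; the parallel fractions in the example are hypothetical stand-ins for a mostly single-threaded viewport and a highly parallel renderer.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup on `cores` cores when only `parallel_fraction` of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    for cores in (8, 64):
        viewport = amdahl_speedup(0.10, cores)  # hypothetical: mostly single-threaded
        render = amdahl_speedup(0.99, cores)    # hypothetical: almost fully parallel
        print(cores, round(viewport, 2), round(render, 1))
```

In this model, the viewport-like case barely moves between 8 and 64 cores while the render-like case keeps climbing. Because the model assumes equal per-core speed, what it leaves out (clock speed) is exactly what then decides viewport performance.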

We debated including these results because we don’t believe they’re hugely relevant to real-world use, but because these findings contrast with Cinebench a bit, we decided to include them anyway.

In our playback test, Intel’s 5GHz 9900KS glued itself to the top of the chart, while performance tapers off as core counts go higher. That’s natural, since higher core count chips generally have lower clock speeds. In Cinebench’s single-thread test, AMD rules the roost, but when competing Intel and AMD chips are compared here (e.g., the 10980XE vs. Threadripper), Intel has the overall advantage.

Again, we should stress that the real-world benefits to be derived from these results are what we’d consider minimal, as we’re talking about viewport interactions, which don’t need to hit a perfect 60 FPS to be completely usable. If you want evidence that viewport performance shouldn’t be overemphasized, consider the fact that Maxon removed the viewport test from Cinebench R20. Clearly, that was done for a reason.

That all said, the advantage of higher-clocked parts is worth noting if you use C4D with only a GPU renderer, such as Redshift. In that case, the CPU no longer matters much for rendering, so a higher-clocked model might be best; for example, an Intel Core i9-9900KS paired with an NVIDIA GeForce RTX 2080 Ti might serve you better than a single massive CPU. No one’s workload is exactly the same, so what works for you may not work for someone else. As always, it pays to know your workload.

Final Thoughts

As mentioned earlier, this article examines performance in Cinema 4D’s Physical renderer, as well as in the viewport. Viewport performance doesn’t matter a great deal if you rely on CPU rendering, but if you focus more on GPU rendering, you may want to opt for a CPU with a lower core count (e.g., 8) but higher clocks.

Fortunately, choosing hardware for rendering is fairly easy, as long as performance scales according to expectations. Cinema 4D’s largely does, with the higher core count CPUs scaling nicely against one another. In terms of the best bang for the buck, it completely depends on your needs and budget. The most demanding workloads would run best on the $3,990 64-core Threadripper, but the best “bang for the buck” in that area is likely the $1,999 32-core, since the 64-core doesn’t quite double performance. That said, the time savings seen here will still matter a lot for most studios. If each frame renders even 10 seconds quicker, that’s 10 seconds multiplied by every single frame. That’s considerable.
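The arithmetic behind both points is simple enough to sketch. The first function multiplies a per-frame saving by a frame count; the 900-frame example (a hypothetical 30-second animation at 30 FPS) is an assumption. The second computes the speedup a pricier chip needs just to match a cheaper one on performance per dollar, using the $3,990 and $1,999 prices quoted above. Function names are hypothetical.

```python
def total_time_saved_hours(seconds_saved_per_frame: float, frames: int) -> float:
    """Per-frame savings multiplied across an entire animation, in hours."""
    return seconds_saved_per_frame * frames / 3600.0


def break_even_speedup(price_expensive: float, price_cheap: float) -> float:
    """Speedup the pricier CPU needs to match the cheaper one on performance per dollar."""
    return price_expensive / price_cheap


if __name__ == "__main__":
    frames = 30 * 30  # hypothetical: 30-second animation at 30 FPS
    print(total_time_saved_hours(10.0, frames))           # 2.5 (hours)
    print(round(break_even_speedup(3990.0, 1999.0), 3))   # 1.996
```

Since the 64-core chip needs nearly a 2x speedup over the 32-core to break even on performance per dollar, and it doesn’t quite double performance, the 32-core wins on value while the 64-core still wins on raw time.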

For more down-to-earth workstations, you’d really want to consider a chip like the 3950X, as its 16 cores manage to overtake Intel’s 18 in this test, and unlike the $1,000 10980XE, you can actually find the $750 3950X available for sale. We’re hesitant to recommend anything lower-end than this, because if CPU rendering is the biggest performance concern in your workload, it doesn’t pay to skimp on the CPU.

Again, if GPU rendering is your bag, then you’ll want to check out the respective performance in our previous articles. Even though those tests use different software, performance should largely scale the same way. GPU renderer folks can check out our Arnold, Redshift, Octane, and V-Ray performance in our article from early January, while performance for the Arnold, Corona, KeyShot, and V-Ray CPU renderers can be perused in our Threadripper 3990X review.

If you have questions not answered in this article, please hit up the comments and let us hear it. We hope this article has proven useful to you, and if not, we also want to hear from you, so that we can improve our methods the next time around.
