NVIDIA GTC Europe 2017: Early Access To Holodeck & Debut Of DRIVE PX Pegasus

NVIDIA sure loves its autos. At each and every GTC over the past number of years, we’ve heard the company tell us how it’s going to change our driving (or perhaps our “not” driving). At GTC Europe 2017, the company dropped two bombshells: the debut of DRIVE PX Pegasus, and early access to its VR design suite, Holodeck.

Over in Munich, Germany, NVIDIA is holding its annual European GTC (GPU Technology Conference). This is a place for NVIDIA to ‘talk shop’ with its partners, as well as to keep the industry apprised of its ongoing development plans. This year, automotive and AI took center stage as the industry pushes for self-driving cars.

NVIDIA had a number of announcements, including the new DRIVE PX Pegasus, a platform capable of 320 trillion operations per second that is designed to serve as the brain of robotaxis, and an early access program for its much-hyped Holodeck collaborative virtual design suite.

NVIDIA DRIVE PX Pegasus

Self-driving vehicles are one of the biggest challenges the automotive industry has had to tackle in a long time. While attempts have been made over the decades, they all required some form of infrastructure to be installed on existing roads in order to work. Teaching cars to drive on existing roads, while obeying all applicable laws and reacting to unexpected incidents, has proven to be a monumental challenge.

Simply fitting a car with a bunch of sensors and a GPS unit is woefully inadequate for real-world driving. While more sensors give the system a better picture of its environment, they also put a heavy load on its brains. Taking in all of the sensor data, processing it, and then making a decision is very demanding and power-intensive. Currently, most self-driving test vehicles use desktop PCs tucked away in the boot, complete with GPUs to handle the computational load. Slowly, though, the industry is moving over to more power-efficient embedded systems, like NVIDIA’s DRIVE PX.

The latest DRIVE PX system, Pegasus, offers more than 10x the performance of its predecessor, and is capable of 320 trillion operations per second (TOPS). In a briefing, we were told that this was the result of NVIDIA’s ‘unannounced next-gen GPU’, something beyond Volta (which itself hasn’t even made an appearance on GeForce yet).

While Volta isn’t ‘unannounced’, it isn’t exactly widely available either: the only commercial product using it today is the Tesla V100. This compute card is available primarily through select system builders, and is also found in NVIDIA’s own $75,000 DGX-1. Still, Volta is NVIDIA’s latest GPU architecture, and it introduced a number of AI-specific advances that have fueled an explosion in deep learning. Chief among them are Tensor Cores, the deep-learning-specific processing units introduced with Volta, which are likely at the heart of Pegasus.

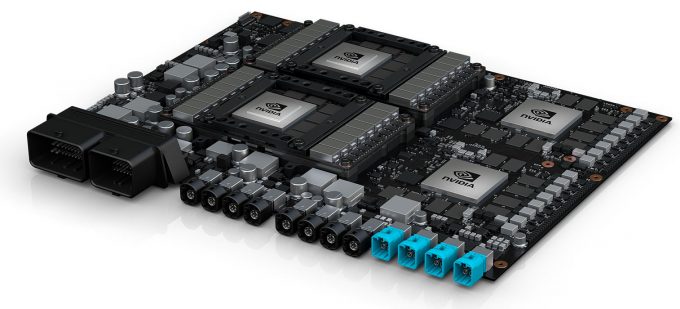

Pegasus is rated at 320 TOPS (trillion operations per second), not the TFLOPS (trillion floating-point operations per second) typically quoted for standard compute. NVIDIA did clarify that the 320 TOPS figure is spread across multiple core types, including Tensor Cores. In any case, that’s a lot of compute in a small 30 watt package. Each Pegasus unit is composed of two Xavier SoCs, which feature Volta-architecture graphics, plus two additional ‘next-gen’ discrete GPUs on the board (four chips in total). The only information given at this time is that the new GPUs feature ‘hardware created for accelerating deep learning and computer vision algorithms’.

These Pegasus units can be hooked up to a number of industry-standard sensors, including cameras, ultrasonics, radar and LiDAR systems, and offer multiple 10Gbit Ethernet interfaces. There is also a combined memory bandwidth of 1TB/s, so perhaps HBM2 is involved, much like on the Volta-based Tesla V100. DRIVE PX systems can even work in conjunction with full PCs equipped with graphics cards, which provide additional compute for on-the-fly learning. NVIDIA expects to start seeding Pegasus units to industry partners in H2 2018.

NVIDIA has also secured a deal with the world’s largest delivery company, Deutsche Post DHL, to outfit a fleet of StreetScooter electric delivery vans with autonomous driving capabilities, with help from ZF. So far, a prototype vehicle with six cameras, one radar and two LiDAR systems has been hooked up to an NVIDIA DRIVE PX system, which DPDHL trained with the help of a DGX-1 supercomputer; the vehicle is currently on display at the GTC event. DPDHL is one of 225 partners working with NVIDIA on the DRIVE PX platform.

NVIDIA Holodeck Early Access

Over the last year, NVIDIA has showcased a new project management and collaborative design system utilizing physically simulated VR environments, called Holodeck. These are multi-user systems where a development team can work on a project, in photo-realistic 3D, with a virtual reality HMD, in real-time. It’s called Holodeck for a reason.

We’ve seen Holodeck showcased before at GTC and SIGGRAPH, with a number of projects on display, from the likes of NASA and Koenigsegg. However, this is the first time the public will actually be able to put it to use on their own systems, through the early access program.

Holodeck combines a number of technologies: a 3D rendering system that works with head-mounted displays such as the Oculus Rift and HTC Vive, NVIDIA’s DesignWorks and VRWorks for application integration, and PhysX to make sure the world obeys the laws of physics. This same environment also runs Isaac Lab, NVIDIA’s training system for machine intelligence and learning, which was covered at GTC earlier this year.

To begin, you’ll be able to import 3ds Max and Maya projects into Holodeck, but be prepared: you’ll need a fairly hefty system to get the necessary performance. Early access requires either a GeForce GTX 1080 Ti, TITAN Xp, or Quadro P6000 graphics card, along with a suitable HMD and a capable core system (CPU and RAM) to run the projects.

Holodeck also ties in with other AR- and VR-related technologies that NVIDIA has worked on, as well as with its AI research into production rendering, including denoising with OptiX 5.0 on DGX-1 servers, Iray, and AI-assisted facial motion. These were covered previously at SIGGRAPH.

It won’t be long before we’re simulating cars rendered and designed through Holodeck, using a DRIVE PX system trained on a DGX-1 in Isaac, delivering parcels and packages through a completely autonomous vehicle. Yikes.
