Workstation GPU Performance Testing: Redshift, Blender & MAGIX Vegas

We test a lot of software to get a good gauge on workstation performance, but there’s always room for more – or at least possible replacements. In this article, we’re exploring the performance of three applications readers encouraged us to test: Blender and Redshift for rendering, and MAGIX’s Vegas for video encoding.

July 9, 2018 Addendum: Article was updated with more comprehensive MAGIX Vegas test results.

A couple of months ago, we published a look at updated workstation GPU performance across a wide range of benchmarks, and the feedback received has been like nothing we’ve seen before. Among that feedback were a number of requests to include other software, with Blender, Vegas, and Redshift all proving worthy of exploration.

Our content is ever-evolving, so over time, tests that no longer seem important may be dropped in favor of more relevant ones. Tying into that, we’re likely to make optimizations so that all of this testing can take place efficiently.

That all said, we’re always on the lookout for new tests, so we’re still open for suggestions, and likewise, if you spot ways any of our current testing could be improved, please feel free to comment. Contrary to popular belief, we couldn’t possibly understand dozens of pieces of software better than you understand the one you use nearly every day – so you may catch something we don’t.

Since this article focuses on just three tests, it’s not going to be exhaustive. However, all three tests were run with the latest graphics drivers on both the AMD and NVIDIA side, and as before, some gaming cards are thrown in to give a better overall picture of workstation GPU vs. gaming GPU performance.

| Techgage Workstation Test System | |
| --- | --- |
| Processor | Intel Core i9-7980XE (18-core; 2.6GHz) |
| Motherboard | ASUS ROG STRIX X299-E GAMING (1401 EFI) |
| Memory | HyperX FURY (4x16GB; DDR4-2666 16-18-18) |
| Graphics | AMD Radeon RX Vega 64 8GB (Radeon 18.6.1)<br>AMD Radeon RX 580 8GB (Radeon 18.6.1)<br>AMD Radeon Pro WX 7100 8GB (Radeon Pro 18.Q2.1)<br>AMD Radeon Pro WX 5100 8GB (Radeon Pro 18.Q2.1)<br>AMD Radeon Pro WX 4100 4GB (Radeon Pro 18.Q2.1)<br>AMD Radeon Pro WX 3100 4GB (Radeon Pro 18.Q2.1)<br>NVIDIA TITAN Xp 12GB (GeForce 398.11)<br>NVIDIA GeForce GTX 1080 Ti 11GB (GeForce 398.11)<br>NVIDIA Quadro P6000 24GB (Quadro 397.93)<br>NVIDIA Quadro P5000 16GB (Quadro 397.93)<br>NVIDIA Quadro P4000 8GB (Quadro 397.93)<br>NVIDIA Quadro P2000 4GB (Quadro 397.93) |
| Audio | Onboard |
| Storage | Kingston KC1000 960GB M.2 SSD |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | Corsair Carbide 600C Inverted Full-Tower |
| Cooling | NZXT Kraken X62 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 17134 |
| For an in-depth pictorial look at this build, head here. | |

As of the time of writing, the drivers used for testing are still current, so this is as up-to-date as possible (that also includes the PC itself, which has had its EFI and OS fully updated for the post-Spectre era).

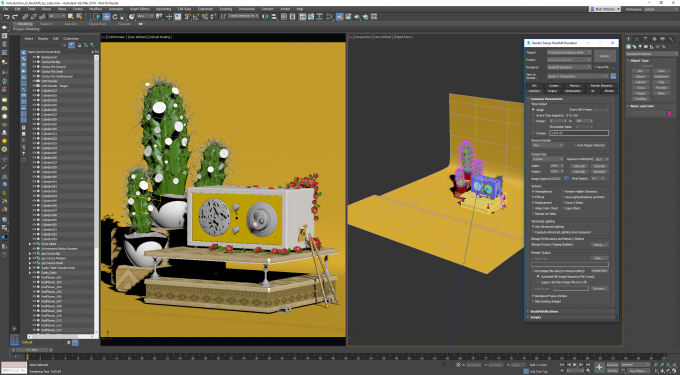

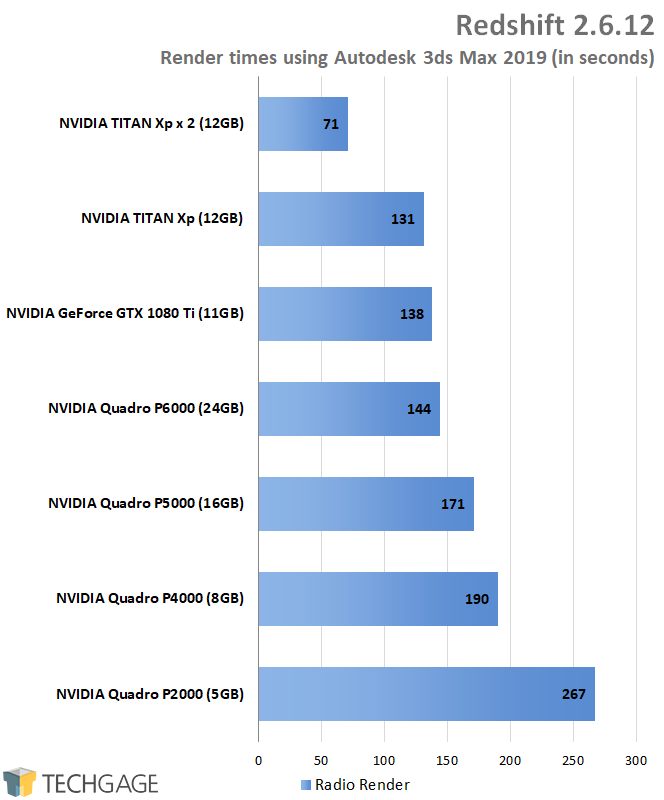

Redshift

Redshift calls its renderer the world’s first GPU-accelerated biased renderer, aiming to make the best possible use of the GPU hardware on hand, and deliver the resulting images as quickly as possible. This contrasts with unbiased renderers, which can often take a very long time to process, sometimes for the sake of superfluous quality changes.

At the moment, Redshift supports only CUDA cards, so by default it’s slightly less interesting than the other tests here, but for those who use Redshift, the results are no less important. Fortunately, support for AMD GPUs is planned for the future.

There are two great things about Redshift’s licensing worth noting. First is the fact that there’s a flat annual fee that allows you to use the renderer for any and all of the supported design suites (3ds Max, Maya, Softimage, Cinema 4D, Houdini, and Katana). Even better, there’s no limit on the free trial, outside of watermarked images, allowing you to easily compare our performance to yours – assuming you’re using 3ds Max as the suite of choice.

If you would like to compare your performance to ours, you can snag the Redshift free trial here, and then the project file here. You’ll want to render the scene at 1600×1920, making sure your GPU (or GPUs) are properly selected in the ‘System’ tab.

Like all good GPU-accelerated renderers worth their weight in bytes, Redshift takes great advantage of multiple GPUs. Adding a second TITAN Xp in our test returned a dramatic speed-up, and if you have really deep pockets, that’s just the start of how fast things can get. Redshift supports up to 8 GPUs per session, which ought to be enough for most users.
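If you’d like to put a number on how well a second (or third) GPU scales on your own machine, the arithmetic is simple enough to script. The following is a minimal sketch, not something Redshift provides: the function name `scaling_report` is our own, and the timings in the example are placeholders rather than results from our testing.

```python
def scaling_report(single_gpu_seconds: float, multi_gpu_seconds: float, gpu_count: int) -> dict:
    """Summarize how well a render scaled when going from one GPU to several.

    speedup    = single-GPU time divided by multi-GPU time
    efficiency = speedup divided by the number of GPUs (1.0 is perfect scaling)
    """
    if single_gpu_seconds <= 0 or multi_gpu_seconds <= 0:
        raise ValueError("render times must be positive")
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")

    speedup = single_gpu_seconds / multi_gpu_seconds
    efficiency = speedup / gpu_count
    return {"speedup": speedup, "efficiency": efficiency}


if __name__ == "__main__":
    # Placeholder timings (hypothetical), NOT measurements from this article.
    report = scaling_report(single_gpu_seconds=200.0, multi_gpu_seconds=125.0, gpu_count=2)
    print(f"speedup: {report['speedup']:.2f}x")
    print(f"efficiency: {report['efficiency']:.0%}")
```

An efficiency close to 100% means the extra card is pulling nearly its full weight; anything well below that suggests scene loading or other fixed overhead is eating into the gain.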

Worth noting is the fact that Quadro cards don’t gain a performance advantage in Redshift. The GTX 1080 Ti falls just behind the TITAN Xp, about as far as we’d expect given the theoretical performance delta between them. Ultimately, the faster the NVIDIA GPU, the more you’re going to love life while using Redshift. Just don’t forget to take memory into consideration: for some, an 11 or 12GB framebuffer might not be sufficient. On the Quadro P6000, upwards of 20GB was used during the render.

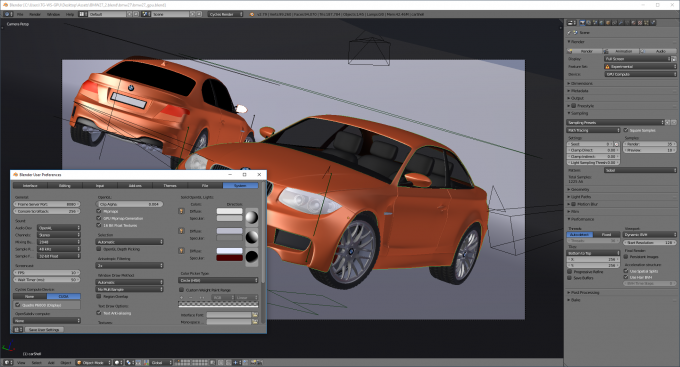

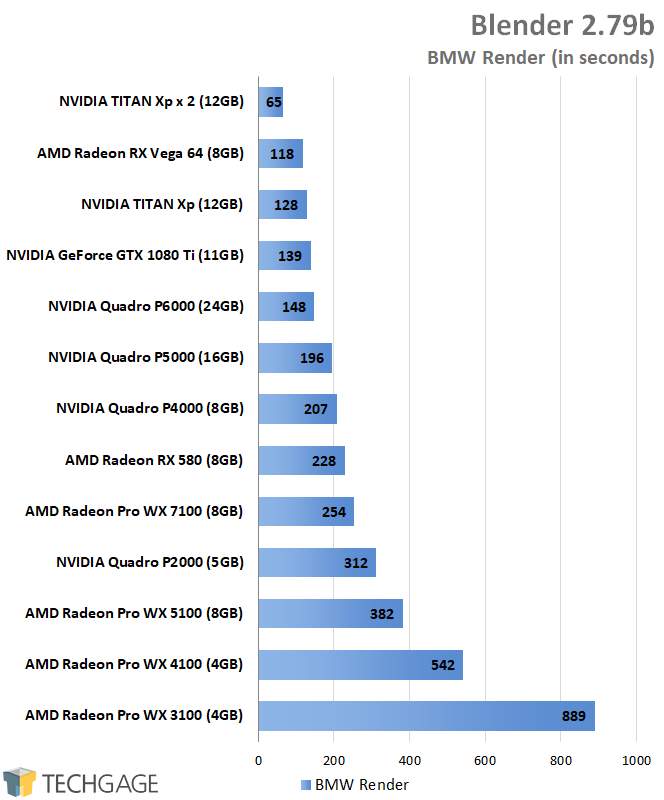

Blender

Blender needs no introduction, but I’ll give it one anyway. Blender is a free and open-source 3D design suite that really involves the community in every aspect of its design. There’s lots of documentation to be had, to help you make the most of the tool, and development is very active. The upcoming 2.80 version is going to add CPU+GPU rendering capabilities, which is one feature we’re eager to test out more.

Like Redshift, Blender offers a number of test projects for free, including the one used for testing here. You can snag the model from this blog post. When testing, you’ll want to make sure that your GPU is actively selected (seen in the shot above). For good measure, you may wish to hit up the ‘File’ tab as well, and enable auto-loading of Python scripts, unless you want to manually click the ‘Reload Trusted’ option after loading most of the sample projects.

There are a couple of immediate takeaways here. Like Redshift yet again, Blender can take great advantage of multiple GPUs, delivering dramatic gains when a second card is added. And this comes before the 2.80 update which should also allow CPUs to be added to the mix! Not the kind of sweet perk you’d usually expect from a free and open-source solution, is it?

AMD has a couple of interesting results here, as well. For starters, the Polaris-based WX line isn’t really ideal for Blender use in comparison to the competition, or even AMD’s own Vega chips. The WX 7100 performed pretty well overall, but NVIDIA’s similarly priced P4000 comes out ahead. What I admittedly didn’t expect is what Vega delivered: it performed better than every single NVIDIA GPU.

As far as I’m aware, AMD once donated an engineer to the Blender Foundation, which I can only imagine was done to improve performance on its hardware. That makes sense when you consider the fact that AMD regularly promotes Blender for use on its Zen-based CPUs. Until now, I had never known about the company’s prowess in GPU rendering within the same application.

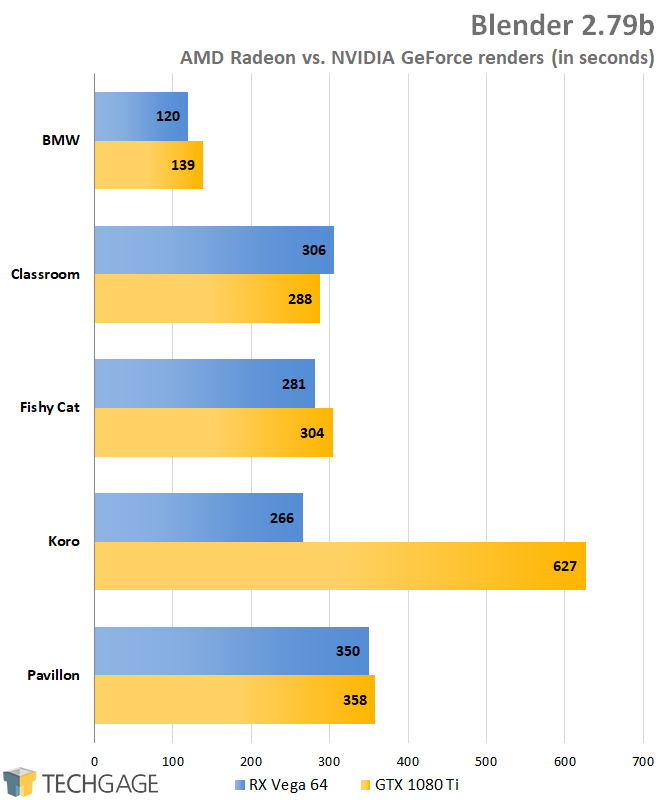

Wondering if AMD’s dominance was specific to the BMW render, I decided to take the Vega 64 once again and compare it against the GTX 1080 Ti. These cards are the closest competitors in this lineup; both are gaming-focused, and are priced similarly. Lo and behold, AMD still keeps ahead most often:

That Koro result is not a mistake, and no, I cannot answer why AMD performs so much better than NVIDIA in that one, but it’s a very repeatable result (as they all are). Ultimately, these results are exactly what we’d love to see from a vendor supporting free and open-source software. Blender and AMD Radeon seem to be made for each other – as long as we’re talking Vega. I’d sooner choose NVIDIA over AMD’s Polaris cards. While Cycles was the renderer of choice here, AMD users can also give Radeon ProRender a go.

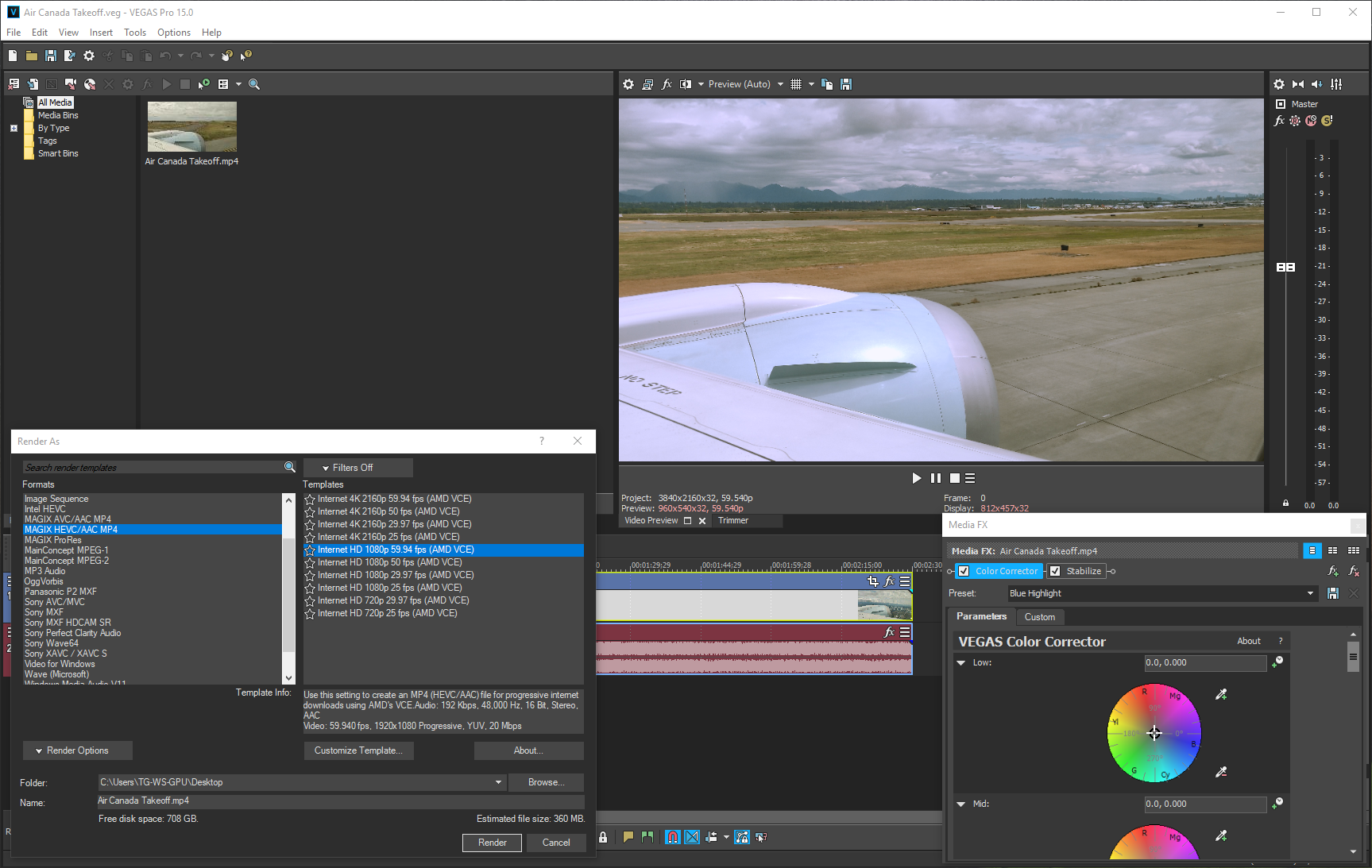

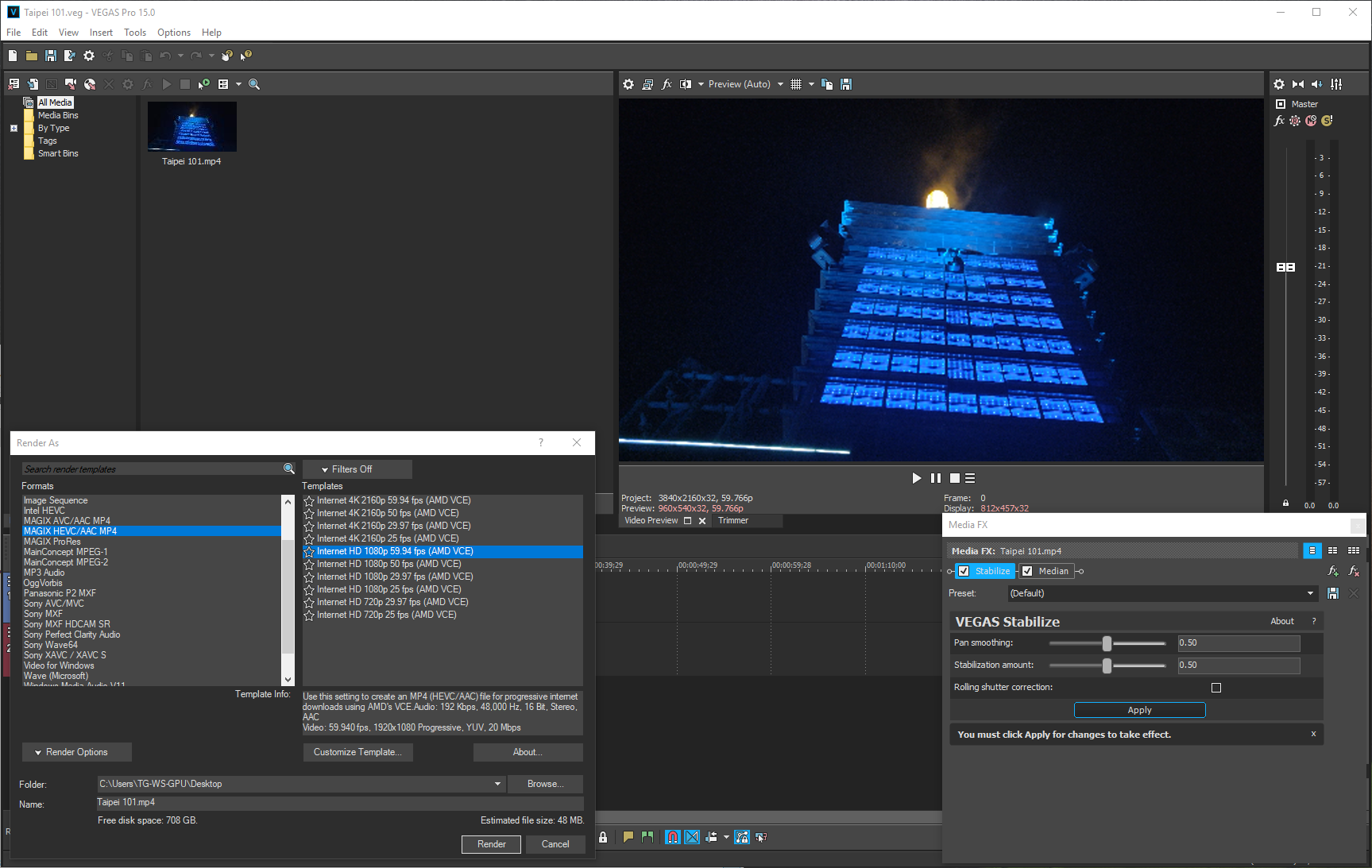

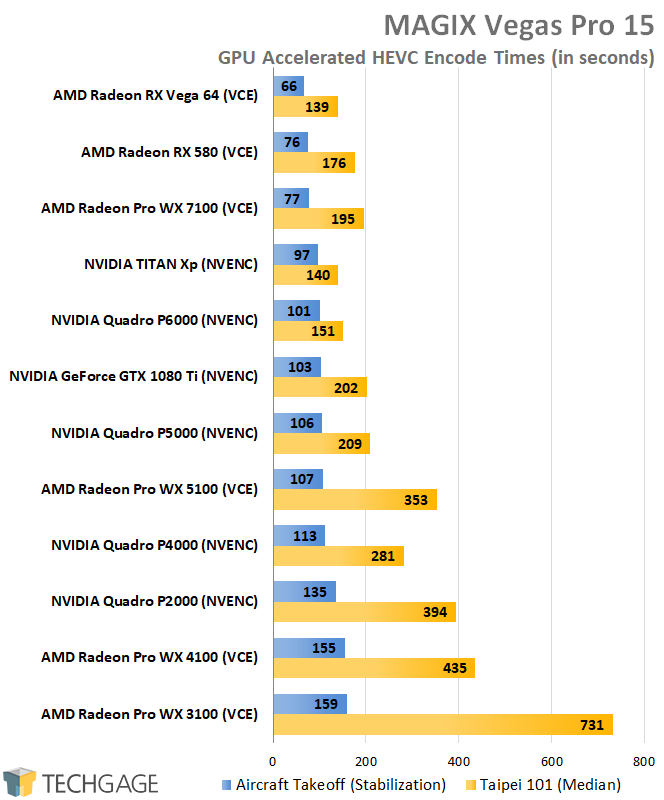

MAGIX Vegas

Vegas is an interesting tool to test with (and use), as is video encoding in general. You never really know exactly how a GPU is going to be utilized with encodes, because one encoder may utilize processors differently – or more effectively – than others. That being the case, it’s sometimes difficult to find a “great” video encode test, but fortunately, you don’t need to do too much in Vegas to get the GPU involved.

This article was originally published with testing that didn’t do much to satisfy my appetite, so over the weekend, two realistic projects were created with effects added in order to generate some better test results.

Both projects used for encoding here were captured with the OnePlus 6 at 4K/60, and the footage can be seen in our OnePlus 6 review. The first project is a 2.5-minute clip of an aircraft takeoff, where I apply both color correction and stabilization, choices that utilize about 30% of a Quadro P6000 overall. The second test is more grueling, adding stabilization and denoising (median) to a 20-second night scene atop the Taipei 101 tower in Taipei, Taiwan. That project utilizes the same GPU at about 80%.

For reference, the original performance results painted a great picture for AMD, and I’ll be honest: I was worried that retesting with proper projects would yield different results. Thankfully, that’s not the case:

Choosing two different projects to encode with here was a smart move, because it gives us a better understanding of what to expect overall. That wouldn’t really be the case if the projects scaled exactly the same (which is the case in most of our Blender tests, for example).
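For anyone wanting to check whether two of their own projects scale alike across cards, one rough approach is to normalize each project’s encode times to its fastest card and then compare the resulting order. The sketch below assumes nothing about Vegas itself: the helper names and the `card_a`/`card_b`/`card_c` timings are hypothetical placeholders, not figures from our charts.

```python
from typing import Dict, List


def relative_to_fastest(times: Dict[str, float]) -> Dict[str, float]:
    """Express each card's encode time as a multiple of the fastest card's time."""
    fastest = min(times.values())
    return {card: seconds / fastest for card, seconds in times.items()}


def ranking(times: Dict[str, float]) -> List[str]:
    """Return card names ordered from fastest (lowest time) to slowest."""
    return sorted(times, key=lambda card: times[card])


def same_ranking(project_a: Dict[str, float], project_b: Dict[str, float]) -> bool:
    """True when both projects order the cards identically."""
    return ranking(project_a) == ranking(project_b)


if __name__ == "__main__":
    # Placeholder encode times in seconds (hypothetical), NOT results from this article.
    light_project = {"card_a": 100.0, "card_b": 120.0, "card_c": 150.0}
    heavy_project = {"card_a": 90.0, "card_b": 60.0, "card_c": 120.0}

    print(relative_to_fastest(light_project))
    print(relative_to_fastest(heavy_project))
    print("same ranking:", same_ranking(light_project, heavy_project))
```

If the rankings differ between projects, as they do with these placeholder values, that’s a sign the two workloads stress the GPU differently, which is exactly why a second project is worth the effort.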

Overall, AMD continues to perform exceptionally well, leading the pack with a trio of its GPUs. With the aircraft project, AMD outright dominates, with even the WX 7100 outpacing NVIDIA’s top dogs. The green team fares a lot better in the median test, but overall, AMD still comes out ahead, with the Vega 64 beating out the TITAN Xp by a mere second.

As an unrelated side note, in talking to MAGIX, the company encouraged anyone using Vegas to take advantage of either its own AVC or HEVC encoder. Long-time users may still be using Sony’s XAVC, which will still use the GPU to a suitable degree, but going forward, you should consider MAGIX’s own encoders to be your standard choice (unless you use an older one for specific reasons, of course).

Final Thoughts

And with that, we wrap up our look at the performance of three applications we’ve never tackled much before. An exception would be Blender, since we have used the tool for CPU benchmarking for a while, but now that we know it’s so well suited as a GPU benchmark, it will continue to be included. The same goes for Redshift, as it’s the only biased renderer in our collection – and it happens to use GPUs really well.

In time, we’d like to explore DaVinci Resolve performance as well, as that’s been another oft-recommended one to include. Some others are in the “mulling” stages as well, but free time dictates how much can be tackled.

In the near future, we’ll follow up this content with a look at workstation-focused performance on a 16-core AMD Threadripper machine, pitting it against an equivalent 16-core Intel Core-X machine. That will preface AMD’s Ryzen Threadripper 2 launch, not to mention SIGGRAPH, which takes place in the middle of next month. There, either AMD or NVIDIA (or both) could launch some new hardware, so this is turning out to be quite an interesting summer for workstation users!

As always, if you have thoughts or suggestions, please feel free to leave a comment below.
