Benchmarks That Matter: Synthetic vs. Real-World

Since the launch of AMD’s third-gen Ryzen CPUs in July, there’s been a lot of chatter around the web with regard to which benchmarks matter, and which can be safely ignored. We’re rather passionate about benchmarking, so we decided to chime in and give our thoughts.

AMD’s launch was massive for a bunch of reasons. On one hand, AMD is offering great value with its latest Ryzen chips, thanks largely to the fact that it’s selling more cores for the same price as Intel (or for less, depending on the model). Intel still rules when it comes to clock speeds, and to some extent, IPC, but for the creators of the world, AMD’s additional cores will prove helpful.

A couple of weeks ago, an Intel slide deck began to make the rounds that tried to highlight the fact that AMD wasn’t good at anything other than Cinebench. We held off on reporting about these slides, because there was no guarantee that they had actually come from Intel. We’re still not going to share them here (TechPowerUp has them), since they weren’t sourced to us directly, but we do know now that Intel did in fact produce them.

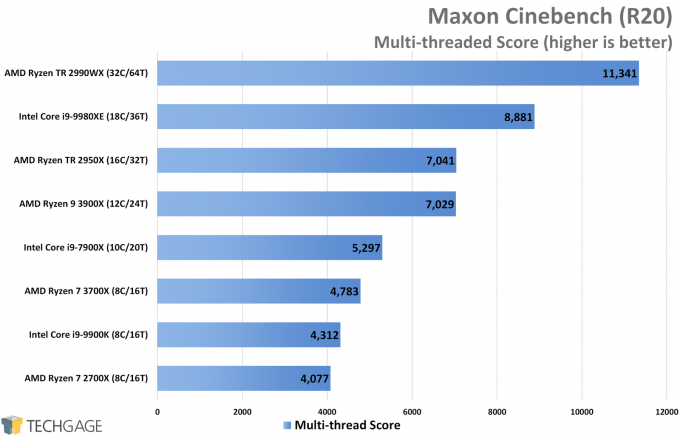

In one of the slides, Intel is shown to outperform AMD in Windows desktop and application performance, gaming, and some compute tasks. Even without testing the same applications Intel did, we can safely say that these claims are probably correct, based on all of our previous testing. That said, the company admits in the same slide that it falls well short in Cinebench R20, a result it dismisses as unimportant.

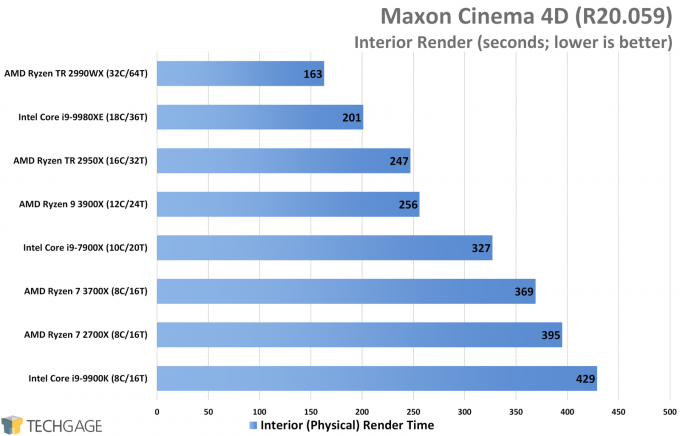

Most of our readers likely know that Cinebench is not a typical synthetic benchmark. In fact, it’s not a synthetic benchmark at all. It’s a standalone tool that runs a real rendering workload using the engine from Cinema 4D, a well-regarded and widely used 3D suite. Rendering on the whole is “niche”, but it’s this niche that directly benefits from AMD’s current offerings. It’s also a niche we happen to care a lot about, as our content section proves. OctaneBench is another example of a standalone benchmark that seems synthetic, but does apply directly to real-world performance.

To that end, we care a lot about our benchmarks, and strive to deliver ones that are as accurate and realistic as possible. In some cases, we’re forced to use synthetics for various reasons, but we aim to keep that to a minimum. We value real-world benchmarks first and foremost, because our readers don’t run benchmarks all day. While some synthetic benchmarks are useful as gauges of certain aspects of performance, we’d argue that it’s extremely difficult to get meaningful results from most of them.

For this reason, we don’t find much use in benchmarks that aim to test a hundred things in a couple of minutes. Such tools might be fine for simple comparisons, but you can’t glean useful metrics from them. We harp an awful lot on the simple fact that not all workloads are built alike, and if you look at our workstation GPU content, you’ll see quick proof of this.

We occasionally get asked to run such benchmarks, but we prefer to continue creating our own internal benchmarks, which are a hundred times more relevant. How is a single score supposed to accurately portray the capabilities of a processor? It can’t.
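To illustrate why one number can’t do the job, here’s a minimal sketch. It assumes a suite that folds per-workload results into a single composite using a geometric mean, which is a common approach but not necessarily what any particular suite does. The two CPUs and their scores are entirely hypothetical, chosen only to show how opposite strengths can collapse into the same composite.

```python
from math import prod


def geometric_mean(values):
    """Geometric mean: one common way to fold many sub-scores into one number."""
    return prod(values) ** (1 / len(values))


# Hypothetical relative scores (higher is better) for two made-up CPUs.
# These are illustrative numbers, not measured results.
cpu_a = {"rendering": 2.0, "gaming": 1.0, "office": 1.0}
cpu_b = {"rendering": 1.0, "gaming": 2.0, "office": 1.0}

composite_a = geometric_mean(list(cpu_a.values()))
composite_b = geometric_mean(list(cpu_b.values()))

print(f"Composite A: {composite_a:.3f}  Composite B: {composite_b:.3f}")
for workload in cpu_a:
    # Per-workload results reveal what the composite hides.
    print(f"{workload:>10}: A={cpu_a[workload]}  B={cpu_b[workload]}")
```

Both composites come out identical, yet a creator who spends their day rendering would get twice the performance from CPU A, while a gamer would prefer CPU B. Only the per-workload breakdown tells you which chip fits your work.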

BAPCo’s SYSmark has been in the crosshairs recently because Intel has been using it to promote its own performance, including in these aforementioned slides. We’re not exactly sure how the suite utilizes AMD and Intel processors separately, but it does use real applications. That said, not a single reader in fifteen years has asked us for Microsoft Excel performance, so we haven’t felt any urge to test with it. Photoshop and After Effects are far more worthy pursuits (which are also included in SYSmark).

Way back in 2011, AMD issued a warning that SYSmark couldn’t be trusted because it was optimized for Intel. At one of the Ryzen-specific tech days last year, we asked AMD for an update on its thoughts, and were again told it didn’t trust SYSmark at all. So when we see Intel primarily focusing on SYSmark in 2019, we’re of course a bit skeptical, especially when Intel is the lone processor company sponsoring the benchmark right now. AMD and NVIDIA used to support BAPCo, but they both left a while ago. Meanwhile, AMD and Intel agree on many other benchmarks, like those from UL and SPEC.

Ultimately, we would suggest caring more about real-world performance than about synthetic scores that don’t actually tell you anything. This is especially true if you are a creator, because while you may be able to expect different games to behave similarly on a GPU, ProViz workloads can sometimes scale much differently than you’d think.

We at Techgage still have some work to do, as well. There are more benchmarks we should focus on adding (three are in the works right now), especially those that test single-threaded performance a bit better, since we don’t have many that specifically target it. As always, we try to improve how we do things and which benchmarks we focus on, and ultimately, we’re not keen on irrelevant benchmarks.