
File Transfers Over 1Gbit/s Ethernet: SSD vs. HDD

As mentioned a couple of times recently in our news and content, we’re in the process of completely overhauling our motherboard test suite, to help ensure that we’re delivering the most relevant data possible. Since not all NICs are built equal, one addition we’ll be making is Ethernet testing, to see which integrated NIC will deliver the best networking experience.

Believe it or not, there are differences in NIC quality, and over the past week, Jamie and I tested a couple of different integrated solutions with various tools. So far, we’ve settled on a tool called JPerf, as it’s highly customizable and very reliable. We haven’t finalized an exact configuration as of the time of writing, but we do believe we have one (our lips are sealed for the time being!).

In our tests, especially where many simultaneous jobs are involved (think peer-to-peer or servers), some NICs fail a lot sooner than others. As one quick example, a Realtek NIC here failed after about 700 to 900 seconds, while Intel’s integrated solution lasted much longer, up to 1,300 seconds. Our testing might be a little “hardcore” by some standards, but it should do well to weed out the sub-par from the excellent.
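To illustrate what “many jobs at once” means in practice: JPerf is a Java front-end for iperf, and iperf’s `-P` option runs several client streams in parallel. The sketch below is a hypothetical, iperf-style multi-stream TCP throughput test in plain Python. It is not JPerf and not our (unpublished) configuration. It runs over loopback (`127.0.0.1`), so it exercises the OS network stack rather than a physical NIC; to test a real NIC, the sink would have to run on a second machine. `CHUNK`, `DURATION`, and every function name are assumptions chosen for illustration.

```python
import socket
import threading
import time

CHUNK = 64 * 1024   # bytes per send()/recv() call (assumed value)
DURATION = 0.5      # seconds each client stream transmits (assumed value)


def drain(conn: socket.socket) -> None:
    """Read and discard data until the client closes the connection."""
    with conn:
        while conn.recv(CHUNK):
            pass


def sink_server(listener: socket.socket, n_conns: int) -> None:
    """Accept n_conns connections, draining each one on its own thread."""
    workers = []
    for _ in range(n_conns):
        conn, _addr = listener.accept()
        worker = threading.Thread(target=drain, args=(conn,))
        worker.start()
        workers.append(worker)
    for worker in workers:
        worker.join()


def client_stream(port: int, totals: list, idx: int) -> None:
    """Send zero-filled chunks for DURATION seconds; record bytes sent."""
    payload = bytes(CHUNK)
    sent = 0
    with socket.create_connection(("127.0.0.1", port)) as sock:
        deadline = time.monotonic() + DURATION
        while time.monotonic() < deadline:
            sock.sendall(payload)
            sent += CHUNK
    totals[idx] = sent


def parallel_throughput(n_streams: int = 4) -> float:
    """Aggregate throughput in MB/s (10**6 bytes) across n_streams TCP streams."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: the OS picks a free port
    listener.listen(n_streams)
    port = listener.getsockname()[1]
    server = threading.Thread(target=sink_server, args=(listener, n_streams))
    server.start()

    totals = [0] * n_streams
    clients = [threading.Thread(target=client_stream, args=(port, totals, i))
               for i in range(n_streams)]
    start = time.monotonic()
    for client in clients:
        client.start()
    for client in clients:
        client.join()
    elapsed = time.monotonic() - start

    server.join()
    listener.close()
    return sum(totals) / elapsed / 1e6


if __name__ == "__main__":
    for n in (1, 4, 8):
        print(f"{n} stream(s): {parallel_throughput(n):.1f} MB/s")
```

Because of Python’s global interpreter lock, the figures this prints mostly reflect interpreter overhead; a dedicated tool like iperf is the right choice for real NIC measurements, and a long-duration run is what would expose the kind of failures described above.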

During all of this testing, I thought back to a request from our good friend Psi*: how would transfers fare across the network with SSDs at both ends? And at the same time, how much do mechanical hard drives hold things back?

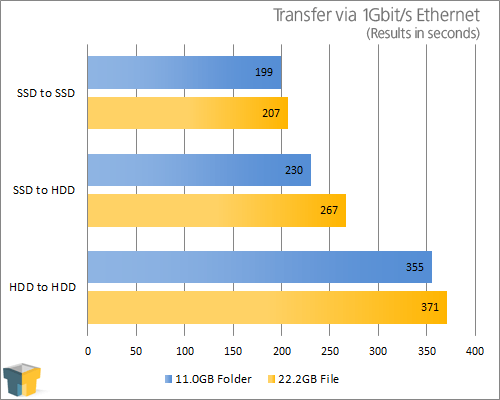

Prior to testing, I suspected that performance wouldn’t differ much from transferring between two storage devices on the same PC, as long as the network was rock stable. For the most part, that theory held true, as the chart shows.

Using Intel’s I340 server NIC on one end and a Realtek NIC on the other, a transfer from SSD to SSD (Corsair F160 to Corsair F160) reached about 111MB/s for a single file and 56.75MB/s for a folder consisting of 6,353 files of varying sizes. At around 111MB/s, our network was pretty well maxed out, even with the Intel server card involved (and for what it’s worth, we saw much the same in Linux, except it was 1MB/s slower on average).
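Why does 111MB/s count as “maxed out” on a 1Gbit/s link? A back-of-the-envelope calculation helps. The sketch below assumes a standard 1,500-byte MTU (no jumbo frames), IPv4, and TCP; the source doesn’t state the MTU or file-sharing protocol, and protocol overhead above TCP (such as SMB) is not modeled, so treat the ceiling as an upper bound. It also assumes 1MB = 10^6 bytes; if the 111MB/s figure came from a tool that reports binary mebibytes, the measured rate is somewhat higher than this comparison implies.

```python
LINK_BPS = 1_000_000_000          # Gigabit Ethernet line rate, bits per second
MTU = 1500                        # standard Ethernet payload size, bytes (assumed)
WIRE_OVERHEAD = 14 + 4 + 8 + 12   # Ethernet header + FCS + preamble/SFD + inter-frame gap
IP_TCP_HEADERS = 20 + 20          # IPv4 + TCP headers without options


def tcp_goodput_mb_s(tcp_options: int = 0) -> float:
    """Upper bound on TCP payload throughput in MB/s (1 MB = 10**6 bytes)."""
    payload = MTU - IP_TCP_HEADERS - tcp_options
    frame_on_wire = MTU + WIRE_OVERHEAD
    return LINK_BPS / 8 * payload / frame_on_wire / 1e6


raw = LINK_BPS / 8 / 1e6           # raw line rate in MB/s
ceiling = tcp_goodput_mb_s()       # TCP payload ceiling, no TCP options
ceiling_ts = tcp_goodput_mb_s(12)  # with the 12-byte TCP timestamp option
measured = 111.0                   # single-file SSD-to-SSD result from the text

print(f"raw {raw:.1f} MB/s, TCP ceiling {ceiling:.1f} ({ceiling_ts:.1f} with timestamps)")
print(f"measured = {measured / raw:.1%} of raw, {measured / ceiling:.1%} of TCP ceiling")
```

Under these assumptions the raw line rate is 125MB/s but TCP payload can reach only about 118.7MB/s, so 111MB/s is roughly 93.5% of what TCP over Gigabit Ethernet can carry.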

Using the same tests, copying from an SSD to an HDD (Seagate Barracuda 7200.11 1TB drives on both ends) proved far more efficient than copying from one HDD to the other. With the SSD as the source, its fast read speeds greatly reduced the overall bottleneck, and next to our HDD to HDD test, there’s just no comparison.
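One way to reason about these results is a simple bottleneck model: data streams no faster than the slowest stage (source read, network, destination write), and every file adds some fixed overhead, which is why a folder of 6,353 files moves more slowly than a single file. The sketch below is a hypothetical model, not something we measured. Only the 111MB/s link figure and the file count come from our tests; the folder size, drive speeds, and per-file costs are placeholders chosen to illustrate the idea, and the output is not meant to reproduce the chart.

```python
def copy_rate_mb_s(total_mb: float, n_files: int, source_read: float,
                   link: float, dest_write: float, per_file_s: float) -> float:
    """Average MB/s for copying n_files (total_mb in all) over the network.

    Simplified model, an assumption rather than a measurement: data streams at
    the rate of the slowest stage (source read, network link, destination
    write), and each file adds a fixed per_file_s of overhead such as seeks,
    metadata updates, and protocol round trips.
    """
    streaming = min(source_read, link, dest_write)
    seconds = total_mb / streaming + n_files * per_file_s
    return total_mb / seconds


LINK = 111.0      # MB/s, the single-file network ceiling measured in the text
N_FILES = 6353    # file count of the folder test in the text
FOLDER_MB = 4000  # hypothetical folder size; the text doesn't give one

# Drive speeds (MB/s) and per-file costs (s) below are hypothetical placeholders.
# A mechanical source pays seek time per file, so it gets a larger per-file cost.
single_file = copy_rate_mb_s(FOLDER_MB, 1, 250, LINK, 120, 0.002)
ssd_to_hdd = copy_rate_mb_s(FOLDER_MB, N_FILES, 250, LINK, 120, 0.002)
hdd_to_hdd = copy_rate_mb_s(FOLDER_MB, N_FILES, 120, LINK, 120, 0.010)
print(f"single file {single_file:.1f}, SSD->HDD {ssd_to_hdd:.1f}, "
      f"HDD->HDD {hdd_to_hdd:.1f} MB/s")
```

The model ignores caching, queue depth, and protocol pipelining, so it only shows the direction of the effect: a fast source shrinks per-file overhead, while the network caps large sequential transfers.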

What can we take away from this? If you need to run a very efficient network, having an SSD on the client machine isn’t a bad idea at all. In our folder transfer, the SSD to HDD test averaged 98.86MB/s, while the HDD to HDD transfer averaged 64.05MB/s, roughly 54% more throughput. If you’re constantly moving a lot of data from one PC to the next, time savings like these add up.
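To put those averages in concrete terms, here is a quick calculation of wall-clock time for a large copy. The 98.86MB/s and 64.05MB/s rates come from our folder tests; the 100GB workload is a hypothetical example, and the calculation assumes the average rate holds for the whole transfer.

```python
SSD_TO_HDD = 98.86   # MB/s, folder transfer result from the text
HDD_TO_HDD = 64.05   # MB/s, folder transfer result from the text


def transfer_seconds(size_mb: float, rate_mb_s: float) -> float:
    """Time to move size_mb at a constant average rate."""
    return size_mb / rate_mb_s


size_mb = 100_000  # hypothetical 100 GB (decimal) of data to move
fast = transfer_seconds(size_mb, SSD_TO_HDD)
slow = transfer_seconds(size_mb, HDD_TO_HDD)
print(f"SSD->HDD: {fast / 60:.1f} min, HDD->HDD: {slow / 60:.1f} min")
print(f"saved {(slow - fast) / 60:.1f} min ({1 - fast / slow:.1%} less time)")
```

Under that assumption, the SSD source finishes in about 16.9 minutes versus 26.0, cutting transfer time by roughly 35%.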

Our tests here may not be completely done, as I still have a couple of nagging curiosities, but since we’re knee-deep in a motherboard suite upgrade, finding time for anything lately is a bit tough. We’ll see.