Tech News

P2P Users Spend More than Non-P2P Users?

There are many media executives around the world who would do anything to believe otherwise, but according to a recent report done by Frank N. Magid Associates, Internet users who pirate media, whether it be music, movies or what-have-you, spend more of their hard-earned money on media products. These products can include anything from going to see a movie, to purchasing a new HDTV, to buying a game console.

I’m sure there are many who won’t, but I wholeheartedly agree with this research. I admit that in the past, I used to be a rather rampant P2P’er, downloading a fair amount of music over time. Today, I don’t usually download anything unless an album from one of my favorite artists gets leaked a few weeks early, but in the end, they still get my money when the actual product hits the market.

For me, P2P was a game-changer where discovering new music was concerned. Before the likes of Last.fm, P2P was the absolute best way to sample new music – especially music that isn’t mainstream and so isn’t normally played via convenient means. As I look over at my music collection, I see a few artists that I would likely never have discovered had I never taken a chance on downloading their album. For a few of those bands, I now own every single album they’ve ever made (The Thermals, for example).

Today, rather than download random albums via P2P, I use services like Last.fm to discover new artists and then YouTube to hear the music. It’s at that point that I can decide whether to move forward with a purchase or not. That setup sure works better than the foolish 30-second low-bitrate clips of music that services like iTunes offer you, I can tell you that much. While I became bored of P2P, many haven’t (obviously), and I still believe that people who get to actually sample what they want to buy first are going to spend more than someone who doesn’t have such a capability.

We compared a random set of Vuze users with a national sample of internet users ages 18 to 44, and results revealed that users of P2P technology spend considerable money on traditional media and entertainment. They are, in fact, important and valued customers of the traditional media companies. Our survey shows that the P2P user attends 34% more movies in theaters, purchases 34% more DVDs and rents 24% more movies than the average internet user. The P2P user owns more HDTVs and is more likely to own a high-def-DVD player, too.

| Source: Advertising Age |

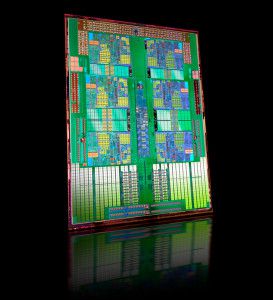

AMD Releases 40W ACP Opteron 2419 EE

Earlier this summer, AMD launched their six-core Istanbul Opteron processors, suited for the server/workstation environment, and in doing so, consumers had an interesting choice when it came to building their large or small clusters. Launch Istanbul chips carried an “ACP” power rating of 75W (Intel and AMD grade their TDPs differently, so they cannot be directly compared), while a new chip launched today settles in at only 40W.

Not surprisingly, the Opteron 2419 EE isn’t a high-clocked part, but rather becomes AMD’s current lowest-clocked Istanbul part at 1.80GHz. By comparison, the company’s recently launched HE parts start off at 2.0GHz and 55W, but overall, the 2419 EE still wins when looking at things from the performance-per-watt perspective, and that’s what AMD is emphasizing.
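To put rough numbers on that comparison, here is a minimal sketch that uses aggregate clock speed (cores multiplied by GHz) per watt of ACP as a stand-in for performance per watt. That proxy is my own assumption, not AMD's metric, and it ignores real workloads entirely. It also assumes six cores for both parts, and the function name is purely illustrative.

```python
# Crude proxy only: real performance per watt depends on measured workloads.
def ghz_cores_per_watt(cores: int, clock_ghz: float, acp_watts: float) -> float:
    """Aggregate clock (cores x GHz) divided by the ACP rating in watts."""
    return cores * clock_ghz / acp_watts


# Clock speeds and ACP ratings are the ones quoted in the text.
# The six-core count for the HE part is an assumption (Istanbul family).
ee_part = ghz_cores_per_watt(cores=6, clock_ghz=1.8, acp_watts=40)
he_part = ghz_cores_per_watt(cores=6, clock_ghz=2.0, acp_watts=55)

print(f"Opteron 2419 EE: {ee_part:.3f} GHz-cores per watt")
print(f"Entry HE part:   {he_part:.3f} GHz-cores per watt")
print(f"EE advantage:    {(ee_part / he_part - 1) * 100:.0f}%")
```

Even with the lower clock, the 40W part comes out ahead on this simple measure, which lines up with the angle AMD is pushing.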

The customers this chip targets are those who need strong performance but absolutely need the best performance per watt possible. With the 2419 EE, they would be able to fit many more machines into a single cluster, resulting in much higher performance overall while still improving power consumption. In particular, AMD mentions Web 2.0 and cloud computing scenarios in its press release.

If there’s one area where AMD wins big with this new launch, it’s in the pricing. Although Intel’s six-core Dunnington-based Xeons would out-perform AMD’s chip in most tests (similar to the desktop side), that company’s least-expensive six-core offering is the L7455, at 2.13GHz and $2,729. Compare that with AMD’s $989 price tag on their Opteron 2419 EE. It will be interesting to see if Intel follows up soon with some more modestly priced Dunnington offerings, or if the pricing on current models will change soon.

AMD (NYSE: AMD) today announced the immediate availability of the new Six-Core AMD Opteron EE processor at 40W ACP. Delivering up to 31 percent higher performance-per-watt over standard Quad-Core AMD Opteron processors, the Six-Core AMD Opteron EE processor is tailored to meet the demands of customers who need strong performance, but must trim out every watt possible in a server system and reduce the datacenter’s power draw.

| Source: AMD Press Release |

CipherChain Enables Hardware-based AES Encryption to S-ATA Storage

If you’re concerned about your computer’s level of security, which would be a good idea, then chances are you take a fair amount of precaution with how you handle your everyday tasks. You likely also run various pieces of software that protect you against malware and other negative pieces of software, and you also no doubt use robust passwords in order to protect your sensitive data as much as possible.

But what about taking things a step further? Data encryption is nothing new, and even certain hard drive vendors have been, or will be, releasing products with built-in data encryption. Interestingly though, I stumbled on a site the other day for the company Addonics, which sells products with similar goals. The plus side though, is that you have fairly robust control over your setup, and their products allow you to encrypt any storage device.

The product in particular that caught my eye was CipherChain, a very small piece of silicon (comparable in size to a CompactFlash card), which can go anywhere in the machine and act as a proxy between your storage device and your S-ATA port. Once installed, the CipherChain will automatically encrypt all of your data on-the-fly with an AES 256-bit algorithm, and apparently has no noticeable performance drawback. You are protected not with a password, but rather with a proprietary key, which must be plugged into the CipherChain before the PC is booted up.

What I like about this device is that it’s simple to use, and very configurable. It doesn’t matter what your PC setup is like, because the company sells various mounts to allow you to store it anywhere in your PC, whether it be the typical HDD mounting area, or even a slot on the back of your computer. If you are ultra-hardcore about protecting your data, you can even daisy-chain any number of these together, which would effectively encrypt the encrypted data. Mind-boggling stuff! Pricing starts at $79, and scales depending on your accessories.

Similar to all other Addonics encryption products, the CipherChain is a pure hardware solution. This means that there is no software to run, no driver to install and no password to remember. There is very little user training required to use the CipherChain. All the user needs to do is to insert the correct Cipher key before powering on the system or the storage device. The CipherChain can be operated under any operating system and this makes the CipherChain an ideal security solution for organization with heterogeneous computing environment and legacy systems.

| Source: Addonics’ Product Page |

What’s Up with NVIDIA?

Just the other day, I was pondering the question, “What’s up with NVIDIA lately?”, so it was with great amusement that I spotted an article at Loyd Case’s site, Improbable Insights, which asked that very question. It’s good to know I’m not alone in my thinking, because pretty well every point he makes, I agree with wholeheartedly. So, what is up with NVIDIA?

In the past year, sadly, most of the news to come out of the company has been less-than-stellar, such as quarterly losses, the debacle with their mobile graphics, and of course, the short-lived king-of-the-hill, the GT200 series. Not all has been bad with the San Jose company, but I’m sure CEO Jen-Hsun Huang would prefer to do his work without being at war with Intel and others.

Over the course of the past year, the absolute number one focus at NVIDIA has to have been CUDA, because throughout the numerous conference calls I’ve been on, and e-mails I’ve been sent, it’s been pretty obvious. Second to that would have to be ION, and happily, that seems to be working out for the company just fine so far. How long the growth will continue is yet to be seen, however, as Intel has the opportunity to change the landscape drastically once they implement a GPU and CPU on the same chip.

What the next big thing for the company will be is really unknown. CUDA support is ramping up, and so is ION (not to mention Tegra), but what’s really going to help push the company back to wowing enthusiasts again is up in the air. There are the GT300 cards, but even the launch of those is shrouded in doubt, due to supposed delays. On the other hand, once they do arrive, we (and ATI) may be truly blown away, so we can’t discredit them just yet.

If there’s one thing that’s true, it’s that the next year for graphics is going to be incredibly exciting. With Intel’s Larrabee right around the corner, OpenCL adoption up and DirectX 11 on the horizon, who knows how things are going to play out?

Credit: Techgage |

Still, it’s an odd place for Nvidia to be. The company’s marketing strategy once revolved around putting out high end products that offered either better performance or a more robust feature set than competitors – or both, in some cases. Then the company would push the new tech down into the mainstream and low end. Now, their key successes seem to revolve around high volume, low margin products.

| Source: Improbable Insights |

Uncharted 2 Couldn’t Happen on Xbox 360?

From a technical standpoint, Sony’s PlayStation 3 is the most capable game console on the market right now, thanks in part to its robust Cell processor that features seven fast cores – four more than Microsoft’s Xbox 360. Of course, comparisons like these are too simple, but the fact remains, the processing power of the PS3 is incredible, which is one reason things aside from gaming have even found a home there, such as Linux and Folding@home.

Up to this point though, we’ve heard various rumors of how certain PS3 exclusive titles simply wouldn’t have been possible on the Xbox 360, but no developer has ever been too clear-cut about it. It appears that Uncharted 2, the sequel to Uncharted: Drake’s Fortune, is indeed going to be one of those titles – at least, if Christophe Balestra, the co-president of Naughty Dog (the game’s developer), has something to say about it.

If there’s one reason in particular that couldn’t be debunked, it’s, “we fill the Blu-ray 100 percent, we have no room left on this one. We have 25GB of data; we’re using every single bit of it.” Compare that to the Xbox 360’s discs that hold just about 7GB. This is simply a minor reason, though, as Naughty Dog is planning to take full advantage of the PS3’s hardware, unlike any other game to come before it.

The company will be relying heavily on the multi-core nature of the Cell processor to accelerate both the gameplay elements and animations (and we could assume physics), while they’ll focus the RSX graphics chip on what it does best… graphics. The realism is meant to be top-rate in the upcoming sequel, so much so, that even the audio will be processed off the SPUs on the CPU. What’s the point? No matter where you are in the game, the audio should give you the impression of realism. Picture the ray tracing technique, for example, which calculates reflection and light off of an object based off of available light sources. This audio technique would be somewhat similar.

Either way, the original Uncharted was a fantastic game, so I’m looking forward to seeing if the sequel’s going to be able to live up to all this hype. After all, fancy graphics and effects are one thing, but enjoyable gameplay is something else…

For the first game, Balestra estimated that they used around 30 percent of the power of the SPUs, now the team was able to use them to 100 percent capacity. Naughty Dog understands the Cell processor, and knows how to get it to sing. “The ability to use the RSX [the PS3’s graphics processor] to draw your pixels on the screen, then you use the Cell to do gameplay and animations—we kind of took the step of using the Cell processor to help the RSX.”

| Source: Ars Technica |

OCZ Releases Industry’s Most Affordable SLC-based SSD

If there’s one area in technology that’s moving at an unbelievable pace, it’s with solid-state disks. It seems that with each day that passes, we’re seeing drives that are faster, larger in size, and more affordable. While we haven’t quite hit a point where SSDs can be considered affordable for everyone, it hasn’t stopped many enthusiasts from speeding up their machine with one as their main drive.

While most consumers purchase MLC-based SSDs due to their cost-effectiveness, it’s hard to ignore the incredible performance and longevity that SLC-based drives will offer. As we mentioned in our news a few weeks ago, because MLC writes two bits per cell, and SLC only one bit per cell, the latter will have the longer lifespan – not to mention better performance.

But, if MLC drives could be considered expensive, SLC drives simply take things to the next level. Roughly, an SLC drive is usually more expensive than an MLC drive with twice its density, making it a good choice only for server and workstation environments. OCZ is hoping to invite more people into the SLC world with their new Agility Series EX drive, though, which they call the “industry’s most affordable” SLC-based SSD.

At 60GB (~64GB) and $399, the company isn’t exaggerating. By comparison, Intel’s SLC-based X25-E, also at 64GB, currently retails for $679, and it’s pretty much the only competition at this point. How the two would fare in an entire gamut of tests is yet to be seen, but with rated speeds of 255MB/s Read and 195MB/s Write, it looks to be proper competition to Intel’s much more expensive offering.
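For a quick sanity check on the "most affordable" claim, the sketch below works out cost per gigabyte from the prices and capacities quoted above. The function name is just illustrative, and street prices will of course drift over time.

```python
def price_per_gb(price_usd: float, capacity_gb: float) -> float:
    """Cost of one gigabyte of capacity, in US dollars."""
    return price_usd / capacity_gb


# (price in USD, capacity in GB) as quoted in the text.
drives = {
    "OCZ Agility EX (60GB)": (399, 60),
    "Intel X25-E (64GB)": (679, 64),
}

for name, (price, capacity) in drives.items():
    print(f"{name}: ${price_per_gb(price, capacity):.2f} per GB")
```

Per gigabyte, the OCZ drive works out noticeably cheaper, even after accounting for its slightly smaller listed capacity.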

The OCZ Agility EX Series makes SLC (single-level cell) NAND-based storage truly affordable in a solid state drive for the first time. The Agility EX provides the best of both worlds – the performance and advantages of SLC NAND technology at an incredible value. Based on the quality Indilinx controller, the Agility EX Series delivers an enhanced computing experience with faster application loading, snappier data access, shorter boot-ups, and longer battery life.

| Source: OCZ Agility Series EX Product Page |

What Caused So Many People to Stick to Firefox 2.x?

Whenever I see a new version of one of my commonly-used applications become available, I’ll download it without putting too much thought into it. Sometimes, I don’t even look to see what’s new feature-wise. After all, there’s more to new versions of applications than just features, such as security fixes, and possibly also stability fixes. So when Firefox 3 came out, I didn’t put much thought into an upgrade. It appears that there are many who did, however.

As a last-ditch effort to have people upgrade their Firefox 2 to 3, Mozilla prompted people running 2 to fill out a quick survey to explain their reasons for not upgrading to the latest version. Can you even guess? Believe it or not, 25% of people said that the robust bookmarks feature was the main reason, because with it, you could easily get caught by family members if you’re using your PC for more than just business and gaming!

For those not familiar with how Firefox 3 handles bookmarks, you can see an example below. As soon as you type in a single letter, the address bar will bring up a drop-down menu with things it believes you might be looking for. So, if your family members wanted to come to our site, and they push the letter “T”, chances are fairly good that your “Top Teen Sex” bookmark would also pop up. No need to go into further detail than this.
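To make the behavior concrete, here is a minimal sketch of that kind of as-you-type suggestion. It is not Firefox's actual matching algorithm; it simply assumes a case-insensitive match against the start of any word in a bookmark's title, and the `Bookmark` type, the `suggest` function and the sample bookmarks are all hypothetical.

```python
from typing import NamedTuple


class Bookmark(NamedTuple):
    title: str
    url: str


def suggest(typed: str, bookmarks: list[Bookmark], limit: int = 5) -> list[Bookmark]:
    """Return bookmarks with a title word that starts with the typed text."""
    needle = typed.strip().lower()
    if not needle:
        return []  # nothing typed yet, so nothing to suggest
    matches = [
        bookmark
        for bookmark in bookmarks
        if any(word.startswith(needle) for word in bookmark.title.lower().split())
    ]
    return matches[:limit]


# Hypothetical bookmarks on a shared family PC.
saved = [
    Bookmark("Techgage", "https://techgage.com/"),
    Bookmark("Surprise Trip Planning", "https://example.com/trip"),
    Bookmark("Weather Forecast", "https://example.com/weather"),
]

for hit in suggest("t", saved):
    print(hit.title)
```

A single keystroke is enough to surface every bookmark with a matching word in its title, which is exactly why a bookmark you would rather keep private can pop up for whoever uses the PC next.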

I do find it interesting that of all things, this is what stops people from upgrading. But, on a shared PC, I guess the situation would be a little more complex than simply deleting your cookies/cache/history every time you’re done surfing. For those users who held off upgrading, Firefox 3.5 pretty much takes care of that issue, as it allows you to turn off the advanced address bar quite easily.

The number one reason for not upgrading was the new location bar, and the fact that it delved into people’s bookmark collections to suggest sites as they typed. No fewer than 25% of Firefox 3 refuseniks cited this as the reason they wouldn’t upgrade. In fact, almost all of the people who provided feedback had tried Firefox 3, didn’t like what they saw, and headed back to Firefox 2.

| Source: PC Pro |

Intel’s HDxPRT Grades Your PC on its Multi-Media Capabilities

As I was cleaning up around my desk last night, I unearthed a disc I almost forgot about… one that Intel included with all the Lynnfield reviewers’ kits that were sent out. The software is called “Intel High Definition Experience & Performance Ratings Test”, or HDxPRT for short. The disc immediately reminded me of software we were given a while ago, called “Intel Digital Home Capabilities Assessment Test”, and I believe HDxPRT is, for the most part, a follow-up.

So what is it? Similar to tests designed by BAPCO (SYSmark 2007 Preview), HDxPRT’s goal is to test your PC for its overall media capabilities, from HD video playback to handling of your content. That’s what makes this test better than SYSmark, though, because here, the scenarios are relevant to the majority of people who use their computer (SYSmark focuses more on workstation scenarios).

Within HDxPRT, there are two main categories, Create and Play. The latter will award a 0 – 5 star rating depending on how well the machine handles HD video, and according to the guide included on the disc, most computers today should have no issue running that, which is only a good thing for those looking to build a new HTPC on the cheap (it’s more possible now than ever).

Under the Create category are three tests. The first takes an HD camcorder video and converts it to both a format suitable for uploading to a website, like YouTube, and also to a Blu-ray format. The second takes files from a digital camera and edits them accordingly, while the third prepares music and videos for copying to your iPod. I’m sure few would argue that each one of these scenarios is actually relevant to most consumers today.

Since the software requires a clean installation of Windows (7 is also supported), I haven’t yet had the chance to give it a spin, but I’m hoping to prior to our Lynnfield launch article. If it proves to deliver useful metrics, we may consider using it in our future CPU and possibly motherboard content. At least, once we know for certain that some of the tests don’t favor one company over another.

For those interested in giving the software a go, it’s completely free, so you’re able to go to the URL below and register on the site to gain access. Just be warned, you’ll need to also download a trial of Adobe’s Photoshop Elements 7, unless you happen to own it already.

Mainstream consumers are confronted with many choices and decisions, and not enough straightforward performance tools to guide those decisions. But what would that better guide look like? It would focus its attention on what you care about most, and tell you how well a PC did those things, in plain English. It would tell you about quality of experience, because media playback isn’t really about “faster is better.” But more than anything, this guide should help you make an informed decision about the right PC for what you want to do.

| Source: Intel HDxPRT |

8-Bit: The Story as Told by Lego

What will 1,500 hours, a lot of Lego blocks and excellent video skills get you? This video answers that question. I recommend clicking play and then clicking again anywhere in the video to go straight to YouTube to watch in HD… it’s well-worth the extra bandwidth (60MB).

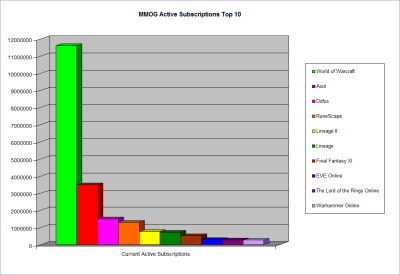

World of Warcraft Still the Dominant MMORPG, Can Aion Make it Budge?

Last week, site faithful madstork91 and I were talking and got on the topic of MMORPGs. To be more precise, we were talking about what games are popular now, which were popular then, and which game out there has the potential to grow fast upon release. So, that got me interested in checking out some updated information regarding MMO subscriptions and the like, and for that, I turned to MMOData.net as per stork’s recommendation.

This is a site that aggregates a wide range of information from various sources to put together graphs that show the current trends in MMO gaming, and it makes it easy for us to get the information quickly without putting too much thought into things. Can you guess the current top MMORPG on the market in terms of active subscriptions? Of course I’m kidding… even my grandmother would be able to tell me that it’s World of Warcraft.

With a current subscriber-base of ~11.5 million though, I can’t help but notice that the number over the past eight months or so hasn’t moved, up or down. Proof of this can be seen in a press release Blizzard shot out to the wire last December, which stated their new achievement of, you guessed it, 11.5 million subscribers. Either people are leaving the game on a regular basis and new players move on in, or nobody is interested in leaving.

What might be most interesting about the current top 10 is that #2 is Aion… a game from NCsoft that’s currently only in closed beta. To say that we might finally have some competition to WoW when the game gets released is an understatement. Taking the third spot is Dofus, a game I’d never heard of until now, with fourth place belonging to RuneScape. Number five is Lineage II, an MMORPG I currently play, with the original Lineage taking sixth.

If you are a fan of MMORPGs, I recommend checking this site out… there’s a lot of fun information to be had. For example, did you know that the Asian MMO Zhengtu Online at one point reached 2.1 million concurrent users? The only MMO to come anywhere close on these shores is of course WoW, which once managed to hit almost exactly 1 million. Whew, just think of the poor servers!

The purpose of this site is to track and compare subscriptions, active players and peak concurrent users from MMOG’s and to give you my opinion on various events in the MMOG scene.

| Source: MMOData.net |

Apple’s Mac OS X “Snow Leopard” to be Available this Friday

At Apple’s Worldwide Developer Conference this past June, the company announced that OS X “Snow Leopard” would become available to consumers in September. Well, there’s good news for Apple fans, as the OS’ release date has been pushed up to this Friday, the 28th. Even without knowing what the latest version entails, if there’s one reason to get excited, it’d be for the $29 US upgrade price.

What makes Snow Leopard a little bit interesting is that it’s not a release that focuses on unveiling new features, and that’s no doubt the reason for the $29 price tag. Rather, the goal is to release a product that’s more secure and faster, both of which are helped by the fact that about 90% of the OS has been re-written – the first time that’s happened since OS X first debuted in 2001.

As a result, many aspects of the OS will feel much faster, including Finder, Mail, Time Machine and even QuickTime (which becomes QuickTime X). How all these optimizations affect install size is staggering. Apple claims that after upgrading to Snow Leopard, you will regain up to 7GB of hard drive space, as a result of the latest version taking up half the size of Leopard.

Another major feature of Snow Leopard is the fact that almost the entire OS was coded with 64-bit addressing in mind, so you’re really going to be taking full advantage of your processor, more so than Windows or Linux would allow. It will be interesting to see if consumers, after upgrading, see notable speed gains, because with all the time and effort Apple’s developers have put into the latest version, it’d sure seem likely.

For the first time, system applications including Finder, Mail, iCal, iChat and Safari are 64-bit and Snow Leopard’s support for 64-bit processors makes use of large amounts of RAM, increases performance and improves security while remaining compatible with 32-bit applications. Grand Central Dispatch (GCD) provides a revolutionary new way for software developers to write applications that take advantage of multicore processors. OpenCL, a C-based open standard, allows developers to tap the incredible power of the graphics processing unit for tasks that go beyond graphics.

| Source: Apple Press Release |

WinX DVD Ripper – Free Ripper that Works

I received an e-mail last week from Digiarty Software, which asked us if we’d like to post news about its free DVD ripper application. I don’t usually jump at the opportunity for the main reason that there are so many applications like this around, but this one might very well be worth your time. By coincidence, the same day I received the e-mail, a friend of mine was looking for a free DVD ripper, so he was the perfect guinea pig.

Little did I realize, but there is actually a lack of reliable free DVD rippers out there. My friend had tried five or six different applications he found, and none successfully ripped the DVD out of the box. So as a last-ditch effort, he tried the same DVD ripper that was linked to me in the e-mail, and surprisingly, the process finished without a hitch. Impressive.

From the looks of things, the software is rather robust, offering the ability to rip to a variety of formats, including AVI, MPEG, MP4 and more – even Flash-based FLV. Taking things a step further, the application offers light tweaking to each profile, such as the ability to adjust the bitrate, resolution and so forth. Have a copy-protected DVD? It doesn’t matter according to the product page… this can handle ’em.

If there’s one caveat, it’s that it looks as though there’s no way to simply rip the DVD 1:1 to your hard drive. Rather, the only option is to convert to another video format. This might be fine for most people, however, since full DVD rips do take up a fair amount of hard drive space. Either way, if you are looking for a free ripper, give this one a try.

WinX DVD Ripper is a free software program that facilitates ripping the content of a DVD to computer hard disk drive and converting to popular video formats. It supports all types of DVDs including normal DVDs, CSS protected DVDs, commercial DVDs, Sony ArccOS DVDs, all region 1-6 DVDs and most of popular video formats such as FLV, AVI, MP4, WMV, MOV, MPEG1/2 as output video formats.

| Source: WinX DVD Ripper |

id Software Considering Skipping Rage for Linux?

For gamers running Linux as their primary OS, the choices are unfortunately limited. In fact, I’d be hard-pressed to believe that gaming wasn’t the main reason that most people either dual-boot their PC, or never give Linux a try in the first place. The former can be said for me. While I run Linux as my main OS, and Windows XP in VMware for “simpler” tasks (Microsoft Office, Adobe Photoshop), the reason I still keep my machine as a dual-boot is strictly because of gaming.

While the gaming landscape on Linux isn’t too stellar, there are a lot of choices, depending on your tastes. If you’re looking for a commercial game, though, things are different. Companies like S2 Games and id Software have been some of the very few to deliver commercial games to Linux, which made us appreciate them even more. Who doesn’t like to have masterpieces like Doom, Wolfenstein, Quake and others available to them?

Sadly, as games become more and more robust, and tied to libraries specific to Windows, the chances of seeing blockbuster commercial games on Linux are beginning to wane. This was highlighted by a letter that an Ubuntu forum member sent to John Carmack, which asked about the possibility of seeing Rage available for Linux. His response couldn’t have been more clear:

“We are not currently scheduling native linux ports. It isn’t out of the question, but I don’t think we will be able to justify the work. If there are hundreds of thousands of linux users playing Quake Live when we are done with Rage, that would certainly influence our decision…”

Well, I for one am planning to game ‘er up in Quake Live often, but I highly doubt that hundreds of thousands of others are going to join me. Linux gamers are facing a harsh reality, though, because if one of the leading supporters of gaming on the OS is looking to step out, what does that tell us about the future? What in your opinion needs to be done to continue to attract both developers and gamers alike to Linux? Let us know your thoughts in the thread!

The codebase is much, much larger, and the graphics technology pushes a lot of paths that are not usually optimized. It probably wouldn’t be all that bad to get it running on the nvidia binary drivers, but the chance of it working correctly and acceptably anywhere else would be small. If you are restricted to it only working on the closed source drivers, you might as well boot into windows and get the fully tested and tuned experience…

| Source: Ubuntu Forums, Via: Slashdot |

Canadian Cable Companies Continue to Shaft their Customers

As I live in a relatively small town in eastern Canada, our choices for cable, phone and Internet service are limited. It comes down to two main choices, Rogers and Bell, both of which feature certain trade-offs (like reliability and junk mail en masse). As a subscriber of Rogers, I received a letter in the mail a few weeks ago that stated that the cable portion of our bill would be increased 1.5% to comply with the CRTC’s new LPIF fund. Let me give you some quick background.

The CRTC is the “Canadian Radio/Television and Telecommunications Commission”, which oversees the regulation of media all over Canada. The LPIF is the “Local Programming Improvement Fund”, which the CRTC established in order to help both improve and support local programming. To make this happen, the CRTC ruled that Canadian cable/satellite companies were required to contribute 1.5% of their gross broadcasting revenues, which would amount to around $100 million total for the year.
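To put those figures in perspective, here is a small back-of-the-envelope sketch. The 1.5% rate and the roughly $100 million total come from the text above; the $60 monthly cable bill is a made-up example, and the function names are only illustrative.

```python
LPIF_RATE = 0.015  # 1.5% of gross broadcasting revenues, per the CRTC ruling


def implied_gross_revenue(total_contribution: float, rate: float = LPIF_RATE) -> float:
    """Industry-wide revenue that would produce the given total contribution."""
    return total_contribution / rate


def monthly_bill_increase(cable_bill: float, rate: float = LPIF_RATE) -> float:
    """Extra monthly charge if the levy is passed straight on to the subscriber."""
    return cable_bill * rate


revenue = implied_gross_revenue(100_000_000)
extra = monthly_bill_increase(60.00)  # hypothetical $60 cable bill

print(f"Implied gross broadcasting revenue: ${revenue / 1e9:.2f} billion")
print(f"Added to a $60 cable bill: ${extra:.2f} per month")
```

The per-subscriber amount is small, but it is being applied against an industry that, by this rough math, takes in billions per year.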

That sounds great for local programming, but it turns out it’s bad for cable and satellite subscribers, since most of these companies are, rather than taking the hit themselves, continuing to nickel and dime their customers by passing the fee onto them, as mentioned above. So far, Rogers Cable and Bell have been verified to be doing this, but in checking with people who subscribe to services in other parts of Canada, I’ve yet to find another company to do this.

The LPIF was established as a way to have these mammoth companies contribute to local programming, which many find integral and important. But rather, they shift the cost over to their faithful customers, which I find truly ridiculous. Sure, it’s 1.5%, but it’s not the money that matters. It’s the principle. I contacted the CRTC to get their comment on things, and the response I received was, (bold is theirs) “Given their reported profits, the CRTC is of the view that there is no justification to pass the cost on to consumers.

Enough said. I find it insane that companies as rich as these are forcing their loyal customers, who already pay out the rear, to pay for something that they are supposed to be paying themselves. If you’re a Canadian reader, I’d love to know whether your cable provider is forcing the fee on you, and if you are in the US, please let us know if companies down there are like they are up here.


TRENDnet Releases “World’s Smallest” Travel Router

Travel often? Travel with more than one person often? Sick and tired of being tethered to a desk at a hotel that offers only wired Internet? Well, TRENDnet’s latest wireless router might be just for you. The company touts it as being the world’s smallest travel router, which is quite a big claim, but if you take a look at the product page on their website, I have no issue with believing it (the LAN port on the back seems to take up only 1/5th of its total width).

It’s the overall size that makes this router so desirable, as it’s “so small, it disappears in your front pocket”, and what’s not to like about that? One thing I absolutely hate while travelling is filling more than one suitcase, or simply weighing my luggage down with various electronics. I’ve often brought full-blown routers with me on business trips, and they’re really cumbersome. But this one is so small that I could easily fit it in the same bag as my laptop.

This mini router also happens to offer 300Mbps operating speeds (802.11n), which means at its highest transfer rate, you should actually be able to transfer files from one PC to the next faster than you could if you were using a USB thumb drive or external hard drive. Of course, the company also keeps security in mind, along with easy setup, and if you think this will cost you out the rear, don’t… it’s only ~$60 USD.
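For a rough sense of what 300Mbps means in practice, here is a minimal Python sketch of the conversion from megabits to megabytes. The function names and the `efficiency` parameter are illustrative assumptions of mine, not anything published by TRENDnet, and real-world wireless throughput is typically well below the rated link speed.

```python
def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Convert a link rate in megabits per second to megabytes per second."""
    return mbps / 8  # 8 bits per byte


def transfer_seconds(file_megabytes: float, link_mbps: float,
                     efficiency: float = 1.0) -> float:
    """Estimate transfer time in seconds.

    `efficiency` is a hypothetical fraction (0 to 1] of the rated
    speed that is actually achieved; it is an assumption, not a spec.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    effective_rate = mbps_to_megabytes_per_second(link_mbps) * efficiency
    return file_megabytes / effective_rate


if __name__ == "__main__":
    print(mbps_to_megabytes_per_second(300))  # rated peak, in MB/s
    print(transfer_seconds(750, 300))         # 750MB file at the rated peak
    print(transfer_seconds(750, 300, 0.5))    # same file at an assumed 50% efficiency
```

At the rated peak, 300Mbps works out to 37.5MB/s, so a 750MB file would take about 20 seconds. At an assumed 50% efficiency, that doubles to 40 seconds.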

TRENDnet has sent me one of these to test out, and with Intel’s Developer Forum taking place next month, I’ll have a perfect opportunity to see how it fares in exactly the scenario it’s designed for.

The 300Mbps Wireless N Travel Router Kit offers a high performance router that is so small it disappears in your front pocket. Enjoy the freedom of wireless n while on the go and share a single Internet connection with multiple users. This compact kit fits into the most overstuffed luggage and contains everything needed to quickly create a 300Mbps wireless network.

| Source: TRENDnet 300Mbps Wireless N Travel Router |


Site Content Updates!

It’s been far too long since we’ve had “real” content posted on our site, so I thought our readers deserved to know what’s going on, and also when things are going to ramp back up. The good news is that the answer to the latter is “soon!”, so don’t worry. Allow me to apologize to everyone for failing to deliver all of the content you’ve come to expect on our site over the course of the last four years.

As mentioned in the past, I’ve been working hard on wrapping up methodologies for absolutely every product-type we’ve had, and as I’ve been working on those, I haven’t been able to dedicate much time to other content. If I do, then it means the methodology stuff continues to get pushed back. It’s a vicious circle of sorts. To make the timing worse, our other staffers have been dealing with life’s little pleasures (aka: unforeseen occurrences and other demands), which of course doesn’t help our situation.

Things are slowly coming back together, however, and our GPU re-benchmarking is just about done. I’m wrapping up a couple of ATI cards as we speak, and once those are done, I’m going to quickly re-benchmark each ATI card once again to, as I like to call it, “double-verify” our results. A review featuring our new methodology will be posted next Monday, and it will feature Gigabyte’s Radeon HD 4770.

F.E.A.R. 2: Project Origin – Recent recruit to our GPU suite.

Speaking of methodologies, don’t miss an article posted just the other day which takes an in-depth look at how we handle our testing here at Techgage. So far, we only have information on how we install Windows, and also benchmark our graphics cards, but in the future, it will feature methodologies for everything we touch, from processors to motherboards to storage and so forth.

Once again, apologies for having such a slow content cycle recently. When I decided to take on the monumental task of revamping all of our methodologies at once, I sure didn’t expect everything else to work against me. Lesson learned… if something can go wrong, chances are it will! The good news is that with our revamped methodologies, our benchmarking results will be more accurate than ever, along with our resulting analysis. I have never been more confident in how we handle our testing here, and I can’t wait to see all of that reflected in our content!

Windows 7 to Sell in UK for Half of US Pricing

Living in Canada, I tend to know what it’s like to pay more for something than it’s worth. Comparing what things cost in the US and then doing a simple currency conversion will prove just how much we overpay for things. But, I’ll be the first to admit that Canadians in no way get hit as hard as people in other parts of the world, such as countries in Europe, and the whole of Australia.

Finally, though, European consumers will catch a break when Windows 7 sees its release, and the pricing differences are significant enough to cause US consumers to shout, “What the?!”. The full Home Premium edition will cost £65, which is actually less than the price of the upgrade version over here, and roughly half the price of the full version. Amazon has said that these prices should be treated as indefinite, so it doesn’t look at all like it’s an error.

No question about it… this is an incredible deal for Euro consumers. Over here, you wouldn’t see too much complaining about a full version of Home Premium for roughly $120, that’s for sure. It is a very interesting situation, though, because that version in Europe still includes Internet Explorer 8 on the disc. The question also arises… why exactly is the pricing over there so different from here?

If I had to guess, I’d assume it would essentially be a preemptive strike to avoid potential frivolous lawsuits that the EU loves to throw at Microsoft at every turn. But something also tells me this won’t help too much, as previous disputes have had little to do with pricing, but rather monopolies.

In the UK, full versions of Windows 7 Home Premium — not an upgrade edition — are going to cost around £65. That’s less than the price the Yanks have to pay just for an upgrade version — $120 (£72) — and half what they’ll have to cough up for a full version — $200 (£122). Amazon.co.uk is already selling the full version of Home Premium for £65, and Play.com is selling it for a little more at £75, but with free delivery.

| Source: Crave UK |


Ten of the Stupidest Tech Company Blunders

Every so often, up pops a “top ten” article that takes a look at the biggest mistakes made in the tech industry, and too often, most include the exact same ten. A recent article posted at PC World, though, brings forth some major mistakes that I forgot all about, or didn’t hear about at all. Some even make me cringe at thinking about such lost opportunities.

Did you know, for example, that Yahoo! aimed to purchase Facebook in 2006? This was at a time when Facebook had 8 million users and a modest value compared to today. Yahoo! offered $1B, but due to issues that arose, it ended up losing out on the deal. Today, Facebook has 250 million users, and is valued at around $10B. Just imagine if Yahoo! had gone through with the purchase… it sure wouldn’t be struggling as it is today.

Then there is OpenText, the Google before Google. It began in the earliest days of the Internet as a search engine, and the company claimed that all 5 million documents available on the web at the time were indexed with it. That’s great, except execs at the company decided to take an alternate direction with their business, totally underestimating how important web searches were to become. Google came out a few years later, and is now worth billions, and billions and…

More mentions include how Microsoft “saved” Apple by pretty much donating $150 million, Napster’s demise and what could have happened if the music industry had actually made proper decisions, and a lot more. There’s much here not mentioned, but really, that would end up becoming a top 100 article, wouldn’t it?

In the early 1990s the Compuserve Information Service had “an unbelievable set of advantages that most companies would kill for: a committed customer base, incredible data about those customers’ usage patterns, a difficult-to-replicate storehouse of knowledge, and little competition,” says Kip Gregory, a management consultant and author of Winning Clients in a Wired World. “What it lacked was probably … the will to invest in converting those advantages into a sustainable lead.”

| Source: PC World |


iTunes Continues to Dominate Music Sales

Is this much of a surprise? Given that Apple’s iPods continue to dominate the market, iTunes’ success was bound to follow – and it has. According to a recent report by the NPD Group, iTunes sales accounted for a staggering 25% of the overall music market in the US, and 69% where digital music is concerned, towering over the likes of Amazon.com, which holds just 8%.

These numbers are very revealing as to the future of the music sales landscape. Physical CDs have been decreasing in popularity since digital music exploded, but believe it or not, it’s still the CD that holds the majority of the sales. Digital music downloads are expected to eclipse the sales of physical CDs by the end of 2010.

I’ve expressed my own opinions on this many times before, and my mind certainly hasn’t changed. I’m still the kind of person who loves walking through a real music store, rummaging through the selection and leaving with a couple of new discs to go home and rip. But, I seem to be part of a dying breed, and I’m personally wondering just how long it will take before the physical music store will disappear, if ever.

Who’s with me? Do you prefer to purchase the real CD or hop online to download it? Are you opposed to purchasing music on physical media other than CDs?

iTunes-purchased songs now account for 25 percent of the overall music market–both physical and digital–in the U.S., says an NPD Group report released Tuesday. However, CDs are still the most popular format for music lovers, winning a 65 percent slice of the market for the first half of 2009. Digital music downloads have jumped in recent years, said NPD, hitting 35 percent of the overall market for the first half of this year, compared with 30 percent last year and 20 percent in 2007.

| Source: CNET News |


Netscape Founder Backs New Web Browser, “RockMelt”

When the web first took off, Netscape was there. In fact, believe it or not, Netscape Navigator existed before Microsoft’s own Internet Explorer. Thanks to that, Netscape enjoyed an incredible market share up until Microsoft’s browser arrived, and for the most part, it continued to see a huge user base for years into Windows XP’s life, which was around a time when more options were becoming available to consumers.

About thirteen years after the launch of its initial browser, Netscape decided to call it quits, with the final version being 9.0.0.6. Since then, we’ve continued to see all of the other browsers flourish, with Mozilla’s Firefox really on track to become the dominant browser, and Google’s Chrome and Apple’s Safari beginning to make some headway. So the big question… do we need another? Or should we be pleased with what we have?

Well, according to the founder of Netscape, Marc Andreessen, there’s always room for one more browser, as long as it’s done right. Marc believes that there is something wrong with all current browsers… none of them were built from the ground up. Firefox, for example, continues to get updates, but it’s essentially all done with patches. There might be a major version upgrade, but the back-end will change little, and it certainly won’t be entirely re-written to keep up with the times.

It’s this idea that urged him to back a new browser company called RockMelt. He already has a lot of experience with creating a browser from the ground up, and with the experience he’s gained since Netscape’s beginnings, he no doubt has a clear idea of what needs to be done. Given that browsers like Google Chrome entered the game late and are still enjoying nice growth, I’m not going to be too quick to discredit any new entrant, especially one that seems to have good goals behind it. I can’t seem to be satisfied with any web browser lately, so I say bring it on.

Mr. Andreessen’s backing is certain to make RockMelt the focus of intense attention. For now, the company is keeping a lid on its plans. On the company’s Web site, the corporate name and the words “coming soon” are topped by a logo of the earth, with cracks exposing what seems to be molten lava from the planet’s core. A privacy policy on the site, which was removed after a reporter made inquiries to Mr. Vishria, indicates the browser is intended to be coupled somehow with Facebook. Mr. Andreessen serves as a director of Facebook.

| Source: The New York Times |


Quake Live to Open Up to Linux and Mac OS X Gamers

1999 was a great year for multiple reasons, but the main reason it might linger in some gamers’ minds is because right before we entered Y2K, id Software released its highly-anticipated Quake III. Surprisingly, both the Windows and Linux versions became available at roughly the same time, so gamers all over could get their frag on, in beautiful fully-accelerated OpenGL.

The cool thing about Q3, aside from the stellar gameplay, is that even upon its release, it ran quite well on most any current machine at the time, as long as it was equipped with a 3D accelerator card. That, to me, was truly amazing, because while I don’t recall what GPU I had at the time, I remember being incredibly impressed that it ran as well as it did. After all, for months leading up to its release, the graphics seemed to be the focal point, so I had assumed that nothing less than a bleeding-edge machine could handle it. I was wrong, thankfully.

With such a highly-optimized game, nearing 10 years in age, it’s no surprise that id decided to bring the game to everyone’s web browser, and that they did last year, with an open beta which began earlier this year for Windows users. Because id highly regards alternate operating systems, we all knew it’d only be a matter of time before we’d see Linux and Mac OS X variants, and apparently, that time is just about upon us.

According to gaming site 1UP, the update will be applied in tomorrow’s patch, as was discovered during this past weekend’s QuakeCon. As a Linux user, I can’t express how great this news is. I have yet to actually touch Quake Live, so I’m happy I’ll soon be able to in my OS of choice. It seems like the perfect occasional distraction. I’ll be sure to follow up in our forums once I can give it a try.

Mac and Linux versions of the client are available for users to play on the show floor. Non-attendees will be able to check out the update when it launches this Tuesday, August 18th. I guess fans of BeOS will have to wait just a little bit longer to join in the fun.

| Source: 1UP |


Digsby Takes Bloatware to an Entirely New Level

In the world of Instant Messengers, the competition is rather small, and for the most part, it’s split between the official clients, closed or open-source free clients, or paid clients, such as Trillian. In the free, but non-official space, the choice is highly limited. There’s Pidgin, which is what I use, but it lacks a lot of functionality of official clients, which is why Digsby, a rather new IM client, immediately drew a legion of supporters.

Though closed-source, Digsby is completely free, and supports a wide variety of protocols, such as Windows Live, Yahoo!, ICQ, AIM and more. It even goes as far as to integrate e-mail notification support, as well as social networking tools. Overall, it seems like the perfect IM client. Well, at least that may appear to be the case, until you install it.

Up to now, I didn’t think that an IM client more bloated than Windows Live Messenger existed, or could even exist (I mean, how much worse could one possibly be?). Well, it appears that Digsby proves that free doesn’t always mean free, and their installer and fine-print TOS clear it up fast. The writers at Lifehacker took a look at the latest version of the program, and were so disgusted that they urge everyone to uninstall and move on.

Just how bad can it be? We’re all familiar with quick-trick installers that try to pull a fast one on us in order to get some toolbar installed, or Google products, but believe it or not, Digsby’s installer has six such screens, meaning six totally separate pieces of bloat. These are all used to Digsby’s advantage, of course, in order to help them make money, and while I am hardly against the idea of making money off of your free application, this is not how to do it.

After hearing so many good things about Digsby, I couldn’t wait for a Linux version, but now, I have absolutely no intention of touching it, even if it doesn’t include such bloat (it will eventually). It makes you wonder… is the reason the Mac OS X and Linux versions have been in the works for well over a year simply that this bloatware isn’t executable on those OSes?

While there’s no way to tell exactly how much money Digsby is making from the sneaky use of your computer and abusing the less knowledgeable with loads of crapware, there is one disturbing fact that you should consider: They are paying up to $1 for every new user that you refer to them through their affiliate program. If they can pay that much money for every new user, they aren’t just paying the bills anymore.

| Source: Lifehacker |


Firefox Hits 1 Billion Downloads, Microsoft Disputes the Numbers

A few weeks ago, we reported on a story that encouraged the death of Internet Explorer 6, and since then, a lot has happened. For one, many popular web companies have opted into a program called “IE6 no more”, where a snippet of code is added to a website that will warn users of IE6 that they’re using an outdated browser, with recommendations to upgrade. It’ll be interesting to see where that goes.

As if that wasn’t enough of a hassle to deal with for Microsoft, not too long afterwards, Mozilla launched a website entitled One Billion + You, which touts the fact that since Firefox’s inception, it’s received over one billion downloads… a truly incredible feat. Microsoft isn’t too impressed though, as you’d imagine, and IE GM Amy Barzdukas asks people to question the validity of the number. Her reasoning is that there are roughly 1.1 to 1.5 billion Internet users, so for Firefox, which doesn’t hold a majority number in market share, 1 billion seems a bit off.

I have little doubt as to the validity of the numbers though, because we’re talking one billion downloads, not one billion users. Personally, I must have downloaded Firefox at least 50 times since I began using it, because of wanting it on more than one computer, or friends’ computers, or simply because I needed to re-install a machine. Given that it’s enthusiasts who have helped with the success of Firefox, I’m sure I’m not the only one who’s had to download it over, and over, and over.

Firefox does have one major advantage, though… it’s cross-platform. It works not only on Windows, but Linux, Mac OS X and other operating systems as well, so it’s no wonder that it’s proven to be such a popular choice for those looking for an alternative browser. Currently, Internet Explorer is only available with Windows, and rarely do people download a completely new version (they don’t come out that often).

For those interested, in the past 30 days on our website, 51.72% of visitors were running some form of Mozilla Firefox, while 32.65% ran Internet Explorer (with 8.0 dominating the versions). Chrome has actually seen a rise, to sit at 6.38%, with both Opera and Apple’s Safari just under the 4% mark, at 3.98% and 3.66%, respectively.

“The reason that a consumer would still be on IE6 at this point is a lack of awareness or the ‘good enough’ problem,” she said. “If you’re satisfied with what you’re doing and you’re not particularly curious about new technology and don’t really care, upgrading sounds like a hassle. Part of our communication needs to be making clear that there are significant advantages to upgrading to a modern browser.”

| Source: Mashable |


The Moments that Cause Us to Quit a Game

Here’s a situation I’m sure all gamers have been in at one point or another. You’re gaming it up, getting a ton of enjoyment out of the latest title you’ve just picked up, immersed in the gameplay. Then, things go awry. You die, and at that point, realize that you haven’t saved your game for a while, or due to the mechanics of the game, you’ve essentially been placed back an hour or more, left to redo what you’ve just completed.

When I saw an article discussing this at Ars Technica, I had a flurry of memories come back with events just like this, and not many of them I particularly wanted to remember. I tend to become overly frustrated with games easily, especially if I believe it’s the design that’s at the root of the problems, but I’m certainly not alone. When you realise that the only way to progress through the game is to completely redo any part of a game you didn’t find that enjoyable to begin with, it’s incredibly easy to just say, “screw it”, and put it back on the shelf, never to touch it again.

This is an issue game developers are well aware of, and it’s no doubt due to the fact that they are gamers themselves. There have been a few titles that have tried new techniques to not punish the player much on death, such as Prey, which warps you to another world to kill off some flying creatures, and once you’ve killed enough, you’re put back exactly where you were. I didn’t have too much to complain about with that feature, and I actually did find it to add a useful element to the gameplay.

As always, what makes a game fun is its gameplay, and if there’s a part or mechanic that causes frustration, the game’s not going to be fun, and most likely, the player will just quit and never touch it again. I personally have a few games like that on my shelf. So my question is, what game has stumped or frustrated you to the point of never touching it again?

So why do we quit? What makes us walk away from a game? Developers are aware of it, and at E3 I talked to a developer from Turn 10 about why they had added a rewind feature into Forza 3, allowing you a mulligan after a bad crash. “If you’re at the end of a five-lap race, and you make one mistake, that’s when you decide to turn the game off and go to bed,” he explained. “I don’t want to lose people at that point.”

| Source: Ars Technica |


Disgruntled Enough with Apple to Move Back to the PC?

As our site focuses more on PCs using Windows or Linux, we haven’t put much emphasis on Apple in the past. That will no doubt change at some point in the future, but for now, we’re content with simply talking about what’s going on down there in Cupertino, whether it be a new product launch, or something else on the business side of things (good or bad).

I’ve made it no secret in the past that I’m not a big fan of Apple, and that really hasn’t changed. I won’t go through my personal reasons again here, but I will say that things have rubbed me even worse lately, especially with regards to the entire App Store debacle of good applications being denied for no apparent reason. I’m not alone, and it seems like some actual pro-Apple fans are starting to become annoyed as well.

Jason Calacanis, the CEO of the human-powered search engine Mahalo, moved over to the Apple side six years ago, after being a devoted Microsoft fan for over 20 years. Since then, he’s given over $20,000 to Apple, across his various computers and accessories. His loving relationship with Apple has apparently ended, due to how the company is handling things lately, and he’s even looking back to Microsoft.

This is a long blog post, but it’s worth it if you want to see it from a rather unique perspective. Jason lists five specific reasons that pushed him over the edge, which include destroying MP3 player innovation through anti-competitive practices, monopolistic practices in telecommunications, and of course, the App Store policies.

Years and years after Microsoft’s antitrust headlines, Apple is now the anti-competitive monster that Jobs rallied us against in the infamous 1984 commercial. Steve Jobs is the oppressive man on the jumbotron and the Olympian carrying the hammer is the open-source movement. Steve Jobs is on the cusp of devolving from the visionary radical we all love to a sad, old hypocrite and control freak–a sellout of epic proportions.

| Source: Jason Calacanis Weblog |
