Tech News

Poor Passwords a Larger Threat than Malware?

What’s your password? Of course, I don’t want you to actually tell me, but just think about it. What’s the password to your most data-sensitive web-services, like your e-mail account or bank account? If you were able to repeat your full password, or even picture it spelled out, instantly in your head, chances are it’s a little too simple. And if it’s simple, you aren’t taking it too seriously, which is too bad, given it is serious.

A recently-posted article at Channel Insider takes a look at the password issue, and they say that weak passwords are so common, that their security risk eclipses that of a computer virus. That’s a bold statement, but when you think about it, it’s easy to understand why it could be true. Many people are adamant about running virus protection on their PC, along with ad-ware protection, but what about your passwords? All that protection will do you little good if your password is easily-crackable.

I know for a fact that this is indeed a problem, and it’s rare to find anyone who actually cares about their password choice. In helping friends out with various things on their computers in the past, for which I’ve required a password, some of their choices simply appalled me. Some are so bad, that anyone with a brute-force cracker would be able to get into their account within seconds – assuming there were no additional security measures put into place.

You might be quick to say, “But it’s just my e-mail… nothing is bad in there,” but that’s not the point. The point is that your stuff should be private, and properly protected. Passwords like “hellokit88” are not at all secure. Passwords like “h3ll0k1t88!” are far more secure. To take things even further though, I’d personally recommend choosing a password between 12 and 16 characters long, which includes letters, numbers and special characters. I’ll post a few more tips in the discussion thread below, to help you create one such password, so check it out and be secure!
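To put a rough number on why the second password holds up better against a brute-force cracker, here is a minimal Python sketch. The function name and the character-pool sizes are my own assumptions for illustration, and the estimate is deliberately naive: it only counts how many guesses a blind brute-force attack would face, and it ignores dictionary attacks and common letter-to-number substitutions, which real cracking tools do try.

```python
import math
import string


def estimate_entropy_bits(password: str) -> float:
    """Naive brute-force estimate: length * log2(size of the character pool).

    Hypothetical helper for illustration only; it assumes the attacker
    tries every combination of the character classes the password uses.
    """
    pool = 0
    if any(c in string.ascii_lowercase for c in password):
        pool += 26
    if any(c in string.ascii_uppercase for c in password):
        pool += 26
    if any(c in string.digits for c in password):
        pool += 10
    if any(c in string.punctuation for c in password):
        pool += len(string.punctuation)  # 32 ASCII symbols
    if pool == 0:
        return 0.0
    return len(password) * math.log2(pool)


for pw in ("hellokit88", "h3ll0k1t88!"):
    print(f"{pw}: about {estimate_entropy_bits(pw):.0f} bits")
```

Adding one special character widens the pool and lengthens the password at the same time, which is why the estimate climbs. Pushing the length to 12 or more characters raises it further still.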

During a security panel I conducted at Breakaway, one of my panelists said that one medical practice he serves recognized the need for strong password policies and required each user to have a strong, mixed alphanumeric password for different applications and resources. The only problem was that this led to “sunflowers,” or users—including the practice’s owner—adorning their monitors with Post-it notes with scribbled passwords.

Source: Channel Insider

Discuss: Comment Thread

AMD Releases Speed-Bumped Phenom II 965 BE

AMD has today launched their fastest-ever processor, the Phenom II 965 Black Edition, clocked at 3.40GHz, which is currently the highest stock frequency available. As you’d expect, reaching that speed pushes the TDP up, to 140W from the 125W of its predecessor, the 955, which we reviewed a couple of months ago.

As you could imagine, the latest chip is AMD’s fastest, but it still doesn’t manage to compete head-to-head with Intel’s Core i7, which is to be expected. Plus, in that comparison, there are noticeable price differences, so AMD is really trying to target the enthusiast who doesn’t want to spend $500 on just a processor and motherboard, and they’re doing a fair job of that.

Launching the processor at this time is no doubt a great idea, though, as Intel’s Core i5 is released next month and threatens to steal whatever thunder AMD currently has right from underneath them. Like most newly-launched products though, especially processors, it’s doubtful the pricing is going to be ideal from the get go, so AMD may enjoy longer success with the 965, because after all, pricing matters. Especially right now.

As you’ve probably noticed, our site hasn’t been updated for a while, and it’s due to a number of various factors I won’t get into here. We have indeed received this processor from AMD, and hope to get an article up for it as soon as possible. Please bear with me… I have a lot to fix and straighten up around here.

Credit: The Tech Report

Although the X4 965’s power requirements are on the extreme side, its pricing and performance are not. AMD tells us the 965 will list for $245, the same price rung that the 955 Black Edition occupied previously. That puts the 965 in more or less direct competition with the Core 2 Quad Q9550, which lists at $266 but is selling for as little as $220 at major online vendors like Newegg.

Source: The Tech Report

Discuss: Comment Thread

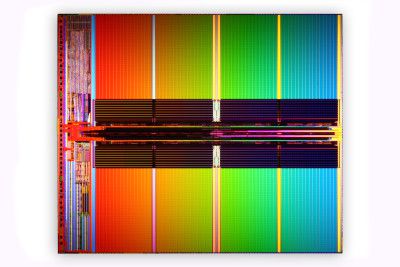

Micron and Intel Introduce 3-bit-per-cell NAND Technology

When Solid-State Disks were first introduced to consumers, many people didn’t quite know the differences between the various flash chips, and their technologies. Since then, though, information has been abundant, and it’s probably rare that anyone interested in an SSD hasn’t heard of SLC and MLC, or doesn’t know their differences. But, prepare to add a third type, “3-bit-per-cell MLC”.

To understand what makes 3BPC MLC interesting, it’s important to first understand the basics of how NAND and its cells work. SLC, or “single-level” cells, store 1 bit each, which means two things. First, there are two possible logic states per cell, and second, the chip’s capacity in bits equals its number of cells (typically 16 Gbit, or 32 Gbit in really high-end situations). These are also the reasons SLC is so much faster than MLC. Because there are only two possible voltage states per cell, the access time is faster, and for the same reason, SLC has a longer lifespan.

MLC, or “multi-level” cell, increases the bit-density to 2 bits per cell, meaning that we move from two possible states to four, and also to a larger possible overall density (which is why MLC-based SSDs are far larger and far less expensive than SLC-based ones). But for the opposite reasons that SLC lasts much longer than MLC, the extra possible states per cell for MLC mean far more writes, and ultimately, a shorter lifespan.

3BPC MLC takes things one step further, and increases the cells to 3 bits, which means larger possible densities, but an even shorter lifespan and also another performance hit. The reason is that with 3BPC, there are eight possible states, and each takes a little bit longer to trigger.
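The jump from two to four to eight states is simply powers of two, and the density gain follows from storing more bits in the same number of cells. A small Python sketch of that arithmetic, with function names that are mine rather than anything from Micron or Intel:

```python
def states_per_cell(bits_per_cell: int) -> int:
    """Each extra bit doubles the number of voltage states a cell must hold."""
    return 2 ** bits_per_cell


def relative_density(bits_per_cell: int) -> int:
    """Capacity relative to SLC for the same number of cells."""
    return bits_per_cell  # SLC stores 1 bit per cell, so the ratio is the bit count


for name, bits in (("SLC", 1), ("MLC", 2), ("3BPC MLC", 3)):
    print(
        f"{name}: {bits} bit(s) per cell, "
        f"{states_per_cell(bits)} states, "
        f"{relative_density(bits)}x the capacity of SLC per cell"
    )
```

The trade-off shows up in the two columns: capacity grows linearly with the bit count, while the number of states the controller has to tell apart grows exponentially.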

So what’s this mean to you? 3-bit-per-cell is not for SSDs, but rather things like flash media (thumb drives, camera cards, media players), where density and physical size is more important than performance. On a related note, I have to vote for this as being one of the coolest-looking die shots I’ve seen in a while, or at least one of the most colorful.

Designed and manufactured by IM Flash Technologies (IMFT), their NAND flash joint venture, the new 3bpc NAND technology produces the industry’s smallest and most cost-effective 32-gigabit (Gb) chip that is currently available on the market. The 32Gb 3bpc NAND chip is 126mm². Micron is currently sampling and will be in mass production in the fourth quarter 2009. With the companies’ continuing to focus on the next process shrink, 3bpc NAND technology is an important piece of their product strategy and is an effective approach in serving key market segments.

Source: Micron Press Release

Discuss: Comment Thread

Recording Industry Planning New Digital Format, “CMX”

MP3? FLAC? M4A? Apparently, such formats aren’t good enough for a few large players in the recording industry, such as Sony, Warner and Universal, as the entire conglomerate is planning on releasing a brand-new format, tentatively called, “CMX”. This format’s main goal is to sell entire albums as a digital format, not single tracks. Similar to how Apple’s iTunes embeds album art into their M4A files, CMX would implement that, along with lyrics, and potentially other bonuses.

This new format is, of course, doomed. There’s a reason the MP3 format is so popular. First, it works. Today’s encoders are so robust that you can have anything from a modestly-sized MP3 to one encoded at a high bitrate of 320kbit/s, or even beyond in some cases. It’s successful because it’s popular, and all media players out there support it, even the iPod, which prefers M4A.

That’s not to say that CMX, aside from the ridiculous name, is a horrible idea in theory, but I’m afraid it’s far too late in the game to see a real chance of it becoming successful. After all, no current media players will be able to use it (except ones that have update-able software), and Apple has already rejected it in favor of creating their own such format. Unfortunately, Apple’s is more likely to succeed, at least for a while, since the iPod is still the leading player on the market. The scary thing, of course, is that their format would not likely work in other players, or anywhere outside of iTunes, unless the company chooses to license it, which I can’t personally see happening.

Again, it’s not a horrible idea, but for something like this to be truly successful, we’d need total openness… a format that’s not exclusive to one company. The idea behind CMX and whatever Apple has en route is that it should recreate the experience of buying the real CD. So you get album art, liner notes, perhaps some extra content, and whatever else they might choose to throw in there. But with CMX offering so much more than a typical downloaded MP3 or M4A… you’d imagine there would be a premium as well. What’s that mean? More than ever, it would make more sense to just go buy the physical CD, and rip it however you like.

Credit: Bob Donlon

“Apple at first told us that they were not interested, but now they have decided to do their own, in case ours catches on,” a label rep told the Times. “Ours will be a file that you click on, it opens and it would have a brand new look, with a launch page and all the different options. When you click on it you’re not just going to get the 10 tracks, you’re going to get the artwork, the video and mobile products.”

Source: The Guardian

Discuss: Comment Thread

Zune HD’s Pricing Revealed, Launching in a Month

It’s been a few months since Microsoft made the Zune HD official, and we’re inching ever-closer to the launch date. Over the course of the past few days, both Best Buy and Amazon.com leaked the pricing (the latter is allowing pre-orders). For those who have been holding out, your patience is likely to have paid off, as the pricing looks to be pretty reasonable.

The 16GB model of the player will retail for $219.99, while the 32GB will debut at $289.99. Comparing these prices to the equivalent iPod touch models, which are $299 and $399 for the 16GB and 32GB respectively, Microsoft has done well to make sure there will be a huge draw for their latest player. The Zune HD, like the iPod touch, features a huge touchscreen, and while it doesn’t support Apple’s App Store, savings of roughly $80 to $110 could be worth it.
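For anyone who wants the exact gap per capacity, the subtraction works out as follows. This is just a quick Python sketch using the prices quoted above. The dictionary layout and function name are my own, and prices are kept in cents to avoid floating-point rounding.

```python
# Prices quoted above, stored in cents to keep the arithmetic exact.
PRICES_CENTS = {
    "16GB": {"zune_hd": 21999, "ipod_touch": 29900},
    "32GB": {"zune_hd": 28999, "ipod_touch": 39900},
}


def savings_dollars(capacity: str) -> float:
    """How much cheaper the Zune HD is than the iPod touch at a given capacity."""
    prices = PRICES_CENTS[capacity]
    return (prices["ipod_touch"] - prices["zune_hd"]) / 100


for capacity in PRICES_CENTS:
    print(f"{capacity}: ${savings_dollars(capacity):.2f} cheaper than the iPod touch")
```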

With the pricing leaks coming from these companies at almost the same time, we could likely expect the announcement for the launch date anytime now, and if rumors prove true, it will hit the streets at around Sept 6 – 8.

As a refresher, what exactly does the Zune HD offer over the previous iteration? As you’d expect, “HD” refers to the screen, which here is a 3.3″ OLED display capable of a 480×272 resolution. This is the same resolution that Sony’s PSP supports, so if you are familiar with that device, you know what to expect here. Of course, the screen is also touch-capable, so all of your media control is done with an on-screen keyboard. Just how efficient it will be (and whether it will support landscape mode) is yet to be seen.

The Zune HD also becomes the first consumer device to run on NVIDIA’s Tegra, which means both great battery-life (hopefully), and also 720p video playback through an HDMI output. Because this chip is highly-optimized for handling HD media, running 720p content on the device itself and having it downscaled shouldn’t be a problem, but the size of the files could become a problem given the overall size of the devices.

Oh, and it actually has a freaking HD radio tuner. That’s something no iPod offers (and something I whined about a few months ago).

The Best Buy screenshots also add weight to the previously rumored September 8th launch date so it looks like we’ve got less than a month until lift off. The only question is whether or not Apple’s impending iPod touch refresh will tout enough bells and whistles at launch to overshadow Microsoft’s new gem. Hit the jump for the Best Buy images.

Source: Boy Genius Report

Discuss: Comment Thread

Intel Issues Firmware Update for X25-M G2 SSDs

When Intel released their second-generation SSDs last month, we saw drives with chips based on a 34nm process, faster overall speeds, and even lower latencies. But, sadly for Intel, a show-stopper issue surfaced only a few days later.

The issue affects only those who use a BIOS password. If you set a password with the drive using the launch firmware, the SSD would become inoperable on the next boot. What exactly a BIOS password has to do with a storage device, I have no idea, but the issue was severe enough to push Intel to immediately release the information. It took a few weeks for an updated firmware to become available, but it’s here now.

If you are using one of Intel’s latest G2 drives, it’s highly recommended that you go and update the firmware now. You can download it here, in the form of an ISO. You will need a blank CD-R for burning. If you are unsure of how to properly burn an ISO, download and install CDBurnerXP. Once launched, use the “Burn ISO image” option. The steps from that point are rather straight-forward.

After the image is burned, you can boot up your machine with it in the drive, and change the boot device to whichever disc drive it’s inserted into. The flashing process is simple, so don’t worry, you are not going to kill your drive. If you have more than one X25-M installed, they will all be displayed, but the latest version of the firmware will only update G2 drives. G1 drives will be ignored (as they don’t suffer from this issue).

Source: Intel Support Page

Discuss: Comment Thread

Is Firefox Becoming More Crash-Prone with Each Release?

Whenever a new version of your favorite software gets released, there’s usually good reason to get a little excited. After all, minor version upgrades usually mean important bug fixes, and major upgrades are a combination of that and feature updates. Firefox 3.5 was no exception to the rule, and along with a slew of bug fixes, many notable features were also introduced, which made the upgrade decision a no-brainer.

For me personally, though, each new major version update gives me hope that what I’ll download will be a much more stable browser. I’ve been using Firefox since it went by the Firebird moniker, and the reason I came to love it so much is that in the beginning, the browser was rock-solid. Fast, simple, and stable. Since then, though, and especially since 2.x, I have found Firefox to become buggier than ever, and far more crash-prone.

Since I run Linux as a primary OS, I usually blame other factors when Firefox crashes. Prior to Adobe’s release of an official 64-bit Flash plugin for Linux, most crashes could be attributed to that, but I don’t think the plugin is responsible for nearly as many crashes today as it used to be. I come to this conclusion only because, as of Firefox 3.5, I have found the crashes to become more frequent. And with each crash, my love for the browser diminishes just a wee bit further.

I took some comfort in seeing I wasn’t alone, though, as Erick Schonfeld at TechCrunch is apparently suffering the same issue, and judging by the screenshot, he’s running Mac OS X. Many will be quick to blame the extensions being used, and that’s understandable. But realize that the extensions scheme of things is what made Firefox so popular in the first place. It’s a major feature. Of the three extensions I currently run, none are noted to cause issues (Compete, Forecast Fox, Web Developer), so I still tend to blame the browser itself more than anything.

If you’re a Firefox 3.5 user, how has your experience been so far? Have you seen as many screens like the one below as I have?

Without basic stability, none of the other great features or add-ons really matter much. Mozilla needs to fix this issue fast because Firefox 3.5 is already gaining a lot of traction. Net Applications has it at a 4.5 percent market share at the end of July, while StatCounter has it at 9.4 percent as of today. People are using this as their main browser, despite the beta label, and there are plenty of other powerful choices out there from Safari to Chrome to, yes, even IE8.

Source: TechCrunch

Discuss: Comment Thread

Dell Retires Mini 12 Netbook, But Why?

A couple of days ago, a Dell employee posted on the Direct2Dell blog that the company was retiring the Mini 12, a “netbook” that it released way back in February. This understandably caused many to question the reason, as I’ve met no one that has thought bigger netbooks were a bad idea (unless we’re talking about the confusion for their placement in the market). So what’s the deal?

Michael Arrington, of TechCrunch, has a lot to say on the matter, and has had a lot to say ever since the Mini 12 was released. In a post made in January, he mentions that with Dell’s latest (at the time) release, Intel wasn’t happy, and it’s easy to understand why. Intel makes more money off of the beefier machines (thanks to their better processors, chipsets and other components). No problem would exist if consumers purchased netbooks to complement their notebooks, but that’s rarely the case.

So with Intel obviously more concerned with selling notebooks than netbooks, Dell’s announcement makes some sense, but given that, again, I’ve met few who haven’t liked 12″ netbooks (I also own one), the decision to pull out still seems a bit odd. From a consumer standpoint, 12″ notebooks are great. They’re affordable, and the perfect size, depending on who you ask. The 1280×800 resolution is far, far better than the netbook standard of 1024×600, and the larger keyboard helps also.

As someone who loves 12″ notebooks/netbooks, I really hope this isn’t going to become a trend. Dell made it clear that when people use 12″ notebooks, they expect to do more with them, so in a sense, they are disappointed. 10″ netbooks, however, are limiting in many different ways, making the slower processor a non-issue. Either way, though, with Intel’s CULV ramping up production and AMD also targeting the 12″ market, hopefully Dell will remain the only company with this mindset.
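To see just how much “far, far better” amounts to, a quick Python sketch of the pixel counts for the two resolutions mentioned (the function name is mine):

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels on a display of the given resolution."""
    return width * height


netbook_standard = pixel_count(1024, 600)
twelve_inch = pixel_count(1280, 800)

# Percentage of additional screen area (in pixels) on the 12" panel.
extra_percent = (twelve_inch - netbook_standard) / netbook_standard * 100

print(f"1024x600: {netbook_standard:,} pixels")
print(f"1280x800: {twelve_inch:,} pixels")
print(f"The 12-inch panel shows about {extra_percent:.0f}% more pixels")
```

That works out to roughly two-thirds more desktop space, which goes a long way toward explaining why people expect to do more on the larger machines.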

Probably a couple of reasons. First, Intel doesn’t like 12-inch netbooks because they are deep into dual core territory, where Intel has much healthier profit margins. For casual users a 12-inch netbook with an Atom chip works just fine, and they are buying these devices instead of more expensive dual core machines. Intel has put pressure on OEMs to build netbooks that have 10 inch or smaller screens.

Source: TechCrunch

Discuss: Comment Thread

Californian GM Dealerships to Sell Cars on eBay

When purchasing a vehicle, new or not, it’s always been common practice to go to a local dealer and have a look around. But with the popularity of the Internet, it was only a matter of time before people would sit at their computers and browse instead. And why not? At your PC, you have access to thousands of cars, not dozens, and you can even look outside your own state for specific models if you have to.

When people do look to purchase a car online though, it’s usually not brand-new. Rather, it’s an older model that someone chooses to buy either because it saves money, or because they want an older model that’s not exactly easy to come by (mint Chevelles, for example). But that might change soon, thanks in part to ideas spawned by GM’s turn-around plan, which will bring their new cars to eBay.

This will start out as a trial, and run until September 8th, and it really only applies to Californians. Of the 250 GM dealers located in that state, 225 of them have opted into going online. As a consumer, you’ll be able to visit a site like GM.eBay.com and peruse GM’s many models (spanning Chevrolet, Buick, et cetera), find the model you want, and then discuss the sale with the nearest dealer. It’s not as simple a process as clicking a button to buy, as consumers will have the ability to haggle or ask questions.

This is also an interesting move for eBay, as typically all auto sales have gone through their sub-site eBay Motors. Not this, though, as each of GM’s brands will have co-branded pages on the actual eBay site, and search results will be found on eBay, not eBay Motors. Whether this will begin a trend or not is yet to be seen. If proven successful, it’s unlikely that the Sept 8 end date would be upheld, and chances are many other US states (and perhaps Canada) would join in on the fun.

My question to you is, would you rather purchase a brand-new car online, or go to the dealer? For me, I’d prefer online, only because if the time came to purchase a new car, I would have done enough research and seen the model in real-life before committing to a purchase. As with most things, the online convenience is just too good to pass up.

The test comes a month after GM made an unusually quick exit from bankruptcy protection with ambitions of becoming profitable and building cars people are eager to buy. Once the world’s largest and most powerful automaker, new GM is now leaner, cleansed of massive debt and burdensome contracts that would have sunk it without additional federal loans.

Source: USA Today

Discuss: Comment Thread

Toshiba Applies to Join the Blu-Ray Disc Association

Remember HD DVD? The HD format that never could? Well, it’s been well over a year since Toshiba officially pulled out of the HD format scheme of things, and since then, the only HD optical format has remained Blu-ray. It’s been long-rumored that Toshiba would shift over to the Blu-ray camp, and begin releasing players there, and that’s just what’s going to happen, according to a company-issued press release.

In what is no doubt one of the shortest press releases I’ve seen recently, Toshiba says not much more than that the company has applied to join the Blu-ray Disc Association, and has intentions to release related products in the future. This has been a long time coming, and I have to say I’m mostly surprised that it took them so long, as Toshiba is a well-known brand when it comes to HD and high-end media in general.

Could this also be confirmation that there is still hope for Blu-ray to hit the mainstream? Many have doubted that it will happen, and since HD DVD’s death, Blu-ray demand hasn’t exactly exploded. But, things are indeed improving. This past spring, prices for Blu-ray players dropped, and as a result, sales soared by 72%. We’ll have to wait a wee bit longer to see if the trend continued into the current quarter.

Not to raise another rumor from the dead for the hundredth time, but with Toshiba seemingly being the exclusive vendor to produce external ODDs for Microsoft’s Xbox 360, I can’t help but wonder if there’s any chance we’ll see Blu-ray come to that console anytime soon. Something tells me no, but stranger things have certainly happened. After all, if an add-on such as that could sell for around $100 retail, there might actually be a market for it.

Tokyo-Toshiba Corporation (TOKYO: 6502) announced today that the company has applied for membership of the Blu-ray Disc Association (BDA) and plans to introduce products that support the Blu-ray format. As a market leader in digital technologies, Toshiba provides a wide range of advanced digital products, such as DVD recorders and players, HDTVs and notebook PCs that support a wide range of storage devices, including hard disk drives (HDD), DVD, and SD Cards. In light of recent growth in digital devices supporting the Blu-ray format, combined with market demand from consumers and retailers alike, Toshiba has decided to join the BDA.

Source: Toshiba Press Release

Discuss: Comment Thread

Adobe Kills Free Photoshop Application, Urges Users Online

Although I’m not entirely stoked about it, there’s a huge shift lately in how companies would like us to use their software. If you ask Google, they believe any application you need should be available online, and they’ve proved it by offering suites of various sorts that way (including their very popular office suite). But what about applications that are incredibly robust, such as Photoshop?

Well, as much as I hate working with applications of any sort online (I’ll always be a desktop guy), there are some applications that, when done right, will work online just fine, like image manipulation tools, such as the one offered by Adobe at Photoshop.com. Obviously what’s offered online here isn’t going to be nearly as robust as the full-blown suite, but rather, it’s designed for people on a low budget, or people who just want to take care of quick photo edits fast.

The reason that the site is significant now, is because Adobe has just discontinued their Photoshop Album Starter Edition application, which has always been free, in order to push people towards their online service. It’s an interesting move, to say the least, because rather than have people use their free desktop application, they’d rather people use their bandwidth and be confined to a web browser. Seems a wee bit odd to me, but once again, I am not a web apps guy.

How do you guys feel about this? Would you rather use Photoshop in a web browser than on the desktop? I admit, it is a nice feature if you are on the go and need a quick photo edit, but I don’t recall the last time when I was in that situation, and without my notebook. Either way, I realize I’m probably in the minority, as it’s far from being only Adobe that’s starting to throw such robust applications online.

The move reflects the growing importance of Web-based applications even for software powerhouses such as Adobe. Web applications, even when using relatively sophisticated technology such as Adobe’s Flash, are typically primitive compared to what can run on a computer, but they offer advantages in sharing, maintenance, and remote access from multiple computers and mobile devices. And of course the Web is gradually growing more sophisticated as a foundation for applications.

Source: Underexposed Blog

Discuss: Comment Thread

Chkdsk Bug in Windows 7 Could Cause BSOD?

Yesterday, a fierce storm blew through the Internet targeting Windows 7, and the reports weren’t good. An issue has been discovered that many believe is severe enough to delay the Windows 7 release, although that would be tough to believe, since the RTM has just been released to MSDN subscribers today. According to Microsoft though, they see no issue, and if there is one, it’s not their fault.

The problem lies with the built-in disk-checker, “chkdsk”. When run with the /r switch, on a secondary drive, the program suffers from a major memory leak that effectively hogs all of your system RAM, and ultimately, gives you the infamous Blue Screen of Death. While many people have verified that this issue exists, Microsoft is adamant in stating that they’ve yet to experience the issue, and they believe if anything, it would be caused by hardware, not their software.

“While we appreciate the drama of ‘critical bug’ and then the pickup of ‘showstopper’ that I’ve seen, we might take a step back and realize that this might not have that defcon level,” is what Microsoft’s Steven Sinofsky, president of the Windows Division, said, so it appears that they don’t believe it to be a major issue at this point. It’s also unknown whether this bug exists only in the RTM, because you’d imagine that if it has always existed, people would have discovered it long ago.

If anyone out there happens to be running any build of Windows 7, and you have a two-disk setup, and you are feeling a little brave, try out the chkdsk command, and see if the bug exists on your system. If your secondary drive is “E:”, then the proper command would be, “chkdsk /r e:”, without quotes.
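If you’d rather script the test than type it, here is a minimal Python sketch that builds the same command for whichever drive letter you give it. The helper name and the `--run` switch are my own inventions for illustration. The script only prints the command unless you pass `--run` on a Windows machine, and chkdsk itself needs to be started from an administrator prompt.

```python
import subprocess
import sys


def build_chkdsk_command(drive_letter: str) -> list[str]:
    """Return the argument list for 'chkdsk /r <drive>:' (hypothetical helper)."""
    letter = drive_letter.strip().rstrip(":").lower()
    if len(letter) != 1 or not (letter.isascii() and letter.isalpha()):
        raise ValueError(f"not a drive letter: {drive_letter!r}")
    return ["chkdsk", "/r", f"{letter}:"]


if __name__ == "__main__":
    command = build_chkdsk_command("E:")
    print("Command:", " ".join(command))
    # Only launch chkdsk when explicitly asked to, and only on Windows.
    if sys.platform == "win32" and "--run" in sys.argv:
        subprocess.run(command, check=False)
```

Keep an eye on memory usage in Task Manager while it runs. That is where the reported leak would show up.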

On the Chris123NT’s blog, a user name FireRX, who appears to be a Microsoft MVP, said, “the chkdsk /r tool is not at fault here. It was simply a chipset controller issue. Please update [your] chipset drivers to the current driver from your motherboard manufacturer. I did mine, and this fixed the issue. Yes, it still uses a lot of physical memory, because [you’re] checking for physical damage, and errors on the Harddrive [you’re] testing… Again, there is no Bug.” FireRX also said he was sure a hotfix would be issued today.

Source: Network World

Discuss: Comment Thread

Accused Domain Name Thief Faces Jail Time

The Internet has been around for about 14 years now, which many would consider to be a while. But, despite all that time, the legal system still hasn’t had time (or so it seems) to catch up, as there are still many uncertainties about what’s legal, and not, online. Even things that are blatantly illegal, or should be, are not, and as you could imagine, that can cause a headache for some people.

One such crime is theft of a domain name. That is, to log (or break) into the rightful owner’s domain account, change the information, and simply call it your own. That’s just what Daniel Goncalves did with web URL P2P.com. Except, he didn’t just steal it, he actually sold it from right under the real owners’ noses. As you’d expect, such a domain would sell for a pretty penny, and it did… $110,000 to NBA player Mark Madsen.

It’s hard to tell if the owners received the domain name back yet, or not, but it’s proven to be a complicated process in having the case dealt with, since, as mentioned before, it seems that no one knows just how to handle legal cases like this. It’s a legal gray area, but with the Internet what it is today, I don’t think it’s safe to keep on going without hardened laws. After all, the owners of P2P.com had the money to fight their case… many people do not.

The main problems affecting victims of domain name theft are lack of experience of law enforcement, lack of clear legal precedents, and the money necessary to launch an investigation. DomainNameNews, which first reported the arrest, relates the Angels’ experience in reporting the crime. When the Angels called Florida police to report the theft, a uniformed officer in a squad car was sent to their home. “What’s a domain?” the officer asked them, according to DomainNameNews.

Source: Ars Technica

Discuss: Comment Thread

NVIDIA’s CUDA Helps Improve Apollo 11 Videos

Although GPGPU (general-purpose computing on GPUs) is growing in popularity, we’re still a little ways away from it becoming totally mainstream, and used by many. Over the course of the past year, though, NVIDIA is one company who has actively been pushing GPGPU, along with their CUDA technology, in order to get applications and scenarios in front of consumers to show them the benefits that their speedy graphics cards can offer.

One area where GPGPU can drastically improve performance is with video encoding, especially where filters are concerned. In some cases, the performance can increase upwards of 100 times over a CPU, and it’s for this reason that NVIDIA focuses on pushing the technology so hard. The company has just experienced a nice thumbs-up from a company called Lowry Digital, as well, as that company is the one which is responsible for enhancing the film footage of the Apollo 11 landing on the moon.

As you’d imagine, video footage from forty years ago can’t hold a candle to today’s HD video, but even considering when it was recorded, it still looks pretty bad. As you can see in the sample below, though, the image is drastically improved (there are more samples at the below link). The press release notes that on typical CPUs, each frame would take upwards of 45 minutes to process, but with GPGPU, it takes seconds.

Maybe this is the kind of news that NVIDIA and GPGPU as a whole needs to get consumers excited about such technology, especially given that many people don’t realize they can do similar processing in their own homes.

“Lowry Digital’s restoration process has brought out details in the Apollo 11 videos that were never visible before,” said Andy Keane, general manager of the Tesla business unit at NVIDIA. “You can now see the faces of Neil Armstrong and Buzz Aldrin behind their visors, the stars on the U.S. flag when it is being raised and amazing details of the moon surface. We’re proud that NVIDIA has made such an important contribution to this historic project.”

| Source: NVIDIA Press Release |

Discuss: Comment Thread

|

Microsoft Releases “Windows XP Mode” Release Candidate

One of the more touted features of Windows 7 has got to be “Windows XP Mode”, which aims to tackle an issue that some found themselves with when moving to Windows Vista… incompatibility. With Windows XP, incompatibilities existed, but they usually weren’t a major issue. Vista was the stark opposite though, with many peripherals and applications simply not working. While XP Mode can only be considered a workaround, it should do well to please anyone who’ll need to use it.

Last week, we posted an article taking an introductory look at virtualization, and how it works. Windows XP Mode uses the same technology, through Microsoft’s Windows Virtual PC application. With Windows 7 (and any current version of Windows for that matter), anyone can go and download the application for free. But if you want to use “Windows XP Mode” as Microsoft calls it, you’ll need to be using either the Professional or Ultimate version of 7. If you’re on Home Basic or Home Premium, you’ll have to provide your own copy of XP.

For those with Professional or Ultimate installations, you’re now able to download the RC version of Windows XP Mode and get to work right away. If all goes well, it should be simple, and everything should work as hoped. The RC features some updates worth noting, such as USB sharing. This means that your XP installation can utilize your USB devices such as printers and flash drives without issue.

To make use of the Windows XP Mode, you need a processor that supports either AMD’s “AMD-V” or Intel’s “Intel VT”. Most recent CPUs support these, but to make sure, you should look up your respective CPU model on the vendor’s website. To read more about Virtual PC and Windows XP Mode, check out Microsoft’s site here. If you happen to take this for a spin, post in our forums and let us know how you made out!
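If you also happen to run Linux on the same machine, there’s a quick way to check for these extensions without visiting the vendor’s website. The kernel lists CPU features in `/proc/cpuinfo`, where Intel VT shows up as the `vmx` flag and AMD-V as `svm`. Below is a minimal sketch that parses that kind of text. The function name and the sample string are made up for illustration, and a flag being present doesn’t guarantee the feature is enabled in your BIOS.

```python
def virtualization_support(cpuinfo_text: str) -> str:
    """Report which hardware virtualization extension the CPU flags advertise.

    Expects text in the style of Linux's /proc/cpuinfo. The name of this
    helper is hypothetical; it is not part of any Microsoft or vendor tool.
    """
    flags = set()
    for line in cpuinfo_text.splitlines():
        # Each logical CPU has a line such as "flags : fpu vme ... vmx ..."
        if line.lower().startswith("flags"):
            _, _, value = line.partition(":")
            flags.update(value.split())
    if "vmx" in flags:
        return "Intel VT"
    if "svm" in flags:
        return "AMD-V"
    return "none detected"


# Made-up sample text; on a real Linux box you would read /proc/cpuinfo instead.
sample = "model name : Example CPU\nflags : fpu vme de pse vmx sse2\n"
print(virtualization_support(sample))
```

On Windows itself, the vendor’s CPU specification page remains the simplest route.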

Windows XP Mode provides what we like to call that “last mile” compatibility technology for those cases when a Windows XP productivity application isn’t compatible with Windows 7. Users can run and launch Windows XP productivity applications in Windows XP Mode directly from a Windows 7 desktop. I also strongly recommend that customers install anti-malware and anti-virus software in Windows XP Mode so that Windows XP Mode environment is well protected.

| Source: Windows Team Blog |

Discuss: Comment Thread

|

Intel Develops Hardware and Software for Healthcare

For the most part, Intel is known as a semiconductor company, one that produces some of the best processors on the planet, along with chipsets, SSDs and so forth. They’re not entirely known for software, though, except for their developer tools, and those of course are designed for a select audience. As Bright Side of News* discovered at the company’s Technology Summit, Intel is actively looking to branch out into other markets as well, producing both the hardware and software for the products.

The product being demonstrated is an important one, as it focuses on healthcare for the elderly, or anyone else with health problems severe enough that leaving the house isn’t always an option. The secondary goal is to make it easier for doctors to deal with more patients, which is also undeniably important. The device that they’ve developed is an all-in-one computer, with specialized software and a touch-screen display. It comes equipped with various security measures to make sure data doesn’t get intercepted, and that it remains safe.

This FDA approved device is a lot more than a simple computer, though, as the software is designed to be easy to use on both ends. Essentially, the patient would have one of these at home, and doctors would have one at their clinic as well. So, doctors at the office can keep up-to-date on the patient’s progress, and they can adjust the patient’s activities accordingly, which the patient would then see on their screen.

It goes a bit further, because the device includes a web cam, so the patient and doctor can talk to each other via video, which is important since the doctor would then be able to see how the patient is doing. There’s a lot more to it than is discussed here, but advances like this are important, and I can’t wait to see something like this become mainstream. If all the elderly had access to this kind of technology in their home, it’d no doubt make their lives a lot easier (well, unless they hate technology, of course).

With their FDA approved Health Guide, Intel is branching out into the medical arena. Why? The baby boomers are rapidly reaching the AARP (American Association of Retired Persons) stage of life. As they age, their chronic illnesses will need to be addressed. And statistics show that there just won’t be enough youngsters in the care giving profession to give them the attention they need. Louis Burns, VP and GM, Intel Digital Health Group presented slides, video, and commentary illustrating those concerns.

| Source: Bright Side of News* |

Discuss: Comment Thread

|

Microsoft Reveals Anytime Upgrade & Family Pack Pricing

Last Friday, Microsoft posted some interesting information on their Windows Team blog that I somehow overlooked… pricing for Anytime Upgrade, and also their family pack. First, a quick recap of what WAU (Windows Anytime Upgrade) is. This feature was first introduced with Vista, as a way to allow consumers to purchase one version of Windows, and later have the option to upgrade to another. This is useful since it allows you to save money early on, and only spend more if you need to.

I admit… the pricing makes absolutely no sense to me, but here it is. To upgrade from Starter to Home Premium, it’s $79.99 USD. To upgrade from Home Premium to Professional, it’s $89.99, and from Home Premium to Ultimate, it’s $139.99. The reason I find this odd, is that if you combine the retail pricing for a full copy of Home Premium and add in the WAU price to go to Professional, it’s actually $10 less expensive than buying Professional outright. Then where Ultimate’s concerned, you’ll spend $20 more…
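To make the oddity concrete, here’s the arithmetic as a small sketch. The WAU prices are the ones quoted above, but the full retail prices ($199.99, $299.99 and $319.99) are my assumption based on Microsoft’s announced US list pricing, since they aren’t stated in this post. The function name is just for illustration.

```python
# All prices are in US cents so the sums stay exact.
# WAU prices as quoted in the post.
WAU_CENTS = {
    ("Home Premium", "Professional"): 8999,
    ("Home Premium", "Ultimate"): 13999,
}

# ASSUMPTION: full retail list prices, not stated in the post itself.
FULL_RETAIL_CENTS = {
    "Home Premium": 19999,
    "Professional": 29999,
    "Ultimate": 31999,
}


def upgrade_path_delta(start: str, target: str) -> int:
    """Cents paid for (start edition + WAU) minus cents for target outright.

    A negative result means the upgrade path is the cheaper one.
    """
    path_cost = FULL_RETAIL_CENTS[start] + WAU_CENTS[(start, target)]
    return path_cost - FULL_RETAIL_CENTS[target]


for target in ("Professional", "Ultimate"):
    delta = upgrade_path_delta("Home Premium", target)
    print(f"Home Premium -> {target}: {delta / 100:+.2f} USD versus buying outright")
```

Under those assumed retail prices, the Professional path comes out about $10 cheaper and the Ultimate path about $20 dearer, which lines up with the figures above.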

Then there’s the family pack, which actually happens to be offered at a very good price: $149.99 for a 3-pack. The problem is that the blog doesn’t go into detail and give pre-requisites for the pack, but for now, we could assume that you could upgrade from either XP or Vista. Another strange fact is that the family pack looks to be sold in limited quantities, and also limited markets. Why Microsoft would take this route, I have no idea, but hopefully they’ll change their mind.

Any way you look at it, $149.99 for three upgrade copies is a great deal, so you’ll want to take advantage of that soon after launch to avoid missing out.

Credit: madstork91 |

The Windows 7 Family Pack will be available starting on October 22nd until supplies last here in the US and other select markets. In the US, the price for the Windows 7 Family Pack will be $149.99 for 3 Windows 7 Home Premium licenses. That’s a savings of more than $200 for three licenses. This is a great value and we’re excited to be able to offer it to customers.

| Source: Windows Team Blog |

Discuss: Comment Thread

|

NVIDIA’s Derek Perez Swaps GPUs for Pucks

In any industry, it’s common to see people come and go, but I’d be willing to bet that in the tech industry, as thriving as it is, it happens a bit more often than you’d expect. Some people jump around from position to position at various companies like there’s no tomorrow, so it’s notable when someone decides to change things up and leave a position that they’ve held for over a decade.

A week ago today, though, Derek Perez, NVIDIA’s Director of Public Relations, posted on his Facebook account that he had made the decision to leave NVIDIA after 11 years to tackle a new adventure. He waited a week to fill us in on just what that adventure was, though, and believe it or not, it doesn’t have much to do with the tech industry. Rather, Derek is off to become the head of marketing for the Nashville Predators, an NHL team.

Anyone who knows Derek knows what kind of sports nut he is, with an incredible love for hockey (and he’s not even Canadian!). So while this new job puts him in a completely different environment, his expertise with management and marketing, along with his love of hockey, makes the move a no-brainer. In his words: “I think I got a dream job“.

On behalf of our site, I’d like to give Derek wholehearted congratulations and also a big thanks for all the help and support he’s given us for as long as we’ve been doing business with NVIDIA, and we wish him the best of luck. Keep your eyes on the ice, DP!

Credit: Igor Stanek |

Here are some quick fun facts. When Derek joined NVIDIA in 1998, it was around the same time that the company moved their offices to Santa Clara, California, where they still remain today. The hot new product of the year? It was none other than the RIVA TNT, the successor to the RIVA 128, and direct competition to 3Dfx’s newly-launched Voodoo2 (NVIDIA acquired 3Dfx’s assets in 2000).

In addition to offering improved texture filtering techniques and trilinear filtering, the RIVA TNT also introduced 32-bit color and a 24-bit Z-buffer for gaming. Then of course, the TNT2 followed the next year. Also that year, NVIDIA released their first Vanta graphics card, and also the GeForce 256. Boy, how time flies!

|

Discuss: Comment Thread

|

Battle of the Retail Netbooks: Acer vs. Gateway

At one time, netbooks used to be known as small notebooks that offered just enough power to accomplish rather modest tasks throughout the day, such as e-mail, web surfing, listening to music, et cetera. Things have changed a lot since then, though, as recently, even ASUS has been releasing Eee PC models that in no way represent what we originally thought a netbook would be, primarily in size.

The cool thing about netbooks, though, is that because the prices for such machines are affordable, many people are now purchasing mobile PCs who might not have ever done so in the past. Also because of this, competition is fierce, especially to get into retail channels where regular Joes will actually stumble on them.

About a month ago, both Acer and Gateway (Acer owns Gateway) released ~$400 netbooks, one that would see its life in Wal-Mart, the other in Best Buy. Both offer a varied combination of features and perks, and whichever is the better buy is really dependent on your needs. Our friends at The Tech Report took both new netbooks for a spin though, to see which was more worthy of your $400.

In the end, it was difficult for Scott to reach an ultimate winner, as both had their pros and cons, but overall, the Gateway machine looked to pull ahead, thanks to its nice blend of components and style. One hit is against the overall battery-life, but as is mentioned in the conclusion, if it had a second battery (or perhaps an even larger battery), it’d be almost perfect. Now that’s a statement.

Credit: The Tech Report |

The Gateway LT3103 is the most successfully executed of the two systems, because its Athlon 64 processor and Radeon graphics give it the performance to match its larger screen and keyboard. The grown-up looks and finish of the Gateway set it apart from the Aspire One 751, as well. If you like to fret over the semantics of “netbook” versus “notebook,” the LT3103 will positively put you into a tizzy of hair splitting and confusion—endless hours of fun.

| Source: The Tech Report |

Discuss: Comment Thread

|

Google’s Eric Schmidt Resigns from Apple’s Board of Directors

When Google’s Chief Executive Officer, Eric Schmidt, joined Apple’s Board of Directors in 2006, it seemed like a sensible move on both ends. At that time, Google was not considered a competitor to Apple, but almost a complement, and vice versa, since Google readily supported Apple. Most important, though, is that both companies had a similar goal in mind… to become crucial competition in Microsoft’s core markets.

In the past two years, though, Google has changed their focus in a lot of ways, and as it stands, they’re producing products that actually do compete with Apple in some regards. This became abundantly clear last week when it was discovered that Apple denied the Google Voice iPhone application, which we can only assume was decided as a result of it “duplicating” iPhone’s features, aka: competing with iPhone’s features.

As that news came forth, the FCC issued a request for information from Apple, AT&T and Google regarding the issue, and that might have caused some immediate action to take place. As of today, Eric is no longer on the BoD, and the reason given by Steve Jobs is, “Unfortunately, as Google enters more of Apple’s core businesses, with Android and now Chrome OS, Eric’s effectiveness as an Apple Board member will be significantly diminished, since he will have to recuse himself from even larger portions of our meetings due to potential conflicts of interest.“

It’s hard to disagree with that, because after all, that’s exactly the path that Google is going down. Google is a company that thrives on being “open”, in that all platforms should be free of restrictions to allow some cool things to happen, thanks to dedicated developers. Apple’s of the opposite mindset. So, it was only a matter of time before the opinions of both companies clashed head-on. If you’re curious about Apple’s current Board of Directors, you can check out the respective page.

Apple is not about being open. It never has been. Every app on the iPhone (all 50,000 of them) must be approved individually, for instance. This difference in approach wasn’t a problem until Google started to have mobile aspirations of its own. Asked to choose between furthering Apple’s mobile agenda or Google’s, Schmidt must choose Google’s. It is his fiduciary duty. That conflict is only going to grow. And that is perhaps why Jobs says his “effectiveness as an Apple Board member will be significantly diminished.”

| Source: TechCrunch |

Discuss: Comment Thread

|

Intel Launches “Progress Thru Processors” Program

Distributed computing used to come off as being a buzzword, but in recent years, more and more computing enthusiasts have taken part in some form, whether it be with Folding@home, Rosetta@home, SETI@home, or the hundred other projects floating around. For those who are unaware, distributed computing is when one main process is computed on more than one computer, and in the case of the aforementioned projects, we’re talking thousands upon thousands.
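If the idea still feels abstract, here’s a toy sketch of it: one big job gets cut into independent work units, each unit is handed to a volunteer machine, and the partial results are added back together. Everything here runs on one PC and the function names, volunteer names and the “science” (a sum of squares) are all made up for illustration. It is not how BOINC or any of the projects above actually schedule their work.

```python
def split_into_work_units(start: int, stop: int, unit_size: int) -> list[tuple[int, int]]:
    """Cut the range [start, stop) into independent chunks of at most unit_size."""
    return [(lo, min(lo + unit_size, stop)) for lo in range(start, stop, unit_size)]


def process_work_unit(unit: tuple[int, int]) -> int:
    """Stand-in for the real science: sum of squares over one chunk."""
    lo, hi = unit
    return sum(n * n for n in range(lo, hi))


def run_project(start: int, stop: int, unit_size: int, volunteers: list[str]):
    """Hand work units to volunteers round-robin and combine the partial results."""
    units = split_into_work_units(start, stop, unit_size)
    results = []
    for i, unit in enumerate(units):
        volunteer = volunteers[i % len(volunteers)]  # hypothetical machine name
        results.append((volunteer, process_work_unit(unit)))
    total = sum(partial for _, partial in results)
    return total, results


total, results = run_project(0, 1000, 250, ["pc-a", "pc-b", "pc-c"])
for volunteer, partial in results:
    print(volunteer, partial)
print("combined:", total)
```

The point is that no single machine needs to see the whole job, which is what lets a project scale to thousands of home PCs.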

Many of you who visit our website already take part in some projects, such as Kougar, who takes things very seriously. Intel is a company who has always taken such projects seriously as well (some of their employees are dedicated Folders), but to bring the importance of these projects to the mainstream, the company has just launched a program called, “Progress Thru Processors”.

If you happen to already take part in some distributed project, then this program is going to be of little use, since it caters more towards people who don’t know what it’s all about, or how to get things started. For fairly obvious reasons, Intel chose Facebook as the platform for this, and developed an application to help people get started right away. If you have a BOINC account, you can simply add this app to your Facebook, or if you are a new user, signing up will take only a few minutes.

After sign-up, you will be prompted to download an application called Progress Thru Processors, which is essentially a modified BOINC client suited for easy set-up. So easy, in fact, that all you need to do is launch it, enter your username and password, and you’re good to go. Because the client is automatically associated with this project, each project you opted into will be there. And if you want to add others, you can log into your account (the same one) at GridRepublic and add them there. As it stands, Intel’s focusing on Rosetta@home, Africa@home and Climateprediction.net.

SANTA CLARA, Calif., Aug. 3, 2009 – Often in the fight against cancer, researchers are not limited by their ingenuity, but the resources available to make research effective. The processor power needed to handle complex calculations is often in short supply. To help address this need, Intel Corporation today announced Progress Thru Processors, a new volunteer computing application built on the Facebook platform that allows people to donate their PCs’ unused processor power to research projects such as Rosetta@home, which uses the additional computing power to help find cures for cancer and other diseases such as HIV and Alzheimer’s.

| Source: Intel Press Release |

Discuss: Comment Thread

|

Apple’s App Store Approval Process Gone Too Far?

When Apple’s “App Store” launched just last July, anyone who owned an iPhone or iPod touch found themselves in love with all of what was offered. In my talking to a few iPhone owners shortly after the store launched, many of them had more than ten apps already downloaded, and a few that they found themselves using on a regular basis. Overall, the App Store had incredible success, both for Apple, and the app developers.

There has been one major problem, however, regarding which applications can be found there by users, or put up by developers. Apple has a very stringent application approval process, and if there’s absolutely anything about the app the company doesn’t like, they don’t approve it. In the past year, there have been so many reports of apps pushed away that I’ve lost count, but there seems to be a new story every day.

Of course, some applications shouldn’t be posted on the App Store, because Apple does need to make sure that their users are safe. But it seems like lately, the majority of denied applications don’t carry along a good excuse. Take the Commodore emulator that was denied last month. The problem there was the ability for the emulator to run code, but to push away such an incredible app for such a reason is foolish. My ideal method would be to warn people about installing such apps. If something killed their iPhone (which is unlikely), then it’d be their fault, not the company’s. Plus, if emulators are denied, that to me says something about the iPhone’s security, or lack thereof.

Matt Buchanan at Gizmodo is far more aware of the situation with the App Store than I am, though, and his piece posted just the other day is a good read. It pretty much sums up exactly why I have little interest in owning an Apple device. The company is too hardcore in its ways, and refuses good apps that are actually very useful… all seemingly in order to please either itself, or AT&T. There’s far, far too much back and forth with the company. On one day, they might allow something, and the next, they don’t. Screw that… I’ll go where the choice can be found.

The situation crystallizes our worst fears about Apple’s dictatorial App Store. Users aren’t being protected from bad things or from themselves here. Even though it seemed ridiculous to us, when apps with objectionable content were blocked or booted before the ratings system was in place, it was in the interest of some paradoxically lazy but over-protective parent somewhere out there.

| Source: Gizmodo |

Discuss: Comment Thread

|

Microsoft Proposes Including Competitor Browsers in EU Windows 7

Last month, we pointed to a story that explained how the European Union effectively “broke” Windows 7. That conclusion was brought forth, because Opera, and later others, pressured the EU into believing that Windows shipping with Internet Explorer, and only Internet Explorer, was a problem. I won’t reiterate my thoughts here, but suffice to say, I found their reasoning to be purely asinine.

Opera’s argument was that because Microsoft chose to ship Windows with IE being the only bundled browser, consumers were not getting the choice they deserved. It wasn’t so much the fact that other browsers weren’t bundled, but more the fact that consumers might not even realize other choices exist, and therefore, it was a problem. The remedy, of course, would be for Microsoft to bundle the other browsers, and as it looks today, something like that is exactly what’s going to happen.

According to DailyTech, who talked to Opera’s CTO, Windows 7 in Europe will likely offer a ballot screen of sorts, which will list no more than 10 browsers, each of which must have at least 0.5% marketshare. As a result, Apple’s Safari, Mozilla’s Firefox, Google’s Chrome, and Opera’s browser would be included alongside Internet Explorer. Whether or not the browsers would actually be included on the disc, or be downloaded after a choice has been made, is unknown.
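The rule as described is simple enough to sketch: keep every browser at or above the 0.5% threshold, and cap the list at 10. The share figures and browser names below are placeholders I made up purely for illustration, not real market data. Sorting by share to decide who makes the cut if more than 10 qualify is my own assumption, since the proposal as reported doesn’t say.

```python
def ballot_candidates(shares: dict[str, float],
                      min_share: float = 0.5,
                      max_slots: int = 10) -> list[str]:
    """Return the browsers eligible for a ballot screen.

    shares maps browser name -> market share in percent.
    ASSUMPTION: if more than max_slots browsers qualify, the largest win.
    """
    eligible = [(name, share) for name, share in shares.items() if share >= min_share]
    eligible.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in eligible[:max_slots]]


# HYPOTHETICAL figures, for illustration only.
example_shares = {
    "Browser A": 60.0,
    "Browser B": 25.0,
    "Browser C": 8.0,
    "Browser D": 0.4,  # falls below the threshold, so it is left out
}
print(ballot_candidates(example_shares))
```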

Oh, and here’s a quote-of-the-day for you: “This decision to give third parties a chance is good news for Microsoft [emphasis mine], users, and the free market,” says Mr. Lie. No, I didn’t make his name up.

According to Mr. Lie the currently proposal from Microsoft is to present users a ballot screen during Windows 7 installation. Any browser maker with over 0.5 percent Windows browsing marketshare would be eligible to be on the screen, with a maximum of 10 allowed options. This would mean that Opera, Mozilla’s Firefox, Google’s Chrome, and Apple’s Safari would likely be the browsers presented.

| Source: DailyTech |

Discuss: Comment Thread

|

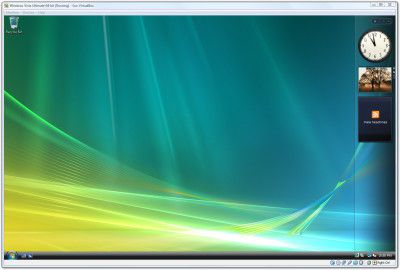

What is Virtualization?

If you somehow missed the latest article at the top of the site, I’m going to take a brief moment here to explain what it’s all about, just in case you’re a bit too wary to enter the realm of uncertainty. First and foremost, virtualization is one of the coolest technologies I’ve ever stumbled on, and as I note in the article, it’s not only for the IT environment. Rather, I feel almost anyone can find a use for it, and that’s why the article was written.

In case you don’t know what virtualization is, or how it works, this article tackles it all. If you’ve ever wanted to run another operating system from within your own operating system, that’s virtualization, and once you realize just how easy it is to get things going, you’re probably going to kick yourself for not realizing it a lot sooner!

What could someone possibly need a second OS for? Simple! Testing out the latest Linux distro is one scenario. Or, running a Windows XP installation inside of your Windows Vista install (or another OS) is another option, especially if you have older software that might not run in Vista. Or, if you have really, REALLY old software, you could install an even older version of an OS… even DOS, to get the job done. The sky’s the limit.

If you’re curious, check out the article, and as always, please let us know what you thought of it in our comment thread (no registration required). Other comments and suggestions are also welcome!

…platform virtualization takes things to a new level, because you’re not only virtualizing an application, but rather an actual computer. So if you are to run, say, Windows XP inside of your Windows Vista, your virtualization tool creates a software-based hardware environment, so that Windows XP thinks it’s running on a real machine. Confused? As we progress through the article, you’ll hopefully gain a better understanding of virtualization as a whole.

|

Discuss: Comment Thread

|

Western Digital Releases 1TB Scorpio Blue Mobile Hard Drive

Don’t bother wiping your eyes… you didn’t read the news title incorrectly! It feels like storage companies just released 500GB 2.5″ mobile drives, but today Western Digital looks to put those to shame, with their latest Scorpio Blue models, available in both 750GB and 1TB capacities. WD is the first to pull this off, and as a result, pretty soon you’ll be able to have a full 1TB worth of storage in your notebook… with only one drive.

The new 1TB capacity also opens up room for even more storage on your workstation notebook if you have room for more than one hard drive. Or, another way to look at it is that now you’ll be able to use an SSD as your main drive, and then use a 1TB Scorpio Blue as your secondary. Either way, 1TB in a drive that small is rather incredible. But…

With a height of 12.5mm, the latest drives are close to 32% taller (depth-wise) than typical mobile drives, which means that they will not fit in the majority of notebooks. So, if you’re planning to purchase such a drive, it’s important to make sure your notebook will support it. WD realizes that adoption for mobile platforms will be limited, at least at first, so they’re pushing the new drives more for external storage purposes, such as with their My Passport Essential SE portable drives.

Any way you look at it, though, 1TB in such a small drive is nothing short of incredible. That’s an insane amount of storage to be able to carry with you anywhere… not to mention, it’d be perfect for backing up your computer to. It’s so small, you can stash it anywhere and not look at it until you need it again! Pricing will of course be a bit higher than double 500GB models, but not by much. The 1TB version will sell for $249.99 USD, while the 750GB will carry a price tag of $189.99.
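For the curious, the height and pricing figures above are easy to sanity-check. The 12.5mm height and both prices come from this post, while the 9.5mm baseline for a typical notebook drive is my assumption, as it isn’t stated here. I’m also treating 1TB as 1000GB, the way drive makers do. Function names are just for illustration.

```python
DRIVE_HEIGHT_MM = 12.5    # from the post
TYPICAL_HEIGHT_MM = 9.5   # ASSUMPTION: common 2.5" notebook drive height


def percent_taller(height_mm: float, baseline_mm: float) -> float:
    """How much taller height_mm is than baseline_mm, in percent."""
    return (height_mm - baseline_mm) / baseline_mm * 100


def cents_per_gb(price_usd: float, capacity_gb: int) -> float:
    """Price per gigabyte in US cents, using decimal gigabytes."""
    return price_usd * 100 / capacity_gb


print(f"{percent_taller(DRIVE_HEIGHT_MM, TYPICAL_HEIGHT_MM):.1f}% taller than a 9.5mm drive")
print(f"1TB model:   {cents_per_gb(249.99, 1000):.2f} cents per GB")
print(f"750GB model: {cents_per_gb(189.99, 750):.2f} cents per GB")
```

With a 9.5mm baseline the result lands just under 32%, in line with the figure above, and the two models work out to nearly the same cost per gigabyte.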

The WD Scorpio Blue 750 GB and 1 TB hard drives have a 12.5 mm form factor[1] and are ideally suited for use in portable storage solutions, such as the newly released My Passport™ Essential SE Portable USB Drives. Other applications include select notebooks and small form factor desktop PCs, where quiet and cool operation are important. Both WD Scorpio Blue drives deliver high-performance with a 3 gigabits per second (Gb/s) transfer rate.

| Source: Western Digital |

Discuss: Comment Thread

|