
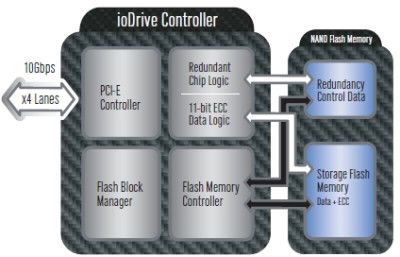

Fusion-io’s Ultra-Fast PCI-E Solid-State Disk Tested

This past October, I linked to a story about a brand-new SSD that didn’t just raise the bar for performance, but dropped some jaws along the way. The company is Fusion-io, and its product is an SSD that bypasses the SATA bus entirely and instead uses the bandwidth available over PCI-Express. Its PCI-E 4x card carries 80GB, 160GB or 320GB worth of SLC chips, and the speeds are truly mind-blowing.
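For a rough sense of why skipping SATA matters, here’s a back-of-the-envelope sketch of each interface’s usable bandwidth. It assumes a first-generation PCI-E 4x link (2.5 GT/s per lane) and a 3Gb/s SATA port, both using 8b/10b encoding; the story doesn’t say which generations are involved, so treat those as assumptions.

```python
def usable_mb_per_s(gigatransfers_per_s: float, lanes: int = 1) -> float:
    """Usable one-direction bandwidth (MB/s) of an 8b/10b-encoded serial link.

    8b/10b sends 10 bits on the wire for every 8 bits of data, so only
    80% of the raw rate carries data; divide by 8 to get bytes.
    """
    data_bits_per_s = gigatransfers_per_s * 1e9 * 0.8 * lanes
    return data_bits_per_s / 8 / 1e6

# Assumption: first-generation PCI-E (2.5 GT/s per lane) and SATA 3Gb/s.
pcie_x4 = usable_mb_per_s(2.5, lanes=4)  # about 1000 MB/s
sata_3g = usable_mb_per_s(3.0)           # about 300 MB/s

print(f"PCI-E 4x: {pcie_x4:.0f} MB/s, SATA 3Gb/s: {sata_3g:.0f} MB/s")
print("Could a 700 MB/s read fit through SATA 3Gb/s?", 700 <= sata_3g)
```

Under those assumptions, a 700 MB/s drive simply couldn’t be fed through a single SATA port, which is the whole point of going the PCI-E route.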

The least-expensive version of the product is the 80GB model, which puts it on par in capacity with Intel’s impressive X25-M. However, Intel wins on price, as the Fusion-io card will retail for $2,995. No, that’s not a joke. The 320GB version is even more humorous, at a staggering $14,400. As for performance, the drives are rated at 700 MB/s Read and 550 MB/s Write, compared to the X25-M’s 250 MB/s Read and 70 MB/s Write.
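Putting the quoted numbers side by side is a little revealing: the 320GB card actually costs more per gigabyte than the 80GB one (about $45 vs. $37), and the rated gap over the X25-M is far wider on writes than on reads. This is just arithmetic on the figures above; no X25-M price is given here, so it’s left out.

```python
# Fusion-io capacities (GB) and retail prices (USD), as quoted above.
fusion_io = {"80GB": (80, 2995), "320GB": (320, 14400)}

# Price per gigabyte for each model.
per_gb = {model: price / capacity for model, (capacity, price) in fusion_io.items()}
for model, cost in per_gb.items():
    print(f"Fusion-io {model}: ${cost:.2f} per GB")

# Rated sequential speeds in MB/s: (Read, Write).
rated = {"Fusion-io": (700, 550), "Intel X25-M": (250, 70)}
read_ratio = rated["Fusion-io"][0] / rated["Intel X25-M"][0]
write_ratio = rated["Fusion-io"][1] / rated["Intel X25-M"][1]
print(f"Rated Read: {read_ratio:.1f}x the X25-M, rated Write: {write_ratio:.1f}x")
```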

In the tests conducted, the Fusion-io card cleaned house, although it was beaten in a few tests by a RAID 6 array of eight Seagate 15K RPM drives; that setup, at 450GB, costs close to $8,000. In HD Tune, however, the PCI-E SSD didn’t manage to hit its claims, averaging 469.6 MB/s Read and 353.2 MB/s Write, which might be a sign that the CPU is becoming the bottleneck… the complete opposite of how things are with typical hard drives. Still, despite the missed claims, it’s going to be cool when we see more affordable versions of these in the future. With performance like this, it’s bound to happen.
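To put the shortfall in proportion, here are the HD Tune averages as a share of the rated figures. It’s plain arithmetic on the numbers quoted above, and both land at roughly two-thirds of the claim.

```python
claimed = {"Read": 700.0, "Write": 550.0}    # MB/s, Fusion-io's rated figures
measured = {"Read": 469.6, "Write": 353.2}   # MB/s, HD Tune averages quoted above

# Measured average as a percentage of the rated figure.
pct_of_claim = {op: 100 * measured[op] / claimed[op] for op in claimed}
for op, pct in pct_of_claim.items():
    print(f"{op}: {measured[op]} of {claimed[op]:.0f} MB/s ({pct:.1f}%)")
```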

When it comes to servers, size matters, 1.75 inches at a time. That’s the height of a single rack unit (U). I have seen 1U servers capable of holding four full-size hard drives, but to hold eight you need to flip the drives on end. In most cases, to fit eight drives you will need a 4U server. If a company rents rack space in a data center, going from 1U to 4U is a big deal, since the space is rented by the unit.
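Here’s a rough sketch of what that means for density. It assumes a 42U rack (a common full-height size, not mentioned above) and ignores space for switches, power strips and cabling; the drive counts per chassis come from the paragraph above.

```python
RACK_UNIT_INCHES = 1.75  # height of one rack unit (1U)
RACK_HEIGHT_U = 42       # assumption: a common full-height rack

def servers_per_rack(server_height_u: int, rack_height_u: int = RACK_HEIGHT_U) -> int:
    """Servers of a given height that fit in one rack (ignores switches, PDUs, cable space)."""
    return rack_height_u // server_height_u

# Drive counts per chassis from the text: four drives in 1U, eight in 4U.
for height_u, drives_per_server in ((1, 4), (4, 8)):
    servers = servers_per_rack(height_u)
    print(f"{height_u}U ({height_u * RACK_UNIT_INCHES} in): "
          f"{servers} servers, {servers * drives_per_server} drives per rack")
```

By this rough count, the 4U route fits fewer than half as many drives per rack (80 vs. 168), which is why it hurts when you pay by the unit.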

PlayStation Home (Finally) Launches Today

If you own a PlayStation 3, then you might want to pay attention to a rather significant launch that will be available to you at some point today. After well over a year in closed beta, PlayStation Home, or simply “Home” for short, is finally hitting open beta status, and anyone who has the console is able to join in on the fun. It will of course require a download, and a USB-based keyboard is also recommended for the sake of conversation.

Home might have had good reason to take a while, because it’s an ambitious project. Somewhat like Second Life, Home delivers an open environment where you can walk around, converse, play games, and even buy things. So it’s like reality… but from your couch.

In the end, Home is nothing more than one heck of a marketing tool, and you can be sure that plenty of game developers and other companies will take full advantage of the new medium. This isn’t necessarily a bad thing, though, as it adds a bit to the realism, and if the marketing sells you something you actually like, then it might be a win/win all-around. I’m still unsure of the service myself, but I’ll give it a try soon and see if my doubts fade.

If this will be your first time in PlayStation Home, be sure to talk to folks that have been around for a while, as they can show you the ropes. We also have a tutorial built in that will get you started with the controls, as well as help menus for you to reference in the Menu Pad. If you don’t have a USB keyboard or Bluetooth headset paired with your PlayStation 3, now is the time to do so – either option will allow you to easily talk and meet everyone.

IntelBurnTest vs. SP 2004

Once in a while, I find myself with poor concentration, and perhaps a bit of a lack of ambition. That just comes with the territory of writing so often. But what also comes with it are some strange ideas for either a piece of content or a news post. On Monday, I was having one such “blah” day (the “case of the Mondays” really does exist!), so the idea of comparing the effectiveness of IntelBurnTest against SP 2004 came to mind.

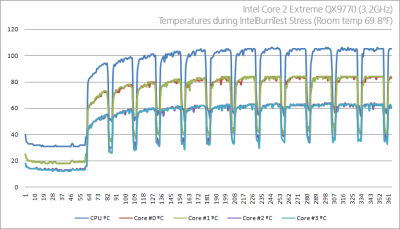

Partial results can be seen in the graph above, and no, that’s not a measurement of my concentration level that day, but rather the range of temperatures recorded during a half-hour run of IntelBurnTest 1.9. For those who might be unaware, IntelBurnTest is a recent CPU stress-testing tool that claims to be the most effective method of testing a CPU for stability. After these personal tests, I believe those claims.

Although I’ve used SP 2004 to a great extent over the course of the past few years, it hit me one day just how linear its operation is. Judging by the results posted in the respective thread, the temperatures simply didn’t budge… it just seems to pummel the CPU with the exact same algorithms constantly, and that’s no way to test for true stability, right?

After testing, I’m fairly confident IntelBurnTest is the best tool we have right now, unless AMD or Intel ever chooses to release their own stress-testing tools, which isn’t likely. IntelBurnTest impressed me because it showed lots of variation, and it managed to heat up our Core 2 Extreme QX9770 19°C hotter than SP 2004. That’s huge! I’m definitely keeping it in our virtual toolbox for the time being. It is winter, after all. If a benchmarking rig can double as a space heater, bring it on.

I think the results speak for themselves. At its peak, the CPU was pushed hard enough to hit 106°C, with Core #0 reaching 85°C. That is a full 19°C hotter than SP 2004 on the CPU, and 18°C hotter on Core #0. What’s interesting, though, is that while SP 2004’s Small FFT test doesn’t vary its workload no matter how long it runs (it just moves on to larger FFT sizes), IntelBurnTest throws far more variable loads at the CPU, as shown in the above graph.
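If you log temperatures during a run, the “flat” vs. “variable” distinction can be put into numbers. A minimal sketch, assuming you’ve exported each tool’s readings (in °C) to a plain list; how you capture them is up to you, and no readings from our runs are reproduced here. `summarize_temps` is a hypothetical helper, not part of either tool.

```python
from statistics import mean, pstdev

def summarize_temps(samples_c):
    """Summarize temperature readings (°C) logged during a stress-test run."""
    if not samples_c:
        raise ValueError("need at least one reading")
    return {
        "min": min(samples_c),
        "max": max(samples_c),
        "range": max(samples_c) - min(samples_c),
        "mean": mean(samples_c),
        # Population standard deviation: 0 for a perfectly flat load.
        "stdev": pstdev(samples_c),
    }
```

Run each tool’s log through the same function: a near-zero range and standard deviation describes the unchanging load seen with SP 2004, while a wider spread matches what IntelBurnTest showed.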

Source: IntelBurnTest vs. SP 2004

ATI’s 8.12 Catalyst Driver Gets Released

ATI today released its brand-new 8.12 Catalyst driver, right on schedule. This isn’t a typical driver release, though, because it not only packs performance boosts in certain titles, but is also the first to include ATI’s AVIVO video encoder tool, which is set to compete directly with Badaboom on the NVIDIA side. While this first release has a few small issues, it’s also free, whereas Badaboom costs $30. Good value all-around.

In case you haven’t checked out our latest graphics card article yet, I have to ask… what are you waiting for? Two weeks ago, we took a look at NVIDIA’s GTX 260/216 and pitted it against ATI’s direct competitor, the HD 4870 1GB. At that time, NVIDIA won the round fair and square, but thanks to pricing changes, and also performance boosts from ATI’s new driver, the playing field is now about even, depending on how you personally look at things. I recommend reading it if you are in the market for a killer mid-range offering.

According to the release notes, thirteen games should see a performance increase with the latest driver. ATI promises a 13% increase for Crysis Warhead (we saw 1%), 15% for Fallout 3 (we saw exactly that), and 10% in Far Cry 2 (we saw 7%). Although S.T.A.L.K.E.R.: Clear Sky and UT III were used in the tests for our latest article, we didn’t benchmark them the first time, so we don’t have a comparison there.
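For the three titles we could compare, here’s how much of ATI’s promised gain actually showed up, using only the percentages quoted above.

```python
# (promised gain %, observed gain %) for the three titles we could compare.
gains = {
    "Crysis Warhead": (13, 1),
    "Fallout 3": (15, 15),
    "Far Cry 2": (10, 7),
}

# Share of the promised improvement that actually showed up in our testing.
delivered = {title: 100 * seen / promised for title, (promised, seen) in gains.items()}
for title, pct in delivered.items():
    print(f"{title}: {pct:.0f}% of the promised gain")
```

Fallout 3 delivered in full, Far Cry 2 came in at 70%, and Crysis Warhead saw less than a tenth of what was promised.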

Regardless, none of that is too important, since drivers are free, and if you own a recent ATI card, it will be worth the upgrade. For those still on the fence about which new mid-range card to choose, be sure to check out our article!

From a performance standpoint, ATI made some nice improvements with its 8.12 driver, and they’re reflected here. Although the HD 4870 1GB didn’t manage to overtake NVIDIA in every test, it did catch up in some, and even pulled ahead in two: Crysis Warhead and Fallout 3. We also saw great performance in non-holiday titles, including S.T.A.L.K.E.R.: Clear Sky and Unreal Tournament III. In fact, we found that ATI performed better in all of the non-holiday titles used here.

Source: ATI HD 4870 1GB vs. NVIDIA GTX 260/216 896MB: Follow-Up

Intel Core i5 Loses QPI and Triple-Channel Memory Controller

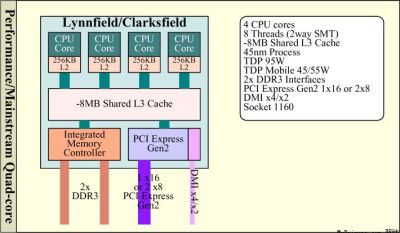

Information regarding Intel’s upcoming Lynnfield processor has been leaked, along with benchmarks of a real processor sample. From what we can see so far, Lynnfield is going to be quite different from Core i7, and from the CPU-Z screenshots, it looks like Lynnfield = Core i5. But it’s important not to think of it as simply a slower i7, because that’s not the full story.

According to a few slides found in the originating thread at ChipHell, Lynnfield will remain a Quad-Core part like i7, but the QPI bus will be removed, along with the triple-channel memory controller. Instead, it moves back to a DMI link and reverts to the dual-channel DDR3 we’ve become accustomed to. Also, given its seemingly budget focus, the maximum-supported GPU configuration will be one PCI-E 16x slot or two PCI-E 8x slots, which we can assume is more of a chipset limitation than a CPU one.
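To put the dual- vs. triple-channel change in numbers, here’s the theoretical peak-bandwidth formula: transfers per second, times 8 bytes per transfer on each 64-bit DDR3 channel, times the channel count. DDR3-1333 is used purely as an example speed; the leaked slides quoted above don’t list supported memory speeds. These are ceilings, not a measure of real application performance.

```python
def peak_bandwidth_gb_s(transfers_mt_s: float, channels: int, bus_width_bits: int = 64) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal units).

    Each DDR3 channel is 64 bits (8 bytes) wide, so
    bandwidth = transfers per second * bytes per transfer * channels.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfers_mt_s * 1e6 * bytes_per_transfer * channels / 1e9

# Assumption: DDR3-1333 (about 1333 MT/s) in both cases, for illustration only.
dual = peak_bandwidth_gb_s(1333, channels=2)    # roughly 21.3 GB/s
triple = peak_bandwidth_gb_s(1333, channels=3)  # roughly 32.0 GB/s
print(f"Dual-channel: {dual:.1f} GB/s, triple-channel: {triple:.1f} GB/s")
```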

One other important piece of info is that Lynnfield won’t follow i7’s lead and be built around an LGA1366 socket. Rather, since QPI and other features will be shaved off, fewer contacts are needed, resulting in an LGA1160 socket. That unquestionably has the power to make things a little confusing.

Along with the first Core i5 processors will come Intel’s budget-variant 5-series chipset, which we can assume will be called P55. It’s not yet known exactly what that will bring, but it seems safe to say that it will be quite similar overall to P45, except with support for the latest socket and processors.

Where Lynnfield fits into Intel’s plan is a little tough to gauge, but it seems likely that the Core i7 processors available now will remain the only i7 models available, with the possibility of one or two more being released above and below the current offerings. This is just personal speculation, however. Also, Core i5’s mobile counterpart is Clarksfield, and according to the same thread mentioned above, it will carry identical specifications to Lynnfield. The performance information I mentioned can be found at the Expreview URL below, but it was down at the time of writing.

Putting Cash to Good Use: Toys for Tots Fundraiser

I don’t dedicate much of our news space to contests or promotions outside of our website, but a recent fundraiser posted by our friends at TheTechLounge is too good to overlook. They’ve teamed up with countless sponsors in order to both raise funds for the Toys for Tots program and give you a good chance at winning a great prize.

The Toys for Tots program helps deliver joy to less-fortunate children, which is important during a time of year when it’s easy to take things for granted. Although toys aren’t everything, as a child, it’s the simple things that can make a huge difference, which is why charities like this exist.

To help raise funds, tickets are being raffled off: for every $5 donated, you receive one entry into the virtual hat for a chance at one of over eighty prizes. The event ends at the end of the month, and the site needs your help to hit, or at least come close to, its $25,000 target, with every cent earned going straight to the charity. I’ve known Kurtis and Brian for a while (and I know the amount of effort they’ve put in here), so even if you have just five bucks to put somewhere, why not put it towards a great cause?

The objectives of Toys for Tots are to help needy children throughout the United States experience the joy of Christmas; to play an active role in the development of one of our nation’s most valuable natural resources – our children; to unite all members of local communities in a common cause for three months each year during the annual toy collection and distribution campaign; and to contribute to better communities in the future.

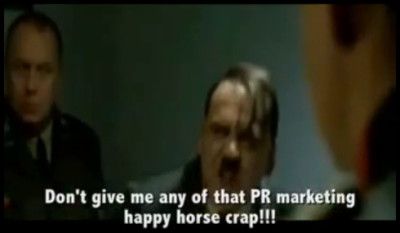

Hitler’s Rant About the Nikon D3x

When people take a movie and add their own custom subtitles in an attempt to make us laugh, it can be hit or miss. In the case of a new video parodying the movie “Downfall”, it’s definitely a hit. Downfall is a movie that follows Hitler on the final twelve days of his life in the Führerbunker, and there’s one particular sequence that people love to parody, and there’s proof of this all over YouTube.

The video revolves around Hitler’s whining about Nikon’s latest D-SLR, the ultra-expensive D3x, a 24 megapixel offering that doesn’t seem to greatly improve upon the original D3 except in that area. Not for twice the price, at least. You may have to be a fan of cameras to enjoy the video, but probably not for all of it. It’s easily the funniest video I’ve seen in a while, though.

Classic lines include, “Nikon is asking $8000 for a 24 megapixel SLR when Sony can make one for FIVE THOUSAND DOLLARS less!!” and “Makes me wonder why the hell I went digital”. If cameras aren’t your thing, then you might enjoy another parody that takes the same scene, but uses Windows Vista as the target. It’s also quite funny, so both are worth a look. The quote below is from the Wikipedia article on the movie, and gives an outline of the particular scene.

Hitler is discussing the situation with the generals. Outside, Junge still naively believes that General Felix Steiner will attack and save them. But she is wrong, as Steiner cannot mobilize enough men. Upon learning this, Hitler tells every one to leave the room except the four highest ranking generals present. Hitler then gives them a loud rebuke that can be heard by the people outside. Gerda begins to cry. When he has finished, Hitler states that he would prefer to shoot himself than to surrender.

OpenCL 1.0 Specification Released, ATI & NVIDIA Adopt Support

The open GPGPU computing language known as OpenCL hit a huge milestone today, marking the release of the 1.0 specification. This is news we’ve been waiting on for quite a while, because it might be just what we need to see general-purpose GPU computing taken to the next level. For those unaware, OpenCL is, as the name suggests, an “Open Computing Language” based on C, which establishes a standard for coding general-purpose applications that run on the GPU.

Overall, OpenCL loosely resembles ATI’s Stream and NVIDIA’s CUDA technologies, but the problem with those is that each is tied to its own vendor’s architecture. CUDA applications are not compatible with ATI’s cards, and vice versa. That’s why OpenCL is important: it creates a standard that lets developers write an application that will work on any OpenCL-compliant GPU, not just one vendor’s.
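Real OpenCL code is written in a C-based kernel language, but the core idea can be sketched in plain Python: you write one small “kernel” that handles a single data element, and the runtime launches it once per element (a “work-item”) on whatever compliant device is present. This is only a serial, CPU-side emulation for illustration; `run_ndrange` and `vector_add` are hypothetical names, not part of any OpenCL API.

```python
from typing import Callable, List

def vector_add(gid: int, a: List[float], b: List[float], out: List[float]) -> None:
    """Kernel body: each work-item handles exactly one element, chosen by its global ID."""
    out[gid] = a[gid] + b[gid]

def run_ndrange(kernel: Callable[..., None], global_size: int, *args) -> None:
    """Serial stand-in for a device launch: call the kernel once per global ID.

    A real OpenCL runtime would run these work-items in parallel on a GPU
    (or other device); here they simply run one after another.
    """
    for gid in range(global_size):
        kernel(gid, *args)

a = [1.0, 2.0, 3.0, 4.0]
b = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(a)
run_ndrange(vector_add, len(a), a, b, out)
print(out)  # [11.0, 22.0, 33.0, 44.0]
```

Because the kernel only describes the per-element work and never mentions the hardware, the same kernel can, in principle, be handed to any vendor’s compliant runtime, which is the portability argument in a nutshell.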

Industry support has been rather favorable, with both ATI and NVIDIA adopting the standard, and Apple has long been known to be planning support for the technology in Mac OS X 10.6, “Snow Leopard”, which is due in the middle of next year. It will be the first OS to support the technology, and its inclusion is a great sign. If Apple is confident enough to use OpenCL in its most important product, then I think it’s safe to say that the future looks good for the new standard.

Update: It turns out I wasn’t entirely clear here, so I’ll re-word myself a little bit. Above, I didn’t mean to insinuate that CUDA or Stream were computing languages of their own, but rather that each one is a hardware architecture in which the computing language sits. So, to correctly state things, OpenCL is a computing language that sits on top of ATI’s Stream or NVIDIA’s CUDA hardware architectures. Apologies for the incorrect wording!

“The opportunity to effectively unlock the capabilities of new generations of programmable compute and graphics processors drove the unprecedented level of cooperation to refine the initial proposal from Apple into the ratified OpenCL 1.0 specification,” said Neil Trevett, chair of the OpenCL working group, president of the Khronos Group and vice president at NVIDIA. “As an open, cross-platform standard, OpenCL is a fundamental technology for next generation software development that will play a central role in the Khronos API ecosystem and we look forward to seeing implementations within the next year.”

Mirror’s Edge PhysX Comparison Video, 2K & EA Join the PhysX Club

Last month, we posted about DICE’s agreement with NVIDIA to license PhysX technology for the upcoming PC version of Mirror’s Edge. On the same day, NVIDIA released a high-resolution video that showcased just what the game would look like with the technology enabled, and today, they make things a little easier to digest by showing a direct comparison, PhysX vs. No PhysX. You can view the video below, or head over to our Vimeo account for the full-resolution version.

The video shows a rather substantial difference without PhysX. It’s not so much a matter of less-realistic reactions to the environment; in some cases, objects are simply not there, such as drapes hanging down or trash blowing in the wind. It’s clear that PhysX will add a bit to the immersion factor, but we’re unsure at this point how users with ATI cards are going to fare.

While ATI cards will be able to support some physics, some objects, as I mentioned, simply won’t be there. The lack of such objects could result in quite different gameplay, and if you watch the video, you can see what I mean. While certain physics animations will occur on an ATI card, or with PhysX disabled, they’re much more realistic with it turned on. Debris sticks around longer, and actually reacts to your movement.

As soon as we receive our copy of the game, we’ll test the game on both NVIDIA and ATI cards, with and without the PhysX capabilities, then draw our own conclusions. Also announced today, though, are new partnerships between NVIDIA and both EA and 2K Games. Both companies plan to use PhysX extensively in the future, but only 2K’s Borderlands has been announced to utilize it.

“PhysX is a great physics solution for the most popular platforms, and we’re happy to make it available for EA’s development teams worldwide,” said Tim Wilson, Chief Technology Officer of EA’s Redwood Shores Studio. “Gameplay remains our number one goal, with character, vehicle and environmental interactivity a critical part of the gameplay experience for our titles, and we look forward to partnering with NVIDIA to reach this goal.”

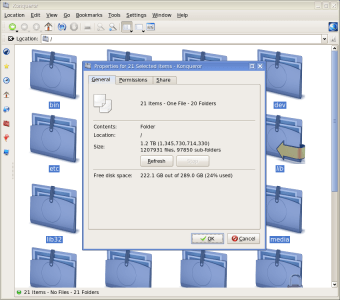

How Many Files Are On Your PC?

Back in October, I made a post that was half a whine-fest, but carried information about a special tool that saved me more than once from breaking down and crying like a little girl who just dropped her fresh ice cream. TestDisk was the application, and I recommend reading the post, because if you ever run into a situation where it would come in handy (and chances are, you will), you are going to want to know about it.

That aside, although TestDisk recovered my partition and allowed me to get back all of my data, I found out later on that half of my music collection had new-found skips, which was a result of my screw-up. Bad news, since it meant I had to re-rip my entire collection, which is something else I relayed through our news section. It took exactly one month, but I managed to re-rip my entire music collection to FLAC, and the time came to actually back it up this time.

So I ran out (sat in my comfortable chair and ordered it online) to pick up a Seagate Barracuda 7200.11 1TB drive, which was to serve the purpose of off-loading some of the data on my NAS box and also my main storage drive. It was also to serve the purpose of added backup… something I should have thought harder about in the past. That all said, the installation was great, and I now keep three exact copies of the music collection, so save for the house burning down, I should be good.

This post isn’t about that exactly, though. Since I was a wee bit bored and felt like messing around with the computer, I decided to check how many files could be found on the entire machine. The last time I remember doing something like this was back when I used Windows XP full-time. Back then, I think the total was around 300,000 files, which was incredible. Picturing that many individual files on a single PC was a little staggering.

To my surprise, though, things are even crazier nowadays, although I’m sure this varies on a case-by-case basis. After scanning my entire machine (90,000 of the files are Techgage backups), the total was 1.2 million… staggering. No matter how you look at it, THAT’S a lot of freakin’ files. So, how many files do you have on your PC? It’s definitely a useless metric, but it’s fun nonetheless. For Windows, you could simply bring up a search and point it to your main system drive, or all drives in your PC, then use ‘*.*’, without quotes, as the search term. Prepare to wait a little while after pushing go.
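If you’d rather not wait on Windows Search, a short script can do the counting instead. This is a minimal sketch using Python’s standard `os.walk`; it counts every entry that isn’t a directory, silently skips folders it isn’t allowed to read, and doesn’t descend into symbolically linked folders, so its total may differ a bit from what Search reports. The script name in the usage line is just an example.

```python
import os
import sys

def count_files(root: str) -> int:
    """Recursively count non-directory entries under `root`."""
    total = 0
    # os.walk ignores errors by default (onerror=None), so unreadable folders
    # are skipped, and it doesn't follow directory symlinks (followlinks=False).
    for _dirpath, _dirnames, filenames in os.walk(root):
        total += len(filenames)
    return total

if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    print(f"{count_files(root):,} files under {root}")
```

Saved as, say, `count_files.py`, you’d run it with `python count_files.py C:\` to scan your system drive, and like the Search approach, expect to wait a little while on a full drive.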

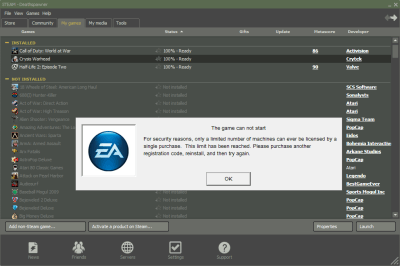

Crysis Warhead Features 5-Time Activation Limit

DRM is a hot topic… there’s no denying that. It’s a subject that I’ve tackled in our news section a lot, and in many ways, I might sound like a broken record. But there’s just something about DRM that inspires me to write something. It’s more than just inspiration, though. It’s more of an anger-filled passion that forces me to say something. I just can’t keep quiet. That’s just how it goes.

Even though I’ve despised DRM for a while, it never affected me (aside from Windows licenses, maybe) until this past September, when S.T.A.L.K.E.R.: Clear Sky came out. I was psyched to begin benchmarking with that particular title, but the fun was short-lived, if you recall. That game, like Spore and a few others, has a five-time install limit.

Clear Sky takes things to the next level, though, because while most games treat the motherboard as the computer, if you so much as swap out a video card with Clear Sky, you can say goodbye to one of your installs. Even today, I’m forced to use a publicly available “crack” that allows me to bypass the security. I have no shame in admitting it, either. If anyone wants proof that I own the game, feel free to check here for it.

So why am I bringing this all up again? Well, it’s because I’ve been hit with DRM in the worst way once again, this time with Crysis Warhead. This issue really took me by surprise, because I had no idea this title had DRM, much less an install limit. Unlike Clear Sky, though, this game doesn’t count a video card swap alone as a new install, which, for most gamers, is a good thing.
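The difference between the two games comes down to which hardware an install gets tied to. The sketch below is a purely hypothetical model: how these games’ DRM actually fingerprints a machine isn’t documented here, and `ActivationCounter`, `fingerprint` and the component names are made up for illustration. It only shows why including the video card in the fingerprint burns an install every time you swap cards on the same test bench.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple

Fingerprint = Tuple[str, ...]

@dataclass
class ActivationCounter:
    """Hypothetical model: every new hardware fingerprint uses up one install."""
    limit: int = 5
    seen: Set[Fingerprint] = field(default_factory=set)

    def activate(self, fp: Fingerprint) -> bool:
        if fp in self.seen:
            return True   # recognized machine: no install consumed
        if len(self.seen) >= self.limit:
            return False  # install limit reached
        self.seen.add(fp)
        return True

def fingerprint(hardware: Dict[str, str], parts: Tuple[str, ...]) -> Fingerprint:
    """Build a fingerprint from only the components a given scheme looks at."""
    return tuple(hardware[p] for p in parts)

rig = {"motherboard": "test-board", "gpu": "card-1"}
board_only = ActivationCounter()     # ignores the video card
board_and_gpu = ActivationCounter()  # video card is part of the fingerprint

for gpu in ("card-1", "card-2", "card-3"):  # swap cards between benchmark runs
    rig["gpu"] = gpu
    board_only.activate(fingerprint(rig, ("motherboard",)))
    board_and_gpu.activate(fingerprint(rig, ("motherboard", "gpu")))

print(len(board_only.seen), len(board_and_gpu.seen))  # 1 3
```

In this toy model, three card swaps cost the motherboard-only scheme a single install, but cost the GPU-aware scheme three of its five.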

Oddly enough, it took months for me to realize this, which might be partly thanks to Norton Ghost, which allows us to restore OS installations with ease. On top of that, since we generally use the exact same motherboard for GPU testing, the game had no complaints. Still, it’s clear to me that DRM continues to be a huge problem, and though I’ve said it before a hundred times, people who pirate the game have not a thing to stress over. They can play the game just fine.

What bothers me most about EA’s DRM, though, can be seen in the above screenshot. It isn’t so much the fact that the game isn’t usable, but their recommendation: “This limit has been reached. Please purchase another registration code, reinstall, and then try again.” Wait, what? EA actually wants you to re-purchase the game if you use up all five of your installs? It doesn’t take a brainiac to realize how asinine this is. It could be just poor wording, as after searching around, I found out that you can give them a call and explain the situation. Then they’ll apparently reset your count to zero, which is very kind…

Bopaboo Offers Place to Sell “Used” Digital Music… Seriously

For as long as music has been sold, so has “used” music. Who hasn’t at least once gone into a music store and looked at the hidden gems in their used section? Doesn’t have to be music… could be video games, movies, anything. There’s a reason flea markets are still so popular. Well, since so many things have gone digital (including all three of those things), the idea of selling them “used” is probably something that not many people have even contemplated.

Until now, maybe. It’s hard to tell whether this is a joke or not, but the online site Bopaboo (currently in a sign-up beta) has that exact idea in mind. Reading their site, you’ll see messages like “Bopaboo is your place to buy and sell digital music” and “start legally selling”. How exactly you “legally” sell digital music is beyond me, and it seems to be something that’s confusing a lot of people. One thing’s for sure: if this service ever goes public, the RIAA is going to be knocking on its door fast.

The problem is twofold. The first problem is honesty. If you sell a track, nothing forces you to delete it from your computer… and this service sure isn’t going to have a way to verify that. Such an issue could result in huge piracy. Someone could either sell lots of the same song, or people could share collections and sell that way. This sounds about as shady as AllofMP3, back in the day.

The other problem is, of course, the music industry. It’s going to have something to say about this, regardless of whether or not it can prove it’s wrong. It’s not illegal to sell a CD you bought at a store, so it’s very difficult to prove that digital music is any different. Realistically speaking, though, you could easily sell an album and never delete it from your own computer, and that’s where things will get sticky. This is going to be interesting to watch. Despite it being an oddball service, I hope it actually does launch, just to see what kind of chaos ensues.

The logic behind it is that it’s legal to sell on a CD you’ve bought – so why can’t you do the same with a music file? In case you haven’t spotted it yet, the difference is that when you sell a CD, you don’t get to keep an identical copy of it for yourself. Which is probably a point that the record labels and their lawyers are making right now.

OCZ Releases Throttle eSATA-based Thumb Drive

When USB-based thumb drives first came out, they were an object of envy. But, initial prices were rather ridiculous. The first one I ever had was a “freebie” from Dell with the purchase of an overpriced notebook (at the time). At 64MB, I couldn’t really do much with it, except store very small files, but hey, it still came in handy at the oddest of times.

Because of the sheer popularity of these things, it’s hard for companies to differentiate themselves from the crowd. The top-end speeds were hit a while ago (due to USB 2.0 limitations), and there are only so many different styles before things become a bit boring. Well, OCZ is trying to change things up, and might do so with its eSATA “Throttle” flash drive, which, as you’ve probably guessed, uses the eSATA port on your desktop or notebook.

Thanks to the connection, Read speeds are rated at 90MB/s, while Write speeds, at 30MB/s, are no better than a typical USB thumb drive’s. Surely, most people would prefer faster Write speeds, but that doesn’t seem to be happening with the flash chips currently available… at least in this price range.

What would interest me, though, is booting an OS from this thing. People are already doing that with their USB drives, but with 3x the Read speed, it should theoretically be almost as fast as a mechanical hard drive. This would also depend on whether or not your motherboard can boot from eSATA, but chances are good that it can. Interesting product nonetheless.
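To put “3x the Read speed” in perspective, here’s how long a purely sequential read would take at the rated 90MB/s versus an assumed 30MB/s for a typical USB 2.0 stick (the post implies roughly that figure but doesn’t state it). The 1GB size is arbitrary. Real OS boots mix in lots of small, random reads, so treat this as a best case, not a boot-time prediction.

```python
def read_seconds(size_mb: float, speed_mb_s: float) -> float:
    """Time to read `size_mb` megabytes sequentially at a sustained `speed_mb_s`."""
    return size_mb / speed_mb_s

SIZE_MB = 1024  # illustrative: 1GB of data
drives = [
    ("OCZ Throttle (eSATA, rated)", 90),
    ("typical USB 2.0 stick (assumed)", 30),
]
for name, speed in drives:
    print(f"{name}: {read_seconds(SIZE_MB, speed):.1f} s")
```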

The OCZ Throttle eSATA drive offers performance and versatility for enthusiasts that demand the best hardware. The integration of eSATA connectivity now extends beyond desktop systems to laptops, offering increased data transfer rates with extreme portability while eliminating extra cords and power cables.

GTA IV PC Release Plagued with Show-Stopping Issues

So, Grand Theft Auto IV was finally released for the PC yesterday, and, not much of a surprise, it’s proven to be one of the buggiest game launches of the year. People are comparing it to the original Crysis, but I don’t recall that game getting quite this much flak. Problems include missing textures, a game that won’t launch, settings that can’t be changed, et cetera.

Even the Steam forums, an unofficial spot for chat, have seen their share of heated debate since the launch, resulting in what I’m sure is the most active sub-forum there right now. So, what’s Rockstar’s excuse for the flopped launch? They don’t seem to have one, but they do offer a word of advice for those experiencing performance issues:

“higher settings are provided for future generations of PCs with higher specifications than are currently widely available.”

That seems a bit strange, given the game is essentially a console port, and its graphics are nowhere near as good-looking as, say, Crysis Warhead, Call of Duty: World at War, Fallout 3, or Far Cry 2… games that work just fine at good detail settings on mainstream computers. It seems this is due to a poor coding job, because there’s no way the graphics in this game are that intensive. As we can see with games like STALKER: Clear Sky, it’s not only “console ports” that can suffer this issue. That game also offers horrible performance even on the highest-end machines (especially using DX 10 lighting).

Rockstar is actively working on rectifying the issues here, though, and it really shouldn’t be that long before a patch is released. If you own the game and really want to enjoy it, it might actually be a good idea to wait for a fix so that you can avoid frustration.

Problems range from dire performance issues to numerous error codes to a lack of SLI support, with scores of unhappy users flooding forums with their “broken things” laundry lists and Steam even going so far as to start offering refunds for unhappy downloaders. The main bone of contention seems to be with the game’s graphics settings. Even powerful PCs are being forced to run the game at low settings (and even then, there are numerous texture errors).

Windows Usage Dips Below 90% for First Time

Microsoft’s Windows might still be the dominant operating system on the market, but as more and more people learn about alternative OSes, its share is slowly decreasing, and the decline seems to be picking up pace. According to recent reports from Net Applications Inc., a service that monitors select major websites, Windows saw a large 0.84 percentage-point drop in usage compared to the previous month, the largest drop seen in the past two years.

That decrease puts Windows just below 90% usage, which, while still clearly representing Microsoft’s dominance, is a rather significant change. Apple’s OS X has been hogging most of the rest, with a recent 0.66-point jump to sit comfortably at 8.9%. Linux, in third place, settles in at 0.83% usage.
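One thing worth keeping straight with market-share figures: a move of 0.84 is in percentage points, not 0.84% of Windows’ share. Here’s a small sketch converting a point change into a relative change. The exact current Windows figure isn’t given above, so 89.9 is only a stand-in for “just below 90%”. The same arithmetic shows OS X’s 0.66-point gain, from roughly 8.24% to 8.9%, is about an 8% relative jump.

```python
def relative_change_pct(current_share: float, point_change: float) -> float:
    """Relative change (%) implied by a move of `point_change` percentage points.

    The previous share is current_share - point_change.
    """
    previous = current_share - point_change
    return 100 * point_change / previous

# 89.9 is a stand-in for "just below 90%"; 8.9 is OS X's reported share.
print(f"Windows: {relative_change_pct(89.9, -0.84):+.2f}%")
print(f"OS X: {relative_change_pct(8.9, 0.66):+.2f}%")
```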

Breaking Windows down by version, Vista claims 20.45%, while XP continues to slowly drop but is still the most commonly used version, at 66.31%. Windows 2000, surprisingly, still has a decent share, at 1.56%. For even more information, you can go straight to the Net Applications site to view and fine-tune the information you’re looking for.

Vince Vizzaccarro, Net Applications’ executive vice president of marketing, attributed Windows’ slip to some of the same factors he credited with pushing down the market share of Microsoft’s Internet Explorer browser. “The more home users who are online, using Macs and Firefox and Safari, the more those shares go up,” he said. November was notable for a higher-than-average number of weekend days, as well as the Thanksgiving holiday in the U.S., he said.

AMD’s Processor Roadmap Revealed

The wait for Phenom II might be a little rough, but we’re now closer than ever to launch, and thanks to AMD’s updated roadmaps, we know just how close. According to the roadmap, which covers the entire first half of 2009, AMD will be releasing a total of fourteen models, starting with two Quad-Cores next month. Follow-up chips will arrive the month after, which will also introduce tri-core models. Dual-Cores will be released at the end of this cycle, in June.

Along with the new releases will, of course, come an updated numbering scheme, which turns out to be very similar to how Intel currently handles its Core i7 models. The first two CPUs next month, for example, will be the Phenom II X4 940 and 920… the exact same numbers Intel uses for its own current Core i7 offerings. These will be clocked at 3GHz and 2.8GHz, respectively, and include 8MB of total cache.

While the first releases will use the AM2+ socket, follow-up releases in February will introduce the first AM3 chips. Among them, the X4 910 2.6GHz (8MB Cache), X4 810 2.6GHz (6MB Cache) and X4 805 2.5GHz (6MB Cache) are the three Quad-Core offerings, while the X3 720 2.8GHz (7.5MB Cache) and X3 710 2.6GHz (7.5MB Cache) will be the first two tri-cores with the Phenom II moniker.

Moving past that, April will bring a new model, the X4 945, which has specs identical to the X4 940 but is designed for the AM3 platform. April will also mark the return of the Athlon branding. The initial models will be available in both Quad-Core and Tri-Core varieties, but are more budget-oriented. Athlon Dual-Cores will come in June, as already mentioned.

Pricing isn’t yet known, but we can assume these chips will be quite competitive with Intel’s Core 2 offerings. As we found out the other day, the server equivalents don’t manage to dominate Intel’s Xeons, but AMD has made large improvements to its architecture, and that alone is reason to be excited. It’s going to be hard to wait to see how these CPUs perform in regular desktop scenarios… information that’s been rather scarce.

The launch date for the 45nm AM2+ quad-core Deneb is now reported to be January 8; the CPU was originally expected in mid-December. When February 2009 rolls around, AMD will unveil six socket AM3 45nm processors including the Phenom II X4 925 with 6MB of L3 cache and a Phenom II X4 910 with the same cache. A pair of 4MB L3 cache processors, the Phenom II X4 810 and Phenom II X3 710 will debut the same month. Also tipping up in February will be a pair of 6MB L3 cache CPUs including the Phenom X3 720 and the Phenom II X3 710.

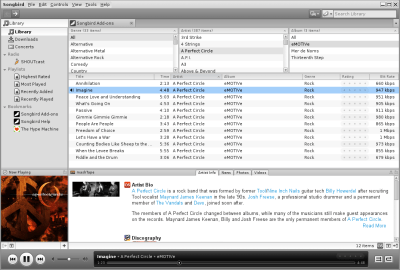

Open-Source Songbird Music Player Hits Version 1.0

The up-and-coming open-source music player “Songbird” has just hit a major milestone: Version 1.0. “Up-and-coming” might be a poor choice of words, though, as the developers have stated that the user base is now above 160,000, which is an incredible show of support for an application that didn’t release a “stable” version until now.

Songbird, unlike so many other music players, is unique in various ways. The first major feature is the fact that it’s open-source, so anyone can download and alter the code as they wish (and hopefully contribute to the project). In addition, it’s actually a combination music player / web browser, and is built on top of Mozilla’s XULRunner platform. This means that it has a solid base and that extensions (plugins) are plentiful.

At first glance, Songbird looks similar to iTunes, and overall, I suppose that’s a good thing. It’s an extremely clean design, one that’s neither busy nor difficult to navigate. Plus, it also happens to look pretty much the same regardless of what platform you’re using it on (Windows/Linux/OS X). Other key features include support for many formats (MP3, FLAC, etc.), iPod support (including FairPlay support), built-in RSS capabilities, support for Last.fm and SHOUTcast, and more.

I installed Songbird on Linux, and it couldn’t have been easier. It includes all the required dependencies, so it was just a matter of extracting the archive and running the executable. It didn’t take long to realize this might not be for me, but my reasons are likely to differ from most people’s. Being a long-time Amarok user, I’ve become very accustomed to how things work there, and Songbird is fairly different.

One thing I can say, though, is that the application is fast. It took about two minutes to import my collection of 7,000 FLAC files, which isn’t bad at all. General navigation was also speedy. I won’t get into the main problems I had with the program (not problems per se, just functionality I want) since I don’t want to turn this into a novel, but a user base of 160,000 can’t be wrong. If you are looking for a new media player, this is definitely one that should top your list.

We set out to build an open, customizable music player. Today, we’re launching with dozens of integrated services, hundreds of add-ons, and a growing developer community. We’ll be the first to admit that there’s plenty left to do. And, while we’re not ready for everyone, 160k users a month are expressing their vote for an alternative music player.

Apple Recommends Using AntiVirus Under OS X

One thing that Apple users have long been able to brag about is the overall security available on the Mac. For the most part, though, it’s not so much that it’s secure, it’s that OS X has never been the main target of virus and malware writers, or the target of various other exploits. Given the continuing market share growth, though, that’s changing, and changing fast.

According to Apple themselves, users should consider using an AntiVirus, which is quite a statement, given their ambitious advertising campaigns that portray OS X as being impenetrable. The three recommendations given on their support page are McAfee VirusScan for Mac, Symantec Norton AntiVirus 11 for Macintosh and Intego Virus Barrier X5.

Being mostly a Linux user, I also feel like I don’t have to worry that much about viruses, but the truth is, I feel the same way whenever I use a Windows machine, too. As long as you are careful and don’t needlessly download everything you see, you absolutely don’t need an AntiVirus. I ran Windows full-time for two years without using one, before moving to Linux, and I didn’t encounter a single virus. Just play it safe, that’s the key.

So what caused Apple to change its tune? One major factor appears to be the rise in non-OS attacks. While Apple’s base OS is relatively secure, many of the programs that run on it, both Apple’s own and third-party ones, have numerous vulnerabilities, among them Flash and Apple’s Safari web browser. Dave Marcus, director of security research and communications at McAfee, states, “Apple is realizing that malware these days is targeting data, and valuable data exists just as much on an OS platform that is a Mac as it does on an OS platform that is Windows.”

Adobe’s 64-bit Flash Plugin Worth the Upgrade

Adobe surprised the Linux world a few weeks ago with the release of its alpha 64-bit Flash plugin, as we covered here, and I said I’d follow up with my thoughts, so I’ll do so now. It took me a week after the release to get around to upgrading, and I can honestly say I regret not doing so right away, because the difference from the previous version is huge.

Although Adobe has been good lately with keeping Linux users current, we (like Windows and Apple users) were stuck with using a 32-bit plugin in a 64-bit browser. The difference in Linux is that it simply wasn’t that stable. Things improved a lot when Flash 10 was released, but the situation was still far from ideal, with the Flash player actually crashing during browser use, requiring a constant reload of your tabs, or a restart of the browser entirely.

Well, I can say that this “alpha” is far superior to the current stable version. Since installing it, Flash hasn’t only been more stable, but features that used to only half-work now work properly, too. The best example of “fixed” functionality would be at ASUS’ website. There, if you hover over the top menu (Products, About ASUS, etc.), it will bring up a drop-down menu. Before, this menu would hide behind the Flash animation up top (this is not the only website to do this; it was a universal problem), but now it works without issue, just like it does on Windows and OS X.

I can’t assume that my experiences are going to be the norm, but if it’s installed correctly (I used Gentoo’s Portage to handle the installation here), I’m confident there will be some impressed people out there. Since upgrading, Flash hasn’t crashed on me while surfing… something that happened multiple times a day before. Bear in mind that this is still alpha software, and you might experience some oddities that I didn’t. Worth the try if you are feeling brave, though.


This public prerelease is an opportunity for developers and consumers to test and provide early feedback to Adobe on new features, enhancements, and compatibility with previously authored content. Once you’ve installed the Flash Player 10 prerelease, you can view interactive demos. You can also help make Flash Player better by visiting all of your favorite sites, making sure they work the same or better than with the current player.

Half-Life Recreation “Black Mesa” Due Next Year

Less than two weeks ago, I posted about the tenth anniversary of the original Half-Life, and recalled just how incredible a title it was. If you were a PC gamer back in 1998, you knew what Half-Life was, and chances are, you completed the entire game. There might even be a chance that you loved the game, like so many others. Well, if you are one of those, prepare to get psyched.

“Black Mesa” is a mod like few others, as it’s not something simply layered on top of another game, but rather, it’s an entire game, period. What makes it special is that it’s a total recreation of the original game, and if you are at all familiar with the original, you’re going to see many things that you recognize. The official site is linked below, but if you want to see the trailer right away, you can grab an embedded version over at GameTrailers or download a 720p version from FileFront. I recommend the latter, because you can see all of the intricate details.

This mod isn’t a simple recreation of the original, but rather a completely new game in its own right. Everything is built from the ground up and utilizes the robust Source engine. Even the levels themselves are upgraded where it makes sense. The result is hard to explain… you really have to check out the trailer and also the media page. It’s coming in 2009, and it looks to be a “must play”. The trailer is the proof.

Our programmers have been hard at work overhauling and expanding the AI, and lots of our NPCs have been brought to life by our talented voice actors. Levels and chapters continue to be worked on and fine tuned, with large sections strung together and playable. We’ve also begun tackling the final content of the game, some of the most creative and technically challenging stuff we’ve had to do yet.

AMD’s Shanghai “Phenom II” Server CPUs Put to the Test

As we found out two weeks ago, AMD is doing a great job of showing off what its Phenom II processors are going to be capable of. The company recently revealed a 4.00GHz overclock on air cooling, and if you’ve been paying attention at all, it’s easy to see why that’s an impressive improvement over its current-generation offerings.

One important bit of information we’ve been lacking, though, is general performance figures. We knew that Phenom II was going to be faster than the original (sequels are always better than the originals, right?!), but the big question was how it would compare to Intel’s Core 2 line-up, or even Core i7.

Well, our friends at the Tech Report have taken a look at the server version of Phenom II, called Shanghai, and have reached the conclusions we were expecting. AMD put great effort into increasing power efficiency, and these new CPUs are indeed faster, but they still fall behind Core i7 performance.

AMD isn’t at a total loss, though… far from it. Its new CPUs excel in certain tests, and where they fall behind the most is in desktop-type applications, not server applications. So hopefully further improvements will be made to the desktop version of Phenom II before release, and if not, we’ll still be left with what seems to be a great improvement over the original.

In many cases, Shanghai at 2.7GHz was slightly behind the Xeon L5430 at 2.66GHz. The Opteron does best when it’s able to take advantage of its superior system architecture and native quad-core design, and it suffers most by comparison in applications that are more purely compute-bound, where the Xeons generally have both the IPC and clock frequency edge.

Microsoft’s “Warp 10” Will Allow CPU-Accelerated DirectX 10

Here’s one I didn’t quite see coming. Microsoft is working on allowing Direct3D 10 and 10.1 to run on the processor, rather than requiring integrated or discrete graphics. The idea is to use the CPU to push out enough graphics performance for basic operation of Windows, in addition to light gaming, and I do mean light.

What that means for Microsoft is a potential end to the “Vista Capable” debacle. If Aero, or whatever the Windows 7 equivalent is called, could run on the CPU, then the problem simply vanishes. According to Microsoft, this “WARP 10” system can run on a CPU clocked as low as 800MHz, and since all of the rendering is done on the processor, the faster the CPU, the better the graphics performance.

According to the article, this technology proved to be even faster than Intel’s current integrated graphics, but the results are a little hard to judge. The test machine was a Core i7 system with eight logical cores (four physical cores with Hyper-Threading), which is a lot of power. If WARP 10 took full advantage of the spare CPU cycles and still only achieved a 2FPS increase over Intel’s integrated solution, then I don’t think there’s a reason to get excited right now.

The most important thing would be to gain enough graphics power to enable the special OS features. As long as that happens, then we can honestly say Microsoft made a smart development move.


Of course, software rendering on a single desktop CPU isn’t going to be able to compete with decent dedicated 3D graphics cards when it comes to high-end games, but Microsoft has released some interesting benchmarks that show the system to be quicker than Intel’s current integrated DirectX 10 graphics.

Micron Expects 1GB/s SSDs Within the Year

It’s become common knowledge that upgrading your PC to “top-of-the-line” status right now will mean little six months from now. Faster components come out all the time, from CPUs to GPUs and even storage. Storage is one area where things are moving especially fast, though, and SSD technology is the proof. We are constantly being bombarded with new releases that are not only higher in capacity, but faster and less expensive.

The fastest “consumer” SSD on the market right now (to my knowledge) is Intel’s X25-M, which has a Read speed of 250MB/s and a Write speed of 70MB/s. The company’s own enterprise SSD, the X25-E, increases the Write to a staggering 170MB/s, which is all fine and good, but Micron believes that within the year we’ll be seeing SSDs capable of up to 1GB/s, four times what Intel’s current SSDs can Read. It’s unknown whether that figure is just for Read performance or for both, but it’s likely to be the former.

Tests that Micron itself has been showing off have reached the 200,000 IOPS range, which is truly incredible in every regard (Intel’s X25-E is rated at 35,000). Using blocks ranging between 2KB and 2MB, IOPS performance was still impressive, at ~160,000 IOPS. The problem is, if we are going to be seeing performance like this within the next year, it’s going to make purchasing an SSD today a difficult decision. It will be nice once things settle down, but it’s definitely fun to see the performance of these drives pushed to incredible heights. The future of storage is looking very good.
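For a rough sense of scale, the small Python sketch below turns the quoted figures into simple “times faster” ratios. It treats 1GB/s as 1,000MB/s (decimal units) and compares against the X25-M’s Read speed, since that is the likelier basis for Micron’s figure; the constant names and the `speedup` helper are just illustrative.

```python
# Figures quoted in the text. Assumption: 1GB/s is treated as 1,000MB/s (decimal units).
X25M_READ_MBPS = 250        # Intel X25-M sequential Read, MB/s
MICRON_TARGET_MBPS = 1000   # Micron's expected throughput, MB/s
X25E_IOPS = 35_000          # Intel X25-E rating
MICRON_DEMO_IOPS = 200_000  # Micron's demo result

def speedup(new, old):
    """Return how many times larger `new` is than `old`, rounded to two decimals."""
    return round(new / old, 2)

print(speedup(MICRON_TARGET_MBPS, X25M_READ_MBPS))  # 4.0
print(speedup(MICRON_DEMO_IOPS, X25E_IOPS))         # 5.71
```

That confirms the “four times” throughput claim and puts Micron’s demo at a little under six times the X25-E’s IOPS rating.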

Klein added that Micron’s SSD uses “multiple channels” and was built interleaving 64 NAND chips to achieve its high throughput. The SSD is also based on several technology advances announced by Micron this year, including its 34 nanometer NAND chip architecture announced in May and the RealSSD P200 series drives announced in August.

Symantec Releases Norton AntiVirus “Gaming Edition”

One complaint I hear often with regard to anti-virus applications is that they can suck the fun right out of your gaming. The problem could either be simple, such as a pop-up that boots you to your desktop, or a more complicated one where portions of your online game won’t function at all (e.g., updaters). Sadly, the most common answer to someone’s predicament is usually “Just turn the AV off,” which is clearly the wrong one.

Symantec seems to recognize this and has released a “Gaming Edition” of its anti-virus software. Its “Gamer Mode” essentially allows you to halt almost all activity during gameplay, except virus detection. This means that you’ll receive no pop-ups during gameplay, nor will the AV even download updates. All it will do is continue to monitor the system in case a virus happens to make it onto your system during use.

That basically sums up what the “Gaming Edition” is all about. The product page also has a few bits of information that might be of interest to some. Symantec touts that during regular use, the AV will use an average of 5.38MB of RAM, and that its install size is 49.7MB. That’s impressive, especially since Norton tends to be known as one of the more “bloated” AVs out there. I’m not much of a Windows user, and I don’t run an AV even when I do use it, but this new version has me tempted.

Norton AntiVirus 2009 Gaming Edition is the fastest virus protection you can get. It stops spyware, worms, bots, and other threats cold—without slowing down your PC. When you’re gaming, your protection should get out of the way. Norton AntiVirus 2009 Gaming Edition does exactly that.

Current Core i7 Processor Pricing

It’s been two full weeks since Intel’s Core i7 processors were launched, so I thought I’d take another look at current pricing to see where things stand. At launch, there were only two stores that had the CPUs and other required components in stock, but that has changed for the better. The motherboard and memory selection is great, so regardless of whether you want to upgrade as cheaply as possible, or go all out, there is plenty of choice to be had.

The situation regarding CPU coolers is a little ridiculous, though, as both NewEgg and NCIX seem to stock only one particular model each. I’ve searched other e-tailers as well, and not a single one had any in stock; if they did, they’re making them far too difficult to find. Given that the embargo on these processors was lifted close to a month ago, there’s really no excuse not to have a better selection by now.

That rant aside, using our pricing engine, I found a flurry of e-tailers that are now selling the CPUs. I’ve ranked them from lowest-to-highest price to keep things simple.

- Intel Core i7 920 (2.66GHz) – Mwave ($294.00)

- Intel Core i7 920 (2.66GHz) – ZipZoomFly ($294.90)

- Intel Core i7 920 (2.66GHz) – NewEgg ($294.99)

- Intel Core i7 920 (2.66GHz) – Directron ($299.99)

- Intel Core i7 920 (2.66GHz) – NCIX ($309.09) ($391.04CAN)

- Intel Core i7 920 (2.66GHz) – Tiger Direct ($309.99)

- Intel Core i7 920 (2.66GHz) – NowDirect ($312.88)

- Intel Core i7 940 (2.93GHz) – ZipZoomFly ($567.50)

- Intel Core i7 940 (2.93GHz) – Mwave ($569.00)

- Intel Core i7 940 (2.93GHz) – NewEgg ($569.99)

- Intel Core i7 940 (2.93GHz) – NowDirect ($609.29)

- Intel Core i7 940 (2.93GHz) – Tiger Direct ($609.99)

- Intel Core i7 940 (2.93GHz) – NCIX ($686.04) ($771.66CAN)

- Intel Core i7 Extreme 965 (3.20GHz) – ZipZoomFly ($1,028.80)

- Intel Core i7 Extreme 965 (3.20GHz) – NewEgg ($1,029.99)

- Intel Core i7 Extreme 965 (3.20GHz) – Mwave ($1,039.00)

- Intel Core i7 Extreme 965 (3.20GHz) – Tiger Direct ($1,079.99)

- Intel Core i7 Extreme 965 (3.20GHz) – NowDirect ($1,086.85)

- Intel Core i7 Extreme 965 (3.20GHz) – Directron ($1,134.99)

- Intel Core i7 Extreme 965 (3.20GHz) – NCIX ($1,217.09) ($1,391.39CAN)

For the i7 920, Mwave, ZZF and NewEgg are all good choices, as their prices are within a dollar of each other. For the i7 940, things are still tight, so it’s hard to go wrong. The i7 Extreme 965 is another story, though, with one e-tailer selling above the $1,200 mark. That’s foolish when the same CPU can be had for $1,029 at ZZF or $1,030 at NewEgg. It definitely pays to shop around… something that’s tough if you’re a Canadian. The prices at NCIX seem to be the cheapest in all of Canada, and they are by no means low when compared to the US equivalents.
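To put numbers on that, here’s a small Python sketch that takes the US-dollar prices from the list above (using the converted USD figure for NCIX) and reports the cheapest store and the gap between the most and least expensive listing for each chip. The dictionary layout and the `summarize` helper are just illustrative, and the prices are a snapshot that will certainly change.

```python
# US-dollar prices from the list above (NCIX uses its converted USD figure).
prices = {
    "Core i7 920": {"Mwave": 294.00, "ZipZoomFly": 294.90, "NewEgg": 294.99,
                    "Directron": 299.99, "NCIX": 309.09, "Tiger Direct": 309.99,
                    "NowDirect": 312.88},
    "Core i7 940": {"ZipZoomFly": 567.50, "Mwave": 569.00, "NewEgg": 569.99,
                    "NowDirect": 609.29, "Tiger Direct": 609.99, "NCIX": 686.04},
    "Core i7 Extreme 965": {"ZipZoomFly": 1028.80, "NewEgg": 1029.99,
                            "Mwave": 1039.00, "Tiger Direct": 1079.99,
                            "NowDirect": 1086.85, "Directron": 1134.99,
                            "NCIX": 1217.09},
}

def summarize(store_prices):
    """Return (cheapest store, its price, spread between highest and lowest price)."""
    store, low = min(store_prices.items(), key=lambda kv: kv[1])
    high = max(store_prices.values())
    # Round the spread to cents to hide floating-point noise.
    return store, low, round(high - low, 2)

for model, store_prices in prices.items():
    store, low, spread = summarize(store_prices)
    print(f"{model}: cheapest at {store} (${low:,.2f}), spread ${spread:,.2f}")
```

For the Extreme 965, the spread between ZZF and the priciest listing works out to $188.29, which is the whole argument for shopping around in one number.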

I’ve only tackled CPUs here, but motherboards are an area where it will pay off even more to shop around. Once you have a board picked out, being lazy will get you nowhere. Shop around! You might only save $20, but that’s $20 in your pocket, not someone else’s.