Tech News

Notebooks Outsell Desktops for First Time

There have been a few notable people in the industry over the past few years who suggested that mobile computers would soon outsell desktops, but most of these “guesses” hovered around 2010. Thanks to the massive surge in netbook sales, though, that milestone has arrived much, much sooner.

According to industry research firm iSuppli, notebook shipments in Q3 of this year rose a staggering 40% compared to the same quarter last year, reaching 38.6 million units. Over the same period, desktop shipments declined 1.3% to 38.5 million units. With a gap of just 100,000 units, calling the two head-to-head is putting it mildly. It will be interesting to see the Q4 results in the coming months, since we may see a more noticeable gap.
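Those percentages also hint at how much things changed in a single year. Here’s a quick back-of-the-envelope sketch that backs out the year-ago figures, assuming both percentages are year-over-year changes (the desktop decline isn’t explicitly labelled that way) and using only the numbers quoted above:

```python
def prior_value(current: float, pct_change: float) -> float:
    """Back out last year's figure from this year's value and its % change."""
    return current / (1 + pct_change / 100)

# Q3 shipments quoted above, in millions of units
notebooks_q3 = 38.6
desktops_q3 = 38.5

notebooks_prior = prior_value(notebooks_q3, 40.0)  # notebooks grew 40%
desktops_prior = prior_value(desktops_q3, -1.3)    # desktops declined 1.3%

print(f"Notebooks a year earlier: {notebooks_prior:.1f}M")  # 27.6M
print(f"Desktops a year earlier:  {desktops_prior:.1f}M")   # 39.0M
print(f"Notebook lead this quarter: {notebooks_q3 - desktops_q3:.1f}M")  # 0.1M
```

In other words, if those assumptions hold, desktops led by roughly 11 million units a year earlier.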

Who were the big winners? Well, Hewlett-Packard once again cleaned house, as it was responsible for 14.9 million units, while Dell came in second with ~11 million sold. Acer came in third, thanks to massive growth throughout the year. It shipped almost 3 million more units than in the preceding quarter, and it largely has its netbook lineup to thank.

“The big news from iSuppli’s market share data for the third quarter was undoubtedly the performance of Taiwan’s Acer Inc.,” Wilkins said. “On a sequential basis, the company grew its unit shipment market share by 45 percent, and by 79 percent on a year-over-year basis. Acer shipped almost 3 million more notebooks in the third quarter than it did in the preceding quarter, with the majority of those 3 million being the company’s netbook products.”

Intel Launches Five 45nm Mobile Processors

I’m not quite sure whether new launches were expected, but Intel has updated its price list to include five new Penryn-based 45nm mobile processors, including a brand-new entry in its Quad-Core family, the Q9000. This 2.0GHz offering has half the L2 cache of its bigger siblings, at 6MB, but the front-side bus remains the same, at 1066MHz. The Q9000 will sell for $348/1,000.

It’s a little difficult to sound enthused about a 2.0GHz chip, but if you are a mobile warrior who needs multi-threaded performance more than raw single-threaded speed, then the CPU should prove to be a worthy choice for the money. Consider that the next step up, the 2.26GHz Q9100, sells for $851/1,000… a rather significant difference.
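To put “a rather significant difference” into numbers, here’s a small sketch comparing the two quad-cores using only the clocks and 1,000-unit prices above. It deliberately ignores the Q9100’s larger L2 cache, so treat it as a rough value comparison rather than a performance one:

```python
# List prices ($ per chip in 1,000-unit quantities) and clocks quoted above
q9000 = {"clock_ghz": 2.00, "price_usd": 348}
q9100 = {"clock_ghz": 2.26, "price_usd": 851}

clock_gain = q9100["clock_ghz"] / q9000["clock_ghz"] - 1  # relative clock increase
price_gain = q9100["price_usd"] / q9000["price_usd"] - 1  # relative price increase
extra_mhz = round((q9100["clock_ghz"] - q9000["clock_ghz"]) * 1000)
extra_usd = q9100["price_usd"] - q9000["price_usd"]

print(f"Q9100 clock advantage: {clock_gain:.0%}")              # 13%
print(f"Q9100 price premium:   {price_gain:.0%}")              # 145%
print(f"Cost per extra MHz:    ${extra_usd / extra_mhz:.2f}")  # $1.93
```

A 13% clock bump for roughly two and a half times the price is why the Q9000 looks like the smarter buy for anyone who mostly needs four cores.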

On the Dual-Core side of things, the new models include the new highest-end T9800, a 6MB L2 Cache / 2.93GHz offering that simply screams. That’s a lot of power for a Dual-Core, but at $530, it’s definitely not cheap. The other models include the 2.66GHz P9600, the 2.66GHz T9550 (slightly higher power than the P9600), and also the 2.53GHz P8700, which becomes the lowest-end model to include 6MB of L2 Cache.

Since even Intel doesn’t generally know, we’re unsure when these processors will start popping up in notebooks from various system builders, but it shouldn’t be too long. It is important, however, to make sure you get a good deal on that new notebook by comparing prices across the available CPU options. In a brief look, I found some builders who still charge a huge premium for older mobile Dual-Cores, even though the newer ones cost less and are more powerful. It pays to shop around and do some research, that’s for sure.

First Blu-spec Audio CDs Hit the Market

Early last month, Sony Music Japan sent out a press release touting the “Blu-spec” CD specification, which isn’t a new format in itself, but rather a new method for mastering CDs. Traditional methods use infrared lasers, which, as has become common knowledge, cannot produce pits on CDs as cleanly as a blue laser, the type of laser Sony’s Blu-ray discs rely on.

Given the “Blu” name, you’d imagine that you’d need a Blu-ray player to listen to the new discs, but that’s not the case at all. As mentioned, it’s a new mastering method, not a new disc format, so the discs will play in any drive that supports typical audio CDs. In the press release (300KB PDF), Sony shows how much cleaner the pits on the disc are, and it’s hard to disagree… they look much better.

The big question, though, is whether this new technology makes any real-world difference at all, even to audiophiles. It shouldn’t take too long to find out, as this appears to be the week of the initial releases, with almost all of them available on Amazon.

Sadly, because most of the launch discs are imports, they’re at least twice as expensive as a typical audio disc, so you’d have to enjoy one of the offered CDs an awful lot to want to pay such a premium. Still, I’m curious to see opinions from audiophiles who manage to score a few of these, because if Blu-spec really does make a difference, then we might see the process become more commonplace.

Linux Kernel 2.6.28 Brings ext4, GEM, UWB Support and More

Although most people thought of last Thursday as being Christmas, it seems the Linux Kernel developers thought of it as being the perfect day for a fresh kernel launch: 2.6.28. Codenamed “Erotic Pickled Herring”, the updated kernel brings a lot of changes and updates that we were expecting, including a stable ext4, improved GPU memory manager, disk shock protection, ultra-wide band support and more.

One of the biggest additions is the ext4 file system, and although it’s been available inside the kernel for a little while, this is the first time it’s been marked stable. Because ext4 is backwards compatible with ext3, you don’t need to have the latter compiled, which is nice. The downside, though, if there is one, is that ext2 has been removed, and from my experience, that is not backwards compatible with ext4. So if you have an old partition using it, or a boot partition, the time to upgrade might be now.
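If you want to check whether your running kernel has ext4 registered before migrating anything, the list lives in /proc/filesystems. Below is a minimal sketch, assuming a Linux system with /proc mounted; note that a filesystem built as a module only shows up once the module is loaded, and kernels before 2.6.28 listed the development version as “ext4dev” rather than “ext4”:

```python
from pathlib import Path

def supported_filesystems(text: str) -> set[str]:
    """Parse the contents of /proc/filesystems into a set of filesystem names.

    Each line is either "nodev\t<name>" (virtual filesystems) or "\t<name>"
    (block-device filesystems); the name is always the last field.
    """
    return {line.split()[-1] for line in text.splitlines() if line.strip()}

def kernel_supports(fs: str, proc_path: str = "/proc/filesystems") -> bool:
    """Return True if `fs` is currently registered with the running kernel."""
    path = Path(proc_path)
    if not path.exists():  # not Linux, or /proc isn't mounted
        return False
    return fs in supported_filesystems(path.read_text())

if __name__ == "__main__":
    for fs in ("ext2", "ext3", "ext4", "ext4dev"):
        print(fs, "registered" if kernel_supports(fs) else "not listed")
```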

Another major inclusion is the “Graphics Execution Manager”, which improves the kernel’s graphics memory-handling efficiency. GEM was introduced and is still developed by Intel, and it currently works only with the i915 driver, but I’d expect to see support for other GPU drivers in the near future as well. “Ultra Wide Band” support also makes an entry here, which serves as a transport layer for various wireless technologies, including Wireless USB and the WiMedia Link Protocol.

Audiophiles might be happy to know that the kernel also adds support for ASUS’ Xonar HDAV 1.3 Deluxe audio card, in addition to a few other audio peripherals. This is of course not all that’s included in the new kernel, so check out the Kernel Newbies link below to find out what’s relevant to you.

There has been a lot of work in the latest years to modernize the Linux graphics stack so that it’s both well designed and also ready to use the full power of modern and future GPUs. In 2.6.28, Linux is adding one of the most important pieces of the stack: A memory manager for the GPU memory, called GEM (“Graphic Execution Manager”). The purpose is to have a central manager for buffer object placement, caching, mapping and synchronization.

NVIDIA Rolling Out 40nm GPUs in 2009

According to leaked documents seen by VR-Zone, NVIDIA is preparing to roll out its first 40nm graphics processors this spring, beginning with the high-end GT212. Launching 40nm so soon would be a rather impressive feat, given that few of NVIDIA’s current GPUs even use 55nm, and none of its GT200-series chips will until the launch of the GTX 285/295 in early January.

The first 40nm chip will be, as mentioned, the GT212. Although the model code is all that’s known about the card, it’s going to be a high-end offering that will likely replace both the performance and high-end GT200 models available now, and also the upcoming launches in January. If the roadmap proves true, then both the GTX 295 and 285 will be short-lived, although at this point, it’s hard to judge whether the revision will be that much faster.

After that, NVIDIA will roll out 40nm to the rest of its line-up in the fall, with the GT214, GT216, GT218 and the integrated IGT209. By late fall, all of NVIDIA’s current offerings should be built on the 40nm node. Also according to the roadmap, we’ll see the first major follow-up to the GT200, the GT300, in Q4 2009, but it’s too early to speculate what it will include aside from the 40nm node.

This transition to 40nm will first take place with their high end GT212 GPU in Q2 followed by the mainstream GT214 and GT216 as well as value GT218 in Q3. GT212 will be replacing the 55nm GT200 so you can expect pretty short lifespan for the upcoming GTX295 and GTX285 cards.

Is the OpenOffice.org Project in Good Health?

That’s a question raised in a semi-recent blog post by OOo developer Michael Meeks, and it’s a good one. While companies like Microsoft can afford a large team of developers dedicated to their product, OpenOffice depends mostly on volunteer work, and lately, the state of that support seems to be in question.

Michael looks at things from a few different perspectives. He aggregated stats from the commit logs to see who was contributing code to the project, who wasn’t, and who seems to be on some sort of hiatus. The findings are pretty interesting, with the vast majority of code being contributed by Sun itself. To make things even worse, the number of external developers has decreased substantially over the past few years.

I’ve complained about OpenOffice in the past, specifically with regard to its design, but I’m now having an easier time seeing exactly why that’s the case. If there’s a lack of developers, then the entire project is going to slow to a crawl. That would be a shame, because it’s nice to have an alternative, especially a free one, to paid office applications such as Microsoft Office. I’m no developer, and I have no recommendations for the project, but hopefully things improve sooner rather than later. This is one project that’s far too valuable to lose.

Differences: most obviously, magnitude and trend: OO.o peaked at around 70 active developers in late 2004 and is trending downwards, the Linux kernel is nearer 300 active developers and trending upwards. Time range – this is drastically reduced for the Linux kernel – down to the sheer volume of changes: eighteen months of Linux’ changes bust calc’s row limit, where OO.o hit only 15k rows thus far.
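Counting “active developers” from commit logs, as Meeks did, boils down to grouping commits by time period and counting distinct authors. The sketch below shows that general idea only; it isn’t Meeks’ actual script, his definition of “active” may differ, and the sample data is entirely made up:

```python
from collections import defaultdict
from datetime import date

def active_developers_by_month(commits):
    """Count distinct committers per calendar month.

    `commits` is an iterable of (author, date) pairs, e.g. parsed from a
    version-control log. Returns {(year, month): number_of_authors}.
    """
    authors = defaultdict(set)
    for author, day in commits:
        authors[(day.year, day.month)].add(author)
    return {month: len(names) for month, names in sorted(authors.items())}

# Hypothetical sample data, for illustration only
sample = [
    ("alice", date(2004, 11, 2)),
    ("bob", date(2004, 11, 15)),
    ("alice", date(2004, 11, 20)),
    ("alice", date(2004, 12, 1)),
]
print(active_developers_by_month(sample))  # {(2004, 11): 2, (2004, 12): 1}
```

Plot those monthly counts over a few years and you get exactly the kind of trend line (rising for the kernel, falling for OOo) that the post describes.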

Extreme Overclockers are a Unique Breed

Given that you’re reading our website, chances are good that you’ve thought about overclocking at some point, or even given it a try yourself. Or perhaps, like many of the posters in our forums, your computer is never not overclocked, even if only by a little bit. There’s just something about knowing that your computer is working faster than intended… even if it doesn’t make a noticeable difference in the real world.

I’m one of the people who doesn’t mind applying a rather simple overclock and being done with it, but there are a select few who thrive on finding the highest possible clock for a particular piece of hardware, whether it be a CPU or graphics card. Tech Radar takes a look at this lifestyle and digs a little deeper into it than most people ever have.

Kenny Clapham, a member of the overclocking team Benchtec, notes that overclocking doesn’t just become a hobby, it can become an obsession. Goals that seem minor to the rest of us can be major ones to professional overclockers, and given how fierce the competition is, the drive to push a component that much harder becomes all the more intense. One great thing, though, is that professional overclocking brings people together from all over the world, and that, to me, is one of the coolest aspects of the “sport”.

Before you can go near a flask of LN2, a lot of time is spent raising one value – such as the FSB – under water cooling and then resetting it and moving on to the next. When the big day for testing comes, the sub-zero materials are pulled out and the known stable points are used as a starting position for record attempts.
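The routine described above (push one value until it breaks, note the last good setting, reset, move on) is essentially a one-variable-at-a-time search. Here it is as a rough sketch. The `is_stable` check is a hypothetical stand-in for a real stress test, and the start, step and ceiling values are invented for illustration:

```python
from typing import Callable

def find_stable_max(start: int, step: int, limit: int,
                    is_stable: Callable[[int], bool]) -> int:
    """Raise a single setting from `start` in `step` increments until it fails.

    Returns the last value that passed `is_stable`: the known stable point
    that later (e.g. LN2) record attempts start from.
    """
    best = start
    value = start + step
    while value <= limit and is_stable(value):
        best = value
        value += step
    return best

def tune(settings: dict[str, tuple[int, int, int]],
         is_stable: Callable[[str, int], bool]) -> dict[str, int]:
    """Tune each setting on its own. is_stable(name, value) is assumed to test
    that one setting with everything else left at stock."""
    return {
        name: find_stable_max(start, step, limit, lambda v, n=name: is_stable(n, v))
        for name, (start, step, limit) in settings.items()
    }

# Hypothetical stress test: pretend the FSB fails above 450MHz and memory above 1000MHz
ceilings = {"fsb_mhz": 450, "mem_mhz": 1000}
results = tune({"fsb_mhz": (333, 5, 600), "mem_mhz": (800, 25, 1200)},
               lambda name, v: v <= ceilings[name])
print(results)  # {'fsb_mhz': 448, 'mem_mhz': 1000}
```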

How Porn has Changed the Internet

Despite the fact that pornographic material on and off the web is more popular than ever, it’s still one of those things that not too many people talk about. Some people may admit that they purchase such material, while others do their best to hide it in their own house. Then there are those others who shun it like the plague. Whatever your opinion on the subject, though, it seems we have pornography to thank for a lot of the web’s developments. Odd, but true, it seems.

PC World takes a look at twelve ways that porn has changed the web, and some are obvious, while others are less so. Unless you’ve been actively stalking the porn world since the beginning of the Internet, it’s unlikely that you’re aware of most of these. For example, did you know that online payment systems were first released for less-than-reputable websites?

How about streaming content? Live chat? Or broadband? The explanations behind all of these are found in the article, but with the good, also comes the bad. Porn is believed to be partially responsible for the start of mass e-mail spamming, malware and pop-ups… aka: the most frustrating aspects of web-surfing. So the question is, where will porn take us next?

Why would you limit yourself to watching videos of naked people when you could instead chat with them, suggest ingenious activities for them to undertake, test their grasp of Hegel’s metaphysics, and whatever else struck your fancy–all while using the finest in modern electronics? Porn plowed the path for video chat and its boring cousin, Web-based videoconferencing.

Can Apple Customers Still Feel Special?

That question might sound a bit odd at first, but it’s a good one. When things, whether they be products or habits, tend to grow in popularity, they lose their initial lustre. If you’re part of a rather small group of like-minded people, you might have reason to feel special. That’s how Apple fans likely felt even just five years ago, and even more so ten to fifteen years ago. But what about now?

Wired takes a look at “8 signs” that Apple customers are no longer special, and for the most part, its choices are good ones. What would take away the special feeling? Ubiquity is a good one… when something becomes that popular, it can suck the fun right out of it. I’ve had this happen with things I’ve liked, so I know what it’s like. It’s like following an indie band for years, and then they explode and hit the mainstream… it’s hard to feel the same way.

In the case of Apple, the fact that Macs are deliberately shown on-screen in movies and TV shows is one good reason, and the fact that The Simpsons mocks the company doesn’t help either. Then there is the fact that Wal-Mart is going to be selling the iPhone. Oh, and how about the fact that President-elect Obama uses a Mac as his main PC? So while it might be hard to feel special anymore, if there’s one thing Apple fans have to be proud of, it’s that Macs do seem to be taking over the planet.

Windows PC owners always pull the “Macs aren’t compatible with any decent software” card when bashing Apple. But that insult is clearly outdated if Barack Obama was able to win the U.S. presidency with a Mac as his computing weapon (while using iChat to stay in touch with his family, no less). And wait – there’s one more thing: Obama has his own official iPhone application! Can we all “think different” if we’re all using the same trendy gadgets?

Want Scientology Training? Get a Job at Diskeeper!

A sad reality is that discrimination in the job market exists. You may not be hired for a position due to your ethnicity, gender, sexual orientation, religious beliefs or because you wear purple socks with blue pants. It’s wrong, but it happens, even if it’s not so obvious. That’s one problem… another is when a company tries to force religious beliefs on you. Wait… what?

When I first saw a headline at Slashdot that read, “Diskeeper Accused of Scientology Indoctrination“, I had a laugh. I figured it was a joke, because who on earth would make up such a bizarre lawsuit, one involving, of all things, Scientology? Well, two former employees didn’t have to make up anything, and that’s apparent in the court filings. It seems that in order to receive employment at Diskeeper, you must partake in Scientology-based training… training which CEO Craig Jensen credits for the company’s success.

I admit, this is the first time I’ve heard of a problem like this, and common sense tells me that it isn’t allowed, since it seems similar to not hiring someone because of their religion… which is discrimination. The CEO disagrees, though, and believes that “religious instruction in a place of employment is protected by the First Amendment.” I could see that if you worked in a church, but at a company that has nothing to do with religion? This is one strange case, that’s all I know, and hopefully we see the results of this lawsuit sooner rather than later.

In footnote 4 of the motion, Diskeeper claims that it in no way concedes that Hubbard Management and Study Technology are religious, but to anyone familiar with both Scientology and Hubbard’s supposedly secular “technologies,” the two brands are basically indistinguishable, and indeed, the establishment of supposedly secular fronts was intended by Hubbard to be a recruiting tool.

abit to Close its Doors on December 31

Earlier this year, rumors began to float around about the potential demise of abit, or at least its motherboard division, and while a few sites reported the company’s closing as fact, it never happened. Time went on, and the rumor seemed to be put to sleep. According to Cameron over at TweakTown, though, abit’s doors will officially be shut on December 31st.

The article doesn’t mention the source of the information, but it does seem credible. The company’s last great motherboard was the P35-based IP35 PRO. After that, none of its follow-up models seemed to garner much attention, which is likely due to the lack of a push on the marketing side.

Another reason it sounds credible is that when we met with abit in Taiwan at Computex this past summer, the only thing they could really talk about was their FunFab digital photo frames. Our guide didn’t have much to say about the motherboards, except that they were new and used Intel’s latest chipsets. FunFab was the real focus, and it seems that side of the business didn’t take off like the company hoped.

Many people consider abit to be responsible for the overclocking revolution, and it’s rare to hear bad things about the reliability and stability of its motherboards. I haven’t used an abit board in quite a while, so I can’t comment much on that, but the impact the company has had on the motherboard landscape is apparent.

Fast forward to this year and ABIT were left with a single product line to try and keep the company from collapsing – digital picture frames with the ability to print. They didn’t work out as planned, there were rumors of ABIT re-entering the graphics card market once again, but those rumors didn’t eventuate. Computer enthusiasts join me in bidding farewell to the once mighty strong ABIT – they are going to close the company once and for all on December 31st.

NVIDIA Now Offering Mobile Graphics Drivers on their Website

If you’re a notebook user, and especially a notebook gamer, you probably know the frustration of finding updated drivers for your particular model. Some manufacturers include updater programs, but most are finicky, and most manufacturers’ websites are so counter-intuitive that finding updated drivers becomes a chore (I’ve experienced a lot of them). Even worse is when you look for a graphics driver. Good luck!

NVIDIA looks to finally remedy the stress of finding the latest mobility driver by offering them on its own website, right alongside the desktop variants. I have no idea why this took so long, but now that it’s here, it should make our lives a lot easier. NVIDIA touts the latest drivers as unleashing the power needed for the latest games, Adobe CS4, and PhysX and CUDA-based applications.

Currently, all 8 and 9-series mobile GPUs are taken care of, with the 7 and Quadro NVS-series due out early next year. I can’t help but repeat myself… it’s about time. I’ve always been frustrated by the lack of mobile drivers available on the official vendor websites, and now we are at least half-way there. ATI’s been offering mobile drivers for a while, but unless you’re using a notebook with an X1800 or earlier, you’re going to be disappointed.

The first graphics driver release from NVIDIA will extend the NVIDIA CUDA architecture to notebook GPUs, enabling the growing number of consumers moving to a notebook-only lifestyle to immediately experience the wide range of CUDA-based applications, from heart-stopping GPU-accelerated game physics to GPU-accelerated video conversion.

OCZ Releases Faster-than-Life Triple-Channel Blade Series

Yeah, I know I just posted about some OCZ modules earlier this week, but the most recent launch deserves an equal amount of praise, due to its sheer speed and tight timings. If you’ve ever overclocked memory, then you know how important it is to have a good combination of high frequency and low timings. This latest kit has both, and is sure to post huge numbers with benchmarkers.

The “Blade” series is new to OCZ and features a brand-new sleek black heat-spreader. Though it looks rather modest, the first available kit features fast DDR3-2000 speeds and is equipped with 7-8-7-20 timings. I should note that our lead Core i7 benchmarking machine is using close to the same timings for DDR3-1333, so the accomplishment here is great.

The best part is that the modules manage to stick to Intel’s max voltage allowance of 1.65V for the Core i7 platform. Thanks to a CAS latency of 7, the latency ratings on these puppies must be drool-worthy. The best I’ve seen from other manufacturers, if they offer DDR3-2000 at all, is CL 8 and 9, but OCZ seems to be the first to hit the magical 7. Most impressive to me is that this is accomplished with only 1.65V… things sure change when we’re forced to adapt to a much lower threshold!
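Why is CL7 at DDR3-2000 such a big deal? CAS latency is counted in clock cycles, so the same CL figure means less actual delay at a higher frequency. The common rule of thumb converts it to nanoseconds, sketched below. It covers CAS latency only, not total memory latency, and the comparison points are simply the CL 8/9 kits and the DDR3-1333 setup mentioned above:

```python
def first_word_latency_ns(cas: int, data_rate_mts: int) -> float:
    """Convert a CAS latency (in clock cycles) to nanoseconds.

    DDR memory transfers twice per clock, so the memory clock is half the
    data rate and one cycle lasts 2000 / data_rate_mts nanoseconds.
    """
    return cas * 2000 / data_rate_mts

for label, cas, rate in [("DDR3-2000 CL7 (Blade)", 7, 2000),
                         ("DDR3-2000 CL8", 8, 2000),
                         ("DDR3-2000 CL9", 9, 2000),
                         ("DDR3-1333 CL7", 7, 1333)]:
    print(f"{label}: {first_word_latency_ns(cas, rate):.2f} ns")
# DDR3-2000 CL7 (Blade): 7.00 ns
# DDR3-2000 CL8: 8.00 ns
# DDR3-2000 CL9: 9.00 ns
# DDR3-1333 CL7: 10.50 ns
```

So the Blade kit isn’t just faster on bandwidth; at about 7ns it also undercuts the CL7 DDR3-1333 setup by roughly a third on CAS delay.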

The Blade Series Triple Channel Memory kit is the latest maximum-performance RAM designed specifically for the Intel Core i7 processor / Intel X58 Express Chipset. At DDR3-2000, CL 7-8-7, the Blade Series harnesses industry-leading speeds at the low voltage required to safely run Core i7’s triple channel mode. With the ideal combination of all the factors that formulate the ultimate memory solution – density, speed, latency, and an effective new cooling design – the Blade Series is guaranteed to please enthusiasts looking to take the hottest Intel platform to new heights.

NVIDIA’s GTX 295 Looks Promising

As we found out last week, both ATI and NVIDIA currently offer mid-range cards that are pretty equal in performance, but things change when talking about high-end. Since ATI first released its HD 4870 X2 dual-GPU card this past August, it has rightfully held the crown for the fastest card on the planet, which we talked more about in our review. But NVIDIA wants the world to know that it won’t be much longer before it regains that crown, with the help of its GTX 295.

This card follows the general design of the 7950 GX2 and 9800 GX2 in that it’s essentially two graphics cards combined to share the same dual-slot form factor. In most regards, it’s almost like putting a GTX 260 and 280 together: each of its two GPUs gets the GTX 280’s 240 stream processors, but runs at the GTX 260’s 576MHz core clock.

Ryan at PC Perspective took the card for a spin, and for the most part, the findings aren’t that surprising. Generally speaking, like the HD 4870 X2, it excels at higher resolutions (especially 2560×1600), and compared to that card, it’s without question the better performer. Things will come down to pricing, though, and from how things look right now, the GTX 295 might well deserve its price premium. Stay tuned for our full review of the new card upon its release next month.

What is interesting about these specs is the mix between GTX 260 and GTX 280 heritage. The 480 stream processors indicate that the GTX 295 is basically a pair of GTX 280 GPUs (since the GTX 260s have either 192 SPs or 216 SPs depending on your place in time) on a single board while the clock rates are exactly like those reference clocks on the GTX 260 GPU. Also, the 448-bit memory bus and 896MB of memory per core are also indicative of the GTX 260 product.

CoolIT Releases Affordable Liquid-Cooling for CPUs with the Domino

This might be the day to launch new cooling products, because in the previous post, we took a look at Sapphire’s latest GPU solution, while here, we’ll check out CoolIT’s new Domino CPU cooler. CoolIT is a company that immediately became well-known throughout the industry with its first products, because its coolers were robust, performed better than water-cooling setups, and helped overclockers push their CPUs just a little bit harder.

Today, the Alberta-based company unveils their “Domino”, an “Advanced Liquid Cooling” product for the masses, which retails just a bit higher than some of the top-of-the-line air coolers, at $79. You read that right… liquid CPU cooling for $79. You’d expect the product to look entirely lackluster for a price like that, but it’s quite the opposite.

CoolIT has kept a few goals in mind when designing the Domino, including simple installation, advanced configuration, quiet operation, and reliability. Given the price, you wouldn’t expect an LCD display, but there’s one here, which reports the temperature and radiator fan speed. It goes without saying that CoolIT’s own higher-end products would perform better than the Domino, but for what amounts to $20 more than Thermalright’s Ultra-120, it seems like a great deal. We’re currently putting the Domino to the test in our labs, so we’ll let you guys know soon just what it’s made of.

“Domino was designed for the PC Gamer looking to build a high performance rig with maximum value” remarks Geoff Lyon, CEO of CoolIT Systems. “For the same cost as most heatsink/fan combinations, PC Enthusiasts can now have an advanced liquid cooling solution that maximizes CPU performance, minimizes noise and facilitates complete domination in the gaming arena of choice.”

Sapphire’s Atomic HD 4870 X2 Water-Cools both GPU and CPU

As recent announcements have made evident, ATI is in a fantastic position right now. Its products are great performers, well-priced, and look good. But if there’s a lingering problem, it would be that the cards can get hot, and that’s an understatement, since by hot, I mean ~75°C idle for the X2. The good thing is that the cards operate just fine at such hot temps, but for those looking to cool things down a little while still receiving incredible performance, Sapphire has you covered.

Their new Atomic edition HD 4870 X2 card, simply put, comes pre-equipped with a water-cooling loop. This has been done before by other companies, but the result here looks fantastic, and the best part? It cuts down the huge double-slot card to just a single-slot, so it’s a win/win all-around.

But it gets better. The pump also doubles as a CPU water-block, so with this one product, you not only cool the GPU, but the CPU as well. According to initial reviews, the solution is effective overall. With the CPU block being used, the Atomic manages to cool the HD 4870 X2 just as well as a stock card with its fan at 100%. Not bad, given that at 100%, the fan generates more whine than a vineyard.

Once it hits retail, the Atomic will sell for around $700, so it’s not exactly cheap. It’s not a huge premium either, though, given that for the extra $200, you’re getting a water-cooled CPU and GPU, plus an array of other goodies.

Liquid coolant in the SAPPHIRE HD 4870 X2 ATOMIC is circulated through the graphics card cooler and a chassis mounted radiator by a pump assembly which fixes onto the standard system CPU mountings (both AMD and Intel mounts supplied). The graphics cooler, CPU cooler and radiator are connected in a closed loop by high quality flexible yet tough Teflon tubing and attached to each module with interference fit barbed joints that are sealed for life.

OCZ Releases Slim Flex Water-Cooled Modules

In an attempt to help power-users keep their overclocked rigs as cool as possible, OCZ has released a follow-up to their Flex memory line-up with the EX design. The biggest difference between the EX and previous releases is that a much slimmer design is used, so that using four modules at a time should be more than possible, as long as there is relatively good spacing in between each set of DIMM slots on the board.

However, that’s not an issue unless you want to use more than 4GB of RAM, and at this point in time, there seems to be little reason to go for the gusto. But, the option is here for those who want it (and it’s tempting when memory prices are at an all-time low). On the DDR2 side, the two available EX models feature PC2-6400 and PC2-9600 speeds, with very tight 4-4-3-15 timings on the former and 6-6-6-18 on the latter.

For those with DDR3 rigs, the speeds get even wilder, with the top kit hitting PC3-16000 (DDR3-2000) speeds with 8-8-8-20 timings. I’m unsure whether 8GB would be possible at such speeds, but both the PC2-6400 and PC3-12800 kits should work fine in that configuration. Like the original Flex models, the EX modules use 1/8″ tubing to save space. These look like a great option for your robust water-cooling rig, and they don’t look half-bad either.
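If the PC2/PC3 ratings and the DDR2/DDR3 names seem to be used interchangeably, that’s because the module rating is just peak bandwidth in MB/s: the data rate multiplied by the 8 bytes a standard 64-bit DIMM moves per transfer. By that arithmetic, a DDR3-2000 kit works out to PC3-16000. A small sketch of the conversion (the regex only handles the PC2/PC3 naming style used in this post):

```python
import re

BYTES_PER_TRANSFER = 8  # a standard DIMM has a 64-bit (8-byte) data bus

def module_name(pc_rating: str) -> str:
    """Turn a module rating such as 'PC3-12800' into its data rate, 'DDR3-1600'.

    The number in a PC rating is peak bandwidth in MB/s, i.e. the data rate
    (MT/s) multiplied by 8 bytes per transfer.
    """
    match = re.fullmatch(r"PC(\d)-(\d+)", pc_rating)
    if match is None:
        raise ValueError(f"unrecognised rating: {pc_rating}")
    generation, bandwidth = match.groups()
    return f"DDR{generation}-{int(bandwidth) // BYTES_PER_TRANSFER}"

for rating in ("PC2-6400", "PC2-9600", "PC3-12800", "PC3-16000"):
    print(rating, "->", module_name(rating))
# PC2-6400 -> DDR2-800
# PC2-9600 -> DDR2-1200
# PC3-12800 -> DDR3-1600
# PC3-16000 -> DDR3-2000
```

One caveat: marketing names round some figures (DDR2-1066 is sold as PC2-8500, for example), so the integer division is only approximate for those speed grades.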

The OCZ Flex EX heat management solution enables high-frequency memory to operate within an optimal balance of extreme speeds and low latencies without the high temperatures that inhibit or damage the module. Each Flex EX memory module features this breakthrough thermal management technology from OCZ, combining an effective new heat-spreader design with integrated liquid injection system and dedicated channels directly over the module’s ICs in a thinner form factor.

Apple Announces its Last Year at Macworld

In some rather surprising news yesterday, Apple has announced that the upcoming Macworld conference in San Francisco will be its last. Since the conference has always been Apple-focused, the fact that Apple themselves are leaving is going to make things very, very interesting. Some are already confident that with this news, the conference is destined to be no more in a few years.

Macworld has played a vital role in some major Apple announcements in recent years. In 1998, they unveiled their iMac, and we all know how that went. Two years later, they showcased OS X to thousands of drooling fans, while a year later, iTunes was introduced. More recently, it was the 2007 show where the iPhone was first revealed, and last year, Macworld was the place where Apple introduced their ultra-thin MacBook Air.

To say that Macworld has played a huge role in Apple’s unveiling of new products would be an understatement, and the company’s decision to pull out of the show doesn’t inspire much confidence in trade shows as a whole. People have been predicting the death of such shows for a while, and changes like these make it easy to believe.

Apple is reaching more people in more ways than ever before, so like many companies, trade shows have become a very minor part of how Apple reaches its customers. The increasing popularity of Apple’s Retail Stores, which more than 3.5 million people visit every week, and the Apple.com website enable Apple to directly reach more than a hundred million customers around the world in innovative new ways.

XFX to Begin Selling ATI Graphics Cards

It’s been long-rumored, but today it’s official: XFX will begin producing ATI graphics cards. For the most part, this isn’t a surprising move, as carrying both companies’ GPUs is going to increase overall sales, and with ATI impressing everyone lately, sticking with NVIDIA alone seems hard to justify. The new relationship begins now, and the first products will arrive next month.

It’s not currently known whether XFX is going to carry ATI’s lower-end cards from the get-go or stick to the HD 4800 series, which is all that’s mentioned in the press release. I expect we’ll see a nice HD 4870 X2 at launch, perhaps as an XXX or Black Edition model, along with the single-GPU HD 4850 and HD 4870 cards.

The question now is whether XFX will be the only company to start offering GPUs from both vendors. Others, like ASUS and Palit, have been offering both for a while, and it seems to be working out well for them, so unless NVIDIA delivers blowout cards with its GTX 285 and 295, or manages to sweet-talk these vendors, we might see more of this happening.

“In the world of PC gaming, XFX is synonymous with the extreme performance that enthusiasts crave,” said Rick Bergman, senior vice president and general manager, Graphics Products Group, AMD. “Their decision to partner with AMD and launch AMD GPU-based XFX graphics cards, including the ATI Radeon HD 4870 X2, widely regarded as the world’s fastest graphics card by technology enthusiasts around the world, speaks to the level of excellence achieved by the ATI Radeon HD 4000 series.”

Google Chrome Chucks its Beta Label

When Google released its Chrome browser back in September, it took the web world by storm. Within the span of just a few days, we went from first learning about it to being able to test it out for ourselves. After doing so, I was personally very impressed. Google does a lot of things well, and for the most part, building a browser is one of them.

One small problem with Chrome, though, was that it was launched with a “Beta” moniker, and retained it for the first few months. Using the “Beta” tag has become a common occurrence with most of Google’s products, but seeing it in the corner doesn’t instill much faith in those who are wary of the term, or want to deploy software in a business environment. Well, they’ll have nothing to worry about anymore, since Google has deemed Chrome stable enough to finally drop the label.

Google didn’t simply launch a browser and leave it, either: Chrome has been updated regularly since launch, and Google claims it is now both faster and less buggy than it was in September. Whether losing the beta label is what’s needed for wider adoption remains to be seen, but it certainly won’t hurt. Since launch, Chrome has held just over a 3% usage rate on our site (3.66% for Dec 1 – 14), so it’s definitely on its way.

Since we first released Google Chrome, the development team has been hard at work improving the stability and overall performance of the browser. In just 100 days, we have reached more than 10 million active users around the world (on all seven continents, no less) and released 14 updates to the product. We’re excited to announce that with today’s fifteenth release we are taking off the “beta” label!

Windows XP – The OS that Just Won’t Die

Late next month, Windows Vista will turn two years old, at least on the consumer side, and what an interesting two years it’s been. The launch was rough, but things smoothed out over time, and for the most part, Vista is now more stable than ever and a lot less frustrating to use. But for some people, there still isn’t enough reason to make the switch, so Windows XP continues to flourish.

As mentioned in the previous news post, Vista is gaining market share, but XP is still the choice of many, gamers or not. Most of my family prefers XP, and even though I tell them that Vista has improved quite a bit since launch, they still show no interest in switching. That’s the case for a lot of people, but thanks to a recent change by Dell (presumably backed by Microsoft), choosing XP is about to get a lot more difficult.

Dell has offered XP “downgrades” on select machines for a while for a small fee, but that fee is small no longer. It’s been bumped up to a staggering $150 (more than some OEM copies of Vista cost), which is truly ridiculous. Keep in mind that the $150 comes on top of what the Vista license already costs, so you’re essentially paying almost twice for an older OS.

Analyst Rob Enderle put it perfectly: “that the desire is there at all should be disconcerting for Microsoft”. It’s true. If that many people are still interested in XP, then there’s an obvious problem with Vista (or its marketing). Microsoft can’t just sit around and wait for the Windows 7 code to be finalized, and punishing users who don’t like or understand Vista isn’t the way to go. Something tells me this fee won’t last long, but stranger things have happened.

Enderle said the XP downgrade charge and the resulting pressure to move to Vista will put a magnifying glass on Microsoft in the coming year. “Instead of charging a penalty for XP, Microsoft should provide incentives for Vista,” he says. “They are too focused on margins for one product and are forgetting the damage they are doing to their brand.”

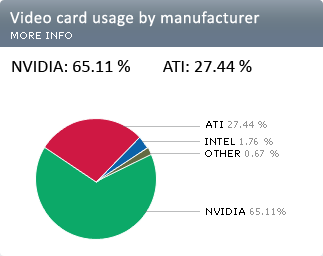

Steam’s Hardware Survey Gets More In-Depth

If you’ve ever wanted an idea of which video cards, processors, or Windows versions are the most popular, there’s always been one great source: Valve. Thanks to the wide usage of its Steam platform, Valve has been able to collect details about the PCs of users who opt in, and its reports have been updated on a fairly regular basis. Last week, the survey saw a rather large overhaul, and the data is as interesting as ever.

The revamped survey is in-depth, and if you want to know which system configurations are the most popular, this is the place to look. To help put things into perspective, the survey will now be updated once per month, and each figure will be shown alongside its percentage increase or decrease, so you can easily keep on top of current trends.

The last update was completed just a few weeks ago, and some of the results are pretty interesting. Where GPUs are concerned, NVIDIA holds the vast majority, at 65.11%, with the 8800 GT proving to be the most common card. Because of the way ATI’s GPUs are named, we can’t get very specific; all we know is that the “HD 4800 Series” is the most popular choice from that camp.

For CPUs, Intel holds a share similar to NVIDIA’s on the GPU side, at 63.62%. Where multi-core CPUs are concerned, 40% of the gaming market is still using a single-core chip, while dual-core adoption is on a steady incline. Quad-cores are seeing slower growth but currently sit at 10.43%. It’s also not much of a surprise that Windows XP is still the leading OS, at 68.67%, but Vista 32-bit is slowly catching up and currently sits at 23.19%.

We’ll be sure to take a fresh look at this survey every so often, because it’s an invaluable resource for tracking current trends. I do think there are improvements that could be made (e.g., adding much higher-capacity options for both RAM and storage), but there’s no other service like this one that I know of, so it’s hard to complain.

Each month, Steam collects data about what kinds of computer hardware our customers are using. The survey is incredibly helpful for us as game developers in that it ensures that we’re making good decisions about what kinds of technology investments to make. Making these survey results public also allows people to compare their own current hardware setup to that of the community as a whole.

Taking a Closer Look at Intel’s Core i7-940

When we posted our original look at Intel’s Core i7 processors in early November, we managed to include performance data for all three launch chips in the same article. However, Intel wasn’t able to provide us with all three, so, at Intel’s own suggestion, we used a little trickery and under-clocked the i7-965 to match the clocks of the i7-940.

Well, as Scott at The Tech Report discovered, both Intel and reviewers overlooked a few important details, and as a result, performance differs between the simulated CPU and the real one. The problem boils down to the processor’s internal clocks, and in this case, the Uncore Clock (UCLK) is of most interest. As it turns out, our under-clocking method left the UCLK untouched, so it ran higher than it would on a retail chip.

In the end, the performance differences aren’t that significant, but they show up across the board… not to mention the differences in power consumption. Although Intel’s reviewer’s guide omitted the UCLK factor as well, I don’t think that was intentional: even reviewers didn’t catch it until now, which is no surprise given that the UCLK setting is usually buried deep within the BIOS, especially on Intel’s DX58SO.

As an added note, I’m not quite sure how we’ll handle our own CPU reviews in light of these findings, but we’ll figure it out shortly. I’ve been in the process of re-benchmarking our entire fleet, so I’m glad this information arrived right before I was to begin testing on our i7 machine.

…even with its slower L3 cache and memory controller frequencies, the system equipped with the retail Core i7-940 draws about 15W more than the one with our simulated 940 from our original review. Why? Likely because Intel sorts its chips for the various models based on their characteristics, and the Core i7-965 Extreme chips are the best ones, most able to reach higher clock speeds and to do so with less voltage.

School Teacher has Linux ALL Wrong

Our school teachers are supposed to be, well, intelligent, right? Let’s examine a situation that went down at one school. A student was not only using the HeliOS Linux distribution in class, but had the nerve (sarcasm) to demonstrate it to classmates in an attempt to show them what the big deal was. The teacher, “Karen”, saw this, took immediate action, and sent the student to detention.

As if this wasn’t bad enough, Karen went ahead and sent the HeliOS project lead a blunt e-mail, stating, “Mr. Starks, I am sure you strongly believe in what you are doing but I cannot either support your efforts or allow them to happen in my classroom.” She even went on to state that she had used Linux in college, and that the legality claims made by the project are “grossly over-stated” and “hinge on falsehoods”.

Whew, I somehow gained a headache just then. The teacher apparently believes that Linux is illegal and that no software is free, which is quite the statement to hear from anyone with decent computer knowledge. She went on to further prove her familiarity with the subject by stating, “This is a world where Windows runs on virtually every computer and putting on a carnival show for an operating system is not helping these children at all.”

Reading the full e-mail sent to Mr. Starks is good for a laugh, but what’s sad is that this woman has Linux all wrong, and her claim to have used Linux in the past makes matters even worse. Hopefully, by now Karen has been corrected on all her statements. It probably wouldn’t hurt if she began using the Internet, though. I hear there’s a lot of knowledge to be had out there…

Update: Ken Starks has published a new blog post updating the situation, and it seems the teacher is now in the process of learning more about Linux, which is a good thing. The post also reveals more of the original story, so it’s a worthwhile (but long) read.

“At this point, I am not sure what you are doing is legal. No software is free and spreading that misconception is harmful. These children look up to adults for guidance and discipline. I will research this as time allows and I want to assure you, if you are doing anything illegal, I will pursue charges as the law allows.”

Nintendo Stomps the Competition with November Sales

Last month was huge for video game sales, and the data from NPD Group proves it. November is always a good month for sales of any kind, and the reason is obvious: Christmas and other major holidays fall in December, and people try to get their shopping done in good time (as if that ever happens!). Black Friday and Cyber Monday sales help too, so it goes without saying that it’s a good time to be a retailer.

Where video games were concerned, though, it was Nintendo that dominated. Last month, the company sold 2.04 million Wii consoles, 208% of what it sold during the same month last year. That’s truly staggering, especially considering the console has been out since November 2006! Either people are breaking a lot of these things, or there really are that many more people hopping on the bandwagon.

If you think that’s where Nintendo’s streak ends, think again. During the same month, it sold 1.57 million Nintendo DS handhelds, and surely countless games. For two platforms that don’t offer nearly as much power as their competitors, it goes to show that it’s the games that sell these things, not the graphics. Although their numbers pale in comparison to Nintendo’s, both Microsoft and Sony had good months too, with 836,000 Xbox 360s and 378,000 PlayStation 3s sold.

“The video games industry continues to set a blistering sales pace, overall, with total month revenues 10% higher than last November, even with 7 less days of post-Thanksgiving shopping this year [over last],” said NPD analyst Anita Frazier. “With $16B realized for the year so far through November, the industry is still on pace to achieve total year revenues of $22B in the U.S.”