Tech News

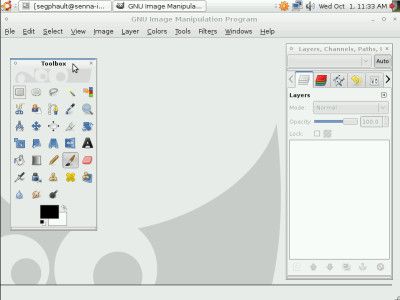

GIMP 2.6 Released – One Step Closer to a True Photoshop Replacement

It’s been less than six months since we’ve seen the launch of GIMP 2.5, but 2.6 is here and is one of the biggest upgrades the software has seen in quite some time. The most common complaint about GIMP (besides the name) is that it’s not a competent Photoshop replacement. That argument might not hold much water soon though, as 2.6 paves the way to achieve that goal.

The most noticeable change in the latest version is a more streamlined GUI. No longer will there be multiple items in the taskbar open for each image or related window (finally!), and the main application window will be shown at all times, with the images and toolboxes settled within, similar to how Photoshop currently works.

Other new features include support for 32-bit floating-point RGBA color, although the legacy 8-bit code paths are enabled by default and will remain so until the GEGL framework is considered completely stable. There are far too many new features to talk about here, but I’d recommend checking out the release notes page that explains everything, in addition to the Ars article below.

There are a number of important functionality improvements that will be welcomed by users, too. The freehand selection tool now has support for polygonal selections and editing selection segments, the GIMP text tool has been enhanced to support automatic wrapping and reflow when text areas are resized, and a new brush dynamics feature has added some additional capabilities to the ink and paint tools.

NewEgg’s Canadian Counterpart Launched

If you happen to be a computer enthusiast who lives in Canada, you are probably well aware that purchasing equipment isn’t the easiest of processes. The absolute first e-tailer I ever learned of was NCIX, and since then, I’ve used them for most of my PC equipment, but their selection is sometimes lacking. There’s Tiger Direct, but their shipping options (and shipping prices) are asinine. Aside from these two, the competition has been rather slim.

One common complaint from fellow Canucks was that Americans have NewEgg… the “ultimate” e-tailer. They offer almost anything most people need, and at reasonable prices. So when we first learned back in July that the California-based e-tailer would be making their way up to Canada, people got psyched. Well, the new website has finally opened, and I have to say, I’m not too impressed.

At first glance, their prices seem competitive for non-sale items, but NCIX offers sales each and every week that trump almost any popular item. Seagate’s 1TB drive, for example, is $144 at NewEgg.ca, while it’s been $136 at NCIX for the past few weeks with their weekly sale. The same thing applies to all the other items I checked as well, including processors and graphics cards.

The biggest caveat seems to be the fact that the Canadian website ships their product from their US warehouse, which means that Canadians will almost certainly be charged duty fees once the product arrives. I could be wrong, but I have never ordered from a US-based retailer and have not had that happen. The second issue is that they only offer shipping through UPS, who I’ve found to be completely and utterly unreliable. Hopefully NewEgg will eventually give us a reason to go through them, but so far, I don’t see a single one.

We define an excellent shopping experience to be one that combines unsurpassed product selection, abundant product information and fair pricing. With thousands of tech products in stock and numerous tools to help customers make informed buying decisions (detailed specs, how-to’s, customer reviews and photo galleries), we have earned the loyalty of tech-enthusiasts and novice e-shoppers alike.

Top Eight Firefox 3 Tweaks

Top lists are a dime a dozen, but once in a while one will catch my eye and actually show me something I didn’t know before. In the latest from PC Magazine, we learn of eight tweaks that can be made to Firefox 3 to improve the overall experience… and some are actually quite helpful.

There are two that I can personally appreciate. The first is the duplicate-a-tab feature. All it takes is holding the Ctrl key while dragging a tab… easy, but hard to figure out by accident. The other is smart bookmarks hacking, which allows you to customize a string of code in order to display relevant links tailored to your liking. As an example, you could have the bookmarks list show all recent URLs you’ve visited with a certain keyword.

Some of the tips cover more obvious features, but if you aren’t one who likes to dig under the covers, then the article will be even more worthwhile to check out.

Smart bookmarks are live bookmarks that don’t just refer to particular sites but actually generate live lists of sites according to parameters you define. For example, you might have a smart bookmark that lists the 10 sites you visit most often, or the last 20 sites you’ve visited with a particular keyword in their title.
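For the curious, the “string of code” behind a smart bookmark is a `place:` URI that is saved as the bookmark’s location. Here is a rough Python sketch of how the two examples above could be assembled. The parameter names and sort codes (`queryType`, `sort`, `maxResults`, `terms`, with 8 for visit count descending and 4 for date descending) are as I recall them from Mozilla’s Places documentation, so treat them as assumptions and check them against your Firefox version; the helper function itself is purely hypothetical.

```python
from urllib.parse import urlencode

# Sort codes as recalled from Mozilla's Places query options (assumption).
SORT_BY_DATE_DESCENDING = 4
SORT_BY_VISITCOUNT_DESCENDING = 8


def smart_bookmark_uri(max_results, sort, terms=None):
    """Build a place: URI for a history-based smart bookmark (hypothetical helper)."""
    params = {"queryType": 0, "sort": sort, "maxResults": max_results}  # 0 = history
    if terms:
        params["terms"] = terms  # keyword to search for
    return "place:" + urlencode(params)


# The 10 sites you visit most often.
print(smart_bookmark_uri(10, SORT_BY_VISITCOUNT_DESCENDING))

# The last 20 sites you've visited that match a keyword.
print(smart_bookmark_uri(20, SORT_BY_DATE_DESCENDING, terms="linux"))
```

Paste the resulting string into the Location field of a new bookmark and, if the parameters are right for your build, the bookmark should behave like a folder that fills itself in.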

Super Talent Releases More Affordable SSDs

It seems not even two weeks can pass without seeing a new SSD launch from Super Talent, but with each one that hits our inboxes, the prices seem to go lower and the speeds higher. Well in this particular case, the speeds go lower, but so do the prices. Two weeks ago, the company announced two new sets of models, one super-fast and one moderately fast; this latest one is modestly fast.

This new model, the MasterDrive LX, is the “low-end” variant of their SSD line-up, although neither the speed nor the price fits the “low-end” descriptor. The new drives are rated at 100MB/s Read and 40MB/s Write, which actually puts them more on par with some of the highest-end hard drives out there. The VelociRaptor, for example, can sustain over 100MB/s Read, but has a far better Write than 40MB/s.

So with that in mind, the main benefit of these SSDs isn’t so much speed as it is size and silence, although I could be wrong. If they are capable of handling multiple writes and reads at the same time (something that a few recent JMicron-based MLC SSDs have struggled with), they could still be worthy of a look. I’m still waiting to see the prices even lower, though. They’re good now, but it’s obvious that they will continue to plummet, and even three months from now, we should be seeing some stark differences.

The MasterDrive LX has also undergone Super Talent’s rigorous battery of validation tests, ensuring the highest levels of compatibility and reliability. What’s unique about the MasterDrive LX is its incredibly low price point; the 64GB model will retail for about $179, while the 128GB unit is expected to retail for under $300. Both models will begin shipping this week.

Corsair Releases 64GB Voyager Flash Drive

Just earlier, I posted about storage in hard drives, and how today, you get so much for so little. Well, the same goes for portable storage, and in the case of Corsair’s latest Voyager flash drive, that fact couldn’t be any more true. The new model features a staggering 64GB worth of space, which is enough to hold a few full-resolution Blu-ray movies… incredible.

The new model carries an SRP of $249.99, but it shouldn’t since that’s not the price it’s being sold at. NewEgg carries the drive and is selling it for $199.99, which, unless it’s changed since I last checked, is still far less than $249.99. Normally, larger models like this come with a premium, but this one doesn’t – the 32GB models are selling for $109.99, so you actually get more GB for your dollar with the bigger model.
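To put numbers on that “more GB for your dollar” claim, here’s a quick back-of-the-napkin calculation in Python using only the prices quoted above. The function name is just something I made up for illustration.

```python
def price_per_gb(price_usd, capacity_gb):
    """Return the cost of one gigabyte in US dollars."""
    return price_usd / capacity_gb


# (price in USD, capacity in GB), as quoted in the post.
drives = {
    "64GB Voyager at NewEgg": (199.99, 64),
    "64GB Voyager at SRP": (249.99, 64),
    "32GB Voyager": (109.99, 32),
}

for name, (price, capacity) in drives.items():
    print(f"{name}: ${price_per_gb(price, capacity):.2f}/GB")
```

That works out to roughly $3.12 per GB for the 64GB model at NewEgg’s price versus about $3.44 for the 32GB model. At the full $249.99 SRP, the bigger drive would come in around $3.91 per GB, so the usual premium would be back.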

There is absolutely no performance information on the new model, and chances are, it’s probably for a reason. Generally speaking, when you add more storage to a similarly-sized device, speeds are going to decrease, but the fact remains… if you need a lot of storage in the smallest space possible, how can you go wrong with something like this?

Thanks to the 64GB USB Flash Voyager, users now have the ultimate solution for storing, transporting and backing up large amounts of personal and professional data. With storage capacity that just a year ago could only be found in hard drives, the 64GB USB Flash Voyager drive also provides the added ruggedness, water resistance and performance not found in storage drives utilizing rotating media.

NVIDIA at a Disadvantage Due to their Choice of Solder?

NVIDIA hasn’t been having the best of news to pass around lately, and it sure doesn’t look like that run is going to end anytime soon. New reports out of the Electronic Thin Film lab of the Department of Materials Science and Engineering at the University of California at Los Angeles (seriously!) show that NVIDIA’s choice of a high-lead solder leaves it prone to cracking under high temperatures (70°C+), whereas eutectic solder, used by ATI, will last much longer.

At first glance, this doesn’t seem to be a large issue, but it looks like the reason NVIDIA’s had issues with their mobile GPUs might be due to this solder, and what a mistake to make. To date, it’s supposedly cost the company over $200 million to deal with, so changes definitely need to be made.

The problem now is that we need to wait to see a change happen, since it’s not as simple as switching out the solder used. It requires certain changes to be made to the power delivery, and it goes without saying, anything like that is going to take more than a few weeks to thoroughly test. There’s also the question of whether or not the current GPUs on the market are actually going to break down due to this solder, especially desktop cards.

Overall, Tu believes that the high-lead solder joint “has a built-in weakness” due to the thin layer of eutectic SnPb solder in the joint. “The eutectic SnPb has a low yield stress and it will deform first and lead to stress concentration and to accumulate high plastic strain energy,” Tu writes. In contrast, the “homogeneous eutectic SnPb solder joint tends to have a uniform composition and much lower plastic deformation so the accumulation of plastic strain energy per unit volume is lower.”

Seagate’s 1.5TB Hard Drive Now In Stock

Although Seagate announced their 1.5TB hard drive well over two months ago, it took quite a while before the model began to show up in retailers. That has apparently changed recently though, as NewEgg and a few others now have the monster hard drives in stock.

At the current time, NewEgg, Tiger Direct and CompUSA all stock the drive, and for the exact same price of $189.99 – which is incredible. The price of storage has fallen so much over the past few years that it really is possible for anyone to have too much storage… far more than they’d ever need. Of course, that’s what we said a few years ago about 100GB drives, but I can’t see the progression keeping up. Even with today’s media-hungry lifestyle, it would take a lot of effort to fill 1TB or more.

Speaking of 1TB, those drives are also worth considering, as their prices have also plummeted. When those drives first hit the market, they carried a price tag of $400, but now? $130 on average. 500GB? $70. Prices are indeed amazing, and there’s no better time than now to be a storage glutton. With RAM and processor prices being what they are, it’s never been easier to build a stellar PC that won’t break the bank, that’s for sure.

This is the ultimate drive for the ages, and it will take ages to fill it up. By now, the world knows that Seagate Barracuda 7200 drives deliver years of reliable service and high performance. In one bold move, Seagate provides the largest single capacity jump in the history of hard drives – a half-terabyte increase from the previous high 1TB.

Two Monitors Are Better Than One

I’ve heard from what must be a hundred different people that adding a second monitor to your setup can increase your productivity two-fold, although I’m still not sold. Now, if you had two sets of hands, then I could see how that would be the life. But as Kim Komando of USA Today claims, once you go dual, you’ll never go back.

In case you are unaware of how to choose and purchase a second monitor, tips are given, but believe it or not, bigger in this case is not always better. If your main screen isn’t huge, then the second one shouldn’t be either, and resolutions also need to be taken into consideration. If your main monitor supports 1680×1050 and the second supports 1280×1024, then the two will not line up with each other, which will make for some odd times if you run your mouse cursor along the bottom of the screen from one to the next.
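Here’s a tiny Python sketch of why that mismatch feels odd. It assumes the two screens sit side by side with their top edges aligned, which is only one possible arrangement, and the function names are hypothetical. How the cursor actually behaves in the leftover strip depends on your OS and driver.

```python
def unmatched_rows(main_height, second_height):
    """Pixel rows on the taller screen with no neighbour on the shorter one (top-aligned)."""
    return abs(main_height - second_height)


def has_neighbour(y, main_height, second_height):
    """True if row y (0 = top) exists on both screens, so the cursor can cross there."""
    return y < min(main_height, second_height)


# 1680x1050 main monitor next to a 1280x1024 second monitor.
print(unmatched_rows(1050, 1024))       # rows along the bottom with nothing beside them
print(has_neighbour(1049, 1050, 1024))  # very bottom row of the main screen
print(has_neighbour(500, 1050, 1024))   # somewhere in the middle
```

With these two resolutions, the bottom 26 rows of the main screen have nothing next to them, which is exactly where the cursor ends up when you drag it along the bottom edge.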

The good thing about a dual monitor setup is the fact that it’s more affordable than ever to go that route. Solid monitors can be had for $200, and given the amount of extra productivity that’s promised, it could very well pay for itself soon. I’m still wondering how one large monitor would fare compared to two smaller ones, though. If you have a nice 30″, like the Gateway shown below, couldn’t you be just as productive? Or is there something specifically about having a second physical display that keeps your attention better?

Monitors can be adjusted to different resolutions. However, flat panels usually work best at their native resolution, which is expressed by figures such as 1,600 by 1,200 pixels. The two monitors should have the same native resolution. But you may need a resolution other than the native resolution of a particular monitor, so check the monitors in the store to be sure they work for you.

Fourteen Best Linux Distros

Alright, I’ve been posting a lot of Linux-related news lately, but it’s hard not to. There have been so many good articles lately that I’d feel like I was doing a disservice by not posting them. Last week, I linked to Lifehacker, who posted a good article on choosing between Fedora, openSUSE and Ubuntu. This week, Tech Radar ups the ante by taking a brief look at fourteen.

Despite the fact that they’ve chosen fourteen distros to feature, all of them are rather well-known, with the more uncommon of the bunch being Slackware, CentOS (used more in servers) and Knoppix. The article takes a look at what makes each distro unique and for whom it’s built. Many of these “guides” usually overlook key points for certain distros, but this one is quite well-done, so it’s definitely worth a read if you are still undecided on which distro to call home.

My heart still belongs to Gentoo… for now at least.

The main difference though is that Gentoo does not try to hide the inner workings of the distro behind easy to use GUI tools. This is a distro for those that want to know what is going on behind the scenes and get their hands dirty tweaking it. For this is Gentoo’s greatest strength: the sheer amount of control that it offers the users.

Diamond Says 188 Cards Faulty, Not Thousands

Last Wednesday, I posted a story regarding thousands of Diamond Multimedia ATI cards that might be defective, and since then, there have been many updates. The day after that news was posted, TG Daily followed up their coverage by relaying the message from Diamond that only 188 cards were faulty, not “thousands”.

The explanation is that while Alienware sent back 2,600 GPUs, only a relative handful were defective. In Alienware’s case, it was much easier to send back the entire lot than test out each card individually before doing something with it. The CEO of Diamond, Bruce Zaman, also stresses that the cards sold to regular consumers don’t show a higher RMA rate than is normal.

Our friends at Tech Report received an e-mail from Zaman late last week that claimed the company had been hit by fraud. To grab a quote, “A disgruntled former employee, who was terminated due to presenting fraudulent credentials, reported the story. When this person was unable to solve a very minor problem that affected less than 200 cards, many red flags were sent up, resulting in an investigation and termination.”

Is this the end of it? Hard to say, but the fact is that the supposed faulty models from Diamond have been in circulation for a while, and if there were problems with “thousands”, we probably would have heard about it by now. We’ll have to give it another year.

While documents TG Daily has seen indicate that Alienware found higher than usual failure rates with Diamond’s cards and ended up returning its entire lot of more than 2600 graphics cards and eventually dropped Diamond as a supplier, Zaman said only 188 cards “out of many thousands that were shipped” were found to have caused problems.

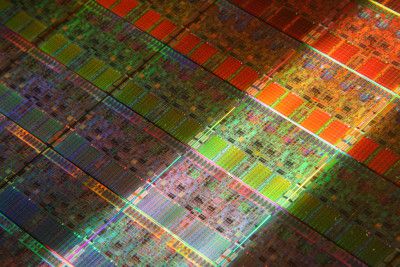

Intel’s Nehalem to Launch Week of November 9th?

According to a few sources close to Fudzilla, Intel’s first Nehalem processors will be launching in week 46, which equates to the week of November 9th. Whether or not product will actually be available that week is another thing, although it seems likely. We’ve already assumed that mid-November would be the launch anyway, so this rumor somewhat solidifies that.

You can expect our review of the new high-end processor prior to the release, so you can get a good idea of what you’re in for, but let’s quickly recap what makes Nehalem special. As we found out at Intel’s Developer Forum just last month, Nehalem will include many new features not found on current-gen processors, such as Turbo Mode (renamed to Dynamic Speed Technology), three-channel memory (insane bandwidth) and far-improved power features that allow the CPU to shut cores off when not in use.

As we’ve already established many times before, Intel plans to launch three processors on the same day, or so it seems, which would be far different than their usual launch plans, which usually see the Extreme Edition launched first. The highest-end Core i7 965 will be clocked at 3.20GHz, the Core i7 940 at 2.93GHz and the Core i7 920 at 2.66GHz. All three CPUs share the same amount of cache and other specs.

A few sources have told us that first of Nehalem generation, Core i7 CPU codenamed Bloomfield will launch in week 46. The launch should take a place between 10th and 14th of November and we’ve learned that Japanese part of Intel might get a green light to start selling its units a bit earlier.
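The “week of November 9th” and the “10th to 14th” in the quote line up once you remember that ISO work weeks start on a Monday. A quick Python check, assuming the year in question is 2008 (the post itself doesn’t state the year, so that part is my assumption) and that “week 46” means the ISO 8601 week number:

```python
from datetime import date, timedelta

YEAR = 2008  # assumption: the year is not stated in the post
WEEK = 46    # "week 46" taken to be the ISO 8601 week number

monday = date.fromisocalendar(YEAR, WEEK, 1)  # ISO weekday 1 = Monday
friday = date.fromisocalendar(YEAR, WEEK, 5)  # ISO weekday 5 = Friday
sunday_before = monday - timedelta(days=1)    # start of the week, US-style

print("Work week:", monday, "to", friday)
print("Sunday before:", sunday_before)
```

Under those assumptions, the work week runs from Monday the 10th to Friday the 14th, and the Sunday that kicks off the US-style calendar week is the 9th. Note that `date.fromisocalendar` needs Python 3.8 or newer.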

Fedora, openSUSE, Ubuntu – Which is Right For You?

If you are a frequent reader of our news (I’ll assume you are), you are no doubt aware that I’m a Linux user, and a happy one at that. I’m not the type to discredit all other OSes out there though, because even I have a dedicated Windows machine that I use for Adobe Photoshop and gaming. It’s almost a necessary evil to use more than one OS if you happen to want Linux and have important tasks that need to get done that you can only accomplish in Windows, and I envy those who don’t have to switch between both (what a time-saver!). But, I digress. If you finally decide to make the shift, how do you know which distro to choose?

Lifehacker hopes to take the complication out of figuring out which distro is right for you by taking a hard look at the three most popular on the market now: Fedora, openSUSE and Ubuntu. You might think that all distros are alike, but that couldn’t be further from the truth. Although all have the same underlying kernel, it’s the extra things that make a distro special, like the package manager, hardware detection, ease-of-use, et cetera.

Personally, I think all three of those distros are fantastic, but it all depends on what you are looking for. openSUSE is a bit on the friendlier side, so it’s great for newer non-techy users, while Ubuntu is for almost everyone… those who know a lot about Linux and those who know little. Fedora is sweet because the developers build a distro that’s ideal for the business environment, so stability is key. Those are just my opinions though. The article has even more.

Fedora is the free, consumer-oriented off-shoot of the enterprise Red Hat system, and is funded and founded by that same group. There’s a focus on the latest free software and technologies getting onto the desktop quickly, and it supports 32- and 64-bit Intel platforms, along with PowerPC-based Mac hardware—the main reason Linux creator Linus Torvalds uses Fedora 9.

NVIDIA Set to Rename Current GPUs

Back in May, it became known that NVIDIA had plans to do something with their lineup’s naming scheme… something to take out the complication for end-users. After all, enthusiasts like you who visit this site know the difference between a 9800 GTX and 9800 PRO, but the majority of people don’t.

When that news was posted, I said, and I quote, “How they plan to do this is unknown, but I’m personally glad I’m not the one in charge, because I have no immediate ideas.” Well as it stands now, I wish they did consult me, because the plans they have going forward make things no less confusing for the end-user… not from what I can see, anyhow.

According to TG Daily, NVIDIA’s planning to rename all current 9000 series to G100, which will result in models such as G100, G120, G140, and so on. Alright, I’m willing to admit that such a numbering scheme would be good for now, because obviously, G140 is going to be better than G120, but what happens when ATI comes out with a Radeon HD 160 that happens to be ultra-low-end? Not to say they will, but they’ve stooped that low before (so has NVIDIA).

If I ran things, I think I’d just include a single digit as well as the year (NVIDIA GeForce One 2008, NVIDIA GeForce Two 2008), but that’s impossible when even the companies themselves don’t know what GPUs they’ll be releasing over the course of the year. Oh well, we’ll see how this goes. I don’t expect it to make things any easier on the regular consumer though.

When the 2009 45 nm GPUs arrive, which seems to be the case around Q1 or Q2, Nvidia will have fully transitioned to the new branding structure: Enthusiast GPUs will be integrated into the GTX200-series, performance GPUs into the GT200-series, mainstream GPUs into the GS200-series and entry-level products into the G200-series.

NVIDIA Buys Into MotionDSP, Creators of CUDA-Based Video Encoder

NVIDIA has gone ahead and partnered themselves with another company, this time one that promises to give the company’s CUDA technology a huge boost. MotionDSP is a company that has been working on advanced video technology for some time, some of which I had the pleasure to see first-hand at NVISION last month. The fact that their software relies a lot on CUDA makes this partnership a no-brainer.

MotionDSP’s upcoming video software, called Carmel, promises to use CUDA to its fullest extent, and in some cases, it will be able to encode in real-time… something not yet seen on any desktop processors today, no matter the frequency or core count. The software will feature various enhancements, such as fixing jittery videos and lightening up super-dark videos, among others. You can see real-world examples here. That page also allows a beta sign-up.

Once this software gets released in Q1 2009, it’s bound to put NVIDIA’s CUDA technology in a great light, as long as it can live up to its promises. I’m confident it will, as I’ve been toying with various GPU-accelerated video encoders for the past little while and the results I’ve seen are good. But with MotionDSP’s taking a few more months before its final launch, I’d expect that solution to be far more robust. I’m already looking forward to it.

MotionDSP’s software, codenamed “Carmel”, uses sophisticated multi-frame methods to track every pixel across dozens of video frames, and reconstruct high-quality video from low-resolution sources. MotionDSP’s software significantly reduces compression and sensor noise, improves resolution, and corrects for poor lighting conditions.

Where’s all the fresh content?!

This month has been a little slow in terms of content posted, and for that, we apologize. I’ll take a moment or two here to explain what’s been going on, when things will go back to normal, and also divulge a little bit about what’s coming up. Over the past month, we’ve been working hard on things in the background, most notably with our testing methodologies, which I explained briefly last Wednesday.

As a result of the effort, we’ve completely revamped everything from how we install Windows Vista to how we run each one of our tests. Once we begin posting reviews under our new methods, we’ll be also posting an article that goes into some depth about every aspect of how we accomplish the results we do. I won’t get too much into this now, but trust me, you’ll want to check it out.

Content affected is graphics cards, processors, motherboards and storage, though I won’t get into great detail until reviews begin to be posted. Having “storage” listed there might seem a little strange, since it’s a category we’ve inadvertently shunned for quite a while, but you can be sure that it’s coming back in full force, and we now have a cool methodology to back it up.

Aside from all of that, a few of our staffers have been ultra-busy with non-site duties, from dealing with everyday hassles to moving to another state, but things should begin to settle down now. Starting next week, you can expect our content publishing schedule to be put back on track, just in time for the new month.

As always, thanks a ton for the support and for enabling us to be able to continue doing what we love to do!

Top Thirteen Error Screens

Ahh, the error screen. Where would we be without ’em? Whether it’s a Blue Screen of Death or a simple error pop-up from an application, they’re a required part of computing, but undoubtedly one of the most annoying aspects of computing as well. The Technologizer blog takes a look at their personal “top thirteen” greatest error messages of all time, and some are not going to be that surprising.

The BSOD is of course there, but did you realize there’s also a “Red Screen of Death”? It’s one that originally came with Windows Longhorn – before it became Vista – although there’s a slight chance it still could be in the retail version, but it would be incredibly rare. I’ve caught only one while testing Longhorn, and what a sweet day it was.

Other picks from their list go back as far as the eighties with the Guru Meditation, a random error for the Commodore Amiga that had the same overall effect as the BSOD, though it was red on black, rather than white on blue. It of course also included useless codes that meant nothing to the layman. The list is quite good overall… and I can’t really think of any that are missing offhand.

According to Wikipedia, some beta versions of Longhorn–the operating system that became Windows Vista–crashed with a full-screen error message that was red rather than the more familiar blue. Wikipedia seems to say that the final version of Vista can die with a red color scheme when the boot loader has problems, too. I’m relieved to say I’ve never encountered that, as far as I can remember.

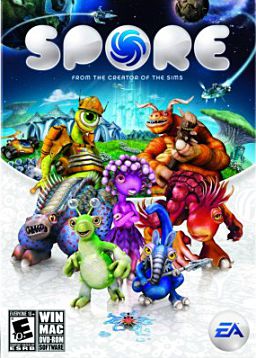

Spore’s DRM Leads to Class Action Lawsuit Against EA

Spore… can’t the poor game catch a break? Nope, not as long as DRM exists in the software. We’ve covered the DRM in Spore many times in our news already, but the latest happening is a class action lawsuit against EA, stating that the company violated two different laws in the state of California – though the laws could be similar in other states as well.

The suit centers on SecuROM, the notorious “disk checker” DRM that has been loathed by many people for quite a while. The problem isn’t so much the software itself as much as it is the fact that EA doesn’t tell its customers that it will be installed. The company fails to mention the software specifically, but does mention that copy protection is used.

The biggest problem with SecuROM is that it takes a lot of effort to remove from the system if you don’t want it there anymore. You can uninstall every game that utilizes it, but it will still be there, lingering. Whether or not this should be considered a real issue could be debated, but as I always say, if you buy your software legally, you shouldn’t have to put up with anything of the type. It’s going to be interesting to see how far this lawsuit will go, but one sure thing is… it’s not going to affect EA’s pocketbook that much even if they lose, sadly.

The suit accuses EA of “intentionally” hiding the fact that Spore uses SecuROM, which it alleges is “secretly installed to the command and control center of the computer (Ring 0, or the Kernel) and [is] surreptitiously operated, overseeing function and operation of the computer, and preventing the computer from operating under certain circumstances and/or disrupting hardware operations.”

Voodoo Still Alive and Strong

On Monday, we broke the news that big changes were coming to VoodooPC, and although we are still unsure of the end-effect these changes will have, we are a little better informed today. Phil McKinney, VP and CTO for Hewlett-Packard’s Personal Systems Group posted on the official Voodoo blog to help clarify the goings-on.

According to Phil, Voodoo isn’t going anywhere, and I’m sure many will agree that this statement deserves a *phew*. But our original predictions of what will happen with the name seem to be true. Phil says, “We’re migrating products and innovations from the high-end Voodoo portfolio into various parts of HP’s portfolio.” So while Voodoo the name isn’t going anywhere, the company itself will be integrated into HP.

This, to me, is a good thing overall. VoodooPC has been making some stellar products for a while, but since being acquired by HP, things haven’t exactly exploded. The integration promises to improve that situation and make Voodoo products more widely available, which I assume means we’ll see an expanded portfolio in the near-future.

Phil goes on to state that Voodoo has new products in the pipeline, though he doesn’t go into any real detail whatsoever. Could it be the rumored larger Envy? An Omen sidekick? A netbook? I guess we’ll have to wait and see. If more information surrounding the layoffs and company changes comes to light, we’ll be sure to let you guys know.

Integration into the larger HP means greater leverage of HP’s processes, partners, go-to market programs, distribution and infrastructure. This plan will allow more consumers broader access to the incredible products and technologies developed by this team. Rahul Sood, who continues to report to me as Voodoo CTO, blogged about this more than a month ago.

Hasselblad to Announce 60 Megapixel Camera Next Year

Just the other day, I posted about Leica’s new high-end D-SLR that features a sensor capable of capturing 37 megapixels, which is impressive in itself, but even more impressive to be coming from a company like Leica. But when you think of cameras with an insanely large megapixel count, it’s of course Hasselblad that first comes to mind.

The company announced a 50 megapixel camera over the summer, which is staggering to think about as is, but apparently they have an even bigger model en route that ups the ante to 60 megapixels. The camera will be called the H3DII-60, so in all likelihood, it will be very close features-wise to other current H3DII models.

The upcoming model will also feature a true 94% full-frame sensor, which Hasselblad stresses is important, since most “full-frame” cameras out there are not true full-frame. It will retail for £18,750, a £4,000 premium over the H3DII-50, so you better have a need for those ten extra megapixels.

A “revolutionary” new tilt and shift adaptor, the Hasselblad HTS 1.5, is also joining the Hasselblad line-up. The HTS 1.5 allows photographers to use tilt and shift functionality with most of their existing or new HC/HCD lenses. “The addition of digital sensors that read and record all movements and Hasselblad’s proprietary digital lens correction mean that we can offer photographers both a unique level of quality and maximum ease of use,” said Poulson.

Thousands of Diamond Multimedia HD 3800-series Potentially Defective

According to an industry source close to TG Daily, it appears that Diamond Multimedia have a rather significant problem on their hands, although right now they seem to be taking it in stride. It turns out that between 15,000 and 20,000 of their HD 3800 cards left the factory with manufacturing defects. Those include poor soldering, memory issues and in some cases, temperature issues.

Most of the GPUs have been shipped to regular consumers, but Alienware is the one who experienced the issues first-hand, and as a result of their testing, they had to return over 2,600 of these faulty GPUs to Diamond. Even worse, in a previous instance, Alienware had found that 100% of the GPUs they received from Diamond were defective.

What’s important to note, however, is that Diamond weren’t the ones to manufacture these cards. In this case, it was one of their contractors, ITC, which is likely where the problem was created. The problem gets worse though, because the cards were not qualified by AMD, so some are claiming negligence in evaluation and testing.

Diamond is claiming that the return rates on the cards are extremely low, and that the problem with Alienware was due to their power supply of choice. But the fact of the matter is, the cards were proven to be faulty, and worse still, thousands have been sold, and the same product is still available through popular e-tailers like NewEgg. Should Diamond admit the problem and recall the cards? It would be the right thing to do, without question, but given they aren’t a huge company, something like that could really set them back. Sticky situation, that’s for sure.

Yes, it would have been the honorable thing to do, but this is a business world. A recall would have been a suicide business-decision since replacing 15,000 graphics cards can easily bankrupt a company like Diamond. We hear that Diamond is stuck between a rock and a hard place and was in no position to shoulder the cost of a recall due to a business agreement with GeCube.

Google’s Chrome Declining in Usage

When Google first launched their Chrome browser, excitement was high, but new reports show that interest is beginning to fade as people return to their usual browser. According to the findings from Net Applications, who track statistics from over 40,000 different websites, Chrome’s share of usage has slipped to 0.77% from 0.85%. That’s a small percentage, but it represents a large number of users.

These results don’t mimic our own, however, which show that the browser experienced a quick decline after the first week, but usage actually grew in week three, as you can see below. Interestingly enough, during those three weeks, Firefox saw its usage grow, while IE declined by about one percentage point.

TG Chrome Usage

- Sept 3 – 9: 4.50% (Firefox: 49.76%, IE: 37.69%)

- Sept 10 – 16: 3.65% (Firefox: 50.47%, IE: 37.42%)

- Sept 17 – 23: 3.78% (Firefox: 51.08%, IE: 36.66%)
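For anyone who wants to check the week-to-week movement, here is a minimal Python sketch that computes the changes in percentage points from the three weeks listed above. The data is copied straight from that list; the function names are illustrative only.

```python
# Weekly browser share (percent), copied from the list above.
weekly_share = [
    ("Sept 3 - 9", {"Chrome": 4.50, "Firefox": 49.76, "IE": 37.69}),
    ("Sept 10 - 16", {"Chrome": 3.65, "Firefox": 50.47, "IE": 37.42}),
    ("Sept 17 - 23", {"Chrome": 3.78, "Firefox": 51.08, "IE": 36.66}),
]


def weekly_changes(rows):
    """Return week-over-week changes, in percentage points, per browser."""
    changes = []
    for (_, prev), (label, curr) in zip(rows, rows[1:]):
        delta = {name: round(curr[name] - prev[name], 2) for name in curr}
        changes.append((label, delta))
    return changes


def total_change(rows, browser):
    """Return the change from the first listed week to the last, in points."""
    return round(rows[-1][1][browser] - rows[0][1][browser], 2)


for label, delta in weekly_changes(weekly_share):
    print(label, delta)

for browser in ("Chrome", "Firefox", "IE"):
    print(browser, total_change(weekly_share, browser))
```

Over the three weeks, this works out to Chrome falling 0.72 points overall despite a 0.13-point rebound in week three, Firefox gaining 1.32 points, and IE losing 1.03 points.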

It’s important to note that though people might be returning to their old browser, it could be simply due to the fact that Chrome is still in beta and has a long way to go before the final version. Not everyone out there wants to use a beta browser, so when a new and improved version comes out, these numbers may change once again.

IE and Firefox still showed share erosion compared to the period immediately before Chrome’s Sept. 2 debut, but both browsers regained users last week, Vizzaccaro said. IE picked up 0.24 percentage points last week, while Firefox regained 0.06 points. Both, however, remained down for the month, as was Opera Software ASA’s Opera and AOL LLC’s now-defunct Netscape.

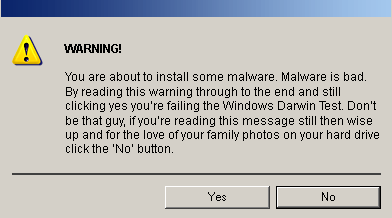

Study Shows Most Users Don’t Read Fake Windows Pop-ups

We’ve all seen them… fake Windows dialogs that pop up when we visit a certain site, begging us to click the OK button to download some security software, acknowledge a fact, or do anything else that could walk the line of believability. The sad thing is that these pop-ups are likely one of the leading causes of installed malware, because most users don’t actually read what’s being said, or they might actually believe the warning.

A new study had researchers develop a set of four different fake pop-ups to see how fifty college students would react when one popped up on the screen. Sure enough, the majority either clicked through to acknowledge the pop-up, moved it out of the way, or simply closed it. The amount of time between the actual pop-up and the action from the user was proven to be very low, showing that users really don’t take any time to see what’s being said, or to verify its validity.

It goes without saying that this is one of those tricks that all your friends and family should know about. You might know the difference between a real dialog and a fake, but most people don’t. That’s the sad reality, and it’s the reason so many security companies have a thriving business that won’t be fading anytime soon.

In all cases, mousing over the “OK” button would cause the cursor to turn into a hand button, behavior more typical of a browser control; all dialogs also had minimize and maximize buttons, while a second added a browser status bar to the bottom of the window. Finally, the most blatant one alternated between black text and a white background and a white-on-black theme. All of these should metaphorically scream, “This is not safe!”

STALKER: Clear Sky Features 5-Time Activation Limit

I hate to post more about copy protection in games, but I can’t help it. I’ve ranted about it a hundred times in the past, but ironically, I’ve never actually been the victim – until last night. Spore? Nope. Mass Effect? Nah. STALKER: Clear Sky? You guessed it.

Although Steam has its flaws, it’s great for the fact that almost all of the games we use for benchmarking in our GPU reviews are on there. At the current time, the only one not there is Need for Speed: ProStreet, and being an EA title, I wouldn’t expect to see it there for quite a while – if ever. So when Clear Sky came out, it was a no-brainer to purchase it on Steam since it’s nice to keep everything together. Plus, you can usually avoid copy protection, since Valve has their own. But as I found out, that alone doesn’t please some publishers.

So, GSC decided to force even Steam users to put up with the TAGES copy protection system, which, while I agree it works well, defeats the purpose of what Steam is all about. Since you have to be logged in at least once to activate the game through a Steam account, a secondary activation is redundant. The problem isn’t so much that, though, as the fact that there is a five-time activation limit…

… which I managed to exceed in one evening. As it turns out, even swapping a GPU will require another activation, and since I was performing a wide variety of testing with different GPUs… I think the problem speaks for itself. So… I’m finally a victim of copy protection. Despite paying $40 for it just last week, it’s effectively useless on my machine as it stands.

I’ve stressed this point before, but I’ll do so again. The fact is… right now there are many gamers locked out of the game, who paid for it. I see it on both the Steam forums and the GSC forums, and it’s needless. If it’s in your Steam account, then you obviously paid for it, so the fact that any gamer is locked out at all is unbelievable. But, what’s sad is that while many of these gamers are locked out of their legally-bought game, those who downloaded pirated copies are enjoying it hassle-free.

Oh, and I can neither confirm nor deny that a crack that’s floating around the web may or may not work with the Steam version of the game, but it’s worth a try if you are desperate.

Mega Man 9 Delivers Classic Gaming at its Best

Back in June, we first learned of Capcom’s brilliant move in releasing another 8-bit title, and as it happened, you could hear the millions of gamers lose their breath for a few moments after realizing that it meant a new Mega Man game was on the horizon. There are few people who’ve grown up with a console and haven’t played a Mega Man game, so the excitement is definitely shared all over the world.

The game has launched on the Nintendo Wii already, and according to initial reports, it’s already sold 60,000 copies. Thursday will see the PlayStation 3’s launch, while gamers on the Xbox 360, for whatever reason, will have to wait until next Wednesday to get their hands on the Blue Bomber.

IGN has a video review available that says what we were expecting… the game delivers more of what we love. It features 8-bit everything… graphics, audio, even the glitches that we’ve come to expect from old NES titles. Capcom really has a winner on their hands here, so hopefully we’ll see them continue this tradition and also see other developers take the hint.

You’re going to get the same experience on every version overall, but those that want to sit down and critique the truly minimal differences between the builds will find that the emulation between the three versions is going to differ ever so slightly. The Wii version has more of the 8-bit look overall to it, but that also means there are harder edges, and a more pronounced “blocky” look.

First Android-based Phone Hits the Market

It’s been close to a year since we first learned of Google’s Android mobile OS, and T-Mobile today becomes the first provider out the door with a product. The T-Mobile G1 features a 3.17″ screen supporting a resolution of 480×320, WiFi, Bluetooth, and support for GSM, GPRS, EDGE, UMTS and HSDPA. The unit itself isn’t that much bigger than the screen at 4.6″ x 2.16″ x 0.62″. It weighs 5.6 ounces.

Being a Google-driven OS, the device comes pre-loaded with Google Maps and YouTube applications, so finding your way around town or watching an online video is a simple proposition. Although it hasn’t launched yet, Google will unveil an “Android Market”, similar to the iPhone App Store and the offering Microsoft also has in the works. It will allow users to purchase whatever apps they please, and even develop their own if they so choose.

Unlike the software on most mobile phones out there, Android is an open OS, and developing applications for it is simple as long as your preferred language is Java. It requires Google’s own Java libraries, but those are pre-loaded, and the SDK is also easy to come by. Though I don’t have a particular interest in this phone myself yet, I look forward to seeing whether or not it will take off. It’s just too bad that right now it’s locked into a single provider.

Another direct shot is taken at Apple in the form of a dedicated Amazon MP3 Music Store application designed for Android. Customers will have access to over six million DRM free songs starting at just $0.89 each. Customers will be able to download the songs directly to the G1, but a WiFi connection is required for over the air downloads.