Tech News

Apple Announces Revamped MacBook Family

As expected, Apple today unveiled its brand-new MacBooks, which include the MacBook, MacBook Pro and MacBook Air, and I have to say, even as a non-Mac guy, they look quite sharp. There’s no $799 model like some were hoping to see, but the original 13-inch model has been dropped to $999; it features a 2.1GHz processor and a 120GB hard drive.

The new models all feature an aluminum body, and according to Engadget, it feels “ridiculously solid”. To help increase vibrancy, Apple opted to use a glass display, although according to the same article linked to above, it creates a lot of glare… something that will cause difficulties outside or even in a really bright room.

As a recent rumor suggested, the new MacBooks include NVIDIA GPUs, which is definitely something to be excited about. The smallest model, at $1,299, includes an NVIDIA 9400M GPU, while the MacBook Pros feature the same, plus an additional 9600M GT discrete GPU… also known as Hybrid SLI.

Unlike most other notebooks available, the new MacBooks include a Mini DisplayPort port, which will work nicely with the new (and extremely expensive, at $899) 24″ Cinema LED display. Besides offering amazing crispness and great color, the display also features extensive environmental improvements that meet Energy Star 4.0 requirements. The downsides are the price and the fact that it appears to work only with the new MacBooks.

Overall, a good showing from Apple. Good luck making a purchase today, though. The overwhelming demand has taken the store down, although it should be back up later.

Every member of the new MacBook family features an LED-backlit display for brilliant instant-on performance that uses up to 30 percent less energy than its predecessor and eliminates the mercury found in industry standard fluorescent tube backlights. The ultra-thin displays provide crisp images and vivid colors which are ideal for viewing photos and movies, and the edge-to-edge cover glass creates a smooth, seamless surface. Every display in the new MacBook line uses completely arsenic-free glass.

Cracking Passwords with NVIDIA GPUs

Although NVIDIA isn’t exactly blowing anyone away with its GPUs lately, the company is hoping to sell the entire industry on its CUDA technology, as we’ve explored many times in the past and will likely continue to talk about in the future. Essentially, CUDA is a C/C++-based language that lets developers harness the GPU for general-purpose work, and since a GPU is a massively parallel processor, suitable workloads can see extraordinary speedups.

A few months ago, while talking with one graphics card vendor, the idea of using CUDA for hacking/cracking came up, but I don’t think either of us expected something to hit the market so soon. Thanks to a company called Elcomsoft, that is now a reality, and both the results and what the application can do are impressive.

If you’ve locked yourself out of a seriously important file, or your system, don’t fret. This software likely supports the encryption scheme you need to tackle, and according to Elcomsoft’s reports, a high-end NVIDIA GPU (8800 GTX) can process passwords close to 14x faster than a typical Intel Core 2 Duo, which is highly impressive. The software can also put up to 64 CPU cores and 4 physical GPUs to work together for even faster operation. The downside? The price… but that’s to be expected.

Elcomsoft Distributed Password Recovery employs a revolutionary, patent pending technology to accelerate password recovery when a compatible NVIDIA graphics card is present in addition to the CPU-only mode. Currently supporting all GeForce 8 and GeForce 9 boards, the acceleration technology offloads parts of computational-heavy processing onto the fast and highly scalable processors featured in the NVIDIA’s latest graphic accelerators.
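Elcomsoft hasn’t published how its patent-pending acceleration works, so what follows is not its method. It’s a minimal Python sketch of the general idea behind distributed brute-force recovery: number every candidate password, split that range of numbers into equal chunks, and hand one chunk to each processor. The toy keyspace (lowercase only, fixed length), SHA-256 as the target hash, and all function names are assumptions for illustration.

```python
import hashlib
import string
from typing import List, Optional

# Assumption: a toy keyspace of lowercase-only passwords of one fixed length.
ALPHABET = string.ascii_lowercase


def index_to_candidate(index: int, length: int) -> str:
    """Turn an integer in [0, len(ALPHABET) ** length) into a candidate password.

    The integer is written in base len(ALPHABET), one character per digit,
    so every index maps to exactly one candidate and vice versa.
    """
    chars = []
    for _ in range(length):
        index, digit = divmod(index, len(ALPHABET))
        chars.append(ALPHABET[digit])
    return "".join(reversed(chars))


def partition(keyspace: int, workers: int) -> List[range]:
    """Split [0, keyspace) into `workers` contiguous ranges whose sizes differ by at most one."""
    base, extra = divmod(keyspace, workers)
    ranges, start = [], 0
    for w in range(workers):
        size = base + (1 if w < extra else 0)
        ranges.append(range(start, start + size))
        start += size
    return ranges


def search_range(chunk: range, length: int, target_hex: str) -> Optional[str]:
    """Brute-force one worker's share of the keyspace against a SHA-256 hex digest."""
    for i in chunk:
        candidate = index_to_candidate(i, length)
        if hashlib.sha256(candidate.encode()).hexdigest() == target_hex:
            return candidate
    return None


if __name__ == "__main__":
    length = 3
    target = hashlib.sha256(b"dog").hexdigest()  # the "forgotten" password
    chunks = partition(len(ALPHABET) ** length, workers=4)
    # Here the chunks run one after another; a real tool would hand each
    # chunk to a separate CPU core or GPU and stop as soon as one reports a hit.
    for chunk in chunks:
        found = search_range(chunk, length, target)
        if found is not None:
            print(f"recovered: {found}")
            break
```

Because the chunks don’t overlap and need no coordination until a match turns up, adding more cores or GPUs divides the work almost evenly, which is why this kind of job scales so well across many processors.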

Windows 7 Becomes Windows 7

Windows Vista may have been released to consumers in January 2007, but the “Windows 7” moniker for the follow-up OS was coined long before that. Every few months since then, we’ve heard more about the OS, and Microsoft is doing a great job of making sure everyone knows what’s going on with the Vista replacement – they even went as far as to launch a very in-depth development blog a few months ago.

Well, we’ve been calling it “Windows 7” for a while, so let’s stick with it, ok? Microsoft agrees, and sure enough, the next version of Windows is going to be called just that. It may seem lazy, but “Windows 7” actually has a nice ring to it, and hey, it does seem to work for Apple, right?

Wondering why Windows 7 is actually considered the seventh version? In the earliest days, the OS names were simple, with Windows 1.0 in 1985, 2.0 in 1987 and 3.0 in 1990. Things changed with Windows NT, which debuted as NT 3.1 in 1993; NT 4.0 followed in 1996 as essentially the fourth version. Windows 95/98/ME also shared the “4” version number, while 2000 and XP officially became the fifth. Vista of course is version six, which makes Windows 7 appropriately named.

“For me, one of the most exciting times in the release of a new product is right before we show it to the world for the first time,” Nash wrote. “In a few weeks we are going to be talking about the details of this release at the PDC and at WinHEC. We will be sharing a pre-beta ‘developer only release’ with attendees of both shows and giving them the first broad in-depth look at what we’ve been up to.”

OpenOffice.org 3 Released

We’ve been waiting for a little while, but wait no more – OpenOffice.org 3 is here. The initial release is available in eight different languages and for all current platforms, including Linux, Unix and Mac OS X. Opinions are mixed, but if you are already an OOo user, there’s really no reason not to upgrade. The UI looks slightly better and the functionality has been improved (although none of those enhancements have affected me directly, yet).

One writer at a Houston Chronicle blog seems entirely pleased with the latest release and states that it’s becoming an “even better alternative” to the more mainstream office suites, including, of course, Microsoft Office. The best part is that it’s free, and it’s hard to beat that. Plus, if you are a Mac user, you’ll notice a nice stability boost, since this is the first version of OOo to run natively on that platform, without requiring the X11 window system.

In addition to native OS X support, the latest OOo adds support for more formats, including Microsoft Office 2007’s XML-based files, and you can see an example of that in the image below. In this particular case, OOo did import the graph properly, but it’s non-editable… so your options for actually editing Office 2007 documents are going to be a bit limited. It’s definitely improving, though, and that limitation doesn’t detract from what a great application this is. I’m still unable to move away from Office 2007… but I’m confident it will happen someday.

OOo supports several file formats, but uses OASIS’s OpenDocument Format (ODF) by default. ODF is rapidly gaining widespread acceptance and is also supported by Google Docs, Zoho, IBM’s Lotus Notes, and KDE’s KOffice project. ODF is increasingly being adopted as the preferred format by government agencies in many different countries. This trend has placed pressure on Microsoft, which has agreed to include native ODF support in future versions of Office.

Linux Kernel 2.6.27 Released

We saw the release of the 2.6.26 Linux kernel this past summer, but fall is in the air, and so is a new release. Nothing truly mind-blowing has been added to 2.6.27, but as always, if you are familiar with compiling your own kernel, there are many security and system-related updates that will make sure your computer is as good as it can be.

One nice addition is the UBI File System (UBIFS), which was developed by Nokia and the University of Szeged. Unlike more common file systems, this one will not work with common block devices, like hard drives, but only with pure flash-based devices, although aside from a Solid-State Disk, I’m not sure what else there is.

It doesn’t seem to be highly recommended that you jump over to this FS if you happen to have an SSD, but if you do, it would be wise to thoroughly read up on how it operates. Because SSDs have a limited number of write cycles, UBIFS seems to be smart in how it operates in order to keep the drive healthy. Chances are few people are using SSDs on Linux right now outside of a server environment, but the option is now here for those who do.

Other notable additions include an ext4 upgrade to improve performance under certain workloads, multiqueue networking, improved video camera support using the gspca driver, a voltage and current regulator framework, and a lot more. If you happen to own an ASUS Xonar D1, you’ll be happy to know that support has been added for that, and the same goes for Intel’s latest wireless 5000AGN chipset.

UBIFS does not work with what many people considers flash devices like flash-based hard drives, SD cards, USB sticks, etc; because those devices use a block device emulation layer called FTL (Flash Translation Layer) that make they look like traditional block-based storage devices to the outside world. UBIFS instead is designed to work with flash devices that do not have a block device emulation layer and that are handled by the MTD subsystem and present themselves to userspace as MTD devices.
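On raw flash like this there is no FTL doing wear-leveling behind the scenes, so the software stack has to handle it. In Linux, that job falls to the UBI layer underneath UBIFS, which keeps an erase counter for every eraseblock. The snippet below is not UBI’s algorithm; it’s a toy Python sketch of the basic idea, assuming a hypothetical `ToyWearLeveler` that always writes to the least-worn free block.

```python
from typing import Dict, List, Set


class ToyWearLeveler:
    """Hypothetical wear-leveling mapper for a raw flash chip with `num_blocks` erase blocks.

    Flash cannot be rewritten in place, so every logical write goes to a fresh
    physical block and the block holding the old copy is released. Picking the
    free block with the lowest erase count spreads wear across the whole chip.
    """

    def __init__(self, num_blocks: int) -> None:
        self.erase_counts: List[int] = [0] * num_blocks
        self.logical_to_physical: Dict[int, int] = {}
        self.free: Set[int] = set(range(num_blocks))

    def write(self, logical: int) -> int:
        if not self.free:
            raise RuntimeError("no free erase blocks left")
        # Least-worn free block first; ties broken by block number for determinism.
        target = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(target)
        # Simplification: charge one erase cycle each time a block is (re)programmed.
        self.erase_counts[target] += 1
        old = self.logical_to_physical.get(logical)
        self.logical_to_physical[logical] = target
        if old is not None:
            self.free.add(old)  # the stale copy becomes reusable
        return target


if __name__ == "__main__":
    flash = ToyWearLeveler(num_blocks=4)
    for _ in range(100):
        flash.write(0)  # rewrite the same logical block over and over
    print(flash.erase_counts)  # [25, 25, 25, 25]
```

Without the least-worn selection, all 100 rewrites would land on a single block; here they’re spread evenly across the four.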

Apple’s Upcoming MacBooks to Use NVIDIA Chipsets & GPUs

After countless rumors, Apple’s October 14th press event is official, and there’s no question that it will be new MacBooks they’ll be showing off, and little else. Both MacBook lines are to be not only refreshed, but overhauled. The most popular rumor is that the new notebooks will feature an aluminum chassis, which would increase durability and reduce weight. They’re also supposed to retail for a lot less than current offerings, potentially opening the doors for much wider adoption.

In late July, we linked to a story that claimed Intel chipsets on Apple’s notebooks were soon going to be a thing of the past, and NVIDIA was of course the replacement on everyone’s mind. Ryan at PC Perspective followed up on an editorial he wrote around the same time and stresses that the notebooks next week will feature NVIDIA chipsets and GPUs.

This is one rumor I’m inclined to believe, and I trust Ryan’s confidence. To me, this is only a good thing. While NVIDIA chipsets tend to use more power (wattage) than Intel’s, their offerings in the IGP department are far better (especially on non-Windows machines). This is also the kind of announcement that NVIDIA must be simply dying to make, especially after a long summer of fairly unappealing press.

There are a couple other interesting points that lead me to the same conclusion that have come up since our July editorial. First, NVIDIA has been pushing OpenCL support on their integrated graphics solutions, a standard that Apple helped create. Because NVIDIA’s IGP chipsets would allow for OpenCL acceleration Apple would gain support for the programming technology across all of their platforms.

UAC in Windows 7 Should be Slightly Less Annoying

When talking smack about Windows Vista, one of the most common aspects to pick on is User Account Control, or UAC for short. Even I’ve whined about it, and mostly, I think for good reason; few could argue otherwise. Microsoft itself has even stated that it went a little bit overboard, but it’s quickly learning which improvements to make, so UAC should only get better in the future.

According to the latest update on their Windows 7 blog, though, those improvements might not arrive next month, or even next year, but rather with Windows 7. The blog goes into quite good detail regarding what UAC is all about and what purpose it really serves. It even delves into usage statistics, and the number of UAC ‘pop-ups’ has drastically declined in the past year, though that might not be all that surprising.

Their research also shows that the number of applications requiring a prompt has gone way down, a sign that developers are being smarter when coding their applications. The number was cut in half after the beta ended, and in half again between the launch and now, so it’s certainly getting better. For Windows 7, the outlook is even better, but I’m still confident UAC will be the very first thing I disable. Call me a rebel.

Now that we have the data and feedback, we can look ahead at how UAC will evolve—we continue to feel the goal we have for UAC is a good one and so it is our job to find a solution that does not abandon this goal. UAC was created with the intention of putting you in control of your system, reducing cost of ownership over time, and improving the software ecosystem. What we’ve learned is that we only got part of the way there in Vista and some folks think we accomplished the opposite.

NVIDIA to Launch Three GPUs Next Month?

When NVIDIA launched their GTX 200 series in June, I don’t think anyone had an idea of how fast they would be overshadowed, but leave it to AMD… it was done. Since then, NVIDIA hasn’t had a truly competitive high-end part, but it appears they’re working towards changing that with a few upgraded releases.

To help better compete this holiday season, The Inquirer is reporting that Big Green will be launching three ‘new’ models – GTX 270, GTX 290, and get this, a GX2. The reason why the GTX 260/216 wasn’t called the GTX 270 becomes clear with these findings: both the GTX 270 and GTX 290 reportedly take advantage of a die shrink and clock boosts.

When the GTX 200 series first launched, a GX2 version of the cards seemed unlikely due to the sheer size of the GPUs, but thanks to these forthcoming die shrinks, it’s going to be possible. It doesn’t seem to be clear whether it will be based on the GTX 260 or GTX 280, but it will likely have to be the latter in order to overtake ATI’s HD 4870 X2.

Two problems I foresee with the GX2 are heat and the fact that it still seems to use a dual-PCB design, which, in my experience, is nowhere near as reliable as having two cores on the same PCB. Sharing the same PCB makes it easier to share other components, like memory, and on top of that, the card’s production cost should be lower in the end. The dual-PCB approach was cute at first, but we really need to see a change here. You know… if this rumor is at all true.

NV is in a real bind here, it needs a halo, but the parts won’t let it do it. If they jack up power to give them the performance they need, they can’t power it. Then there is the added complication of how the heck do you cool the damn thing. With a dual PCB, you have less than one slot to cool something that runs hot with a two slot cooler. In engineering terms, this is what you call a mess.

Intel’s X58 Chipset to Cost Less than X48?

Regardless of the motherboard, the most expensive component is the chipset, which includes both the Northbridge and Southbridge. Though prices aren’t confirmed, the rumored price for an Intel P45 chipset is $40 in quantities of 1,000, while X48 hovers around $55. So with that, you’d imagine that the upcoming X58 chipset would be even pricier, right? Well, according to sources close to Fudzilla, that might not be the case at all.

In fact, their sources state that X58 is about $20 less expensive than X48. I have a hard time believing this, personally, because we are dealing with a revamped chipset that’s capable of a bit more than previous generations. Why it’s $20 less expensive is what I’d like to know.

Aside from the chipset itself, what strikes me as odd are the prices of the motherboards, which are rumored to be over $400 at launch for a “mainstream” model, like the ASUS P6T or Gigabyte’s GA-X58-DS4. So, if the chipset is cheaper, then why are the boards so expensive? Fudzilla goes on to state that the reason lies with the required components, like an eight-layer PCB, which is apparently around 25% more expensive than a six-layer one.

Could it be that Intel priced X58 accordingly to offset the prices of the motherboards? If X58 is indeed less expensive, I’d be curious to see if the tradition would continue onto future mainstream models.

We don’t know exactly what the price of a motherboard PCB is, but we’re sure it’s a fair share of the cost of a motherboard. However, we have a feeling that in this case, a lot of the motherboard manufacturers want to cash in on the new platform and they also want to sell their X58 products at a higher price than their X48 models, since it’s the latest and greatest model in their line-up.

Seagate to Begin SSD Production in 2009

No matter how much Seagate, Western Digital and all the other large hard drive manufacturers try to tell you otherwise, SSD is not just a fad, and it’s not going away. Though the longevity of Solid-State Disks is still in question, we’re unlikely to find a serious caveat at this point in the game, since SSDs have been around for a while. If there was a serious underlying issue, I’d assume we would have seen it by now.

Seagate, who in the past has shunned the idea of SSD (despite claiming to own patents earlier this year) is planning to launch their own line-up beginning in 2009. The first will be available at the enterprise level, with consumer SSDs to come later. At first, it might seem strange that they are waiting that long, when companies far smaller have been offering them for some time, but the market is still unquestionably small, so they aren’t exactly missing out on a huge opportunity here.

According to the Nanotech blog at CNET, while Seagate manufactures the core technology in its hard drives itself, it will be a different story with SSDs, as it will purchase memory chips from others rather than develop them in-house. That makes sense, as its experience in that area, as far as I recall, is minimal. Once their drives are launched, though, they’re going to have one heck of a battle. The market is still small, but the competition is fierce, and I’d love to see the guy who will compete with Intel’s masterful controller, as seen in its X25-M.

Of course, it won’t be a cakewalk for Seagate. There is plenty of competition already. Intel has started shipping SSDs for both enterprise and consumer markets. And Samsung is a leading player in the consumer market–its drives are used by Dell and Apple–and it is now stepping up efforts to snag corporate customers. On Thursday, Samsung announced that its SSDs have been selected, after extensive testing, for use in the Hewlett-Packard ProLiant blade servers.

Xbox 360 Blu-ray Rumors Heat Back Up

It’s been a clean five months since we last heard rumors about a prospective Blu-ray add-on for the Xbox 360, but that can of worms has just been reopened with the help of X-bit labs. They claim that Microsoft is preparing an external Blu-ray drive, although the site is currently unsure when we’ll see an actual launch. They believe that since the format isn’t overly popular, Microsoft may decide to wait until January’s CES to build up a huge buzz.

Toshiba might not want anything to do with Blu-ray, understandably so, but its joint venture with Samsung (Toshiba Samsung Storage Technology) is the company contracted to build these drives, which Microsoft is hoping to launch at the $100 – $150 price-point in order to compete with the PlayStation 3, which of course supports the format without the need for an external drive.

This move would make sense for Microsoft, and the posting at X-bit seems quite sure that it’s going to happen. It may result in a clunky setup, but it would be nice to have a choice between paying for a high-def format or not having one. I’m incredibly happy that the PlayStation 3 came with Blu-ray, but it’s obvious that not everyone takes advantage of the movie capabilities. The choice should be made easier when the next-gen consoles arrive, as long as another format isn’t going to be introduced.

The main reason why Microsoft is unenthusiastic regarding Blu-ray is mandatory support of BD-Java interactive technology and Sony’s reluctance to adopt competing tech called HDi that was developed by Microsoft. Even though Microsoft managed to push its VC-1 codec onto both Blu-ray and HD DVD markets, the company’s negative attitude towards Java prevented it from supporting the former standard in general. As a result, the company used to sell external HD DVD drive for Xbox 360.

What Does Linus Look for in a Linux Distro?

What does Linux founder Linus Torvalds look for in a distro? It might surprise you, but the guy who is one of the lead maintainers of the Linux kernel, and who could tackle almost any issue that would leave the rest of us stumped… prefers a refined and simple distro. It’s not hard to see why, though. Lead kernel developer or not, few people enjoy battling with an OS to get it to work.

His distro of choice is Fedora 9, which in a way doesn’t surprise me. Fedora, though I don’t use it, seems to be one of the most stable distros on the market, and its package manager makes sure of it by not automatically allowing unsafe or untested applications or services to get on your machine. Ubuntu looks to be his leading second choice, and again, it’s easy to understand why.

Linus goes on to say that the sheer choice we have with distros is good, and that competition improves things all-around. I still can’t help but think a few simple standards would improve things even further, though, like a dependency manager that would actually work on all distros. That, to me, would be a good start to making sure that all applications install on all distros the same way. But there’s probably a reason I’m not a developer!

And when it comes to distributions, ease of installation has actually been one of my main issues – I’m a technical person, but I have a very specific area of interest, and I don’t want to fight the rest. So the only distributions I have actively avoided are the ones that are known to be “overly technical” – like the ones that encourage you to compile your own programs etc.

Xbox 360 Dashboard Overhaul On the Way

When Microsoft launched the Xbox 360 in late 2005, it was one heck of a product. Everything from the design, the software, the games, the high-def-ness… was fantastic. But that was three years ago, and now upgrades need to be made to keep things fresh and to make the experience an even better one. With that in mind, a complete Dashboard overhaul is coming our way, and a CNET blog takes a thorough look at all of what’s new.

The most noticeable change with the new Dashboard is the design. Gone are the days of blades and in with pages that you can flip through, similar to looking at different albums on an iPhone. Interaction with other players is another huge feature, with the addition of “channels”, a feature that will allow you to hook up and chat with people even if you are playing a different game.

Following the insane popularity of Miis on the Wii, another important feature will be the addition of highly customizable avatars. You’ll be able to build a version of yourself and change everything, from clothes to eyes to face. There’s a lot more on the way, and I can’t wait to see it. I’ve never really been a fan of the typical UI that shipped with the console, but these changes look fantastic.

To begin with, players can choose an avatar from a large selection that run onto the screen looking like a group of school kids, each dressed differently and sporting diverse hair styles and skin color. Don’t like that group? Move on to the next one. And on and on, until you find one you like. Each group is presented randomly, and within the group, individual avatars seem to try to get your attention by jumping up and down and raising their hand.

Nehalem CPUs Shouldn’t Die Fast with High VDIMM

Late last week, we linked to a story that talked about supposed memory voltage limits with the Nehalem processor and its X58 chipset counterpart. This was all spawned by a warning that ASUS is sticking on its P6T Deluxe motherboards, clearly stating that it’s not recommended to go beyond 1.65V on the memory, as it can potentially damage your CPU.

Well, in talking to ASUS about the issue, I’ve found out that they’ve had no issue running that exact board in their labs with much higher VDIMM than the warning label states… as high as 2.1V, whereas the supposed limit is 1.65V. Still, the question remains: how long will the board or the CPU last at a higher voltage over time?

The recommended limit could become a problem in certain regards, although it’s too early to speculate. We do know that Intel itself is going to recommend a lower memory voltage for use with the CPU, but what exactly would a high memory voltage do to the CPU? Nehalem does feature cutting-edge technology, after all. Surely the memory voltage plane is separate from the one that powers the CPU cores?

We’ll find out more soon enough, though. Rumored launch dates for Nehalem are targeted at around mid-November, so we should learn the true limits that the architecture imposes well before then.

First GPU Review Under New Methodology Posted

Last month, I posted that we were working on upgrading all of our performance-related methodologies, and I’m happy to report that we pretty much finished towards the end of September. It took almost two straight weeks, but we feel the results are going to be worth it. We took the opportunity to re-evaluate everything, from how we go about installing Windows (and tweaking it for reliability), which applications to use for testing, finding the best test for each, et cetera.

Content that received these overhauls includes processors (we’ll kick off the new methodology there with the Core i7 965), graphics cards, motherboards and storage. The first review to be posted with the revamp is of Palit’s Radeon HD 4870 Sonic Dual Edition, which you might have noticed at the top of the page. I’ll give a quick rundown of what exactly we’ve changed or improved.

The first and most notable change is the addition of “best playable” settings at the bottom of each game page. Separate from our regular graphs showing a GPU’s scalability at a given resolution, these “best playable” tables are a simple way to see what we felt was the best playable setting for that particular card. In the case of the card mentioned above and Crysis Warhead, we found 2560×1600 on the Mainstream profile to be the best setting possible, delivering close to 35 FPS. Even if you don’t run that resolution, it shows the capabilities of the GPU, and you can be confident that if the card handles that, performance will only improve at any lower resolution.
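The reasoning behind “it will only improve at lower resolutions” comes down mostly to pixel count: each frame at a lower resolution has fewer pixels to render. As a rough worked example (it ignores CPU limits and other fixed overheads, so frame rates won’t scale exactly in proportion), compare 1920×1200 against 2560×1600:

$$
\frac{1920 \times 1200}{2560 \times 1600} = \frac{2{,}304{,}000}{4{,}096{,}000} = 0.5625
$$

So a 1920×1200 frame carries a little over half the pixels of a 2560×1600 frame.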

Other new features include temperature reports and revised power consumption and overclocking tests. Minor updates this time around include replacing the original Crysis with Crysis Warhead, and S.T.A.L.K.E.R. with S.T.A.L.K.E.R.: Clear Sky – both very GPU-intensive games in every sense of the word. We of course welcome thoughts on our new methodology, so if you have any questions or comments, please take a look at the review and post in our related thread, which doesn’t require registration.

You can expect more good things in the weeks to come, so definitely stay tuned.

Let’s face it. The overclock available with a flick of the switch on this Sonic card is small, and the differences in real-world tests are minimal. What makes this card so great is the general performance we’ve come to appreciate, along with the features and cooler. It’s all made better by the fact that despite the additions, the card is still priced less than most of the competition.

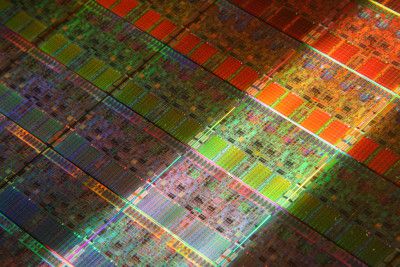

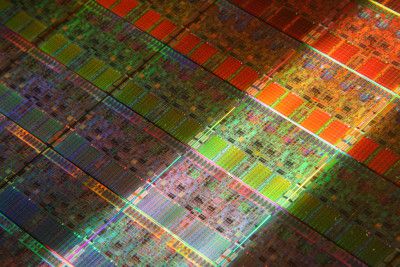

AMD Splits In Two, Planning New Fab in 2009

We first learned about AMD’s “Asset Smart” plan a few months ago, but today the company officially announced that it would finally be splitting into two entities. To help shed some of its debt and also make production easier, AMD has teamed up with an Abu Dhabi company, the Advanced Technology Investment Company (ATIC), and together they will create “The Foundry Company” – a temporary name.

Once the new company is established, it will have a total value of $5B, which consists of AMD’s current Fab, ATIC’s contribution of $1.4B and another $1.2B of debt assumed by ‘The Foundry Company’. The only two owners of the new business will be AMD and ATIC, although the latter will own a slightly larger stake at 55.6%. Both companies have equal voting rights, however.

This move is no doubt going to help AMD improve both their business and products over time, and the plans laid out so far look good. Construction will begin in early 2009 in Saratoga County, NY for the first 300mm Fab producing silicon on a 32nm process. This promises to create thousands of jobs in upstate New York and will become the only independently-managed semiconductor manufacturing foundry in the US.

When all is said and done, AMD will be part of two companies based in five different locations. Its main HQ will remain in Sunnyvale, California, and its current Fab in Dresden, Germany, will stay as well. The offices in East Fishkill, New York and Austin, Texas will stay put, with the addition being the upcoming Fab in upstate New York, resulting in two NY-based locations.

In related news, Mubadala, also based in Abu Dhabi, has bought $314 million worth of newly-issued shares and as a result, has bumped its stake in the company to 19.3%, from 8.1%. The stark increase for so little money is due to the fact that the company is now split in two, so the value of each part has dropped. They say today is a landmark day for AMD, though, and it’s hard to disagree. Their debt is being remedied and a new Fab is right around the corner (at 32nm, no less), which should help push AMD towards becoming much more serious competition for Intel. The coming year is going to be an interesting one.

On Oct. 7, 2008, AMD and the Advanced Technology Investment Company announced the intention to create a new global enterprise, The Foundry Company, to address the growing global demand for independent, leading-edge semiconductor manufacturing. There is a strong shift to foundries occurring – particularly to foundries with the capacity to produce devices using leading-edge process technologies.

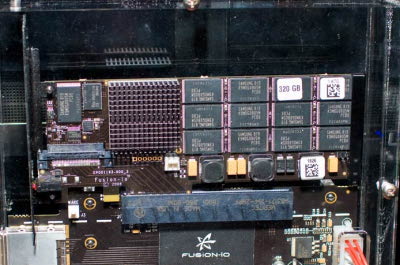

Fusion-io’s PCI-E SSD to Put Current SSDs to Shame

It’s no secret that SSDs are the new black. They’re incredibly fast, lightweight and power-efficient, and they have no moving parts. The only problem is that they are expensive, but prices are dropping like bricks from the sky, and it should only be another year before they become mainstream. But what if you are looking for even faster performance than what current products offer? Do you RAID a couple of SSDs together? That’s one option, but a company called Fusion-io has another solution – a PCI-Express-based SSD.

You read that right. It’s an SSD that plugs straight into an available PCI-E slot and promises to take full advantage of the insane bandwidth there. What kind of numbers are we dealing with? While the fastest SSDs on the market promise around 200MB/s of read bandwidth, Fusion-io’s solution can deliver upwards of 500MB/s, topping out at 700MB/s. Latency is also improved, resulting in about 50,000 I/O operations per second (IOPS).

The company was showing its goods at the E For All conference that happened over the weekend, and to prove to gamers just how useful the device is in gaming, they loaded up thirteen instances of World of Warcraft… in 36 seconds. They also claim that streaming 1,000 DVDs at one time is possible, which means it can handle tons of requests without issue. The 80GB model is set to retail for under $1,000 at launch, so it’s definitely not going to be for everyone. It’s still great to see that such speeds are possible, though. All I know is… I want one, now.

Credit: TG Daily

The rep then copied the file to the Fusion-io drive. He also moved both the Photoshop and Windows swap files from the hard drive to the SSD. To make sure there wasn’t any funny business, the machine was rebooted to flush out any latent memory in system cache. This time the file took 28 seconds [750 megabyte file] to load, a full seven-fold improvement from before. With this drive, you could conceivably open, edit and save this document in the same time that it takes for a hard drive-equipped computer to just open the file.

Five of the Best Free Media Encoders

Need to encode video, but don’t know where to look? I’ve been in this situation a hundred times, and for whatever reason, I’ve never settled on a particular app, nor do I know what’s “best”. The fact that I primarily use Linux doesn’t help either. The biggest problem is that there seem to be hundreds of these similar apps around, and plenty of deceptive sites out there trick users into downloading their lackluster, malware-infested applications.

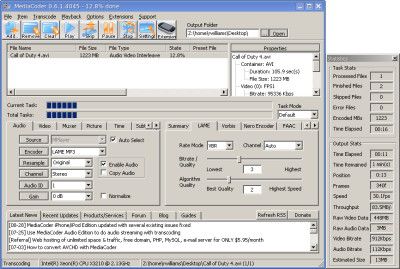

Well, Lifehacker has again taken the hassle out of finding the best media encoder out there by giving us a choice of five, and all of them look great. Super, a Windows-based encoder, looks to be the most visually pleasing and robust, although it all depends on what you are looking for. MediaCoder in particular promises to run on Windows, OS X and Linux, although the latter two require Wine. I gave it a quick go, and I have to say, I’m extremely impressed.

Since I’m a PSP owner, I like to encode videos for it from time to time, and I’ve never been able to find a Linux-based application that could encode the file reliably. Even though the file looked good and was in the PSP format, it would always throw an error once loaded on the actual device. I tested MediaCoder with a DVD .VOB file, a .WMA and also a FRAPS RAW .AVI file, and it successfully encoded them all. It’s fast too, taking just one minute for the RAW AVI test, which used a 1.1GB 720p source file.

MediaCoder is a batch media transcoder that converts video and audio from and to most popular formats through a powerful graphical interface. Like Super, MediaCoder is a front-end for a number of command line media tools, allowing you to get as simple or complex as you want with your conversions. MediaCoder is available on all platforms, but it’s best supported on Windows.

Real Networks Forced to Halt RealDVD Sales

Last month, we posted about Real Networks’ latest product release, “RealDVD”. As you can judge by the name, it’s an application that handles your DVDs, both in ripping and playback. The difference between RealDVD and the countless DVD rippers found en masse online was that Real’s product is designed to be ‘legal’. It tried to accomplish this by leaving the CSS encryption intact when ripping to the PC, but that alone doesn’t seem to be enough for the good ole MPAA.

Apparently, some shareholders (who know nothing about the product) complained, and now the MPAA has taken the issue to court. As a result, Real Networks was forced to temporarily suspend distribution of the application, at least until the judge reviews all of the information. If this doesn’t show what kind of people are hanging out at the MPAA, nothing will. While there are countless “illegal” DVD rippers available online, they go after the only one that tries to be legal.

It’s of course obvious that suing Real will do nothing to thwart DVD ripping, as it takes little to no effort to find a replacement. RealDVD targeted law-abiding consumers and made its restrictions obvious to anyone who read up on the product: a ripped DVD could only be played through RealDVD, while other DVD rippers strip the CSS encryption so the copy can be used anywhere. Yet the MPAA doesn’t seem too interested in those.

A temporary restraining order has been issued against Real while the judge takes time to review all of the available documents. A decision will apparently be made on Tuesday as to whether the suspension will remain in place and for how long, a Real representative told NewTeeVee. Given the tenacity of the movie studios when it comes to copyright infringement, however, the MPAA isn’t likely to let the restraining order be lifted without a fight.

VoodooPC Confirms Integration into HP

Towards the middle of last month, we posted about a rumor that VoodooPC as we knew it was shutting down, but found out later that not all of our facts were spot on. Still, it quickly became evident that changes were being made that would drastically affect how the company operates.

Our friends at Gizmodo sat down with Voodoo’s co-founder Rahul Sood to find out what’s really happening, and unearthed everything we needed to know. Rahul reaffirms that Voodoo is not disappearing, but changes are being made for the sake of becoming more effective. In short, Voodoo will become more tightly intertwined with HP so that each company can easily draw on the other’s resources. Voodoo is also shifting a lot of its manufacturing to Asia, which will cut down its workforce in Calgary, Alberta, but improve output.

Rahul brings up some interesting points in the chat, such as the fact that only 25% of their customer base are gamers. That’s not surprising when you think about it: there’s clearly a much larger market for those seeking out a “luxury” PC, something that the Dells of the world cannot offer. After all, that’s what most boutiques are for: cool chassis, great design and looks that make your friends drool. Regardless, it’s good to see that Voodoo is alive and well, and hopefully the merger into HP will result in a slew of cool products in the near future.

Rahul also addressed the concerns that some gamers had that Voodoo had abandoned its core audience. He surprised us with an interesting statistic: 25% of the Voodoo customer base are gamers, he says. The other 75% are “fortunate people who love the style and the fact that our products are so different.” On the matter of the sleek new Envy not being a gaming PC at all, he mentions that it’s not the first time, and that the hot-selling 12-inch Envy had integrated graphics too.

Going Higher than 1.65V DIMM Voltage on X58 Can Damage CPU?

We’ve talked a bit about memory on Nehalem, but it appears there are a few big caveats regarding overclocking we didn’t know about. At the Intel Developer Forum in August, we learned that the sky’s the limit when it comes to memory overclocking, and I was told directly that DDR3-2000 kits had been tested in Intel’s own labs on Nehalem. The question, though, is what about the voltage required to do so?

According to pictures of the ASUS P6T Deluxe found on a Chinese forum, going beyond 1.65V on the memory is dangerous, but it’s difficult at this point to understand why. With Nehalem, the memory controller is built into the CPU itself, unlike current Intel motherboards where it’s built into the chipset. So it’s easy to assume that the voltage is shared by both the CPU and memory, and that’s why going too high could damage not the memory, but the CPU.

But the issue is that the CPU itself would in no way require 1.50V to 1.65V to operate, so the DIMM slots would have to be on a different power plane. There must be another limiting factor, though, because if ASUS puts a warning like this on its motherboard, there has to be a good reason for it. It’s going to be interesting to see how things play out, because as it stands, most performance kits want far more than 1.65V.

We can go back to our findings about the new three-channel memory feature though, because as we saw at IDF, the bandwidth on even a DDR3-1066 kit was huge… potentially negating the need for a high-end kit at all.

Mushkin’s VP Steffen Eisenstein said that they are re-designing specifically for the X58/Core i7 combo, and that their kit should be out some time next month… in Tri-Channel packs. Other memory vendors across the globe are claiming their kits are still undergoing certification with motherboard vendors so they still don’t know whether they qualify or not.

AMD’s 45nm Opterons to Launch this Month?

We found out just a month ago that AMD would be launching their 45nm desktop Quad-Cores in January (it could be even earlier), and today we learn that on the Opteron side of things, we’ll be seeing product a lot sooner – as early as this month.

The first processors to hit the market will be those with an “average power consumption” of around 75W, and they will range from 2.3GHz to 2.7GHz, compared with 1.7GHz to 2.5GHz for the 65nm products. Of the nine processors to be launched sometime this month, five will be of the 2-way variety while the other four will be for 8-way configurations.

In January, AMD will also follow up with its Shanghai launch, with initial processors having a power consumption of around 55W. Clock speeds don’t seem to be known right now, but it does appear that higher-performance models will also become available at the same time, or shortly after. Those will feature a TDP of 105W, still much less than current offerings. Hopefully they will be able to breathe new life into AMD’s lineup.

AMD quite apparently has pulled in the launch of its Shanghai processor at least one full month, which probably has been motivated by Intel’s strong showing in the 2-way and 8-way segments. If Shanghai in fact is as good as AMD claims it is, then Shanghai really is what Barcelona should have been.

Nintendo Announces Upgraded DS, Features Online Store and Camera

It’s been rumored for quite a while that a brand-new Nintendo DS revision was en route, and sure enough, that rumor has now been confirmed. The ‘DSi’ becomes the third revision of the popular handheld console, with the first being released in late 2004 and the second in summer 2006 – proof that Nintendo is one of the very few companies that is able to release new versions of the same product and actually have consumers who already own the original consider a purchase.

Though the overall design is the same as the DS Lite, the DSi brings not one, but two 0.3 megapixel cameras to the mix, one on the front and one on the back. The device is not meant to be an all-in-one gadget, so the lackluster photo resolution is understandable. The DS screens themselves display less than 0.3 megapixels, so for face shots for in-game use (or even potential video chats), the camera is perfectly acceptable.

Both the screen size and the thickness have been improved, although both seem to be pretty minor modifications. Aside from the physical characteristics, Nintendo will also launch a ‘DSi Store’ which will allow you to download games and applications via your WiFi connection. Sounds great, but it’s too bad that it’s not being back-ported to the current DS versions. Oh, and if there’s a downside to the DSi, it might be the omission of the GBA cartridge port. If you don’t play older Game Boy Advance games, though, this should be no big deal.

The other highly touted feature of the Swiss-army knife-like DS is music functionality. The DS now features both an SD slot and an undisclosed amount of internal storage to help support its newly acquired music playback. One unique feature of the unit’s MP3 player is the ability to slow down or speed up tracks via user control. Says Mr. Iwata on this new feature, “We want our customers to individualize their Nintendo DSi.”

Apple to Close iTunes if Royalty Increase Passes?

Some rather shocking headlines have been making the rounds this morning, courtesy of, who else, Apple. By this point, I’m sure many are aware that the record industry is chock-full of the greediest people on earth, and as a result, consumers and retailers have been affected. Music costs more, it’s harder to find in a retail store and the bands who deserve the cash don’t see it. Bad situation all around.

Well, the execs still aren’t happy and are looking to increase their share of the pie from digital music sales, which would affect all of the digital music stores available now, including iTunes. The proposed increase is so steep that Apple has stated it would consider closing down iTunes if it goes through, which is a rather extreme response in itself.

If the rate increase does go through, it will come to a point where iTunes isn’t nearly as profitable as it is now, and in some cases, Apple might even take a loss. Even so, it seems highly unlikely that Apple would ever close the online music store, and I really can’t see it happening even if this increase proceeds. They’d lose not only iTunes revenue, but iPod sales as well.

It’s going to be interesting to see how it plays out regardless. If Apple doesn’t close iTunes, they could very well increase their rates, despite claiming that they refuse to. It’s a business, after all. But then, who wants to pay $1.15 per song rather than the $0.99 we’ve been used to? It’s a bad situation.

The National Music Publishers’ Association, however, argues that the royalty increase will help everyone and will not hurt online music growth. David Israelite, president of the NMPA, the organization which is requesting the increase, stated, “I think we established a case for an increase in the royalties. Apple may want to sell songs cheaply to sell iPods. We don’t make a penny on the sale of an iPod.”

Google Brings Back Their 2001 Index

As hard as it might be to believe today, the Internet hasn’t been around all that long (~13 years in full force), and Google has been around even less, only since 1998. To celebrate its tenth anniversary, the company has been offering many cool features on the site that both bring you back to 1998 and let you follow a timeline back up to the present.

Their latest feature addition is a search engine that uses their earliest index snapshot, which they say was captured in January of 2001. Anything you search for here will essentially put you back in that year. If a site didn’t exist then, it won’t be found; it’s that simple. It’s a cool way to go back and search for yourself, though, to see if you even existed online by your name or online moniker, or your company for that matter. There are many possibilities.

I’m not sure how complete this index is, though. I searched for a few sites that were launched long before 2001 (such as Shacknews) and it didn’t give a single result related to the site. Still, if you do happen to get results, you are sure to be captivated. If you see a result that intrigues you, you can click on the Wayback link beside it rather than the link itself to see if there is a copy of the page or website in the web archive.

It’s a very cool tool, and I know I lost about fifteen minutes with it. It’s incredible to see just how much the ‘net has changed in only seven years. Google has gone from spidering 1.3 billion pages at that point in time to now spidering well over 1 trillion, and they are adding billions more each day. Try to wrap your head around that one!