Exploring MAGIX Vegas Pro 16 Encode & Playback Performance

Vegas Pro is a popular video editor that’s been around long enough to develop a faithful fanbase – one that has asked us on multiple occasions to take a better look at performance. We’re taking care of that here, encompassing CPU encode, GPU encode, and playback performance.

Aug 25 Update: Our look at Vegas Pro 17 performance can be found here.

The list of GPU-accelerated software we test with at Techgage used to be modest, but over the past few years, it’s grown quite a lot. Some of the additions have come by way of reader suggestion, including MAGIX’s Vegas. In fact, we’d wager that we’ve received more requests for Vegas performance than any other software suite.

We first tested with Vegas a little over a year ago, tackling straightforward AVC and HEVC encodes in our workstation GPU content. Community feedback told us those original tests were too limited and left a lot of information on the table. That was true, and it's why we revamped our test scripts, added new encode tests, and introduced playback tests.

We’re not going to claim that the performance testing seen on this page is the best it can be, but it’s improving over time, as we become more familiar with the software. We benchmark with about 25 pieces of software, and we’re masters of very few. If you have suggestions on further improving our Vegas tests, please leave a comment.

Considerations & Potential Performance Roadblocks

April 30 Addendum: One day after this article was published, MAGIX released the fifth major update to Vegas Pro 16, build 424. We conducted follow-up testing with this version, and have found that the issues detailed here continue to persist.

Work on this article began in December, following publication of our Radeon Pro WX 8200 review and some good feedback from the community. We overhauled our test scripts and got back to work on performance testing, only to run into an immediate roadblock.

From the get-go, AMD Radeon GPUs had no problems in our testing, but the story was different for NVIDIA. Instead of 60 FPS playback with a LUT FX filter, we saw 1~5 FPS. Something seemed broken, so we reached out to MAGIX and NVIDIA to figure out what was going on. We have talked with both companies multiple times since December, but nothing has improved on the performance front.

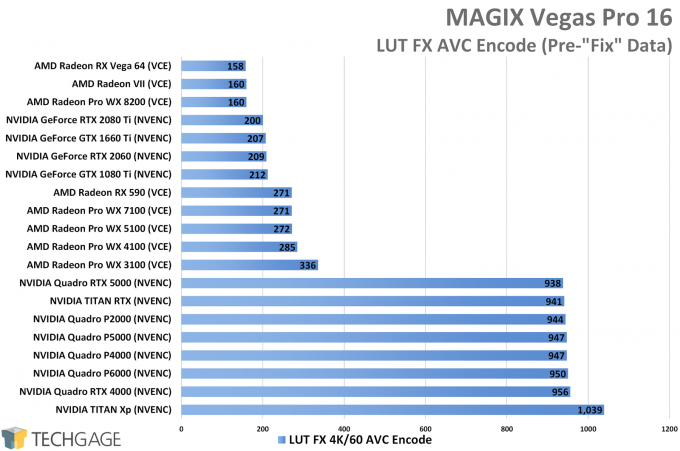

After putting twenty graphics cards through the gauntlet of revised tests, we learned more about the issue. We hate to show a “broken” performance graph, but it's important to highlight because the issue persists.

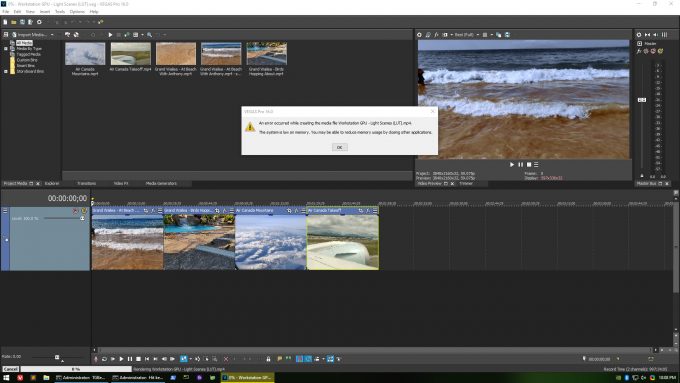

Every GeForce card performed its LUT jobs without issue, but all of the Quadros, and both TITANs, delivered performance that makes a $400 GPU look no less effective than a $2500 one. In a similar vein, the same LUT encode would occasionally fail with an error stating that the GPU was out of memory, even though that wasn't the case (system memory was also okay).

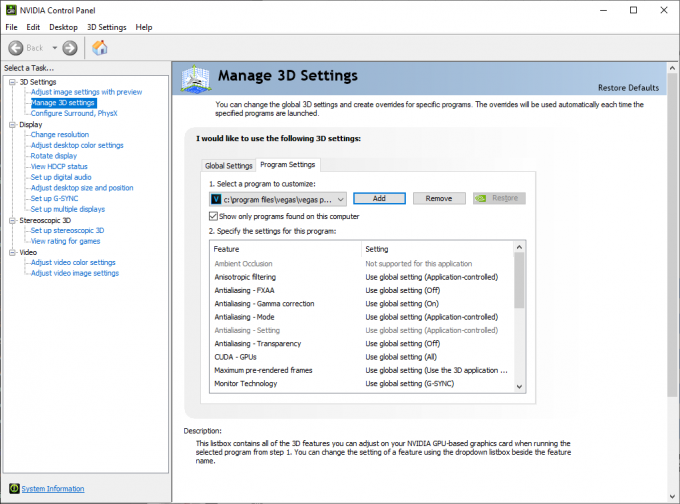

Despite the oddities in testing, all twenty of the GPUs had their data compiled. Graphs were created, and writing was under way… until we discovered a fix that's only kind of a fix. In the NVIDIA Control Panel, at least on Quadro and TITAN, a profile for Vegas is not automatically created. Normally, this shouldn't matter, but if you are experiencing these same issues and manually add the application (as seen in the image below), the problem goes away. Again, kind of.

After simply adding the Vegas profile to the NVIDIA Control Panel, the issue of poor LUT performance disappeared. Encode times of 900 seconds dropped to 300, and 5 FPS playback changed to 60 FPS. The kicker? You can keep every single setting in this new profile as “Use global setting”. You just need the profile to exist.

Given this bizarre behavior, it might seem sensible for NVIDIA to add a Vegas profile automatically, and it probably should… but adding this profile comes with a caveat. After adding it, our LUT and playback performance improved, but every other encode suffered a small drop in performance. This was consistent across multiple GPUs. An encode that originally took 2m 30s, for example, would take 2m 36s.
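To put the profile trade-off in numbers, here is a small sketch using only the figures quoted above: the LUT encode dropping from 900 to 300 seconds, LUT playback rising from 5 to 60 FPS, and another encode slowing from 2m 30s to 2m 36s. The helper function names are ours, purely for illustration.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert an m:ss encode time to whole seconds."""
    return minutes * 60 + seconds


def speedup(before: float, after: float) -> float:
    """How many times faster the 'after' run is, for times (lower is better)."""
    return before / after


def percent_slower(before: float, after: float) -> float:
    """Percentage increase in encode time (positive means slower)."""
    return (after - before) / before * 100


# Figures quoted in the article, before and after adding the Vegas profile.
lut_gain = speedup(900, 300)        # LUT encode time, in seconds
playback_gain = 60 / 5              # FPS: higher is better, so after / before
other_cost = percent_slower(to_seconds(2, 30), to_seconds(2, 36))

print(f"LUT encode: {lut_gain:.1f}x faster")
print(f"LUT playback: {playback_gain:.1f}x the frame rate")
print(f"Other encodes: {other_cost:.1f}% slower")
```

In other words, the profile trades a roughly 4% penalty on ordinary encodes for a threefold faster LUT encode and twelve times the LUT playback frame rate.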

We're not well-versed enough in MAGIX's or NVIDIA's software designs to comment much further, but we believe the ultimate fix has to come by way of an NVIDIA driver update. The fact that performance is decent on GeForce but abysmal on Quadro and TITAN by default suggests a basic fix shouldn't be that difficult.

Tests & Hardware

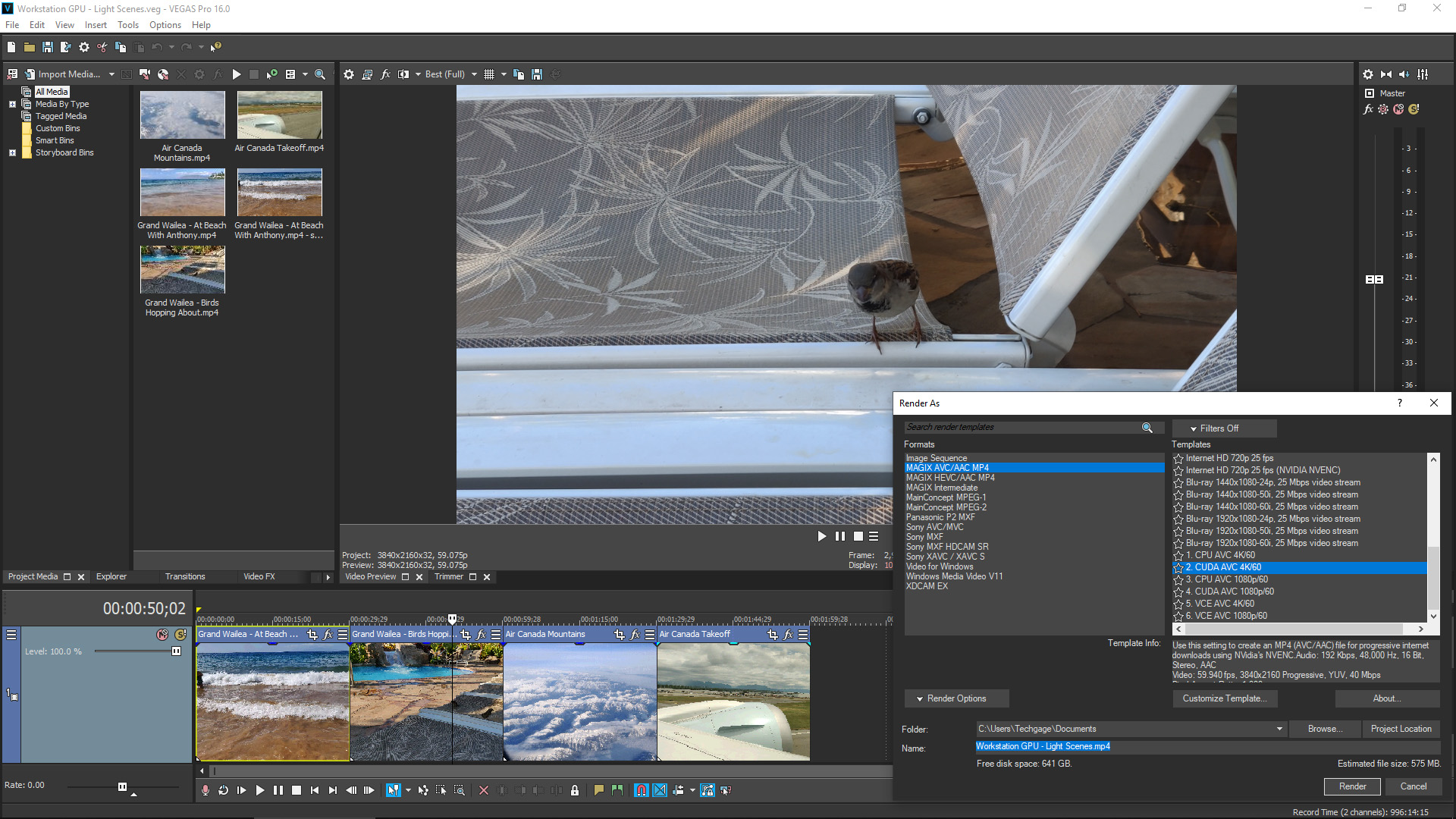

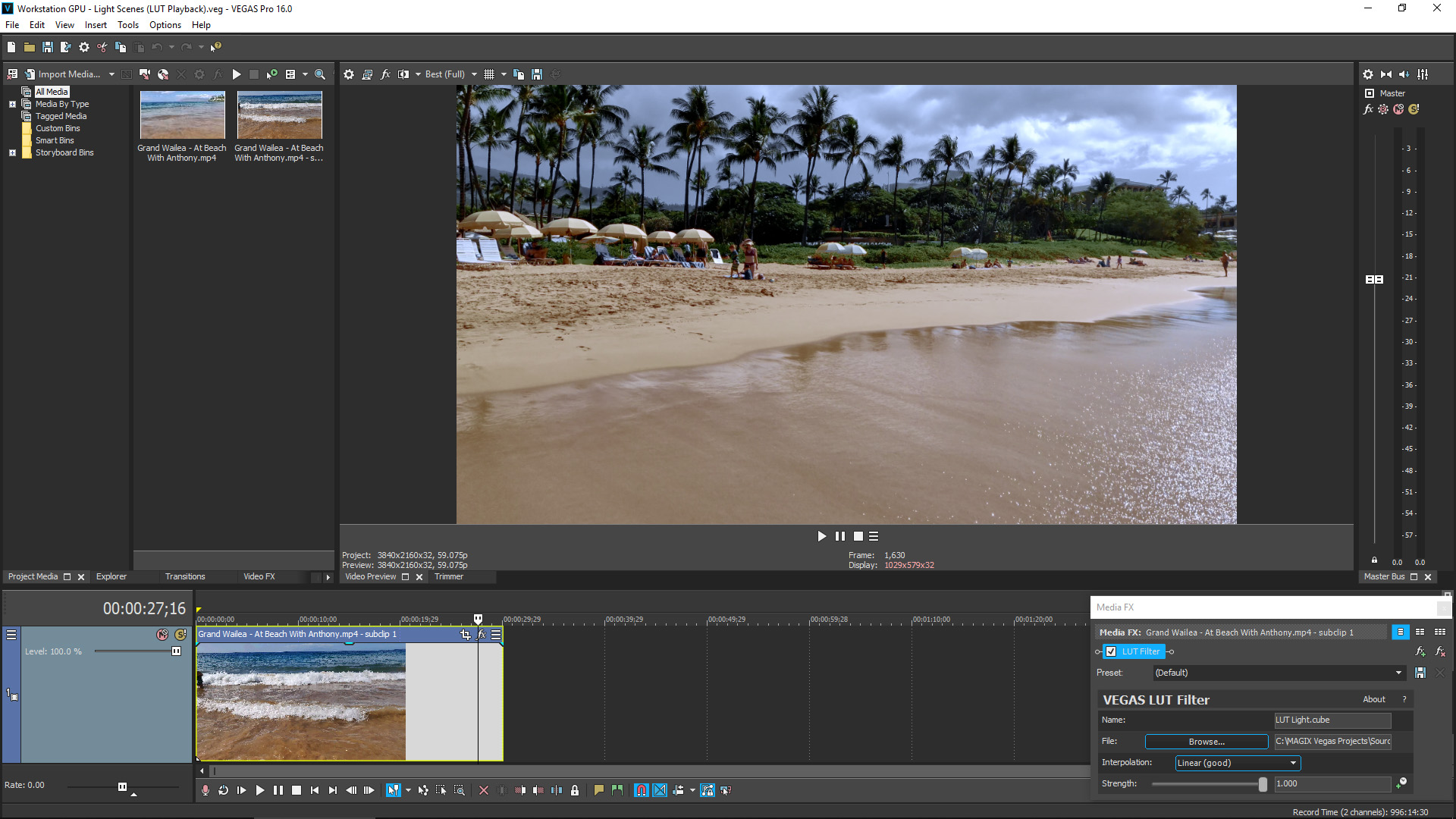

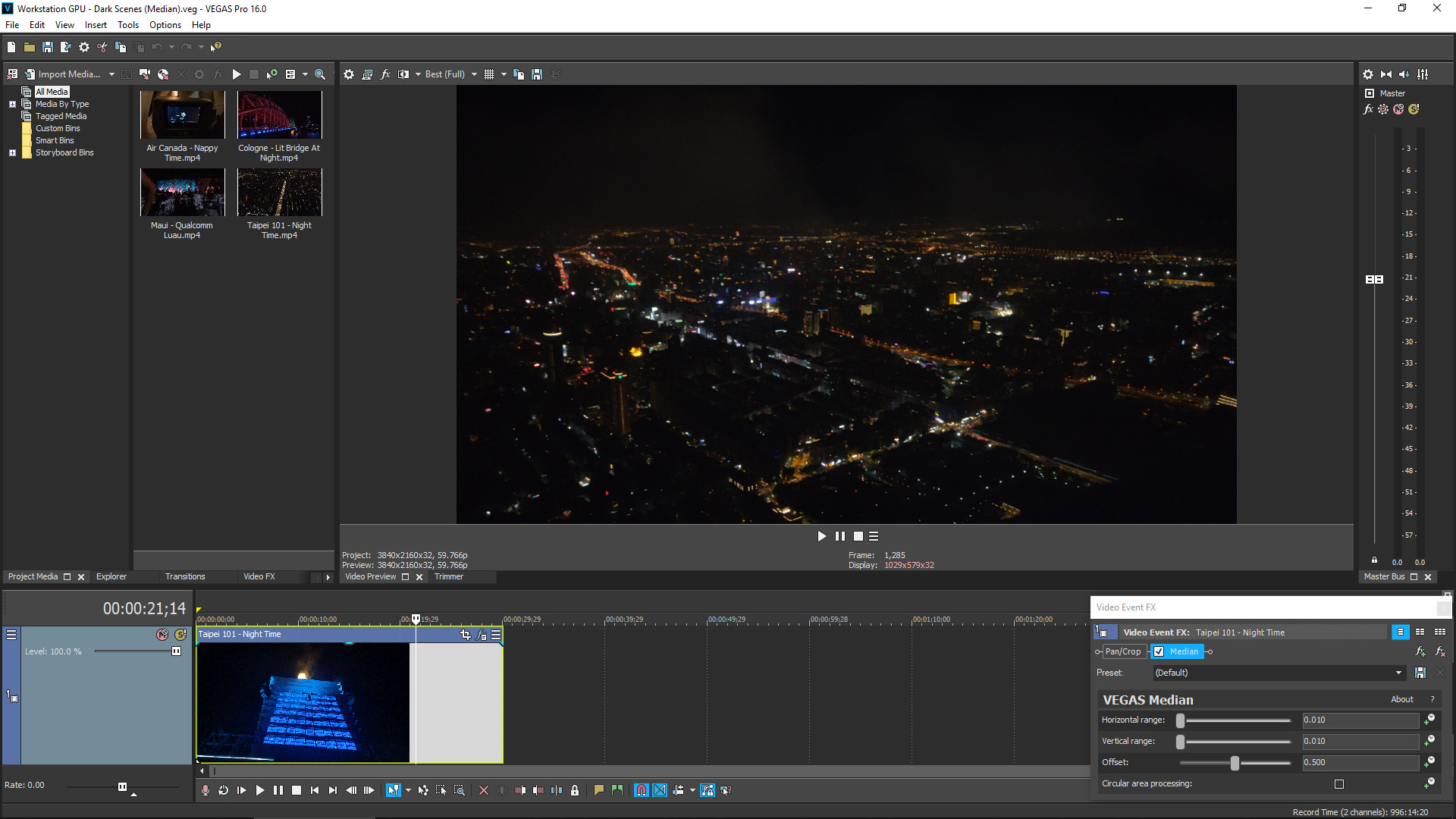

We use a few different projects for both our encode and playback tests. To gauge basic AVC and HEVC encode performance, an identical scene is encoded to both formats on all GPUs. To make the GPU do real work, two more projects are used: one with a LUT filter, and another with Median. The Median project is also used for our CPU encode tests.

Here are the basic specs of our test rigs and chosen processors:

Because this article focuses on performance of a workstation application, all relevant CPUs and GPUs (that we have) are included for testing here. Some gaming GPUs were tossed in for expanded testing, with specific reasons for each. The GTX 1660 Ti was included as it's the top-end non-RTX GeForce based on Turing, while the RTX 2060 was included because it's the lowest-end RTX GeForce based on Turing. The RTX 2080 Ti, meanwhile, gives us a look at top-end performance with an NVIDIA gaming GPU. On the AMD side, included gaming competitors are the Vega-based Radeon VII and RX Vega 64, and the Polaris-based RX 590. To complete the picture, both the Pascal-based TITAN Xp and Turing-based TITAN RTX from NVIDIA are also included.

GPU Encode Performance

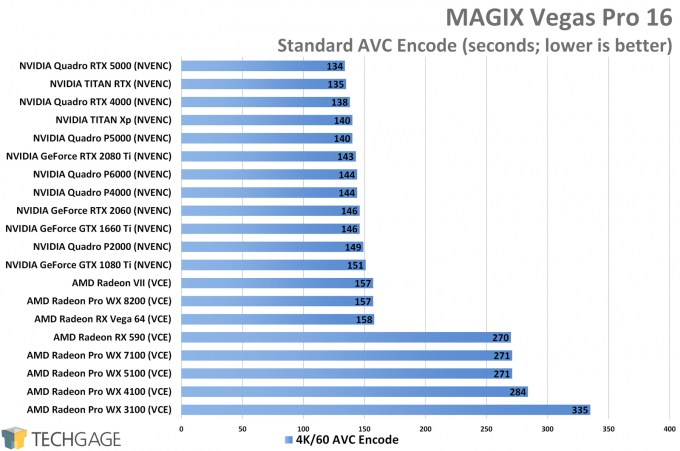

In our first set of results, AMD's older Polaris-based graphics cards, including the WX 3100 ~ WX 7100 and RX 590, clearly fall behind. Any more modern GPU delivers good performance, with the top end of NVIDIA's range leading the pack.

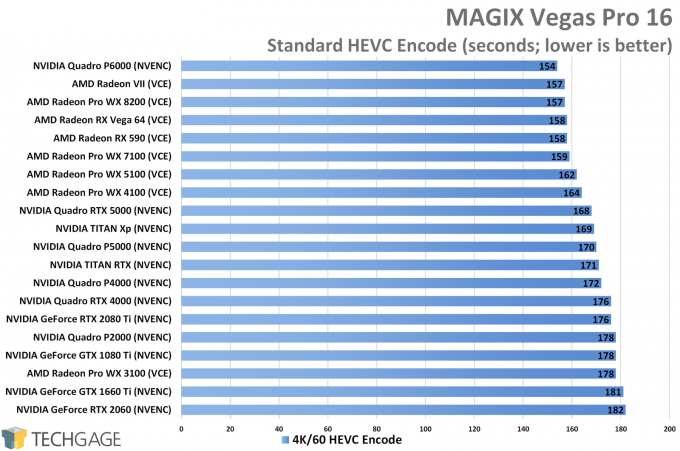

HEVC fares quite a bit differently:

With HEVC, there's little difference across the entire range of GPUs, and the low-end WX series cards that struggled with AVC find better positions in this chart. Ultimately, every GPU takes a bit longer to encode HEVC than AVC (aside from those aforementioned Radeons).

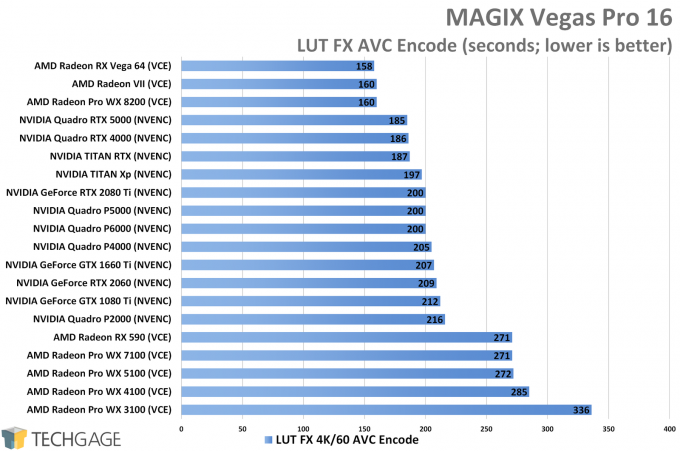

With every GPU working properly, the LUT FX encode chart looks a lot different from the skewed one shown earlier in the article. AMD has some clear strengths in Vegas, with the Vega-based cards sitting comfortably at the top. NVIDIA's bigger Quadros and TITANs sit below them, while the AMD Polaris cards once again find themselves held back by their dated VCE encoder.

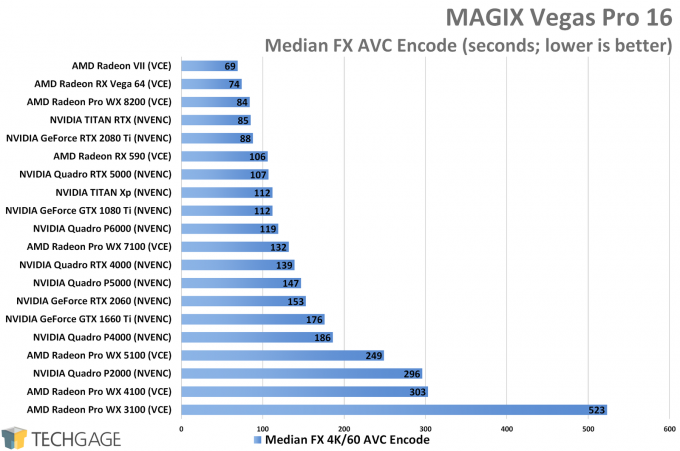

The LUT filter is admittedly not that demanding in the grand scheme, but the Median FX sure is. With the chart below, we can see great scaling from top to bottom, with AMD once again shining bright at the top:

AMD’s performance overall is really impressive, save for maybe the Polaris cards that struggle in multiple tests. They struggle less in this Median test overall though. Essentially, the more graphics horsepower you have, the better, but AMD’s Radeons have a definite advantage.

CPU Encode Performance

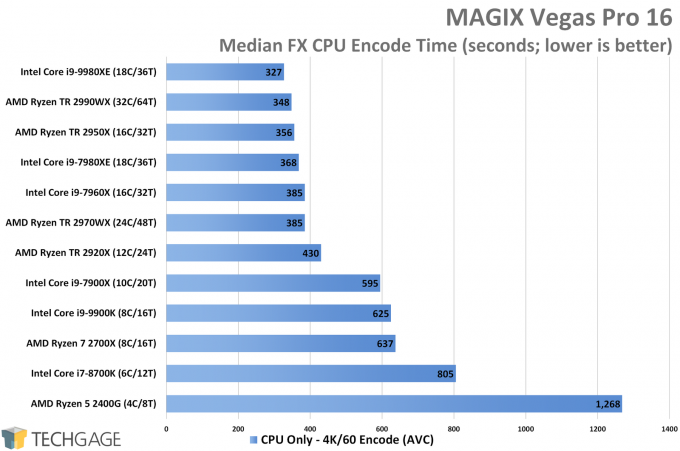

Graphics cards scale pretty well in Vegas, but so do CPUs. There's a big difference in the results above between the lowly 4-core Ryzen and the 18- and 32-core top dogs. That's great, but largely expected, given how long encoders have been optimized for CPUs. It's important to note some anomalies, though, mostly with the top AMD Ryzen Threadripper chips. These are known to behave oddly with some video encoders, thanks in part to less-than-ideal thread management in Windows; we talk more about this in our Coreprio article. The 24-core 2970WX should place higher than the 16-core Intel chip in a test like this, and likewise, the 32-core 2990WX should dominate an Intel 18-core, but neither is true here.

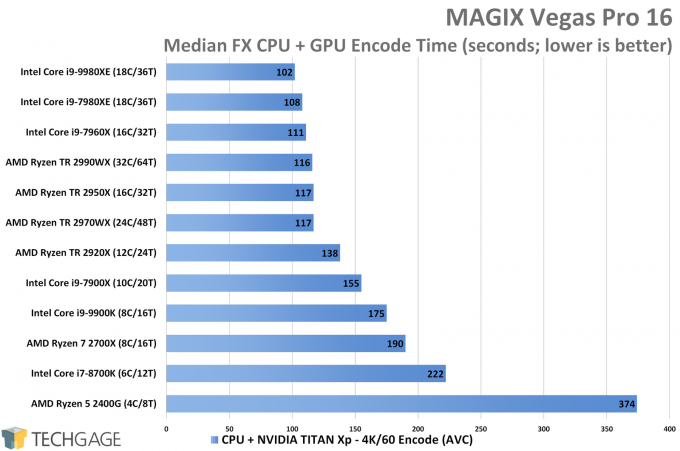

As we saw in our Blender 2.80 performance deep-dive last month, some software favors the GPU so heavily that you can get by with a smaller CPU if your GPU is powerful. With Vegas, GPU encoding is clearly faster overall, but the CPU is still heavily involved, so the better your CPU and GPU, the faster your encodes will complete. Case in point:

For its price, the 2400G is a great processor, but for those who want to get serious work done, it'd clearly pay off to opt for a higher-end option. Even the 8-core 2700X delivers massive gains over the 4-core 2400G. Only at the very top of the core-count range does moving up a model or two bring minimal gains.

Playback Performance

To capture playback performance, we used the same Median project as above and a slightly edited LUT project, set the viewport to Best (Full), and recorded 30-second stints of playback. For each run, the full 30 seconds was allowed to play through twice, and the third pass was recorded. This helps smooth out hiccups that can really throw off tabulated test results.
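The capture procedure above (two full warm-up passes, then recording the third) can be sketched roughly as follows. This is not Techgage's actual tooling: it assumes per-frame display timestamps are available from some capture tool, and the function names, the timestamp format, and the choice of one-second windows for "minimum FPS" are all our assumptions.

```python
from typing import List, Sequence, Tuple

PASS_LENGTH_S = 30   # each playback stint is 30 seconds
WARMUP_PASSES = 2    # the first two passes are discarded; the third is recorded


def recorded_pass(timestamps: Sequence[float]) -> List[float]:
    """Keep only frames shown during the recorded (third) pass, rebased to 0 s.

    `timestamps` are hypothetical per-frame display times in seconds,
    counted from the start of the first pass.
    """
    start = WARMUP_PASSES * PASS_LENGTH_S
    end = start + PASS_LENGTH_S
    return [t - start for t in timestamps if start <= t < end]


def min_and_avg_fps(frames: Sequence[float]) -> Tuple[int, float]:
    """Return (minimum FPS over any whole second, average FPS over the pass)."""
    per_second = [0] * PASS_LENGTH_S
    for t in frames:
        per_second[int(t)] += 1
    return min(per_second), len(frames) / PASS_LENGTH_S


# Synthetic example: three passes at a steady 60 FPS, except that every
# other frame is dropped during one second (70 s to 71 s) of the third pass.
all_frames = [i / 60 for i in range(3 * PASS_LENGTH_S * 60)]
stalled = [t for i, t in enumerate(all_frames) if not (70 <= t < 71 and i % 2)]
print(min_and_avg_fps(recorded_pass(stalled)))  # (30, 59.0)
```

A single stalled second barely moves the average (59 FPS) but halves the minimum, which is why both figures are reported.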

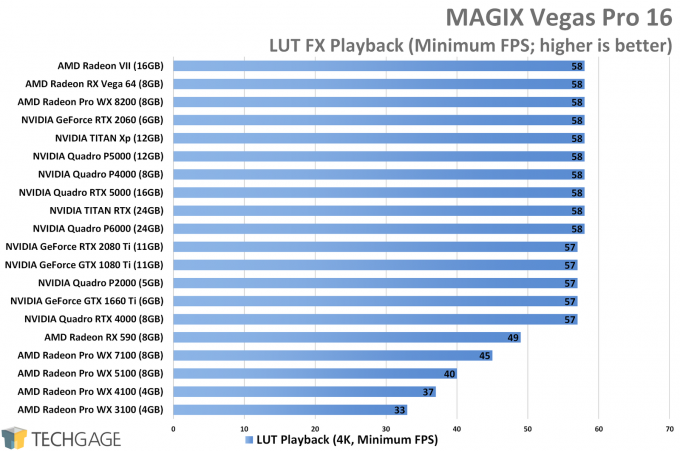

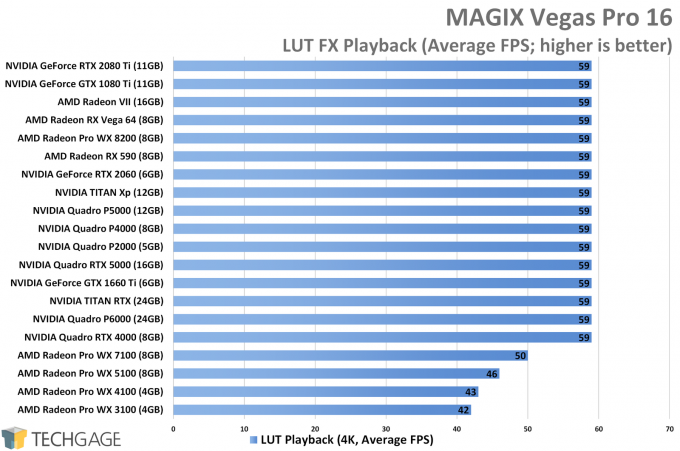

LUT, as mentioned above, isn't as demanding as Median, but you'll still want a capable enough GPU to play back at 4K/60 without issue. Below are the minimum and average FPS results from these runs:

The majority of the GPUs here delivered suitable performance, while AMD's Polaris cards fell back yet again, albeit less drastically than they might have. Things change quite a bit with the much heavier Median test:

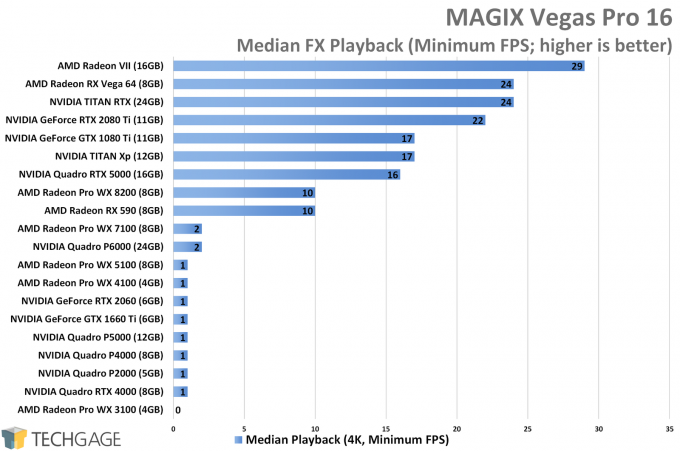

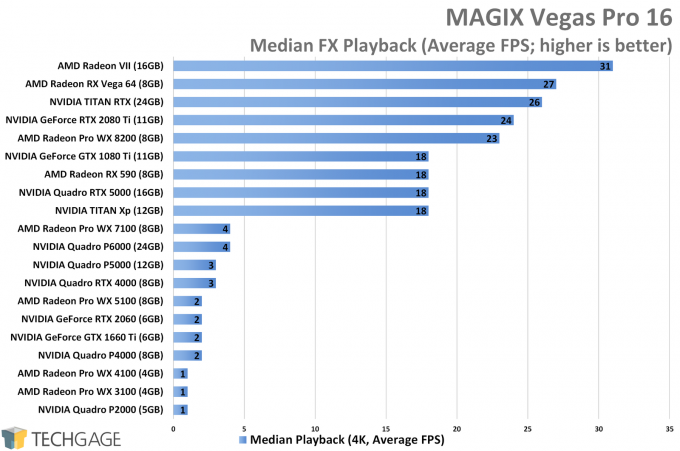

These graphs require a bit of explanation. Remember the lengthy “Considerations & Potential Performance Roadblocks” section from earlier? The oddities continued into the Median playback test, where many GPUs simply could not play back the scene without serious struggle. If every GPU behaved the same way, the test could easily be declared too grueling, but many GPUs handle it fine.

Unlike the poor LUT performance seen earlier in the article, which affected only Quadro and TITAN (with default NVIDIA Control Panel settings), this test tripped up some of the GeForces that fared fine there. That “0” for the WX 3100 isn't a typo, either. Median in particular is a strenuous effect, and will require a beefy GPU for reliable playback at good quality.

There's another kicker here. Even with some of the GPUs that placed at the bottom of these charts, we sometimes saw better playback performance during testing, but it never persisted for long. There's clearly more optimization to be done somewhere. It honestly doesn't make sense that an RX 590 scores better in this test than much faster GPUs. Look at the RTX 5000's 18 FPS and the RTX 4000's 3 FPS to see how sporadic performance can be. We're dealing with video here, but Median FX is in effect graphics rendering, and a quick look at one of our related articles on that shows how things can scale.

Final Thoughts

A lot of benchmarking went into this article, so it's great that a lot of interesting information came from it. Conversely, it's unfortunate that so much of the NVIDIA performance in our GPU testing was hit-or-miss, with GeForces largely performing better than Quadro and TITAN, and only a half-fix available to improve things. We're hoping this situation won't last long, or there will be an awful LUT of headache out there.

Given the current state of NVIDIA GPUs in Vegas, Radeon is the go-to choice for the software. Even against the NVIDIA GPUs that appeared to behave fine from the get-go, AMD's top chips led the pack. These Radeon strengths carry over from version 15. We didn't encounter issues with NVIDIA GPUs in that version, but that could simply be because we only ran basic tests at the time. Once filters got involved, the situation changed a lot for NVIDIA.

It can be argued that CPUs are technically better for encoding, but GPU-based encoding has come a long way over the years, so it's increasingly unlikely that you'll notice a quality difference between the two. You will notice the difference in encode times, however. In our testing, adding a GPU gave a 300% speed-up in most comparisons.
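Percentage speed-ups can be read more than one way: "300% faster" strictly means four times the throughput, while some readers take it to mean three times as fast. The sketch below shows how each convention is computed. The encode times are hypothetical, not results from this article, and the function names are ours.

```python
def times_faster(cpu_s: float, gpu_s: float) -> float:
    """Speed-up as a ratio: 3.0 means the GPU encode is three times as fast."""
    return cpu_s / gpu_s


def percent_faster(cpu_s: float, gpu_s: float) -> float:
    """Speed-up as a percentage gain in throughput: 200% corresponds to 3x."""
    return (cpu_s / gpu_s - 1) * 100


def percent_time_saved(cpu_s: float, gpu_s: float) -> float:
    """Share of the original encode time eliminated by the GPU."""
    return (1 - gpu_s / cpu_s) * 100


# Hypothetical times for one project: CPU-only vs GPU-assisted encode.
cpu_time, gpu_time = 300.0, 100.0
print(times_faster(cpu_time, gpu_time))                  # 3.0
print(percent_faster(cpu_time, gpu_time))                # 200.0
print(round(percent_time_saved(cpu_time, gpu_time), 1))  # 66.7
```

Under the strict convention, a 300% speed-up would correspond to an encode finishing in a quarter of the time.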

There are some workstation scenarios where a GPU may be the only thing that matters, but that’s not the case with Vegas. While the GPU is extremely important, the better your CPU, the better the overall encode performance, so both a good GPU and CPU will deliver a great experience.

We're never done testing, so we'll definitely revisit Vegas down the road. Hopefully right after NVIDIA performance and stability improve…
