Intel Launches 4th-gen Xeon Scalable & Xeon Max Processors

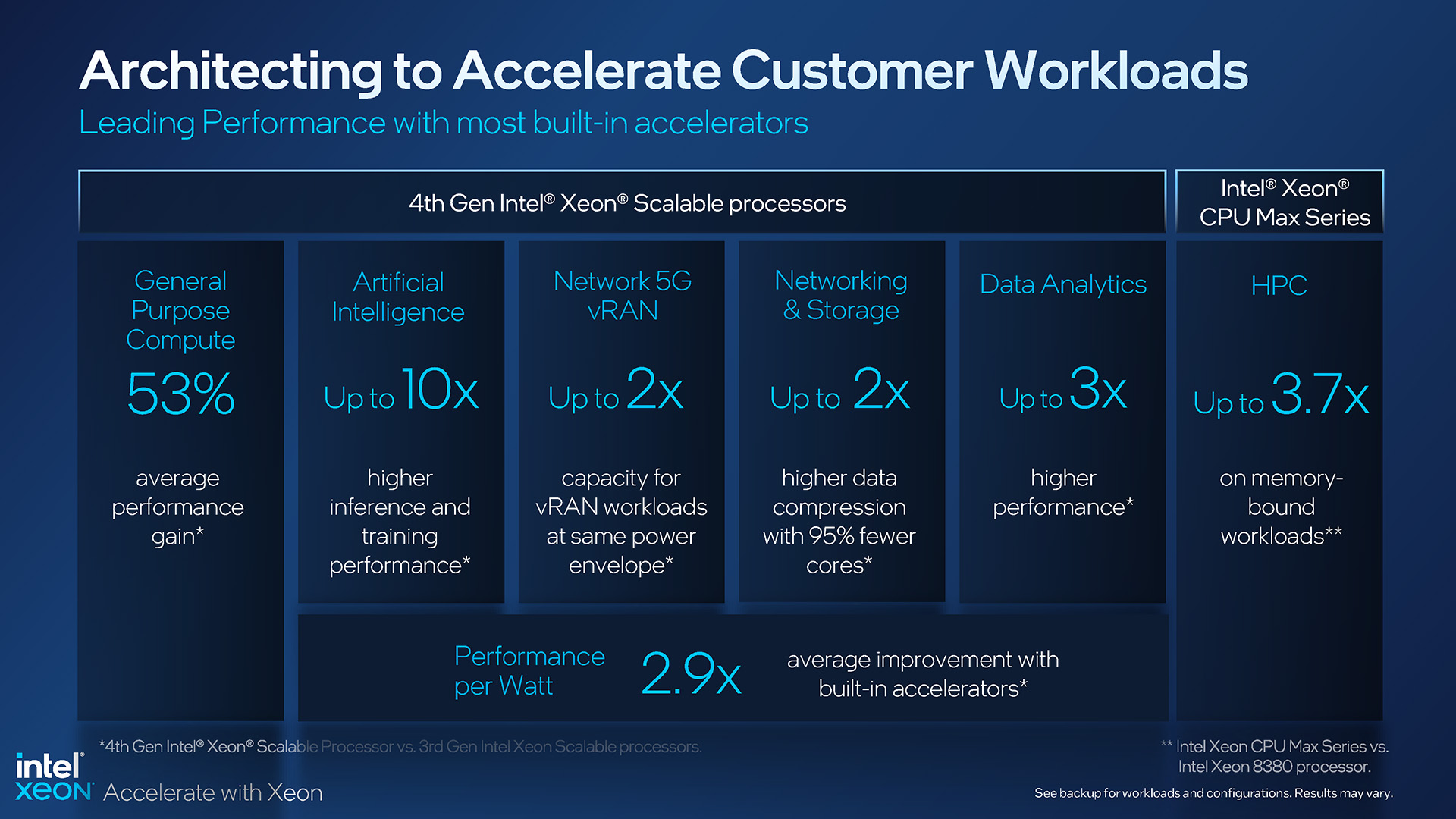

Today’s the day when Intel launches its long-anticipated 4th-gen Xeon Scalable and Xeon Max-series processors, and the company has given us plenty to talk about. We have CPUs with built-in HBM2e memory, many accelerators scattered across the entire range, and notably, AMX AI acceleration built into every one.

It seems to have taken its good ol’ time getting here, but today, Intel is officially launching its 4th-gen Xeon lineup built around the Sapphire Rapids platform. This lineup includes not just the typical Scalable SKUs we’ve seen from the previous few generations, but also a brand-new “Max” series, which Intel first talked about a handful of months ago.

With Sapphire Rapids, Intel says that the latest Xeon is its most sustainable yet, and that it has more built-in accelerators than any other server processor. Both claims are believable, but it’s the latter that means business: Intel has packed lots of acceleration goodness into these CPUs, and some of it will require software updates before the potential gains can be fully realized.

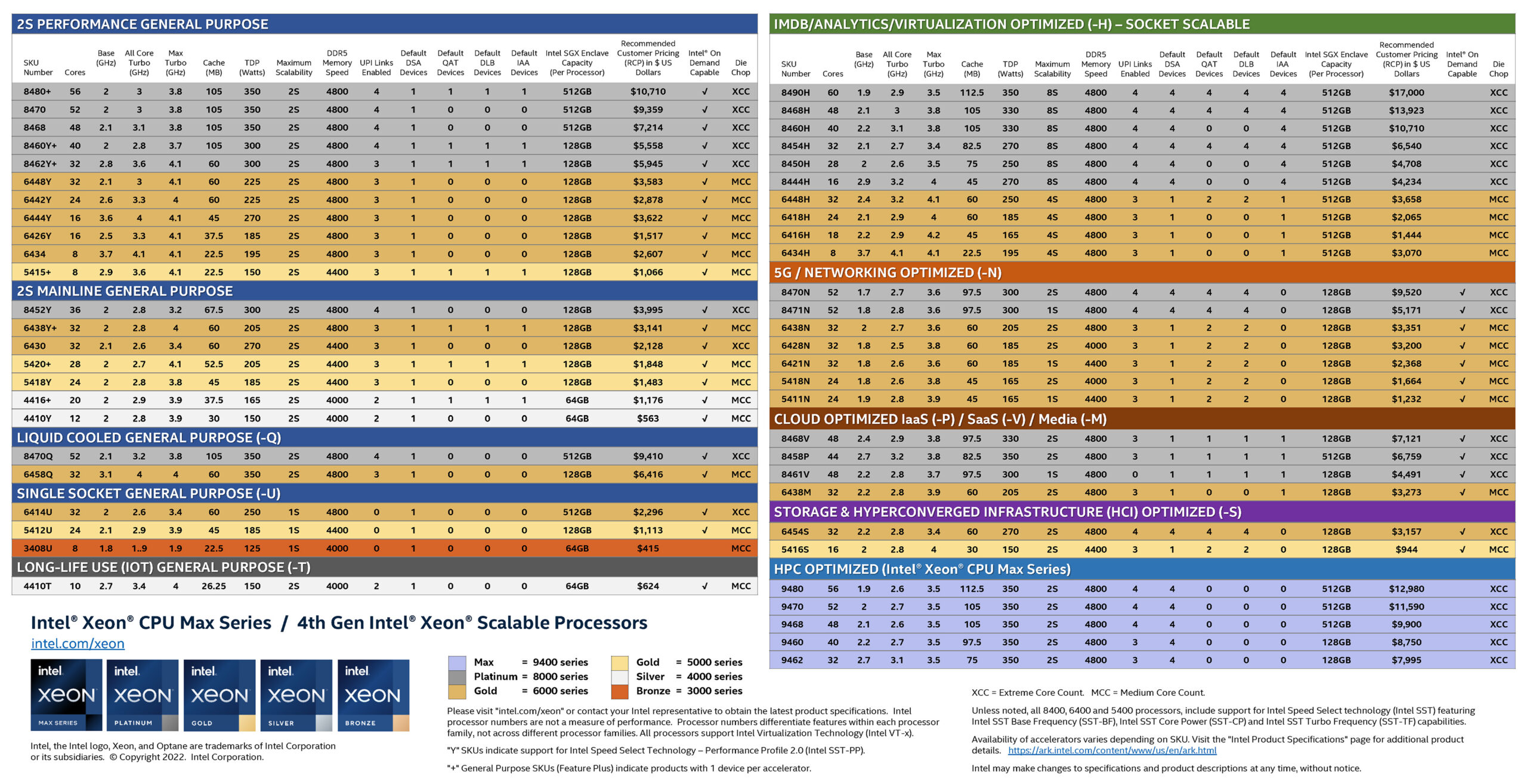

The sheer number of processor SKUs that Intel offers almost reaches meme-level humor. It’s the same on desktop as it is on server. Who doesn’t love this updated, colorful server SKU sheet?

With so many Xeon options, it’s clear that Intel is trying to capture as much of the server market as it can with its latest offerings. While there may be some areas where additional models could slide in, this still feels like an exhaustive list.

While Intel offers Xeons for many different purposes, none of them are developed without a good reason. You may look at the two liquid-cooled options and wonder who actually uses them, but Intel’s put a lot of effort into making those CPUs stand out, working with industry partners to develop better methods for liquid-cooling server chips, and eking as much performance out of them as possible.

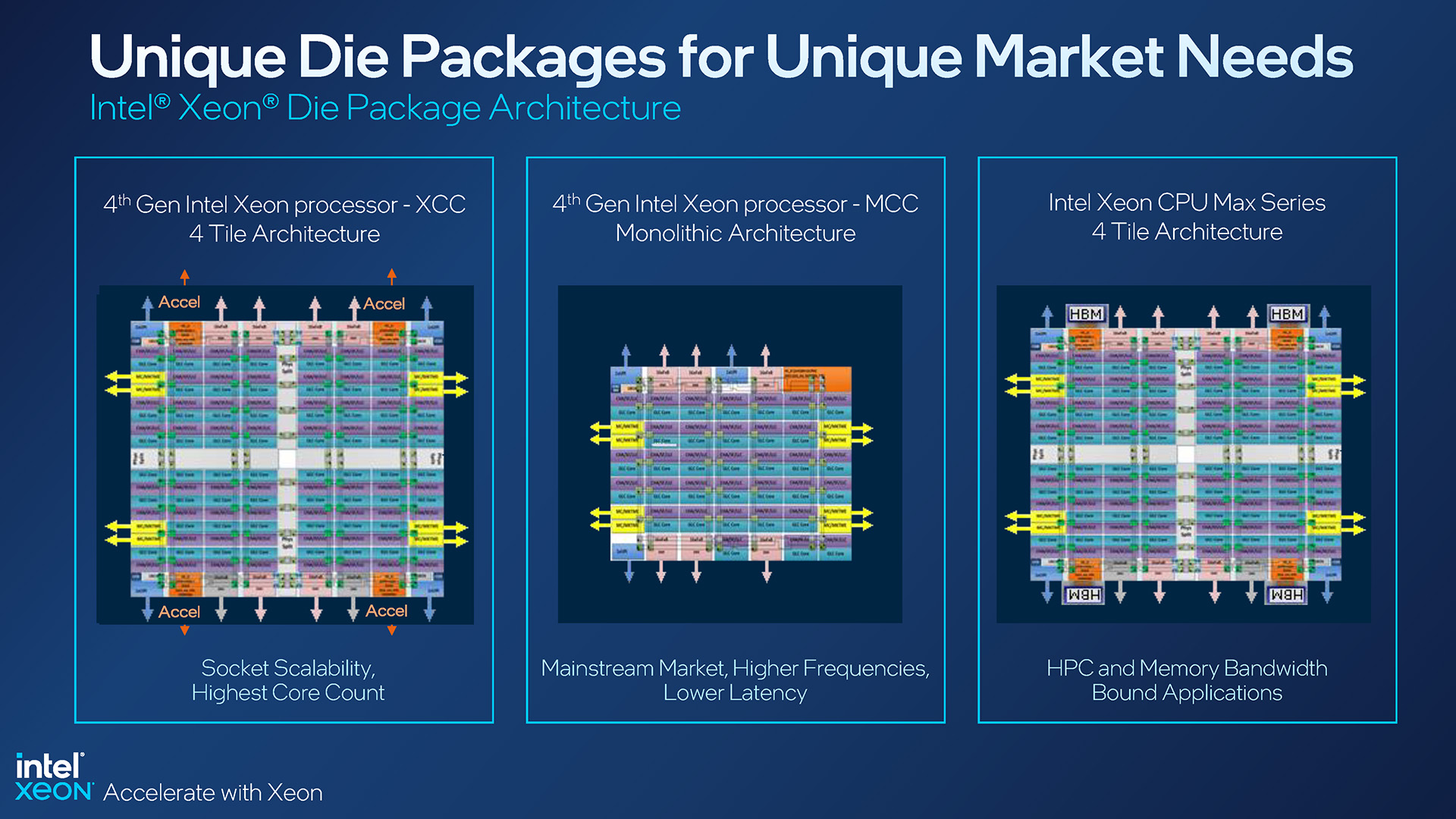

We’ve seen SKU sheets like the one above ever since the original Xeon Scalable launch, but this one has many extra details worth mentioning. To start, the core configuration is listed as either XCC or MCC. XCC represents CPUs with multiple “tiles” inside, while MCC is a more traditional, monolithic design.

An XCC design is what helps Intel reach such high core counts at the top-end, with this generation of Xeon peaking at 60 cores with the 8490H. The current-gen MCC designs max out at 32 cores, such as with the virtualization-optimized 6448H and 2S performance-bound 6448Y.

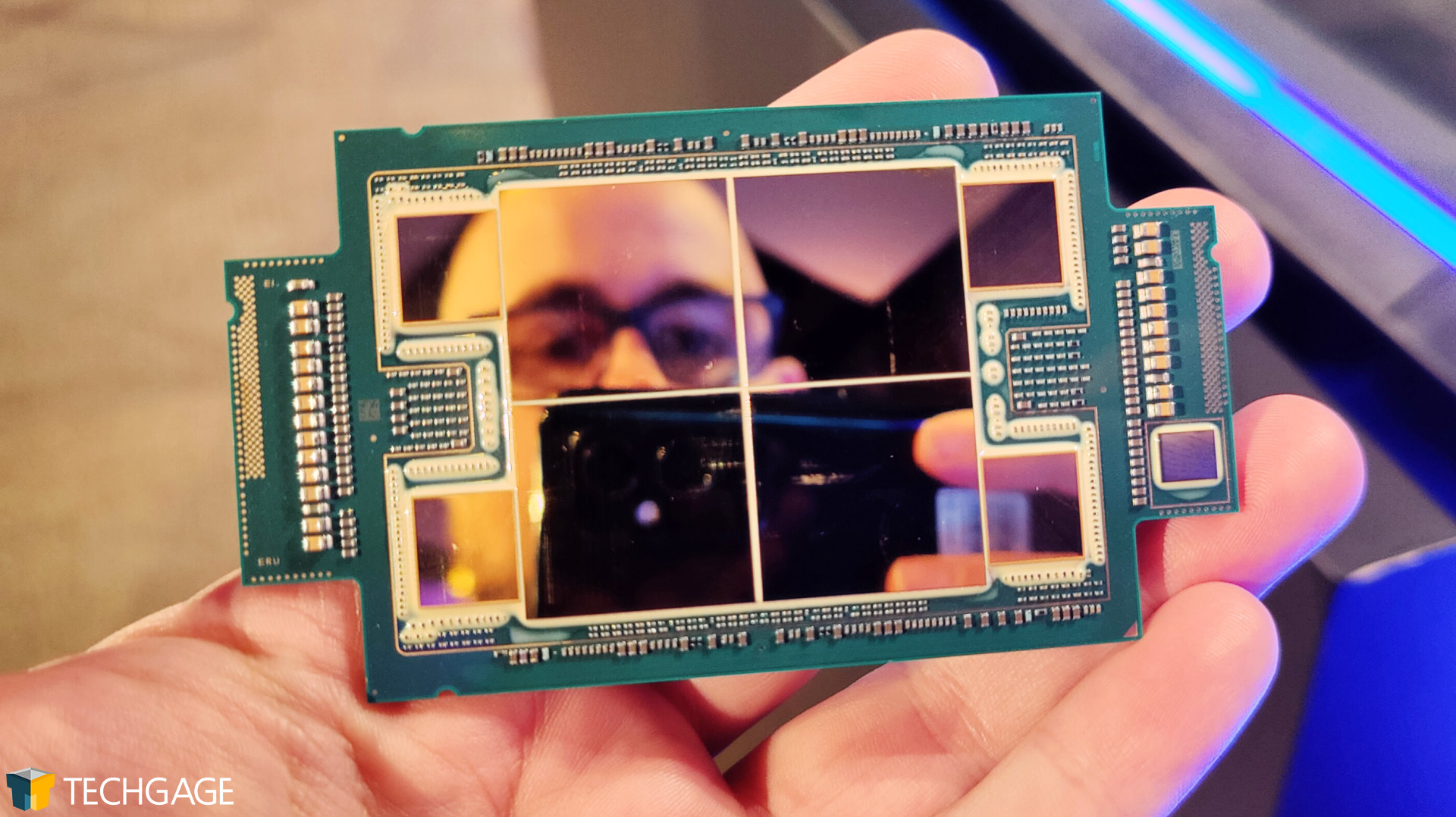

The Xeon Max series represents the third design, one that’s effectively the same as XCC, but with HBM2e memory placed around the dies. We went “hands-on” with a Max CPU last month.

While Xeon Max CPUs take up just five spots in the overall lineup, they’re arguably the most interesting of the bunch. These CPUs are equipped with enough built-in memory to allow a server to run with no additional memory at all. Picture that – a server booting up and operating with absolutely none of its memory slots occupied. That’s deliciously geeky.

Xeon Max CPUs place HBM2e chips next to each tile, ultimately delivering a total capacity of 64GB, or about 1.14GB per core on the 56-core flagship. Intel is targeting these Max SKUs squarely at the HPC market, and while many HPC workloads will require lots of memory, many others can fit within 64GB easily. Of course, you can supplement the HBM2e with DDR5-4800 should 64GB not be sufficient.
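
The per-core figure is simple division, and it grows as core count drops. The sketch below assumes the 64GB comes from four 16GB HBM2e stacks (one per tile) and that the 1.14GB/core number refers to a 56-core part (64 ÷ 56 ≈ 1.14). The other core counts are purely illustrative, and `hbm_per_core` is a hypothetical helper, not an Intel tool.

```python
# Hypothetical helper: per-core share of on-package HBM on a Xeon Max CPU.
HBM_STACKS = 4      # assumption: one HBM2e stack per compute tile
GB_PER_STACK = 16   # assumption: 16GB per stack
TOTAL_HBM_GB = HBM_STACKS * GB_PER_STACK  # 64GB, matching Intel's stated total


def hbm_per_core(total_gb: float, cores: int) -> float:
    """Return GB of HBM per core, assuming capacity is split evenly across cores."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return total_gb / cores


if __name__ == "__main__":
    # 56 cores corresponds to the flagship; 48 and 32 are illustrative only.
    for cores in (56, 48, 32):
        print(f"{cores} cores: {hbm_per_core(TOTAL_HBM_GB, cores):.2f} GB/core")
```

In other words, lower-core-count parts get more HBM per core, not less, which is worth keeping in mind when sizing per-thread working sets.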

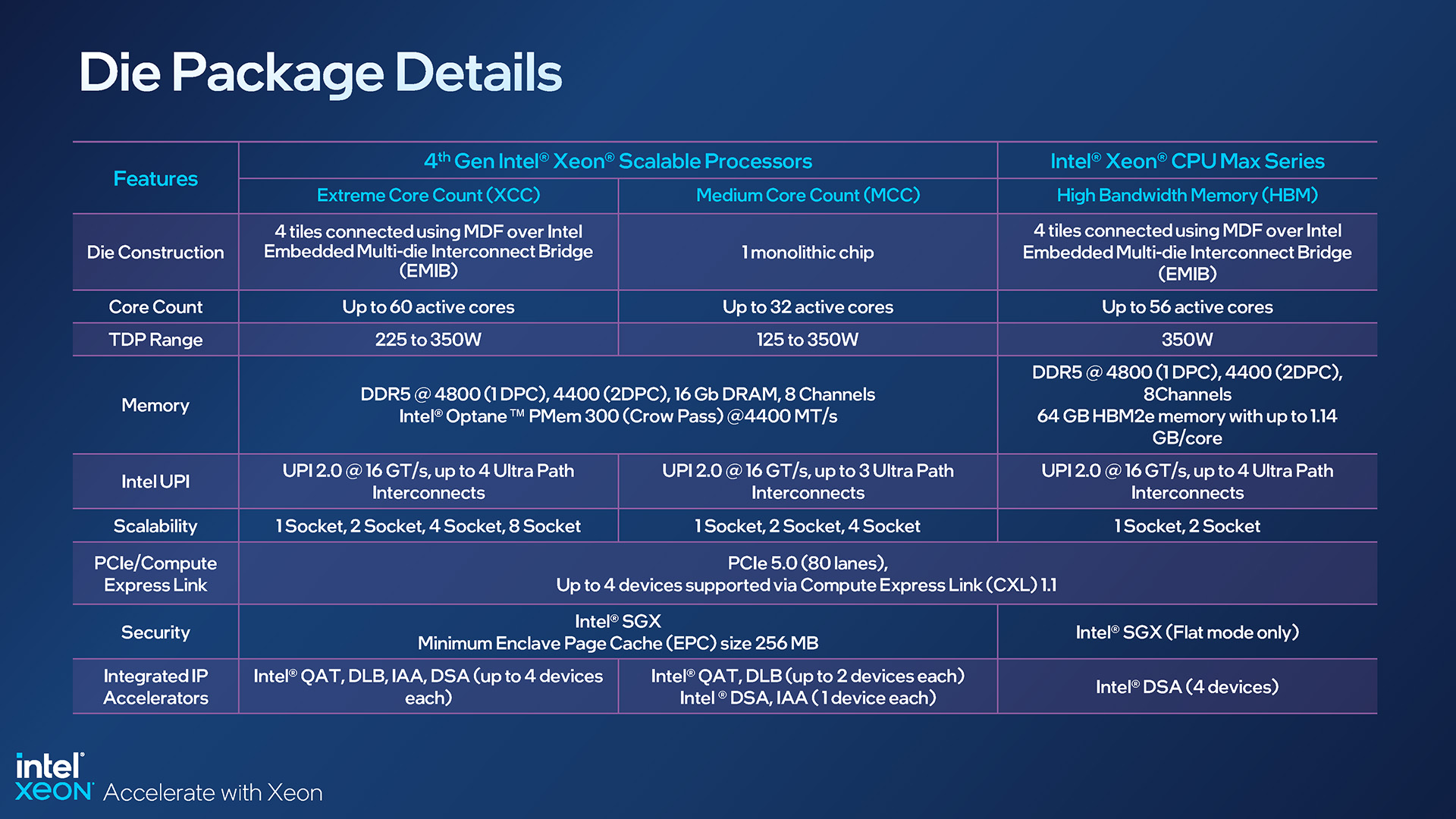

Here’s a more detailed look at the stated specs for each CPU configuration:

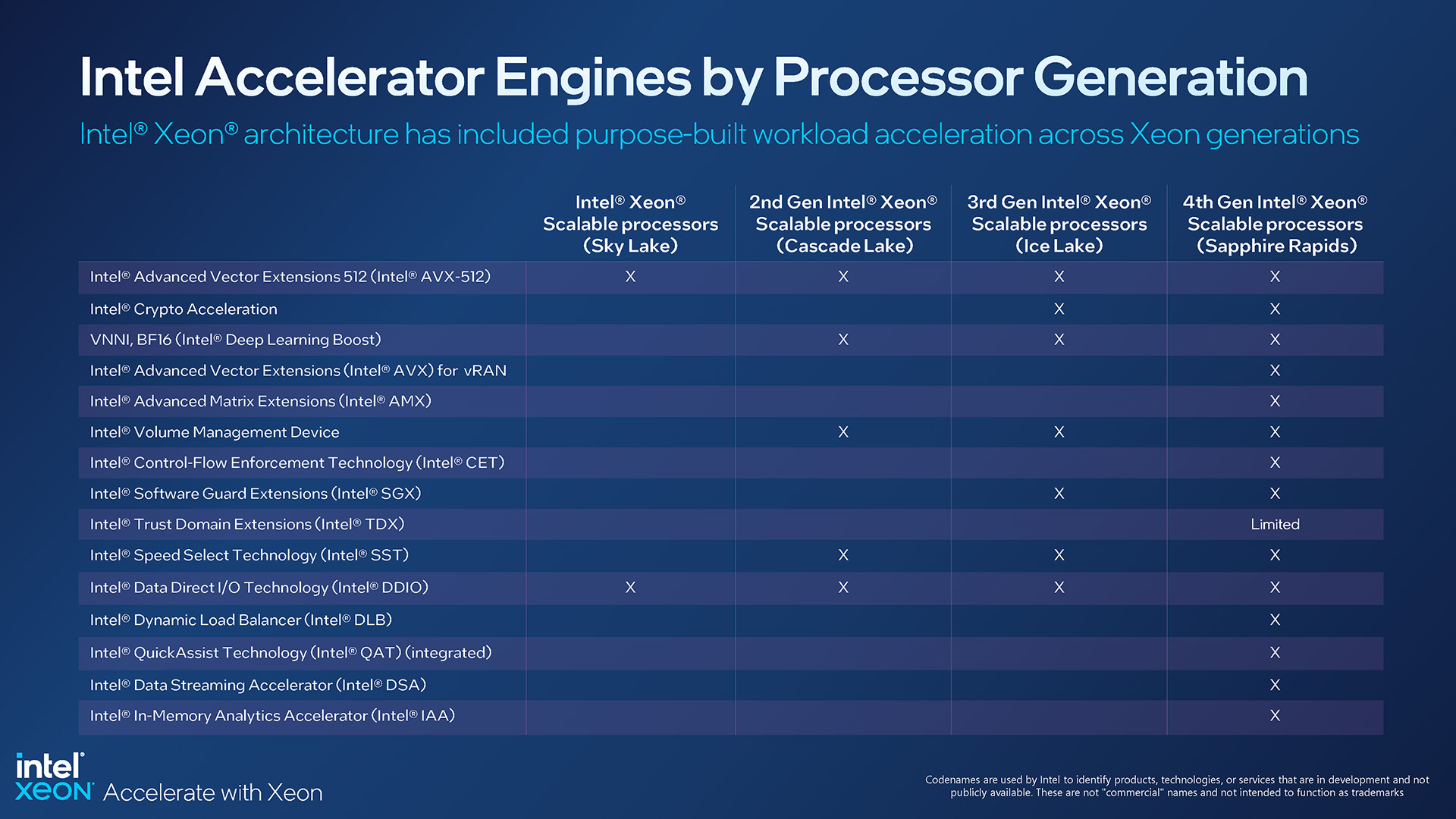

The slide above highlights another angle worth looking at. On Max CPUs, many of the features found on other SKUs are gone, such as Intel’s QuickAssist Technology, Dynamic Load Balancer, and In-Memory Analytics Accelerator. Data Streaming Accelerator remains, highlighting a laser-focused target audience for Max CPUs.

The absence of those technologies on Max leads us to another difference in this latest-gen Xeon lineup. You may have noticed a new entry above, “Intel On Demand Capable”; this refers to CPUs that can be purchased without every premium feature enabled, with those features unlocked later through system partners and Intel itself.

During briefings last month, On Demand seemed to confuse many, and even today, we don’t fully understand the mechanic. On Demand sounds like DLC for server CPUs, but it should let some customers buy CPUs at fairer prices by not paying for technologies they won’t use, while keeping the option to reverse course later.

Another angle is that some of Intel’s technologies may not be usable in a given working environment until software support is built; once it is, On Demand can unlock the feature on the CPU. As far as we understand it, features that are locked on a Xeon CPU do not contribute to its overall power load. They’re effectively dormant until they’re unlocked and engaged.

If you refer back to the SKU sheet shared earlier, you’ll notice that the number of devices for these On Demand-affected features varies heavily across the lineup. Cloud-optimized SKUs, for example, have few DSA, QAT, DLB, and IAA devices, while the top-end 8490H has plenty. Depending on the workload you’re after, you may feel shoehorned into whichever model you ultimately go with.

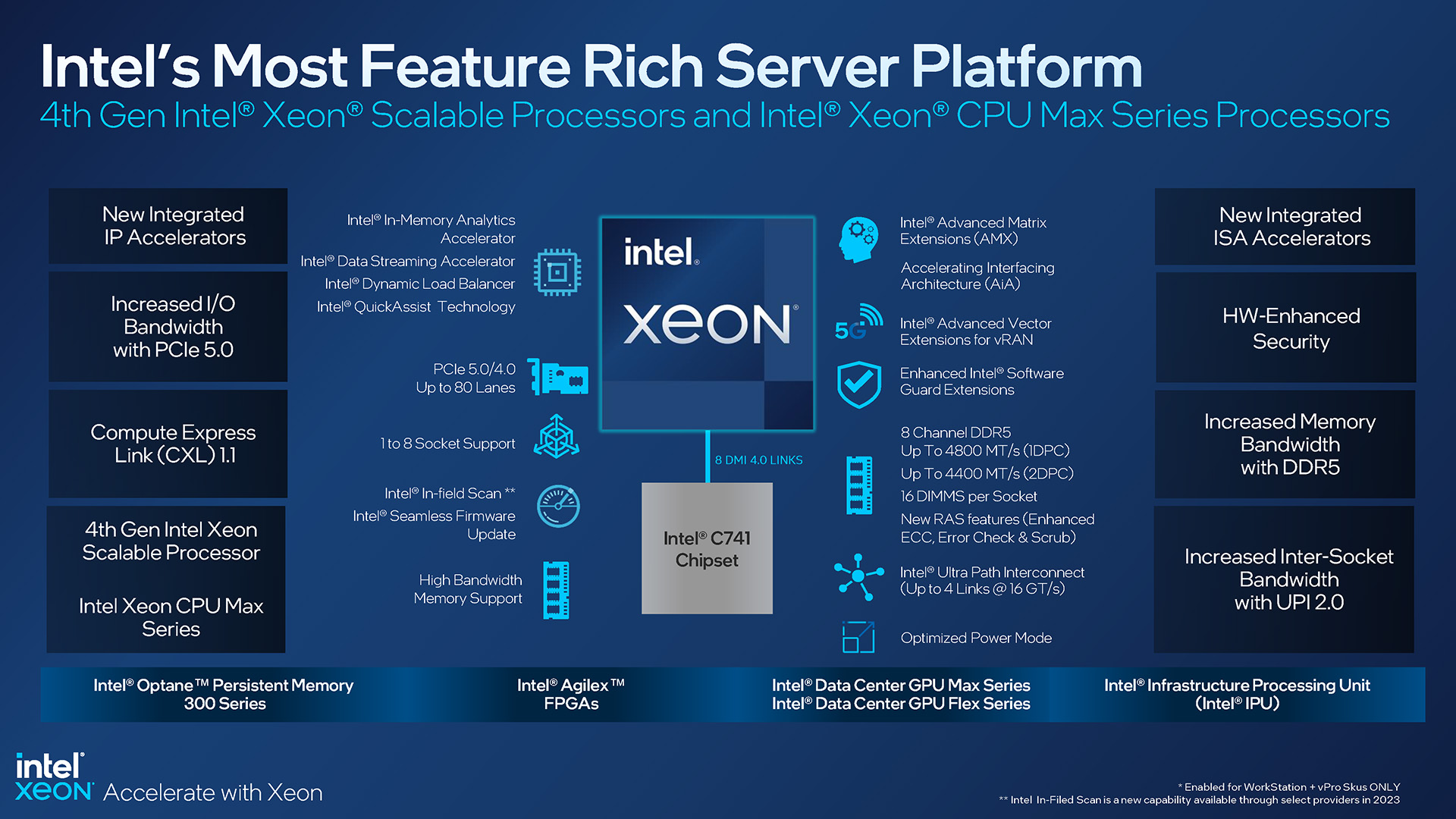

Here’s an overview of the Sapphire Rapids (ahem, 4th-gen Xeon) features, which covers a few things we haven’t touched on yet:

This latest-gen Xeon platform supports both PCIe 5.0 and 4.0, as well as DDR5 at up to 4800 MT/s (DDR5-4800). As before, this platform has an 8-channel memory controller, and it supports up to 16 DIMMs per socket.
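
For a rough sense of what eight DDR5-4800 channels mean, the theoretical peak per socket is channels × transfers per second × bytes per transfer. This sketch assumes a 64-bit (8-byte) data path per channel with ECC bits excluded; the constant names are our own, and sustained bandwidth in real systems will be lower than this ceiling.

```python
# Back-of-the-envelope peak DRAM bandwidth for one socket (theoretical ceiling).
CHANNELS_PER_SOCKET = 8
TRANSFER_RATE_MT_S = 4800   # DDR5-4800: 4800 mega-transfers per second
BYTES_PER_TRANSFER = 8      # assumption: 64-bit data path per channel, ECC excluded
DIMMS_PER_SOCKET = 16


def peak_bandwidth_gb_s(channels: int, mt_s: int, bytes_per_transfer: int) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes per second)."""
    return channels * mt_s * 1e6 * bytes_per_transfer / 1e9


dimms_per_channel = DIMMS_PER_SOCKET // CHANNELS_PER_SOCKET
peak = peak_bandwidth_gb_s(CHANNELS_PER_SOCKET, TRANSFER_RATE_MT_S, BYTES_PER_TRANSFER)
print(f"{dimms_per_channel} DIMMs per channel, ~{peak:.1f} GB/s peak per socket")
```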

It’s worth noting that Intel continues to support its 4- and 8-socket systems, which is interesting considering its main competition, AMD EPYC, peaks with 2-socket configurations. Intel says that it still sees demand for these many-CPU servers, especially for in-memory database intelligence work, data warehousing and visualization, DC consolidation, and so on.
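
To put 4- and 8-socket support in perspective, here is a hypothetical sizing helper that multiplies out per-socket figures from this article (60 cores on the 8490H, 16 DIMM slots per socket). Two threads per core assumes Hyper-Threading is enabled, and `SystemConfig` is our own illustrative class, not anything Intel ships.

```python
# Hypothetical sizing helper: aggregate cores, threads, and DIMM slots across sockets.
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemConfig:
    sockets: int
    cores_per_socket: int
    dimm_slots_per_socket: int = 16  # platform maximum cited in the article
    threads_per_core: int = 2        # assumption: Hyper-Threading enabled

    def total_cores(self) -> int:
        return self.sockets * self.cores_per_socket

    def total_threads(self) -> int:
        return self.total_cores() * self.threads_per_core

    def total_dimm_slots(self) -> int:
        return self.sockets * self.dimm_slots_per_socket


for s in (2, 4, 8):
    cfg = SystemConfig(sockets=s, cores_per_socket=60)  # 60 cores, as on the 8490H
    print(f"{s}S: {cfg.total_cores()} cores, {cfg.total_threads()} threads, "
          f"{cfg.total_dimm_slots()} DIMM slots")
```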

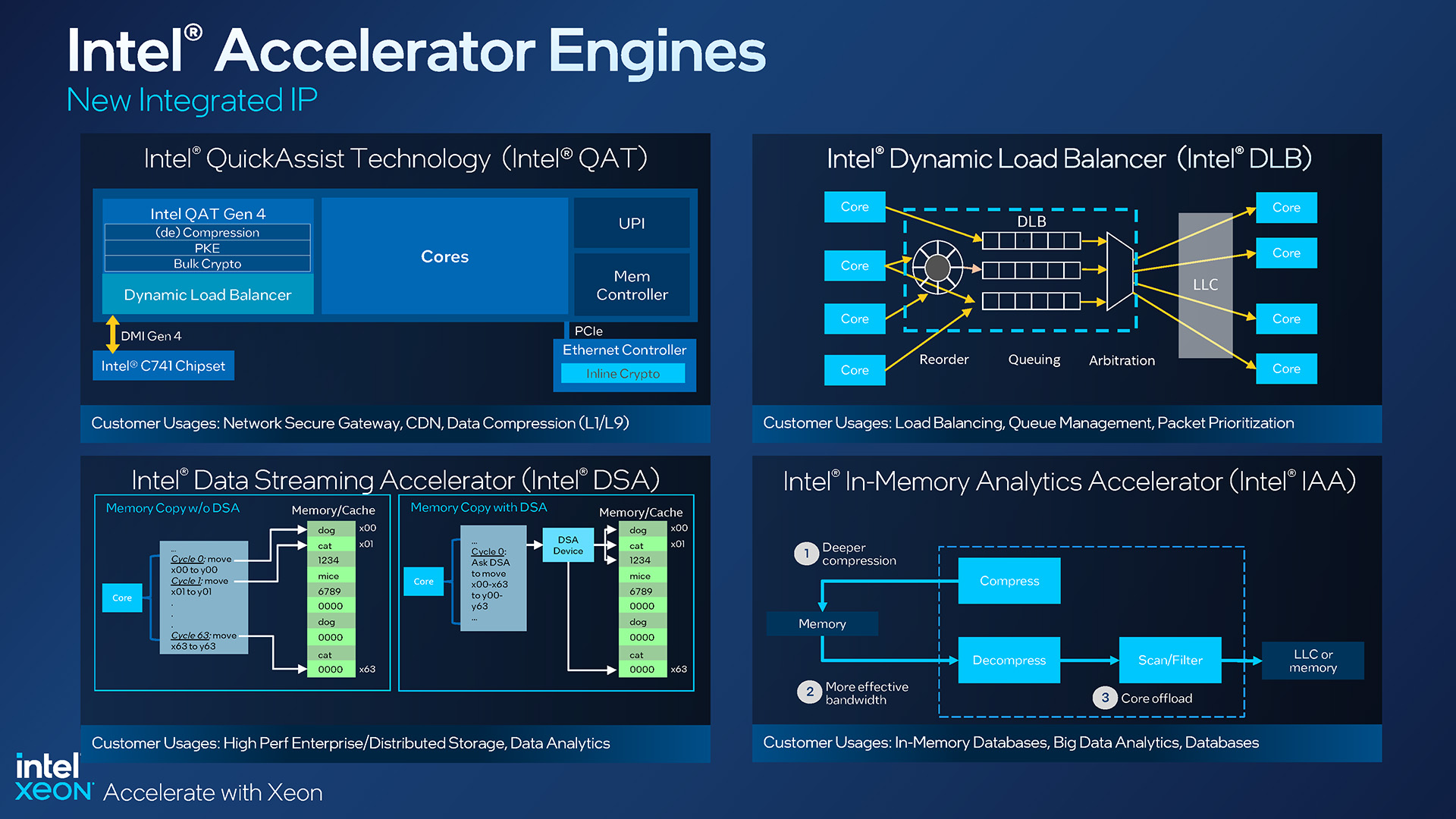

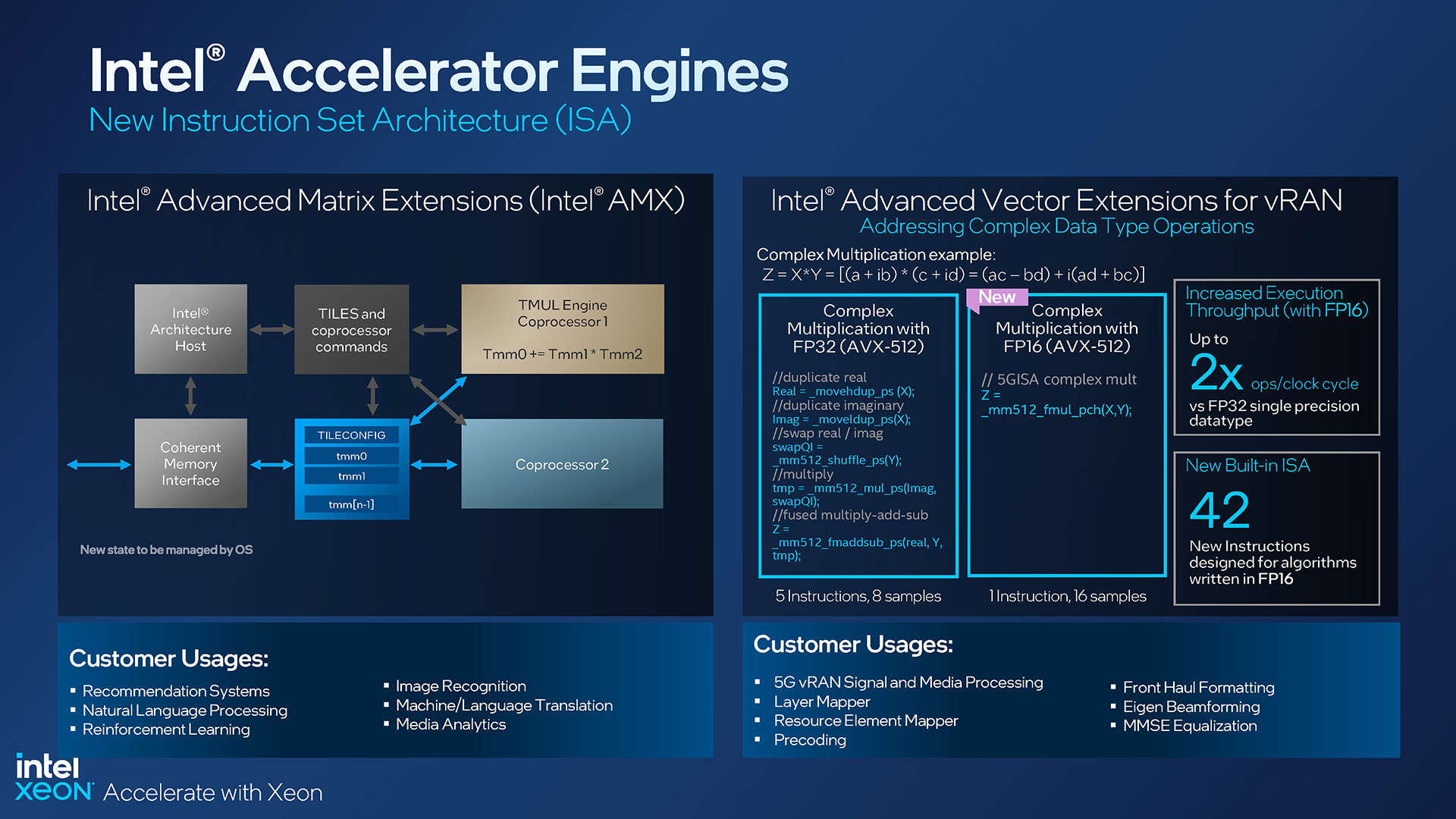

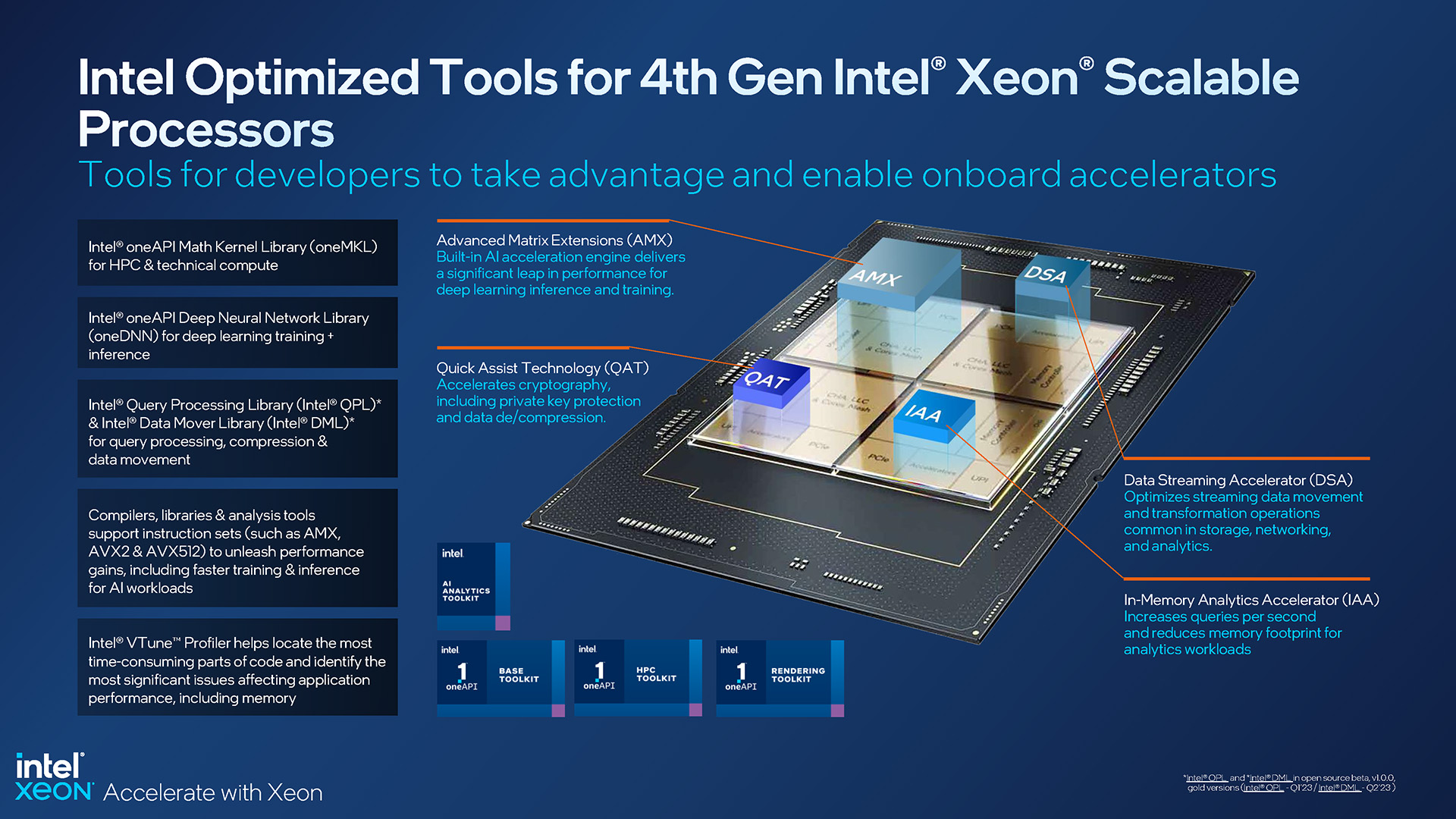

At the outset, we mentioned that the 4th-gen Xeon processors include accelerators that may (or more accurately, probably will) require software updates before they can be fully exploited. While many 4th-gen Xeon Scalable and Max processors sport different levels of DSA/QAT/DLB/IAA support, all of them include the brand-new AMX matrix accelerator, the latest entrant to Intel’s ISA (instruction set architecture).

Intel loves AI, and with regard to Xeon CPUs, it’s been talking about it for quite a while. But at a time when GPUs are so fast at many AI workloads, why would Intel implement a solution like AMX here? For starters, where the CPU itself also participates heavily in an AI workload, having AMX right beside the cores can deliver great performance – perhaps enough to not need discrete GPUs at all, depending on the overall workload.

Intel claims that AMX is about eight times faster at linear algebra than its vector engine, and target customers include those operating recommendation systems, image recognition, natural language processing, and so on.
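
AMX’s BF16 path multiplies bfloat16 inputs and accumulates the results in FP32. The scalar Python below only emulates that numeric recipe to show how much precision the inputs keep. It is not how AMX is programmed (real code goes through compilers, intrinsics, or libraries such as oneDNN), it is not bit-exact with the hardware, and `to_bf16` and `bf16_matmul` are hypothetical helpers.

```python
import struct


def to_fp32(x: float) -> float:
    """Round a Python float (FP64) to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_bf16(x: float) -> float:
    """Round a finite float to BF16: keep the top 16 bits of its FP32 encoding,
    rounding to nearest with ties to even. NaN/Inf handling is omitted."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # round-to-nearest-even bias
    bits &= 0xFFFF0000                   # drop the low 16 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def bf16_matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """C = A @ B with BF16-rounded inputs and FP32 accumulation (scalar emulation)."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    m = len(b[0])
    c = [[0.0] * m for _ in range(len(a))]
    for i, row in enumerate(a):
        for j in range(m):
            acc = 0.0
            for p in range(k):
                # A BF16 x BF16 product fits exactly in FP32; round the running sum to FP32.
                acc = to_fp32(acc + to_bf16(row[p]) * to_bf16(b[p][j]))
            c[i][j] = acc
    return c


print(bf16_matmul([[1.01, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]))
```

For example, `to_bf16(1.01)` yields 1.0078125, because BF16 keeps only 8 significant bits. Many AI models tolerate that loss, which is why the format is attractive for dense matrix math.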

vRAN workloads also get AVX updates: 42 new instructions accelerate algorithms written in FP16, with usages targeting signal and media processing, fronthaul formatting, and layer mapping.
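
FP16 keeps more precision than BF16 but far less range than FP32, which matters for signal-processing code. Python’s `struct` module can round values to IEEE 754 half precision via its `'e'` format, making it a quick way to see both effects. `to_fp16` is our own helper, and this says nothing about how the new AVX instructions themselves are invoked.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to IEEE 754 half precision using struct's 'e' format.
    Raises OverflowError if x is outside the FP16 range."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


FP16_MAX = to_fp16(65504.0)  # largest finite half-precision value

for value in (0.1, 1.0 / 3.0, 1000.1):
    print(value, "->", to_fp16(value))

try:
    to_fp16(1e5)
except OverflowError:
    print("1e5 does not fit in FP16")
```

Values such as 0.1 pick up a small rounding error, and anything much above 65504 overflows, so FP16 code typically needs care with scaling.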

Acceleration really seems to be the name of the game with Sapphire Rapids. Multiple accelerators have been implemented to reduce load on the CPU itself, ultimately getting more done in the same amount of time.

Thankfully, while certain accelerator features are absent in select Xeon SKUs, AMX is becoming a de facto standard. That’s important, as we know that many software vendors will need to update their bits in order to take full advantage of it.

Support for everyone may not come as quickly as we’d like to see it, but because every 4th-gen Xeon includes AMX, developers can feel safe devoting time to developing around it. It’s a harder sell when a cool new feature has limited support across the lineup. Notably, both TensorFlow and PyTorch have AMX support built in, so we seem to be off to a good start.
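
On Linux, one quick way to confirm that a machine exposes AMX to software is to look for the `amx_tile`, `amx_bf16`, and `amx_int8` flags in `/proc/cpuinfo`, which recent kernels report on AMX-capable CPUs. The parser below is a minimal sketch of that check; the function name is ours, and a missing flag can also mean the kernel is too old rather than that the CPU lacks AMX.

```python
from pathlib import Path

AMX_FLAGS = ("amx_tile", "amx_bf16", "amx_int8")


def amx_flags_from_cpuinfo(text: str) -> dict:
    """Report which AMX flags appear on the first 'flags' line of /proc/cpuinfo text."""
    for line in text.splitlines():
        if line.startswith("flags"):
            present = set(line.split(":", 1)[1].split())
            return {flag: flag in present for flag in AMX_FLAGS}
    # No flags line found: treat every AMX flag as absent.
    return {flag: False for flag in AMX_FLAGS}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print(amx_flags_from_cpuinfo(cpuinfo.read_text()))
    else:
        print("/proc/cpuinfo not found (not Linux?)")
```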

Further, all of these accelerator engines share a coherent memory space with the cores, once again highlighting Intel’s efforts to deliver as efficient an architecture as possible, with minimal bottlenecks.

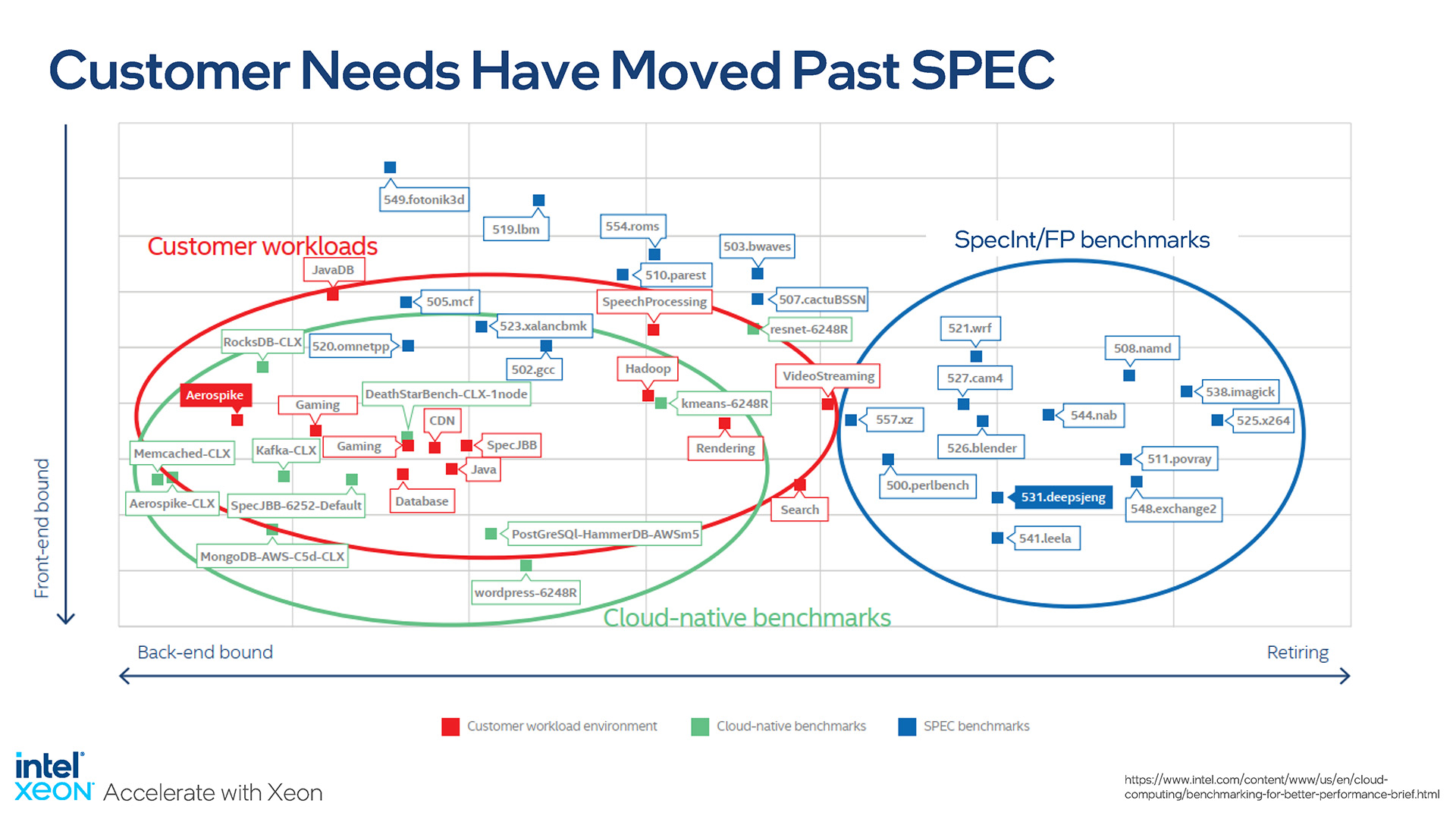

Speaking of performance, Intel Senior Fellow Ronak Singhal’s presentation really resonated with us, as he noted how important it is to benchmark processors with relevant tests. Pre-built and pre-tuned benchmarks have their purpose, but ultimately, what matters to customers is how the new platform affects their specific workload.

This slide says it all:

SPEC is a consortium that involves most of the industry’s biggest semiconductor players, so released benchmarks tend to be agreed upon by everyone – there should be no inherent bias in the tests. But these tests are rigid, and their results won’t reflect most real-world workloads. Intel itself worked with customers to gauge how disconnected most benchmarks were from in-practice scenarios, which underscores the need to test with relevant benchmarks.
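
Singhal’s advice is easy to act on: time your own workload instead of relying only on a canned suite. This is a minimal, hypothetical harness using only Python’s standard library. The workload in the `__main__` block is a stand-in, and reporting the median of several runs after a warm-up is our own choice, not a method Intel described.

```python
import statistics
import time
from typing import Callable


def benchmark(workload: Callable[[], object], repeats: int = 5, warmup: int = 1) -> dict:
    """Time a workload several times and summarize wall-clock seconds."""
    if repeats < 1:
        raise ValueError("repeats must be >= 1")
    for _ in range(warmup):
        workload()  # warm caches and any lazy initialization before measuring
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload()
        samples.append(time.perf_counter() - start)
    return {"median_s": statistics.median(samples), "min_s": min(samples), "max_s": max(samples)}


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate is; 1.5 means a 50% improvement."""
    return baseline_s / candidate_s


if __name__ == "__main__":
    # Stand-in workload; replace with something representative of production.
    print(benchmark(lambda: sorted(range(200_000), key=lambda x: -x)))
```

The `speedup` helper also converts between the two ways results tend to be quoted: a 1.5x speedup is a 50% improvement, and a 10x speedup is a 900% improvement.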

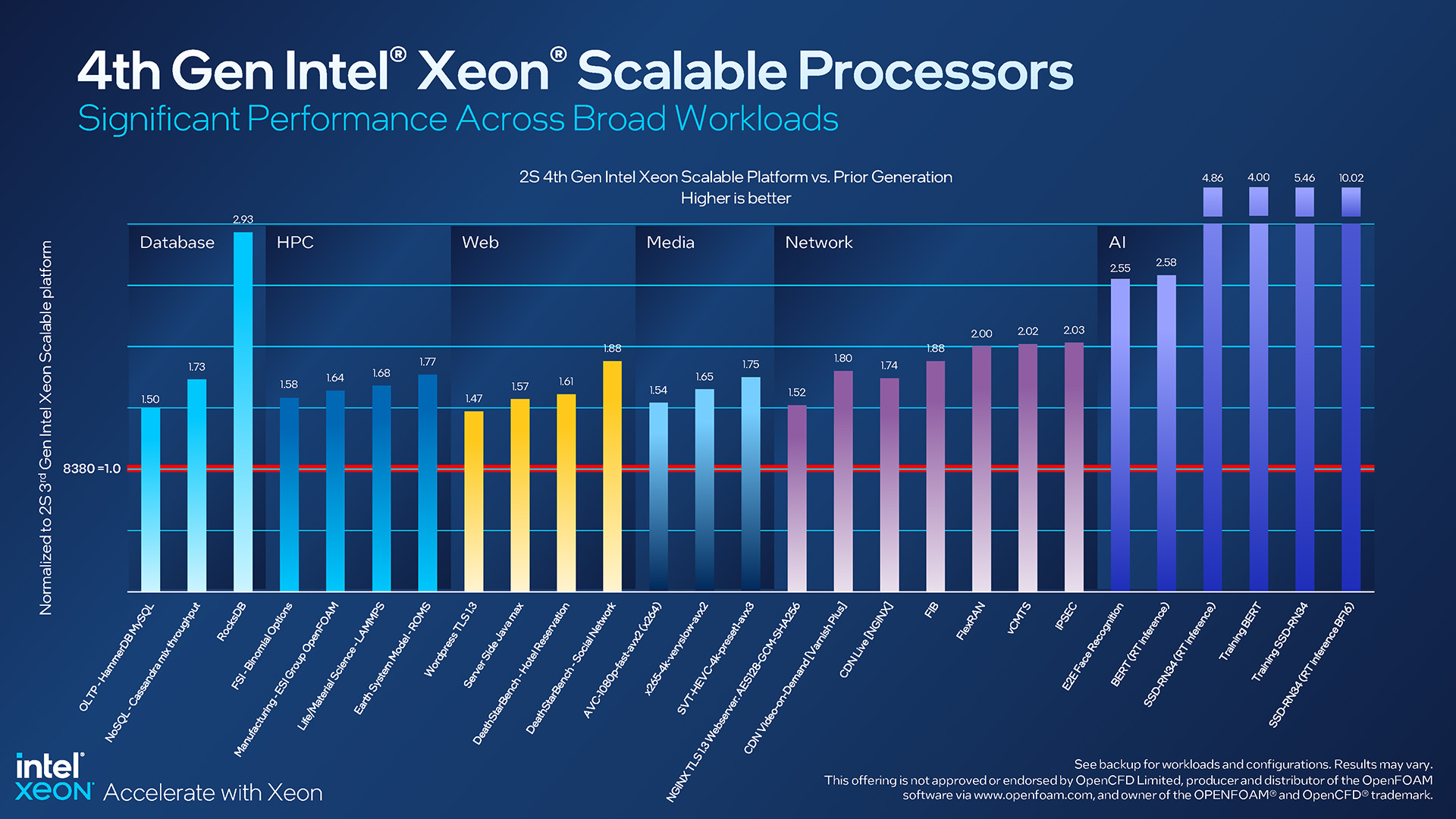

To that end, in more real-world tests, Sapphire Rapids performs well against the previous-generation top-end Xeon 8380:

The across-the-board performance improvements are great to see, but of course, some results stand out more than others. The RocksDB one in particular is interesting, as it highlights the benefits of Intel’s In-Memory Analytics Accelerator. But even the modest gains remain impressive, such as the ~50% improvement to WordPress performance with TLS.

We can’t find explicit mention of it, but we have to imagine that the AI workloads on the right side of that slide owe their mammoth boosts to the introduction of AMX. While the final four bars all soar to the top, the real-time inference BF16 result, at around 10x, truly stands out.

Because Intel’s CPUs have so much unique hardware, the company goes to great lengths to make sure that everyone has the right tools and information for the job. Intel’s oneAPI is continually updated, as are many other tools, like oneDNN, QPL, and DML. The documentation is oft-updated, too.

Similarly, Intel also knows that for customers to take best advantage of its AI features, examples should be provided. This is why the company offers dozens of pre-trained AI models, which folks can integrate right into their projects, or learn from.

During one presentation, Intel noted that only 53% of AI projects that get kicked off end up being completed, which is a rather massive failure rate. With solutions like oneAPI, which can draw processing power from the CPU or built-in accelerators, the goal is to make sure users can get their computation done in the most effective manner possible. In time, we’ll hopefully see that 53% number rise.

Final Thoughts

Intel’s divulged lots of information about its 4th-gen Xeon Scalable and Max lineup, and ultimately, we’ve just scratched the surface here, despite so much good information being discussed. Walking away from in-person briefings last month, it became clear that this launch is dear to Intel’s heart, and it’s for good reason. There’s a lot here that’s new and intriguing.

As always, Intel offers plenty of options – no matter your use case, you’re bound to find a CPU that fits the need. At the bottom, there’s an 8-core 3408U for general purpose use in a 1-socket platform, and on top is the behemoth 60-core 8490H that can be used in 8-socket systems.

Admittedly, it’s the Xeon Max series that has us most intrigued. To have 64GB of super-fast memory built right into the CPU could be a boon for certain workloads, and especially scenarios where lots of memory simply isn’t necessary. The fact that a Xeon Max server could boot up and operate as normal without a single DIMM installed isn’t just impressive, but useful.

Figuring out how Sapphire Rapids ultimately stacks up against the competition will require exhaustive benchmarking from others, and we’re sure plenty of performance data is coming. That said, we’re not entirely sure launch performance is going to paint the most accurate picture, because some Sapphire Rapids features will only show their worth once software is updated to use them. We’ve already discussed that PyTorch and TensorFlow support AMX out of the gate, but there’s a lot more AI-targeted software out there.

Ultimately, we’re just glad to see Sapphire Rapids launch, as it’s been a long time coming, and we’re likewise pleased that there has been so much of interest to talk about. As with all things server, the trickle out of new product into the ecosystem is going to be slow, so it will be interesting to see where things stand mid-way and later in the year.
