NVIDIA GeForce RTX 3090 Performance In Blender, Octane, V-Ray, & More

NVIDIA launched its first Ampere-powered GPU just over one week ago, and on schedule, the second has arrived: GeForce RTX 3090. This GPU targets users who demand uncompromising performance and capabilities out of their graphics hardware, and with 24GB of GDDR6X, this GPU has plenty of breathing room for your biggest creative workloads.

Unless you’ve actively been ignoring all sources of tech news and discussion on the internet, you’re probably aware that NVIDIA released its first Ampere-powered GeForce last week. To say the launch was eventful would be an understatement. While we were blown away by the performance of the RTX 3080, our readers blew their tops after failing to purchase one.

It seems likely that the RTX 3080 is going to remain elusive for a little while, so anyone who’s desperate for one will find themselves refreshing the same few browser tabs throughout the day. Since last week, NVIDIA and its partners have improved their web portals and are working to increase supply, so we’re hoping the second Ampere GeForce, the RTX 3090, will be a little easier to find.

Speaking of the RTX 3090, that happens to be the topic of discussion today. As we did last week with the RTX 3080, we’ve put the new RTX 3090 through our ProViz gauntlet to see how it performs against the TITAN RTX that it’s effectively replacing. For those that prefer to watch rather than read, you can check out our video review, too.

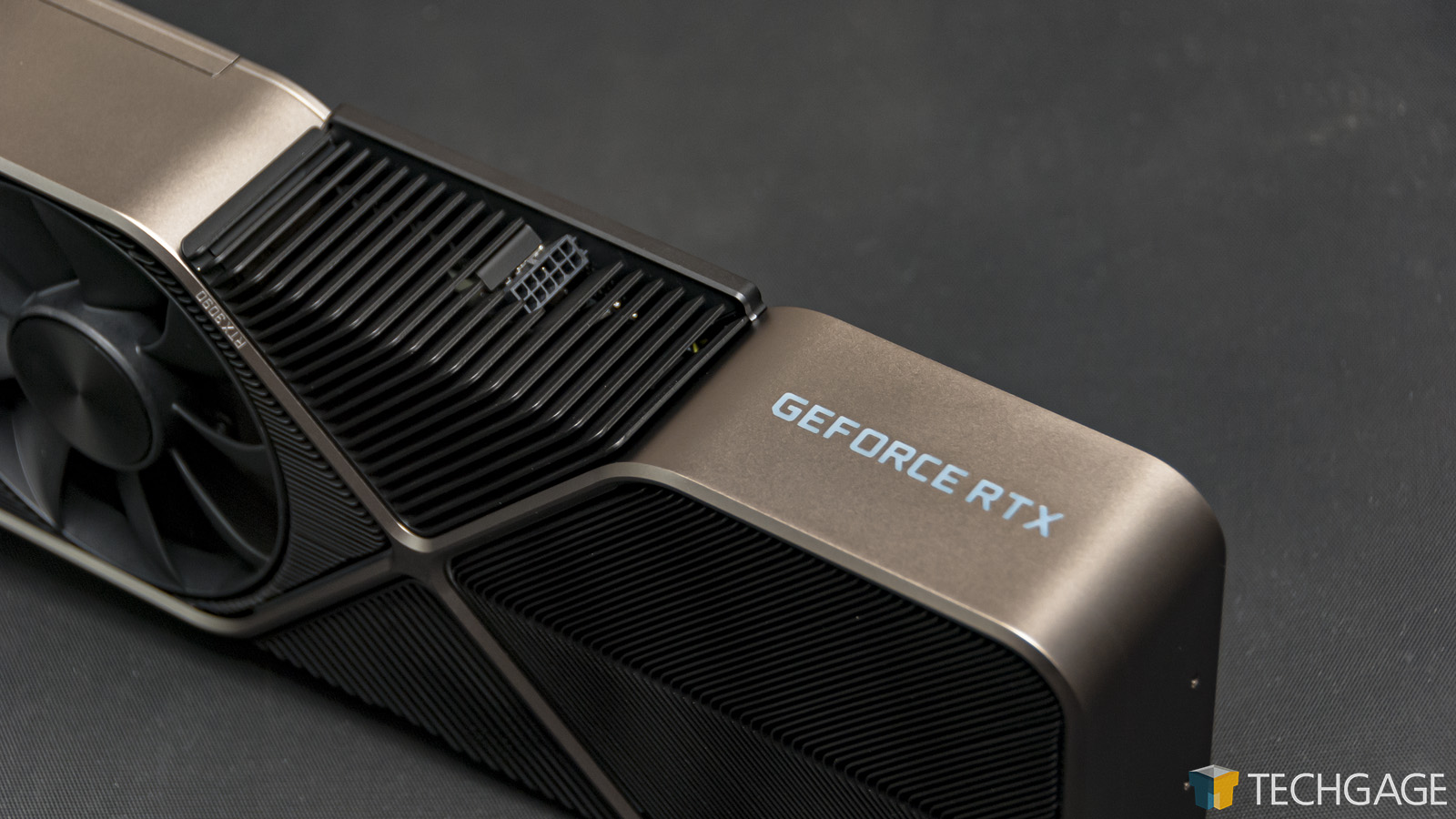

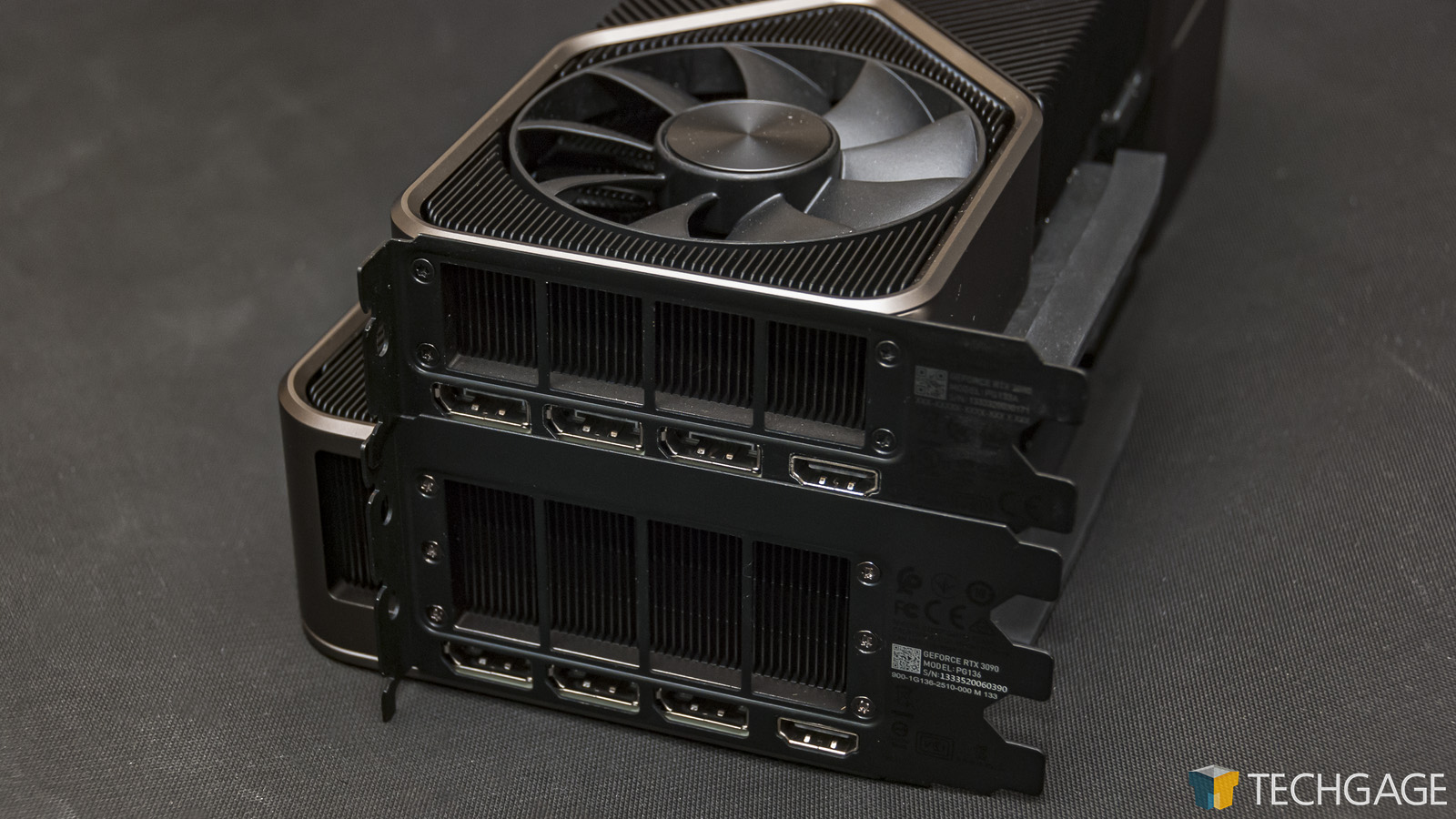

Here’s a look at it up close:

We think it’s fair to call this card a “beast”, not only because it will prove to be the fastest gaming and creator GPU on the market, but also because it takes up three PCIe slots in your PC. The RTX 3080 itself was a bit larger than the last-gen top-end GeForces, but the RTX 3090 almost feels like it is the computer. You’ll need a big chassis to ensure you won’t run into clearance issues.

Like the RTX 3080, the Founders Edition of the RTX 3090 uses a new 12-pin power connector, which can be used with the help of the included adapter. In time, we suspect PSU vendors will adopt the connector, saving some adapter hassle. We love the look of this 12-pin connector, and appreciate being able to whittle two connectors down to one, but even so, it’s a little painful that you can’t avoid having a cable hanging in front of the installed FE cards.

The power connector isn’t found at the end of the card because the PCB itself is actually pretty small, taking up only a little over half of the total card length. As you might expect, the Ampere chip under the hood of these top-end GPUs needs a lot of cooling, and with a cooler this large on the RTX 3090, even the roughest workloads should remain fairly quiet.

Most partner RTX 3090s will also exceed two slots in width, although a few models stick close to a dual-slot design. We’re not sure how that will impact cooling, but if you have room inside your rig and don’t need the extra PCIe slot the card will completely cover, you may as well opt for the bigger coolers.

Before going further, here’s a breakdown of NVIDIA’s current lineup:

| NVIDIA’s GeForce Gaming GPU Lineup | Cores | Base MHz | Peak FP32 | Memory | Bandwidth | TDP | SRP |
|---|---|---|---|---|---|---|---|
| RTX 3090 | 10,496 | 1,400 | 35.6 TFLOPS | 24GB GDDR6X | 936 GB/s | 350W | $1,499 |
| RTX 3080 | 8,704 | 1,440 | 29.7 TFLOPS | 10GB GDDR6X | 760 GB/s | 320W | $699 |
| RTX 3070 | 5,888 | 1,500 | 20.4 TFLOPS | 8GB GDDR6 | 512 GB/s | 220W | $499 |
| TITAN RTX | 4,608 | 1,350 | 16.3 TFLOPS | 24GB GDDR6 | 672 GB/s | 280W | $2,499 |
| RTX 2080 Ti | 4,352 | 1,350 | 13.4 TFLOPS | 11GB GDDR6 | 616 GB/s | 250W | $1,199 |
| RTX 2080 S | 3,072 | 1,650 | 11.1 TFLOPS | 8GB GDDR6 | 496 GB/s | 250W | $699 |
| RTX 2070 S | 2,560 | 1,605 | 9.1 TFLOPS | 8GB GDDR6 | 448 GB/s | 215W | $499 |
| RTX 2060 S | 2,176 | 1,470 | 7.2 TFLOPS | 8GB GDDR6 | 448 GB/s | 175W | $399 |
| RTX 2060 | 1,920 | 1,365 | 6.4 TFLOPS | 6GB GDDR6 | 336 GB/s | 160W | $299 |
| GTX 1660 Ti | 1,536 | 1,500 | 5.5 TFLOPS | 6GB GDDR6 | 288 GB/s | 120W | $279 |
| GTX 1660 S | 1,408 | 1,530 | 5.0 TFLOPS | 6GB GDDR6 | 336 GB/s | 125W | $229 |
| GTX 1660 | 1,408 | 1,530 | 5.0 TFLOPS | 6GB GDDR5 | 192 GB/s | 120W | $219 |
| GTX 1650 S | 1,280 | 1,530 | 4.4 TFLOPS | 4GB GDDR6 | 192 GB/s | 100W | $159 |
| GTX 1650 | 896 | 1,485 | 3.0 TFLOPS | 4GB GDDR5 | 128 GB/s | 75W | $149 |
| GTX 1080 Ti | 3,584 | 1,480 | 11.3 TFLOPS | 11GB GDDR5X | 484 GB/s | 250W | $699 |

Notes: GTX 1080 Ti = Pascal; GTX/RTX 2000 = Turing; RTX 3000 = Ampere.
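The Peak FP32 and Bandwidth columns follow from standard back-of-the-envelope formulas, sketched below. The sketch assumes each CUDA core retires one fused multiply-add (two FLOPs) per clock at the boost clock, and that memory bandwidth equals bus width times per-pin data rate. The RTX 3090’s 1,695 MHz boost clock, 384-bit bus, and 19.5 Gbps GDDR6X data rate are assumptions taken from NVIDIA’s published specifications rather than from the table, and the function names are illustrative.

```python
def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Peak FP32 throughput, assuming one FMA (2 FLOPs) per core per clock."""
    flops = cuda_cores * 2 * boost_mhz * 1e6
    return flops / 1e12


def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# RTX 3090: 10,496 cores (from the table); the 1,695 MHz boost clock, 384-bit bus
# and 19.5 Gbps data rate are assumed from NVIDIA's published specifications.
print(f"{peak_fp32_tflops(10_496, 1_695):.1f} TFLOPS")  # 35.6 TFLOPS
print(f"{memory_bandwidth_gbs(384, 19.5):.0f} GB/s")     # 936 GB/s
```

This also suggests why the Base MHz column can’t reproduce the Peak FP32 figures on its own: the peak numbers appear to be computed at boost clocks.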

While NVIDIA doesn’t always include TITAN in its GeForce discussions, the company pits the RTX 3090 directly against the TITAN RTX in the card’s reviewer’s guide, which is interesting for a few reasons. For one, TITAN bundles extra workstation optimizations, such as improved performance in certain CAD suites, while the GeForce series doesn’t (something we verified with the RTX 3090). We’re not sure if this means TITAN has gone the way of the dodo, or if we’ll end up seeing a new model with even more VRAM in the months ahead.

That leads us to an all-important question: Who needs 24GB of VRAM? The answer is “not many people”. If you’re not sure whether you could benefit from such a large frame buffer on your GPU, chances are good that you couldn’t right now. A card like the RTX 3080, with its 10GB frame buffer, offers plenty of breathing room for most people, so with 24GB on tap, the RTX 3090 targets those with the heaviest workloads: large 3D projects, or gaming beyond 4K resolution. In fact, NVIDIA markets the RTX 3090 as an 8K-ready gaming GPU, with an HDMI 2.1 port that can drive an 8K display at 60 FPS.

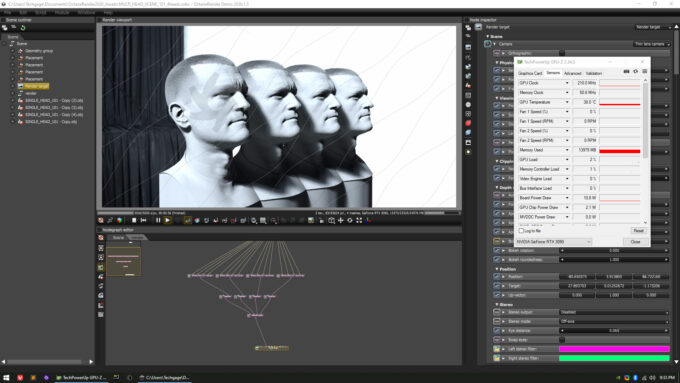

While it bears a GeForce name, NVIDIA has talked a lot about the creator appeal of the RTX 3090. In OctaneRender, as we’ve verified, huge projects can load entirely into GPU memory, whereas GPUs with limited VRAM must fall back on system memory through an out-of-core mode. It’s nice to have that option, but it comes with a severe performance hit.

The above shot shows an OctaneRender model provided to us by NVIDIA that requires more memory than a GPU like the RTX 3080 can provide, peaking at 14GB. When out-of-core has to be used, the final render time balloons about tenfold (36 seconds on the RTX 3090; ~400 seconds on the RTX 3080 with out-of-core).
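The arithmetic behind that comparison is simple enough to sketch. The helpers below are hypothetical (they are not part of OctaneRender): one checks whether a scene’s peak memory use fits in a card’s VRAM, and the other computes the slowdown from the two render times quoted above. The fit check ignores memory already reserved by the OS, driver, and display, so it is optimistic.

```python
def fits_in_vram(scene_peak_gb: float, vram_gb: float) -> bool:
    """True if the scene's peak memory use fits entirely in GPU memory.

    Simplification: ignores memory already reserved by the OS, driver and display.
    """
    return scene_peak_gb <= vram_gb


def slowdown(in_core_s: float, out_of_core_s: float) -> float:
    """How many times longer the out-of-core render takes."""
    return out_of_core_s / in_core_s


scene_gb = 14  # peak usage of NVIDIA's demo scene, per the text
for name, vram in (("RTX 3080", 10), ("RTX 3090", 24)):
    mode = "in-core" if fits_in_vram(scene_gb, vram) else "out-of-core"
    print(f"{name}: {mode}")

print(f"{slowdown(36, 400):.1f}x slower")  # 11.1x slower
```

Since the ~400-second figure is itself approximate, “about tenfold” is the right level of precision for the result.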

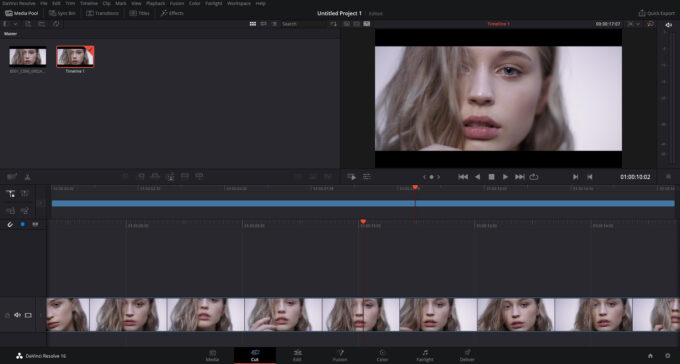

We’ve been hearing about 8K video editing for a few years now, and it’s a workload that could benefit not just from a large frame buffer, but also from the PCIe 4.0 bus. When AMD revealed its Navi-based RX 5700 XT last summer, it promoted DaVinci Resolve use with very high-end 8K content. Using PCIe 4.0, we saw smooth playback in the editor, whereas with PCIe 3.0, some stuttering was evident.
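To put the PCIe 3.0 versus 4.0 difference in numbers: both generations use 128b/130b line encoding, and 4.0 doubles the per-lane transfer rate from 8 GT/s to 16 GT/s. The sketch below computes the theoretical one-direction bandwidth of an x16 slot; real-world throughput is lower once protocol overhead is included, and our playback observation above is not derived from this calculation.

```python
# Per-lane transfer rates in gigatransfers per second (one bit per transfer).
TRANSFER_RATE_GT_S = {"3.0": 8.0, "4.0": 16.0}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding used by PCIe 3.0 and 4.0


def pcie_bandwidth_gb_s(generation: str, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    gbit_per_lane = TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY
    return gbit_per_lane * lanes / 8  # bits -> bytes


for gen in ("3.0", "4.0"):
    print(f"PCIe {gen} x16: {pcie_bandwidth_gb_s(gen):.2f} GB/s")
# PCIe 3.0 x16: 15.75 GB/s
# PCIe 4.0 x16: 31.51 GB/s
```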

This article is going to focus largely on the same benchmarking found in last week’s RTX 3080 look, but we’ll be exploring ways to expand our testing to actually take better advantage of GPUs with so much memory, in much the same way we had to find new workloads to exercise top-end Ryzen Threadripper CPUs as AMD rolled them out to market.

We’ll also take this opportunity to mention that we have more workloads to evaluate in the near future, largely inspired by requests from our readers. We have plenty of ideas, and just need to find the time to explore them.

Since you’re likely dying for some performance information at this point, let’s get to it. Here’s a look at our test system, followed by the first test results:

| Techgage Workstation Test System | |
|---|---|
| Processor | Intel Core i9-10980XE (18-core; 3.0GHz) |
| Motherboard | ASUS ROG STRIX X299-E GAMING |
| Memory | G.SKILL FlareX (F4-3200C14-8GFX) 4x8GB; DDR4-3200 14-14-14 |
| Graphics | NVIDIA RTX 3090 (24GB, GeForce 456.38); NVIDIA RTX 3080 (10GB, GeForce 456.16); NVIDIA TITAN RTX (24GB, GeForce 452.06); NVIDIA GeForce RTX 2080 Ti (11GB, GeForce 452.06); NVIDIA GeForce RTX 2080 SUPER (8GB, GeForce 452.06); NVIDIA GeForce RTX 2070 SUPER (8GB, GeForce 452.06); NVIDIA GeForce RTX 2060 SUPER (8GB, GeForce 452.06); NVIDIA GeForce RTX 2060 (6GB, GeForce 452.06); NVIDIA GeForce GTX 1080 Ti (11GB, GeForce 452.06) |
| Audio | Onboard |
| Storage | Kingston KC1000 960GB M.2 SSD |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | Corsair Carbide 600C Inverted Full-Tower |
| Cooling | NZXT Kraken X62 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 19041.329 (2004) |


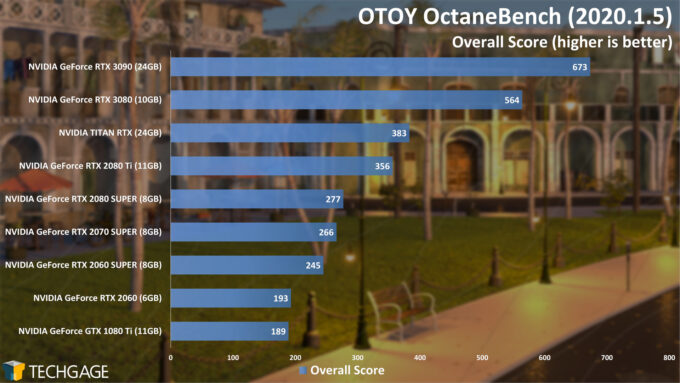

OTOY OctaneRender 2020

OctaneBench 2020 was released ahead of the RTX 3080 and, as before, offers the ability to test with RTX on or off. Unfortunately, the difference between those two modes in this version is negligible, so we’re going to continue focusing on the primary result. By that measure, OctaneBench says that the RTX 3090 is 19% faster than the RTX 3080, which is about what we hoped to see.

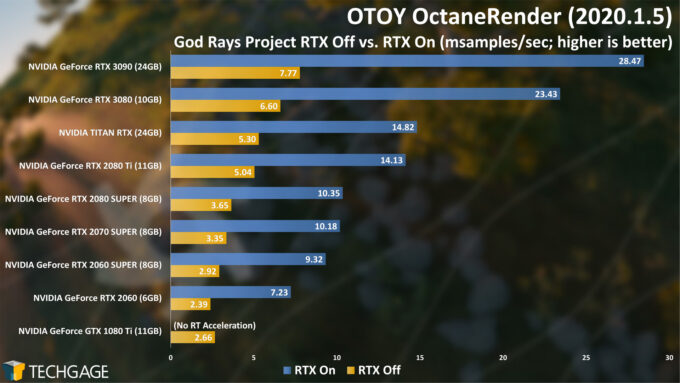

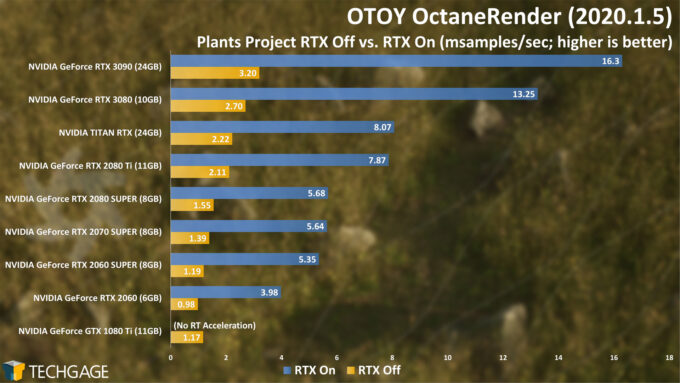

Here are some results from the actual OctaneRender client, using two different projects:

With OctaneRender’s built-in RTX benchmark, we do see meaningful differences between RTX on and off, leading us to hope a future OctaneBench update will make its RTX off option more useful. Either way, it’s abundantly clear that the RTX 3090 is one crazy-fast GPU.

In both our OctaneBench and OctaneRender tests, the RTX 3090 has proven itself up to twice as fast as the TITAN RTX. That’s really notable, because the RTX 3090 costs $1,000 less than the TITAN RTX did. A lot faster and cheaper? It’s hard to complain about that.
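One way to frame “a lot faster and cheaper” is performance per dollar. The sketch below combines the best-case 2x result with the SRPs from the lineup table; because 2x is an “up to” figure, the output is a best case rather than an average, and the helper name is illustrative.

```python
def relative_perf_per_dollar(speedup: float, new_price: float, old_price: float) -> float:
    """Performance per dollar of the new card relative to the old one."""
    return speedup * old_price / new_price


# Best-case 2x speedup; SRPs of $1,499 (RTX 3090) and $2,499 (TITAN RTX)
ratio = relative_perf_per_dollar(speedup=2.0, new_price=1_499, old_price=2_499)
print(f"{ratio:.2f}x the performance per dollar")  # 3.33x the performance per dollar
```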

Of course, comparing the RTX 3090 to the RTX 3080 reveals less impressive gains overall, but we’re dealing with a premium product here, and one with a lot more VRAM.

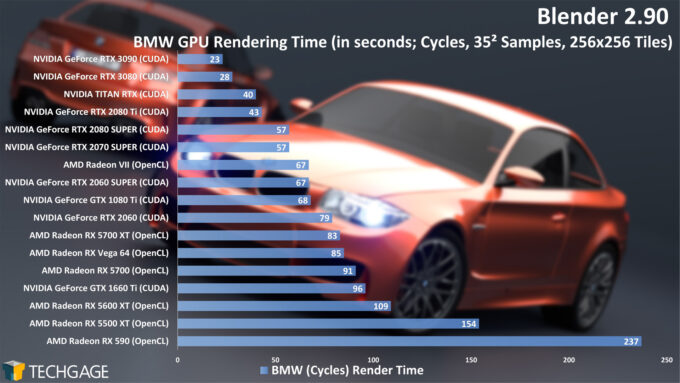

Blender 2.90

Over the past three years or so, NVIDIA has dramatically improved rendering performance across its stack. It was once exciting when the BMW project broke through the 60-second barrier, but today, these new RTX GPUs are cutting through the 30-second mark, and the RTX 3090 comes closer to 20 seconds than to 30. These new GPUs are really, really fast.

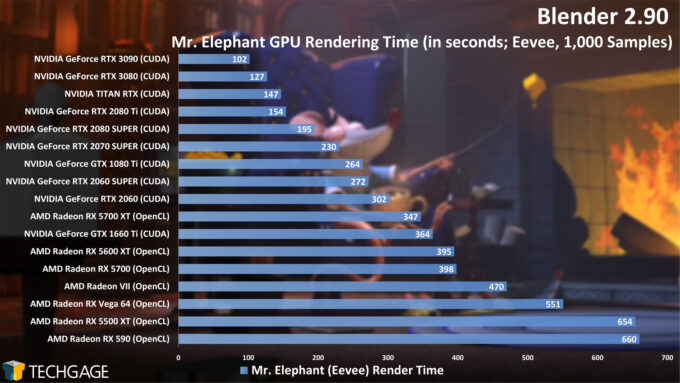

In this comparison, the RTX 3090 cuts the render time almost in half compared to the TITAN RTX. How does that carry over to the Eevee engine? Let’s find out:

The RTX 3090’s gains over the RTX 3080 were noticeable in the BMW project, but they’re a bit more pronounced in this Eevee project. Note that while we’re brute-forcing the project with a ton of samples, we’ve verified that performance still scales directly with normal animations using far fewer samples. Overall, NVIDIA easily wins the Eevee battle, with AMD not appearing in the chart until its midway point.

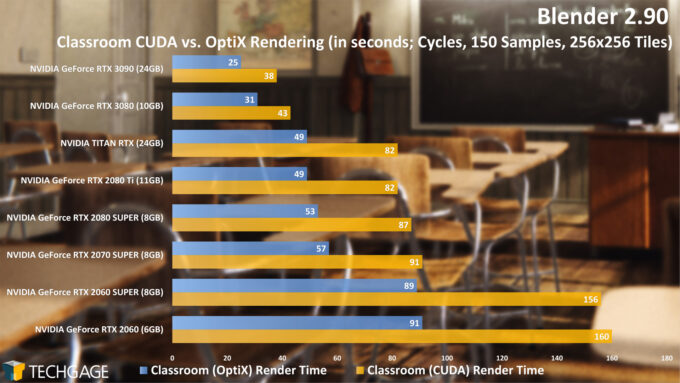

Back to Cycles, infused with some OptiX ray tracing acceleration:

The RTX 3090 again cuts the render time nicely compared to the RTX 3080, but it absolutely dominates the TITAN RTX it’s replacing. In one generation, NVIDIA’s 24GB option drops from 49 seconds to 25 seconds when using OptiX, and by more than half with the CUDA-only render. It’s really hard not to be blown away by this performance, and it makes us hope that AMD has some surprises up its sleeve for its upcoming RDNA2 launch.
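A drop “from 49 seconds to 25 seconds” can be expressed either as a percentage reduction in render time or as a speedup factor, and the two are easy to conflate. The small helpers below (illustrative names) compute both for the OptiX figures quoted above.

```python
def time_reduction_pct(old_s: float, new_s: float) -> float:
    """Percentage by which render time shrank."""
    return (old_s - new_s) / old_s * 100


def speedup(old_s: float, new_s: float) -> float:
    """How many times faster the new render is (old time / new time)."""
    return old_s / new_s


# Blender Cycles with OptiX: TITAN RTX 49 s, RTX 3090 25 s (from the text)
print(f"{time_reduction_pct(49, 25):.0f}% less time")  # 49% less time
print(f"{speedup(49, 25):.2f}x faster")                 # 1.96x faster
```

A 50% reduction in time corresponds to a 2x speedup, which is why “cut in half” and “twice as fast” describe the same result.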

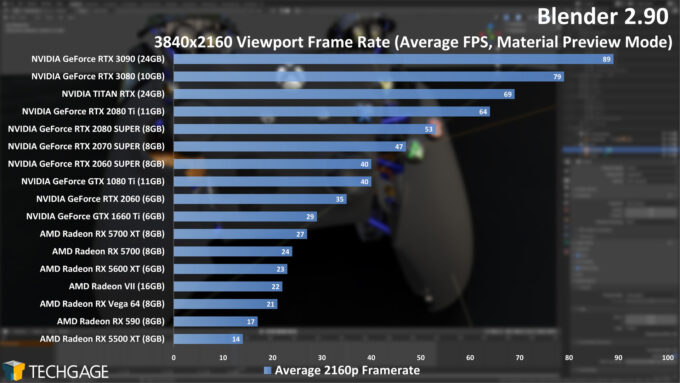

On any recent-generation GPU, viewport performance in Blender’s material preview mode should be pretty good, assuming the project isn’t too complex and you’re using a modest resolution. We didn’t include the 1080p and 1440p results here, as our Controller model doesn’t scale well at those resolutions due to what we’re fairly sure is a CPU bottleneck. At 4K, that becomes less of an issue.

Naturally, since the RTX 3090 is the fastest gaming and creator GPU on the planet, it shouldn’t surprise anyone that it’s also the most capable graphics card for Blender’s advanced viewport mode. We’re happy to see it still scale at the top end, gaining 20 FPS over the TITAN RTX and more than doubling what the old 1080 Ti could muster.

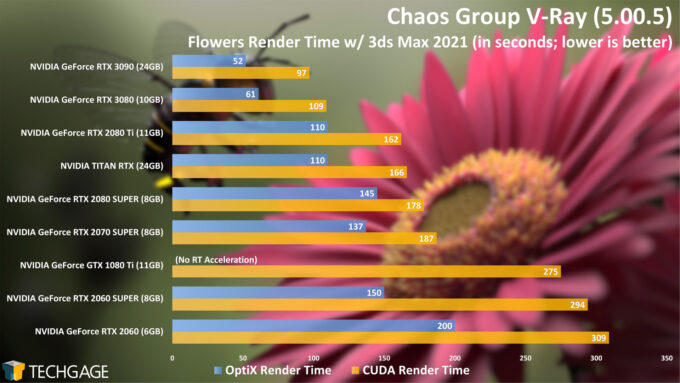

Chaos Group V-Ray 5

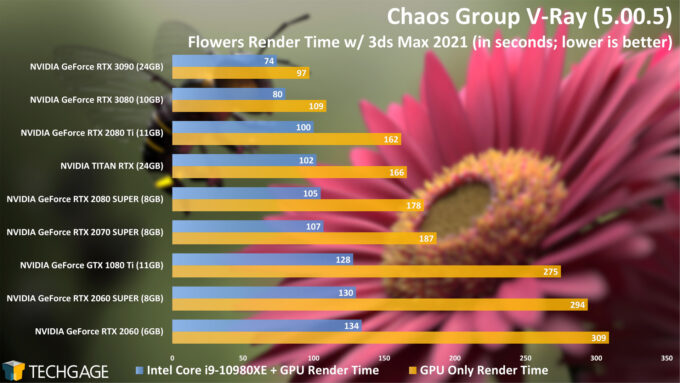

In our first V-Ray result, the RTX 3090’s gain over the RTX 3080 is smaller than in the other tests, although compared to the TITAN RTX, the RTX 3090 cleans house. Heterogeneous rendering, which pairs the GPU with a many-core CPU, can noticeably improve render times. NVIDIA’s OptiX aims to do the same, so let’s check those results out:

With OptiX enabled, both the RTX 3080 and RTX 3090 beat their fastest heterogeneous rendering results. Last generation, the top-end TITAN RTX rendered this project in 110 seconds, whereas the RTX 3090 cuts that by more than half, to 52 seconds. That is a tremendous gain from one generation to the next.

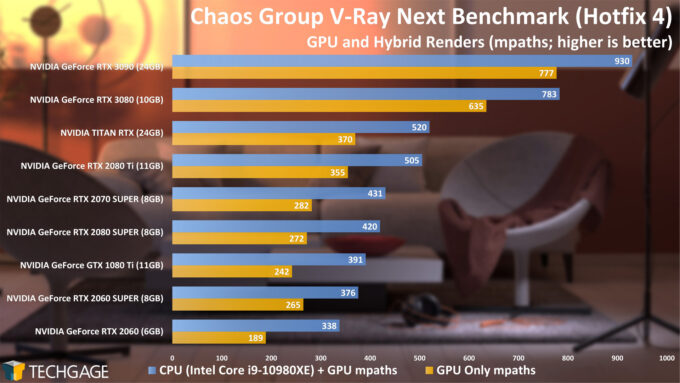

The standalone V-Ray benchmark, which is based on the older 4.X engine, puts the RTX 3090 in an even better light, with a 19% gain over the RTX 3080. We hope the next standalone V-Ray benchmark arrives sooner rather than later, with OptiX support built in, to better reflect modern performance in case our standalone project isn’t exercising the hardware fully.

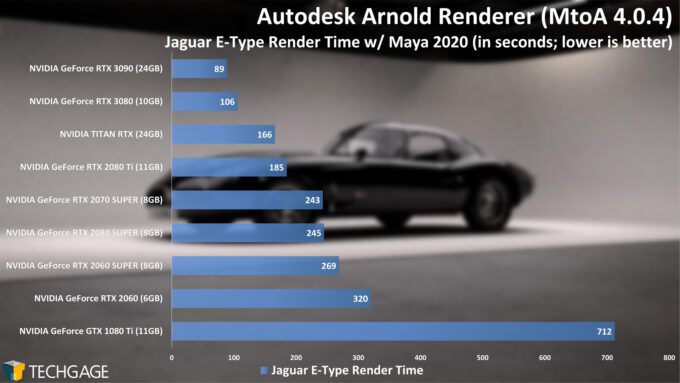

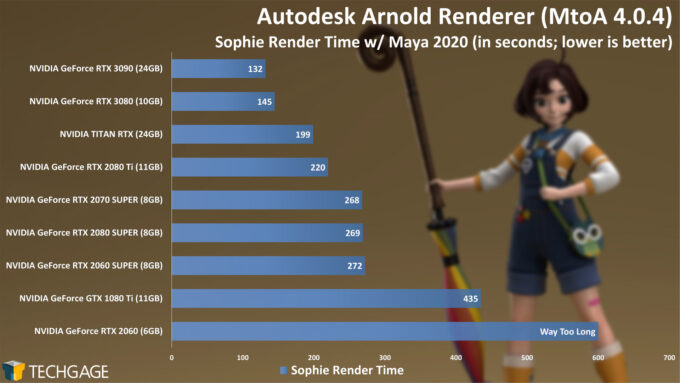

Autodesk Arnold 6

In an example of two projects not scaling exactly the same, the advantage of the RTX 3090 over the RTX 3080 was more pronounced in the E-Type test than in the Sophie one. As mentioned before, though, the best comparison for the RTX 3090 is last generation’s TITAN RTX, which also offered 24GB of memory, and against that card, some major generational leaps in performance can be seen.

Arnold uses OptiX by default, and if RT cores are present, they’re automatically used in the render. Unlike some of the other renderers here, Arnold offers no RTX off option to test, but when comparing against the older GTX 1080 Ti, we hardly need an RTX off value. The performance gain between that GPU from three-and-a-half years ago and the new RTX 3080, which carries the same SRP, is simply incredible. And to think, Arnold was a CPU-only renderer not too long ago.

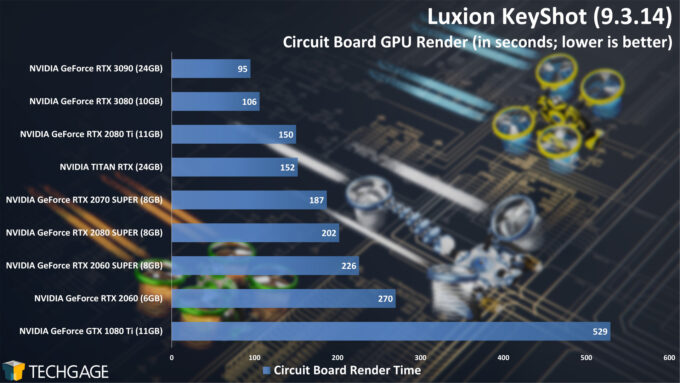

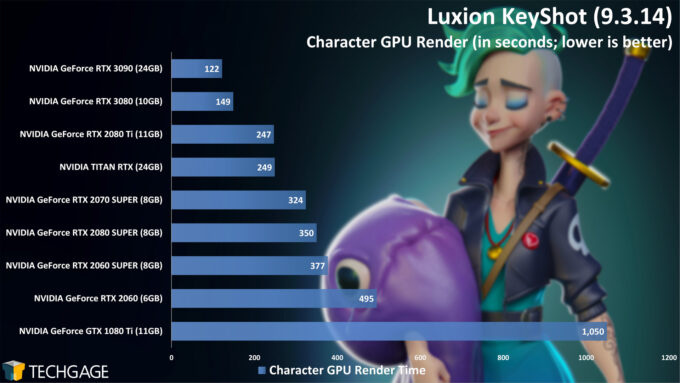

Luxion KeyShot 9

Interestingly, our KeyShot results follow in the same footsteps as our Arnold results, in that one project flexes the RTX 3090’s brawn better than the other. With the Character render, it’s nice to see the RTX 3090 pull a bit ahead of the RTX 3080, and literally halve the rendering time when compared against the TITAN RTX.

This set of results shows yet again just how much slower the 1080 Ti is in rendering than more modern GeForces. All things considered, the 1080 Ti is still a strong GPU, but if you’re spending a lot of your time rendering, you really owe it to yourself to make the move to a newer card. Even last-gen’s smallest GeForce RTX card managed to cut the render time of the 1080 Ti in half.

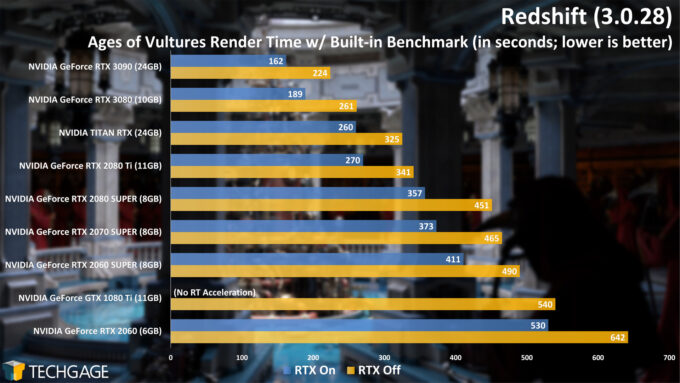

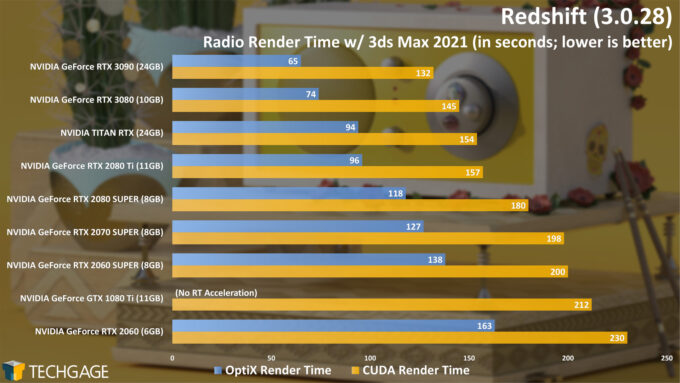

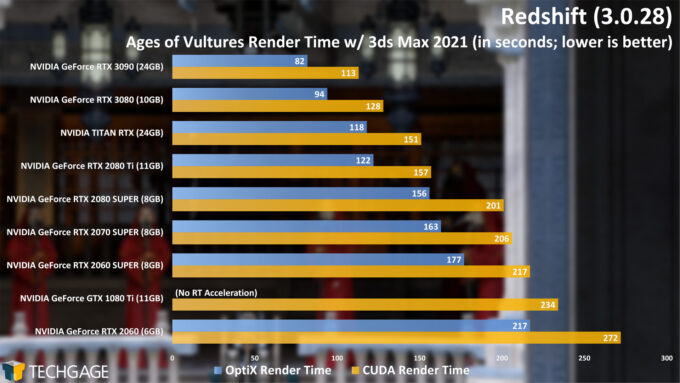

Maxon Redshift 3

After we posted our look at NVIDIA’s RTX 3080 last week, we received multiple requests to retest Redshift using its built-in benchmark. When we first dove into Redshift testing, we didn’t realize a standalone benchmark existed, but going forward, we’ll be sure to include the results from it, as well as have a second opinion via our usual 3ds Max test.

In this benchmark, the RTX 3090 again pulls ahead of the RTX 3080 with a comfortable, but not mind-blowing, lead. The difference between RTX on and off is what’s really notable here: enabling RTX gives a quick and simple boost to rendering performance that you’ll actually notice. The RTX 3090 didn’t quite halve the TITAN RTX’s render time in this test like we’ve seen in some others, but we suspect performance will improve over time as more updates roll out (either to Redshift or to NVIDIA’s own graphics drivers).

Here’s a look at real-world performance using 3ds Max:

For the most part, our real-world Ages of Vultures project render scales the same way as the standalone benchmark, which is good to see. It’s also something that will likely lead us to abandon that AoV real-world test and stick to the Radio one instead. That project, which is lighter in nature, shows a far greater delta between the RTX off and on modes.

Ultimately, the obvious takeaway from these results is that you definitely want RTX enabled. Note that it isn’t enabled by default, so you’ll need to dig through Redshift’s settings to turn it on.

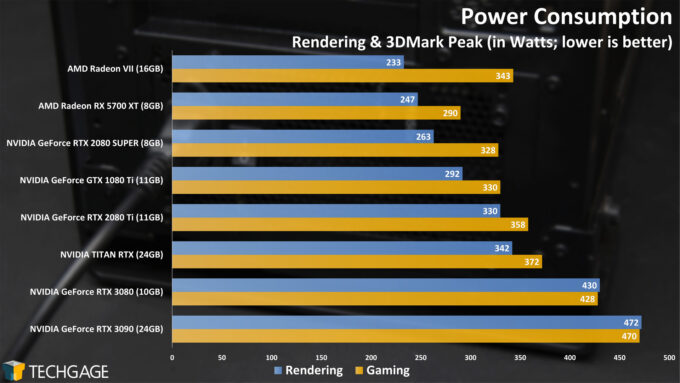

Power & Temperatures

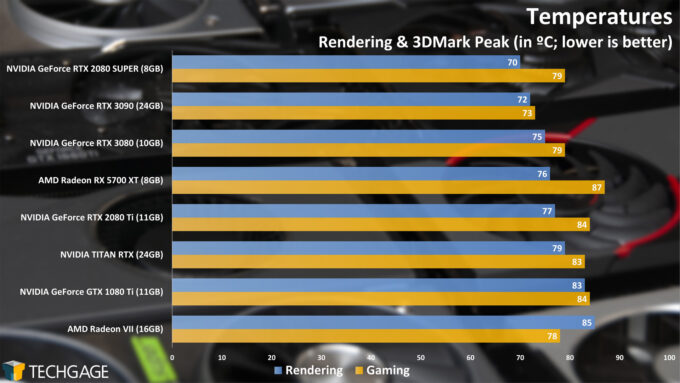

To test this collection of GPUs for power and temperatures, we use both rendering and gaming workloads. On the rendering side, we run the Classroom project in Blender; for gaming, we use 3DMark’s Fire Strike 4K stress test. Both workloads are run for ten minutes, with the peak values recorded. Power was recorded at the wall with a Kill A Watt meter, measuring full-system power draw during each workload. Temperature was recorded by the card’s own temperature sensor.

The RTX 3080 gave us interesting results last week, when the rendering and gaming stress tests both peaked at the same values (even after a retest to verify). Perhaps less interestingly, the RTX 3090 behaves exactly the same way for us. Ultimately, the RTX 3090 can prove up to twice as fast as the TITAN RTX, and it draws an extra 100W to help accomplish that.
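Drawing more power while finishing far sooner can still mean better efficiency. The sketch below estimates relative performance per watt, and energy per finished render, from the best-case 2x speedup and the rated board TDPs in the lineup table (350W vs. 280W). It deliberately uses TDP rather than our at-the-wall readings, which include the rest of the system, so treat it as a rough board-level estimate; the helper names are illustrative.

```python
def relative_perf_per_watt(speedup: float, new_watts: float, old_watts: float) -> float:
    """Performance per watt of the new card relative to the old one."""
    return speedup * old_watts / new_watts


def relative_energy_per_render(speedup: float, new_watts: float, old_watts: float) -> float:
    """Energy used per finished render, new card relative to old (power x time)."""
    return (new_watts / old_watts) / speedup


# Best-case 2x speedup; rated board TDPs from the lineup table (RTX 3090 vs TITAN RTX)
print(f"{relative_perf_per_watt(2.0, 350, 280):.2f}x perf per watt")          # 1.60x perf per watt
print(f"{relative_energy_per_render(2.0, 350, 280):.3f}x energy per render")  # 0.625x energy per render
```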

Temperature-wise, the RTX 3090 actually ran cooler than the RTX 3080, which is perhaps not a big surprise considering how massive its cooler is. In our limited testing, it also proved quiet compared to the rest of the tested cards. You can hear it, but even at full load, it’s pretty modest. Cards like AMD’s Radeon VII stood out like a sore thumb from a noise perspective, but the RTX 3090 didn’t stand out any more than any other recent NVIDIA card we’ve tested.

Final Thoughts

NVIDIA’s GeForce RTX 3090 is an interesting card for many reasons, and it’s harder to summarize than the RTX 3080 was, simply due to its top-end price and goals. The RTX 3080, priced at $699, was really easy to recommend to anyone wanting a new top-end gaming solution, because compared to the last-gen 2080S, 2080 Ti, or even TITAN RTX, the new card simply trounced them all.

The GeForce RTX 3090, with its $1,499 price tag, caters to a different crowd. First, there are going to be those folks who simply want the best gaming or creator GPU possible, regardless of its premium price. We saw throughout our performance results that the RTX 3090 does manage to take a healthy lead in many cases, but its gains over the RTX 3080 are likely not as pronounced as many had hoped.

The biggest selling-point of the RTX 3090 is undoubtedly its massive frame buffer. For creators, having 24GB on tap likely means you will never run out during this generation, and if you manage to, we’re going to be mighty impressed. We do see more than 24GB being useful for deep-learning and AI research, but even there, it’s plenty for the vast majority of users.

Interestingly, this GeForce supports NVLink, so those who plug two of them into a machine can pool their VRAM into a single 48GB frame buffer in applications that support NVLink memory pooling. Two of these cards would cost $500 more than a TITAN RTX, and obliterate it in rendering and deep-learning workloads (while drawing a lot more power, of course).
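The arithmetic behind the two-card configuration is sketched below, using SRPs and memory sizes from the lineup table. The pooled figure assumes an application that supports NVLink memory pooling, and should be treated as an upper bound rather than guaranteed usable capacity; the `Card` type and `nvlink_pair` helper are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    vram_gb: int
    price_usd: int


RTX_3090 = Card("GeForce RTX 3090", 24, 1_499)
TITAN_RTX = Card("TITAN RTX", 24, 2_499)


def nvlink_pair(card: Card) -> tuple[int, int]:
    """Upper-bound pooled VRAM (GB) and total price (USD) for two NVLinked cards."""
    return card.vram_gb * 2, card.price_usd * 2


pooled_gb, pair_price = nvlink_pair(RTX_3090)
print(f"Pooled VRAM: up to {pooled_gb}GB")  # Pooled VRAM: up to 48GB
print(f"Extra cost vs. one TITAN RTX: ${pair_price - TITAN_RTX.price_usd}")  # $499
```

The exact difference is $499, which the text rounds to $500.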

For those wanting to push things even harder with a single GPU, we suspect NVIDIA will release a new TITAN at some point with even more memory. Or, that’s at least our hope, because we don’t want to see the TITAN series just up and disappear.

For gamers, a 24GB frame buffer can only be justified if you’re using top-end resolutions. Not even 4K is going to be problematic for most people with a 10GB frame buffer, but as we move up the scale, to 5K and 8K, that memory is going to become a lot more useful.

By now, you likely know whether or not the monstrous GeForce RTX 3090 is for you. Fortunately, if it isn’t, the RTX 3080 hasn’t gone anywhere, and it still offers great value (you know, if you can find it in stock) for its $699 price. NVIDIA also has a $499 RTX 3070 en route next month, so all told, the company is going to be taking good care of its enthusiast fans with this trio of GPUs. That said, we still look forward to the lower-end parts, as those could offer even better value than the bigger cards.

If you have questions that weren’t answered here, please let us know in the comments. We’re of course not finished testing the RTX 3090, and we’ll continue to augment our benchmarks over time to better accommodate the extra memory, and also offer other perspectives to how these cards can be used. We’re not going to be bored for a while yet!
