NVIDIA GeForce RTX 4090: The New Rendering Champion

NVIDIA has just kicked off its Ada Lovelace generation with a new flagship GPU: GeForce RTX 4090. Our first performance look will revolve around rendering, and once you see the results, you’ll understand why. Just as Ampere brought huge rendering gains over Turing, Ada Lovelace does the same over Ampere.

NVIDIA made some bold claims when it announced its Ada Lovelace GeForce generation a couple of weeks ago. Even if you ignored those claims and stuck to the paper specs, the specs alone implied an effective doubling of ray tracing performance. After testing a range of popular render engines, we can confirm that the hype converts to reality in this case.

We’re not quite through benchmarking the nine additional graphics cards that will be piled on top of results published in our Intel Arc A750 and A770 review from last week, so we wanted to dedicate this first GeForce RTX 4090 performance look to rendering. With such large gains, it almost deserves a dedicated piece.

While this article revolves around rendering performance in 3D design software, our future looks are going to expand the creator angle to involve encoding, photogrammetry, AI, and math workloads, and naturally, gaming tests are in store, as well. There’s only so much benchmarking that can get done in any given day.

As covered before, the Ada Lovelace generation brings 4th-gen Tensor cores and improved Optical Flow to the table. In creation, these accelerate things like denoising, while for gaming use, they’re taken advantage of for upscaling through DLSS – which is now at version 3. On the ray tracing core side, Ada Lovelace introduces the 3rd-gen implementation, which promises (and largely delivers) a 2x performance boost over the Ampere generation.

Another feature worth noting is Shader Execution Reordering, which further improves ray tracing performance, including in games, where one example shows a 44% bump in Cyberpunk 2077. Also, Intel was first to market with an AV1 accelerated GPU encoder, and NVIDIA wasn’t far behind, as Ada Lovelace packs one in, as well. Intriguingly, NVIDIA offers dual encoders on-board, which it claims will quite literally halve encode times. We’ll explore this with our upcoming full creator performance look.

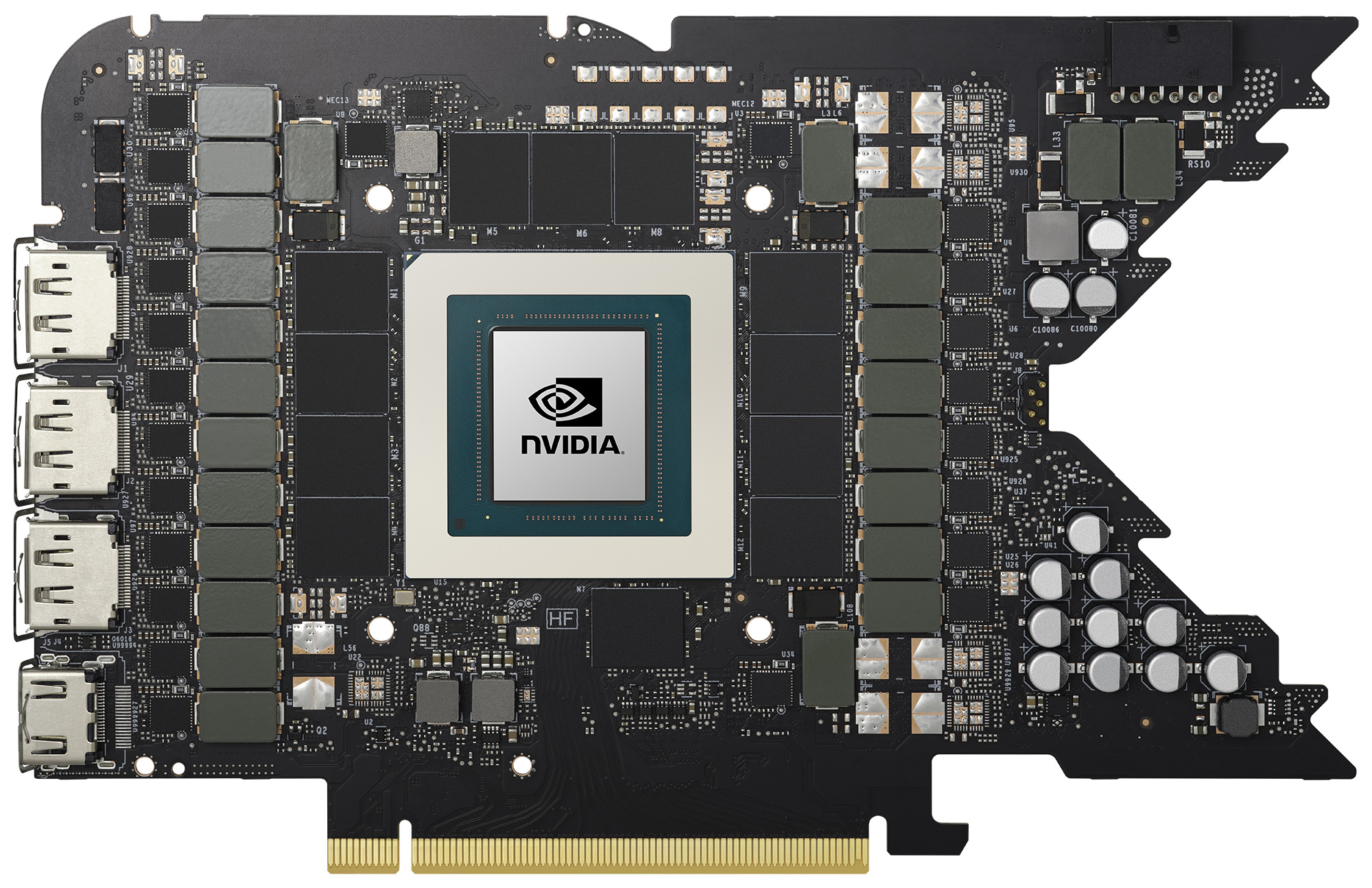

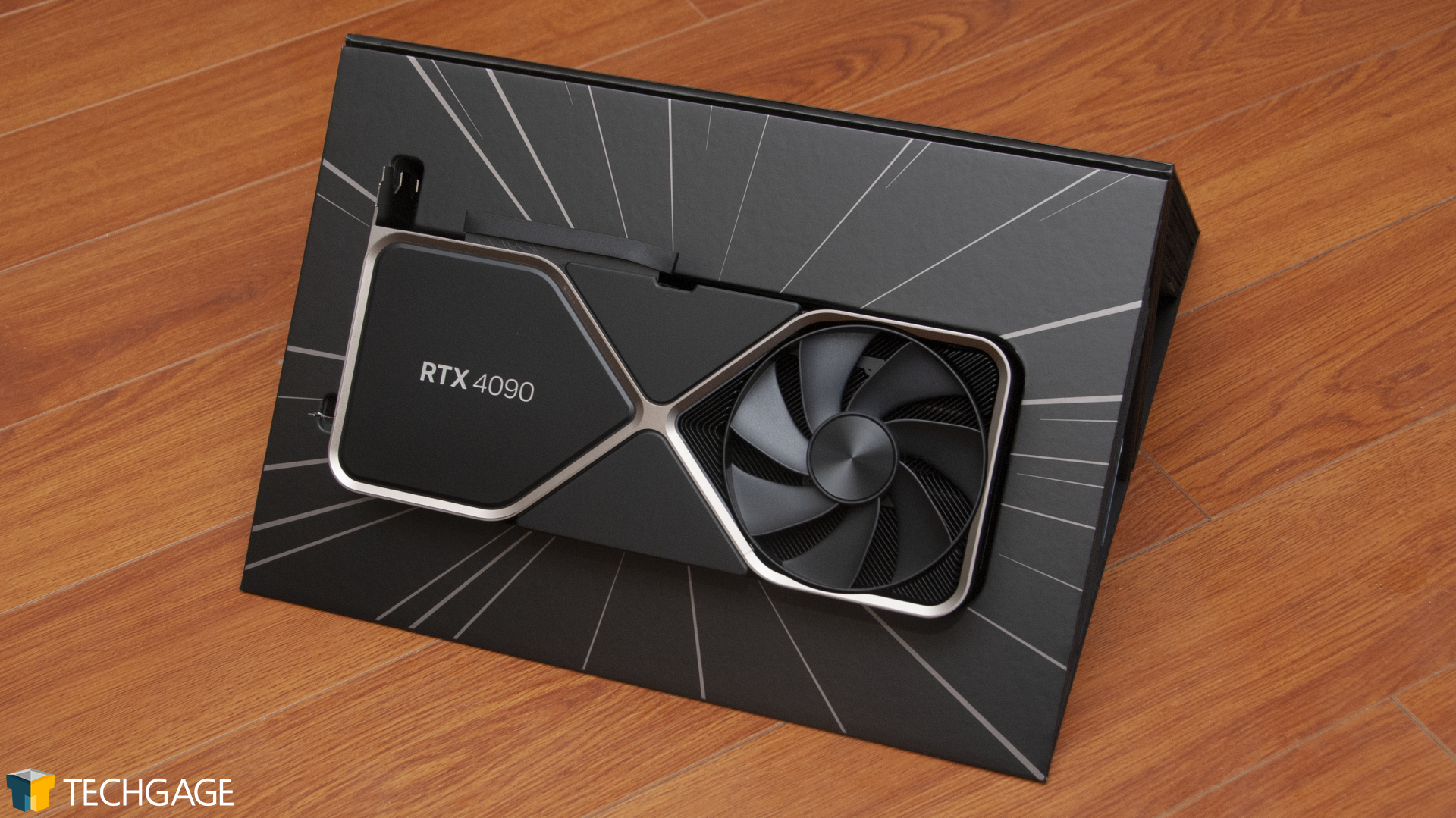

Before jumping into a look at rendering performance with NVIDIA’s newest flagship, let’s take a look at the hardware, with NVIDIA’s Founders Edition:

If the RTX 4090 looks large, that’s because it is. When the RTX 3090 launched two years ago, we were amazed at just how bulky the FE card was – and here, the RTX 4090 is even larger. Thankfully, it’s just the width of the card that’s increased, not length (or else we would have been in trouble with our test machine).

Spec’d at 450W, the RTX 4090 is a power-hungry GPU, and thus the bigger the PSU, the better. NVIDIA’s stated minimum is 850W, and as we’ve peaked at 650W while running an intensive 3DMark test, we’d say that’s accurate. If you’re building a new machine around the RTX 4090, however, we’d highly suggest going for at least a 1,000W model.
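As a rough illustration of that PSU advice, the sketch below works out how heavily each supply would be loaded at the peak draw we saw. It assumes the 650W figure is whole-system draw, and `psu_load_fraction` is a hypothetical helper name used only for this example.

```python
def psu_load_fraction(peak_draw_w: float, psu_rating_w: float) -> float:
    """Return the share of a PSU's rated capacity used at a given peak draw."""
    if psu_rating_w <= 0:
        raise ValueError("PSU rating must be positive")
    return peak_draw_w / psu_rating_w


# Peak reported in this article; assumed here to be whole-system draw.
PEAK_DRAW_W = 650

# 850W is NVIDIA's stated minimum; 1,000W is our suggestion for new builds.
for rating_w in (850, 1000):
    load = psu_load_fraction(PEAK_DRAW_W, rating_w)
    headroom_w = rating_w - PEAK_DRAW_W
    print(f"{rating_w}W PSU: {load:.0%} load, {headroom_w}W headroom")
```

Under that assumption, the 850W minimum runs at roughly three-quarters of its rating at peak, while a 1,000W unit sits closer to two-thirds.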

Because the RTX 4090 demands so much juice, 3x 8-pin power connectors are required, or a single power connector provided with a new PCIe 5-supported PSU. As our test rig’s PSU just matches the minimum requirement, we’ll be shifting to a bigger one in the future.

With regard to the RTX 4090’s cooler, the design remains similar to that seen on the last generation with the RTX 3090, but under-the-hood improvements have benefited temperatures. The latest model has a larger fan with fewer blades. We haven’t done thorough temperature testing yet, but we have tested the RTX 4090 enough to see that it draws an additional 100W over the 3090 (650W total) during a 3DMark Fire Strike Ultra test.

| NVIDIA’s GeForce Gaming & Creator GPU Lineup | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | SRP |
| --- | --- | --- | --- | --- | --- | --- | --- |
| RTX 4090 | 16,384 | 2,520 | 82.6 TFLOPS | 24GB GDDR6X | 1,008 GB/s | 450W | $1,599 |
| RTX 4080 16GB | 9,728 | 2,510 | 48.8 TFLOPS | 16GB GDDR6X | 717 GB/s | 320W | $1,199 |
| RTX 3090 Ti | 10,752 | 1,860 | 40 TFLOPS | 24GB GDDR6X | 1,008 GB/s | 450W | $1,999 |
| RTX 3090 | 10,496 | 1,700 | 35.6 TFLOPS | 24GB GDDR6X | 936 GB/s | 350W | $1,499 |
| RTX 3080 Ti | 10,240 | 1,670 | 34.1 TFLOPS | 12GB GDDR6X | 912 GB/s | 350W | $1,199 |
| RTX 3080 | 8,704 | 1,710 | 29.7 TFLOPS | 10GB GDDR6X | 760 GB/s | 320W | $699 |
| RTX 3070 Ti | 6,144 | 1,770 | 21.7 TFLOPS | 8GB GDDR6X | 608 GB/s | 290W | $599 |
| RTX 3070 | 5,888 | 1,730 | 20.4 TFLOPS | 8GB GDDR6 | 448 GB/s | 220W | $499 |
| RTX 3060 Ti | 4,864 | 1,670 | 16.2 TFLOPS | 8GB GDDR6 | 448 GB/s | 200W | $399 |
| RTX 3060 | 3,584 | 1,780 | 12.7 TFLOPS | 12GB GDDR6 | 360 GB/s | 170W | $329 |
| RTX 3050 | 2,560 | 1,780 | 9.0 TFLOPS | 8GB GDDR6 | 224 GB/s | 130W | $249 |

Notes: RTX 3000 = Ampere; RTX 4000 = Ada Lovelace.
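For readers wondering where the Peak FP32 column comes from, it can be approximated from the core count and boost clock. The sketch below assumes each CUDA core retires one fused multiply-add (counted as two floating-point operations) per clock; `peak_fp32_tflops` is a hypothetical helper name for illustration.

```python
def peak_fp32_tflops(cores: int, boost_mhz: float,
                     flops_per_core_per_clock: int = 2) -> float:
    """Estimate peak FP32 throughput in TFLOPS.

    Assumes one fused multiply-add (2 FLOPs) per core per clock.
    """
    flops_per_second = cores * boost_mhz * 1e6 * flops_per_core_per_clock
    return flops_per_second / 1e12


# Core counts and boost clocks taken from the table above.
print(round(peak_fp32_tflops(16_384, 2_520), 1))  # RTX 4090      -> 82.6
print(round(peak_fp32_tflops(9_728, 2_510), 1))   # RTX 4080 16GB -> 48.8
```

Some of the Ampere rows can land a digit off with this estimate, presumably because the boost clocks listed in the table are rounded.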

While the RTX 4090 is the first Ada Lovelace GeForce to release, NVIDIA’s already announced the next two: RTX 4080 16GB, and RTX 4080 12GB. Despite sharing the same model name, the 16GB 4080 is going to be much faster than the 12GB variant, in both raw performance and memory bandwidth. The 4080 12GB has a 192-bit memory bus width, which seems extraordinarily odd for a premium product. We’ll have to wait until that SKU gets benchmarked so we can see how much of a limitation that will ultimately be.

Update: NVIDIA has since “unlaunched” its 12GB RTX 4080 variant.

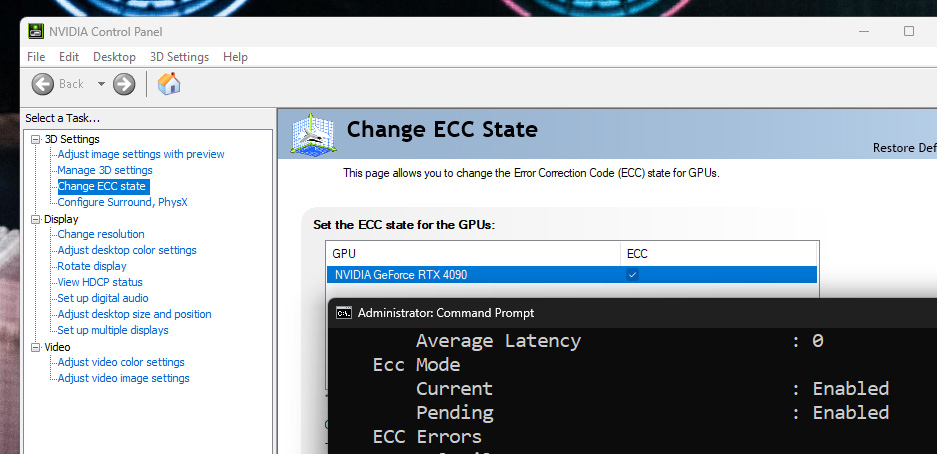

During testing, one thing caught us off-guard with the RTX 4090: it features ECC memory. At first, we thought the option in the driver could have been a bug, but not so. It enables just fine:

After pinging NVIDIA about this, we realized that the RTX 3090 Ti also included ECC memory. We’re not entirely sure why the company decided to put ECC memory in a card focused on creator and gaming, but we suppose it’d be a nice feature for those who truly need it, and can score it on a GPU that’s not a more expensive workstation or Tesla card.

In quick tests, enabling ECC memory dropped the benchmarked bandwidth from 845 GB/s down to 742 GB/s. Comparatively, enabling ECC memory on the Quadro RTX 6000 dropped bandwidth from 513 GB/s to 433 GB/s.
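To put those ECC numbers in relative terms, here is a small sketch that converts the before and after bandwidth figures into a percentage drop. The figures are the ones quoted above; `percent_drop` is a hypothetical helper name.

```python
def percent_drop(before: float, after: float) -> float:
    """Return how much lower `after` is than `before`, as a percentage."""
    return (before - after) / before * 100


# (ECC off, ECC on) benchmarked bandwidth in GB/s, from our quick tests.
measurements = {
    "GeForce RTX 4090": (845, 742),
    "Quadro RTX 6000": (513, 433),
}

for gpu, (ecc_off, ecc_on) in measurements.items():
    drop = percent_drop(ecc_off, ecc_on)
    print(f"{gpu}: {drop:.1f}% lower bandwidth with ECC enabled")
```

By this measure, the RTX 4090 gives up about 12% of its benchmarked bandwidth with ECC enabled, versus roughly 16% on the Quadro RTX 6000.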

With that all covered, let’s take a quick look at our test PC’s specs:

| Techgage Workstation Test System | |
| --- | --- |
| Processor | AMD Ryzen 9 5950X (16-core; 3.4GHz) |
| Motherboard | ASRock X570 TAICHI (EFI: P4.80 03/02/2022) |
| Memory | Corsair Vengeance RGB Pro (CMW32GX4M4C3200C16) 8GB x 4<br>Operates at DDR4-3200 16-18-18 (1.35V) |
| AMD Graphics | AMD Radeon RX 6900 XT (16GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6800 XT (16GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6800 (16GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6700 XT (12GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6600 XT (8GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6600 (8GB; Adrenalin 22.9.1)<br>AMD Radeon RX 6500 XT (4GB; Adrenalin 22.9.1) |
| Intel Graphics | Intel Arc A770 (16GB; Arc 31.0.101.3435)<br>Intel Arc A750 (8GB; Arc 31.0.101.3435)<br>Intel Arc A380 (6GB; Arc 31.0.101.3430) |
| NVIDIA Graphics | NVIDIA GeForce RTX 4090 (24GB; GeForce 521.90)<br>NVIDIA GeForce RTX 3090 (24GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3080 Ti (12GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3080 (10GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3070 Ti (8GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3070 (8GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3060 Ti (8GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3060 (12GB; GeForce 516.94)<br>NVIDIA GeForce RTX 3050 (8GB; GeForce 516.94) |
| Audio | Onboard |
| Storage | Samsung 500GB SSD (SATA) (x3) |
| Power Supply | Corsair RM850X |
| Chassis | Fractal Design Define C Mid-Tower |
| Cooling | Corsair Hydro H100i PRO RGB 240mm AIO |
| Et cetera | Windows 11 Pro build 22000 (22H1)<br>AMD chipset driver 4.08.09.2337 |


Our look at rendering performance will revolve around seven different solutions. Both Blender and LuxCoreRender can run on GPUs from all three graphics vendors, while Arnold, KeyShot, Octane, Redshift, and V-Ray are currently NVIDIA-only.

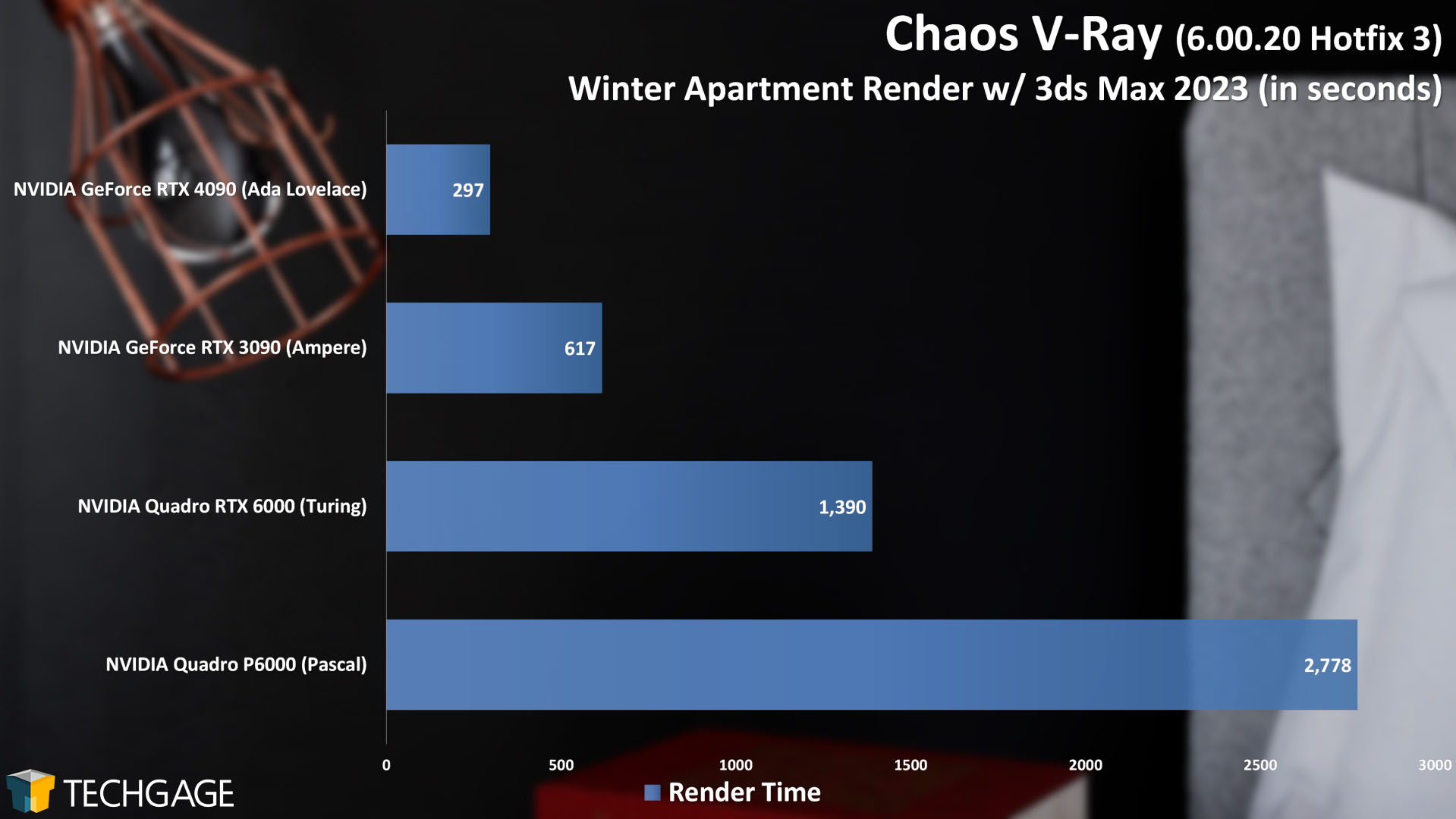

To kick off our look at rendering performance with the RTX 4090, we couldn’t help but test a few generations of top-end GPUs to highlight how far we’ve come in a relatively short amount of time. To do this, we grabbed a handful of 24GB GPUs, and the Chaos V-Ray project Winter Apartment, which requires over 16GB of VRAM to render. Here are those results:

This is one of those times when a picture’s worth a thousand words. In four generations, NVIDIA’s top-end SKU slices this project’s render times to almost 10% of what we saw with a top-end Pascal card. When we reviewed that Quadro P6000 in 2017, we called it the “fastest graphics card ever”. Well, it’s now clear that if you’re still rocking that class and generation of GPU, you’re sorely missing out on huge rendering performance gains.

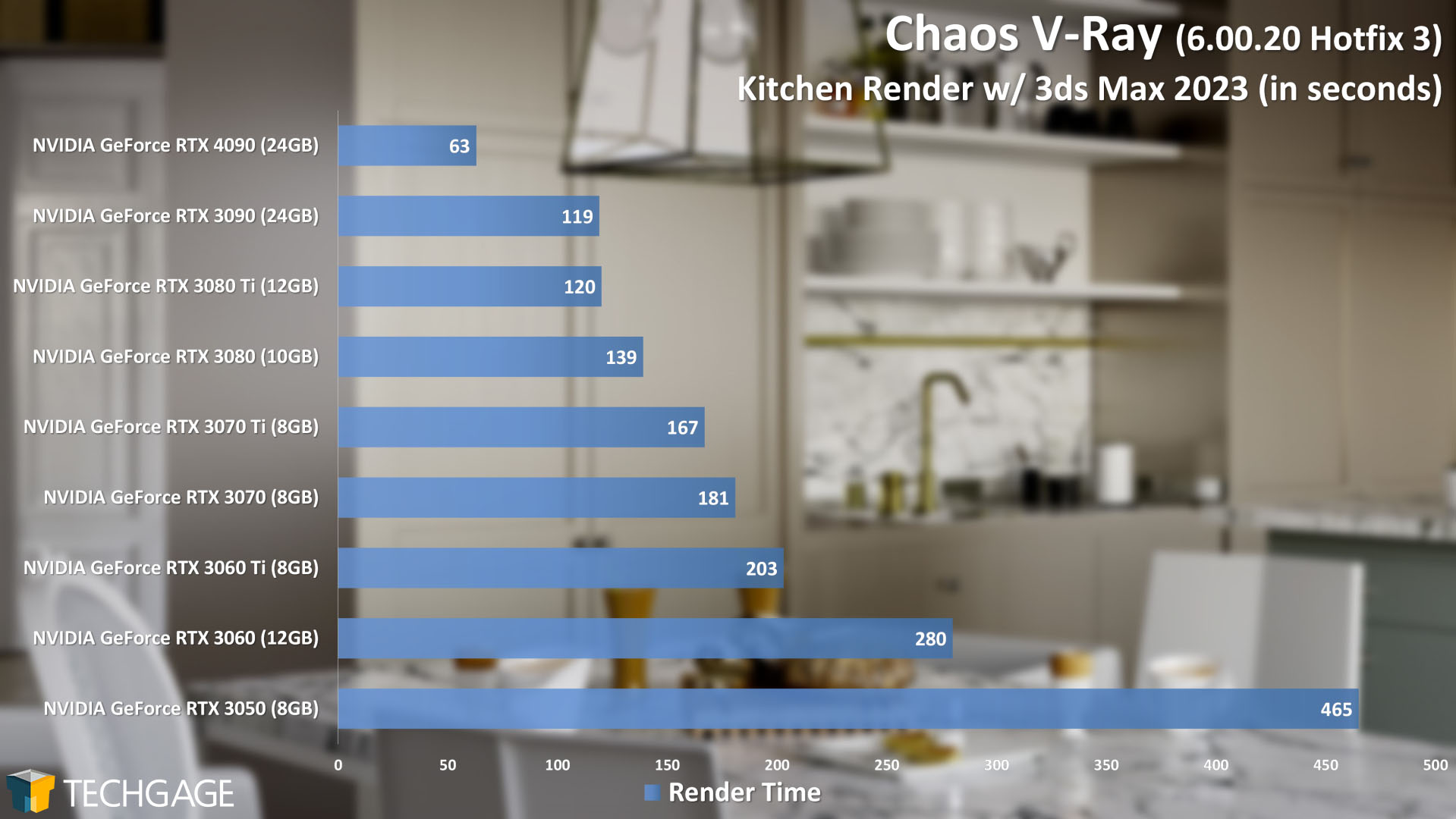

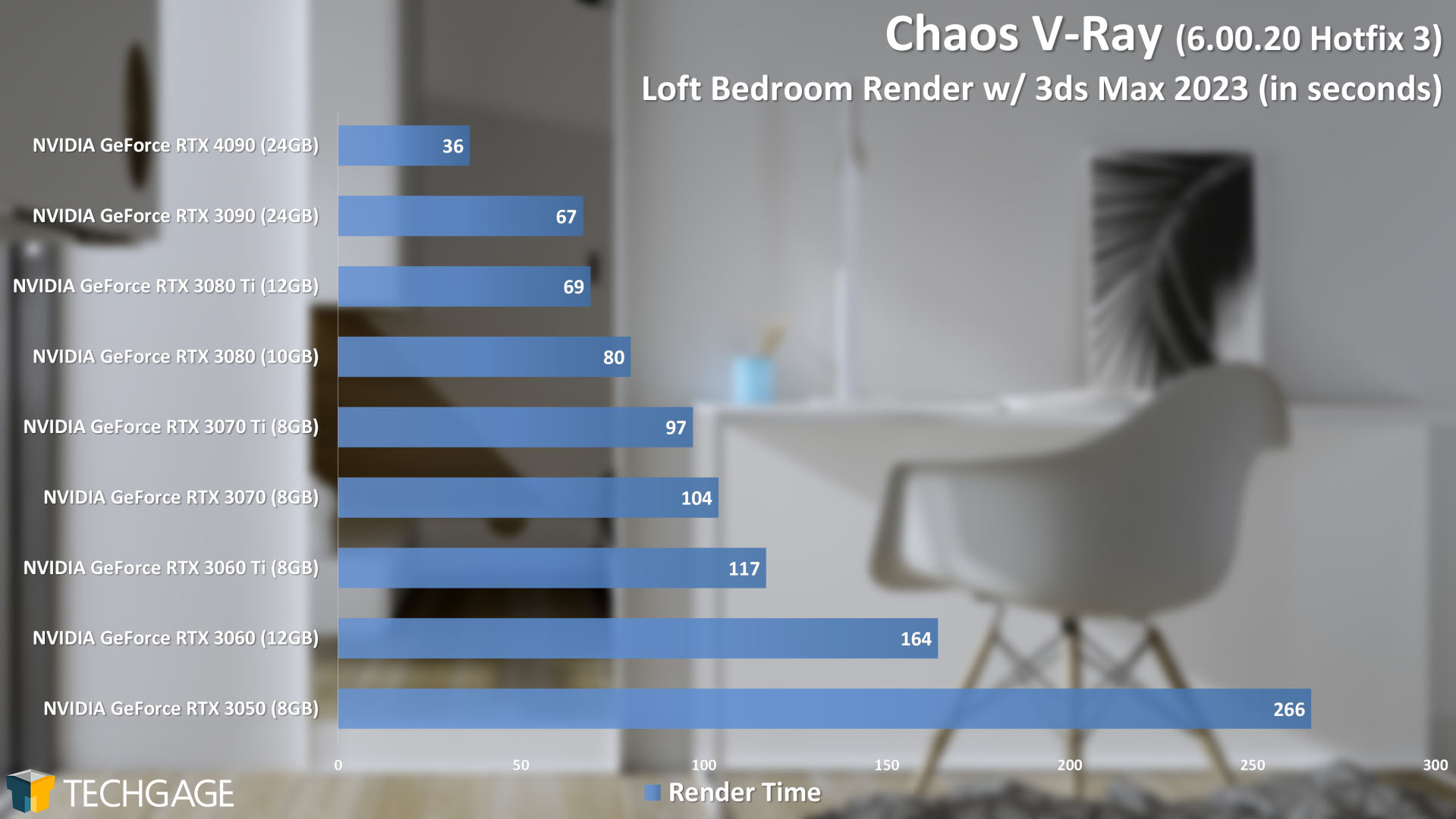

A couple of other V-Ray projects show tremendous gains, as well. It’s not quite an exact halving of render times vs. RTX 3090, but it’s close:

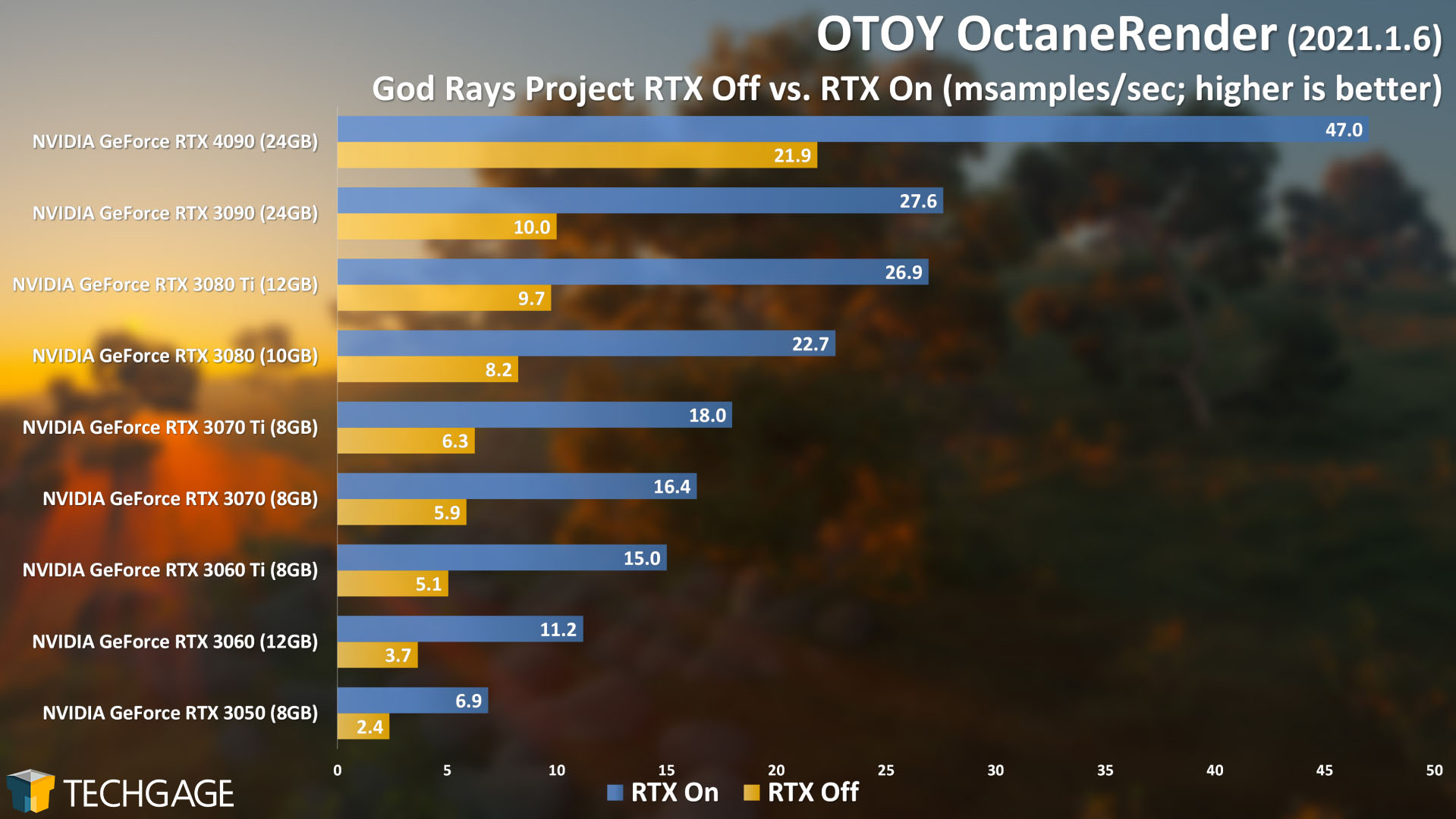

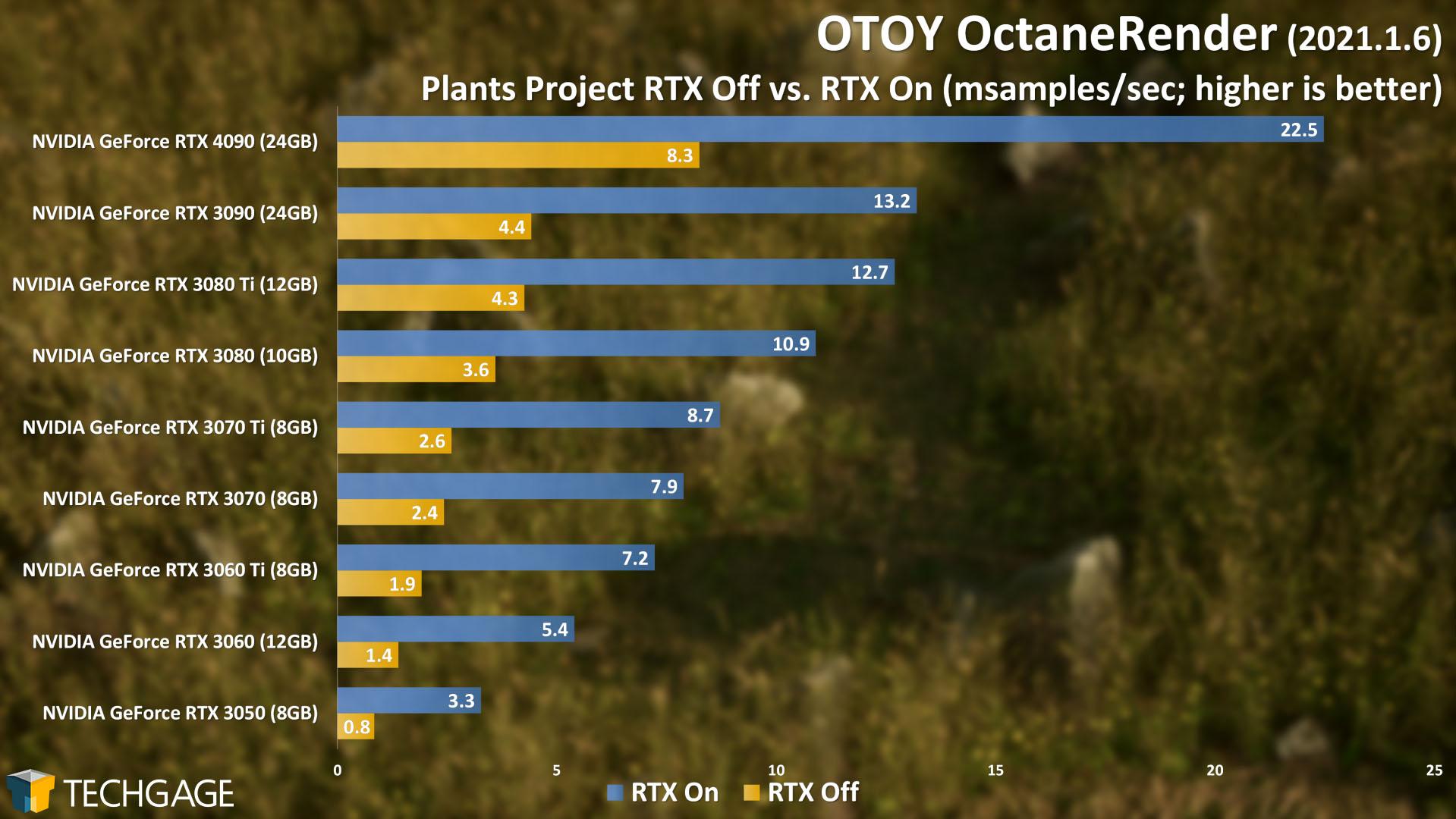

With OctaneRender, the performance advantage of the RTX 4090 across two projects is about 70%, and obviously quite a bit better when opting to use OptiX over CUDA:

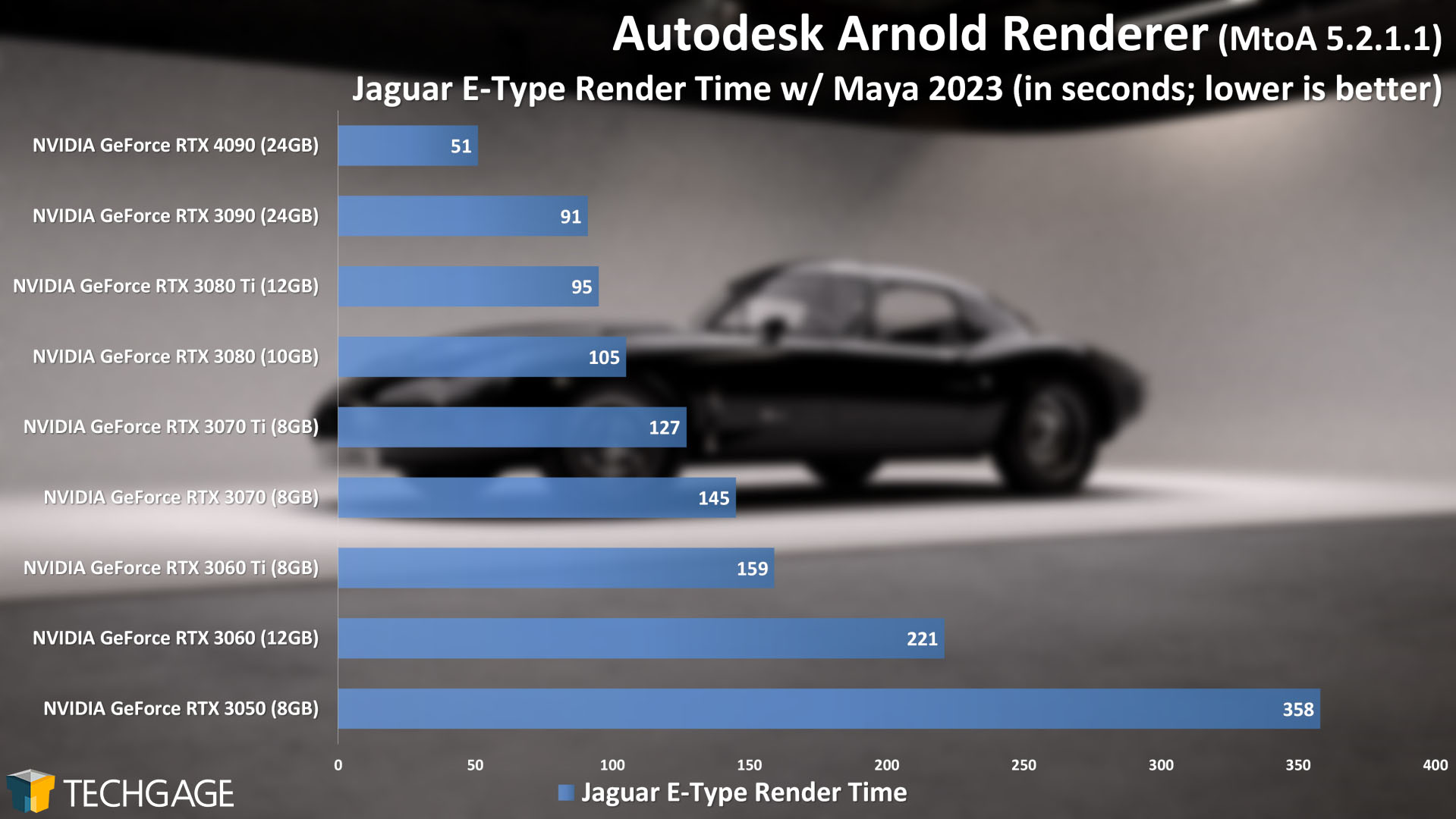

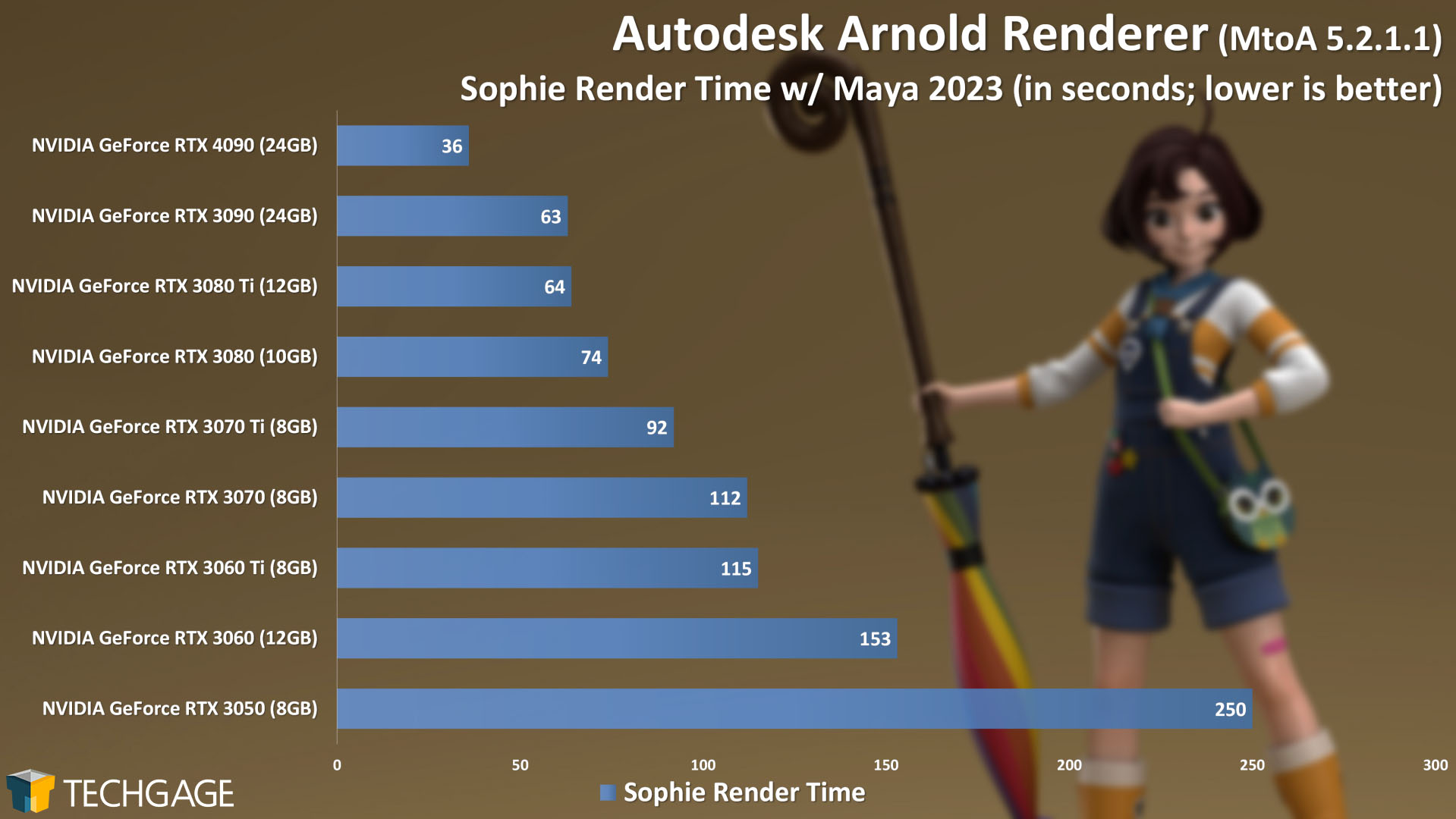

With Arnold, we see similar leaps in performance as we did with Octane; not quite double, but still a massive reduction in render times:

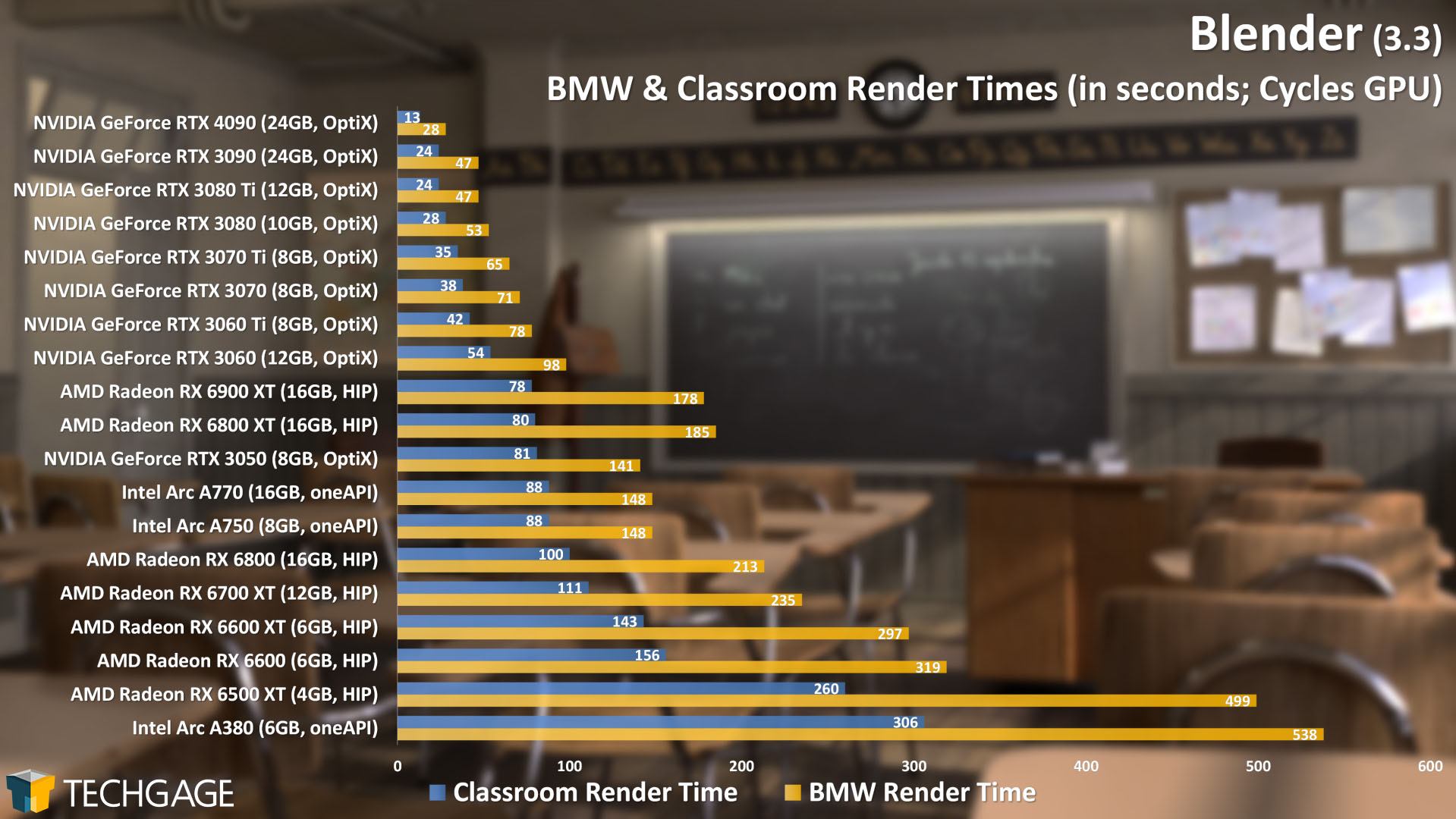

Continuing on to a look at Blender, the Cycles engine also benefits heavily from NVIDIA’s latest architecture:

A couple of years ago, we adjusted some of our long-standing Blender projects because they were rendering too quickly on new high-end hardware, and we’re starting to see the same thing happen again here. The overall performance gains across these four projects are not as pronounced as some of the others, but they’re still undeniably huge. Especially when you’re rendering multiple frames for a given scene, the overall time savings add up.

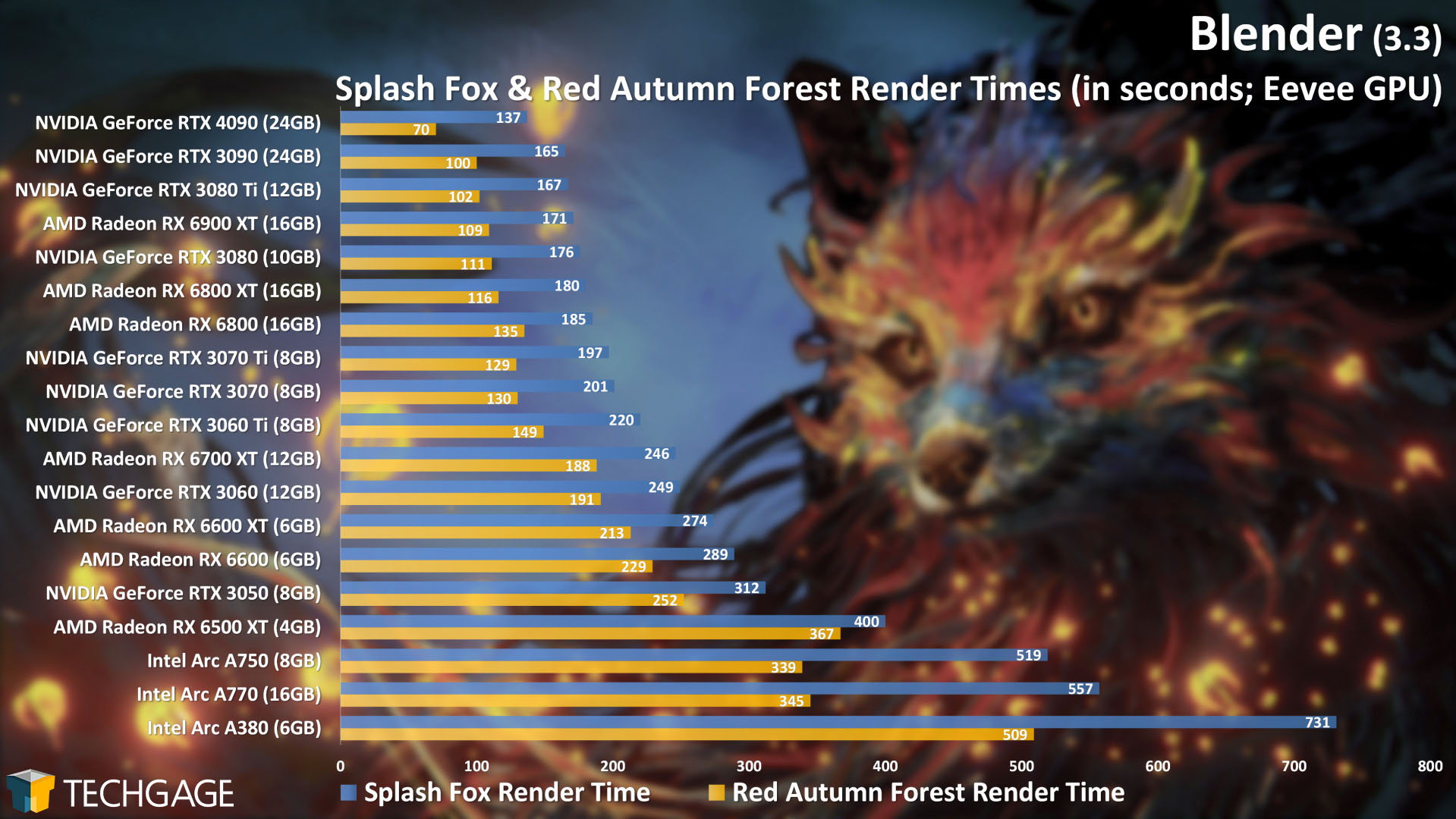

With Eevee, which doesn’t take advantage of accelerated ray tracing, we still see notable gains. They’re not quite as stark as the rest in this article, but viewed on their own, the upticks in rendering performance are still impressive:

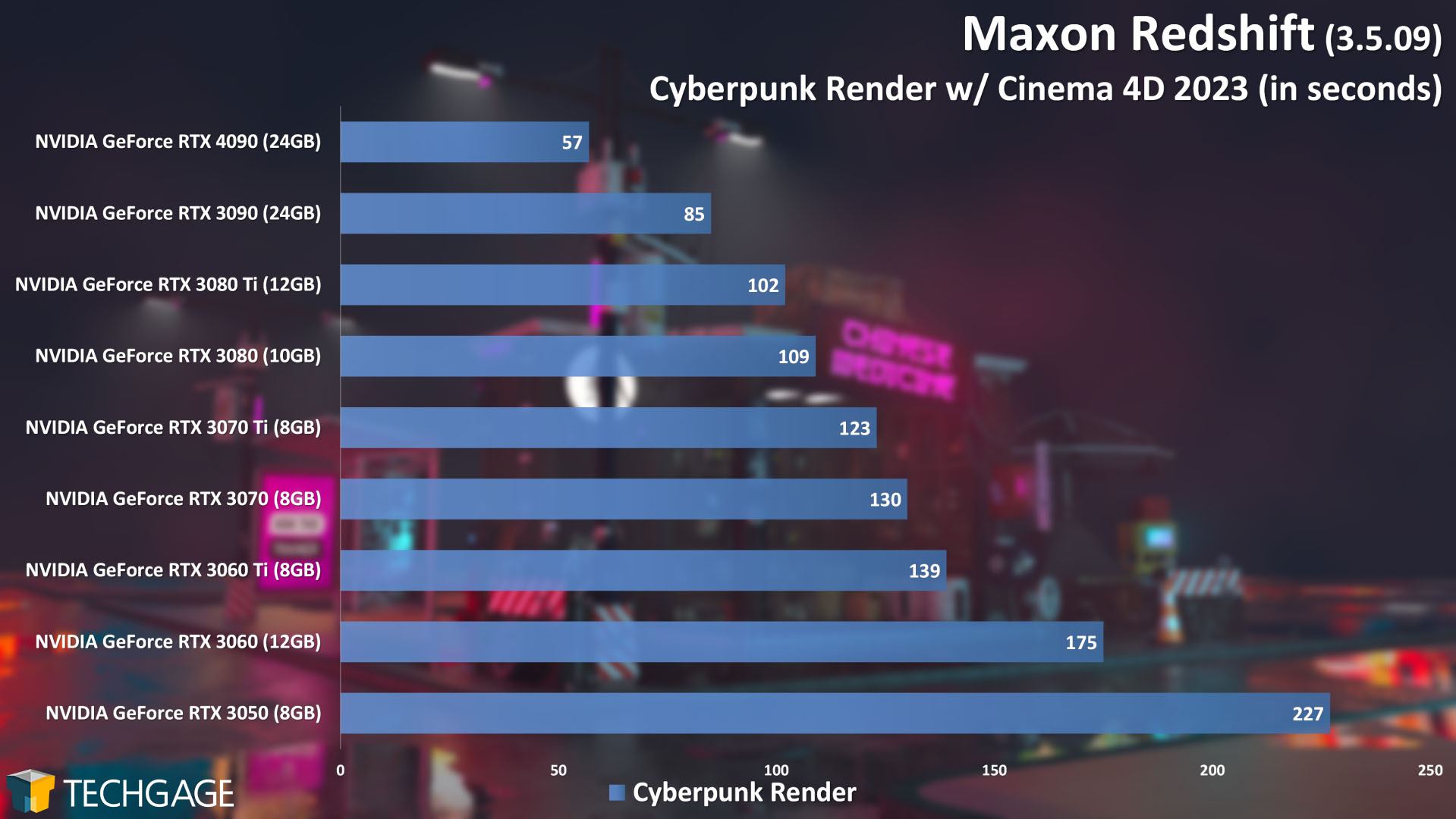

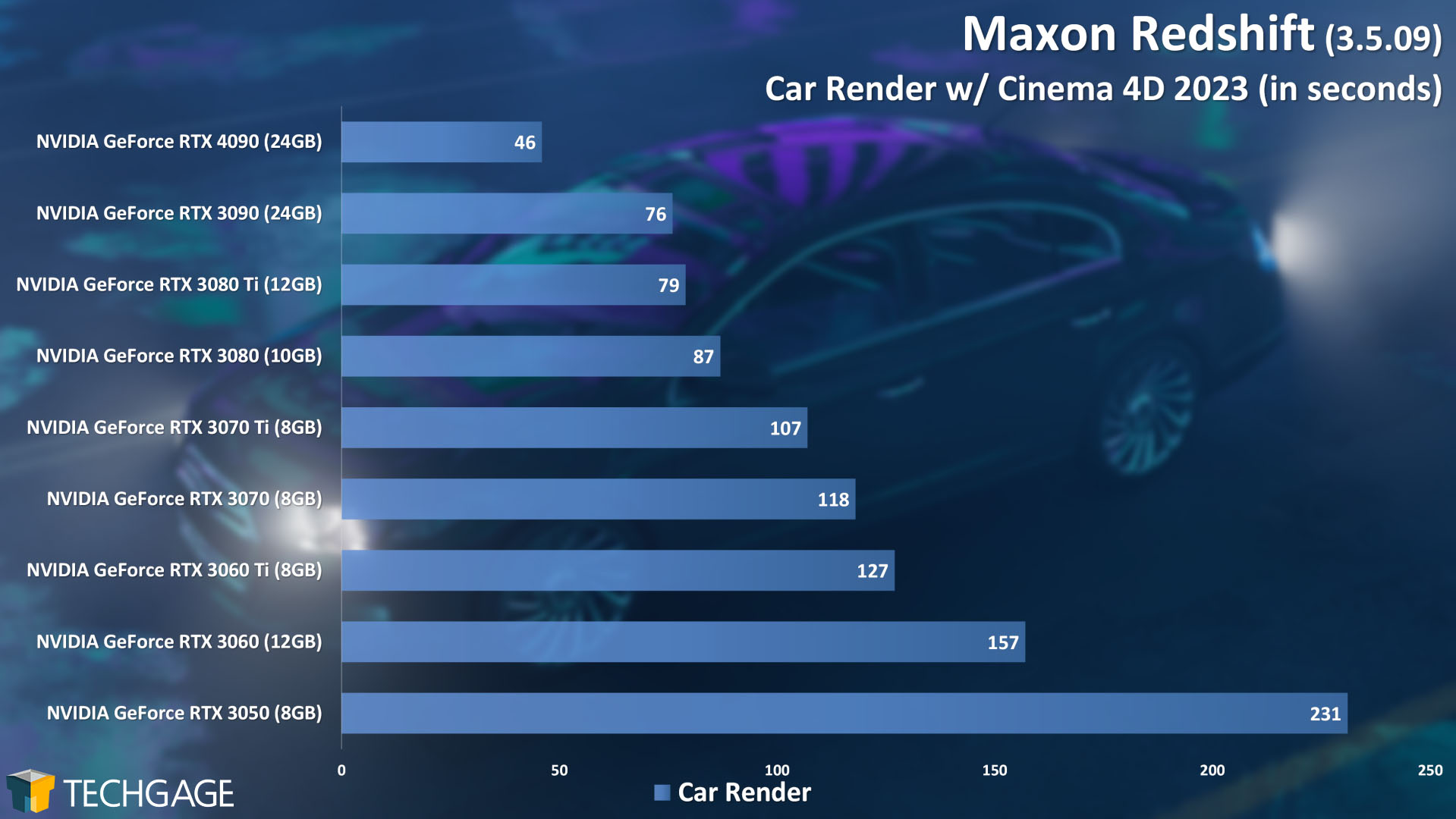

Our Redshift testing shows that the gains with RTX 4090 might not be on the same level with some projects as others; the more complex Cyberpunk scene saw relatively modest (in comparison to the rest) gains between RTX 4090 and 3090, but the simpler Car scene saw a much more impressive leap ahead.

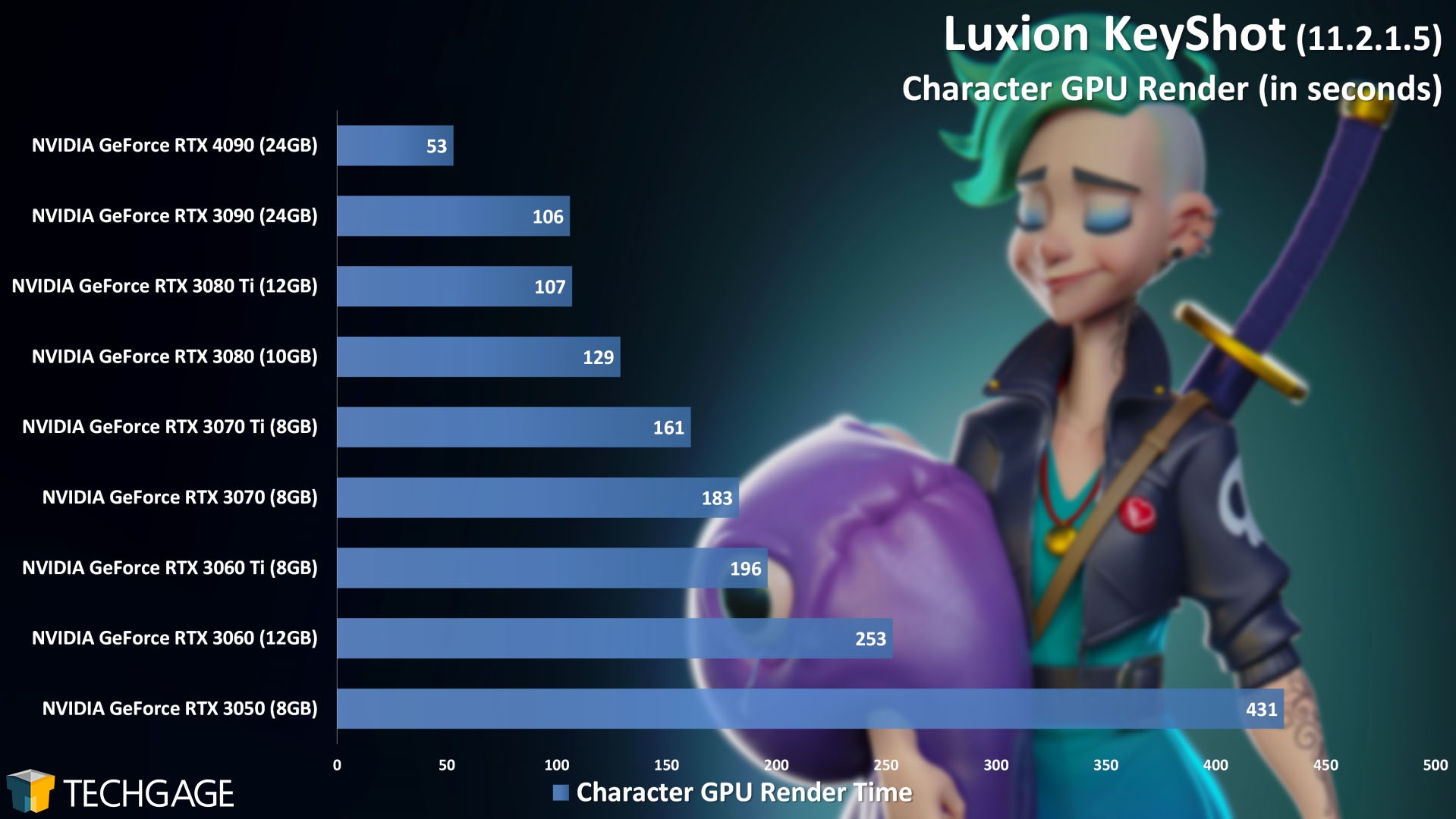

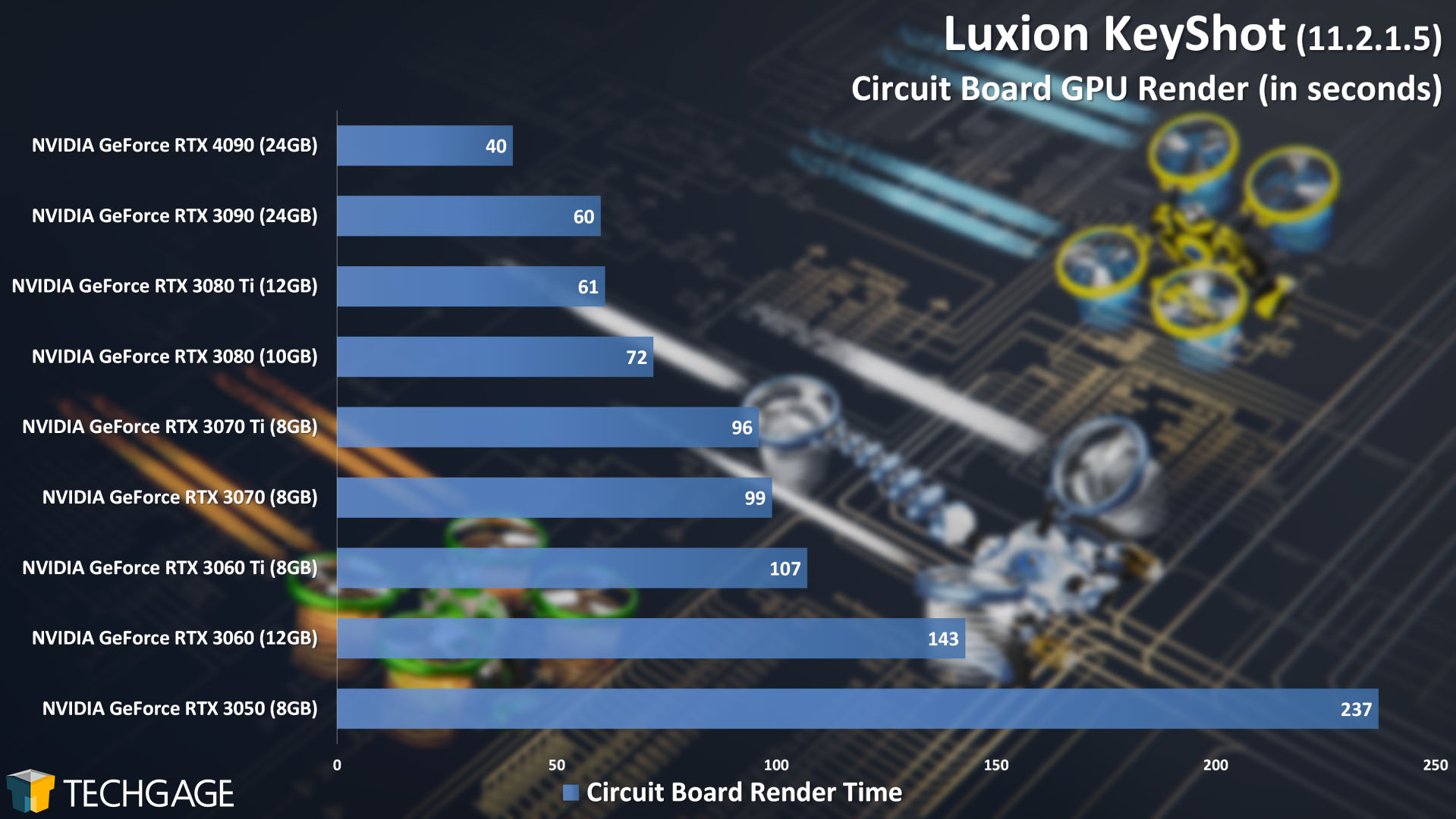

It’s been some time since we’ve been able to give KeyShot a fresh test, and as far as we can remember, this is the first such test since version 11 came out. As we saw with Redshift above, different projects will vary in the level of performance gain between the 4090 and 3090. With the Character scene, a literal halving of render time is seen, while the Circuit Board render drops to two-thirds of the total render time.
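Because this article quotes results both as fractions of render time and as percentage gains, it can help to convert between the two. The sketch below does that for a few of the figures mentioned so far; both function names are hypothetical, and the inputs are the rounded values from the text rather than raw benchmark data.

```python
def speedup_from_time_fraction(time_fraction: float) -> float:
    """Speedup implied when the new render takes `time_fraction` of the old time."""
    if time_fraction <= 0:
        raise ValueError("time fraction must be positive")
    return 1 / time_fraction


def time_fraction_from_gain(gain_percent: float) -> float:
    """Share of the old render time left after a `gain_percent` performance gain."""
    return 1 / (1 + gain_percent / 100)


# Rounded figures quoted in this article.
print(f"{speedup_from_time_fraction(0.5):.1f}x")    # KeyShot Character (half the time)
print(f"{speedup_from_time_fraction(2 / 3):.1f}x")  # KeyShot Circuit Board (two-thirds)
print(f"{speedup_from_time_fraction(0.10):.1f}x")   # V-Ray vs. Pascal (about 10%)
print(f"{time_fraction_from_gain(70):.0%}")         # Octane (about 70% faster)
```

Put another way, dropping to two-thirds of the render time is a 1.5x speedup, and a 70% performance advantage leaves roughly 59% of the original render time.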

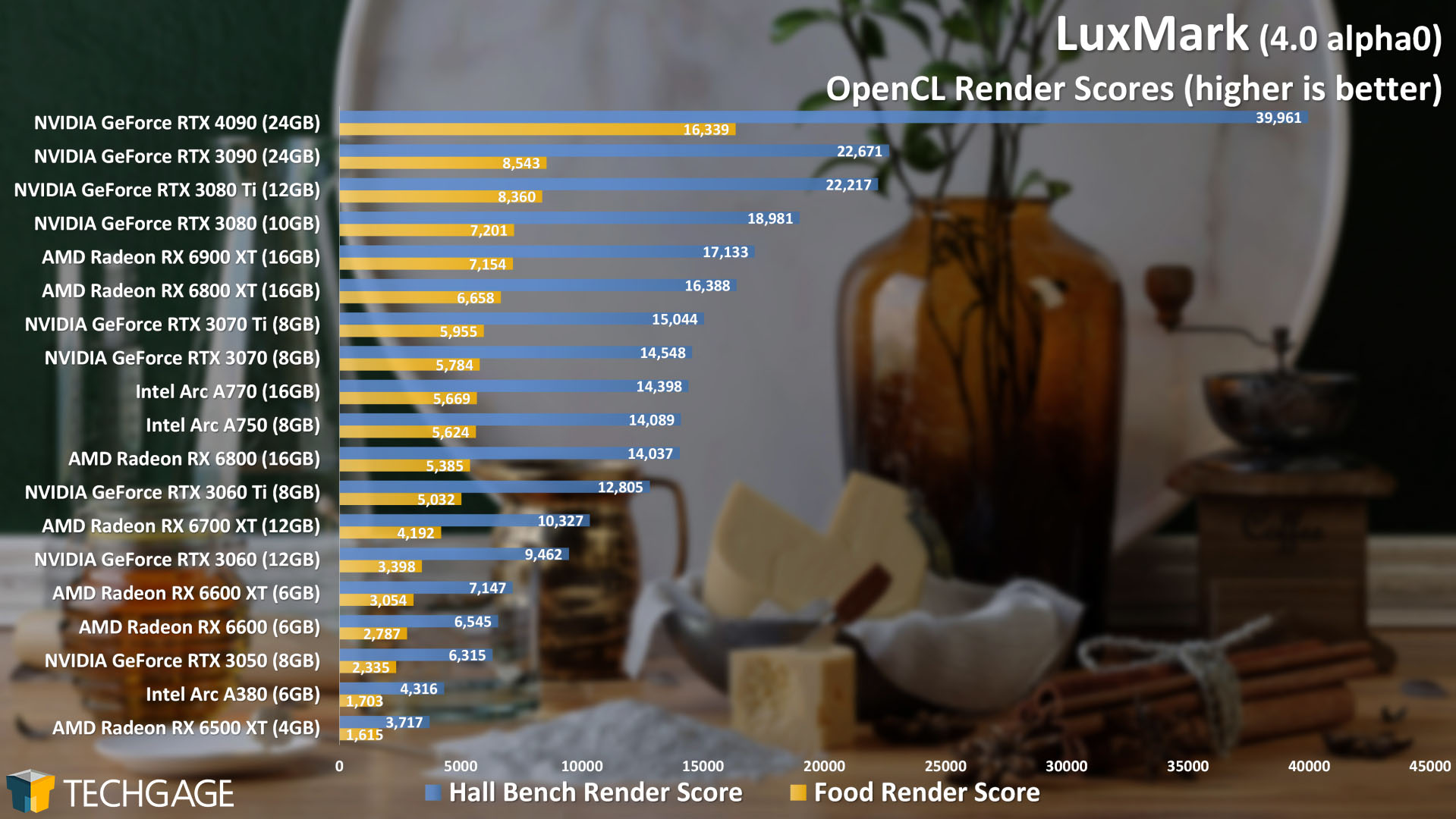

To wrap up this performance look, we turn to LuxCoreRender, via the LuxMark benchmark:

It seems obvious at this point that Ada Lovelace is going to offer a huge leap in performance over Ampere, no matter the renderer. We just tested seven different ones, and all of them showed tremendous leaps in performance, gen-over-gen. We had also hoped to include Radeon ProRender here, but because the latest version doesn’t support Blender 3.3, we shelved it for now.

Final Thoughts

As alluded to in the intro, NVIDIA made some huge promises when it announced its Ada Lovelace GeForce generation, so we went into benchmarking holding our breath, because we really wanted the hardware to live up to them. As the myriad results above highlight, we feel safe in saying that it has.

Admittedly, we felt the same when we reviewed the first Ampere GeForces. The Turing generation was NVIDIA’s first that brought accelerated ray tracing (and denoising) into the mix, and when Ampere launched, we didn’t really expect to see the level of gain in rendering that we did. We also didn’t expect to see Ada Lovelace pull the same thing off, only because a literal doubling of GPU performance isn’t exactly what we’re used to seeing.

What’s really impressive is that in some cases, the RTX 4090 performed even faster than 2x the RTX 3090. The Winter Apartment V-Ray project in particular revealed that.

So, it’s clear that Ada Lovelace is a beast of a rendering architecture, and those who adopt one of the new GeForces will enjoy huge upticks in performance over the previous generation. That said, we’ve only tested the RTX 4090 so far, so it’s hard to suggest right now that the two 4080 options will leap ahead of their respective predecessors just the same – but we hope to find out sooner rather than later.

Another thing that’s clear is that the Ada Lovelace generation is more expensive than the last. The RTX 4090 itself carries a $100 price premium over the RTX 3090, which to be honest, doesn’t even seem that bad, given the leaps in rendering performance. We’re still talking about a GPU that packs 24GB of memory in, and it effectively halves the rendering times that the RTX 3090 can muster. In that particular match-up, the price increase doesn’t sting too much.
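As a back-of-the-envelope check on that value argument, the sketch below compares rendering performance per dollar. It assumes a flat 2.0x speedup for the RTX 4090 over the RTX 3090, which is our rounded summary rather than a measured average, and `perf_per_dollar_ratio` is a hypothetical helper name.

```python
def perf_per_dollar_ratio(speedup: float, new_price: float, old_price: float) -> float:
    """Return how much more performance per dollar the new card offers."""
    if new_price <= 0 or old_price <= 0:
        raise ValueError("prices must be positive")
    return speedup / (new_price / old_price)


RTX_4090_SRP = 1599
RTX_3090_SRP = 1499
ASSUMED_SPEEDUP = 2.0  # assumption: render times effectively halved

price_increase = (RTX_4090_SRP - RTX_3090_SRP) / RTX_3090_SRP
ratio = perf_per_dollar_ratio(ASSUMED_SPEEDUP, RTX_4090_SRP, RTX_3090_SRP)

print(f"Price increase: {price_increase:.1%}")
print(f"Rendering performance per dollar: {ratio:.2f}x")
```

With those inputs, a price increase of under 7% buys close to 1.9x the rendering performance per dollar at launch SRPs.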

As for the 4080 and 3080-class cards, however, the verdict remains out on how their price premiums will convert to an uptick in performance. The RTX 4080 16GB follows in the footsteps of the $1,199 RTX 3080 Ti, while the RTX 4080 12GB carries a $200 price premium over the RTX 3080 10GB.

All told – if you care about rendering performance to the point that you always lock your eyes on a top-end target, then the RTX 4090 is going to prove to be an absolute screamer. You can effectively look at it as being equivalent to having two RTX 3090 cards in the same rig. That’s a lot of horsepower.
