NVIDIA SC22: H100 Quantum-2 Networks, HPC, Omniverse, and Digital Twins With Lockheed Martin

The scaling of AI and high-performance computing is fascinating. At SC22, NVIDIA explored more uses for its Omniverse and Digital Twin approach to the metaverse. It also showcased HPC deployments of its H100 GPUs, edge computing, and the development of true-to-life models of the planet with Lockheed Martin.

NVIDIA has a very large and diverse portfolio these days when it comes to high-performance computing. We’ve already seen the announcements of the GeForce RTX 40 series GPUs on the gaming side of things, but in the enterprise market, things operate on a completely different scale.

We got a glimpse of what was coming at NVIDIA’s annual GTC event, with big news about the long-awaited next generation of GPUs in the form of Ada Lovelace, but it was also an opportunity to catch everyone up on the enterprise and content creation side of NVIDIA’s technology arsenal. From Hopper to NeMo, LLMs, and IGX, there was a lot to cover.

At SC22, NVIDIA presented its High Performance Computing (HPC) platform as a solution to a full-stack problem: the hardware, the software, the platform, and the applications all need to be in sync with each other to maximise performance. On the hardware end, there is the H100 GPU and the two Grace platforms. At the software level, there’s the familiar RTX, CUDA, and PhysX, but also DOCA and Fleet Command. The platform layer covers NVIDIA’s HPC platforms such as DGX servers and racks, its AI frameworks, and, of course, the ever-evolving Omniverse. At the application layer sits a whole host of frameworks and AI-enabled suites such as Holoscan, Modulus, cuQuantum, and Large Language Models (LLMs).

H100 And HPC Platforms

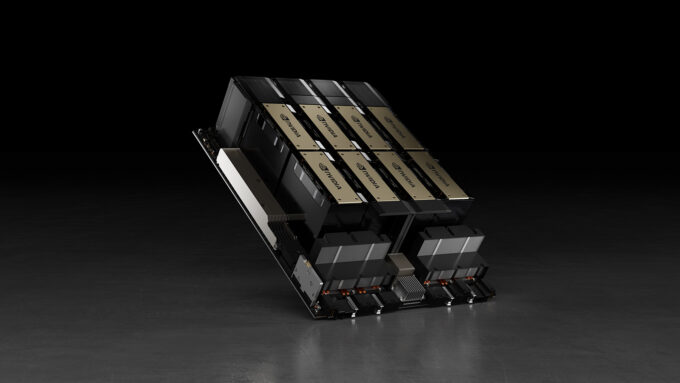

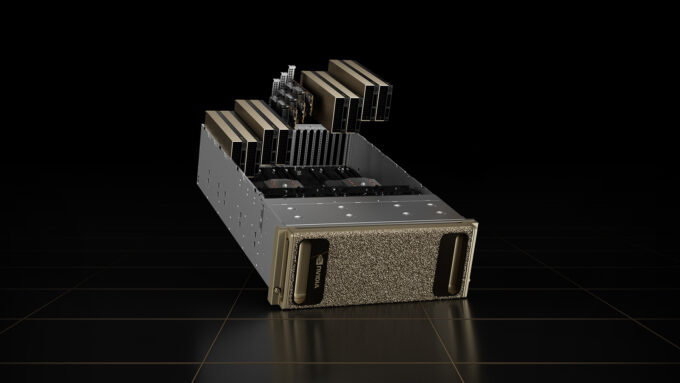

We got a glimpse of the next-gen Tensor Core compute cards at GTC: the Hopper series GPUs for enterprise, compute, and AI, with the H100 as the flagship of the generation. Several different platforms were announced for the H100, ranging from a single PCIe-based card and a DGX desktop supercomputer up to HGX rack-mounted systems that use NVIDIA’s SXM form-factor cards.

These compute cards have been available on LaunchPad for a while: a try-before-you-buy way of testing NVIDIA’s enterprise hardware in a cloud infrastructure setting, built around the same technologies as GeForce NOW.

The difference in processing performance between the H100 and the A100, which launched in 2020, is significant. The A100 was by no means a slouch, especially when it came to AI performance compared not only to CPUs but also to other GPUs at the time. The H100 is another leap in performance that warrants its own deep dive, but the gist is that, according to NVIDIA, it offers 3.5x better energy efficiency and requires 5x fewer processing nodes to match an equivalent A100 datacenter.
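
To put those two headline multipliers in concrete terms, here is a back-of-the-envelope sketch. It naively treats NVIDIA’s “5x fewer nodes” and “3.5x better energy efficiency” figures as simple divisors applied to a hypothetical A100 deployment; the 100-node, 700 kWh cluster is invented for illustration, and real workloads will not scale this neatly.

```python
import math

# NVIDIA's headline H100-vs-A100 multipliers as quoted in the article.
NODE_REDUCTION = 5.0    # "5x fewer processing nodes"
EFFICIENCY_GAIN = 3.5   # "3.5x better energy efficiency"


def h100_equivalent(a100_nodes: int, a100_energy_kwh: float) -> tuple[int, float]:
    """Naively scale a hypothetical A100 deployment by the headline multipliers.

    Returns (H100 node count, energy in kWh for the same amount of work).
    Node counts are rounded up because a cluster cannot hold a fraction of a server.
    """
    nodes = math.ceil(a100_nodes / NODE_REDUCTION)
    # Better energy efficiency means more work per kWh, so the same job needs less energy.
    energy_kwh = a100_energy_kwh / EFFICIENCY_GAIN
    return nodes, energy_kwh


# Hypothetical example: a 100-node A100 cluster that uses 700 kWh for a given job.
print(h100_equivalent(100, 700.0))  # (20, 200.0)
```

In other words, under these assumptions a job that kept 100 A100 nodes busy would fit on 20 H100 nodes and draw a little under a third of the energy.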

There were additional H100 announcements centered around edge-AI with the CNX converged accelerators – PCIe-based GPUs with integrated 400Gbps network connections, and distributed hyperscale all-in-one integrated CPU/GPU/Network ‘Superchips’, called NVIDIA Grace.

At SC22, Microsoft announced its support for the new Quantum-2 network interfaces, 400G InfiniBand interconnects, and H100 Tensor Core GPUs, which are now being deployed across its Azure cloud infrastructure. Other platform providers such as ASUS, Dell, HP, GIGABYTE, Lenovo, and Supermicro also showcased their respective solutions for the H100 in its different varieties, including the PCIe cards, 4-way and 8-way H100 solutions, and air or liquid cooling setups.

A New Level Of AI

At GTC 2022, NVIDIA gave special attention to Large Language Models (LLMs): extremely complex neural networks with billions of parameters, trained on hundreds of gigabytes of text and/or artwork. Examples include BERT and GPT-3, both built on the Transformer architecture, and, more recently, the explosion of AI-generated artwork from DALL-E 2, which creates images from simple words and descriptions. This is not the chatbot AI we have come to deride over the years, but artistic expression in art and in music, generated from user-submitted prompts. That said, how this AI artwork is generated is becoming a hotly debated topic, mostly centered on the datasets the AI draws its examples from.

LLMs are being used extensively in the chemistry and biomedical fields, with a lot of attention on protein folding and synthesis. NVIDIA announced its NeMo service of pre-trained LLMs and, by extension, its BioNeMo service, a pre-trained LLM that predicts molecule, protein, and DNA behavior. Just select your model, input your molecule or protein sequence, and let the AI run inference to predict properties such as stability or solubility.

NVIDIA also announced its IGX platform. These IGX systems carry an Orin module from NVIDIA’s automotive division, ConnectX-7 400 GbE networking, and an RTX Ampere GPU, along with safety and security integrations. For now, IGX’s primary focus is automation in manufacturing, logistics, and medical applications. Siemens is one of the first companies to use IGX in conjunction with NVIDIA’s Omniverse platform to build digital twins of factories, allowing developers and engineers to experiment in a digitally identical factory to train AI and automation, and then deploy changes to robots on the factory floor to assist workers.

IGX will also be used for real-time medical imaging (real-time as in human perception, rather than, say, a safety-critical real-time system) and robotics assistance. NVIDIA Clara Holoscan sits on top of IGX for AI-assisted imaging, augmented/extended reality, and robotic integration for hyperspectral endoscopy, surgical assistance, and surgeon telepresence.

What is Omniverse?

This is a hard question to answer because its meaning keeps changing depending on the context. A few years ago, the answer was rather straightforward: it was a creator-focused aggregation tool for bringing together 3D assets, textures, models, and such from various applications and vendors. It was meant to unify the 3D workspace so that changes in one suite of tools were reflected in other applications. This sort of workflow integration was already happening in the background, such as Adobe tool integration into Autodesk. Omniverse just kicked it up a notch with its own SDK to spread that integration much further, into game engines like Unreal and Unity, as well as other design suites.

What’s changed over the years is the scale of the Omniverse project. It’s not just about integrating textures and 3D models anymore; it’s about entire frameworks and statistical models, multi-spatial data such as animations, and time-dependent data such as airflow and thermal analysis. Omniverse went further with AI modelling layers that use LLMs to predict, change, and work with the other assets. This big change was made possible largely by the Universal Scene Description (USD) format, originally developed by Pixar. USD is best described as HTML for 3D design: it pulls together resources from many different formats into one scene. Instead of a webpage, you end up with a fully interactive 3D environment, but with extra information such as wind velocity, predictive engines, and other meta information.
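
The core idea behind USD pulling many sources into one scene is layering: each tool authors its own layer of “opinions” about objects in the scene, and when layers disagree, the stronger layer wins. The toy sketch below illustrates only that “strongest opinion wins” rule using plain Python dictionaries. It is not the real USD (`pxr`) API, the layer names and attributes are hypothetical, and real USD composition has many more rules (references, variants, payloads, and so on).

```python
from typing import Any

# Toy stand-in for a USD layer: prim path -> {attribute name: value}.
# NOT the real pxr/USD API; it only illustrates "strongest opinion wins".
Layer = dict[str, dict[str, Any]]


def compose(layers: list[Layer]) -> Layer:
    """Flatten a layer stack, ordered strongest-first, into a single scene."""
    stage: Layer = {}
    # Apply the weakest layer first so stronger layers overwrite its opinions.
    for layer in reversed(layers):
        for prim_path, attrs in layer.items():
            stage.setdefault(prim_path, {}).update(attrs)
    return stage


# Hypothetical layers authored by three different tools.
cad_layer: Layer = {
    "/Factory/Robot": {"points": [(0, 0, 0), (1, 0, 0), (0, 1, 0)], "color": "grey"},
}
texturing_layer: Layer = {
    "/Factory/Robot": {"color": "orange"},  # overrides the CAD tool's color
}
simulation_layer: Layer = {
    "/Factory": {"windVelocity": (1.5, 0.0, 0.0)},  # extra meta information
}

stage = compose([simulation_layer, texturing_layer, cad_layer])
print(stage["/Factory/Robot"]["color"])   # orange
print(stage["/Factory"]["windVelocity"])  # (1.5, 0.0, 0.0)
```

Because each tool writes to its own layer rather than editing a shared file, a change made in the texturing tool shows up in the composed scene without touching the CAD data, which is the kind of cross-application sync Omniverse builds on.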

To confuse matters further, NVIDIA now has different types of Omniverse, including Omniverse Cloud, Omniverse Nucleus, Omniverse Farm, Omniverse Replicator, and Omniverse Enterprise, and you can throw in Isaac Sim and DRIVE Sim too, since they are built on one form of Omniverse or another. There is also OVX, NVIDIA’s line of servers built specifically to run Omniverse.

Omniverse basically encompasses the entire software integration stack and brings together data from every source imaginable into one giant ‘blob’ that can be used to simulate entire ecosystems, Digital Twins of the real world, or virtual environments to train AI and robots working in the real world. It’s already being used for autonomous vehicle training and medical imaging.

Digital Twins and Omniverse Nucleus

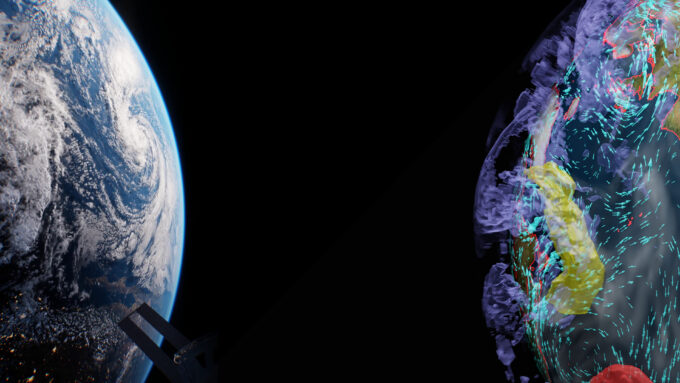

Digital Twins is a phrase you should get used to seeing, as it ties into the whole concept of the metaverse and augmented reality. In essence, it means recreating an environment digitally, not just as photorealistic 3D CAD, but also with meta information such as heat maps, traffic management, wind vectors, statistical models, and so on. Lowe’s announced its Digital Twin project back at GTC as a way to visualize stock, optimize store layouts, and plan product placement.

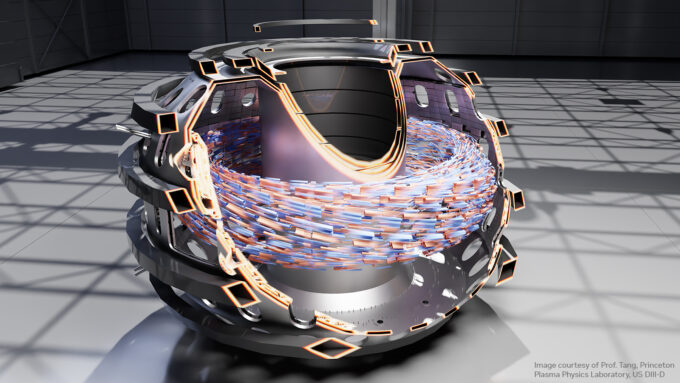

NVIDIA’s Omniverse Nucleus is now moving into modeling weather systems for the US National Oceanic and Atmospheric Administration (NOAA), with the help of Lockheed Martin and its OpenRosetta3D software. This will be a first-of-its-kind project for NOAA to create the Earth Observation Digital Twin (EODT). EODT will use Omniverse and OpenRosetta3D to bring together a complete visual and statistical map of the Earth, built from terabytes of geophysics data, orthographic maps, atmospheric data, and space-based data. Its primary goal will be to visualize temperature and moisture profiles, sea surface temperatures, sea ice concentrations, and solar wind data.

EODT’s first big demonstration will be sea surface temperature for September next year, which will likely help meteorologists make decisions around hurricane activity, and NASA with space launch windows. Eventually, it will lead to drought and storm predictions, too. This will involve using Omniverse to bridge USD datasets into Lockheed’s Agatha 3D viewer, which is built on the Unity engine, allowing users to see the sensor data over an interactive 3D Earth.

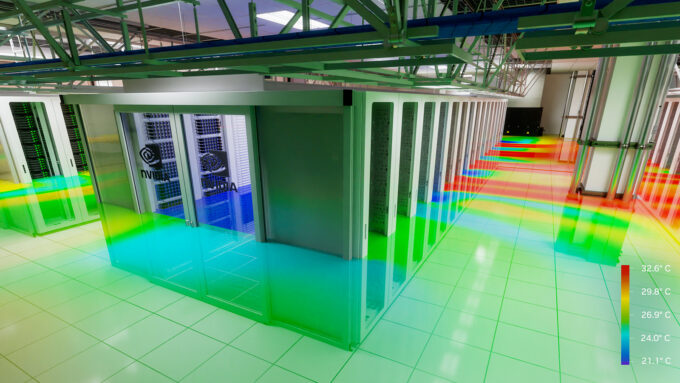

At SC22, there was a showcase of NVIDIA Air, a data center simulator that uses Omniverse to create a Digital Twin that monitors and manages data flow and the entire network stack, and predicts heat and airflow using computational fluid dynamics with Cadence 6SigmaDCX. Omniverse was used to help design one of NVIDIA’s latest AI supercomputers, bringing together CAD data from Autodesk Revit, PTC Creo, and Trimble SketchUp to lay out the entire data center.

PATCH MANAGER was then used to plan cable management for the network topology in a live model and to see dependencies. Once the data center was complete, real-time sensor data, such as power and temperature, could be fed into the digital twin built within Omniverse to enable real-time monitoring of the racks. This data could then be used to predict future upgrades and to validate changes in the twin before they are deployed to the physical data center.
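
As a rough illustration of what “feeding sensor data into the twin” means at the level of a single rack, here is a minimal sketch. The `RackTwin` class, its field names, and the power and temperature limits are all hypothetical and have nothing to do with NVIDIA Air’s or Omniverse’s actual interfaces; the point is only the pattern: ingest live readings, compare them against the rack’s limits, and derive simple planning figures such as spare power headroom.

```python
from dataclasses import dataclass, field


@dataclass
class RackTwin:
    """Toy digital-twin record for one rack; names and limits are hypothetical."""
    rack_id: str
    power_budget_kw: float
    max_inlet_temp_c: float
    # Live sensor history as (power in kW, inlet temperature in °C) samples.
    readings: list[tuple[float, float]] = field(default_factory=list)

    def ingest(self, power_kw: float, inlet_temp_c: float) -> None:
        """Feed one real-time sensor sample into the twin."""
        self.readings.append((power_kw, inlet_temp_c))

    def alerts(self) -> list[str]:
        """Check the latest sample against the rack's limits."""
        if not self.readings:
            return []
        power_kw, temp_c = self.readings[-1]
        found = []
        if power_kw > self.power_budget_kw:
            found.append(f"{self.rack_id}: power {power_kw} kW exceeds {self.power_budget_kw} kW")
        if temp_c > self.max_inlet_temp_c:
            found.append(f"{self.rack_id}: inlet {temp_c} °C exceeds {self.max_inlet_temp_c} °C")
        return found

    def headroom_kw(self) -> float:
        """Spare power versus average draw, a crude input for upgrade planning."""
        if not self.readings:
            return self.power_budget_kw
        avg_power = sum(p for p, _ in self.readings) / len(self.readings)
        return self.power_budget_kw - avg_power


rack = RackTwin("rack-A01", power_budget_kw=40.0, max_inlet_temp_c=27.0)
rack.ingest(30.0, 24.0)
rack.ingest(34.0, 26.0)
print(rack.alerts())       # []
print(rack.headroom_kw())  # 8.0
rack.ingest(42.0, 29.0)
print(len(rack.alerts()))  # 2
```

A real digital twin would layer CFD predictions and network topology on top of this, but the monitoring loop itself follows the same compare-against-limits pattern.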

There’s a lot more going on at SC22 that we haven’t covered, plus some other upcoming announcements on the data center and supercomputer front. There’s a lot to take in and mull over, especially as Omniverse extends into the metaverse, robotics, and construction, since this could all lead to automated space exploration and other fanciful ideas. AI and these huge leaps in computation are getting harder to keep up with, but it’s certainly fascinating to watch unfold.
