NVIDIA SIGGRAPH 2017: AI Assisted Rendering, Animation, Robots, And eGPUs

SIGGRAPH 2017 has begun, and NVIDIA is quick to show off its latest research projects. These range from AI-assisted rendering and denoising to animated faces built from neural networks, robots that teach themselves to move, and external workstation GPUs. There is also a great deal coming in the AR and VR space, with new HMD designs and haptic feedback.

Research is key when it comes to developing new technologies, as well as understanding what’s required to advance further. It’s a reciprocal process: new technologies create new products, which in turn provide more research capabilities to create new technologies. NVIDIA is undergoing an explosive development cycle right now, all centered around AI.

While NVIDIA’s core business has largely centered on the creative industries, with games, video production and rendering, it’s those creative industries that have driven the creation of some of the most powerful hardware in the world. This hardware is now being used outside the creative industries, in fields such as financial analysis, physics simulation, and now, artificial intelligence. That AI is now being fed back into the creative industries to speed up rendering, enhance games with realistic facial animation, and teach robots how to interact with the real world.

The amount of research going on in and around NVIDIA is staggering, and SIGGRAPH provides a glimpse into it. The creative industry has seen some impressive developments over the last couple of years. Physically based rendering using real-world material profiles has produced some of the most realistic 3D renders possible, but it’s still a slow process.

Earlier this year at GTC, we saw some interesting technologies being tested with AI-assisted denoising, which fills in the blanks of an image. It’s easy to see why this research is useful for iterative rendering systems: during rendering, much of the image remains unknown until multiple passes have completed, so being able to ‘guess’ the next stage can significantly speed up the process. That is precisely what NVIDIA is doing.
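To make the idea concrete, here is a toy Python sketch, assuming a flat gray 1-D "image" and a made-up uniform noise model (neither comes from NVIDIA). Each pixel is the average of a few noisy samples, as in an early pass of an iterative renderer; more passes reduce the noise only slowly, while a denoiser estimates the clean image from a low-sample render. NVIDIA’s denoiser is a trained neural network; the hypothetical `box_denoise` helper below swaps in a simple neighborhood average purely for illustration.

```python
import random


def noisy_render(truth, samples_per_pixel, rng):
    """Average several noisy samples per pixel, like an early pass of an iterative renderer.

    The uniform noise model is a hypothetical stand-in for random light paths.
    """
    image = []
    for value in truth:
        samples = [value + rng.uniform(-0.5, 0.5) for _ in range(samples_per_pixel)]
        image.append(sum(samples) / samples_per_pixel)
    return image


def box_denoise(image, radius=2):
    """Hypothetical stand-in denoiser: replace each pixel by the mean of its neighbors.

    A trained network would instead predict the converged pixel from the noisy input.
    """
    out = []
    for i in range(len(image)):
        lo, hi = max(0, i - radius), min(len(image), i + radius + 1)
        window = image[lo:hi]
        out.append(sum(window) / len(window))
    return out


def rmse(a, b):
    """Root-mean-square error between two images of equal length."""
    return (sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)) ** 0.5


truth = [0.5] * 200  # a flat gray row of 200 pixels
rng = random.Random(42)  # fixed seed so runs are repeatable
few_passes = noisy_render(truth, 4, rng)
many_passes = noisy_render(truth, 64, rng)
denoised = box_denoise(few_passes)

print(f"4 samples/pixel:           {rmse(few_passes, truth):.3f}")
print(f"64 samples/pixel:          {rmse(many_passes, truth):.3f}")
print(f"4 samples/pixel + denoise: {rmse(denoised, truth):.3f}")
```

Going from 4 to 64 samples per pixel costs 16 times the work but only cuts the error by about a factor of four, because Monte Carlo noise falls with the square root of the sample count. That slow convergence is why guessing the converged image is so attractive. On a real image, a box filter would also blur edges and texture, which is exactly the problem a learned denoiser is meant to avoid.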

OptiX 5.0 running on NVIDIA’s DGX Stations could transform the creative industries by placing a server farm on your desk, providing AI-assisted rendering and motion blur effects for animation. Using the AI engine, artists can visualize environments and characters extremely quickly, at near-final render quality, in real time. No more change and render, change and render: you will be able to see changes applied as you make them.

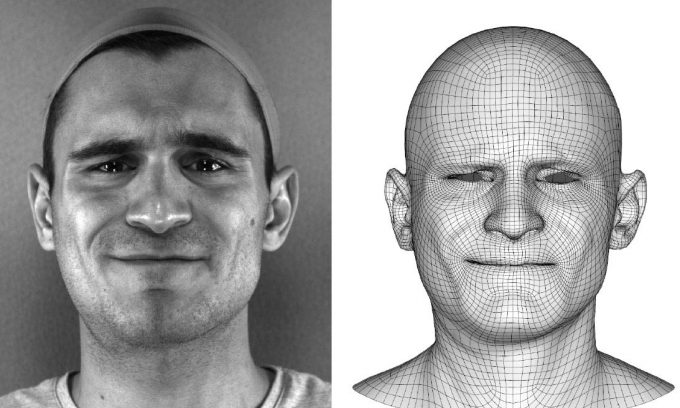

This AI isn’t just being used for rendering, but also for animation. In video games, and even some 3D films, bodies animate very well, fluidly even, thanks to motion capture, but facial animation is the bane of the industry. Numerous technologies have been used, from video overlay and motion capture to library-assisted pre-animated sequences, but the results remain rather static. Live video capture of an actor, which is then used to create a virtual double, usually produces the most natural result, but it’s not easy to change once captured.

Remedy Entertainment, the studio behind games such as Quantum Break, Max Payne, and Alan Wake, approached NVIDIA with an idea to produce better animations with a lot less effort. Using Remedy’s extensive library of facial animations, NVIDIA created a neural network to turn the actor’s videos into facial animation.

As you can see from the video, the animations are not only accurate, but go beyond being a straight copy. NVIDIA was able to take emotional presets generated from the faces and apply them to the generated animations. It was then able to take audio from voice actors alone and generate facial animations to match the speech. It’s not perfect, but it’s much faster than animating by hand, and of much better quality than the lip-sync libraries found in game engines.

While AI is able to make faces look less robotic, NVIDIA is also using AI to train robots as part of its Isaac Lab robot simulator. Again, this was something we saw at GTC, but this time there was a live demonstration in which participants could play a game of dominoes with a real robot trained through Isaac. Participants could even interact with Isaac in the virtual world using a VR headset, simulated through Project Holodeck.

One thing that was somewhat surprising amid all this AI research and development was the announcement of something not AI-related: an external workstation GPU dock. With laptops shrinking and mobility becoming ever more important, content creators are being asked to do more on the go or in the field, on laptops half the size of what they used to be. The idea behind an external GPU is not new, but only in the last year or so has the technology advanced enough, through the likes of Thunderbolt 3, to make such an option possible. Now NVIDIA has created a certified Quadro eGPU solution that can be used to accelerate workloads in Maya and Adobe Premiere Pro.

We should note that GPU docks are not new, and have been available from the likes of ASUS and Razer for some time. The only real change here is the certification, a guarantee that the hardware will work with both the workstation card and the software. eGPUs are not as powerful as a native desktop solution, but will more than likely be faster than the integrated graphics in most ultrabooks and small laptops.
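One reason an eGPU trails the same card installed in a desktop is the link between them. The back-of-the-envelope Python sketch below assumes the common arrangement in which a Thunderbolt 3 enclosure gives the card four lanes of PCIe 3.0, versus sixteen lanes in a desktop slot. The helper name is our own, and real-world slowdown depends heavily on how much data a given workload moves across the link.

```python
def pcie3_bandwidth_gb_per_s(lanes):
    """Usable PCIe 3.0 bandwidth in one direction, in GB/s.

    Each lane runs at 8 GT/s with 128b/130b encoding, so 128 of every 130 bits carry data.
    """
    transfers_per_second = 8e9
    payload_fraction = 128 / 130
    bits_per_second = lanes * transfers_per_second * payload_fraction
    return bits_per_second / 8 / 1e9  # bits -> bytes -> gigabytes


desktop_slot = pcie3_bandwidth_gb_per_s(16)
thunderbolt3_dock = pcie3_bandwidth_gb_per_s(4)  # assumes an x4 PCIe 3.0 link in the dock

print(f"Desktop x16 slot: {desktop_slot:.2f} GB/s")
print(f"Thunderbolt 3 x4: {thunderbolt3_dock:.2f} GB/s")
print(f"Ratio:            {desktop_slot / thunderbolt3_dock:.1f}x")
```

That works out to roughly 15.75 GB/s for the desktop slot versus about 3.94 GB/s for the dock, a quarter of the bandwidth. Once a scene or timeline sits in the card’s own memory, the GPU itself computes at its normal speed, so the penalty tends to show up most when large amounts of data stream back and forth.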

The last piece of news was more about demonstrations involving our good friends, virtual and augmented reality. These ranged from live stitching of eight 4K stereo cameras to create virtual environments, to new near-field optics for displaying virtual and augmented environments to users. There is also research into shape-shifting controllers for haptic feedback, letting users feel the virtual world in the real world.

There’s a lot going on, and there’s a good chance we’ll start seeing the dividends from this research soon enough – now if only we could get our own DGX station to test this stuff out!