Snapdragon Summit 2022 Day 2: Augmented Reality, Snapdragon For Windows, And Spatial Audio

Qualcomm’s focus for the first half of its latest Snapdragon Summit revolved around the new Snapdragon 8 Gen 2 platform, while the second half of the event (and our coverage) targets AR, Snapdragon Compute, advances in spatial audio, and lossless audio over Bluetooth.

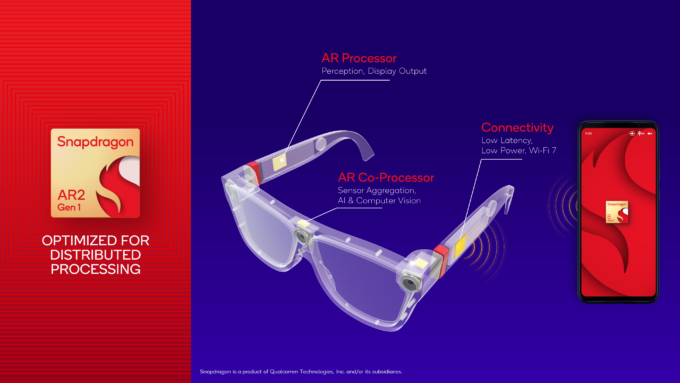

Snapdragon AR2 Platform

At its latest Snapdragon Summit, Qualcomm announced its first purpose-built augmented reality platform: Snapdragon AR2 Gen 1. VR is immersive, but Qualcomm believes the future of spatial computing will look more like an evolution of AR, because AR seamlessly blends the physical and the virtual. You could wear glasses all day long and still have additional information available and displayed privately.

One of the biggest challenges is integrating all the necessary features and functions into a package that is small enough to be comfortable to wear and doesn't look like a glass brick strapped to your face with Velcro. Power and battery life, keeping the processor cool, a wide enough field of view, ease of use, the display, comfort, weight, the physical layout, and the wiring of the components: fitting all of that into a small package is a big ask.

Qualcomm has released several XR platforms in the past, which served as foundation platforms and development kits for several successful VR headsets. This new AR-only platform builds on that success, but with a 'from the ground up' focus on AR. It will rely heavily on the AI technologies introduced with the Snapdragon 8 Gen 2 for better edge computing. It has also been optimized for distributed processing, spreading the load across several chips working in unison rather than a single monolithic chip.

This distributed approach is necessary for a number of reasons. A single monolithic chip concentrates everything in one area and has much higher thermal demands, although it does make the implementation simpler and cheaper. Those localized hotspots, however, are at odds with what AR needs to be more widely accepted: sleeker glasses.

The AR2 platform will feature three chips: an AR processor, a co-processor, and a connectivity chip. A lot of the heavy lifting will still need to be done by a tethered device, such as a phone or a laptop, so these will not be stand-alone devices; that is part of the trade-off in creating a much sleeker AR experience.

The AR processor will sit in one of the arms of the glasses and is responsible for feeding key information back to the tethered device. This includes 6DoF (six-degrees-of-freedom motion tracking), spatial information (contextual highlighting and tracking of objects), and the display engine.

The co-processor sits in the bridge of the glasses and handles sensor aggregation, AI, and computer vision. The connectivity chip uses the same FastConnect system as the Snapdragon 8 Gen 2 platform and is placed in the other arm of the glasses, along with a battery; it runs the low-latency Wi-Fi 7 connection that streams data to and from the host.

Things get more interesting in the technical deep dive. The AR processor will be built on a 4nm process and shares much of its architectural design philosophy with the mobile processors. It will have its own memory, Spectra ISP, CPU, Adreno display, sensing hub, Hexagon and Adreno processors, all just rearranged and scaled differently. The new features, though, are the reprojection engine and the visual analytics engine.

The visual analytics engine works in conjunction with the 6DoF tracking, marking key points of interest as a frame of reference so that tracking remains accurate. This is done in hardware rather than software to speed up motion tracking and reduce motion latency, the primary cause of motion sickness with these setups.

The reprojection engine does the heavy lifting of matching up virtual objects with the real world, using the tracking information. This is what keeps virtual objects static relative to the real world, so that toys and virtual displays stay anchored to a physical object even as you move your head around.
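To make "anchoring" a little more concrete, here is a minimal sketch of the underlying geometry, not Qualcomm's reprojection engine: a virtual object fixed in world coordinates is re-expressed in head-relative coordinates from the latest tracked head pose. It assumes a simplified pose of position plus yaw only; a real 6DoF pipeline would use full 3D rotation and also compensate for display latency. The names `HeadPose` and `world_to_head` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class HeadPose:
    """Simplified tracked head pose (assumption: position + yaw only, no pitch/roll)."""
    x: float    # metres, world right
    y: float    # metres, world up
    z: float    # metres, world forward
    yaw: float  # radians; positive = head turned to the right, seen from above


def world_to_head(point, pose):
    """Re-express a world-anchored point (x, y, z) in head-relative coordinates.

    Returns (right, up, forward) relative to the head. Re-running this every
    frame with the newest pose is what keeps the object fixed in the room
    while the head moves.
    """
    # Offset from the head to the object, in world coordinates.
    dx, dy, dz = point[0] - pose.x, point[1] - pose.y, point[2] - pose.z
    # The head's right and forward axes, expressed as world (x, z) components.
    right_axis = (math.cos(pose.yaw), -math.sin(pose.yaw))
    forward_axis = (math.sin(pose.yaw), math.cos(pose.yaw))
    # Project the offset onto the head's axes (dot products).
    right = dx * right_axis[0] + dz * right_axis[1]
    forward = dx * forward_axis[0] + dz * forward_axis[1]
    return (right, dy, forward)


# A virtual display anchored 2 m in front of where the user started, at eye height.
anchor = (0.0, 1.6, 2.0)
print(world_to_head(anchor, HeadPose(0.0, 1.6, 0.0, 0.0)))          # straight ahead
print(world_to_head(anchor, HeadPose(0.0, 1.6, 0.0, math.pi / 2)))  # head turned right 90 degrees
```

With the head turned 90 degrees to the right, the same world point comes out roughly 2 m to the left of the head, which is exactly the behavior that makes a virtual object appear to stay put in the room.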

The co-processor was designed to move some of the sensor tracking and camera connections away from the central processor, so that the wiring and thermal footprint aren't concentrated in a single bulky bridge or in thick arms on glasses that don't fold flat. The other advantage is offloading eye tracking and iris identification from the main CPU, reducing power usage by not having to wake up the main CPU for simple tasks.

This split between the AR processor (10mm x 12mm) and co-processor (4.2mm x 6.2mm) reduces PCB real estate by 40% and the number of wires that need to be routed by 45%, compared to a single monolithic chip and PCB, allowing for a much slimmer and more stylish pair of glasses.

The other big advantage of the AR2 platform is its power requirement. Drawing less than 1 watt (excluding the display's power requirement), AR2 uses 50% less power than the previous-generation XR2 platform.

From a performance perspective, that big focus on AI speeds up hand and object tracking by 2.5x compared to XR2. Faster tracking means less processing time, and thus lower power consumption, too.
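The link between speed and power can be made concrete with energy = power x time. Below is a minimal sketch assuming, hypothetically, that the tracking hardware draws the same power while active on both platforms and drops to negligible power once a tracking pass finishes. Only the 2.5x figure comes from Qualcomm; the power and time values are placeholders, not measurements.

```python
def energy_per_pass_mj(active_power_mw, active_time_ms):
    """Energy for one tracking pass in millijoules (mW x ms = microjoules, / 1000 = mJ)."""
    return active_power_mw * active_time_ms / 1000.0


SPEEDUP = 2.5                  # Qualcomm's claimed hand/object tracking speedup vs XR2
PLACEHOLDER_POWER_MW = 300.0   # hypothetical active power, assumed equal on both chips
PLACEHOLDER_TIME_MS = 5.0      # hypothetical XR2-class time per tracking pass

old = energy_per_pass_mj(PLACEHOLDER_POWER_MW, PLACEHOLDER_TIME_MS)
new = energy_per_pass_mj(PLACEHOLDER_POWER_MW, PLACEHOLDER_TIME_MS / SPEEDUP)
print(f"{old:.2f} mJ -> {new:.2f} mJ ({new / old:.0%} of the original)")
```

Whatever placeholder values are used, the energy per pass under these assumptions scales as 1 / 2.5, or 40% of the original; real-world savings also depend on how quickly the hardware can fall back into a low-power state.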

A large group of developers and OEMs have already signed up for AR2, including familiar names like Lenovo, LG, and OPPO, among others.

Snapdragon Compute

Qualcomm has been at the forefront of a generation of Arm-based laptops that run Microsoft Windows, and has been a primary partner for Microsoft Surface devices. These ultra-low-power laptops started a generation of 'always-on' devices, where you could have a laptop with 20 hours of run time for long-distance travel, or a whole month of standby time.

What made these devices different from previous Arm-based Windows attempts was the inclusion of x86 emulation built into Windows. While not very efficient, it let most 32-bit x86 applications work, albeit slowly. It was a stop-gap measure to encourage adoption of the platform, and from that perspective, it has done a good job.

The problem has been encouraging developers to rebuild their software natively for Arm on Windows, so that key applications get native performance. One of the key players in this regard has been Adobe, which has been rather slow to adopt Arm despite being a launch partner in 2019. It initially launched native versions of Photoshop and Lightroom, but its upkeep of them has since wavered, and even the native version of Photoshop runs less than optimally (to put it mildly). At Snapdragon Summit, Adobe committed to native Arm support for its software going forward, announcing Arm-native versions of Fresco and Acrobat coming in 2023.

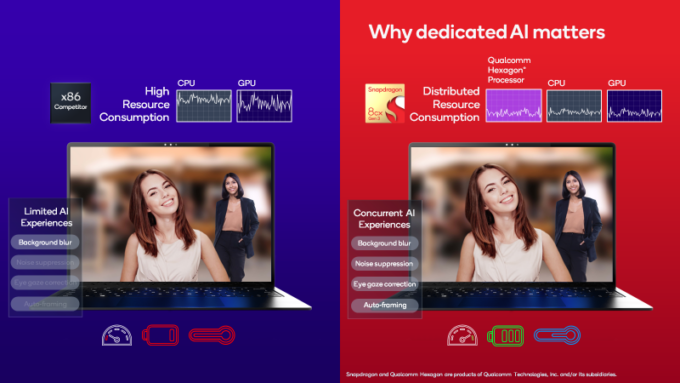

Qualcomm also wanted to talk about some of the changes coming to laptops, including on-device AI and its importance. We went through a phase of hardware acceleration on the GPU, taking advantage of its massively parallel nature, but in the last few years the focus has shifted toward AI acceleration with dedicated hardware such as Tensor cores.

A lot of AI in the last few years has been about gaming and video enhancement on huge GPUs. The bulk of the work was done beforehand, by training the AI with cloud compute in huge data centers, and not much AI was being used on the device itself. Voice-to-text systems have mostly used plain old algorithms; the only AI involved came after the text was sent to a data center, where a natural language engine took over to try to infer what you said and meant.

What has taken time is putting that training to use for things we do every day. One aspect that a lot of people have come to accept, and even demand, is working from home, and with it the dreaded conference call. Busy backgrounds and random noises from people's microphones are common; telling people to mute themselves when not participating in a call is advice that few seem to follow.

We've seen NVIDIA Broadcast in action and what it can do to clean up background noise on mics, and that's through the power of AI. Background blurring so that faces become the focus, and background replacement without a green screen, are possible thanks to AI. We now have auto-framing and cropping, and motion tracking that always keeps you in frame and in focus. There's brightness and color correction for dark rooms. And now, real-time multi-language translation with transcripts and subtitles, all thanks to AI. We could do all of this before AI, sure, but it was computationally expensive. AI has made things so much faster that we can now do it all at the same time, with a fraction of the power. This is what Qualcomm wants to bring to the next generation of Snapdragon for Windows.

Part of this endeavor is the announcement of Qualcomm's next CPU project, called Oryon (pronounced Orion)… and that it's coming in 2023. That's it; no details about what this CPU will do were shared.

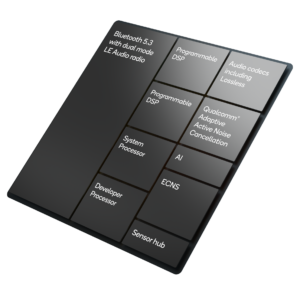

Snapdragon Sound and Spatial Audio

Another market segment Qualcomm is part of is audio technology, mostly centered on Bluetooth and wireless audio. At Snapdragon Summit 2022, Qualcomm showcased its advancements in spatial audio, which places and tracks sounds in 3D to create a soundscape around the listener.

This is an effect that several other companies have been experimenting with over the last couple of years, mostly in conjunction with VR headsets. As you move your head around, the sound comes through your left and right speakers at different levels, creating the impression that it is coming from a fixed point in space. In Qualcomm's research, 41% of the people it surveyed wanted this feature and were willing to pay more for it. The feature will arrive as part of the Snapdragon 8 Gen 2 launch and the Snapdragon Sound S5 and S3 Gen 2 platforms.
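The level-shifting effect described above can be sketched with simple constant-power panning driven by head yaw. This is a deliberately simplified illustration, not Qualcomm's implementation: real spatial audio typically relies on head-related transfer functions (HRTFs) with timing and spectral cues, not level differences alone. The function name `head_tracked_gains` is hypothetical.

```python
import math


def head_tracked_gains(source_azimuth, head_yaw):
    """Left/right gains for a sound source fixed in space, given the listener's head yaw.

    Angles are in radians, measured clockwise from the listener's starting
    forward direction (positive = to the right). Constant-power panning keeps
    left**2 + right**2 == 1 for every angle, so loudness stays steady.
    """
    # Where the source sits relative to the current facing direction.
    relative = source_azimuth - head_yaw
    # Wrap to [-pi, pi] so turning past 180 degrees behaves sensibly.
    relative = math.atan2(math.sin(relative), math.cos(relative))
    # Map -90..+90 degrees onto a pan position of 0..1. Sources behind the head
    # are clamped to the nearer side, since a level-only model can't express "behind".
    clamped = max(-math.pi / 2, min(math.pi / 2, relative))
    pan = (clamped + math.pi / 2) / math.pi
    left = math.cos(pan * math.pi / 2)
    right = math.sin(pan * math.pi / 2)
    return left, right


# A source straight ahead of the starting position, while the head turns right.
for yaw_deg in (0, 45, 90):
    left, right = head_tracked_gains(0.0, math.radians(yaw_deg))
    print(f"head turned {yaw_deg:>2} deg right: L={left:.2f} R={right:.2f}")
```

As the head turns right, the fixed source shifts toward the left channel, which is what creates the impression that the sound stays put in the room. The low latency discussed next matters because these gains must be recomputed from fresh head-tracking data continuously.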

This spatial tracking also needs to be low-latency, and doing that over wireless, especially over Bluetooth, can be very challenging. Qualcomm has brought the latency of its sound platform down to 48ms from last year's 89ms, which still seems quite high compared to the roughly 8ms or less people expect from displays running at 120 FPS and above. Fortunately, our ears are generally less sensitive to delays than our eyes, and audio latency typically doesn't make people nauseous. However, high audio latency can be very distracting, especially in films and games, so any reduction is greatly appreciated.
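The latency comparison is simple arithmetic. This short sketch uses only the figures quoted in the paragraph above (89ms and 48ms for audio, 120 FPS for displays) to show the relative improvement and how the new audio latency compares to a single 120 FPS frame.

```python
def frame_time_ms(fps):
    """Time budget per frame in milliseconds at a given frame rate."""
    return 1000.0 / fps


OLD_AUDIO_LATENCY_MS = 89.0  # last year's figure, per Qualcomm
NEW_AUDIO_LATENCY_MS = 48.0  # figure quoted at Snapdragon Summit 2022

reduction = 1 - NEW_AUDIO_LATENCY_MS / OLD_AUDIO_LATENCY_MS
frames = NEW_AUDIO_LATENCY_MS / frame_time_ms(120)
print(f"Audio latency cut by {reduction:.0%}")
print(f"120 FPS frame time: {frame_time_ms(120):.2f} ms")
print(f"48 ms spans about {frames:.1f} frames at 120 FPS")
```

That works out to roughly a 46% reduction in audio latency, yet 48ms still spans nearly six frames at 120 FPS, which is why the figure looks high next to display latency.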

One item on the Snapdragon Sound platform that will likely draw a fair bit of interest among certain crowds is support for lossless audio over both Bluetooth Classic and LE. This will be delivered through aptX Lossless, and it's a feature you'll want to keep an ear out for in new headphones and earbuds.

A new wave of devices will also be announced soon, bringing aptX sound to speakers and premium features like lossless audio to mid-tier and budget headphones, so you may not have to splash out as much cash for lossless audio.

Adaptive Noise Canceling (ANC) is getting an update, and you may start to see it supported on more devices, too. It's been tweaked to better block out wind howl, and to better detect voices so that you can still hold a conversation with ANC enabled if you wish.

There will also be support for the new Auracast broadcast audio system, which can stream Bluetooth audio to multiple connected devices at the same time.

That wraps up Snapdragon Summit and what we can expect to see in the new year. Be sure to check out our coverage of Qualcomm's first-day launches as well, to see what's coming in the mobile sector.