
A Look At GPU Performance In Destiny 2: 1080p, 1440p, Ultrawide & 4K

With Destiny 2 having just dropped, it felt like a good idea to take a look at the game’s performance across 12 different graphics cards, with a multi-GPU set of results tossed in for good measure. So, if you’re wondering which GPU you need for the ultimate Destiny 2 experience, we have your answer!

As a huge Destiny fan, I couldn’t be more excited that the game’s sequel has finally dropped for the PC. Now, I get to enjoy the universe I’ve come to love so much in high-resolution and with high frame rates, and my console-less sci-fi aRPG-loving friends finally have an opportunity to discover what it is that pulls me in so much.

For newbies to the series, as well as returning Guardians moving from console to PC, I penned an in-depth “top 10” list the other day of things you should be aware of, covering confusing mechanics as well as some things you may stress over but don’t need to. Being the addict that I am, I streamed the game for many hours on Tuesday to our YouTube channel, which was a nice treat, as the following day would be consumed by benchmarking.

Following up on my Destiny 2 benchmarking article from the beta, this revised look has me using slightly different settings, along with an area of the game that’s proven to be extremely reliable for repeated benchmarking.

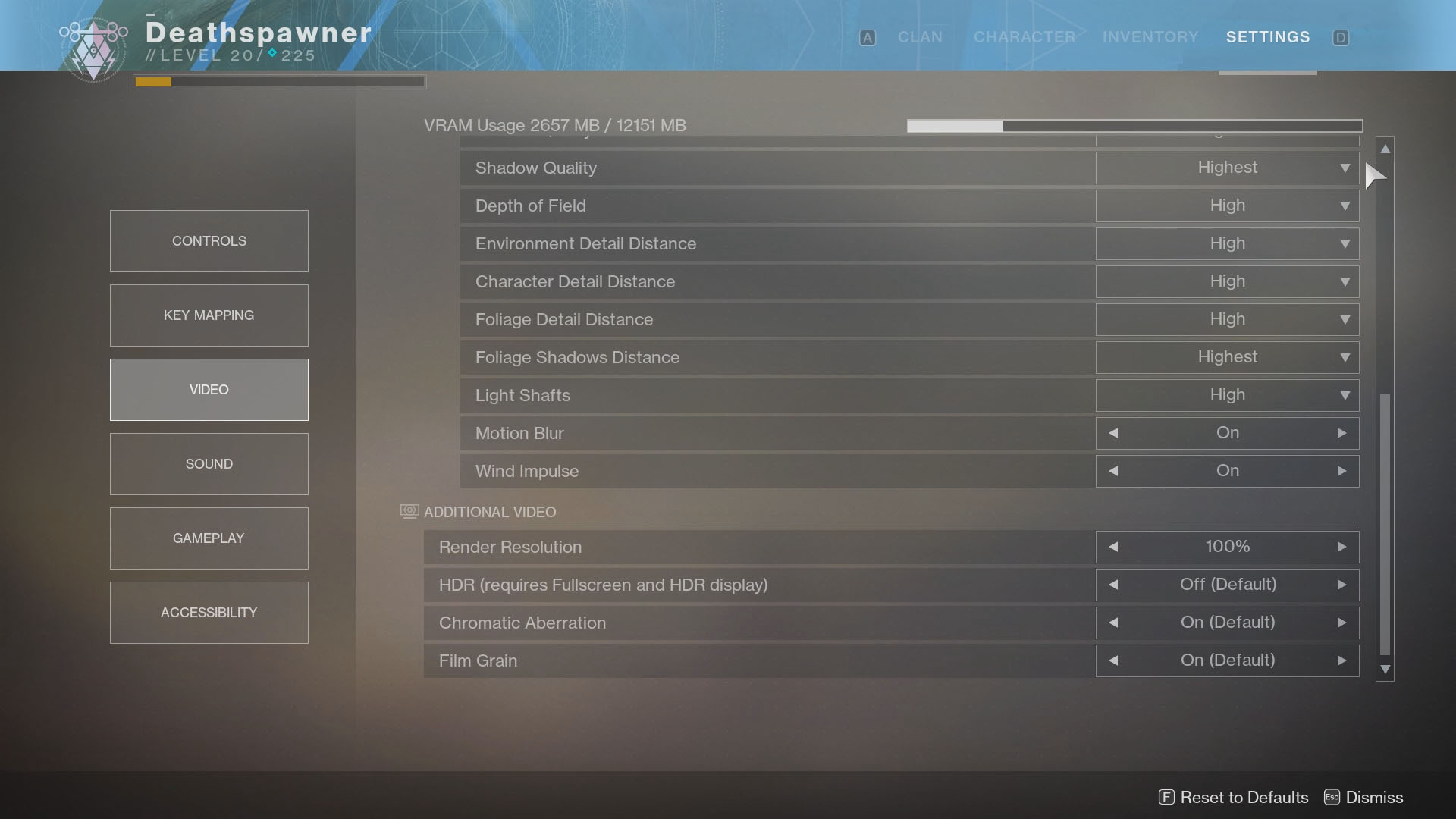

In the beta benchmarking article, I mentioned that I regretted simply choosing the “Highest” graphics profile and running with it, because one setting in particular turned out to be overly rough on the GPU. That setting is Depth of Field, where moving from Highest to High will save you around 20 FPS at some resolutions (yup, it really is that crazy) for a negligible loss in visual quality.

This time around, I decided to choose an area in the game that allows me to generate reliable results very quickly (relatively, anyway), and ultimately, I went with a Lost Sector that resides near The Rig drop on Titan. This is the same Lost Sector featured in my PlayStation 4-focused Destiny 2 HDD vs. SSD video, and takes about 2 minutes for me to exhaustively clear.

For the first half of the beta, Bungie allowed Fraps to be used for framerate and screenshot capture, but just as I was about to wrap things up, the company blocked it. So this time around, I used PresentMon for the entirety of the testing. This is the same tool I use for some DX12 and Vulkan captures; Destiny 2 marks the first time in a very long while that I couldn’t use Fraps in a game that’s neither DX12 nor Vulkan.

Allow me to rant for a moment. Bungie’s code is so aggressive against overlays and other injectors that I had a hard time even taking screenshots for this article. Windows’ built-in function captured nothing but blackness, and the same applied to ShareX. In some rare instances, ShareX would show something, but it was truly hit or miss. As a self-proclaimed screenshot addict (1,592 for Destiny; 242 for Destiny 2 on PS4), this disappoints the heck out of me, especially in a game I’d kill to see a feature like NVIDIA’s Ansel in (I still want that to happen, if you’re reading, Bungie).

Nonetheless, before jumping into the performance data, here’s a table I’ve put together that can give you an idea of what to expect overall from a given GPU, based on our testing (with two exceptions, marked with an asterisk). As of this article’s publishing, NVIDIA’s GeForce GTX 1070 Ti had only been announced earlier in the day, and since it’s a bit of a different launch, we didn’t receive one in time for benchmarking. It could be that the 1070 Ti would earn a “Great” ultrawide rating, but I can’t say for sure until I get it tested.

| GPU | 1080p | 1440p | 3440×1440 | 4K |
|---|---|---|---|---|
| TITAN Xp | Overkill | Overkill | Excellent | Great |
| GeForce GTX 1080 Ti | Overkill | Overkill | Excellent | Great |
| TITAN X (Pascal) | Overkill | Overkill | Excellent | Great |
| GeForce GTX 1080 | Overkill | Excellent | Great | Good |
| Radeon RX Vega 64 | Overkill | Excellent | Great | Good |
| GeForce GTX 1070 Ti | Excellent* | Great* | Good* | Poor* |
| Radeon RX Vega 56 | Excellent | Great | Good | Poor |
| GeForce GTX 1070 | Excellent | Great | Good | Poor |
| Radeon RX 580 | Great | Good | Poor | Poor |
| GeForce GTX 1060 | Great | Good | Poor | Poor |
| Radeon RX 570 | Great | Good | Poor | Poor |
| GeForce GTX 1050 Ti | Good | Poor | Poor | Poor |
| Radeon RX 560 | Good* | Poor* | Poor* | Poor* |
| GeForce GTX 1050 | Poor | Poor | Poor | Poor |
| Radeon RX 550 | Poor | Poor | Poor | Poor |

- Overkill: 60 FPS? More like 100 FPS. As future-proofed as it gets.
- Excellent: Surpass 60 FPS at high quality settings with ease.
- Great: Hit 60 FPS with high quality settings.
- Good: Nothing too impressive; it gets the job done (60 FPS will require tweaking).
- Poor: Expect real headaches from the awful performance.

Note that this chart does not take into account 60Hz+ goals.

\* Based on assumption, not our in-lab testing.

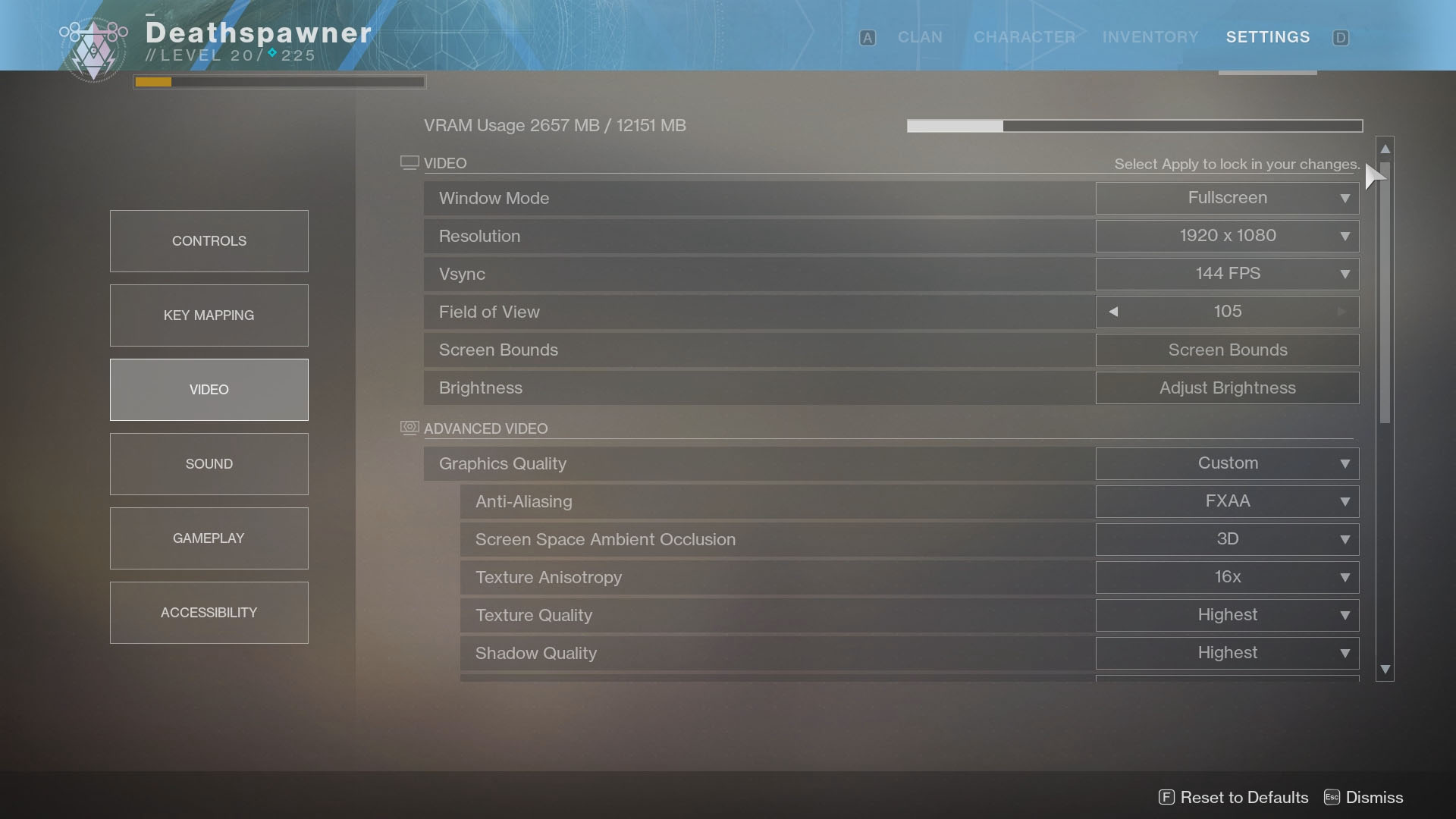

In the beta, an MSAA option for anti-aliasing existed, and due to its buggy nature, Bungie told us to stay away from it. Because such toxic AA modes are no good for anyone, the company decided to yank the option entirely out of the retail build, leaving SMAA and FXAA as the go-to options. For this testing, I chose to use FXAA, as I feel it’s the most reliable AA mode out there (it’s also the least taxing for the amount of benefit it provides).

As mentioned before, I originally tested each configuration in the beta using the “Highest” profile setting, which was a bad move. That’s because the Depth of Field option at “Highest” is almost broken in terms of how much it can impact your framerate. The same can be said in the retail build, although I didn’t retest to generate new results. The results from the beta were:

| GPU | DoF High (FPS) | DoF Highest (FPS) |
|---|---|---|
| Radeon RX Vega 56 | 67 | 43 |
| GeForce GTX 1070 | 72 | 49 |
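To put those beta numbers in relative terms, here’s a small Python sketch that turns each row of the DoF table into FPS lost and percentage lost. It’s plain arithmetic on the two rows shown; the dictionary and function names are illustrative, and no additional testing is involved.

```python
# Average FPS from the beta DoF table: (DoF High, DoF Highest).
results = {
    "Radeon RX Vega 56": (67, 43),
    "GeForce GTX 1070": (72, 49),
}


def dof_cost(high_fps: float, highest_fps: float) -> tuple[float, float]:
    """Return (FPS lost, percent lost) when moving DoF from High to Highest."""
    lost = high_fps - highest_fps
    return lost, 100.0 * lost / high_fps


for gpu, (high, highest) in results.items():
    lost, pct = dof_cost(high, highest)
    print(f"{gpu}: -{lost} FPS ({pct:.1f}% slower)")
# → Radeon RX Vega 56: -24 FPS (35.8% slower)
# → GeForce GTX 1070: -23 FPS (31.9% slower)
```

In other words, the single DoF step costs roughly a third of the framerate on both cards.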

With all of that covered, here’s a look at the game settings and the PC used in testing. Note that the latest available driver was used for both AMD and NVIDIA, so Destiny 2-specific optimizations are present.

| Techgage Workstation Test System | |
|---|---|
| Processor | Intel Core i7-6900K (8-core; 4.20GHz OC) |
| Motherboard | GIGABYTE X99-Ultra Gaming |
| Memory | G.SKILL TridentZ (4x8GB; DDR4-3200 14-14-14) |
| Graphics | AMD Radeon RX 550 (2GB; Radeon 17.10.2) |
| | AMD Radeon RX 570 (4GB; Radeon 17.10.2) |
| | AMD Radeon RX 580 (8GB; Radeon 17.10.2) |
| | AMD Radeon RX Vega 56 (8GB; Radeon 17.10.2) |
| | AMD Radeon RX Vega 64 (8GB; Radeon 17.10.2) |
| | NVIDIA GeForce GTX 1050 (2GB; GeForce 388.00) |
| | NVIDIA GeForce GTX 1050 Ti (4GB; GeForce 388.00) |
| | NVIDIA GeForce GTX 1060 (6GB; GeForce 388.00) |
| | NVIDIA GeForce GTX 1070 (8GB; GeForce 388.00) |
| | NVIDIA GeForce GTX 1080 (8GB; GeForce 388.00) |
| | NVIDIA GeForce GTX 1080 Ti (11GB; GeForce 388.00) |
| | NVIDIA TITAN Xp (12GB; GeForce 388.00) |
| Audio | Onboard |
| Storage | Kingston SSDNow V310 960GB SATA |
| Power Supply | Corsair RM650x |
| Chassis | Corsair Crystal 570X Mid-Tower |
| Cooling | Corsair Hydro H100i V2 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro (64-bit; build 16299.19) |

For an in-depth pictorial look at this build, head here.

Because I couldn’t use Fraps for benchmarking Destiny 2, I can’t provide minimum FPS data, as I haven’t the foggiest clue how to extract that information from the results files PresentMon generates. While PresentMonLauncher’s results analysis tool accurately displays the average FPS, the minimum FPS it reports is so sporadic that some hook is clearly broken. I’ll dig into ways of getting better data out of PresentMon in the future, but for now, all I have to provide are the averages.
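For anyone who wants to pull more than an average out of a PresentMon capture themselves, here’s a minimal Python sketch. It assumes the CSV contains a `MsBetweenPresents` column (the time between consecutive presents, in milliseconds, as written by PresentMon 1.x; other versions or launchers may label it differently), and it uses one common definition of a “1% low”: the FPS equivalent of the 99th-percentile frame time. The function name and that definition are illustrative choices, not how PresentMonLauncher computes its figures.

```python
import csv
import io


def summarize_presentmon(csv_text: str) -> dict[str, float]:
    """Summarize a PresentMon-style CSV capture.

    Assumes a MsBetweenPresents column holding the time, in milliseconds,
    between consecutive presents (PresentMon 1.x naming; may differ elsewhere).
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    frame_ms = [float(row["MsBetweenPresents"]) for row in reader]
    if not frame_ms:
        raise ValueError("capture contains no frames")

    # Average FPS = frames rendered / seconds elapsed,
    # not the mean of per-frame FPS values.
    avg_fps = 1000.0 * len(frame_ms) / sum(frame_ms)

    # "1% low" (one common definition): FPS at the 99th-percentile frame time,
    # using the nearest-rank method with integer math to avoid float rounding.
    ordered = sorted(frame_ms)
    rank = max(1, (99 * len(ordered) + 99) // 100)  # ceil(0.99 * n)
    p99_ms = ordered[rank - 1]

    return {
        "avg_fps": avg_fps,
        "one_percent_low_fps": 1000.0 / p99_ms,
        # Raw minimum: driven entirely by the single slowest frame.
        "min_fps": 1000.0 / ordered[-1],
    }


# Synthetic capture (not real Destiny 2 data): 99 frames at 10 ms, one 50 ms hitch.
sample = "MsBetweenPresents\n" + "10.0\n" * 99 + "50.0\n"
stats = summarize_presentmon(sample)
print({k: round(v, 1) for k, v in stats.items()})
# → {'avg_fps': 96.2, 'one_percent_low_fps': 100.0, 'min_fps': 20.0}
```

The synthetic sample shows why a raw minimum jumps around so much: a single 50 ms hitch drags “minimum FPS” down to 20, while the average and the 1% low barely register it.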

What I can say about the averages, though, is that they were extremely consistent overall. The greatest delta was 6 FPS (121 vs. 127), and that was a one-off. Most pairs of runs differed by no more than 2 FPS on average, even at 1080p. After some experimentation, I believe this is because I tested within a Lost Sector, which is off the main map and free of other players. When I benchmarked an A-to-B run-through on Titan multiple times, the reported average FPS was too sporadic to be trusted, and I simply didn’t have time to repeat the same run-through five times per GPU to get a trustworthy average.

That said, while Lost Sectors are amazing for the sake of repeatable benchmarking, they’re not as demanding as the outside world. That means the data here doesn’t represent the worst-case scenario, as would be ideal. After follow-up testing, though, I don’t believe the end results would differ much; to predict the “worst case”, you’ll probably want to deduct about 10 FPS.

Take this as an example: on the RX Vega 64, the average framerate from my Lost Sector testing was 95 in one run and 92 in the next. An A-to-B run-through on Titan gave me 82 and 87. I found that in the open world, the framerate varied way too much, whereas in the Lost Sector, it stayed much more even.
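The reasoning behind choosing the Lost Sector can be sketched in a few lines of Python: compare how far apart repeated runs land (the spread) with how far apart the two locations land (the gap). The only inputs are the RX Vega 64 figures quoted above, and two runs per location is far too few for proper statistics, so treat this as an illustration rather than an analysis. The function name is hypothetical.

```python
from statistics import mean


def run_summary(runs: list[float]) -> tuple[float, float]:
    """Mean of repeated benchmark runs and the spread (max - min) between them."""
    return mean(runs), max(runs) - min(runs)


lost_sector = [95, 92]   # RX Vega 64, Lost Sector runs (average FPS)
titan_a_to_b = [82, 87]  # RX Vega 64, open-world A-to-B runs on Titan

ls_avg, ls_spread = run_summary(lost_sector)
ow_avg, ow_spread = run_summary(titan_a_to_b)
print(f"Lost Sector: {ls_avg:.1f} FPS avg, {ls_spread} FPS spread")
print(f"Titan A-to-B: {ow_avg:.1f} FPS avg, {ow_spread} FPS spread")
print(f"Open-world penalty: {ls_avg - ow_avg:.1f} FPS")
# → Lost Sector: 93.5 FPS avg, 3 FPS spread
# → Titan A-to-B: 84.5 FPS avg, 5 FPS spread
# → Open-world penalty: 9.0 FPS
```

With these numbers, the open world averages 9 FPS lower than the Lost Sector, which lines up with the rough 10 FPS deduction suggested for predicting the worst case.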

OK, isn’t it about time we get into the performance results?

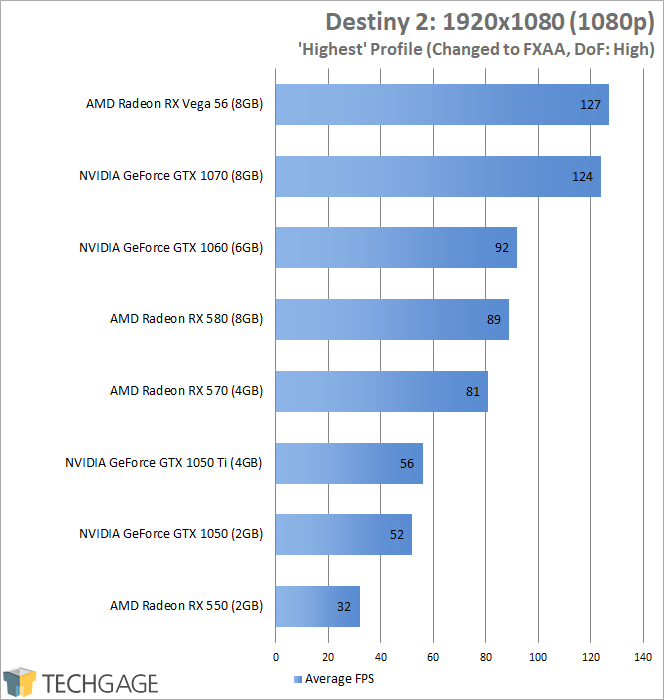

The overall scaling here doesn’t change much from the beta, and in fact, the only change is at the top: Vega 56 edged ahead of the GTX 1070, whereas the latter trumped the former in the beta. No GPU performed worse with the retail game than it did in the beta, likely helped by the fact that I eased off on the “Highest” Depth of Field option this go around.

Ultimately, you don’t need more than a GTX 1050 to enjoy Destiny 2 at excellent framerates with great-looking gameplay. If you’d rather see 60 instead of 52 with the GTX 1050, it wouldn’t require much. You’d want to start by changing the AO from 3DAO to HBAO, and perhaps lower the DoF setting even further.
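It helps to think of that 52-to-60 jump in frame time rather than FPS: the settings changes only need to shave a few milliseconds off each frame. Here’s a small Python sketch of the arithmetic; how much time the 3DAO-to-HBAO change or a lower DoF setting actually saves isn’t modeled here, since that would take per-setting measurements.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds available to render one frame at a given average FPS."""
    return 1000.0 / fps


current, target = 52, 60  # GTX 1050 at 1080p, and the 60 FPS goal
saving_ms = frame_time_ms(current) - frame_time_ms(target)
uplift_pct = 100.0 * (target / current - 1)
print(f"{frame_time_ms(current):.2f} ms -> {frame_time_ms(target):.2f} ms per frame")
print(f"Need to shave {saving_ms:.2f} ms per frame (~{uplift_pct:.0f}% more FPS)")
# → 19.23 ms -> 16.67 ms per frame
# → Need to shave 2.56 ms per frame (~15% more FPS)
```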

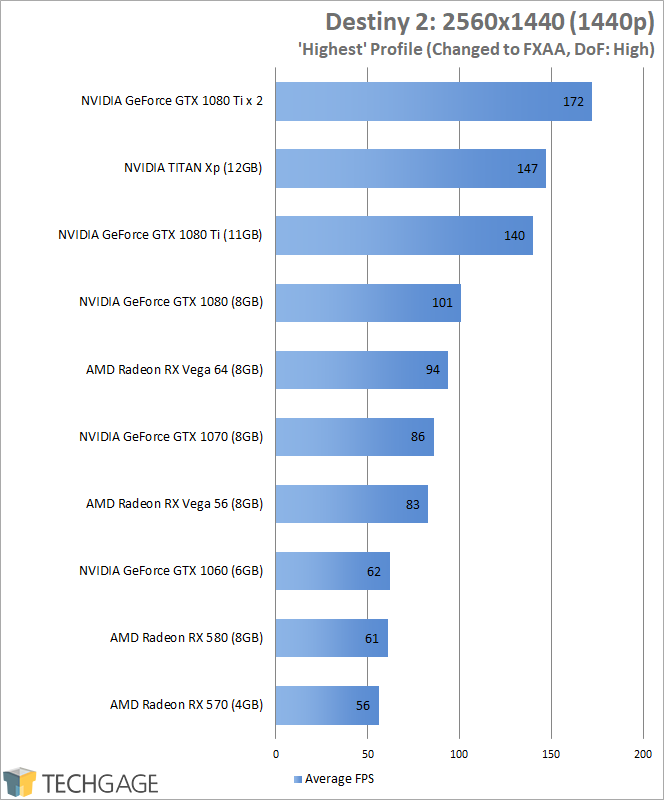

Whereas the GTX 1050 is required for great 1080p gameplay, 1440p requires at least an RX 570. That GPU or any above it is going to deliver sufficient framerates, and if you happen to have one of the bigger guns, you might just be justifying the purchase of your 100Hz+ monitor.

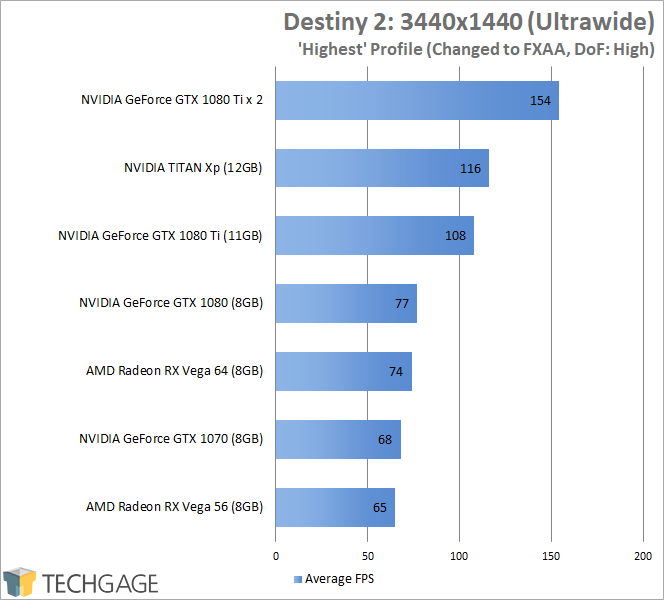

Speaking of bigger guns, how does the game fare at higher resolutions? Let’s start with ultrawide, 3440×1440.

As long as you have an RX Vega 56 or GTX 1070, you’re going to be getting fantastic performance at 3440×1440. Again, I should be clear that these framerates are averages, and there will definitely be times in the game, such as in the open world, when performance drops quite significantly; overall, though, it’s going to be a very smooth experience. With the TITAN Xp and dual 1080 Ti configurations showing those kinds of results, 144Hz ultrawides can’t get here a moment too soon.

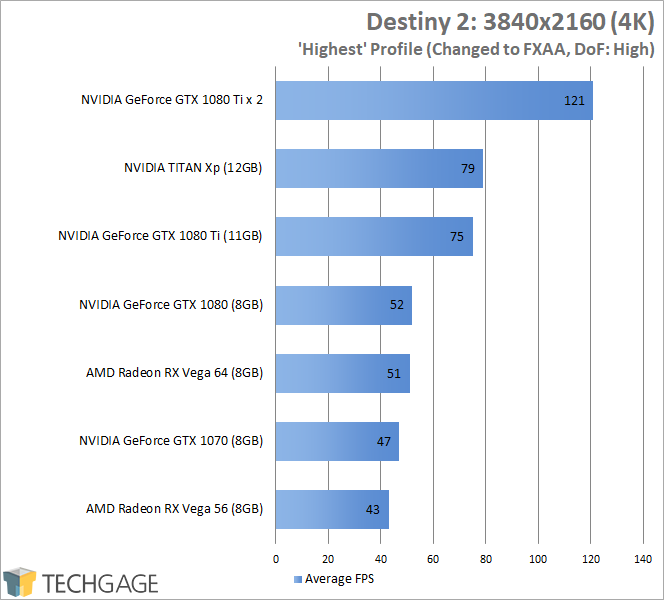

The PlayStation 4 Pro might be able to run Destiny 2 at 4K, but it’s at an appalling 30 FPS. That helps fuel my ire for 4K on consoles, because for the most part, 4K on PCs is downright difficult. With Destiny 2, if you want a great 4K experience, you need at least an RX Vega 64 or GTX 1080. GTX 1080 Ti SLI is all sorts of overkill, but it’s included as well just to show that the game does scale on multiple GPUs, and scales better than I’d expect, to be honest.

Final Thoughts

I didn’t expect any surprises after benchmarking Destiny 2, and that’s a great thing, because there weren’t any. For the most part, all of the GPUs scaled the same as before, although AMD’s latest driver release has applied some nice polish to overall performance.

Ultimately, my overall recommendations for GPUs at certain resolutions don’t really change from the beta, nor do they deviate much from the general comparison table above. It simply doesn’t take much GPU to deliver an enjoyable experience, which is a great thing given how good the game looks.

- AMD Radeon RX 550: Good for 1080p.

- AMD Radeon RX 560: Good for 1080p.

- AMD Radeon RX 570: Excellent for 1080p.

- AMD Radeon RX 580: Good for 1440p.

- AMD Radeon RX Vega 56: Good for 3440×1440; Excellent for 1440p.

- AMD Radeon RX Vega 64: Great for 3440×1440; Good for 4K.

- NVIDIA GeForce GTX 1050: Good for 1080p.

- NVIDIA GeForce GTX 1050 Ti: Great for 1080p.

- NVIDIA GeForce GTX 1060: Good for 1440p; Excellent for 1080p.

- NVIDIA GeForce GTX 1070: Great for 3440×1440; Poor for 4K.

- NVIDIA GeForce GTX 1070 Ti: Great for 3440×1440; Poor for 4K.

- NVIDIA GeForce GTX 1080: Great for 3440×1440; Good for 4K.

- NVIDIA GeForce GTX 1080 Ti: Excellent for 3440×1440; Great for 4K.

- NVIDIA TITAN Xp: Excellent for 3440×1440; Great for 4K.

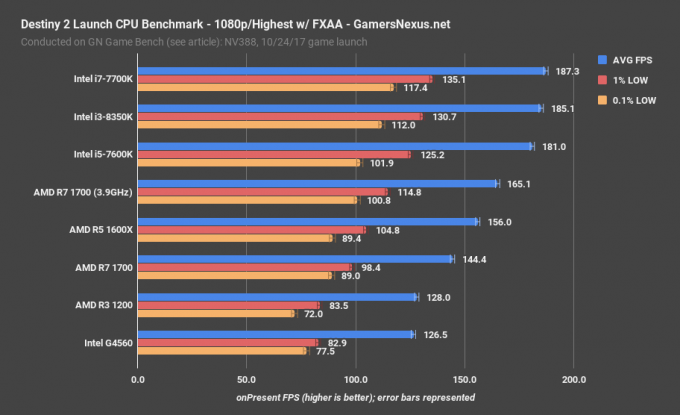

I do have a word of note about CPUs, though. All of the results above were gathered on the same test machine, with an overclocked 8-core Intel Core i7-6900K. Our good friends at Gamers Nexus took a look at the game across eight CPUs, and found some considerable differences.

In the chart above, we can see that some lower-end CPUs hold back upwards of 60 FPS, which is really quite incredible when you think about it. 60 FPS is significant on its own, so to see that much cut from the total is something to behold. Ultimately, it’s a testament to how poorly optimized the game is for the PC, at least where CPUs are concerned, with Steve noting that anything more than 4 threads isn’t going to make a difference.

What can make a difference is clock speed. If two Intel chips with four or more cores run at the same clock speed, they’re going to perform the same, regardless of their total core counts. Remember how much AMD pushed the message that game developers need to start taking advantage of these cores after Ryzen launched? That’s really emphasized here.

You can put me down as one of the people who aren’t surprised by this in the slightest; we’re almost lucky to have the game on PC at all, so even if there is some performance degradation on modest CPUs, the overall FPS should still be more than suitable. Ultimately, those who will care the most are those hoping to get as close to 144Hz as possible, which is most easily done at 1080p. At 1440p, the CPU differences don’t matter quite as much, which is what we’ve come to expect from AMD vs. Intel gaming comparisons of late.

If you have any additional questions after reading, hit up the comment box below!
