Blender 3.0 – How Fast Are GPUs in Cycles X, EEVEE & Viewport?

Blender has just released its latest major update, pushing the software to new 3.0 versioning. As always, we’ve dug into the latest update to take a look at it from a performance perspective. Join us as we throw all of the current-gen GPUs from GeForce and Radeon at Cycles X, EEVEE, and the viewport.

Page 1 – Blender 3.0: What’s New & Cycles Rendering Performance

Get the latest GPU rendering benchmark results in our more up-to-date Blender 3.6 performance article.

In our Blender 2.93 performance deep-dive posted months ago, we mentioned that we’d soon be seeing the “2” version go away, in favor of finally seeing a bump to 3.0. While some software tools, like web browsers, seem to enjoy hiking up version numbers quicker than they probably should, Blender has been different.

To provide some perspective, Blender 2.0 released in August 2000. So why, after 21 years, would the developers want to jump to 3.0? A recent acceleration of development sure can’t hurt. In recent years, the Blender Foundation has received a huge increase in funding support, even from companies you’d think would be considered competitors (e.g. Epic), as well as others like AMD and NVIDIA.

The truth is, there’s not so much new from 2.93 to 3.0 that a new major version number was necessary, but that’s because every Blender release lately would have deserved the same jump. In recent years, Blender developers have been seriously busy, with each new launch encompassing a huge collection of improvements and new features. Way back during our in-depth look at Blender 2.80, we mentioned that it could have been called 3.0 quite easily.

Nonetheless, going forward, we’re going to start seeing a bit of a different versioning scheme for Blender. As we recently reiterated, we’re likely to see a new major version number every two years, meaning Blender 4.0 will release in 2023. But, none of this actually matters too much – what does matter is what these updates include.

What’s New In Blender 3.0?

In Blender 3.0, there’s a lot to love. This is the release that ushers in a completely overhauled Cycles render engine, dubbed Cycles X during its development. The updated engine is completely compatible with the previous Cycles, and improves performance on both CPUs and GPUs.

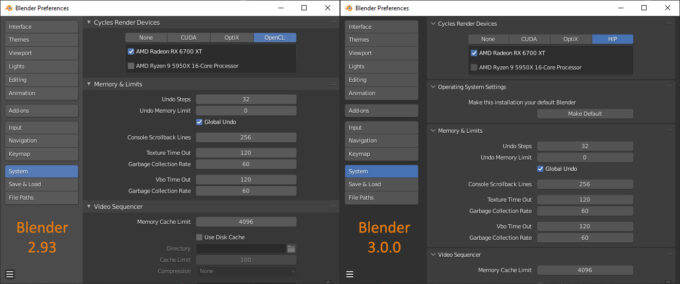

As we’ve also covered before, Blender 3.0 removes OpenCL rendering, which has led AMD to implement its HIP API so Radeon users won’t notice the change. Well… that assumes you’re at least using an RDNA (RX 5000) or RDNA2 (RX 6000) Radeon, as earlier models are not yet supported. Likewise, Linux support for HIP is also still in the works. If you want to give HIP a try, you will need to download the beta driver. Radeon Pro Enterprise 21.Q4 will officially add support, and we’d assume the next gaming driver will, as well.

If you’re an Apple M1 user, then not only is this article not entirely relevant to you, but you’ll have to wait until Blender 3.1 for initial Metal support.

While the viewport hasn’t shown any real differences in our benchmarking vs. 2.93, Blender 3.0 does include changes that speed up the rendered viewport mode, which now resolves faster than before. This is especially true when it’s not just a flat scene, but an animation with interactive lighting.

Also on the performance front, Blender has changed from gzip to Zstandard compression for .blend files, allowing projects to load much quicker – especially noticeable for projects that have thousands of data block links.
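To make the compression change concrete, here is a minimal Python sketch that tells the container formats apart from a file's leading bytes. It relies only on the published magic numbers for gzip and Zstandard, plus the `BLENDER` signature that opens an uncompressed .blend file. The function names are our own for illustration and are not part of Blender.

```python
# Magic bytes found at the very start of each container format.
GZIP_MAGIC = b"\x1f\x8b"            # gzip member header
ZSTD_MAGIC = b"\x28\xb5\x2f\xfd"    # Zstandard frame (0xFD2FB528, little-endian)
BLEND_MAGIC = b"BLENDER"            # header of an uncompressed .blend file


def detect_blend_compression(header: bytes) -> str:
    """Classify a .blend file from its first few bytes."""
    if header.startswith(ZSTD_MAGIC):
        return "zstd"
    if header.startswith(GZIP_MAGIC):
        return "gzip"
    if header.startswith(BLEND_MAGIC):
        return "uncompressed"
    return "unknown"


def detect_file(path: str) -> str:
    """Read just enough of the file on disk to classify it."""
    with open(path, "rb") as f:
        return detect_blend_compression(f.read(8))
```

Calling `detect_file()` on a compressed project saved from 2.93 should report `gzip`, while the same project re-saved with compression from 3.0 should report `zstd`, assuming the file begins with a regular Zstandard frame.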

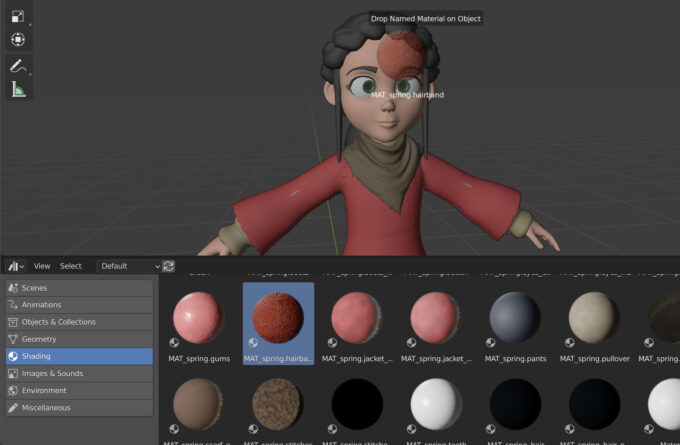

Another major improvement to Blender 3.0 is the arrival of a much-desired feature: an asset browser. Asset browsers have been standard fare for many design suites, as they not only allow you to access your own assets quickly and easily, but also use additional libraries so you can have plenty of options at-the-ready. Blender notes that this feature isn’t perfect right now, and that some additional functionality is found in the current Blender 3.1 alpha. Still, the base has been laid, so it’s really great to see the feature finally come to fruition.

One last addition we want to call out is USD importing, another feature that, like the asset browser, really propels the seriousness of Blender forward. Really – with as often as new Blender versions come out, the feature set grows at a seriously fast pace.

If your coffee (or drink of choice) is full, and you feel a little ambitious, you can peruse all of what’s new in the Blender 3.0 release notes.

AMD & NVIDIA GPU Lineups, Our Test Methodologies

First things first: let’s talk about the lack of CPU-specific performance in this article. In our testing, the new Cycles has improved CPU rendering performance just as it has on the GPU, but not to the same extent. For the sake of time, we wanted to focus on GPU here, and do more in-depth CPU testing in the future, including taking a look at CPU + GPU rendering for both GeForce and Radeon, not just one or the other.

With that said, let’s take a look at AMD’s and NVIDIA’s current graphics card lineups, with six on the Radeon side, and seven on the GeForce side – and all thirteen of which have been tested here.

| AMD’s Radeon Creator & Gaming GPU Lineup | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | Price |
|---|---|---|---|---|---|---|---|
| RX 6900 XT | 5,120 | 2,250 | 23 TFLOPS | 16 GB ¹ | 512 GB/s | 300W | $999 |
| RX 6800 XT | 4,608 | 2,250 | 20.7 TFLOPS | 16 GB ¹ | 512 GB/s | 300W | $649 |
| RX 6800 | 3,840 | 2,105 | 16.2 TFLOPS | 16 GB ¹ | 512 GB/s | 250W | $579 |
| RX 6700 XT | 2,560 | 2,581 | 13.2 TFLOPS | 12 GB ¹ | 384 GB/s | 230W | $479 |
| RX 6600 XT | 2,048 | 2,589 | 10.6 TFLOPS | 8 GB ¹ | 256 GB/s | 160W | $379 |
| RX 6600 | 1,792 | 2,491 | 8.9 TFLOPS | 8 GB ¹ | 224 GB/s | 132W | $329 |

Notes: ¹ GDDR6. Architecture: RX 6000 = RDNA2.

For creative purposes, AMD’s Radeon RX 6700 XT may offer the best bang-for-the-buck – at least if we ignore the ongoing problem of rampant price-gouging in the market. That GPU includes 12GB of memory, making it a bit more “future-proof” than the 8GB offerings. The higher-end Radeons improve performance, and they increase the frame buffer to 16GB.

While AMD’s RDNA2 generation supports hardware ray tracing, that acceleration is not currently supported in Blender 3.0’s HIP implementation. AMD intends to fix this in the future, as well as polishing the CPU + GPU heterogeneous rendering mode. We did see improvement with CPU + Radeon rendering in quick tests, but some projects threw errors, hinting that further HIP updates need to be made.

| NVIDIA’s GeForce Creator & Gaming GPU Lineup | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | SRP |
|---|---|---|---|---|---|---|---|
| RTX 3090 | 10,496 | 1,700 | 35.6 TFLOPS | 24 GB ¹ | 936 GB/s | 350W | $1,499 |
| RTX 3080 Ti | 10,240 | 1,670 | 34.1 TFLOPS | 12 GB ¹ | 912 GB/s | 350W | $1,199 |
| RTX 3080 | 8,704 | 1,710 | 29.7 TFLOPS | 10 GB ¹ | 760 GB/s | 320W | $699 |
| RTX 3070 Ti | 6,144 | 1,770 | 21.7 TFLOPS | 8 GB ¹ | 608 GB/s | 290W | $599 |
| RTX 3070 | 5,888 | 1,730 | 20.4 TFLOPS | 8 GB ² | 448 GB/s | 220W | $499 |
| RTX 3060 Ti | 4,864 | 1,670 | 16.2 TFLOPS | 8 GB ² | 448 GB/s | 200W | $399 |
| RTX 3060 | 3,584 | 1,780 | 12.7 TFLOPS | 12 GB ² | 360 GB/s | 170W | $329 |

Notes: ¹ GDDR6X; ² GDDR6. Architecture: RTX 3000 = Ampere.

In NVIDIA’s current-gen lineup, we’d have to say that the GeForce RTX 3070 is the best bang-for-the-buck, given its pricing and performance delivered. That said, while the RTX 3070 includes hardware ray tracing support in Blender 3.0 like the rest of the current stack, it only has an 8GB frame buffer, which could be considered a hindrance in creative workloads before long. If you want greater than 8GB, you’ll need to opt for at least the RTX 3080.

On the topic of memory, the 12GB frame buffer of the RTX 3060 might strike you as alluring, but bear in mind its memory bandwidth is lower than the RTX 3070’s, so you’d be making one trade-off for another. But, ultimately, our performance results will help clear up any obvious detriments.
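For readers wondering where the Peak FP32 figures in the two lineup tables come from, they can be approximated from the core count and boost clock. The sketch below assumes the usual theoretical-peak convention of one fused multiply-add (two floating-point operations) per core per clock; the function name is ours, and vendor-quoted numbers may differ slightly due to rounding or a marginally different rated clock.

```python
def peak_fp32_tflops(cores: int, boost_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each core retires one fused multiply-add
    (counted as 2 floating-point operations) per clock.
    """
    flops = cores * 2 * boost_mhz * 1e6
    return flops / 1e12


# (cores, boost MHz) taken from the lineup tables above.
specs = {
    "RX 6900 XT": (5120, 2250),
    "RX 6700 XT": (2560, 2581),
    "RTX 3090": (10496, 1700),
    "RTX 3070": (5888, 1730),
}

for name, (cores, mhz) in specs.items():
    print(f"{name}: {peak_fp32_tflops(cores, mhz):.2f} TFLOPS")
```

These are paper numbers only. As the results in this article show, theoretical throughput says little about how a GPU actually fares in Cycles, where API and hardware ray tracing support matter a great deal.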

Here’s a quick look at the test PC used for our Blender 3.0 benchmarking:

| Techgage Workstation Test System | |
|---|---|
| Processor | AMD Ryzen 9 5950X (16-core; 3.4GHz) |
| Motherboard | ASRock X570 TAICHI (EFI: P4.60 08/03/2021) |
| Memory | Corsair VENGEANCE (CMT64GX4M4Z3600C16) 16GB x4<br>Operates at DDR4-3200 16-18-18 (1.35V) |
| AMD Graphics | AMD Radeon RX 6900 XT (16GB; 21.40 Beta)<br>AMD Radeon RX 6800 XT (16GB; 21.40 Beta)<br>AMD Radeon RX 6800 (16GB; 21.40 Beta)<br>AMD Radeon RX 6700 XT (12GB; 21.40 Beta)<br>AMD Radeon RX 6600 XT (8GB; 21.40 Beta)<br>AMD Radeon RX 6600 (8GB; 21.40 Beta) |
| NVIDIA Graphics | NVIDIA GeForce RTX 3090 (24GB; GeForce 496.76)<br>NVIDIA GeForce RTX 3080 Ti (12GB; GeForce 496.76)<br>NVIDIA GeForce RTX 3080 (10GB; GeForce 496.76)<br>NVIDIA GeForce RTX 3070 Ti (8GB; GeForce 496.76)<br>NVIDIA GeForce RTX 3070 (8GB; GeForce 496.76)<br>NVIDIA GeForce RTX 3060 Ti (8GB; GeForce 496.76)<br>NVIDIA GeForce RTX 3060 (12GB; GeForce 496.76) |
| Audio | Onboard |
| Storage | AMD OS: Samsung 500GB SSD (SATA)<br>NVIDIA OS: Samsung 500GB SSD (SATA) |
| Power Supply | Corsair RM850X |
| Chassis | Fractal Design Define C Mid-Tower |
| Cooling | Corsair Hydro H100i PRO RGB 240mm AIO |
| Et cetera | Windows 10 Pro build 19043.1081 (21H1)<br>AMD chipset driver 3.10.08.506 |

All product links in this table are affiliated, and help support our work.

All of the benchmarking conducted for this article was completed using updated software, including the graphics and chipset driver. An exception to the “updated software” rule is that for the time being, we’re choosing to stick to using Windows 10 as the OS, as Windows 11 has so far felt like a chore to use and benchmark with. Do note, though, that the performance results we see in this article would be little different, if not entirely the same, in Windows 11.

Here are some other general guidelines we follow:

- Disruptive services are disabled; e.g. Search, Cortana, User Account Control, Defender, etc.

- Overlays and / or other extras are not installed with the graphics driver.

- Vsync is disabled at the driver level.

- OSes are never transplanted from one machine to another.

- We validate system configurations before kicking off any test run.

- Testing doesn’t begin until the PC is idle (keeps a steady minimum wattage).

- All tests are repeated until there is a high degree of confidence in the results.
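As a rough illustration of the last guideline, repeating a test until the results can be trusted could be automated along these lines. This is only a sketch under our own assumptions: the 2% spread threshold, the run limits, and the function name are hypothetical, and it is not a description of the exact procedure used for this article.

```python
import statistics
from typing import Callable, List


def run_until_stable(measure: Callable[[], float], min_runs: int = 3,
                     max_runs: int = 10,
                     max_rel_spread: float = 0.02) -> List[float]:
    """Repeat a measurement until its relative standard deviation
    drops to max_rel_spread or below, or max_runs is reached."""
    samples: List[float] = []
    while len(samples) < max_runs:
        samples.append(measure())
        if len(samples) >= min_runs:
            mean = statistics.mean(samples)
            if statistics.stdev(samples) / mean <= max_rel_spread:
                break
    return samples
```

Here `measure` would be any callable that performs one render and returns its time in seconds. A caller would typically report the mean or median of the returned samples.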

For this article, we’re once again benchmarking with the BMW, Classroom, and Still Life projects, as well as the brand-new Blender 3.0 official project, Sprite Fright.

Sprite Fright is no doubt the beefiest project to be found on Blender’s demo files page, requiring upwards of 20GB of system memory after it’s opened. Since projects like BMW and Classroom are pretty simple in the grand scheme, we’re happy to be able to now include a comprehensive project like this to test with. Sprite Fright isn’t just a demo project, but a Blender Studio movie, which you can check out here.

All of the projects tested here were pulled from the aforementioned Blender demo files page, and adjusted accordingly (in 2.93, with the exception of Sprite Fright, which was only used in 3.0):

- BMW: Changed output resolution to 2560×1440 @ 100%.

- Classroom: Changed output resolution to 2560×1440, changed tile size to 256×256.

- Still Life: Disabled denoise and noise threshold, changed tile size to 256×256.

- Sprite Fright: Disabled denoise and noise threshold.

- Red Autumn Forest & Splash Fox: As-downloaded.

Note that all of our testing is conducted in an automated manner via the command line, so the results will vary slightly if you render inside of Blender itself.
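For those who want to try command line rendering themselves, a headless single-frame Cycles render can be driven from a small script. The sketch below uses Blender's documented `-b`, `-E`, and `-f` arguments, along with the `--cycles-device` option that is passed after a double dash. The helper names and any project filename you pass in are hypothetical, and this is not the exact harness used for our testing.

```python
import subprocess
import time
from typing import List


def build_render_command(blend_file: str, device: str, frame: int = 1,
                         blender_bin: str = "blender") -> List[str]:
    """Assemble a headless Cycles render command for a single frame."""
    return [
        blender_bin,
        "-b", blend_file,           # run in the background, without the UI
        "-E", "CYCLES",             # select the Cycles engine
        "-f", str(frame),           # render this frame, then exit
        "--",                       # arguments after this go to Cycles
        "--cycles-device", device,  # e.g. CPU, CUDA, OPTIX, HIP
    ]


def time_render(blend_file: str, device: str) -> float:
    """Return wall-clock seconds for one render, project load included."""
    cmd = build_render_command(blend_file, device)
    start = time.perf_counter()
    subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL)
    return time.perf_counter() - start
```

Note that timing the whole process this way includes the time needed to load the project, which is one reason command line numbers will not line up exactly with the render time Blender reports in its own UI.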

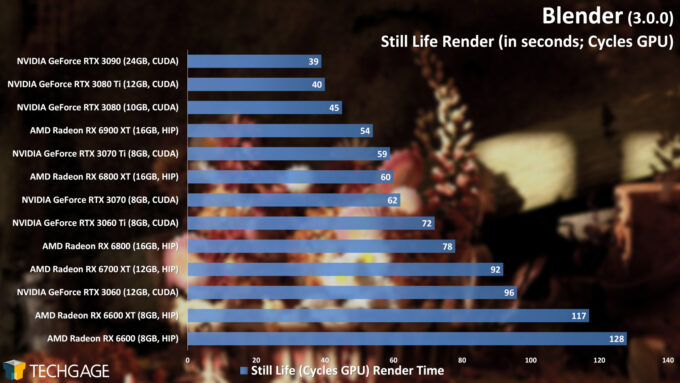

Cycles GPU: AMD HIP & NVIDIA CUDA Rendering

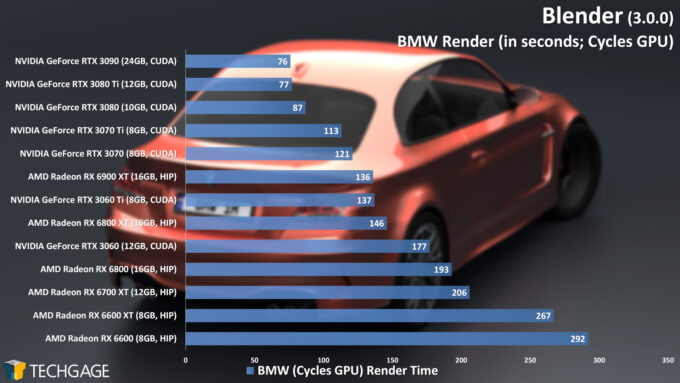

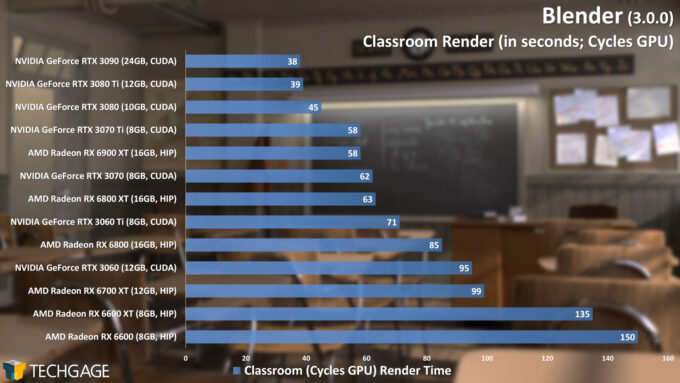

These four sets of results all show similar scaling, with NVIDIA’s GeForces ultimately leading in performance vs. each model’s respective Radeon competitor. It’s rather telling when AMD’s top-end Radeon RX 6900 XT is going head-to-head more often than not with NVIDIA’s mid-range GeForce RTX 3070.

Of these four projects, it’s the Sprite Fright one we were keen to analyze most; not just because it’s brand-new, but also because it’s the most complex Blender project we’ve ever tested. As mentioned above, merely opening the project will use upwards of 20GB of system memory.

Sprite Fright’s resulting render is effectively three renders in one, with each being layered on top of the others in the final composition stage. Due to this design, there’s a slight delay in GPU work in between each of those renders, so we opted to retain default sample and resolution values to make sure the CPU wouldn’t interfere too much with our scaling. That leads to a super-fast GPU like the RTX 3090 taking 10 minutes to render a single frame, and a lower-end RX 6600 taking over 40 minutes.

When it takes that long to render a single frame, we can only imagine how long we’d be waiting to render the Sprite Fright movie! If you’re a paying Blender Studio member, you can find out yourself, since the entire thing is being offered, with all assets intact.

CUDA and HIP are just two of the APIs found in Blender 3.0. Let’s check out NVIDIA’s OptiX API next, and see how much quicker it can make all of these renders – especially Sprite Fright.

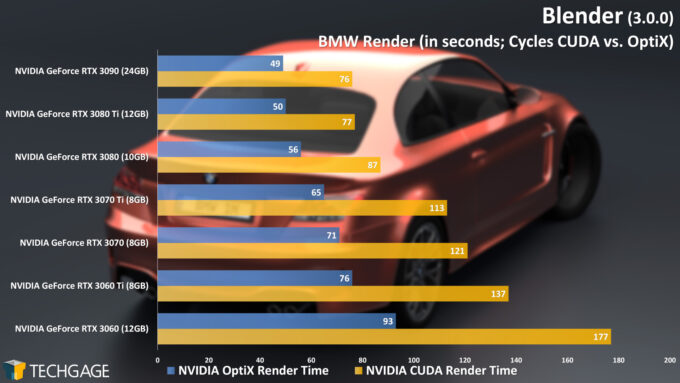

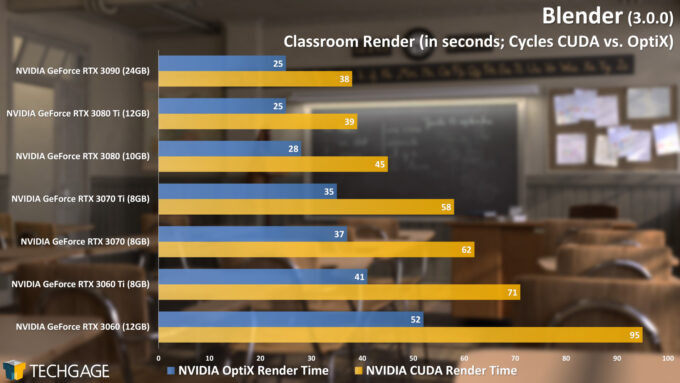

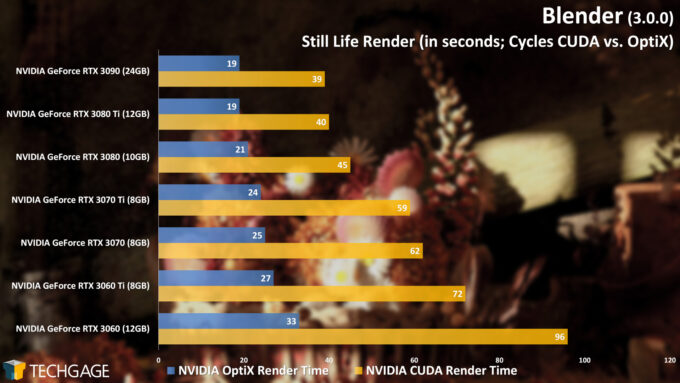

Cycles GPU: NVIDIA OptiX Rendering

Looking over these results should make it immediately clear that using OptiX over CUDA can provide an enormous performance improvement, although the level of gain depends on the project design. Interestingly, Still Life sees the greatest performance improvement, with every single one of these GeForces taking less time to render the final image vs. using CUDA.

With Sprite Fright, we wondered just how much benefit we’d see from OptiX considering the project is just so intensive, and given that there are short periods of time where the GPU might be under-utilized. Well, ultimately, we’re seeing similar gains in that project as we do in the BMW and Classroom ones.

What sounds better to you: 625 seconds for a single frame render, or 406 seconds? If we were just talking about one frame, that’d be one thing, but the time saved over the course of an entire animation render really adds up.
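To put those two numbers in perspective, going from 625 to 406 seconds works out to roughly a 1.54x speedup, or about 35% less time per frame. The short sketch below does the arithmetic and scales it to an animation; the 1,000-frame length is purely a hypothetical figure for illustration, not the length of any project tested here.

```python
def render_savings(baseline_s: float, faster_s: float, frames: int = 1) -> dict:
    """Compare two per-frame render times over a number of frames."""
    saved_per_frame = baseline_s - faster_s
    return {
        "speedup": baseline_s / faster_s,
        "time_reduction_pct": 100.0 * saved_per_frame / baseline_s,
        "hours_saved": saved_per_frame * frames / 3600.0,
    }


# 625 s and 406 s are the per-frame times quoted above;
# frames=1000 is a hypothetical animation length.
result = render_savings(625, 406, frames=1000)
print(f"Speedup: {result['speedup']:.2f}x")
print(f"Time reduction: {result['time_reduction_pct']:.1f}%")
print(f"Hours saved: {result['hours_saved']:.1f}")
```

At that hypothetical length, the 219 seconds saved on each frame would add up to about 61 hours of render time.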

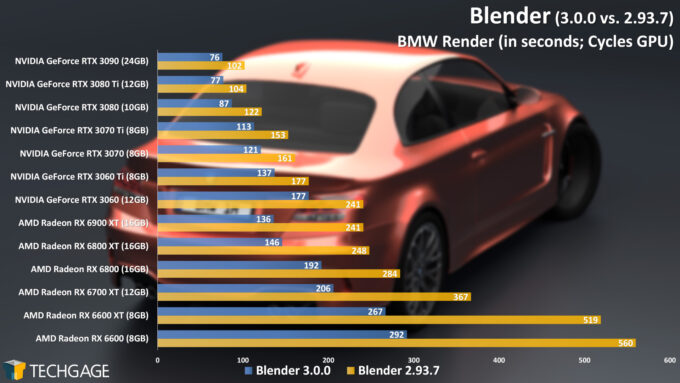

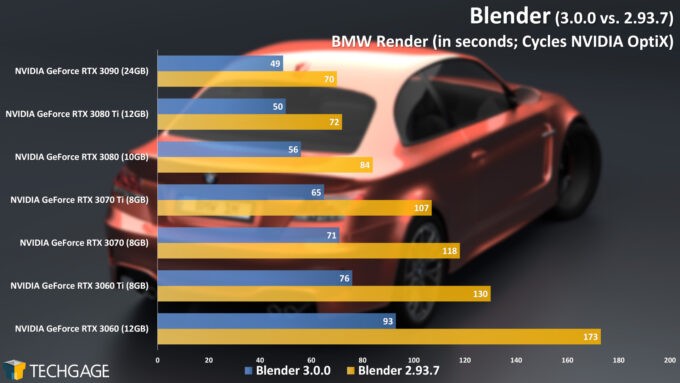

Cycles GPU: 3.0.0 vs. 2.93.7

All of the results above look great on their own, but they don’t exactly help highlight the performance improvements of the new Cycles X render engine. So, we’ll take care of that here, with the BMW project. Because render times were becoming a bit too quick, we increased the output resolution to 1440p to stress the cards longer, and create more interesting scaling.

Here’s a look at CUDA and HIP performance in 3.0.0 vs. CUDA and OpenCL in 2.93.7:

When we compared our render outputs from 2.93 and 3.0.0, they didn’t appear any different at all, which is pretty neat considering the render engine has seen a massive overhaul. In that respect, this upgrade is similar to OptiX, which also aims for identical end quality despite its different way of rendering.

It’s interesting to see that AMD appears to benefit more from this Cycles upgrade than NVIDIA, as its before and after deltas are much starker. The new Cycles especially breathes more life into AMD’s lower-end stack, where GPUs like the RX 6600 and RX 6600 XT effectively see doubled performance!

Fortunately, these Cycles improvements extend beyond CUDA and HIP. As we mentioned earlier, the CPU also sees some improvement, just not to the extent that we see from the GPUs. And, because GPUs are clearly the most important focus for Blender rendering, CPU gains won’t ultimately matter that much for most people (but for rendering houses, they could).

The new Cycles even benefits OptiX, not just CUDA:

The performance delivered with the new Cycles X is simply staggering. In 3.0, some NVIDIA GPUs become as fast when rendering with CUDA as they were in 2.93 using OptiX. But, then OptiX takes things one step further again, giving us some seriously impressive performance.

Despite that onslaught of charts, we’re not quite done yet. On the following (and final) page, we’re going to take a look at both EEVEE rendering performance, and viewport performance, using Solid, Wireframe, and Material Preview modes.
