
Intel P67 Roundup: ASUS, GIGABYTE, Intel & MSI

We’ve been a little short on motherboard content lately, so to kick things back into action we’re taking a look at four P67-based motherboards at once – all benchmarked using our newly revised test suite. The boards we’re looking at are the ASUS P8P67 Deluxe, GIGABYTE P67A-UD4, Intel DP67BG and MSI P67A-GD65.

Page 14 – I/O Performance: USB 3.0 & Ethernet

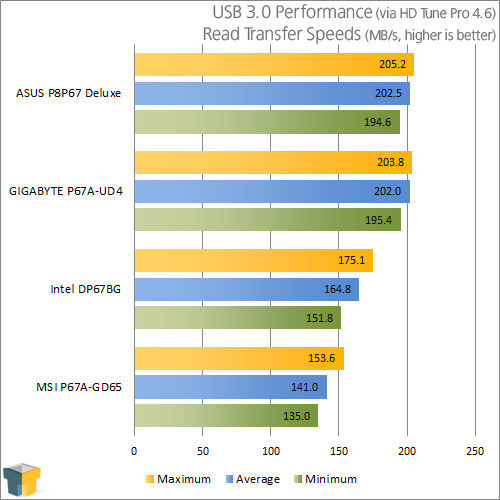

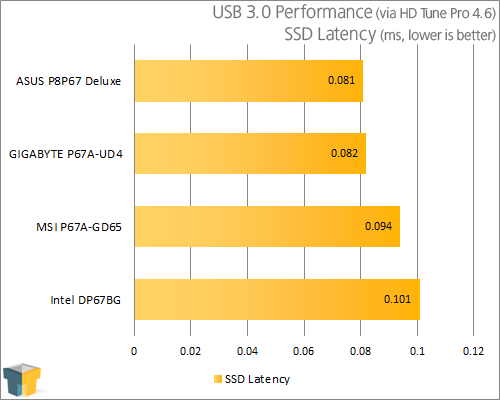

Thanks to the introduction of the super-fast USB 3.0 bus, we’re able to break free from the rather limiting ~30MB/s transfer speed that USB 2.0 confined us to. We can now plug in an external SSD and, in some cases, double or triple the transfer speed of a regular hard drive. Just as SATA chipsets can affect hard drive and SSD performance, USB 3.0 chipsets (and their drivers) can impact overall performance.
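
To put the ~30MB/s ceiling in perspective, the time to move a file is simply its size divided by the sustained rate. The sketch below uses a hypothetical 4,000MB file and an assumed 90MB/s USB 3.0 rate purely for illustration; neither figure is a result from this roundup.

```python
def transfer_seconds(size_mb: float, rate_mb_per_s: float) -> float:
    """Time to move size_mb megabytes at a sustained rate_mb_per_s."""
    if rate_mb_per_s <= 0:
        raise ValueError("rate must be positive")
    return size_mb / rate_mb_per_s


# Hypothetical 4,000MB file. 30MB/s is the ~USB 2.0 figure cited above;
# 90MB/s is an assumed, illustrative USB 3.0 rate, not a measured result.
FILE_MB = 4000
for label, rate in [("USB 2.0 (~30MB/s)", 30), ("USB 3.0 (assumed 90MB/s)", 90)]:
    print(f"{label}: {transfer_seconds(FILE_MB, rate):.0f} s")
```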

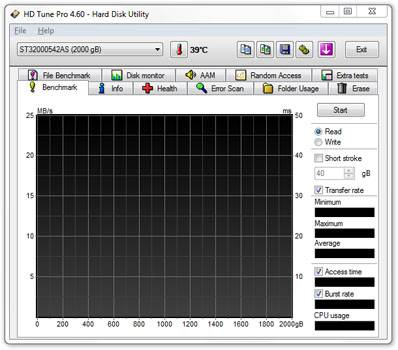

For the same reasons that we use HD Tune as our standard I/O benchmark, we also use it to test USB 3.0 performance, as it’s perfectly suited to the task.
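
HD Tune’s own method isn’t reproduced here. As a rough, file-level approximation of a sequential read test, the sketch below reads a file front to back in fixed 1MB blocks and reports MB/s. The block size and the throwaway test file are assumptions, and the OS page cache can inflate the figure for a file that was just written, so a real run would point at a file already sitting on the USB drive.

```python
import os
import tempfile
import time


def sequential_read_mb_s(path: str, block_size: int = 1024 * 1024) -> float:
    """Read a file front to back in fixed-size blocks and return throughput in MB/s."""
    total = 0
    start = time.perf_counter()
    # buffering=0 avoids Python's own buffer layer; the OS cache still applies.
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:  # empty read means end of file
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    return (total / 1_000_000) / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Throwaway 16MB file; on a real test, use a file on the drive under test.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(16 * 1024 * 1024))
        path = tmp.name
    try:
        print(f"{sequential_read_mb_s(path):.1f} MB/s")
    finally:
        os.remove(path)
```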

I’m starting to feel like a broken record here, but ASUS has managed to keep on top of the pack, with GIGABYTE keeping right up with it. Similar to our SATA tests, MSI’s board falls behind Intel once again.

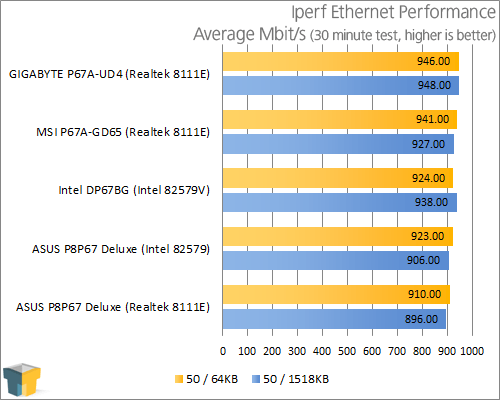

Ethernet Performance

To some, a LAN port is a LAN port, but for those who take networking seriously, there can be major differences between one chipset and another. While some may handle a 100GB transfer before slowing to a crawl, another might not even reach 20GB, and trust me, we’ve seen that sort of difference in our personal machines here.

Benchmarking Ethernet performance and getting reliable results is tough, and we don’t believe there is a “perfect” methodology for it, as many variables can come into play from run to run. Plus, it’s difficult to replicate the kind of stress under which a NIC might stall: the first five runs might be fine, but it could be the sixth that dies out.
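
One common way to cope with run-to-run variation is to repeat each test and report the spread alongside the average. The sketch below assumes per-run throughput figures in MB/s; the sample values are hypothetical, not results from this roundup. The slowest run is kept as well, since a single bad run is exactly the kind of behavior described above.

```python
import statistics


def summarize_runs(mb_per_s: list[float]) -> dict[str, float]:
    """Summarize repeated runs: mean, sample stdev, spread as % of mean, worst run."""
    if len(mb_per_s) < 2:
        raise ValueError("need at least two runs to estimate variation")
    mean = statistics.mean(mb_per_s)
    stdev = statistics.stdev(mb_per_s)  # sample (n - 1) standard deviation
    return {
        "mean": mean,
        "stdev": stdev,
        "cv_percent": 100 * stdev / mean,  # coefficient of variation
        "min": min(mb_per_s),
    }


# Hypothetical five-run result for one board.
print(summarize_runs([110.0, 112.0, 108.0, 111.0, 109.0]))
```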

Our test server is my personal PC, featuring Intel’s Core i7-980X processor, 12GB of Kingston RAM, Windows 7 Ultimate x64 and Intel’s server-grade I340 network card. A network card such as this is an important piece of the equation: it’s “overkill” in most regards, meaning that it won’t become the bottleneck when testing on-board solutions.

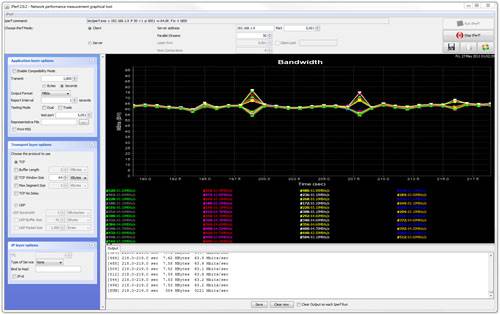

To both stress-test and benchmark our network, we use a cross-platform tool called “Iperf”, along with a Java-based GUI front-end called JPerf for easier testing. We run two different tests here, one using 64KB sized packets (a standard test) and another using 1518KB packets (typical of HTTP transfers). Each of these tests opens 50 connections between the client and server, simulating a true server-like workload.
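
Iperf’s own code isn’t reproduced here, but the idea behind a multi-connection test (many parallel TCP streams from a client to a server, with aggregate throughput measured at the end) can be sketched in plain Python. This minimal version runs over the loopback interface, so it exercises the OS network stack rather than a physical NIC. The 50-stream default mirrors the test above; the per-stream byte count and 64KB receive buffer are assumptions. In Iperf itself, the number of parallel streams is set with the client’s `-P` option.

```python
import socket
import threading
import time


def multi_stream_throughput(streams: int = 50, bytes_per_stream: int = 1_000_000,
                            bufsize: int = 64 * 1024) -> tuple[int, float]:
    """Push data over `streams` parallel TCP connections; return (total bytes, MB/s)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(streams)
    port = server.getsockname()[1]
    received = [0] * streams  # one counter per connection, so no locking is needed
    drains: list[threading.Thread] = []

    def drain(conn: socket.socket, idx: int) -> None:
        # Server side: count bytes until the client closes its end.
        with conn:
            while data := conn.recv(bufsize):
                received[idx] += len(data)

    def accept_all() -> None:
        for idx in range(streams):
            conn, _ = server.accept()
            t = threading.Thread(target=drain, args=(conn, idx))
            t.start()
            drains.append(t)

    payload = bytes(bytes_per_stream)  # shared zero-filled buffer

    def send() -> None:
        # Client side: one connection per thread, like one parallel stream.
        with socket.create_connection(("127.0.0.1", port)) as sock:
            sock.sendall(payload)

    acceptor = threading.Thread(target=accept_all)
    acceptor.start()
    start = time.perf_counter()
    clients = [threading.Thread(target=send) for _ in range(streams)]
    for t in clients:
        t.start()
    for t in clients:
        t.join()
    acceptor.join()  # after this, `drains` holds every receiver thread
    for t in drains:
        t.join()
    elapsed = time.perf_counter() - start
    server.close()
    total = sum(received)
    return total, total / 1_000_000 / elapsed


if __name__ == "__main__":
    total, rate = multi_stream_throughput()
    print(f"{total} bytes, {rate:.1f} MB/s aggregate")
```

Giving each connection its own thread and its own counter keeps the sketch simple; a real tool would also report per-interval rates so that a stall mid-run is visible.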

Note: Performance is just half the equation where NICs are concerned. While one might out-perform another, that doesn’t tell us anything with regard to the predicted lifespan of a given model. Based on both Jamie’s and my personal experience, we’ve concluded that, overall, Intel’s NICs are much more reliable over time.

While ASUS has consistently out-performed all of the other boards in our other tests, it falls a bit short where network performance is concerned. The results above also reveal something interesting: the NIC itself doesn’t always have the final say in performance, as GIGABYTE’s implementation of Realtek’s 8111E out-performed ASUS’ Intel-based implementation by 36~52MB/s.
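
To put that gap in the context of a gigabit link, multiply by 8 bits per byte. This assumes decimal units (1MB = 10^6 bytes) and ignores Ethernet and TCP/IP overhead, so the usable ceiling in practice is somewhat below the theoretical 125MB/s:

$$
\begin{aligned}
1000\ \text{Mbit/s} \div 8\ \tfrac{\text{bit}}{\text{byte}} &= 125\ \text{MB/s} \\
36\ \text{MB/s} \times 8\ \tfrac{\text{bit}}{\text{byte}} &= 288\ \text{Mbit/s} \approx 29\%\ \text{of the link} \\
52\ \text{MB/s} \times 8\ \tfrac{\text{bit}}{\text{byte}} &= 416\ \text{Mbit/s} \approx 42\%\ \text{of the link}
\end{aligned}
$$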

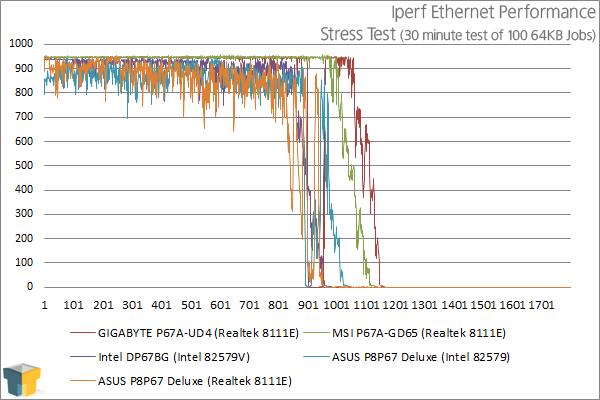

To help determine whether one NIC is more durable than another, we run a stress-test using the same tool as above, but double the number of jobs in order to see which NIC fails first. True server-grade NICs, such as our I340, can handle this kind of stress for quite a while, but no on-board solution we’ve tested up to this point has made it past the 20-minute mark.

Interestingly, both Intel NICs stalled before the Realtek ones, affecting both the Intel and ASUS boards. The Realtek-equipped GIGABYTE and MSI boards not only lasted the longest, but also sustained near-1Gbit/s speeds right up until they failed.
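
Deciding when a NIC has “stalled” during a long stress run is a judgment call, and the text above doesn’t define it numerically. As a hypothetical sketch, assuming throughput is sampled at fixed intervals as (seconds, Mbit/s) pairs, a stall can be called when throughput stays below some fraction of the baseline for several samples in a row. The 50% threshold and three-sample window are arbitrary assumptions, and the sample data is made up for illustration.

```python
from typing import Optional, Sequence, Tuple


def first_stall(samples: Sequence[Tuple[float, float]], baseline_mbit: float,
                drop_ratio: float = 0.5, consecutive: int = 3) -> Optional[float]:
    """Return the time (s) at which throughput first stays below
    drop_ratio * baseline_mbit for `consecutive` samples in a row, else None."""
    threshold = drop_ratio * baseline_mbit
    run_start: Optional[float] = None
    run_len = 0
    for t, mbit in samples:
        if mbit < threshold:
            if run_len == 0:
                run_start = t  # remember where this low stretch began
            run_len += 1
            if run_len >= consecutive:
                return run_start
        else:
            run_len = 0  # a brief dip that recovers is not counted as a stall
    return None


# Hypothetical samples taken once a minute.
samples = [(0, 940), (60, 935), (120, 930), (180, 120), (240, 0), (300, 0)]
print(first_stall(samples, baseline_mbit=940))
```

Requiring several consecutive low samples keeps a single momentary dip from being mistaken for a failure, which matters when comparing boards that hold near-1Gbit/s speeds until they suddenly drop out.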
