NVIDIA Announces GeForce RTX 2080 GPUs: Now With Ray Tracing

After months of some of the worst-kept secrets in NVIDIA's history, the new Turing-based GeForce RTX GPUs are set to bring real-time ray tracing to the masses. With the launch of the RTX 2080 Ti, 2080, and 2070, we'll get to see if the wait was worth it. Dedicated ray tracing cores, Tensor cores, and deep learning on a gaming card: this is some truly exciting stuff coming out of Gamescom 2018.

People have been waiting for months, but it's finally arrived at Gamescom 2018 in Germany. NVIDIA announced its latest generation of gaming GPUs just a week after unveiling the Quadro RTX at SIGGRAPH 2018.

They are not 11-series, and they are not GTX, but they are still GeForce. These are NVIDIA's GeForce RTX 2080 and 2080 Ti GPUs, built on the Turing architecture and coming very soon.

The launch of the RTX cards is quite different from previous generations, at least as far as gaming is concerned, as new hardware features trickle down from their enterprise siblings.

This will end up being a dramatic change to gaming pipelines in the years to come, as these are the first consumer cards with dedicated ray tracing hardware in the form of the new RT cores, alongside Tensor cores for the AI and deep learning work used in accelerated rendering.

NVIDIA made its big RTX API announcement a few months ago, alongside the launch of DirectX Raytracing (DXR). What wasn't expected was dedicated hardware, which arrived when the Quadro RTX cards were announced last week. For the last few generations, NVIDIA has focused most of its efforts on CUDA cores, its general-purpose processing cores used for both graphics and compute. More cores were added, along with more ROPs and TMUs, bigger frame buffers, faster memory, the usual stuff, but at the architectural level it was mainly a matter of adding more and more as manufacturing processes shrank.

Then last year we saw the introduction of Tensor cores with Volta. These are essentially very fast accelerators for low-precision matrix math, used extensively in deep learning applications. For gaming, they offered very little benefit, at least back then. But those Tensor cores started to be used for graphics too, with AI trained to denoise renders for faster previews.
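To make "low-precision math" concrete: a Volta Tensor core performs a small fused matrix multiply-accumulate, D = A × B + C on 4×4 tiles, taking FP16 inputs and accumulating at FP32. The pure-Python sketch below emulates that numeric behavior so you can see how much precision FP16 inputs give up. It is an illustration of the arithmetic only, not how the hardware is actually programmed (that happens through CUDA libraries), and the helper names are our own.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def mma_4x4(a, b, c):
    """Emulate D = A x B + C on 4x4 tiles with FP16 inputs and FP32 accumulation."""
    d = [[0.0] * 4 for _ in range(4)]
    for i in range(4):
        for j in range(4):
            acc = to_fp32(c[i][j])
            for k in range(4):
                # Inputs are rounded to FP16; the running sum is kept at FP32 precision.
                acc = to_fp32(acc + to_fp16(a[i][k]) * to_fp16(b[k][j]))
            d[i][j] = acc
    return d

if __name__ == "__main__":
    print(to_fp16(0.1))  # FP16 keeps only about 3 significant decimal digits
    print(to_fp32(0.1))  # FP32 keeps about 7
```

That loss of precision is acceptable for neural networks, which is why the same hardware can churn through far more operations per second than full-precision FP32 math.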

Then last week we saw the introduction of RT cores, dedicated hardware for ray tracing. These pull double duty: not only do they accelerate professional rendering in applications such as Autodesk Maya, Arnold, and V-Ray, but they also tie in with the RTX API that NVIDIA showcased with the help of Epic's Unreal Engine and that fantastic real-time render of a Star Wars scene. The RT cores are then coupled with the Tensor cores to guesstimate the finished render, and boom: 30 FPS ray tracing from a single Quadro RTX 6000.
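What do RT cores actually speed up? NVIDIA describes them as accelerating bounding volume hierarchy (BVH) traversal and ray/triangle intersection tests. The sketch below shows, in plain Python, the kind of per-ray test being offloaded, using the well-known Möller-Trumbore intersection algorithm. It illustrates the math only and is not NVIDIA's hardware implementation; the function names are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3, eps: float = 1e-9) -> Optional[float]:
    """Möller-Trumbore test: distance t along the ray to the hit, or None on a miss."""
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    p = cross(direction, edge2)
    det = dot(edge1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, edge1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin

if __name__ == "__main__":
    tri = ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
    print(ray_triangle((0.0, 0.0, -1.0), (0.0, 0.0, 1.0), *tri))
```

A real frame fires millions of rays, each tested against a scene of millions of triangles, with the BVH pruning most candidates; running that loop in fixed-function hardware rather than on CUDA cores is the whole point of the RT core.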

Well, soon enough we're going to start seeing that same tech in games coming out over the next couple of months, including Metro Exodus, Shadow of the Tomb Raider, and Battlefield V. But first, let's take a look at the specs of the three announced GeForce RTX cards, listed alongside their Quadro RTX and GTX 10-series counterparts for comparison.

| Model | CUDA Cores | Boost Clock | Ray Tracing | Memory | Memory Bus | Memory Bandwidth | SRP |
|---|---|---|---|---|---|---|---|
| Quadro RTX 6000 | 4608 | 1730 MHz* | 10 GR/s | 24GB | 384-bit GDDR6 | 672 GB/s | $$$$$ |
| GeForce RTX 2080 Ti | 4352 | 1545 MHz (1635 MHz FE) | 10 GR/s | 11GB @ 14Gbps | 352-bit GDDR6 | 616 GB/s | $999 |
| GeForce GTX 1080 Ti | 3584 | 1582 MHz | 1.21 GR/s | 11GB @ 11Gbps | 352-bit GDDR5X | 484 GB/s | $699 |
| Quadro RTX 5000 | 3072 | 1630 MHz* | 6 GR/s | 16GB | 256-bit GDDR6 | 448 GB/s | $$$$ |
| GeForce RTX 2080 | 2944 | 1710 MHz (1800 MHz FE) | 8 GR/s | 8GB @ 14Gbps* | 256-bit GDDR6 | 448 GB/s | $699 |
| GeForce GTX 1080 | 2560 | 1733 MHz | <1 GR/s | 8GB @ 10Gbps | 256-bit GDDR5X | 320 GB/s | $599 |
| GeForce RTX 2070 | 2304 | 1620 MHz (1710 MHz FE) | 6 GR/s | 8GB | 256-bit GDDR6 | 448 GB/s | $499 |
| GeForce GTX 1070 | 1920 | 1683 MHz | <1 GR/s | 8GB @ 8Gbps | 256-bit GDDR5 | 256 GB/s | $379 |

*Unconfirmed
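The bandwidth column isn't an independent spec: it follows from the bus width and the per-pin data rate, divided by 8 bits per byte. The quick check below reproduces several rows of the table. The 14Gbps rate for the Quadro RTX 6000 is our assumption (the table doesn't list its data rate, though 672 GB/s implies it), and the RTX 2080's 14Gbps figure is itself marked unconfirmed.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width x per-pin data rate / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8

cards = {
    "GeForce RTX 2080 Ti": (352, 14.0),
    "GeForce RTX 2080": (256, 14.0),    # 14Gbps is unconfirmed for this card
    "GeForce GTX 1080 Ti": (352, 11.0),
    "Quadro RTX 6000": (384, 14.0),     # assumed 14Gbps GDDR6
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {bandwidth_gb_s(bus, rate):.0f} GB/s")
```

This is also why the RTX 2080 matches the Quadro RTX 5000 on bandwidth: same 256-bit bus, same GDDR6 speed.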

There's a lot of information to take in, and some new metrics to consider. Ray tracing performance is measured in gigarays per second (GR/s), and NVIDIA also introduced a measure of the GPU's overall performance that takes RT, Tensor, and CUDA cores into account: RTX Operations Per Second, or RTX-OPS. The RTX 2080 Ti achieves 78T RTX-OPS, or 78 trillion mixed-mode operations per second.

Turing can now perform integer and floating-point operations at the same time, hence the absurd number of operations per second the new architecture can claim (but remember that much of this is low-precision math).
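Gigarays per second are easier to reason about as a per-pixel budget. Dividing the quoted GR/s by the number of pixels drawn per second gives the theoretical maximum number of rays each pixel could get per frame. This is a back-of-the-envelope sketch using NVIDIA's peak figures; real games also spend time on shading, denoising, and rasterization, so the practical budget will be lower.

```python
def rays_per_pixel(gigarays_per_s: float, width: int, height: int, fps: float) -> float:
    """Peak rays available per pixel per frame, ignoring shading and all other work."""
    rays_per_second = gigarays_per_s * 1e9
    pixels_per_second = width * height * fps
    return rays_per_second / pixels_per_second

for card, grs in [("RTX 2080 Ti", 10.0), ("RTX 2080", 8.0), ("RTX 2070", 6.0)]:
    for w, h in [(1920, 1080), (3840, 2160)]:
        print(f"{card} @ {w}x{h}, 60 FPS: {rays_per_pixel(grs, w, h, 60):.1f} rays/pixel")
```

At 1080p and 60 FPS, the RTX 2080 Ti's 10 GR/s works out to roughly 80 rays per pixel per frame, dropping to about 20 at 4K. That helps explain why the Tensor cores' denoising matters: a handful of rays per pixel, cleaned up by AI, is what makes real-time frame rates plausible.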

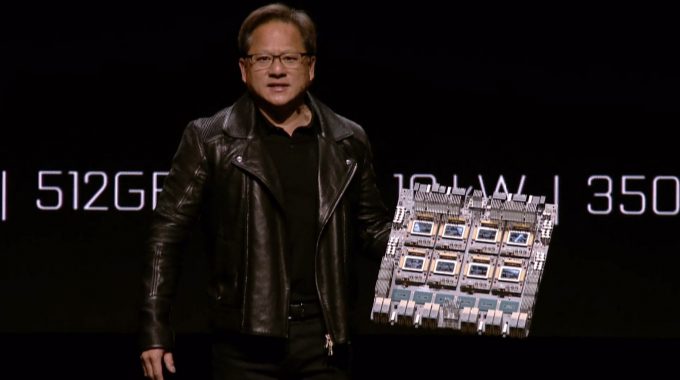

But there's a lot of back-end software going on with RTX as well, as NVIDIA also announced technologies that tie in with game engines. The DGX-2 was briefly shown, an impressive beast of a server we've talked about in the past that houses 16 Tesla V100 (GV100) GPUs used for deep learning. This hardware is set to work improving the rendering pipeline of graphics technologies and the image quality of games.

NVIDIA NGX (Neural Graphics Framework) is a pre-processing system that takes images and processes them on one of these DGX supercomputers, building a neural network that learns about image quality and upscaling. That trained network can then be bundled into a game to effectively upscale image quality in real time as a post-processing effect. This creates yet another anti-aliasing mode, DLSS (Deep Learning Super Sampling), which makes better AA predictions to smooth out edges; NVIDIA claims it does better than even Temporal AA (TAA).

Another hardware addition is the new VirtualLink interface, a USB Type-C connector for virtual and augmented reality headsets that combines data, display, and power in a single cable, something the industry has sorely needed for a long time.

The GeForce RTX family will initially launch with three GPUs, the 2070, 2080, and 2080 Ti, in both standard versions and pre-overclocked Founders Editions (FE). I think one of the biggest surprises is the new reference cooler. Gone is the single-fan blower-style design, replaced by a dual-fan cooler. This is a design change that has been massively overdue. The reference boards also use 13 power phases, not that the Pascal design was lacking in that department.

One oddity, though, is that NVIDIA has replaced SLI with the NVLink connector it brought over from its professional cards. NVLink offers 50x the bandwidth that was available with SLI and is used for direct sharing of VRAM, not just cloning, which means you should technically be able to double the usable VRAM with an extra card. How this will work in games, we don't know, and NVIDIA may not even allow that functionality on its GeForce cards, so we'll have to wait and see.
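The difference between cloning and sharing is easy to put in numbers. With SLI-style mirroring, every card holds a full copy of the same data, so two 11GB cards still give you 11GB; if NVLink pooling were exposed on GeForce, the capacities could in principle add up. The helper below is a hypothetical illustration of that upper bound only; it says nothing about whether NVIDIA or game engines will actually support pooling.

```python
def usable_vram_gb(per_card_gb: float, num_cards: int, pooled: bool) -> float:
    """Upper bound on usable frame buffer for a multi-GPU setup.

    Mirrored (SLI-style): each card keeps a full copy, so capacity doesn't grow.
    Pooled (hypothetical NVLink sharing): capacities add, before any duplicated data.
    """
    if num_cards < 1:
        raise ValueError("num_cards must be at least 1")
    return per_card_gb * num_cards if pooled else per_card_gb

print(usable_vram_gb(11, 2, pooled=False))  # two RTX 2080 Ti cards, mirrored
print(usable_vram_gb(11, 2, pooled=True))   # two RTX 2080 Ti cards, pooled
```

In practice some assets would likely still be duplicated on both cards, so the pooled figure is a ceiling rather than a promise.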

What was largely missing, though, was how these cards perform in the other 99% of games on the market: the DX11 games most of us play, the ones without the fancy new ray tracing effects. The raw CUDA core count is still up over the previous generation, so there should be greater performance in most standard games. The new GDDR6 memory is also faster, so while most of the focus has been on ray tracing, normal games should still run faster overall. By how much, we'll have to wait and see.
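For a rough sense of the on-paper gain, the usual theoretical FP32 figure is CUDA cores × 2 FLOPs (one fused multiply-add) × boost clock. The sketch below applies that formula to the reference (non-FE) boost clocks from the spec table. Treat the ratios as a spec-sheet comparison, not a performance prediction: they ignore architectural changes, memory bandwidth, drivers, and how well each game scales.

```python
def fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Theoretical peak FP32 throughput, counting one FMA (2 FLOPs) per core per clock."""
    return cuda_cores * 2 * boost_mhz * 1e6 / 1e12

# Reference (non-FE) boost clocks from the spec table.
comparisons = {
    "RTX 2080 Ti vs GTX 1080 Ti": ((4352, 1545), (3584, 1582)),
    "RTX 2080 vs GTX 1080": ((2944, 1710), (2560, 1733)),
}

for label, (new, old) in comparisons.items():
    new_tf, old_tf = fp32_tflops(*new), fp32_tflops(*old)
    print(f"{label}: {new_tf:.2f} vs {old_tf:.2f} TFLOPS ({new_tf / old_tf:.2f}x on paper)")
```

By this crude measure the uplift is in the mid-teens to high-teens percent before the faster GDDR6 and any Turing architectural improvements are factored in, which is why real benchmarks will matter.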

So when can you get these cards? Pre-orders are open on NVIDIA's website, with the official release on September 20th. This is definitely exciting tech, not just for gaming, but also for the prospect of deep learning on a home computer without forking over huge sums of money for a TITAN V. If you happen to be at or heading to Gamescom in Germany, try to hit up NVIDIA's booth to see the RTX cards in action. More details will come over the next couple of days as we ask lots of questions and find out what makes these cards tick!
