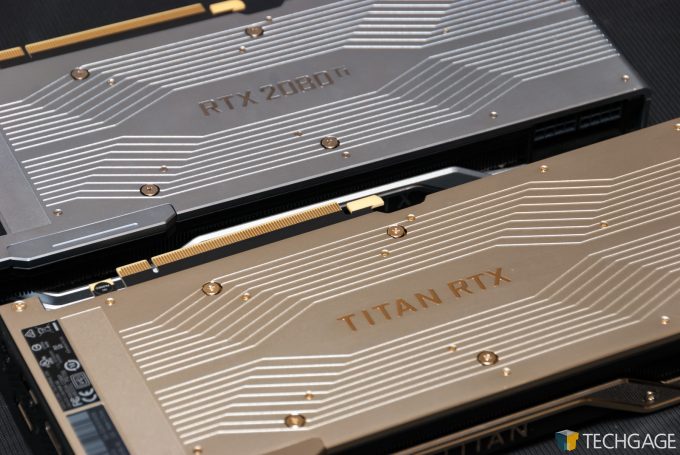

NVIDIA TITAN RTX Workstation Performance Review

NVIDIA’s TITAN RTX means business – and a lot of it. This jack-of-all-trades graphics card caters to those with serious visual computing needs, whether it be designing and rendering 3D scenes, or poring over repositories of photos or other data with deep-learning work.

Page 1 – Introduction & Testing References

NVIDIA’s TITAN series of graphics cards has been an interesting one since the launch of the original in 2013. That Kepler-based GTX TITAN model peaked at 4.5 TFLOPS single-precision (FP32), performance that was boosted to 5.1 TFLOPS with the release of the TITAN Black the following year.

Fast-forward to the present day, where we now have the TITAN RTX, boasting 16.3 TFLOPS of single-precision, and 32.6 TFLOPS of half-precision (FP16). Double-precision (FP64) used to be standard fare on the earlier TITANs, but today, you’ll need the Volta-based TITAN V for unlocked performance (6.1 TFLOPS), or AMD’s Radeon VII for partially unlocked performance (3.4 TFLOPS).

Lately, half-precision has garnered a lot of attention from the ProViz market, since it’s ideal for deep-learning and AI, fields that are growing in popularity at a ridiculously quick pace. Add specifically tuned Tensor cores to the mix, and deep-learning performance on Turing becomes truly impressive.
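Turing’s Tensor cores are built around a fused matrix multiply-accumulate, D = A × B + C, on small 4×4 tiles, taking FP16 inputs and accumulating in FP16 or FP32. The Python sketch below emulates one such operation in software, using the standard library’s `struct` half-precision (`'e'`) and single-precision (`'f'`) formats for rounding. The function name `tensor_core_mma` is hypothetical, and the sketch models only the arithmetic, not how the hardware schedules it.

```python
import struct
from typing import List

Matrix = List[List[float]]


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def tensor_core_mma(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Emulate D = A x B + C on 4x4 tiles: FP16 inputs, FP32 accumulation."""
    n = 4
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = to_fp32(c[i][j])  # accumulator starts from C in FP32
            for k in range(n):
                # Inputs are rounded to FP16; their product is exact in FP32,
                # so only the running sum is rounded back to FP32.
                acc = to_fp32(acc + to_fp16(a[i][k]) * to_fp16(b[k][j]))
            d[i][j] = acc
    return d


a = [[0.5] * 4 for _ in range(4)]
b = [[2.0] * 4 for _ in range(4)]
c = [[1.0] * 4 for _ in range(4)]
print(tensor_core_mma(a, b, c)[0][0])  # 4 x (0.5 x 2.0) + 1.0 = 5.0
```

Accumulating in FP32 rather than FP16 is what keeps long sums of small products from losing precision, which is why FP32 accumulation is the usual choice for training.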

Tensor cores are not the only party trick the TITAN RTX has. Like the rest of the RTX line (on both the gaming and pro side), the TITAN RTX includes RT cores, which accelerate real-time ray tracing workloads. Developers need to specifically support these cores through APIs such as DXR and VKRay. While support for NVIDIA’s technology started off tepid, it has grown a lot since RTX was unveiled at SIGGRAPH last year.
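RT cores offload two jobs to dedicated hardware: traversing a bounding volume hierarchy (BVH) and testing rays against triangles. To give a sense of the per-ray math involved, here’s a software ray/triangle test using the textbook Möller–Trumbore algorithm. This is an illustrative Python sketch, not NVIDIA’s hardware implementation, and the helper names are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Möller–Trumbore test: distance t along the ray to the hit, or None."""
    edge1, edge2 = sub(v1, v0), sub(v2, v0)
    pvec = cross(direction, edge2)
    det = dot(edge1, pvec)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, edge1)
    v = dot(direction, qvec) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, qvec) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin


tri = ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
print(ray_triangle((0.0, 0.0, -1.0), (0.0, 0.0, 1.0), *tri))  # 1.0 (hit)
print(ray_triangle((5.0, 5.0, -1.0), (0.0, 0.0, 1.0), *tri))  # None (miss)
```

A real-time ray tracer has to resolve billions of rays per second, with BVH traversal deciding which triangles each ray even needs to be tested against, which is why moving this work into fixed-function hardware pays off.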

At E3 in June, a handful of games had ray tracing-related announcements, including Watch_Dogs: Legion, Cyberpunk 2077, Call of Duty: Modern Warfare, and of course, Quake II RTX. On the design side, some developers have already released RTX-accelerated solutions, while many more are in the works. NVIDIA has been talking a lot lately about the Adobes and Autodesks of the world helping to grow the list of RTX-infused software. We wouldn’t be surprised if SIGGRAPH brought more RTX goodness again this year.

For deep-learning, the TITAN RTX’s FP16 performance is strong on its own, but a few perks onboard help take things to the next level. The Tensor cores provide much of the acceleration, but the ability to use mixed precision is another big part. With it, a small amount of critical data (such as the master copy of the model’s weights) is kept in single-precision, while the bulk of the number crunching happens in half-precision. Combined, these features can boost training performance by up to 3x over the base GPU.
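Keeping part of the data in single-precision matters because FP16 has only 10 explicit mantissa bits, so near 1.0 its values are spaced roughly 0.001 apart or less, and small weight updates can round away entirely. The sketch below, in plain Python with `struct` providing FP16/FP32 rounding, applies 100 identical gradient-descent steps to a weight stored only in FP16 versus one kept as an FP32 copy. The helper names and the learning-rate and gradient values are hypothetical, and real mixed-precision training also relies on techniques such as loss scaling that this omits.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def train(steps: int, lr: float, grad: float, fp32_master: bool) -> float:
    """Apply `steps` identical gradient-descent updates to a weight starting at 1.0."""
    weight = 1.0
    for _ in range(steps):
        # The update itself is computed in half precision in both modes.
        update = to_fp16(lr * grad)
        if fp32_master:
            # Mixed precision: the FP32 copy absorbs each small update.
            weight = to_fp32(weight - update)
        else:
            # Pure FP16: the update is below half the FP16 spacing near 1.0,
            # so the subtraction rounds straight back to the old value.
            weight = to_fp16(weight - update)
    return weight


print("FP16 only:  ", train(100, lr=1e-3, grad=0.1, fp32_master=False))
print("FP32 master:", train(100, lr=1e-3, grad=0.1, fp32_master=True))
```

In this sketch the FP16-only weight never moves off 1.0, while the FP32 copy drifts down to roughly 0.99, which is the behavior mixed precision is designed to preserve.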

Also notable for Turing is support for concurrent integer and floating-point operations, which allows games (or other software) to execute INT and FP instructions in parallel without tripping over each other in the pipeline. NVIDIA has previously noted that in games like Shadow of the Tomb Raider, a sample set of 100 instructions included 62 FP and 38 INT, and that this concurrency directly improves performance as a result.
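As a back-of-the-envelope model of why concurrency helps, assume (hypothetically) that each pipe issues one instruction per cycle with no dependencies or stalls. Serial issue then costs FP + INT cycles, while concurrent issue costs only as long as the busier pipe. Applied to NVIDIA’s 62 FP / 38 INT example, that gives an idealized upper bound, not a measured result; the function name is hypothetical.

```python
def issue_cycles(fp_ops: int, int_ops: int, concurrent: bool) -> int:
    """Idealized cycle count: one instruction per pipe per cycle, no stalls."""
    if concurrent:
        # INT and FP pipes work side by side; the busier pipe sets the pace.
        return max(fp_ops, int_ops)
    # Shared pipeline: INT and FP instructions take turns.
    return fp_ops + int_ops


serial = issue_cycles(62, 38, concurrent=False)
parallel = issue_cycles(62, 38, concurrent=True)
print(f"{serial} vs {parallel} cycles, speedup {serial / parallel:.2f}x")
```

This prints `100 vs 62 cycles, speedup 1.61x`. Real shaders have dependencies, memory stalls, and other bottlenecks, so actual gains will be smaller than this ceiling.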

Another important feature of TITAN RTX is NVLink support, which essentially combines the memory pools of two cards, resulting in a single framebuffer that can be used for the biggest projects. Since the workloads this card targets generally scale well across multiple GPUs anyway, it’s the true memory pooling that’s going to offer the greatest benefit here. Games that can take advantage of multi-GPU would also benefit from two cards and this connector.

Because it’s a feature exclusive to these RTX GPUs right now, it’s worth mentioning that NVIDIA also includes a VirtualLink port at the back, allowing you to plug in your HMD for VR or, at worst, use it as a full-powered USB-C port for data transfer or phone charging.

With all of that covered, let’s take a quick look at the overall current NVIDIA workstation stack:

NVIDIA’s Quadro & TITAN Workstation GPU Lineup

| GPU | Cores | Base MHz | Peak FP32 | Memory | Bandwidth | TDP | Price |
|---|---|---|---|---|---|---|---|
| GV100 | 5120 | 1200 | 14.9 TFLOPS | 32 GB HBM2 (ECC) | 870 GB/s | 185W | $8,999 |
| RTX 8000 | 4608 | 1440 | 16.3 TFLOPS | 48 GB GDDR6 (ECC) | 624 GB/s | ???W | $5,500 |
| RTX 6000 | 4608 | 1440 | 16.3 TFLOPS | 24 GB GDDR6 (ECC) | 624 GB/s | 295W | $4,000 |
| RTX 5000 | 3072 | 1350 | 11.2 TFLOPS | 16 GB GDDR6 (ECC) | 448 GB/s | 265W | $2,300 |
| RTX 4000 | 2304 | 1005 | 7.1 TFLOPS | 8 GB GDDR6 | 416 GB/s | 160W | $900 |
| TITAN RTX | 4608 | 1350 | 16.3 TFLOPS | 24 GB GDDR6 | 672 GB/s | 280W | $2,499 |
| TITAN V | 5120 | 1200 | 14.9 TFLOPS | 12 GB HBM2 | 653 GB/s | 250W | $2,999 |
| P6000 | 3840 | 1417 | 11.8 TFLOPS | 24 GB GDDR5X (ECC) | 432 GB/s | 250W | $4,999 |
| P5000 | 2560 | 1607 | 8.9 TFLOPS | 16 GB GDDR5X (ECC) | 288 GB/s | 180W | $1,999 |
| P4000 | 1792 | 1227 | 5.3 TFLOPS | 8 GB GDDR5 | 243 GB/s | 105W | $799 |
| P2000 | 1024 | 1370 | 3.0 TFLOPS | 5 GB GDDR5 | 140 GB/s | 75W | $399 |
| P1000 | 640 | 1354 | 1.9 TFLOPS | 4 GB GDDR5 | 80 GB/s | 47W | $299 |
| P620 | 512 | 1354 | 1.4 TFLOPS | 2 GB GDDR5 | 80 GB/s | 40W | $199 |
| P600 | 384 | 1354 | 1.2 TFLOPS | 2 GB GDDR5 | 64 GB/s | 40W | $179 |
| P400 | 256 | 1070 | 0.6 TFLOPS | 2 GB GDDR5 | 32 GB/s | 30W | $139 |

Architecture: P = Pascal; V = Volta; RTX = Turing.

The TITAN RTX matches the Quadro RTX 6000 and 8000 for having the highest number of cores in the Turing lineup. NVIDIA says the TITAN RTX is about 3 TFLOPS faster in FP32 than the RTX 2080 Ti, and fortunately, we have results for both cards covering a wide range of tests to see how they compare.
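Peak FP32 figures like these follow the usual peak-throughput formula: CUDA cores × 2 operations per clock (one fused multiply-add) × clock speed. The TITAN RTX’s 16.3 TFLOPS only works out at its 1770 MHz boost clock, not the 1350 MHz base clock listed in the specs table. The boost clocks below (1770 MHz for the TITAN RTX, 1545 MHz for a reference RTX 2080 Ti with its 4352 cores) are NVIDIA’s published figures rather than values from this article’s tables, and `peak_tflops` is a hypothetical helper.

```python
def peak_tflops(cores: int, boost_mhz: float, ops_per_clock: int = 2) -> float:
    """Peak throughput = cores x ops per clock x clock speed, in TFLOPS."""
    return cores * ops_per_clock * boost_mhz * 1e6 / 1e12


titan_rtx = peak_tflops(4608, 1770)    # TITAN RTX at its boost clock
rtx_2080_ti = peak_tflops(4352, 1545)  # reference RTX 2080 Ti at its boost clock

print(f"TITAN RTX FP32:   {titan_rtx:.1f} TFLOPS")
print(f"TITAN RTX FP16:   {titan_rtx * 2:.1f} TFLOPS")  # Turing runs FP16 at 2x FP32
print(f"RTX 2080 Ti FP32: {rtx_2080_ti:.1f} TFLOPS")
print(f"Gap:              {titan_rtx - rtx_2080_ti:.1f} TFLOPS")
```

The result lands on the 16.3 and 32.6 TFLOPS figures quoted earlier, and the roughly 2.9 TFLOPS gap to the reference 2080 Ti lines up with NVIDIA’s “about 3 TFLOPS” claim. Real clocks vary with cooling and power limits, so these are ceilings rather than sustained numbers.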

What’s not seen in the specs table above is the actual performance of the ray tracing and deep-learning components. This next table helps clear some of that up:

NVIDIA’s Quadro & TITAN – RTX Performance

| GPU | RT Cores | RTX-OPS | Rays Cast (Giga Rays/s) | FP16 (TFLOPS) | INT8 (TOPS) | Deep-learning (TFLOPS) |
|---|---|---|---|---|---|---|
| TITAN RTX | 72 | 84T | 11 | 32.6 | 206.1 | 130.5 |
| RTX 8000 | 72 | 84T | 10 | 32.6 | 206.1 | 130.5 |
| RTX 6000 | 72 | 84T | 10 | 32.6 | 206.1 | 130.5 |
| RTX 5000 | 48 | 62T | 8 | 22.3 | 178.4 | 89.2 |
| RTX 4000 | 36 | 43T | 6 | 14.2 | 28.5 | 57 |

You’ll notice that the TITAN RTX has a higher “rays cast” spec than the top Quadros, possibly thanks to higher clocks. The other specs are identical across the top three GPUs, with the expected cutbacks as we move down the stack. Currently, the Quadro RTX 4000 (roughly a GeForce RTX 2070 equivalent) is NVIDIA’s lowest-end current-gen Quadro. Again, SIGGRAPH is almost upon us, so NVIDIA could have a hardware surprise in store; perhaps a Quadro equivalent of the RTX 2060.

When the RTX 2080 Ti already offers so much performance, who exactly is the TITAN RTX for? NVIDIA is targeting it largely at researchers, but it doubles as one of the fastest ProViz cards on the market. It could also appeal to those who simply want the fastest GPU solution going, not to mention a huge 24GB framebuffer. 24GB might be overkill for a lot of current visualization work, but for deep-learning, it provides plenty of breathing room.
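To put 24GB in deep-learning terms, a common rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per model parameter: FP16 weights and gradients (2 bytes each), plus an FP32 master copy of the weights and two FP32 optimizer states (4 bytes each). The sketch below applies that rule while deliberately ignoring activations, batch size, and framework overhead, so treat its output as a lower bound. The function names and the 1-billion-parameter model are hypothetical, and card capacities are treated as GiB.

```python
# FP16 weights + FP16 grads + FP32 master weights + two FP32 Adam states
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4


def training_gib(params: int) -> float:
    """Rough lower bound on training memory in GiB (activations excluded)."""
    return params * BYTES_PER_PARAM / 2**30


def fits(params: int, vram_gib: float) -> bool:
    """True if the rule-of-thumb estimate fits in the given framebuffer."""
    return training_gib(params) <= vram_gib


model = 1_000_000_000  # hypothetical 1B-parameter model
print(f"~{training_gib(model):.1f} GiB needed")
for name, vram in [("RTX 2080 Ti", 11), ("TITAN RTX", 24)]:
    print(name, "fits" if fits(model, vram) else "does not fit")
```

Under these assumptions, a model of that size would need around 14.9 GiB before activations, which overflows an 11GB card but leaves some headroom on a 24GB one.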

Despite all it offers, the TITAN RTX can’t be called an “ultimate” ProViz solution, since it lacks some of the optimizations that Quadro GPUs receive. That means in certain high-end design suites like Siemens NX, a true Quadro might prove better. But if your workloads don’t benefit from Quadro-specific enhancements, the TITAN RTX is going to be quite attractive given its feature-set (and that framebuffer!). If you’re ever confused about optimizations in your software of choice, please leave a comment!

A couple of years ago, NVIDIA decided to give the TITAN series some love with driver enhancements that bring it closer to parity with Quadro. We can now say that TITAN RTX enjoys the same kind of performance boosts that the TITAN Xp received two years ago, something that will be reflected in some of the graphs ahead.

Test PC & What We Test

On the following pages, you’ll find the results of our workstation GPU test gauntlet. The tests chosen cover a wide range of scenarios, from rendering to compute, and include both synthetic benchmarks and real-world applications from the likes of Adobe and Autodesk.

Nineteen graphics cards have been tested for this article, with the list dominated by Quadro and Radeon Pro workstation cards. There’s a healthy sprinkling of gaming cards in there as well, however, to reveal any optimizations that favor one type of card over the other.

Please note that the testing for this article was conducted a couple of months ago, before an onslaught of travel and product launches. Graphics drivers released since our testing might improve performance in certain cases, but we wouldn’t expect any notable changes, having sanity checked a bunch of our usual software on both AMD and NVIDIA GPUs. Likewise, the previous version of Windows 10 (1809) was used for this testing, but sanity checks in 1903 didn’t reveal any disadvantages either.

In recent months, we’ve spent a lot of time polishing our test suites and internal testing scripts. We’re currently re-benchmarking a number of GPUs for an upcoming look at ProViz performance with cards from both AMD’s Radeon RX 5700 and NVIDIA’s GeForce SUPER series. Fortunately, those cards don’t really compete with a top-end card like the TITAN RTX, so our tardiness hasn’t foiled us this time.

The specs of our test rig are seen below:

| Techgage Workstation Test System | |
|---|---|
| Processor | Intel Core i9-9980XE (18-core; 3.0GHz) |
| Motherboard | ASUS ROG STRIX X299-E GAMING |
| Memory | HyperX FURY (4x16GB; DDR4-2666 16-18-18) |
| Graphics | AMD Radeon VII (16GB), AMD Radeon RX Vega 64 (8GB), AMD Radeon RX 590 (8GB), AMD Radeon Pro WX 8200 (8GB), AMD Radeon Pro WX 7100 (8GB), AMD Radeon Pro WX 5100 (8GB), AMD Radeon Pro WX 4100 (4GB), AMD Radeon Pro WX 3100 (4GB), NVIDIA TITAN RTX (24GB), NVIDIA TITAN Xp (12GB), NVIDIA GeForce RTX 2080 Ti (11GB), NVIDIA GeForce RTX 2060 (6GB), NVIDIA GeForce GTX 1080 Ti (11GB), NVIDIA GeForce GTX 1660 Ti (6GB), NVIDIA Quadro RTX 4000 (8GB), NVIDIA Quadro P6000 (24GB), NVIDIA Quadro P5000 (16GB), NVIDIA Quadro P4000 (8GB), NVIDIA Quadro P2000 (5GB) |
| Audio | Onboard |
| Storage | Kingston KC1000 960GB M.2 SSD |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | Corsair Carbide 600C Inverted Full-Tower |
| Cooling | NZXT Kraken X62 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 17763 (1809) |
| Drivers | AMD Radeon: Adrenalin 19.4.1; AMD Radeon Pro: Enterprise 19.Q1.2; NVIDIA GeForce & TITAN: Creator Ready 419.67; NVIDIA Quadro: Quadro 419.67 |

Our benchmark results are categorized and spread across the following six pages. On page 2, we’re looking at some CUDA-based renderers, including V-Ray, Redshift, OctaneRender, and Arnold GPU. Some of these will add support for non-CUDA GPUs in time, and when that happens, we’ll add the tests to our Radeon benchmarking suite. Page 3 includes a number of vendor-neutral renderers, like Blender, Radeon ProRender, and LuxMark.

On page 4, we’re tackling encoding with the help of Adobe’s Premiere Pro and MAGIX’s Vegas Pro, while page 5 is home to viewport performance, largely covered with the help of SPECviewperf. In total, 8 test results are featured here, covering important design suites like CATIA, SolidWorks, Siemens NX, Creo, as well as Autodesk’s 3ds Max and Maya. Our own Blender viewport test wraps the page up. And speaking of wrapping things up, page 6 covers mathematical performance with the help of SiSoftware’s Sandra.

And with all of that covered, let’s get on with things:
