Summer 2020 GPU Rendering Performance In Arnold, KeyShot & Octane

Over the past couple of weeks, we’ve taken a look at current performance for a couple of CUDA-only renderers, V-Ray and Redshift. For this article, we’re going to group a trio of others together, and bring us up-to-date on current CUDA and OptiX rendering with Autodesk Arnold, Luxion KeyShot, and OTOY Octane.

We recently took an in-depth look at performance in Chaos Group’s new V-Ray 5, as well as Maxon’s Redshift 3, but that wasn’t all of the recent CUDA/OptiX testing we completed. For this article, we’re going to take a look at updated GPU rendering performance with the help of Autodesk’s Arnold (inside of Maya), Luxion’s KeyShot, and OTOY’s Octane.

This is far from being the first time we’ve performance-tested any one of these solutions, but because optimizations are being released through updates all of the time, it’s worth revisiting our tests once in a while to see if obvious improvements (or in rare cases, regressions) can be seen.
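To make "improvement" and "regression" concrete, here is a minimal sketch of how two render times for the same project could be compared across software versions. The function names and the 2% tolerance are our own illustrative assumptions, not part of any renderer's tooling.

```python
def percent_change(old_seconds: float, new_seconds: float) -> float:
    """Return the change in render time from old to new, as a percentage.

    Negative values mean the new version renders faster (an improvement);
    positive values mean it renders slower (a regression).
    """
    if old_seconds <= 0:
        raise ValueError("old_seconds must be positive")
    return (new_seconds - old_seconds) / old_seconds * 100.0


def classify(old_seconds: float, new_seconds: float,
             tolerance_pct: float = 2.0) -> str:
    """Label a result, treating changes inside the tolerance as noise.

    The 2% default is an assumed run-to-run variance, chosen for illustration.
    """
    change = percent_change(old_seconds, new_seconds)
    if change <= -tolerance_pct:
        return "improvement"
    if change >= tolerance_pct:
        return "regression"
    return "no meaningful change"
```

A tolerance matters because render times vary slightly between runs, so a one-second difference on a multi-minute render shouldn't be read as a real change.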

Since the last time we took a full look at Autodesk’s Arnold, we’ve seen an update from 3.2 to 4.0, while Luxion’s KeyShot has been bumped from 9.0 to 9.3. With OTOY’s Octane, we’re a bit stuck, since we don’t have access to the full plugin, or even a project to test with. While OctaneRender 2020 was just released, we’re sticking with the preexisting OctaneBench and OctaneBench RTX for our testing here.

Here’s the test rig used for all of our benchmarking in this article:

| Techgage Workstation Test System | |
| --- | --- |
| Processor | Intel Core i9-10980XE (18-core; 3.0GHz) |
| Motherboard | ASUS ROG STRIX X299-E GAMING |
| Memory | G.SKILL FlareX (F4-3200C14-8GFX) 4x16GB; DDR4-3200 14-14-14 |
| Graphics | NVIDIA TITAN RTX (24GB, GeForce 446.14); NVIDIA GeForce RTX 2080 Ti (11GB, GeForce 446.14); NVIDIA GeForce RTX 2080 SUPER (8GB, GeForce 446.14); NVIDIA GeForce RTX 2070 SUPER (8GB, GeForce 446.14); NVIDIA GeForce RTX 2060 SUPER (8GB, GeForce 446.14); NVIDIA GeForce RTX 2060 (6GB, GeForce 446.14); NVIDIA GeForce GTX 1660 (6GB, GeForce 446.14); NVIDIA GeForce GTX 1660 SUPER (6GB, GeForce 446.14); NVIDIA GeForce GTX 1660 Ti (6GB, GeForce 446.14); NVIDIA Quadro RTX 6000 (24GB, GeForce 446.14); NVIDIA Quadro RTX 4000 (8GB, GeForce 446.14); NVIDIA Quadro P2200 (5GB, GeForce 446.14) |
| Audio | Onboard |
| Storage | Kingston KC1000 960GB M.2 SSD |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | Corsair Carbide 600C Inverted Full-Tower |
| Cooling | NZXT Kraken X62 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 19041.329 (2004) |
| All product links in this table are affiliated, and help support our work. | |

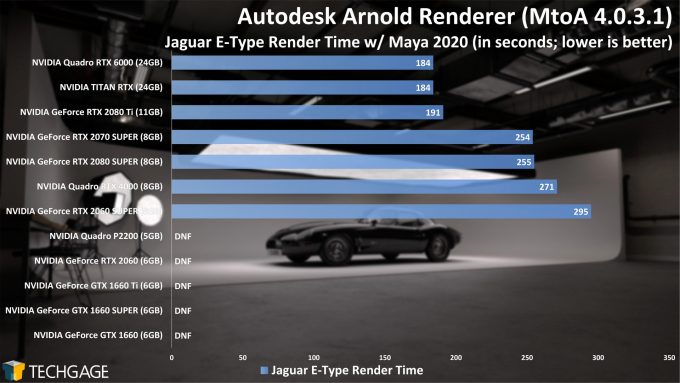

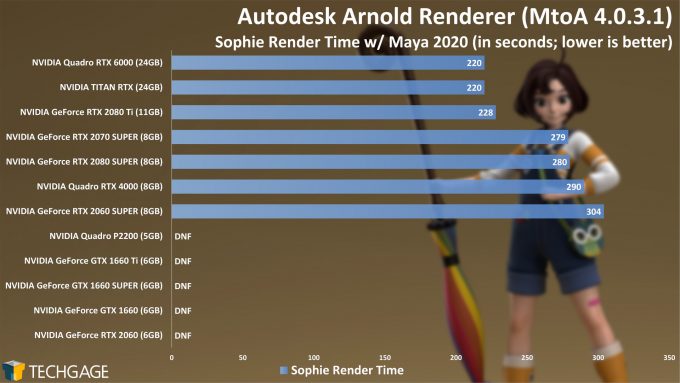

Autodesk Arnold

We’ve seen it time and time again, ever since the Arnold GPU beta: low-memory GPUs are bad news. There have been occasions where we’ve managed to get one of these projects to render on these smaller GPUs, but success seems to be blind luck. If you want to do GPU rendering with Arnold, you’ll want to make sure you have at least an 8GB frame buffer to help negate this problem. This is likely the result of out-of-core rendering, which gives GPUs access to system memory, not being fully implemented.
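As a quick illustration of that 8GB rule of thumb, the sketch below sorts the cards from our test system table by whether they meet the floor. The memory sizes come from that table; the names `MIN_ARNOLD_GB` and `meets_memory_floor` are hypothetical, and the output reflects the guideline only, not which specific cards failed in our charts.

```python
# Frame buffer sizes (GB), taken from the test system table in this article.
GPU_MEMORY_GB = {
    "TITAN RTX": 24,
    "GeForce RTX 2080 Ti": 11,
    "GeForce RTX 2080 SUPER": 8,
    "GeForce RTX 2070 SUPER": 8,
    "GeForce RTX 2060 SUPER": 8,
    "GeForce RTX 2060": 6,
    "GeForce GTX 1660": 6,
    "GeForce GTX 1660 SUPER": 6,
    "GeForce GTX 1660 Ti": 6,
    "Quadro RTX 6000": 24,
    "Quadro RTX 4000": 8,
    "Quadro P2200": 5,
}

# Our suggested minimum for Arnold GPU rendering; a rule of thumb from this
# article, not an official Autodesk requirement.
MIN_ARNOLD_GB = 8


def meets_memory_floor(memory_gb: int, minimum_gb: int = MIN_ARNOLD_GB) -> bool:
    """Return True if a card has at least the suggested frame buffer size."""
    return memory_gb >= minimum_gb


suitable = sorted(n for n, gb in GPU_MEMORY_GB.items() if meets_memory_floor(gb))
too_small = sorted(n for n, gb in GPU_MEMORY_GB.items() if not meets_memory_floor(gb))

if __name__ == "__main__":
    print("Meets the 8GB guideline:", ", ".join(suitable))
    print("Below the 8GB guideline:", ", ".join(too_small))
```

Real scenes vary in how much memory they need, so a card that clears the floor can still run out with a heavy enough project.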

Beyond those did-not-finish results, scaling is pretty much what we’d expect across the remaining GPUs, with both the TITAN RTX and RTX 6000 performing on par with one another. The RTX 2070-class Quadro RTX 4000 inches ahead of the 2060 SUPER, while the RTX 2080 SUPER performs the same as a 2070 SUPER – something we’ve seen before, and will see again before this article is through.

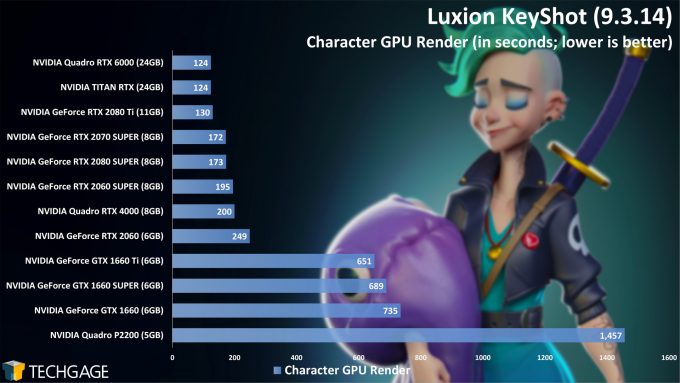

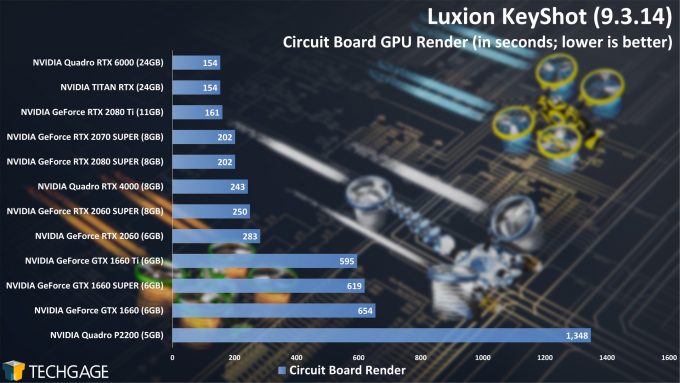

Luxion KeyShot

We’re seeing much more interesting scaling in KeyShot, compared to Arnold, thanks largely to the fact that even the lower-end GPUs can survive the test. Remember how the 2080 SUPER nonsensically performed the same as the 2070 SUPER in Arnold? Well, the same thing happened here. Again, this is not the first time we’ve seen this behavior, and each time, it’s repeatable in our testing.

Clearly, a card like the Quadro P2200 isn’t designed for rendering to begin with, but these results prove why. The difference in time it takes between the P2200 and the next step up (GTX 1660) is huge, so if you care at all about rendering performance (and obviously you do), low-end GPUs need to be avoided. And, if you don’t avoid them because of weak performance, you should ignore them because of their limited memory. But these low-end GPUs are normally used for CAD, rather than 3D design and rendering.

Aside from the 2080 SUPER and 2070 SUPER performing the same, the rest of the scaling is just what we’d expect. While we’ve already said that lower-end GPUs should be avoided, it’s still interesting to see how the three different GTX 1660 models perform against each other, and across different projects.

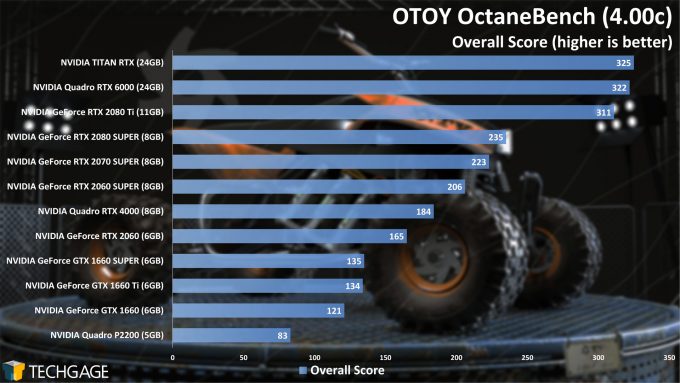

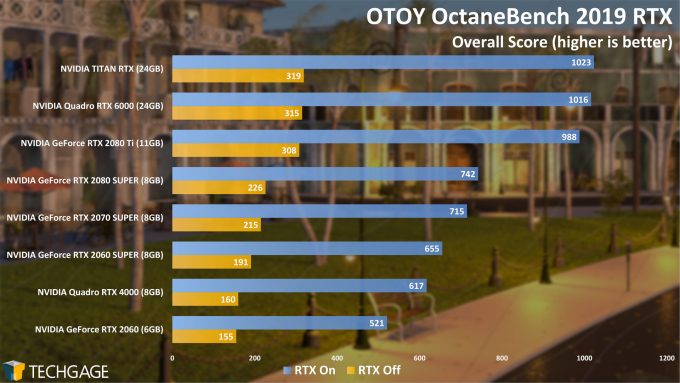

OTOY Octane

As mentioned earlier, we don’t have access to the latest version of OctaneRender, and even if we did, we don’t have projects kicking around to test with. For now, we’re going to stick to using the outgoing OctaneBench benchmarks, which should still scale in a similar manner as the new 2020 renderer. Of course, if OTOY carries its 2020 vision over to OctaneBench, we’ll upgrade and share that performance once it’s available.

Overall, we’re seeing expected scaling once again, with the top-end GPUs doing a good job of separating themselves from even the middle of the pack. In the RTX-specific test, the improvements seen when enabling OptiX accelerated ray tracing are simply huge. How this carries over to the real world is one thing we’re not sure of, and while we haven’t seen gains to the level exhibited here, our recent V-Ray 5 and Redshift 3 performance articles highlight that OptiX is almost certainly worth enabling for a free performance boost (free if you already have RTX hardware, that is).

Final Thoughts

There isn’t much that can be said here that isn’t obvious. Where rendering is concerned, it’s impossible to ignore NVIDIA’s RTX line of GPUs. The Turing architecture itself has proven to bring notable performance improvements to rendering, but when accelerated ray tracing is added in, the overall reduction in render times can be enormous.

For most creative use, unless your budget is seriously limited, we’d have to suggest the $399 USD 2060 SUPER as the ideal starting point. It gains 2GB of memory over the non-SUPER 2060, and the performance boost it bundles is probably worth that premium alone. For those willing to spend a bit more, the $499 USD 2070 SUPER is also an alluring choice. It has the same 8GB frame buffer as the 2060 SUPER, but ups the performance fairly well to justify the price premium.

In this article, we’ve seen odd scaling out of the 2080 SUPER twice, where the 2070 SUPER somehow performed the same (or fractionally better). Unless you know for certain that the 2080 SUPER doesn’t behave like this in your particular workload, you should likely settle for the 2070 SUPER, or splurge on the ~$1,000 USD 2080 Ti. That top-end card would deliver a huge performance boost over the others, and also includes a beefier 11GB frame buffer.
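If you want to check your own workload for this kind of flat scaling, a minimal sketch follows. The function name, the 2% tolerance, and the timings are all placeholders we made up for illustration; they are not measurements from this article.

```python
def flat_scaling_pairs(results, tolerance_pct=2.0):
    """Find adjacent cards where the higher tier brings no real speedup.

    `results` is a list of (name, render_seconds) tuples ordered from the
    lowest-tier card to the highest. A pair is flagged when the higher-tier
    card is less than `tolerance_pct` percent faster than the one below it.
    """
    flagged = []
    for (low_name, low_t), (high_name, high_t) in zip(results, results[1:]):
        gain_pct = (low_t - high_t) / low_t * 100.0
        if gain_pct < tolerance_pct:
            flagged.append((low_name, high_name))
    return flagged


# Placeholder timings in seconds, for illustration only; NOT our results.
example_times = [
    ("2060 SUPER", 120.0),
    ("2070 SUPER", 100.0),
    ("2080 SUPER", 100.0),
    ("2080 Ti", 75.0),
]

if __name__ == "__main__":
    for lower, higher in flat_scaling_pairs(example_times):
        print(f"{higher} is not meaningfully faster than {lower}")
```

Running the same project several times on each card before comparing helps rule out a one-off result.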

This article finishes off our latest round of CUDA-specific testing, something we’ve been wanting to get done since our look at AMD’s Radeon Pro W5500 in May. On the Radeon front, our Octane inclusion in a CUDA-only article is going to change in the future, as OTOY is working on AMD GPU support for both Windows and Mac, and as we noted in our look at Redshift 3 a couple of weeks ago, Radeon support will eventually also be making its way there.

That said, even if all of these solutions did support Radeon right now, NVIDIA is offering attractive options that would otherwise be impossible to ignore. We’ve seen proof of what ray tracing acceleration in rendering can do, as well as AI denoising when accelerated by Tensor cores. Like everyone else, we’re excited to see what AMD has in store next, and hope it evens the playing field a bit better. For that matter, we’re also intrigued by what NVIDIA will offer next – whenever that will be. What we do know for sure is that once these future GPUs arrive, we’ll be quick to get back to the test bench.
