Tech News

ATI’s Eyefinity Technology Put to Proper Test

In our news section a few weeks ago, we talked about ATI’s new Eyefinity technology, one that would allow you to play most of your games across multiple monitors with relative ease. This kind of thing has been done before, but the difference here is that it seems to work well with more games than other technologies, and the best part? The price tag is far lower. All you need is an ATI Radeon HD 5000-series card.

I admit that at first, I wasn’t that impressed. I even saw a demo in person last week, and even then, it didn’t seem like something I could picture myself using. For me personally, the monitor bezels are a huge reason for that… I just can’t stand having my gameplay split up across multiple monitors. After checking out a fully-detailed video review of the product at HardOCP though, I have to say… I think I’ve changed my mind.

It’s one thing to see one game being run on Eyefinity, but it’s another to see many different games being put through the wringer, such as Counter-Strike: Source, Need for Speed: Shift, Crysis Warhead, Half-Life 2: Episode Two, Call of Duty: World at War, and more. There’s a lot of gameplay shown during the video, and it’s become clear to me that after a while, your mind is just going to play tricks on you, and the bezels will disappear. It really does look like it could make gaming a lot more fun.

There are some caveats, primarily with the display adapters you must use. A DisplayPort or DVI to Mini DisplayPort adapter isn’t exactly inexpensive, although ATI is apparently testing out different adapters in their labs to see if they can’t certify some more affordable ones. After all, if you have 3x 24″ or better monitors, who wants to spend another $100 or so on a cable or cables? Still, despite the cost of entry, this is very cool technology.

We have been holding off to hopefully give a more robust look at what Eyefinity might do for you in a gaming scenario. There are still some unanswered questions as to Eyefinity compatibility, but certainly AMD is aware of your questions and is working hard to get those answered. I felt as though I wanted to cover Eyefinity in a video format, and we have published that video below. We hope you enjoy it and it gets you a bit more acquainted with what Eyefinity might actually do for your gaming experience.

| Source: HardOCP |


Who’s the Winner of the Core i7 Gaming PC?

As I sit here, sipping my morning coffee, it looks to be a rather depressing day outside. It’s pouring rain, and not so bright out. So to lighten the day and mood, I think now would be as good a time as any to announce the winner of our Core i7-870 gaming PC. Before I do that, though, I want to give a big thanks to everyone who has participated… the contest was a great success!

Without further ado, the official winner of our gaming PC is “srpoole”! On behalf of the entire staff, I’d like to give a huge congratulations to our lucky winner. As a reminder, srpoole must contact us within two weeks in order to claim the prize. If I don’t hear back by then, I will have to draw a new name from the hat. It’s not too likely that this will happen, though.

With that out of the way, I’d like to take a moment to stress that if you cheat in one of our contests, you won’t be getting away with it. Believe it or not, the first name I drew from the hat wasn’t srpoole. After investigating that entry, however, I found that the person had entered more than once. The entries were minutes apart, from the same IP address, with 100% identical answers. Entering one of our contests like this is just a waste of time. We will find out if you cheated or not. Plus, it wastes my time. I had thought I already had all the cheaters eliminated, but this forced me to go through the huge list a second time. *grumble*

If you’re not srpoole, don’t fret… there are more contests on the way. Not all our contests will consist of such stellar prizes as a gaming PC, but our goal is to begin rewarding our loyal readers on a regular basis. Winning something like a motherboard is a lot better than nothing at all, for example. With this contest wrapped up… I’ll give a quick preview of our next contest, which will launch in the next few weeks.

I won’t discuss details of how the contest will work (still working on it), but the prize will consist of NVIDIA’s 3D Vision, Samsung’s SyncMaster 2233 22″ 120Hz gaming monitor, a copy of Resident Evil 5 and also Batman: Arkham Asylum. Since I received more than one copy of the latter, the spare will be given away to a runner-up. Stay tuned as I hope to post the contest by the middle of October (I wanted to review at least one of these games before launching the contest, and that’s still the goal).

Huge thanks to Intel for teaming up with Techgage to give away the Core i7-870 gaming PC!


Is RAID Almost a Thing of the Past?

We’ve gone over the importance of keeping your data safe many times over the life of the site, but we haven’t talked much about one method of doing so: RAID. RAID, in layman’s terms, is the process of taking more than one drive and making your data redundant, so that if a drive fails, you can easily get your machine back up and running. RAID can also be used for performance, or both.
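To make the redundancy idea a little more concrete, here’s a minimal sketch of the parity trick used by levels like RAID 5, assuming short byte strings stand in for drive blocks. The function names are hypothetical, and a real controller works on sectors rather than Python objects, but the principle is the same: XOR-ing the data blocks produces a parity block, and XOR-ing that parity with the surviving blocks recreates whichever single block was lost.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_parity(data_blocks: list[bytes]) -> bytes:
    """Parity block for one stripe (RAID 5 rotates it across the drives)."""
    return xor_blocks(data_blocks)


def rebuild_missing(surviving_blocks: list[bytes], parity: bytes) -> bytes:
    """Recover the single lost block: XOR of the parity and all surviving blocks."""
    return xor_blocks(surviving_blocks + [parity])


if __name__ == "__main__":
    drives = [b"ABCD", b"EFGH", b"IJKL"]  # three hypothetical data drives
    parity = make_parity(drives)
    lost = drives.pop(1)  # simulate the second drive failing
    recovered = rebuild_missing(drives, parity)
    print(recovered == lost)  # True
```

Note that this only covers one failed drive per stripe; lose a second block before the rebuild finishes and the parity can’t help, which is why the length of the rebuild window matters so much.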

For those who use RAID for redundancy, though, a writer at the Enterprise Storage Forum questions the future of the tech, and suggests that it could be on its way out. Back when hard drives were measured mostly in gigabytes rather than terabytes, rebuilding a RAID array took very little time. But because densities have grown constantly while speeds haven’t kept pace, rebuilding an array of 1TB+ drives takes far longer than anyone would like… upwards of 20 hours.

What’s the solution? Get rid of RAID and look for easier and quicker backup schemes, or something entirely different? The writer of the article offers one idea… a RAID solution that doesn’t rebuild the entire array, but rather only the data in use. Still, that would take a while if your drive is loaded to the brim with data. Another option is an improved file system. Many recommend ZFS, but that’s only used in specific environments, and certainly not on Windows.
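A quick back-of-envelope calculation shows why rebuild times balloon with capacity, and what the “rebuild only the data used” idea would save. This is a sketch under stated assumptions: the 14MB/s effective rebuild rate is an assumed figure for a rebuild competing with normal I/O (picked because it roughly reproduces the “upwards of 20 hours” for a 1TB drive), and the 60%-full drive is hypothetical.

```python
def rebuild_hours(bytes_to_rebuild: float, rate_mb_per_s: float) -> float:
    """Hours needed to rewrite bytes_to_rebuild at a sustained rate (MB = 10**6 bytes)."""
    return bytes_to_rebuild / (rate_mb_per_s * 1e6) / 3600


TB = 1e12  # drive makers quote decimal terabytes

# Assumed effective rate while the rebuild shares the disks with normal I/O.
RATE = 14.0

full = rebuild_hours(1 * TB, RATE)         # classic rebuild: every sector
used_only = rebuild_hours(0.6 * TB, RATE)  # hypothetical: only the 60% in use
print(f"full: {full:.1f} h, used-only: {used_only:.1f} h")
# full: 19.8 h, used-only: 11.9 h
```

Double the capacity at the same rate and the full rebuild doubles too, while a used-data-only rebuild still grows with how full the drive is.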

This question also raises issues with Microsoft’s NTFS, and we have to wonder if now would be a great time for the company to finally update its file system (chances are it is). It’s not a bad FS by any means, but with this RAID issue here, and SSDs preparing to hit the mainstream, it seems that we’re in need of an updated and forward-thinking FS. My question is, if you use RAID, what are your thoughts on it? Do you still plan to use it in the future, despite the long rebuild times?

What this means for you is that even for enterprise FC/SAS drives, the density is increasing faster than the hard error rate. This is especially true for enterprise SATA, where the density increased by a factor of about 375 over the last 15 years while the hard error rate improved only 10 times. This affects the RAID group, making it less reliable given the higher probability of hitting the hard error rate during a rebuild.

| Source: Enterprise Storage Forum |
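The quoted point about hard error rates can be put into numbers. The sketch below estimates the chance of hitting at least one unrecoverable read error while reading every surviving drive during a rebuild. It assumes errors are independent, and uses 1 error per 10^14 bits and 1 per 10^15 bits, commonly quoted datasheet figures for desktop-class and enterprise-class drives; treat those rates, and the hypothetical 4 x 1TB RAID 5 array, as assumptions rather than specs for any particular drive.

```python
import math


def p_ure_during_rebuild(bytes_read: float, bits_per_error: float) -> float:
    """Chance of at least one unrecoverable read error while reading bytes_read.

    Assumes independent errors at a rate of 1 per bits_per_error bits read.
    """
    bits = bytes_read * 8
    p_bit = 1.0 / bits_per_error
    # 1 - (1 - p)^n, computed with log1p/expm1 to stay accurate for tiny p
    return -math.expm1(bits * math.log1p(-p_bit))


# Rebuilding one drive of a hypothetical 4 x 1TB RAID 5 means reading the 3 survivors.
survivors = 3 * 1e12
for label, rate in [("1 per 10^14 bits", 1e14), ("1 per 10^15 bits", 1e15)]:
    print(f"{label}: {p_ure_during_rebuild(survivors, rate):.1%}")
```

Under these assumptions, the first case works out to roughly a 21% chance of an error during the rebuild, versus about 2% for the second, which is the reliability gap the article describes.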


Move Over RRoD, Welcome the Yellow Light of Death

Since the console’s launch, Microsoft has received a lot of flak for the infamous “Red Ring of Death”, named after the red ring that appears around the console’s power button. If you happen to see it, you’re going to be in for some disappointment. My first Xbox 360, which I bought the first second (alright, about 5 minutes after) the console launched, died of the RRoD in December of 2007. I was not pleased.

I’m certainly not alone, as the RRoD failure rate was incredibly high. If you saw a full or half ring, you were usually fine, as that usually signals a fixable error. However, if you saw a quarter or three quarters of a ring, you might as well waste no time and give Microsoft a call. The big question is… how is it that Sony has escaped such problems with its PlayStation 3? The answer? It hasn’t.

As BBC’s Watchdog programme exposes, the PlayStation 3 can fail just as well, and the result is what gamers have called the “Yellow Light of Death”. The name refers to the light on the console’s power button: if it turns yellow, the console will need to be repaired. When Sony was questioned about this, the company stated that it offers a repair service, although it’s a little expensive, at ~£128.

Surprisingly, a YLoD doesn’t always mean an unfixable console. In Watchdog’s tests, all 16 consoles killed by a YLoD were fixed by a local repairman, who heated up the circuit board to re-melt the solder. Sadly, 5 of those 16 died again, so the solution isn’t always a permanent fix. It also appears that the launch 60GB consoles are the most affected, which is a little scary since that’s what I own. Even if mine were swapped for a new console, I’d be a bit concerned, for reasons I posted about just a few weeks ago.

More than 150 Watchdog viewers have contacted us to say they’ve experienced it, and by Sony’s own admission, around 12,500 of the 2.5 million PlayStations sold in the UK have shut down in this way since March 2007. The problem is mainly thought to affect the 60GB launch model, but Sony repeatedly refuses to release the failure rate for that model, claiming that the information is “commercially sensitive”.

| Source: BBC: Watchdog |


GIMP 2.8 Brings a Streamlined Interface (a la Photoshop)

Artists don’t have to look too far to find a good image manipulation tool. More often than not, Adobe’s Photoshop is right at the top of their list. But the problem is obvious… the cost. At $700, it’s not exactly welcoming to the novice, or even to professionals who haven’t quite made a business out of it. There’s always the much less expensive Photoshop Elements, and that’s a great choice, but it’s not exactly geared towards savvy artists.

Of course, free tends to be the preferred option for many, and that’s where applications like GIMP come in. One reason for GIMP’s popularity may be the fact that it’s one of the oldest: it’s been around since 1996. It’s continued to get better and better over time, and the future for the application looks bright. In version 2.8, the developers have really listened to the application’s users, and as a result, it may be taken more seriously by the bulk of the artists out there.

If there’s just one feature to be pointed out about 2.8, it’s something that many people have wanted for a while… a UI similar to Photoshop’s. Don’t worry… if you happen to dislike Photoshop’s UI, the option to revert to the classic style will remain. I, for one, am really looking forward to this, though, because I’ve always despised having separate windows open for a single application. This was made even worse with version 2.6, when two windows would appear in the task bar at all times (the tools and main application). It’s for this reason alone that I’ve stuck with 2.4.

As in Photoshop, the toolbox will sit at the left side of the application window, with image-specific options on the right, such as layers, paintbrushes, et cetera. Unlike Photoshop, which stores a fair amount of options at the top under the menu, GIMP 2.8 is focusing more on the left and right sides (which is typically fine given the wide resolutions of today).

You can read a lot more about the upcoming version at the link below. The release date is unknown, and I can’t even guess at one, but if I had to, I’d guesstimate ~4 to 6 months (don’t quote me).

The high number of contributions in the single-window category confirms that this is a major issue where action needs to be taken. The many forms the contributions take shows us that a measure of flexibility and configurability is needed. Next, I reminded everyone that it is a fifty-fifty world: about 50% of GIMP users love the multi-window interface of today and do not want to lose it, about 50% of GIMP users would love to move to a single-window interface.

| Source: m+mi works |


Lucid’s Multi-GPU Solution Out Next Month

We first reported on Lucid’s multi-GPU solution last fall, and to be honest, I didn’t expect much of an update by this point. I was wrong, as the company says it will be shipping product next month. It won’t be an add-in solution, at least not at first, but rather a feature built into an upcoming motherboard.

Details are somewhat scarce since we haven’t had a private meeting with the company up to this point (that will happen tomorrow). I checked out their booth in the showcase area, however, and up and running were two demo machines, showcasing what the technology is capable of. At this point, it looks like their promises of last year have come to fruition, so the next few months should be very interesting.

As a quick refresher, Lucid’s technology essentially aims to replace CrossFireX and SLI by splitting the rendering work across multiple GPUs. You don’t even need to use identical GPUs, but are free to mix and match between models (you can even mix and match ATI and NVIDIA).
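Lucid hasn’t detailed how its chip divides the work, so the following is purely a hypothetical illustration of the general idea behind mixing mismatched GPUs: give each card a share of the rendering tasks proportional to how fast it is. The function name and the speed scores are made up for the example; a real load balancer would also measure and adjust on the fly.

```python
def split_workload(num_tasks: int, gpu_speeds: dict[str, float]) -> dict[str, int]:
    """Divide num_tasks among GPUs in proportion to their relative speed.

    Uses largest-remainder rounding so the shares always sum to num_tasks.
    """
    total = sum(gpu_speeds.values())
    exact = {gpu: num_tasks * speed / total for gpu, speed in gpu_speeds.items()}
    shares = {gpu: int(value) for gpu, value in exact.items()}
    leftover = num_tasks - sum(shares.values())
    # Hand any remaining tasks to the GPUs with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda gpu: exact[gpu] - shares[gpu], reverse=True)
    for gpu in by_remainder[:leftover]:
        shares[gpu] += 1
    return shares


# Hypothetical mixed ATI + NVIDIA setup; the speeds are made-up relative scores.
print(split_workload(1000, {"radeon": 3.0, "geforce": 2.0}))
# {'radeon': 600, 'geforce': 400}
```

The point of the proportional split is that the slower card finishes its smaller share at about the same time as the faster one, so neither sits idle waiting.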

MSI will be the first motherboard vendor to include the feature on their upcoming “Big Bang” P55-based board. The larger motherboard vendors are understandably skeptical as to market success, but I have little doubt that they will be incredibly quick to implement it on their own boards if it’s the least bit successful.

I’ll know a lot more after the meeting, where I’ll get some hands-on time with the tech across various GPU configurations.

| Source: Lucid Press Release (PDF) |


S-ATA 6Gbit/s HDDs and Motherboards Right Around the Corner

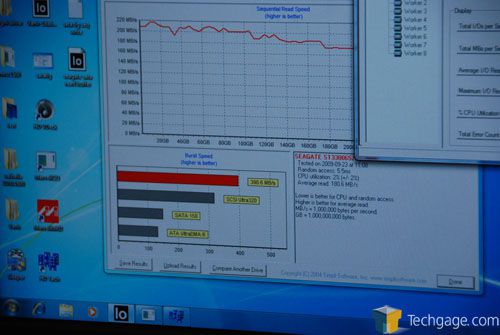

At IDF, there are many technologies floating about that are close to shipping, including S-ATA 6Gbit/s. On the show floor is Marvell, showing off its latest controller for upcoming S-ATA 6Gbit/s drives, along with an ASUS P7P55D Premium motherboard, which will launch with a few such ports on the board. To show overall performance, Marvell also had a prototype S-ATA 6Gbit/s SSD on hand (the company only sells the controllers, not SSDs as a whole).

You can see below the test machine that was set up. You can’t see it from this view, but a Seagate Barracuda 7200.12 2TB drive was sitting behind it, and that was the drive hooked up to the S-ATA 6Gbit/s port. We have both this particular board and drive en route, so you can expect some in-depth reports soon.

Curious about performance? As you can see in the screenshot below, with HD Tach RW3, the drive hit near 400MB/s burst, 180MB/s average read (!) and a low random access time of 5.5ms. I’m not entirely confident that this wasn’t a RAID setup, but I was told it wasn’t. However, 180MB/s read is a little (lot) higher than I would have expected. We’ll get to the nitty gritty in the coming weeks when we can get down to our own testing.
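For context on those numbers, S-ATA uses 8b/10b encoding, so each byte costs 10 bits on the wire. The small sketch below converts a link’s line rate into its theoretical ceiling in MB/s; it ignores protocol overhead, so real-world maximums are somewhat lower.

```python
def sata_max_mb_per_s(line_rate_gbit: float) -> float:
    """Theoretical payload bandwidth of a S-ATA link in MB/s (10**6 bytes/s).

    8b/10b encoding means every byte costs 10 bits on the wire.
    """
    return line_rate_gbit * 1e9 / 10 / 1e6


for gen in (1.5, 3.0, 6.0):
    print(f"S-ATA {gen} Gbit/s -> {sata_max_mb_per_s(gen):.0f} MB/s")
```

That puts the ~400MB/s burst figure above the 300MB/s ceiling of S-ATA 3Gbit/s, while a 180MB/s average read would fit within the older interface, so the sustained number says more about the drive than about the port.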

As S-ATA 6Gbit/s boards launch, it’s unlikely that every port on any particular model will support it. Rather, companies are likely to start off small, devoting 2 ports to the new tech on most boards and making every port S-ATA 6Gbit/s only on really high-end boards. S-ATA 6Gbit/s is a good thing… more bandwidth, lower latencies, and ultimately, better performance. Stay tuned… we have lots more to share in the coming weeks.


Intel Sees Bright Future for Atom & Moblin

At IDF, Paul Otellini gave the opening keynote, and by the end, it became clear that Atom is a huge part of the company’s future. Otellini stated that Atom isn’t only going to be used for netbooks and nettops, but in the future, Atom will act as the basis for virtually all devices that don’t require high raw performance… from netbooks to MIDs to phones and so forth.

To prove just how serious the company is about the future of Atom, it has launched a developer network to serve as a resource for building applications across the wide variety of platforms that will feature Atom. In addition, you can expect to see “app stores” available for Atom-equipped devices, similar to app stores for devices like the iPhone.

The best part? Because these applications will be based on Intel’s Atom development toolkits, they will run across both Windows and Moblin-based devices. This means huge potential for developers, since they can focus development on IA (Intel Architecture) and have it run on multiple platforms. Moblin is another focus of Intel’s, and I’m happy to hear it. It’s open source, and can be used anywhere, from cell phones to MIDs to small desktops.

What should you take away from this? Expect Atom to become an integral part of future computing, especially where mobile is concerned. Otellini even went on to state that he sees Atom outselling the company’s desktop processors in the near future. That’s a pretty bold statement, but given that many people own just one or two computers, yet many mobile devices, it’s easy to believe.

The overall theme of the keynote was this… the continuum. Intel wants to see technology grow and constantly improve, and to help accomplish this, its product developments and the developers are going to make some great things happen. During the keynote, Otellini showed a slide of ideas that regular consumers had for future technology, and though some were wacky, others were great (such as a retina-based security system). The future is indeed bright.

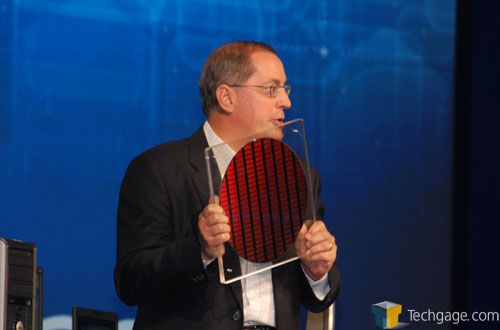

On the performance desktop side, 32nm Westmere is currently in production and will become available during Q4 of this year, with Core i3 processors due in early 2010. A 22nm SRAM wafer, seen above, was also shown off, and Intel stated that 22nm is on track to launch in 2011, in keeping with the company’s Tick-Tock cadence.

More later…


Off to IDF 2009

Welp, I’m out the door once again to head to the West Coast, where I’ll be attending the latest iteration of Intel’s Developer Forum. As always, you can expect regular updates throughout the show, not just of Intel’s own developments, but their partners as well (and there are plenty).

If you recall our Taiwan trip from a few months ago, I kept a regular “diary” of sorts in our forums which let you experience Computex along with us… not just with information from the show, but other fun stuff outside the show as well. In all honesty, IDF and San Francisco aren’t nearly as interesting as Taiwan (at least, in my opinion), but I plan to continue the tradition and give an inside peek of what goes on when we attend IDF (minus the shower scenes).

So what’s to look forward to this year? Well, there won’t be as much desktop talk this year, primarily due to the fact that Lynnfield was released just weeks ago. I don’t expect a total lack of news on that front, though… Gulftown isn’t too far off, after all. This year, there is going to be a lot of focus on mobile, small desktop and other related devices, including technical information on Intel’s 32nm SoC and the Jasper Forest embedded chip, a follow-up on Moore’s Law and its direction, Clarksfield (notebook Nehalem) and a lot more technology hovering around TV and entertainment. Oh, and there will be no Larrabee developments, or so we’re told.

Aside from all the really technical information, Intel’s bringing in alternative rock band Maroon 5 to play to a large crowd of geeks. I’m really interested to see some of the company’s engineers rock out. Another tradition is going to dinner with Intel’s coolest press rep, Dan Snyder, and a small group of US journalists (plus one lone Canadian: me). These dinners are always a lot of fun, especially given that Dan really knows how to pick the best wine (last year, we even enjoyed a bottle that was under embargo until just then. No joke).

As always, stay tuned, and post your thoughts/comments/questions in the related thread!

| Source: Techgage IDF Coverage |


OCZ Announces Availability of its Z-Drive PCI-E SSD

There has been some doubt over the past few months (especially from competitors) as to whether or not OCZ would deliver its PCI-E “Z-Drive” solid-state disk to market, but according to a press release issued late last week, we can expect immediate availability (as in, availability soon to the end consumer). At the time of this writing, I couldn’t find any e-tailers stocking the product, but I’d expect places like Newegg to carry it very soon.

For those unaware, OCZ’s Z-Drive is the company’s answer to the S-ATA performance bottleneck. Because the drive utilizes the PCI-E bus, speeds faster than what the S-ATA bus can provide are possible. In the case of the P84 1TB drive, 870MB/s Read and 780MB/s Write speeds are possible. That kind of performance is simply a pipe dream where S-ATA drives are concerned.

Because the Z-Drive will be expensive, and understandably so, OCZ has released two versions, the E84 and P84 (I’m unsure what 84 represents). The E version uses SLC chips, so it’s faster, and has a longer lifespan, but should also be considerably more expensive. The P version on the other hand sticks to the much more affordable, yet still fast, MLC chips.

Pricing as of press time hasn’t been made available, but I’d expect these to target only businesses and server environments. Considering each Z-Drive utilizes four standard SSDs in RAID, they’re likely to be more expensive than four SSDs of a given speed if you were to buy them individually. Either way, the release of these will hopefully help boost SSDs in the marketplace. The sooner these high-end drives become common, the sooner they become a reality to the end consumer.

With 8 PCI-E lanes and an internal four-way RAID 0 configuration, the Z-Drive delivers exceptional performance that translates to professional-class data storage in a complete, all-in-one form factor. Additionally, OCZ offers unique customization options for OEM clients that may require tailored hardware or firmware solutions for their business.

| Source: OCZ Press Release |
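The “internal four-way RAID 0” in the quote above is what lets the Z-Drive go beyond a single SSD: data is cut into stripe units that rotate across the four drives, so a large sequential read keeps all of them busy at once. Here’s a minimal sketch of that address mapping; the 64KB stripe size is an assumed value, not a figure from OCZ.

```python
STRIPE_SIZE = 64 * 1024  # bytes per stripe unit; an assumed value, not OCZ's spec
NUM_DRIVES = 4           # the Z-Drive's four internal SSDs


def locate(logical_offset: int) -> tuple[int, int]:
    """Map a logical byte offset to (drive index, byte offset on that drive)."""
    stripe_unit = logical_offset // STRIPE_SIZE
    within_unit = logical_offset % STRIPE_SIZE
    drive = stripe_unit % NUM_DRIVES   # units rotate round-robin across drives
    row = stripe_unit // NUM_DRIVES    # how many full rows precede this unit
    return drive, row * STRIPE_SIZE + within_unit


# A 256KB sequential read touches each of the four drives once, in parallel.
chunks = range(0, 256 * 1024, STRIPE_SIZE)
print([locate(offset) for offset in chunks])
# [(0, 0), (1, 0), (2, 0), (3, 0)]
```

The flip side of RAID 0 is that there’s no redundancy: if any one of the four SSDs fails, the data on the whole volume is lost.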


Upgrading the HP dv2 to Windows 7 Home Basic

It’s a little embarrassing to admit, but up until now, I haven’t given Windows 7 much of an honest go. It’s not due to a lack of interest, but rather boils down to available time, which has been scarce lately. But, since we received some RTM copies of the OS recently, I decided that I’d put off the testing for too long. So, I sucked it up and decided to give it a reasonable test.

Well, for now I won’t give too much opinion on what I think of the OS, but I can say that I’ve been enjoying it quite a bit. In fact, I feel it completely makes up for Vista’s various shortcomings. Within a half-hour of testing it out, I really felt compelled to install it on my main machine, to replace my Windows XP installation (I run Linux as primary, but Windows as secondary). To feel that way about an OS after about 30 minutes of usage says something.

Because a lot of people will be choosing 7 as an upgrade path, rather than a fresh install, I decided to give that scenario a go on two notebooks here, including my HP dv2. This notebook is modest in all regards, and the fact that it came included with Vista Home Basic proves it. So naturally, I chose Windows 7 Home Basic as the upgrade path (actually, I was forced into it… you cannot mix and match versions).

I have always shunned upgrading OSes in the past, but I have to admit that I was surprised by just how easy it was to upgrade from Vista to 7. The entire process took about 90 minutes, and before I knew it, I was at the desktop, 7-style. Because I prefer a clean install, I then did a fresh install, as I wanted to see how much of the hardware it would pick up, especially the GPU, since I was unable to find a 7 driver for it anywhere.

Well, as you can see above, the install went quite well. The WEI score is rather low, but this is a budget computer after all. What impressed me, though, is that absolutely every piece of hardware in the machine was taken care of. After the install, I was good to hop on the WiFi and download whatever I needed via Windows Update, including the GPU driver (ATI X1270). I was actually quite surprised that a driver was available at all, and it was a big relief, given that its absence would have been a show-stopper.

So far, I’m happy with the overall performance. I have all the applications installed that I need, and though the notebook has a slow processor, it seems totally manageable. Whether or not it’s faster or has better battery-life than Vista, I’m not sure. I plan on dedicating this notebook for use during IDF, so I’ll be able to find out soon enough. Stay tuned!


AMD Launches Industry’s First Sub-$100 Quad-Core

When Intel released their Lynnfield processors a few weeks ago, we saw some unbelievable value. These Quad-Cores, equipped with their robust Turbo feature, were simply fast as heck. But, while the Core i5-750 comes in at $200, and P55 motherboards kick off at $109, some might still want to hold off and save even more when the right chip comes along.

Well that right chip might be the new Athlon II X4 620 or 630, released yesterday. The X4 620 in particular becomes the first Quad-Core in the world to be sold for under $100. In this case, $99. Think back to when Quad-Cores first hit the market. The absolute first was $1,000 (Intel Core 2 Extreme QX6700), and shortly after that, we saw the Core 2 Quad Q6600, for just over $500. But here… a Quad-Core, for under $100. That’s impressive.

“Rob, there must be a catch!”, and well, there is. The new Quad is part of the Athlon II series, not the Phenom II, and as such, it doesn’t have any L3 cache. But, it’s based on a “new” core, Propus, which is based on Deneb. In reviews around the web, the chip doesn’t seem to really lose its overclocking ability, so for those willing to go down that path, you might be able to make up for the lack of L3 cache.

The best part might be that both the 620 and 630 (~$122) are available right now, and can be paired up with AMD’s latest 785G motherboards – many of which can be found for at or under $100. We’ll be taking a look at the new chip shortly, though the way things are going lately, I know better than to make a promise of a certain day… so I’ll just say soon.

As part of the new desktop platform designed for mainstream consumers, AMD (NYSE: AMD) today announced the first ever quad-core processor for less than $100 Suggested System Builder Price (SSBP). By balancing the power of new AMD Athlon II X4 quad-core processors and the AMD 785G chipset featuring ATI Radeon HD 4200 graphics, AMD delivers smooth HD visuals and the foundation for a great Windows 7 experience.

| Source: AMD Press Release |


Microsoft Charges “About $50” for Windows to OEMs, Bing Hits 10%

There’s always been mystery surrounding how much Microsoft charges OEMs for copies of Windows, but part of the truth was revealed during the Jefferies Annual Technology Conference in New York. Microsoft’s Charles Songhurst, the GM of Corporate Strategy, said that the benchmark for a PC both yesterday and today has been $1,000, and for that PC, Microsoft charges “about $50”.

That’s fine, but it becomes confusing when talking about PCs priced higher or lower than $1,000. Given the $50 figure on a $1,000 PC, we can assume that it’s either a flat $50 for most PCs, or 5% up to a certain value. Truly, unless you work for an OEM, chances are you’ll never know exactly how it works. With netbooks, and Windows 7’s ability to run on them, it’s very unlikely that Microsoft charges $50 per copy there, but I could be wrong.

If I had to hazard a guess, I’d assume that Microsoft scales the pricing, but I can’t see the OEM pricing going much above $100. Yes, the retail copies cost far more, but while you are purchasing a single $200 copy of Home Premium, companies like Dell are buying millions. I think it’s safe to say, though, that when you buy a pre-built PC, you’re getting Windows for less than you would straight from a store.
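To illustrate the two possibilities raised above, here’s a quick comparison of a flat ~$50 fee against 5% of the PC’s price capped at $100. Both models are speculation, not Microsoft’s actual pricing, which isn’t public: the 5% simply comes from $50 on a $1,000 PC, and the $100 cap from the hunch above. The function names are hypothetical.

```python
def flat_fee(pc_price: float) -> float:
    """Model A: roughly the same ~$50 for every PC (price is ignored)."""
    return 50.0


def capped_percentage(pc_price: float, rate: float = 0.05, cap: float = 100.0) -> float:
    """Model B: a percentage of the PC's price, never exceeding the cap."""
    return min(pc_price * rate, cap)


for price in (300, 1000, 2500):
    print(f"${price} PC: flat ${flat_fee(price):.0f}, capped ${capped_percentage(price):.0f}")
```

The two models only agree at the $1,000 benchmark; a $300 netbook would cost an OEM far less under the second, which is why the flat figure seems unlikely for cheap machines.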

In unrelated Microsoft news, the company is sure to be thrilled that its decision engine “Bing” has hit a 10% market share, and to that I say wow. There are various Microsoft entities that utilize Bing, sure, but the search engine only became available in June. At 10.7%, it has roughly 1/6th the search traffic of Google. It’s important to note that these are US-only figures; the rest of the world is not included.

I’m actually glad to see Bing doing so well, because I use it as my primary search engine and like it quite a bit (see, Linux users do use Bing). It even helped me find the best price for plane tickets last month for the trip down to San Francisco for IDF. If you need to book a flight… give it a try. It’s actually quite intuitive and fun to use. I admit, I still go to Google for some things, but when I do, it’s always for something really technical. No other search engine has been able to touch Google where those kinds of searches are concerned.

Songhurst went on to reveal a number that Microsoft has made a point not to disclose to the general public: how much it charges OEMs for Windows. “If you think of the $1,000 PC, which has kind of been the benchmark for the last decade or so, then we’ve always charged about $50 for the copy of Windows for that PC,” Songhurst revealed.

| Source: Ars Technica (OEM Pricing), CNET (Bing) |


One Week Left for Our Gaming PC Contest!

Guess what today is? If you guessed, “Why, it’s the one-week before contest end day”, then you couldn’t have been more correct. We launched our gaming PC contest last week with the help of Intel to celebrate the launch of the company’s Lynnfield-based processors, and since then, we’ve received many, many, many entries (I am already tired of sorting through ’em!).

But! There’s still a week to go, so if you haven’t mustered up the energy to go seek out our contest words, there’s still some time. Also, if there was any original confusion with our rules, or you couldn’t find all of the instances and gave up, please re-check our rules as we made some slight modifications to get the point across a little bit better. It’s a unique contest, but a little difficult to explain as well!

I’ve also had many people message me to ask if their sentence was approved or if they are able to send in a second just in case, and all I can say is don’t worry. As mentioned on the contest page, I’m certainly not going to obsess over minor details. As long as we receive a sentence with the contest words that simply makes sense, we have no problem accepting it. As proof that there are many ways to say the final contest sentence, only two (yes, 2) people who’ve entered managed to get it right, word for word.

As a reminder, the contest ends next Thursday, at 4:00PM CST (2:00PM PST, 5:00PM EST), which is just about when Intel’s Developer Forum 2009 will also end. It will take me a few days afterwards to sort through the results, pinpoint the cheaters (there have been a few) and then prepare for the random drawing.

As mentioned before, we’re on track to have more contests in the near-future, and I expect that the next will be posted around the first week of October. I still haven’t received what I’ve been waiting for, but I should well before then (it is two weeks away, after all). Either way, stay tuned, because some good stuff is en route!

| Source: Win an Intel Core i7-870 Gaming PC! |


Is the Archos 5 the Killer Media Tablet?

Our news section is home to numerous topics, but when it comes to media players, it’s usually either an Apple or Microsoft product we’re talking about. But those two companies are far from the only two players in the market. Another is Archos, and it’s certainly not an unknown brand. In fact, if you talk to someone who doesn’t vehemently recommend one of the two above, you can expect “Archos” to come up.

Archos has established a name for itself by releasing quality products with nice displays and tons of functionality. While other media players offer support for common media formats, Archos tends to support formats you might not have even heard of, which is a huge plus. Of course, its devices do more than just play media. The new Archos 5, which Computerworld writer Steven J. Vaughan-Nichols takes a look at, boasts plenty of extras, including Internet access, e-mail, GPS and games.

The primary benefits the Archos has over competing devices come down to the size and resolution of the display (4.8-inch, 800×480) and the fact that it can hold a lot of media. While other players top out at 32GB or 64GB, the Archos can use a mechanical hard drive, so the current options go up to 500GB.

In addition to playing pretty much any video or audio format, it has the horsepower to play back 720p content right on the device (at a down-scaled resolution, of course). And thanks to its WiFi capabilities, you can browse the web, check your e-mail, and take advantage of online services like Hulu and Pandora (neither of which is available in Canada!). Not to mention that because it runs Android, downloadable apps are also on the way.

If you want to buy one, they’ll be available tomorrow, September 16th, from Amazon and the Archos Web site. There is one major caveat: this second-generation Archos 5 has the same name as its predecessor and, just to look at it, you could mistake it for its immediate ancestor. If you want to buy one, make darn sure you’re getting the new model. Prices range from $249.99 for the 8GB device to $439.99 for the 500GB top-end model.

Source: Computerworld

Is Touch in Windows 7 Dead on Arrival?

Although Microsoft has many features it can tout in its upcoming Windows 7, InfoWorld is beginning to question whether the OS’s touch capabilities should be one of them. I don’t entirely disagree, as I have found little use for touch outside of handheld devices or “fun” activities on the computer, such as moving photos around, playing modest games, et cetera.

The question the InfoWorld writer poses is more whether touch is even needed, rather than whether Windows 7 offers ample support for it. I’ve discussed this with a few people in the past, and for me, it all comes down to lack of use. For most things on the computer, I’m simply not interested in using touch rather than a mouse. With a mouse, you have precise control… it’s simple.

With touch, though, buttons generally need to be bigger (or the resolution lowered), and that in itself carries two problems. Either the OS is going to look uglier as a result, or, if you keep a normal resolution, certain buttons are difficult to hit, simply because your fingertip is bigger than what you’re trying to tap. On a regular basis, you’re bound to touch something near what you’re actually aiming for.

There’s one more aspect that bugs me when I think about touch computing… the fact that you have to touch your PC. No duh, right? Well, imagine having to lift your hand all day to touch areas all over your monitor. It sounds a little counter-intuitive. I can’t say I’ve done a marathon mouse session before and felt exhausted. So, touch obviously isn’t for me, but what do YOU think? Post in the thread and speak your mind!

Credit: madstork91

In using a Dell Studio One desktop and an HP TouchSmart desktop (whose touchscreens, based on NextWindow’s technology, are quite responsive), I found another limitation to the adoption of touch technology in its current guise: The Windows UI really isn’t touch-friendly. A finger is a lot bigger than a mouse or pen, so it’s not as adept at making fine movements.

Source: InfoWorld

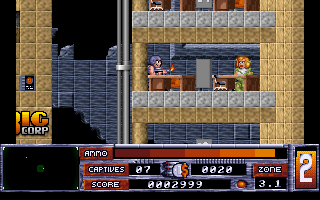

Playing Old PC Games for Free

If there’s one thing that never fails to make me nostalgic, it’s either playing classic games or reading about them. I pretty much grew up on the PC, with my first “real” PC (meaning one that didn’t break) being a 286. From there, we kept upgrading and played the games that came along with each upgrade. Perhaps you were in a similar situation, and even if not, if you played games from the late ’70s, ’80s and even ’90s, you’d no doubt have a blast revisiting them.

Believe it or not, a lot of older games have been decommissioned, meaning they’ve essentially been left behind by their developers and publishers and are treated much like public domain software (usually with some rules in place, such as a request not to reverse engineer them). That means there may very well be many games you played years and years ago that are now completely free, ready to be downloaded.

“Abandonware” is so popular, in fact, that there exist many sites dedicated to it, and many have hundreds upon hundreds of games for download. One of my favorite such sites is Abandonia, and while they say they are dedicated to classic DOS games, you’ll find some Windows titles as well.

So what games can be found there? In a quick look, I see Abuse, Alien Carnage (pictured below), Blackthorne, Blood, Cannon Fodder, Descent, Ghosts ‘N Goblins, The Goonies, Grand Theft Auto, Jazz Jackrabbit 2, Ken’s Labyrinth, Raptor, Skyroads, System Shock… and so much more. And this is just one site! If it’s missing what you’re looking for, it might still be out there.

The site was founded in 1999, when the concept of abandonware was merely two years old. After a few inactive years, it was revived and has continued to blossom, with new ‘abandoned’ games added nearly every day. At the time of writing, the Abandonia database hosts 1,063 downloadable games and counts a total of more than 100,000 members.

Source: MakeUseOf

Benchmarking the Latest Hardware is Fun, Unless… Part Two?

Earlier this month, I posted a small rant about how benchmarking can be fun unless something goes wrong. I’m not sure what it is, but no matter what I do, or how hard I try to get on top of things, something is always destined to go wrong. This past weekend was no different! Once again, we planned for a busy week of content, ranging from motherboard reviews to a new CPU review, but alas, the poltergeist that lives in our lab won’t let that happen easily.

Since we recently received our copies of Windows 7, I decided it would be a great idea to begin using it for our testing where possible. Having just polished off a new methodology for our motherboard reviews, I figured that was a good place to start using the OS. So far, so good, and none of the issues I’ve experienced are related to the OS, which is in stark contrast to how things went down during the Vista launch.

So what problem could I have possibly run into? Well, to understand that, it may pay to check out our recent testing methodologies article (still a work-in-progress). Essentially, to make our lives easier, we install the OS we’re going to use, along with the applications we need, and then back up the entire thing as an Acronis image, so that we can restore that same image each time we test a new motherboard, processor, graphics card, et cetera.

Of course, we don’t install system drivers prior to making a backup, because that would cause issues down the road. Neither do we use an image on platforms it’s not intended for. For example, if we create an image built on a P55 motherboard, we don’t use it on anything except P55 motherboards. We’ve done this for quite a while, and haven’t had a real problem up to this point.

Our Windows 7 Desktop for Motherboard-Testing

Until this weekend, of course. I’m not pointing fingers here, but the problem lies with our Gigabyte P55-UD5, and the issue comes down to AHCI. We have used AHCI mode for our S-ATA hard drives and optical drives for the past year or so, as it’s faster and newer, but something about the Gigabyte board doesn’t agree with the others. Since the P55-UD5 was the board we began our testing with, I decided to create the image on it.

The problem is this: after taking that image and restoring it to any other board with AHCI enabled, such as the ASUS P7P55D Pro or Intel DP55KG, Windows would blue-screen upon boot. After a lot of trial and error, I determined this issue was specific to Gigabyte’s board. If I create an image on ASUS’ or Intel’s board and restore it to the other, there are no problems. It all comes down to the P55-UD5 and how it handles AHCI.

Recently, Gigabyte introduced a feature called “Extreme HD” on their boards, which essentially replaces the AHCI option, meaning you will not see any mention of AHCI in their BIOS… just Extreme HD (or xHD). If you enable Extreme HD, it means you’re either going for a RAID setup or you simply want AHCI enabled. So my question is… what is it about Gigabyte’s board that’s causing issues between boards? This isn’t a major issue, nor is it Gigabyte’s fault per se, as it only affects people who want to move their Windows install over to another machine, but since I’ve never experienced the issue before, I’m definitely a little stumped.

Any motherboard vendor has the option of using secondary HD controllers, but for the primary controller, they use the S-ATA controller in the chipset, so I’m ruling out the possibility that a secondary controller is causing the issue. Besides, I’ve restored images across many motherboards before without issue, which adds to the confusion. I’m in contact with Gigabyte regarding this, and I hope to hear back soon. If you have any idea what the problem could be, don’t hesitate to shoot off your ideas in our thread!

Pat Gelsinger Leaves Intel for EMC

Intel yesterday announced a few organizational changes, and one of the most notable is the loss of one of its top executives, Mr. Pat Gelsinger. Pat was the General Manager of the company’s Digital Enterprise Group, and after 30 years of breathing in microchips and chipsets, he has decided to try new things. He has become President and Chief Operating Officer of storage company EMC’s Information Infrastructure Products.

There is some speculation as to why Pat would decide to leave Intel after dedicating 30 years of his life to it, but as with the rumors about why Craig Barrett retired this past May, Gelsinger may not have liked the changes Intel was planning to make. Either way, the company has certainly lost one of its most notable executives and public speakers.

Pat joined Intel in 1979 and held numerous jobs throughout the years, including heading up Intel Labs and becoming the company’s first Chief Technology Officer. Prior to 1992, he became well-known as the general manager of the division responsible for the Intel 486, Intel DX2 and Pentium Pro. Do any of those names awaken any nostalgia?

Alongside his work at Intel, Pat holds six patents in the areas of computer architecture and communications, and has authored numerous technical publications, including “Programming the 80386” (a must-read by today’s standards, I’m sure!). As Pat gave some great keynotes at previous IDFs, I was looking forward to seeing him there next week, so this was quite the shake-up. Good luck in the new position, Pat!

Intel also announced today that Pat Gelsinger and Bruce Sewell have decided to leave the company to pursue other opportunities. Gelsinger co-managed DEG and Sewell served as Intel’s general counsel. Suzan Miller, currently deputy general counsel, will take the role of interim general counsel. “We thank Pat and Bruce for many years of service to Intel and wish them well in their future endeavors,” said Otellini.

Source: Intel Press Release

Is the Zune HD All it’s Cracked Up to Be?

Last week, I talked about Microsoft’s refusal to sell the Zune HD outside of the US, which seems odd, since it looks more than capable of delivering a compelling experience, thanks in part to NVIDIA’s Tegra SoC (system-on-a-chip). So why would Microsoft shun the rest of the world? At this point, it’s really hard to say, but according to Apple-reporting site AppleInsider, there are five main reasons the Zune HD is destined to fail, regardless of where it’s sold.

The site tears apart the Zune HD’s most redeeming features, starting with the OLED technology. While still in its infancy, OLED has real potential to become a killer display choice, but for now, it’s far too expensive to implement top-quality OLED displays in affordable products. According to AppleInsider and other sites, OLED on the Zune HD, as well as on other recent consumer products, is either too dim (200 cd/m² compared to ~400 cd/m² on desktop displays) or drains the battery too fast (the details are too technical to tackle here).

The next issue is that NVIDIA’s Tegra isn’t all it’s cracked up to be. To make a long story short, Apple used ARM processors built by PortalPlayer in iPods up to the fifth generation. Apple then dropped PortalPlayer and brought PA Semi on board. NVIDIA bought PortalPlayer in hopes that it could revamp the technology and get back into the iPod. It didn’t happen.

So essentially, deep down, Microsoft is using a CPU core in its Zune HD that was at one time pushed aside by Apple. And to make the odd connections even odder, the GPU core in Apple’s current iPods/iPhones is the PowerVR SGX… a derivative of the GPU used in the Sega Dreamcast (a console we also wrote about last week). So this, coupled with the lack of true HD capabilities, is apparently what’s going to hurt the Zune HD.

I haven’t had the time to do all the fact-checking, but what AppleInsider is reporting on looks to be quite well-researched. I’m still going to hold out for real reviews of the final product though, before forming my own conclusions. Thanks to Don for the heads-up on this news.

In contrast, the modern Cortex-A8 used in the iPhone 3GS, Palm Pre, Nokia N900, and Pandora game console represents the latest generation of ARM CPU cores. It also employs a DDR2 memory interface, erasing a serious performance bottleneck hobbling the Zune HD’s Tegra. It’s difficult to make fair and direct comparisons between different generations of technology, but NVIDIA’s own demonstrations of Tegra’s ARM11/integrated graphics show it achieving 35 fps in Quake III. The same software running on Pandora’s Cortex-A8 with SGX GPU core achieves 40-60 fps.

Source: AppleInsider

Is the Time Right for Apple to Produce Netbooks?

Over the past few years, there’s been a major shift in what people want or need in a computer, especially a mobile one. While 13″ or 14″ notebooks weighing 7 or 8lbs used to be okay, the advent of the netbook quickly made it clear that consumers had wanted such machines for a while. After all, as is so often reported, most people only want a notebook for Internet and e-mail use. You don’t need a Goliath of a notebook to get that done. Then there’s the battery-life issue…

The fact is, for most people who read our site, myself included, a netbook isn’t going to offer everything we’re looking for all of the time. But for others, or when a particular need arises, a netbook that’s compact, has long battery-life and enough speed to handle the Internet/e-mail thing, not to mention the price… it’s a win/win overall. Brooke Crothers at CNET had a recent change of heart regarding netbooks, thanks to their improved hardware, and he asks whether or not it’s time for Apple to finally take action.

Apple’s been rumored to come out with a tablet for quite some time, and while that product type isn’t exactly typical of the company, it’s easy to understand why it would consider one. After all, Macs are known for being the prime machine for graphic artists, so what better platform for a tablet? But a netbook is something entirely different. Apple’s well-known for charging a premium for its machines, and every single company that produces netbooks has the opposite goal… to sell as affordable a notebook as possible without sacrificing too many features.

As far as I’m concerned, though, Apple sells premium products, and it’s probably best that they remain as such. If they did release a netbook, it would without question be sold for more than typical netbooks, so what’s the point? It’s rare for a company that sells premium products to release something “low-end”, as it can damage their brand. You don’t see BMW releasing an affordable, smaller car. Ugh, bad example. Or Mercedes-Benz! Ugh, another bad example. And you certainly don’t see companies like Ferrari put their name on anything that will sell. Ugh, I give up. Maybe Apple should release a netbook.

People like cute, light, and cheap–especially in a laptop. This sentiment won’t be overcome, as Intel believes, by the emerging ultrathin laptop category, which ranges from about $500 to $1,000 (formerly called CULV or consumer ultra-low-voltage). Certainly not this year. Ultrathins are not different enough in appearance from a standard laptop and not cheap enough.

Source: nanotech: the circuits blog

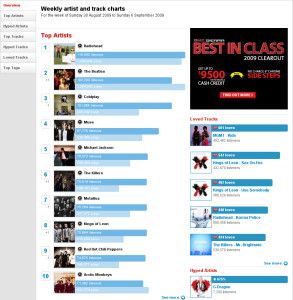

Last.fm to Launch HD Radio Stations in Top 4 US Markets

In today’s day and age, where the Internet can deliver everything from music to movies to games to news to virtually anything else you need, it seems unlikely that traditional radio will grow from this point forward. In fact, its audience is likely dwindling already. It’s easy to understand why. If you have an MP3 player with music or podcasts you enjoy, why bother tuning into a radio station and hoping it plays something you want to hear?

Over the past few years, content on some stations has gotten a bit better, and thanks to things like satellite and HD Radio, the audio quality has improved as well. But with the overall lack of HD receivers being sold, or HD Radio capabilities bundled with our media players (the Zune HD is one of the few to support HD Radio), adoption has been slow, despite fair support from various radio stations.

So it was with great interest that I read that CBS, the owner of social music site Last.fm, will be rolling out an HD Radio station based on the service in the US’ top 4 markets: New York (102.7 HD2), San Francisco (105.3 HD3), Chicago (93.1 HD3) and Los Angeles (93.1 HD2). If the stations prove to be a success, we could see an even greater rollout across other large cities.

What makes this move so interesting is that it’s the first time an online radio service has taken its programming to the real airwaves (the opposite has happened before). Plus, because the charts on Last.fm are driven by real usage from the service’s millions of users, essentially what’s most popular on the service will get played on the HD Radio station.

I think it’s probably safe to say that if your music tastes stray from what’s popular on the Last.fm charts, like mine do, the station isn’t likely to be for you. But if the first station proves successful, it’d be interesting to see if CBS launches follow-up stations based on the most popular genres on the site. You’d imagine such a move would have real potential.

It’s an interesting notion, to create one centralized station consisting of the top-rated and most popular stuff on Last.fm, because the whole idea behind web radio is that you don’t have to listen to what everyone else is listening to. On the other hand, Last.fm’s charts will surely do a better job of finding interesting music than the robots in charge of other radio stations will ever find.

Source: Wired

Taking Pictures from Space… On a Budget

For the sake of retaining good mental health, keeping your mind active with a good hobby is one of the best things you can do. Hobbies could range from, oh I dunno, playing with a paperclip, to taking pictures from space. One is far more expensive than the other, and seemingly out of reach for a lot of people. What if it wasn’t as expensive as you thought, though?

MIT students recently proved that when you’re strapped for cash, you’re more likely to explore possibilities you might not have otherwise considered. Believe it or not, these students managed to put together a system that cost them no more than $150, and one of the resulting images can be seen below. That’s right… for $150 (excluding a camera), they managed to take pictures from far above the earth.

Sure, that image might not look that high, but they did manage to go quite a bit higher, high enough to see both the edge of the earth’s atmosphere and the blackness of space. Images like these used to be possible only for space agencies such as NASA, but not anymore. The best part? The entire build requires little in the way of tweaking, and all of the parts are available off the shelf to regular consumers.

Though there’s a bit more information available at the URL below, the entire process requires a pre-paid cell phone with GPS capabilities, a camera that can be loaded with special software for automatic picture-taking, an 8GB SD card, an external antenna for increased signal, and of course, a balloon that can hold on for dear life as long as possible. To battle the cold, the students wrapped parts of the equipment in Coleman hand warmers. Where there’s a will, there’s a way, that’s for sure!

Two MIT students have successfully photographed the earth from space on a strikingly low budget of $148. Perhaps more significantly, they managed to accomplish this feat using components available off-the-shelf to the average layperson, opening the doors for a new generation of amateur space enthusiasts. The pair plan to launch again soon and hope that their achievements will inspire teachers and students to pursue similar endeavors.

Source: iReport & 1337arts, Via: Slashdot

EA’s Latest Marketing Techniques Stir up Controversy

Often used for good or evil, marketing can work in mysterious ways to help sell a product, regardless of what it is. In the case of marketing being used for evil, you might imagine that the target product wouldn’t sell at all. After all, who’s interested in purchasing a product surrounded by controversy? Duh… pretty much everyone. Take Grand Theft Auto: San Andreas. With all the controversy surrounding the “Hot Coffee” mod, copies of the game sold like hotcakes.

Now, if there’s one company that markets like there’s no tomorrow, it’s Electronic Arts. In fact, rumors have recently been floating around claiming the company spends 3x as much money to market a game as to develop it. It’s insane to think about, but at the same time, it’s believable. EA’s games are even advertised on TV… aside from generic Xbox or PlayStation commercials, how often do you see a specific game advertised?

With the company’s upcoming title, Dante’s Inferno, EA has gone to great lengths to market it, in some cases too far (depending on who you ask). Most recently, GameSpot and EA teamed up to offer a $6.66 discount on this hellish action game to those who pre-order it. Clearly, it’s attention EA is looking for.

As Ars Technica reports, the company has been marketing the game for a while in some of the most unimaginable ways. At one convention, for example, “Christian” protesters showed up to protest the game, holding signs that said, “EA = Electronic Anti-Christ”. Usually this would be bad publicity, but not here: EA actually hired these protesters. The hilarious thing? Bad publicity is good publicity, because we’re all talking about it, and that’s exactly what EA wants. Funny, huh?

EA has finally decided to simply send editors of prominent gaming sites checks for $200. The point? If the checks are cashed, the gaming press is greedy. If they’re not, the gaming press is wasteful. “By cashing this check you succumb to avarice by hoarding filthy lucre, but by not cashing it, you waste it, and thereby surrender to prodigality. Make your choice and suffer the consequence for your sin,” the included note stated. “And scoff not, for consequences are imminent.” The sin theme remains, if nothing else, on-topic.

Source: Ars Technica

Site Contests, What About ‘Em?

Here at Techgage, contests aren’t too common. The best reason I have for that boils down to time. Even simple contests require a fair bit of planning and time to implement, so it always comes down to whether time is better spent on our bread and butter (our content) or on holding a contest, which very rarely delivers a return on investment. But I was thinking about it a few weeks ago, and I think it would be a good idea to get a contest up every now and then as a way to give back to our loyal readers, and as a result, that’s what we’ll be doing.

As you can see at the top of the page, we’ve just unveiled a new contest where you can win a gaming PC from iBUYPOWER valued at $2,199, which features both Intel’s awesome Core i7-870 and its X25-M 80GB SSD. If you haven’t checked out our rules yet, what are you waiting for? The steps to enter are easy, and the entire process should only take a few minutes. And you know what? You might just win!

I have a couple of contest ideas in mind for the future, but I will say that few contests will be as grandiose as the one above. After all, it’s not all-too-often that we’re offered a robust gaming PC to give away! So, prizes from future contests will vary in their wow-factor, from modest to :-O!. The ultimate goal is to give you guys a chance to win some cool stuff, and have fun while doing it.

We already have another contest lined up for the beginning of October, and it’s also a good one. It’s not a gaming PC, but it does relate to gaming, and how you see gaming. No, it’s not a game, but it’s something to enhance the experience. That’s all I’m saying! So stay tuned, and if you haven’t entered our current contest yet… GET ON IT!

Source: Win an Intel Core i7-870 Gaming PC!
