
Tech News

We Have Server Issues, But Not for Long

Well, I should have seen this coming. It looks as though I’ve exhausted all of my bad luck at home, so now it’s passing on through to our servers. For the past week or two, I’d noticed some site slowdown, but I figured it was just my ISP, since my Internet connection had seemed a little slower lately. I found out yesterday that I was wrong, because when your server sends you an SMS (yes, the actual server) saying there’s a problem, you know there’s a problem.

After digging around, it turns out that the second drive in our RAID 1 array failed, and chances are that it failed weeks ago, since that’s about how long the site has been slow. Although I complain about the complications of RAID for personal use, it may well have saved the day for us: RAID 1 keeps an identical copy of everything on both drives, so the server kept running on the surviving disk. If we didn’t have a RAID 1 array and the main OS drive had died… well, I don’t need to go on.
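For the curious, here’s a rough idea of how a degraded mirror can be spotted automatically. This is a minimal Python sketch that assumes Linux software RAID (md), whose /proc/mdstat status file marks a missing or failed member with an underscore, as in [U_]. The post doesn’t say what RAID setup or alerting our server actually uses, so the sample text and the function name are hypothetical.

```python
import re

# Hypothetical /proc/mdstat contents for a two-disk RAID 1 that has lost one member.
# Assumption: Linux software RAID (md); the post doesn't name the RAID implementation.
SAMPLE_MDSTAT = """Personalities : [raid1]
md0 : active raid1 sda1[0]
      488383936 blocks [2/1] [U_]

unused devices: <none>
"""

def degraded_arrays(mdstat_text: str) -> list:
    """Return the names of md arrays whose member map contains '_' (a missing/failed disk)."""
    degraded = []
    current = None
    for line in mdstat_text.splitlines():
        header = re.match(r"^(md\d+)\s*:", line)
        if header:
            # Start of a new array block, e.g. "md0 : active raid1 ..."
            current = header.group(1)
            continue
        # Status looks like "[2/1] [U_]": configured/active disks, then U (up) or _ (down) per disk
        status = re.search(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]", line)
        if current and status and "_" in status.group(3):
            degraded.append(current)
    return degraded

print(degraded_arrays(SAMPLE_MDSTAT))  # ['md0']
```

On a real Linux box you’d feed it the contents of /proc/mdstat and hook a non-empty result up to whatever sends the alert (an SMS, in our case).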

Instead of hauling out the dead drive right now and going non-RAID, we’re just going to wait for the replacement drive, which should arrive before the end of the week. That way, we’ll go down once, not twice. As soon as we get the drive, we’ll install it that evening, and I’d expect downtime to be no longer than an hour. I probably won’t have much warning before this happens, but I’ll try to make the process as swift as possible. When the main site goes down, our forums will remain up as they’re on one of our other servers.

Oh, that picture? That’s just an example of what happens when someone messes with one of our servers! Well, not really, but it’s the best comparison image I had for a server going down.

Discuss: Comment Thread

How Music Companies Can Earn 150x More from Illegal Downloads

Regardless of whether or not you’ve ever pirated something yourself, or do so on a regular basis, very few people would deny that piracy is a real problem, and has been for a while. It’s such a large problem, in fact, that organizations such as the RIAA and MPAA cite it as the number one reason for lost profits. Of course, it’d have nothing to do with the fact that music is usually too expensive, or that they don’t exactly cater to their customers in any way. No, of course not… it’s all piracy.

But did you know that organizations such as the ones mentioned above, and other music and movie companies the world over, can earn more revenue from illegal downloaders than from customers who buy their music in a store or from a download store such as iTunes? According to a recent TorrentFreak report, that 150x estimate isn’t made up… that’s really how much more music companies can earn by, get this… requesting payment from the suspected downloaders.

Here’s how one such company, DigiRights Solutions (DRS), works. It offers these companies a service that monitors what I assume are just IP addresses across the region, keeping track of what’s being downloaded. If an illegal download is spotted (I assume it can tell by the filename and the protocol used), it automatically sends out an invoice of sorts to the suspected downloader. How DRS and similar companies can get people’s address information so easily, I’m not so sure, but it’s scary as heck to think about.

The kicker is this. Although companies such as DRS send out invoices requesting payment, the person receiving one is not legally required to pay. According to the company, however, upwards of 25% of people will just pay it. We’re talking about real money, too: DRS’s invoices run €450 per offense, of which €90 goes to the copyright holder. So essentially, music companies can make more money off pirates… by simply asking for it. It’ll be interesting to see whether this kind of practice is allowed to continue very far into the future. After all, although it’s not illegal, per se, I can’t see many people approving of sending random, unsubstantiated invoices to customers. If only it were that easy to make money for the rest of us…
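To put DRS’s numbers in perspective, here’s the arithmetic as a short Python sketch. It uses the figures TorrentFreak reports (a €450 invoice per alleged offense, 20% of which goes to the copyright holder) and treats the company’s “upwards of 25%” payment rate as a flat 25%, which is an assumption on my part. It doesn’t try to reproduce the 150x figure, since the per-download revenue from legal sales isn’t given here.

```python
# Figures as reported by TorrentFreak for DRS.
INVOICE_EUR = 450          # requested per alleged offense
RIGHTS_HOLDER_PCT = 20     # DRS keeps the other 80%
# Assumption: "upwards of 25%" of recipients pay, treated here as exactly 25%.
PAYMENT_RATE_PCT = 25

def rights_holder_share(invoice_eur: int, holder_pct: int) -> float:
    """Copyright holder's cut of one invoice that actually gets paid."""
    return invoice_eur * holder_pct / 100

def expected_per_invoice(invoice_eur: int, holder_pct: int, pay_pct: int) -> float:
    """Average copyright-holder revenue per invoice *sent*, given the payment rate."""
    return rights_holder_share(invoice_eur, holder_pct) * pay_pct / 100

share = rights_holder_share(INVOICE_EUR, RIGHTS_HOLDER_PCT)
expected = expected_per_invoice(INVOICE_EUR, RIGHTS_HOLDER_PCT, PAYMENT_RATE_PCT)
print(f"Per paid invoice: €{share:.2f}; per invoice sent: €{expected:.2f}")
# Per paid invoice: €90.00; per invoice sent: €22.50
```

Even averaged over the people who ignore the letter, that’s €22.50 per invoice sent, which is far more than a single legal track sale brings in.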

DRS says it generally sends out emails to alleged file-sharers requesting them to pay €450 (650$) per offense. According to the company they get to keep 80% of the money, leaving 20% for the copyright holders. The anti-piracy outfit claims it uses the money to cover their IT costs, administration costs, attorney fees and other costs. So, for every illegal download the copyright holder gets €90 (130$), and that is where the presentation turns into a marketing talk where the company explains how piracy can be turned into profit. They start by comparing the profitability of legal and pirated downloads.

Source: TorrentFreak

Discuss: Comment Thread

Intel’s Core i7 Custom Desktop Challenge (Calling All Modders!)

Intel has sent us details of a new contest it’s holding, and modders, this one’s for you!

When: Oct. 5 through Dec. 14, 2009

Where: Intel Core i7 Custom Desktop Challenge Contest: www.intelcorechallenge.com

What: Intel Corporation has begun a multi-country “Intel Core i7 Custom Desktop Challenge” for PC enthusiasts to build desktops powered by Intel Core i7 and Core i5 processors that envision the possibilities of tomorrow’s technology – from new gaming PCs to innovative platforms for home automation.

The contest is promoted in coordination with local Intel Channel Partner members, sponsors (CPU Magazine, Extreme Tech, PC Magazine) and other media publications and blogs.

Participants can choose to compete in two contest categories with final winners receiving prizes such as gift cards (up to US $1,500), Intel Processors, an Intel Atom processor-based netbook, a Flip HD pocket camcorder, 160GB Intel solid-state drives and more. The contest categories are:

- Mod Creativity: a mod PC desktop that showcases innovations in lighting, cooling, liquid immersion, cut case and creativity.

- Lifestyle Innovation: a futuristic home automation PC platform to improve one’s lifestyle through wireless media centers, lighting automation and security integration.

Submission and Contest Rules: Interested participants can submit entries from Oct. 5 through 11:59 p.m. PST on Nov. 16. Final submissions will be judged on five factors by a panel of judges made up of sponsors, Intel employees and industry experts.

Contestants will be awarded up to ten points per factor, for a total of 50 points. The five factors are:

- Overall mod creativity

- Mod paint job/creativity

- Technical enhancements

- Demonstration creativity

- Futuristic vision
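The scoring rules boil down to simple arithmetic, sketched below in Python. It assumes whole-number scores from 0 to 10 per factor; the rules don’t say how the panel combines individual judges’ scores, so this only totals a single judge’s sheet, and the example scores are made up for illustration.

```python
# The five judging factors from the contest rules; each is worth up to 10 points.
FACTORS = (
    "Overall mod creativity",
    "Mod paint job/creativity",
    "Technical enhancements",
    "Demonstration creativity",
    "Futuristic vision",
)
MAX_PER_FACTOR = 10

def total_score(scores: dict) -> int:
    """Sum one judge's scores, checking every factor is present and within 0..10 (assumed range)."""
    missing = [f for f in FACTORS if f not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")
    for factor in FACTORS:
        points = scores[factor]
        if not 0 <= points <= MAX_PER_FACTOR:
            raise ValueError(f"{factor!r} must be 0-{MAX_PER_FACTOR}, got {points}")
    return sum(scores[f] for f in FACTORS)

# Hypothetical scores for one entry, for illustration only.
example = dict(zip(FACTORS, (8, 7, 9, 6, 10)))
print(total_score(example), "out of", MAX_PER_FACTOR * len(FACTORS))  # 40 out of 50
```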

Final submissions will also be entered into the “People’s Choice” competition. From Nov. 23 through Dec. 7, entries will be displayed on the contest Web site (www.intelcorechallenge.com) where individuals around the world can vote for their favorite mod. In addition to the “People’s Choice” winner, 12 final winners will be selected for prize categories such as “Best in Show,” “Best Mod Creativity,” “Best Lifestyle Innovation,” “Best in Country” (one prize for each country) and “Best Video.” All 13 final winners will be notified on Dec. 14.

Who: The contest is open to anyone 18 years or older who wants to build a desktop that demonstrates a bold vision made possible by Intel’s newest, smartest and fastest processors. Participating countries are Belgium, Canada (except Quebec), Japan, Netherlands, Norway, Sweden, United Kingdom and the United States.

Discuss: Comment Thread

Are You Upgrading to Windows 7?

As I whined about in our news section a few weeks ago, the main hard drive in my machine recently died. Yes, the machine that I use day in and day out to get whatever work I need done, done. At the time, I had a dual-boot setup with Linux (using KDE 3.5) and Windows XP, but with that crash, I figured I might as well upgrade both OSes at the same time: move on up to KDE 4 on the Linux side and, with Windows 7 right around the corner, finally replace XP.

I’ve already talked at some length about my thoughts on KDE 4, but it wasn’t until this past weekend that I got around to installing Windows 7 on the machine. Before doing so, though, I already knew that I much preferred it over Vista, simply because we’ve been using it to some extent on our benchmarking machines for the past month. Compared to Vista, it seems far, far “smarter” about things, and I’ve run into fewer of the annoyances that plagued Vista.

The result of my install can be seen above (which I posted in our “Show off your desktop!” forum thread… feel free to add your own!). As you can tell, despite not using Windows as my primary OS, I still install a full gamut of applications, just in case. So far, my experience with 7 on my own machine has been quite good. Oddly enough, though, I’ve experienced quite a few BSODs up to this point (five in total), but all have happened while gaming, so I’m not quite sure what the deal is. I swapped out the GPU, and the issue seemed to go away, but last night, when I shut down to go back to Linux, sure enough… BSOD.

What makes it odd is that under Linux, I haven’t had a single issue. It’s only in Windows that the computer crashes. In Windows, I use the GPU often, and under Linux… not so much. So I can’t help but wonder whether there’s a problem with NVIDIA’s Windows 7 driver, or if I just happen to have the worst luck. If anyone out there has had a similar issue, please post in the related thread below and let us know!

Alright, I went a little off-topic there. My question to you all is this. With Windows 7’s launch happening next Thursday, how many of you are planning to make the upgrade? And for that matter, if you are upgrading, are you upgrading from XP or Vista? If you’re not upgrading, what’s the main reason for it?

Discuss: Comment Thread

TG Roundup: Cheap Gaming Notebooks, $99 Quad-Cores, Linux Lovin’

Whew… *wipes sweat from brow*. It’s been busy over here! Over the past week or so, we’ve published a variety of content that simply shouldn’t be missed, from a review of an affordable gaming notebook to a look at the industry’s very first sub-$100 quad-core processor.

Last Saturday, we posted an in-depth look at the latest gaming notebook from ASUS, the 15.4-inch G51Vx. What makes this notebook special isn’t just that it can handle pretty much any of today’s games at the display’s native resolution of 1366×768, but also that it looks good while doing it. The notebook features awesome styling and great performance, and costs only $999. What’s not to like?

Towards the middle of the week, Bill posted his look at SilverStone’s latest full-tower chassis, the Raven RV02. This chassis is a follow-up to the RV01 (no surprise, huh?), and does a lot to remedy complaints about the original. It features a unique design with a superb airflow scheme, is built incredibly well, and makes installation a breeze. Although the RV02 retails for close to $200, Bill was so impressed that, aside from a few minor issues, he considered it almost the perfect chassis.

Late last week, we somehow managed to post two Linux-related articles back-to-back, and both have been very well received, or… very popular, at least! Brett published another one of his fantastic editorials, this time taking a look at Ubuntu and the reasons why experienced Linux users tend to look down on it. Brett says there’s no need for that, and I agree. The following day, I posted an updated view of what I think about the KDE 4 desktop environment, and all I can say is… I really, really regret going so long before giving it another go. I’m still using it as I type this, and I’m loving it.

On Tuesday, I posted an article that may have caused many to ask, “What the?!”. It was a review of Gigabyte’s GeForce GTX 260 Super Overclock, and yes, it is a little weird to review such a card today (the GTX 260 is over a year old), but Gigabyte didn’t create a new model for nothing. Nope, the Super Overclock boasts an overclock that’s actually impressive, and that’s rare. It’s overclocked so high, in fact, that it beats out NVIDIA’s own GTX 275, which costs about $40 more than a typical GTX 260.

To wrap this week up in style, I posted an article taking a look at AMD’s latest quad-core processor, the Athlon II X4 620. Yes, you read “Athlon” there, but don’t balk… this is a $99 processor we’re talking about. That fact in itself is impressive, but the chip is also a great performer for the money, so it’s well worth a look if you want to build a cheap machine with a lot of raw horsepower. The lack of L3 cache hurts, but since we managed a stable overclock that added 35% to the stock clock speed, we’re not complaining too much!

We’re set for another busy week, and I can assure you that you won’t want to miss some of our upcoming content, so check back, and check back often! Also, don’t forget about our forums, which are a great place to hang out while you wait for us to publish fresh content!

Is NVIDIA Stopping Chipset Development?

One of the biggest tech rumors of 2009 has NVIDIA pulling out of the chipset market, and it’s not one that’s going away until it actually happens. The rumor is stronger than ever at this point, so much so that many don’t even consider it a rumor, but rather absolute fact. How on earth could NVIDIA fall to a point that would force it out of the chipset market? Simple: ask Intel.

Due to an ongoing lawsuit, NVIDIA currently can’t continue developing chipsets for Intel processors built around the QPI bus. It all boils down to the fact that the agreement the two companies signed doesn’t allow NVIDIA to create chipsets for future Intel processors. As a result, NVIDIA is currently limited to chipsets for LGA775 and earlier processors.

NVIDIA is dancing all around the issue, which isn’t helping its case. The company is open about the fact that it will cease development on chipsets for last-gen processors, but states that it’s fully committed to AMD processors and to the Intel processors it’s still allowed to develop for (from a forward-thinking standpoint, that seemingly means only Atom at this point). The problem with these arguments is that the AMD side of the market is much smaller than Intel’s right now, so what draw is there to further development? There isn’t.

From many different perspectives, NVIDIA is in a very, very difficult spot right now. There doesn’t seem to be a single market it competes in that hasn’t been shaken up against it, and on the chipset side, the company simply can’t develop for current processors even if it wanted to. Whatever your thoughts on NVIDIA as a company, one thing’s for certain… a lack of competition in any market is not a good thing.

Although the company’s ION has been well received for the most part, even its future is shrouded in doubt. Intel is aggressively developing on-die graphics, and Atom will one day be included in the fun. When that happens, ION is going to look even less attractive, because rather than having a separate CPU and GPU on a motherboard, Intel is going to pair the two together on the same chip. How’s a company without a CPU of its own supposed to compete with that?

However dire various media outlets make the situation out to be, I don’t think the writing’s on the wall for NVIDIA quite yet. Though I think the company pushes certain technologies a little more than it should, there’s huge potential in its other products, such as the Fermi architecture, from both a GPGPU and a gaming standpoint. Though I’m a little skeptical that its upcoming cards are going to wipe the floor with ATI’s latest releases, let’s hope for the sake of real competition that they do.

Discuss: Comment Thread

Building a Windmill to Power a Village

One of my favorite sayings is “Where there’s a will, there’s a way,” because it’s true. If you are truly stumped and can’t see a way out of a problem, there’s likely still a solution. If you have the will to get it done, then it will get done, in some form or another. If you have doubts, you need to learn about Kamkwamba, a young man from Malawi whose story says otherwise.

Kamkwamba was kicked out of school because his family couldn’t pay the required $80 in fees, but despite that, he kept on learning and did what he could to get by. His country was facing a terrible famine, and to do his part, he wanted to figure out a cost-effective (as in, costing nothing) way to generate power for his village. He learned about windmills at a library, and with that, he set out to build his own.

So he did… out of scraps he found in the area. People thought he was nuts, or a “witch”, but he went on to build a windmill that actually did end up powering a lightbulb. Today, there are five such windmills in his village, all built without him ever having seen a real one. He did finally see a real one not long ago, and I can only imagine the expression on his face when he saw how “proper” it was.

Today, Kamkwamba is attending an “elite” African school with the help of donations, and he looks to have a “bright” future (pun intended). In all seriousness though, stories like these prove that if you have a will, there will be a way. I couldn’t possibly imagine building a windmill from parts found around various junkyards, but as he proved, dedication can lead to some great things!

His story has turned him into a globetrotter. Former U.S. Vice President Al Gore, an avid advocate of green living, has applauded his work. Kamkwamba is invited to events worldwide to share his experience with entrepreneurs. During a recent trip to Palm Springs, California, he saw a real windmill for the first time — lofty and majestic — a far cry from the wobbly, wooden structures that spin in his backyard.

Source: CNN Eco Solutions

Discuss: Comment Thread

VMware Workstation 7 Brings Windows 7, OpenGL, DirectX 9.0c Support

I posted a news item yesterday about VMware’s upcoming Fusion 3 hypervisor software for Mac OS X, and one complaint I had was that while Mac users get such an excellent virtual machine option from the company, PC users get the short end of the stick, with one that’s just too pricey. That’d be hard to argue if the Mac OS X version cost the same, but it doesn’t. Rather, Fusion 3 can be had for $80, compared to $200 for Workstation, which is what PC users would need.

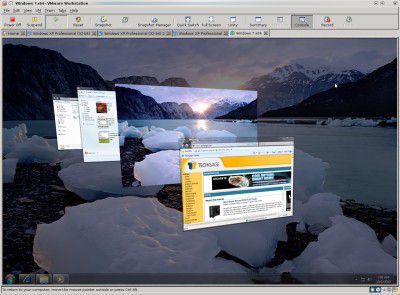

I checked in with the company to ask why Fusion 3 offers some sweet features and Workstation doesn’t, and I was quickly given a link to a blog post made by the company just the other day, announcing Workstation 7. Whoops… there’s one blog I should really add to my RSS feed! Sure enough, like Fusion 3, Workstation 7 will offer full Windows 7 support, along with support for DirectX 9.0c and OpenGL.

Still, even with Workstation 7, my earlier complaints can’t just disappear. Fusion 3 costs Mac users $80, while Workstation 7, upon release, is likely to retail for the same as the current release, at $200. What’s a PC user to do? You could say, “go to a competitor”, but that’s tough, since no one currently does virtualization as well as VMware does (in my experience), at least from the end-consumer perspective. Servers and real workstations may be a totally different story; I don’t know.

I followed up with the company to ask why desktop users don’t have a more affordable choice, and I hope to receive a response soon. On to the good stuff. What does Workstation 7 bring to the table? For Linux users, myself included, there’s better support for choosing an audio card, optical drive (ODD) and other devices, and to that I say, “yes!”. Without getting into details, I’ll just say that WS7 should be even easier to deal with for Linux users.

Other features include Windows 7 support, along with the ability to run Aero, support for new operating systems (Windows Server 2008, Debian 5, ESX), VMs with up to four cores and/or processors (limited to 4 threads per CPU), 256-bit AES encryption for your VMs, improved drag-and-drop support, more efficient VM pausing and more. In my quick tests, the 3D graphics support IS much better (most quirks I had before are gone), but I found performance overall to be worse. I am just using the beta, though, so I’m fairly confident the release version won’t have such issues. Let’s hope.

Today, VMware has posted the Workstation 7.0 Release Candidate. It is publically available for all of our customers and loyal fans to download and try out! New versions of VMware ACE and VMware Player are also available (Player has a new twist that we are very excited about!!!!) We can’t share our targeted release date (so please don’t ask) and we don’t have any pricing or upgrade information available yet, but we don’t anticipate a radical change from what we have today! But we will keep you posted.

Source: VMware Workstation Zealot Blog

Discuss: Comment Thread

Are $1,000 Processors a Waste of Money?

Over the course of the past few years, the processor landscape, among others, has changed drastically. Even just five years ago, people thought very little of paying $1,000 for a processor. Back in those days, we were stuck with single-core models that didn’t overclock to the same degree that today’s processors do. When you paid $1,000 for an “Extreme” edition processor back then, you actually did see a fair boost in overall performance.

The situation is much different today. The premium on a $1,000 CPU could still have made a lot of sense with the first dual-core processors, because once again, their overall performance didn’t come close to that of today’s processors, nor did they overclock quite as well as today’s chips. But since then, things have improved dramatically, from both AMD and Intel. Not only are the architectures as a whole much better, but we have affordable quad-cores and unbelievable overclocking ability.

So is it worth spending $1,000 on a processor in this day and age? In a newly posted video interview with AMD’s Pat Moorhead (ironically posted on Facebook by an Intel employee), the answer is no. According to Pat, today’s processors offer so much value that there just isn’t a need to spend $1,000 on a chip. He also notes that we’re reaching a point where the GPU is just as important as the CPU, although I personally think he’s getting a little ahead of himself at this point.

I’m all for GPGPU, but the selection of applications is slim, and if you are looking to take advantage of what Pat speaks about, you’re going to be forced towards certain applications. From a gaming perspective, though, he’s right… there just isn’t a major advantage to a faster CPU in most of today’s games. He notes that instead of spending $1,000 on a CPU, it would make more sense to spend $250, and then put the extra toward better GPUs, or toward the company’s latest Eyefinity technology.

My ultimate question is this. Would Pat’s attitude be the same if AMD had a product capable of competing directly with Intel’s Core i7s? If AMD had a top chip that could take on the i7-975, would it charge much less, or go back to how it used to be and sell its highest-end model for $1,000? What do you guys think?

Source: Pat Moorhead Interview (YouTube)

Discuss: Comment Thread

VMware Fusion 3 for Mac Due Later this Month

Whether you are a Mac OS X or Linux user, if you want to run Windows software on your machine, you have a few options. One of the more common is to dual-boot, so that at startup you can choose one OS or the other. Another option is to use a compatibility layer such as Wine or CrossOver Office, which lets Windows software run directly on your OS. Both of these methods work well, but personally, I can’t think of a better way than a virtual machine, given how robust the technology is today.

Although PC users have many virtualization options available to them, for the end consumer, none seem to compare feature-wise to VMware Fusion, which is exclusive to the Mac. VMware produces some of the best virtualization software available, and its Workstation application is what I use exclusively. But there’s something lacking… and everything Workstation lacks, VMware’s upcoming Fusion 3 for the Mac seems to fix.

Mac users who use Fusion 2 will definitely want to upgrade if they are using a relatively new Mac, because the feature set is undeniably sweet. First, there’s optimization for Snow Leopard, and native support for its 64-bit kernel. Then there’s what VMware calls the “Ultimate Windows 7 Experience”… that is, the ability to run Windows 7 with full GUI effects, such as Aero and Flip 3D.

The most notable feature, to me, is the “Best-in-Class 3D Graphics”, which brings full support for OpenGL 2.1 and DirectX 9.0c Shader Model 3. Going by VMware’s claims, Windows games may run at close to full speed, and if so, that’s going to be unbelievably impressive. After all, gaming is generally a weak spot of non-Windows OSes, so if this proves as reliable as the company suggests, then this is going to be one hot product.

Fusion 3 will become available later this month, but is available for pre-order now, for $79.99. Those who are using a previous version of Fusion can upgrade for $39.99.

“For more than 10 years, VMware virtualization has given users the choice of where to run their favorite applications. We’re excited about the rapid adoption of VMware Fusion in the Mac community since its introduction just over two years ago, making it the #1 choice to run Windows on a Mac,” said Jocelyn Goldfein, vice president and general manager, desktop business unit. “VMware Fusion 3 builds on our proven platform and makes it even easier for users to run Windows applications on the Mac.”

Source: VMware Press Release

Discuss: Comment Thread

Gentoo Linux Turns 10

In the fall of 1999, Daniel Robbins decided to create his own distro, one that focused on a source-based (rather than binary) packaging system, and was designed to be built from the ground up, on a per-user basis. This distro was first known as “Enoch Linux”, but due to complications with how the compiler fared with the package manager, “Gentoo Linux” didn’t show up until early 2002. Why the name change to “Gentoo”? Because the Gentoo is the fastest swimmer of all penguins. Likewise, Gentoo Linux aims to be the fastest distribution available.

Although Daniel is no longer in charge of the distro, and has little to do with it anymore, Gentoo Linux sticks to its roots and remains a source-based distro, offering users the ability to fine-tune their OS to their liking, not someone else’s. Just how configurable is it? To better explain, I’ll point you to a review of one of the Gentoo releases I posted just over three years ago, where I explain the basic perks.

So what am I getting at? Well, just a few days ago, on Sunday, Gentoo Linux turned 10, and since I’ve been a long-time user of the OS (more than three-and-a-half years as my full-time OS), I can’t help but get a little excited at knowing just how far the distro has come since its first version. Its development has been a roller-coaster ride, to say the least, especially over the course of the past few years, but as it stands today, I can confidently say that things have shaped back up, and development is more active than ever (just look at the upcoming version of Portage… it’s more than just a simple upgrade).

If you happen to be a Gentoo Linux fan and want to give praise, you can head over to the official 10th anniversary forum thread. If you want to check out a variety of screenshots that were submitted for a celebratory contest, you can go here. If you are unfamiliar with Linux, I highly recommend checking those out, since you can see the OS in a wide variety of configurations. For mine, look no further than the screenshot below (KDE 4.3.2 on Gentoo using the 2.6.31 kernel).

The Gentoo-Ten LiveDVD is available in two flavors, a hybrid x86/x86_64 version, and an x86_64-only version. The livedvd-x86-amd64-32ul-10.0 will work on x86 or x86_64. If your arch is x86, then boot with the default gentoo kernel. If your arch is amd64 boot with the gentoo64 kernel. This means you can boot a 64bit kernel and install a customized 64bit userland while using the provided 32bit userland. The livedvd-amd64-multilib-10.0 version is for x86_64 only.

Source: Gentoo Linux
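The LiveDVD guidance in the release notes amounts to a small decision table, sketched here in Python. The function name and the sets of architecture strings are my own assumptions; the image names and the gentoo/gentoo64 kernel labels come from the release notes, which don’t name a boot kernel for the multilib DVD, so the sketch returns None there.

```python
from typing import Optional, Tuple

# Image names as given in the Gentoo-Ten release notes.
HYBRID_DVD = "livedvd-x86-amd64-32ul-10.0"
MULTILIB_DVD = "livedvd-amd64-multilib-10.0"

# Assumed spellings of each architecture, as reported by e.g. platform.machine().
ARCH_64 = {"x86_64", "amd64"}
ARCH_32 = {"i386", "i486", "i586", "i686", "x86"}

def pick_livedvd(machine: str, prefer_multilib: bool = False) -> Tuple[str, Optional[str]]:
    """Return (image, boot kernel) for a CPU architecture string (hypothetical helper).

    The boot kernel is None for the multilib DVD, since the notes don't name one.
    """
    arch = machine.lower()
    if arch in ARCH_64:
        if prefer_multilib:
            return MULTILIB_DVD, None
        # Hybrid DVD on a 64-bit CPU: boot the 64-bit kernel.
        return HYBRID_DVD, "gentoo64"
    if arch in ARCH_32:
        # Hybrid DVD on a 32-bit CPU: boot the default kernel.
        return HYBRID_DVD, "gentoo"
    raise ValueError(f"Gentoo-Ten LiveDVDs are x86/x86_64 only, not {machine!r}")

print(pick_livedvd("x86_64"))  # ('livedvd-x86-amd64-32ul-10.0', 'gentoo64')
print(pick_livedvd("i686"))    # ('livedvd-x86-amd64-32ul-10.0', 'gentoo')
```

To check your own machine, pass in the result of Python’s platform.machine(); lowercasing the input takes care of Windows reporting “AMD64”.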

Discuss: Comment Thread


How to Get to Mars in 39 Days

Getting humans to Mars is something that’s on a lot of minds, especially those of astronauts and space scientists, but understandably, the hurdles in our way seem almost innumerable. The biggest issue is space radiation: cosmic rays from across our galaxy, plus the particles thrown off by the Sun closer to home. Although sending people to the Moon is an incredible feat, the risk of radiation harming the astronauts is relatively low, given how short the trip is.

Mars is another story. While the Moon sits about 384,000 km (roughly 240,000 miles) from Earth, Mars is never closer than about 55,000,000 km, and is usually much farther away. Using rockets like the ones that got us to the Moon, getting to Mars would take at least six months, which is a major issue where space radiation is concerned. Astronauts may be safe for a little while, but we’re talking six months… not a mere week.

But a Texas company, Ad Astra, hopes to have something that will help not only with reaching Mars, but with other space activities as well. Its new “VASIMR” engine, which uses plasma-based propulsion, promises performance that current chemical rockets can’t match. What would this mean for a Mars expedition? A 39-day trip, rather than six months. That’s a huge difference.

Of course, VASIMR has other uses as well. NASA has already signed up to use the technology by 2013 to aid with occasional repositioning of the International Space Station, and even missions to the Moon would be made much easier with VASIMR. Aside from the obvious performance improvements, VASIMR also uses far less fuel. While NASA uses 7.5 tonnes of fuel per year for the ISS boosters alone, VASIMR could cut that down to 0.3 tonnes. That’s insane!
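Here’s the back-of-the-envelope math behind those claims, as a short Python sketch. The 39-day and 7.5-versus-0.3-tonne figures come from the source; treating “six months” as 180 days and assuming radiation exposure simply scales with days spent in transit are simplifications on my part.

```python
# Assumptions: "six months" ~ 180 days, and radiation dose treated as simply
# proportional to days spent in transit (a deliberate simplification).
CONVENTIONAL_TRIP_DAYS = 180
VASIMR_TRIP_DAYS = 39
ISS_FUEL_KG_PER_YEAR_NOW = 7_500     # 7.5 tonnes, per the source
ISS_FUEL_KG_PER_YEAR_VASIMR = 300    # 0.3 tonnes, per the source

def percent_of(part: float, whole: float) -> float:
    """Express part as a percentage of whole."""
    return part * 100 / whole

exposure_pct = percent_of(VASIMR_TRIP_DAYS, CONVENTIONAL_TRIP_DAYS)
trip_speedup = CONVENTIONAL_TRIP_DAYS / VASIMR_TRIP_DAYS
fuel_ratio = ISS_FUEL_KG_PER_YEAR_NOW / ISS_FUEL_KG_PER_YEAR_VASIMR
fuel_saved_pct = percent_of(ISS_FUEL_KG_PER_YEAR_NOW - ISS_FUEL_KG_PER_YEAR_VASIMR,
                            ISS_FUEL_KG_PER_YEAR_NOW)

print(f"Transit time: {exposure_pct:.1f}% of a conventional trip ({trip_speedup:.1f}x shorter)")
print(f"ISS reboost fuel: {fuel_ratio:.0f}x less, a {fuel_saved_pct:.0f}% saving")
# Transit time: 21.7% of a conventional trip (4.6x shorter)
# ISS reboost fuel: 25x less, a 96% saving
```

In other words, under that simple model, a 39-day crew would soak up roughly a fifth of the radiation of a six-month crew.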

But Ad Astra has bigger plans for VASIMR, such as high-speed missions to Mars. A 10- to 20-megawatt VASIMR engine could propel human missions to Mars in just 39 days, whereas conventional rockets would take six months or more. The shorter the trip, the less time astronauts would be exposed to space radiation, which is a significant hurdle for Mars missions. VASIMR could also be adapted to handle the high payloads of robotic missions, though at slower speeds than lighter human missions.

Source: PhysOrg

Discuss: Comment Thread

Creating Images From Scratch with PhotoSketch

One of the coolest things to do on a PC is photo editing. You can do pretty much anything with a photo today, from putting yourself in space to shrinking your love handles, or simply altering the colors to make a photo look better. But if you lack the skills required to create cool images in applications such as Photoshop, it can be frustrating. That’s where a cool new technology called PhotoSketch comes into play.

The process works like this. You draw a sketch, just roughly, of what you want the scene to include. You can draw the divide between the land and sky, for example, and then draw outlines of objects, such as people. In one example, you could draw a line across the image and name it “river”, and then draw a bear and a fish, and name them appropriately. After PhotoSketch gets to work, it should provide you with an image that it has stitched together, showing the desired scene.

Although I’m sure the algorithm behind it is very complex, the concept is actually pretty simple. The application takes advantage of online image searches, matching your rough sketches, and the labels you gave them, against photos found around the web. If you draw a bear and label it “bear catch”, it will match the terms and hopefully find images of bears catching something. Another example would be drawing a dog and then a Frisbee.

Once you draw your sketch, the application will search the Internet for images that match each of your terms, and do what it takes to arrange them in the perspective you requested. It will deliver a handful of results, and you can pick whichever one best suits what you were looking for. Sadly, the site is down right now, so I haven’t been able to give the application a try, but when it is up, this should be the URL.

PhotoSketch’s blending algorithm analyzes each of these images, compares them with each other, and decides which are better for the blending process. It automatically traces and places them into a single photograph, matching the scene, and adding shadows. Of course, the results are less than perfect, but they are good enough.

| Source: Gizmodo |


Why You Can’t Get Good Earbuds for Under $100

You know what really grinds my gears? When someone claims that music is their life, but refuses to purchase a good pair of earbuds or headphones, and instead sticks with the free pair that came with their MP3 player. If you are truly passionate about music, and listen to it often, then it makes all the sense in the world to care just as much about the audio quality as the music itself.

I’m no audiophile, and I’ve made that clear in the past, but I do appreciate clean sound, and it’s for that reason that I believe quality equipment makes the music-listening experience a whole lot better. Upgrading to a worthwhile set of speakers, headphones or earbuds is pretty much all it takes to prove the difference they make. Music becomes more accurate, subtle tones become more noticeable and even the vocals become richer and more in-your-face.

That said, I’ve always hated earbuds and have found them entirely useless. I know I’m alone here, and I admit that I haven’t tried a truly high-end pair, but I’d like to soon after reading an article posted last week at Gizmodo. My problem with earbuds is this… their poor clarity and total lack of bass. As this article points out, though, higher-end earbuds do actually improve on the lower-end ones, as they feature more than one driver, allowing for finer, more accurate audio and far better bass.

Just how high can you go? Well, believe it or not, Shure offers a pair of $500 earbuds, called the SE530, while Ultimate Ears offers its UE-11 Pros for, wait for it… $1,150. That pair in particular offers a staggering four drivers in each ear, which is why the price is so high. It’d be an interesting (and needless) feat to have 10 drivers in a pair of headphones, but four in a bud so small is undeniably impressive. If you ask Shure and true audiophiles where the “sweet spot” for earbuds is, many will say that $100 is where the men begin to get separated from the boys. Under $100, the focus seems to be mostly on style rather than audio design.

The law of diminishing returns tends to kick in above that point: The difference between a $300 set of buds and a $400 pair is nowhere near the jump from $20 to $100. Even smaller is the difference in models between generations. The best value on the market might be a previous-gen version of Shure’s 500 series buds at a cut rate ($290), but if you can find $100 earbuds for 70 bucks, it’s even better.

| Source: Gizmodo |


Palm Pushing its Luck with iTunes Syncing

When Palm released their Pre smartphone, it brought forth many notable features. These included combined messaging, layered calendars, activity cards, a clean interface and more. One feature kind of stood out from the rest, though, and that was iTunes syncing capabilities. With the Pre’s launch, Palm became the first company to officially offer this ability. Why? Because syncing with iTunes isn’t exactly a simple task, and any mistake could result in lost data.

Plus, Apple simply doesn’t want anyone else offering iTunes syncing, and it’s strongly against it. The company proved that this summer when an iTunes update officially broke support for the Pre, and it was no mistake. That release was only the first of many in which Apple would deliberately break support again. It’s truly a cat-and-mouse game, and it’s probably annoying for both companies (and their customers) to deal with.

Does Palm have the right to offer iTunes syncing support without Apple’s permission? Is Apple creating a monopoly by barring support for non-Apple devices? It’s hard to say right now, but it should become a lot clearer in the months to come. Just a few weeks ago, Palm was slapped with a warning by the USB Implementers Forum (USB-IF) to cut it out. The problem isn’t that Palm offers iTunes sync support, but that it does so by mimicking Apple’s unique USB vendor ID.

Despite USB-IF’s warning, Palm released another update late last week that re-enabled support for the feature, so what happens from here on out is yet to be seen. To be a member of the USB-IF, you must abide by its rules, and using another vendor’s ID is strictly prohibited. The real question is what difference it would make for Palm if it weren’t part of the USB-IF, and also what difference it would make if it didn’t have its own unique ID. This should get interesting.

Now that Palm has “clarified its intent” with regard to this potential violation, I wonder how Apple and the USB-IF will respond. Do they have any recourse? The USB-IF could revoke Palm’s membership in the group, but what would that accomplish? Very little, as far as I can tell. Certainly, it wouldn’t prevent Palm from continuing to update its devices to synch with iTunes.

| Source: All Things Digital |


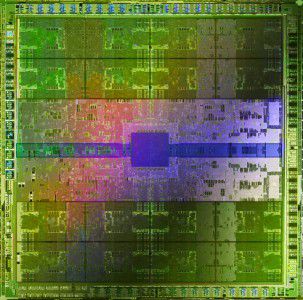

NVIDIA’s Fermi Goes Pro-GPGPU, Aims to be Faster than HD 5870

Earlier this week, NVIDIA unveiled its “Fermi” architecture, one with a massive focus on GPU computing. As far as GPGPU (general-purpose GPU) is concerned, NVIDIA has been at the forefront of pushing the technology. Others, such as ATI, haven’t been too focused on it until very recently, so in many regards, NVIDIA is a trailblazer. The adoption of GPGPU as a standard has been slow, but I for one am hoping to see it grow over time.

The reason I want to see GPGPU grow is this. We all have GPUs in our computers, and some of us run out and pick up the latest and greatest… cards that deliver some incredible gameplay performance. But how much of your time spent on a computer is actually for gaming? It’d be great to tap into the power of our GPUs for other uses, and to date, there are a few good examples of what can be done, such as video conversion/enhancement, and even password cracking.

No, I didn’t go off-topic, per se, because it’s NVIDIA that’s pushing for a lot more of this in the future, as Fermi has been designed from the ground up to be a killer architecture for both gaming and GPGPU. According to the press release, the architecture supports C, C++, Fortran, OpenCL, DirectCompute and a few others, adds ECC, offers 8x the double-precision computational power of previous-generation GPUs, and much more. Think this is all marketing mumbo jumbo? Given that one laboratory has already opted to build a supercomputer with Fermi, I’d have to say that there is real potential here for a GPGPU explosion. Well, as long as Fermi proves good for all-around computing, and not just for video conversion and Folding apps.

Since Fermi was announced, there have been some humorous happenings. Charlie over at Semi-Accurate couldn’t help but notice just how fake NVIDIA’s show-off card looked, and pointed out all the reasons why. And though NVIDIA e-mailed him to insist that it was indeed a real, working sample, Fudzilla has supposedly been told by an NVIDIA VP that it was actually a mock-up. At the same time, Fudzilla was also told by Drew Henry, GM of GeForce and ION, that launch G300 cards will be the fastest GPUs on the market in terms of gaming performance, even beating the newly launched HD 5870. Things are certainly going to get exciting if that proves true.

“It is completely clear that GPUs are now general purpose parallel computing processors with amazing graphics, and not just graphics chips anymore,” said Jen-Hsun Huang, co-founder and CEO of NVIDIA. “The Fermi architecture, the integrated tools, libraries and engines are the direct results of the insights we have gained from working with thousands of CUDA developers around the world. We will look back in the coming years and see that Fermi started the new GPU industry.”

| Source: NVIDIA Press Release |


Windows 7’s “XP Mode” Goes RTM

When Windows 7 launches later this month, the majority of users will adopt Home Premium, thanks in part to its robust feature set and also its price point, at $199 for a full retail copy. For some, though, the decision to upgrade to either Professional or Ultimate probably hinges on one thing: XP Mode. Somewhat similar to a virtual machine, XP Mode lets you either run a full-blown XP environment or run individual applications through XP Mode as if they were native to 7.

In the screenshot below, you can see an example of this. Internet Explorer 8 is running in Windows 7 as normal, but IE 7 is running through XP mode, and you can tell because of the window border. All in all, it’s a rather seamless experience by the looks of things. If you have a program that doesn’t work well under Windows 7 for whatever reason, then trying your hand at the XP mode might be your solution.

I have yet to play with the feature myself, primarily because I didn’t know how to install it on my Windows 7 Ultimate installation; I hadn’t realized that it was a separate download, and not simply something that came with the OS. You must download Virtual PC, Microsoft’s virtual machine platform, from the official website. From there, you select whether you have a 32-bit or 64-bit architecture, along with your desired language for XP Mode, and then download both the Virtual PC and XP Mode software.

Note that although XP Mode has just hit the RTM stage, only the RC is currently available; you can expect the website to offer the final version shortly. My question to you guys is this: if you are planning to upgrade to Windows 7, are you concerned that Home Premium lacks XP Mode, or will you splurge on a higher edition for this feature? Although Professional and Ultimate offer many features Home Premium doesn’t, I don’t think many of them cater to the typical user, so in that sense, XP Mode would carry quite the price premium.

For home users, the new feature is equally exciting, giving many their first taste of the benefits of virtualization. Not only can it help them save on software costs, by running older versions of Office software or other programs, it can also allow them to play games that would run within Windows XP, but had trouble running within Windows Vista. For fans of PC gaming, this is a welcome feature. The virtual machine also helps to protect computers from online attackers when running. Many attacks exploit virtualization features as a foothold to launch attacks from.

| Source: DailyTech |


Do I Have the Worst Luck with Hardware?

That’s the question I have to ask. I hate to rant in our news section, but sometimes, I can’t help it. I’ve posted in recent weeks about the complications of benchmarking, because as soon as you think something is going well, you might hit a huge roadblock that stops you right in your tracks. Some fixes are easier than others, but more often than not, I hit something that wastes a lot of time, and it’s beyond frustrating.

Two weeks ago, for example, I posted about some complications I was having with regard to testing the P55 motherboards we have on hand. In the end, the problem was my fault, and I felt like an idiot for overlooking something so simple. But when you are behind schedule, and trying to rush to get things done, your mind begins to short-circuit and you don’t make the wisest decisions or take the time to sit back and actually look at things as they are.

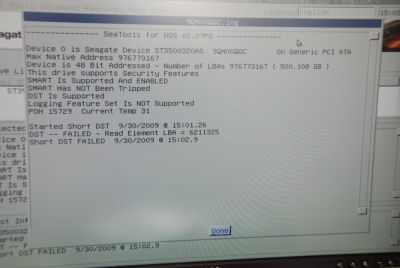

Earlier this week, I thought my problems were behind me. Things were going well, and for the first time in a while, I finally felt like I was on a roll, and that the rest of the week, and weeks to come, would be rich with content. Well, there’s clearly some mysterious force that doesn’t want me to ever get ahead, because that very evening, the hard drive in my main system crashed. For what it’s worth, it was a Seagate Barracuda 7200.11 500GB with a total power-on of 15,500 hours. The drive is under warranty, but that doesn’t do much to mend the frustration.

See, it’s funny. Although I religiously use a superb system backup program for our benchmarking machines, I’ve been lazy about backing up my own machine (both Linux and Windows) in case a problem like this ever arose. I didn’t lose any data, but since I use a rather complex Linux setup, I wasn’t looking forward to spending a whack of time on the installation process. Luckily, I got things up and running sooner than I thought, and my PC is now better than ever. I took the opportunity to give KDE 4 an honest go, and you can see the results of that in a newly-published article here.

Have you ever run into a computer problem and wanted so badly for it to be something minor, rather than major, that you troubleshoot for hours in hopes you’ll come out ahead? Well, that was the case with me here. I knew the hard drive had a problem, but then I thought… “What if it’s the S-ATA cable, or the port?” I swapped out the S-ATA cable and, oddly enough, had far better luck, but Seagate’s SeaTools still gave errors. I swapped in yet another S-ATA cable, and SeaTools passed its tests many times over. I thought maybe it was indeed the cable.

Of course, five or six hours after I began troubleshooting, I realized that the drive was a lost cause, and that I was only wasting more time instead of keeping the lost time to a minimum. I installed a replacement drive, installed my operating systems and as I type this, the machine is working great. It wasn’t the motherboard, or the cables… it was 100% the hard drive. Time to fill out that RMA form…


Can Amazon.com Become the Walmart of the Web?

When was the last time you went to Walmart to pick something up? Amazon.com? If you’re like me, the latter was more recent. I admit… I’m just not a fan of Walmart. It’s not so much the people who tend to shop there, but the fact that the aisles (at least here) are too narrow given how many people are in the store at any given time. I just don’t like being stressed out simply by walking through a store, and since Walmart is the worst for that, I do anything I can to avoid going there, regardless of the deals.

So when I need to purchase something, like a movie or audio CD, I tend to either go to another store, or if the price is right online, I’ll order it and wait patiently until it arrives. I’m sure I’m not alone in preferring to shop online rather than in a store, because the convenience factor is unparalleled. There are some things I just won’t purchase online, such as food or clothing, but for electronics or most other items, I wouldn’t think twice.

When Amazon.com launched in 1995, it sold books. That’s it. Over time, the service has evolved to sell pretty well anything else, and according to the New York Times, within the next year general merchandise will for the first time outsell books in units, even though books are still what many people use the service for (I myself have bought books through Amazon.com in the past). The big question is, with Amazon.com’s rapid growth, is it possible that the company could become the “Walmart of the Web”, or even better… overtake Walmart in sales?

The latter is an almost laughable thought, but I wouldn’t discredit the notion so fast. After all, no one said that such a thing would happen next year, or even in the next ten years. But with online shopping growing at an unbelievable rate, it may be mail couriers who really stand to benefit. Once Amazon offers color accuracy on any monitor and the ability to smell and feel the products it sells (hey, it could happen), Walmart should really be wary.

Credit: Jim Wilson (New York Times)

“Amazon has gone from ‘that bookstore’ in people’s mind to a general online retailer, and that is a great place to be,” said Scot Wingo, chief executive of ChannelAdvisor, an eBay-backed company that helps stores like Wal-Mart and J.C. Penney sell online. Mr. Wingo envisions e-commerce growing to 15 percent of overall retail in the next decade from around 7 percent. “If Amazon grows their market share throughout that period, and honestly I don’t see anything stopping it, that is pretty scary,” he said.

| Source: New York Times |


Can Phoenix’s HyperSpace Become the Ultimate Instant-On OS?

At last week’s Intel Developer Forum, I had the opportunity to chat with Phoenix (the BIOS maker) about both its “Instant-On” technology and its quick-boot HyperSpace OS. Since I’ve had a keen interest in competing technologies for a while, such as DeviceVM’s SplashTop, I couldn’t help but want to take HyperSpace for a spin. Is it better? From the short time I had to spend with it, yes.

To be fair to DeviceVM, though, it’s been quite a while since I’ve been able to take SplashTop for a spin (at least a year), but there’s one thing Phoenix has that everyone else will have a hard time competing with… the development rights to the BIOS. Because Phoenix produces both this software and the system BIOS, its solution has the potential to be much faster than anything else out there. The company assured me, though, that competitors also have access to key BIOS features, so as to avoid a monopoly.

The other benefit of HyperSpace is that it’s not exclusive to one notebook, because you are able to download the program yourself (it carries a fee past the trial), and then install it to your notebook to see if it works. If it does, you’ll be able to access the HyperSpace desktop just as you would on a notebook that came with the software pre-installed. As for functionality… you won’t have to worry there. Currently, there is support for light gaming (think Web games), the Internet, video and image viewing, DVD playback and even the ability to edit office documents. It’s definitely the most robust instant-on OS I’ve seen to date.

HyperSpace comes in two editions… Dual and Hybrid. Hybrid requires Intel VT, as HyperSpace needs virtualization access to the CPU. What makes Hybrid cool, though, is that you can switch between Windows and HyperSpace very quickly. If you are in Windows, you simply double-click the icon on the desktop, wait about 8 seconds, and you’ll be in HyperSpace, where battery life is far improved (so I’m told). To get back, you click the Windows icon, and 3 – 4 seconds later, you’ll be right back at your Windows desktop. With the Dual edition, you can only run one OS at a time.

I’m currently working towards getting a notebook equipped with HyperSpace in, because I’m really interested in seeing just how it compares to SplashTop as a whole, and what benefits it offers. If you’ve ever scoffed at the idea of an instant-on OS, HyperSpace may just change your mind.

HyperSpace lets you access the Web without having to wait for Windows to boot up. Power your computer and instantly surf the Internet, check e-mail or watch the YouTube video of the day. And when you want to switch off, just close the lid and off you go. But HyperSpace is much more than just instant-on. Perform your Web-based activities in HyperSpace to preserve precious battery life. You can use your notebook up to 30% longer if you compute in the HyperSpace section and use Windows only when absolutely necessary.

| Source: Phoenix HyperSpace |


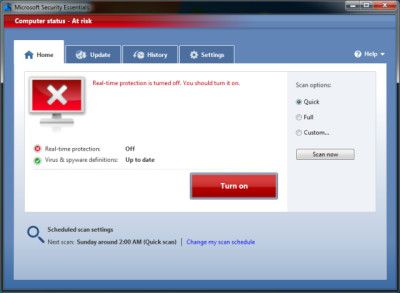

Microsoft’s “Security Essentials” is Now Available

Microsoft’s long-awaited security suite is here, and believe it or not, the public response so far has been not just good, but great. For those who might remember “OneCare”, Microsoft Security Essentials (MSE) fixes what was wrong with that product, tosses in some new features and, best of all… is free. Don’t rush to download if you don’t have a legit copy of Windows, though… a prerequisite of the install is passing WGA screening.

Security Essentials is formally classified as being an Anti-Virus application, although like Windows Defender (which MSE replaces), it’s also a tool to protect against spyware, rootkits and other malware. Like most other security tools on the market, MSE is picky about what other software is installed on the PC, so if you are already using an anti-virus or malware protection application, especially a commercial one, you’re likely to want to stick with it.

With the name “Security Essentials”, it looks like Microsoft is planning to use the word “Essentials” in more places than one. But despite its push for integration, the company has not included a link to download the suite from within Windows 7. As Ars Technica pointed out, this is most likely due to potential antitrust issues, which would surely come from companies like Symantec and McAfee. If the browser makers can complain, surely these security companies could too.

There’s a lot more information about MSE at the Ars Technica link below, but the overall opinion is that the suite is well worth the download if you aren’t currently protected by any anti-virus or anti-malware software. Microsoft claims that between 50 – 60% of Windows users aren’t, so there’s a clear need for word to get around. MSE isn’t the first free security option, but it’s likely one of the better ones, partly thanks to its lack of “Buy the Premium version!” nag screens.

In fact, the same engine is used for all of the company’s security products, including MSE, Forefront, and the Microsoft Malicious Software Removal Tool. Engine updates for MSE and Forefront are delivered at the same time, while signature updates, on the other hand, can be delivered at different times and frequencies compared to Microsoft’s other security software. Microsoft expects MSE to significantly improve Forefront, since the data the company gets from additional users will help improve both products.

| Source: Ars Technica |


Dell Intros “Wireless Charging” Latitude Z Notebook

When I purchased a notebook from Dell about five years ago, I was quite impressed with what I got. Today, I realize just how ridiculously expensive it was compared to today’s notebooks (it felt mid-range, but cost $3,300), but I remember wondering at the time, “What can they possibly do to make notebooks even better?” Aside from the obvious (battery life and performance), I wondered what features could be tacked on to help keep things fresh.

Back then, “wireless power” didn’t come to mind, although I would have been really excited to even just ponder the idea. But today, that’s a reality – kind of – from Dell, with their Latitude Z business notebook. I say “kind of” because to me, it’s not truly wireless power, and I’m not sure who could consider it as such. Wireless to me assumes some leeway about how far you could get from the source, and in the case here, we’re talking millimeters, not meters.

Like many other notebooks, this one comes with a dock. What’s different here is that rather than sliding the notebook into a connector or plugging in a cord, you simply set the notebook down and it does its thing. Technically, it is wireless, because there are no cables running from the notebook to the dock, but the notebook has to physically sit on the dock, so it’s hard to tell the difference. This isn’t exactly a cheap option, either. It’s yours for $199, and as such, it’s clearly targeted at business users (fair enough given it’s a business-class notebook).

How useful this feature is really depends on you. One additional feature I really like, though, is Dell’s version of an “instant-on” environment. Inside the notebook is a secondary (but much smaller) motherboard complete with an ARM processor. When booting up, users can boot into this non-Windows OS for basic tasks, such as e-mail, Web browsing and handling contacts and calendars. Why should you care? In this mode, you can get upwards of two days’ worth of battery life, compared to 4 hours in a Windows environment. It’s numbers like these that may finally give the “instant-on” OS the push it needs to hit mainstream popularity.

Credit: Erica Ogg

What Dell, and DeviceVM, and Phoenix, and plenty of others are doing is part of a trend that’s gaining steam: doing a sort of end-run around Windows. HP came out with its own interface on Touchsmart PCs last year that allows for quick sorting between photos, e-mail, and Web browsing on a few models. Lenovo recently introduced a new touch-screen interface for its tablet, and Asus has its own for its popular Eee PC Netbooks and touch-screen desktop called TouchGate.

| Source: CNET |


How to Get Ripped-Off By Dell and Mesh

Mention the words “customer service” to pretty much anyone on the street, and you can be sure that they’ll have a story, or horror story, to tell. I haven’t personally experienced any major issues with customer service in the past, but since I did at one point own a Dell notebook, I did get a taste of how frustrating it can be at times. Dell in particular outsources most of its customer service to India, and while I’m all for people having a job, customers really shouldn’t be expected to deal with someone whose native language is clearly not English.

UK publication PC Pro decided to see how things stand today with a couple of major PC sellers, Dell and Mesh, and in particular, see how much they could be ripped off. They posed as a regular consumer… one who isn’t that well-versed in technology, to see if they could purchase a PC that was best-suited for them. In the end, both companies tried to upsell this faux consumer, while making some outrageous claims at the same time.

One Dell employee, for example, stated that a better graphics card was required to download photos. When the consumer said that his maximum spending limit was £599, the machine the representative came up with was, of course, £599. When another rep was asked about the number of photos that could be stored on a 250GB hard drive, the response was, “In other words is it [sic] around 10 lakh. 1000*250.” Don’t worry… I didn’t know that lakh meant “one hundred thousand” in the Indian numbering system either.
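For the curious, the rep’s answer doesn’t even add up on its own terms. The sketch below checks it; the helper names are ours, and the 2.5 MB average photo size is purely our own illustrative assumption, not a figure from PC Pro or Dell.

```python
# Sanity-checking the Dell rep's "10 lakh. 1000*250" answer.
LAKH = 100_000  # 1 lakh = 100,000 in the Indian numbering system


def to_lakh(n: float) -> float:
    """Convert a plain count into lakhs."""
    return n / LAKH


def photos_that_fit(capacity_gb: float, avg_photo_mb: float) -> int:
    """Rough photo capacity, using decimal units (1 GB = 1,000 MB)."""
    return int(capacity_gb * 1000 // avg_photo_mb)


reps_math = 1000 * 250  # the rep's own multiplication
print(f"1000*250 = {reps_math:,} = {to_lakh(reps_math)} lakh")
print(f"10 lakh = {10 * LAKH:,}")
# Assumption: roughly 2.5 MB per JPEG from a typical consumer camera.
print(f"250GB at 2.5MB/photo = about {photos_that_fit(250, 2.5):,} photos")
```

In other words, the rep’s own multiplication gives 2.5 lakh (250,000), not 10 lakh (1,000,000), and the real answer depends entirely on how big each photo is.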

Mesh as a whole was a lot better in giving advice, but they still tried to upsell. In truth, while Dell and Mesh may try to upsell whatever you’re looking to buy, it’s hardly only these two companies that are guilty of this. It’s unfortunate, because most people aren’t even going to realize that they are being ripped off by buying into a bigger computer than they actually need. It pays to do your research… if only more consumers realized this.

Hold on sunshine. What about these cheap netbook devices I’ve seen Dell advertise in my daily newspaper? Are these not good enough? “They are netbooks and not laptops,” he snapped back. What’s the difference? “The netbooks comes [sic] with a slower processor, lesser memory, lesser hard drive, no optical drive and it would not be possible to have any software loaded on this netbook,” he stated, once again playing hard and fast with the truth.

| Source: PC Pro |


Apple’s App Store Hits 2 Billion Downloads

When Apple first launched its “App Store”, I have to wonder if it expected the store to take off so quickly. Sure, the company has sold millions of iPhones and iPod touches, but if you recall, this past April the App Store hit a staggering 1,000,000,000 (one billion!) downloads in just nine months. At that rate, downloads averaged close to 4 million per day… every day. Whew, it kind of boggles the mind, huh?

Well, if you think that’s impressive, then the announcement the company made yesterday is even more so. Its App Store just hit the 2 billion mark, which shows that the growth in popularity of these apps isn’t slowing down at all… in fact, it’s accelerating. While it took nine months to reach the first billion, it took only five for the second. Will we see 3 billion in three months… ish? Given that half a billion were downloaded in this quarter alone… chances are, it could happen.
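Those per-day figures are easy to double-check. This quick sketch assumes an average calendar month of 365.25 / 12 days; the billion-download milestones and the nine- and five-month spans come straight from the post, and the helper name is ours.

```python
# Average App Store downloads per day for each billion-download milestone.
AVG_DAYS_PER_MONTH = 365.25 / 12  # assumption: average calendar month


def downloads_per_day(downloads: float, months: float) -> float:
    """Average daily downloads over a span measured in months."""
    return downloads / (months * AVG_DAYS_PER_MONTH)


first_billion = downloads_per_day(1e9, 9)   # launch to April
second_billion = downloads_per_day(1e9, 5)  # April to now

print(f"First billion:  {first_billion / 1e6:.1f} million/day")
print(f"Second billion: {second_billion / 1e6:.1f} million/day")
print(f"Daily rate grew {second_billion / first_billion:.1f}x")
```

That works out to roughly 3.7 million downloads a day for the first billion and about 6.6 million a day for the second, so the daily rate grew by about 1.8x.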

Not only has the number of apps downloaded per day increased, but so has the total number of apps available. Back in April when the first billion was hit, the store had around 35,000 apps to choose from. Currently, there are over 85,000. Looked at this way, the number of apps is actually growing faster than the number of downloads. This means that while more downloads are taking place, each developer might actually be making less money on average due to the increased competition.
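The comparison above can be sketched the same way. Dividing cumulative downloads evenly across all apps is only a crude proxy we chose for illustration (real downloads are far from evenly spread, and free apps earn nothing per download), but it shows the trend the paragraph describes. The April and current figures are the post’s; the helper name is ours.

```python
# Compare growth in the App Store's catalogue vs. its cumulative downloads,
# using the April and current figures quoted above.


def growth_factor(before: float, after: float) -> float:
    """How many times larger `after` is than `before`."""
    return after / before


APPS_APRIL, APPS_NOW = 35_000, 85_000
DOWNLOADS_APRIL, DOWNLOADS_NOW = 1e9, 2e9

apps_growth = growth_factor(APPS_APRIL, APPS_NOW)
downloads_growth = growth_factor(DOWNLOADS_APRIL, DOWNLOADS_NOW)

# Crude proxy: cumulative downloads divided evenly across all apps.
per_app_april = DOWNLOADS_APRIL / APPS_APRIL
per_app_now = DOWNLOADS_NOW / APPS_NOW

print(f"Apps grew {apps_growth:.2f}x, downloads grew {downloads_growth:.2f}x")
print(f"Downloads per app: {per_app_april:,.0f} -> {per_app_now:,.0f}")
```

The catalogue grew about 2.43x while downloads only doubled, so the average number of downloads per app fell from roughly 28,571 to 23,529.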

Apple today announced that more than two billion apps have been downloaded from its revolutionary App Store, the largest applications store in the world. There are now more than 85,000 apps available to the more than 50 million iPhone and iPod touch customers worldwide and over 125,000 developers in Apple’s iPhone Developer Program. “The rate of App Store downloads continues to accelerate with users downloading a staggering two billion apps in just over a year, including more than half a billion apps this quarter alone,”

| Source: Apple Press Release |


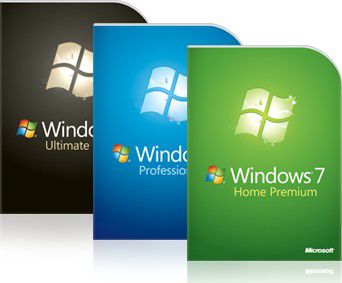

Windows 7 OEM Pricing Revealed

Over the course of the past few months, we’ve learned pretty much all there is to know about Windows 7 pricing, from upgrade costs to full retail costs to Anytime Upgrade costs. But there was one crucial piece of the puzzle missing: OEM pricing. Thanks to Newegg, though, and the catch made by Computerworld, even that is now revealed, so now is the time to decide which edition you’re going to pre-order or pick up at launch.

For convenience, we’ve thrown together a table that can help you find the exact pricing for all the editions quickly. Note that we haven’t included Windows 7 Starter or Home Basic, as those editions are unlikely to ever be sold to regular consumers, so their pricing isn’t known. What’s interesting about OEM pricing, though (and this may well have been the case with previous versions), is that the OEM price is actually lower than the price of an upgrade copy.

That’s odd because OEM editions are essentially a full-blown OS, just like the full retail copies. The difference is that OEM copies are intended for system builders, and as a result, each copy is only meant to be used on one PC, ever. If you purchase an OEM copy and your PC dies, you’re not supposed to use it on another machine. You likely could after giving Microsoft a call, but whether that’s right or wrong is for you to decide.

| Type | Home Premium | Professional | Ultimate |
|---|---|---|---|
| Upgrade | $119.99 | $199.99 | $219.99 |
| Anytime Upgrade | $79.99 (from Starter) | $89.99 (from Home Premium) | $139.99 (from Home Premium) |
| Retail | $199.99 | $299.99 | $319.99 |
| OEM | $109.99 | $139.99 | $189.99 |
| OEM 3-Pack | $309.99 | N/A | $549.99 |
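To make the OEM comparison concrete, here’s a small sketch that works out the savings straight from the table’s prices. It uses Python’s `Decimal` type to avoid floating-point rounding on currency; the helper names are ours, and the per-copy 3-Pack figure is simply the pack price divided by three.

```python
from decimal import Decimal
from typing import Optional

# Windows 7 prices from the table above (USD). None marks a SKU listed as N/A.
PRICES: dict[str, dict[str, Optional[Decimal]]] = {
    "Home Premium": {"Upgrade": Decimal("119.99"), "Retail": Decimal("199.99"),
                     "OEM": Decimal("109.99"), "OEM 3-Pack": Decimal("309.99")},
    "Professional": {"Upgrade": Decimal("199.99"), "Retail": Decimal("299.99"),
                     "OEM": Decimal("139.99"), "OEM 3-Pack": None},
    "Ultimate": {"Upgrade": Decimal("219.99"), "Retail": Decimal("319.99"),
                 "OEM": Decimal("189.99"), "OEM 3-Pack": Decimal("549.99")},
}
CENT = Decimal("0.01")


def oem_savings(edition: str) -> tuple[Decimal, Decimal]:
    """Return (savings vs. Upgrade, savings vs. Retail) for an OEM copy."""
    p = PRICES[edition]
    return p["Upgrade"] - p["OEM"], p["Retail"] - p["OEM"]


def three_pack_per_copy(edition: str) -> Optional[Decimal]:
    """Per-copy price of the OEM 3-Pack, rounded to the cent, or None."""
    pack = PRICES[edition]["OEM 3-Pack"]
    return None if pack is None else (pack / 3).quantize(CENT)


for edition in PRICES:
    vs_upgrade, vs_retail = oem_savings(edition)
    line = f"{edition}: OEM is ${vs_upgrade} under Upgrade, ${vs_retail} under Retail"
    per_copy = three_pack_per_copy(edition)
    if per_copy is not None:
        line += f"; 3-Pack = ${per_copy}/copy"
    print(line)
```

Every edition’s OEM copy comes in under its upgrade price: by $10 for Home Premium, $60 for Professional and $30 for Ultimate.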

So there you have it. Hopefully by now you have a good idea of which edition you want or need. If you are unsure whether you should pick up Home Premium, Professional or Ultimate, a good place to start is at this Wikipedia article, as it pretty much covers every possible angle. If you don’t feel like doing the research, just pick up Home Premium. It includes most everything anyone will need, with the possible exception of Windows XP Mode, which you can read more about here.
