Maxon Redshift 3.5 GPU & CPU Rendering Performance

It’s been some time since we’ve taken a detailed look at rendering performance in Maxon’s Redshift, so this article will get us up to speed. While Redshift is soon to gain support for AMD’s Radeon GPUs, it’s not quite here yet – so for now, we’ll take a look at rendering five different projects with NVIDIA’s current-gen GeForce stack.

It’s been almost exactly two years since we last posted a dedicated look at rendering performance in Maxon’s Redshift, so let’s get up to speed, shall we?

In the grand scheme of things, two years doesn’t feel like too long a time, but in tech, the opposite can feel true. Our previous dedicated Redshift article revolved around NVIDIA’s previous-generation Turing GeForces – none of the newer Ampere ones. In that same time frame, Redshift added support for rendering on modern Apple computers, as well as CPU rendering in the Windows version.

One of the biggest reasons we wanted to revisit Redshift in a more detailed way right now is that support for rendering to AMD Radeon GPUs in Windows is being added. Unfortunately, our perceived timeline on availability was off, so we can’t yet post any numbers relating to Team Red.

As we recently mentioned in our news section, Maxon invites Radeon users who are eager to test Redshift to reach out and request closed alpha access. We’ve since gained access to this alpha, and learned that the first rule of Redshift alpha is you do not talk about Redshift alpha. We’ll talk more about Radeon in Redshift when the public plugin drops.

As mentioned above, our previous dedicated Redshift look involved NVIDIA’s Turing-based GeForces, while here, the focus will be on Ampere. That’s not the only change, however. We’ve also migrated our root software from 3ds Max to Cinema 4D, which allowed us to fetch some publicly available projects that are well worth benchmarking. One is even found inside of C4D’s own asset manager.

NVIDIA GPU Lineup & Our Test Methodologies

Since our Redshift GPU rendering testing remains tethered to NVIDIA, let’s take a quick look at the company’s current-gen lineup:

| NVIDIA’s GeForce Gaming & Creator GPU Lineup | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | SRP |
|---|---|---|---|---|---|---|---|
| RTX 3090 Ti | 10,752 | 1,860 | 40 TFLOPS | 24GB ¹ | 1008 GB/s | 450W | $1,999 |
| RTX 3090 | 10,496 | 1,700 | 35.6 TFLOPS | 24GB ¹ | 936 GB/s | 350W | $1,499 |
| RTX 3080 Ti | 10,240 | 1,670 | 34.1 TFLOPS | 12GB ¹ | 912 GB/s | 350W | $1,199 |
| RTX 3080 | 8,704 | 1,710 | 29.7 TFLOPS | 10GB ¹ | 760 GB/s | 320W | $699 |
| RTX 3070 Ti | 6,144 | 1,770 | 21.7 TFLOPS | 8GB ¹ | 608 GB/s | 290W | $599 |
| RTX 3070 | 5,888 | 1,730 | 20.4 TFLOPS | 8GB ² | 448 GB/s | 220W | $499 |
| RTX 3060 Ti | 4,864 | 1,670 | 16.2 TFLOPS | 8GB ² | 448 GB/s | 200W | $399 |
| RTX 3060 | 3,584 | 1,780 | 12.7 TFLOPS | 12GB ² | 360 GB/s | 170W | $329 |
| RTX 3050 | 2,560 | 1,780 | 9.0 TFLOPS | 8GB ² | 224 GB/s | 130W | $249 |

Notes: ¹ GDDR6X; ² GDDR6. RTX 3000 = Ampere.

All of these GPUs will be used for testing here, aside from the RTX 3090 Ti. Even without it, though, it won’t be difficult to understand where it’d fall into place. Of NVIDIA’s nine Ampere options, our choice of best “bang-for-the-buck” hasn’t changed since its release: GeForce RTX 3070.

We can’t call $500 “cheap”, but for the level of performance offered, the RTX 3070 is a great value – on paper, it offers half the peak FP32 throughput of an RTX 3090 Ti for one quarter of the price. If you’re planning to create more complex projects, you would be doing yourself a favor by going for a higher-end option. If there were such a thing as a best bang-for-the-buck at the top-end, that’d have to be the 3080 Ti, which includes a 12GB frame buffer.
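To make the “half the performance for a quarter of the price” comparison concrete, here is a minimal Python sketch that works from the Peak FP32 and SRP columns of the lineup table. The function names are our own for illustration, and peak FP32 is only a paper spec, not a measured render time, so treat the output as a rough guide rather than a benchmark result.

```python
# Peak FP32 (TFLOPS) and SRP (USD), copied from the lineup table.
GPUS = {
    "RTX 3090 Ti": (40.0, 1999),
    "RTX 3090": (35.6, 1499),
    "RTX 3080 Ti": (34.1, 1199),
    "RTX 3080": (29.7, 699),
    "RTX 3070 Ti": (21.7, 599),
    "RTX 3070": (20.4, 499),
    "RTX 3060 Ti": (16.2, 399),
    "RTX 3060": (12.7, 329),
    "RTX 3050": (9.0, 249),
}


def gflops_per_dollar(name):
    """Paper-spec throughput per dollar of SRP (illustrative helper)."""
    tflops, price = GPUS[name]
    return tflops * 1000 / price


def relative_to(name, baseline):
    """Return (throughput ratio, price ratio) of `name` versus `baseline`."""
    tflops, price = GPUS[name]
    base_tflops, base_price = GPUS[baseline]
    return tflops / base_tflops, price / base_price


perf_ratio, price_ratio = relative_to("RTX 3070", "RTX 3090 Ti")
print(f"RTX 3070 vs RTX 3090 Ti: {perf_ratio:.2f}x the FP32, {price_ratio:.2f}x the price")

# List every card by paper-spec throughput per dollar, best first.
for name in sorted(GPUS, key=gflops_per_dollar, reverse=True):
    print(f"{name:12s} {gflops_per_dollar(name):5.1f} GFLOPS per dollar")
```

The first line printed shows ratios of roughly 0.51 and 0.25, which is where the “half for a quarter” shorthand comes from. Real-world pricing and actual render times will shift the picture, so the measured results matter more than this table math.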

With that covered, here’s a quick look at our test PC:

| Techgage Workstation Test System | |
|---|---|
| Processor | AMD Ryzen 9 5950X (16-core; 3.4GHz) |
| Motherboard | ASRock X570 TAICHI (EFI: P4.80 02/16/2022) |
| Memory | Corsair VENGEANCE (CMT64GX4M4Z3600C16) 16GB x4; operates at DDR4-3600 16-18-18 (1.35V) |
| NVIDIA Graphics | NVIDIA GeForce RTX 3090 (24GB; GeForce 512.96) |
| | NVIDIA GeForce RTX 3080 Ti (12GB; GeForce 512.96) |
| | NVIDIA GeForce RTX 3080 (10GB; GeForce 512.96) |
| | NVIDIA GeForce RTX 3070 Ti (8GB; GeForce 512.96) |
| | NVIDIA GeForce RTX 3070 (8GB; GeForce 512.96) |
| | NVIDIA GeForce RTX 3060 Ti (8GB; GeForce 512.96) |
| | NVIDIA GeForce RTX 3060 (12GB; GeForce 512.96) |
| | NVIDIA GeForce RTX 3050 (8GB; GeForce 512.96) |
| Audio | Onboard |
| Storage | AMD OS: Samsung 500GB SSD (SATA); NVIDIA OS: Samsung 500GB SSD (SATA) |
| Power Supply | Corsair RM850X |
| Chassis | Fractal Design Define C Mid-Tower |
| Cooling | Corsair Hydro H100i PRO RGB 240mm AIO |
| Et cetera | Windows 11 Pro build 22000 (21H2); AMD chipset driver 4.06.10.651 |

All product links in this table are affiliated, and help support our work.

All of the benchmarking conducted for this article was completed using updated software, including the graphics and chipset driver, and Redshift itself. A point release (3.5.04) of Redshift became available after our testing was completed; a sanity check with that version showed no differences in our results.

Here are some other general testing guidelines we follow:

- Disruptive services are disabled, e.g., Search, Cortana, User Account Control, Defender, etc.

- Overlays and / or other extras are not installed with the graphics driver.

- Vsync is disabled at the driver level.

- OSes are never transplanted from one machine to another.

- We validate system configurations before kicking off any test run.

- Testing doesn’t begin until the PC is idle (keeps a steady minimum wattage).

- All tests are repeated until there is a high degree of confidence in the results.

Alright… time to dive into some performance numbers!

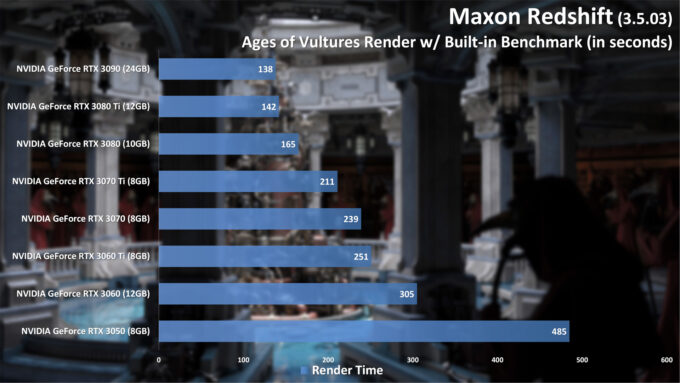

Redshift GPU Rendering Performance

If you’d like to see how your own NVIDIA GPU compares against those we’ve tested in Redshift, you can do so fairly easily. Once Redshift is installed (even the trial), a batch file (RunBenchmark.bat) can be found in the “C:\ProgramData\redshift\bin” folder. The ProgramData folder is hidden by default, so you will have to type the path in manually (or copy it from here to paste into your Explorer address bar).

After double-clicking the RunBenchmark.bat file and waiting for the test to download and finish, an output image named “redshiftBenchmarkOutput.png” will be generated in the same folder. That image will include the render time in the bottom left-hand corner.
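If you’d rather launch the benchmark from a script than from Explorer, the steps above can be sketched in Python as follows. The folder and file names come straight from the description above; everything else (the `run_benchmark` helper, running the batch file through `cmd /c` with the bin folder as the working directory, and passing no arguments) is our own assumption, not documented Redshift behavior.

```python
import subprocess
from pathlib import Path, PureWindowsPath

# Locations as described in the article (Windows only).
REDSHIFT_BIN = PureWindowsPath(r"C:\ProgramData\redshift\bin")
BENCHMARK_SCRIPT = REDSHIFT_BIN / "RunBenchmark.bat"
OUTPUT_IMAGE = REDSHIFT_BIN / "redshiftBenchmarkOutput.png"


def run_benchmark():
    """Run RunBenchmark.bat and return the path of the output image.

    Hypothetical helper: it mimics double-clicking the batch file by
    running it via cmd.exe from inside the bin folder.
    """
    script = Path(str(BENCHMARK_SCRIPT))
    if not script.is_file():
        raise FileNotFoundError(f"Benchmark script not found: {script}")

    # check=True raises CalledProcessError if the batch file exits non-zero.
    subprocess.run(["cmd", "/c", str(script)], cwd=str(REDSHIFT_BIN), check=True)

    image = Path(str(OUTPUT_IMAGE))
    if not image.is_file():
        raise FileNotFoundError(f"Expected output image is missing: {image}")
    return image
```

Calling `run_benchmark()` on a machine with Redshift installed should leave you with the same PNG you’d get from double-clicking; the render time still has to be read from the bottom left-hand corner of that image.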

If a tested NVIDIA GPU includes RT cores, then the Redshift benchmark will automatically opt to use them. This is a good thing, of course, as those cores help greatly accelerate ray tracing workloads, which includes 3D rendering. We tested OptiX On/Off comparisons last year.

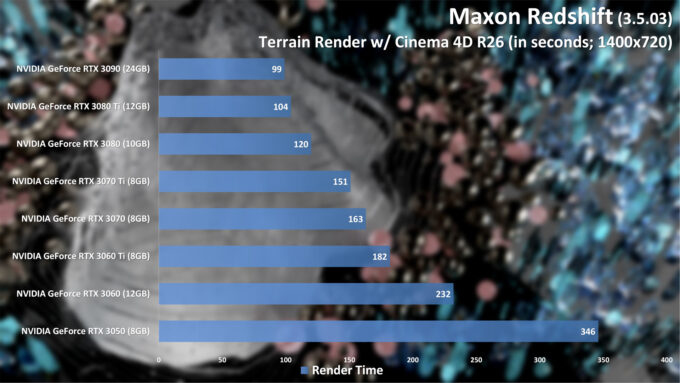

A simple performance graph like the one above does a great job at explaining what a faster GPU will net you, and how a slower GPU can hold you back. An option like the RTX 3060 may include a beefy 12GB frame buffer (with worse bandwidth than the RTX 3060 Ti and higher, it must be said), but it won’t offer any benefit unless a workload will use all of that memory.

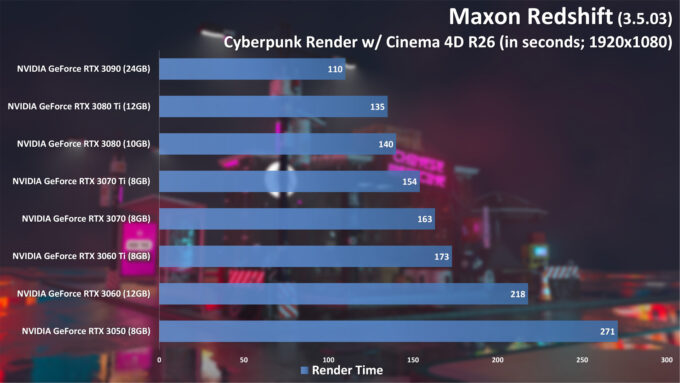

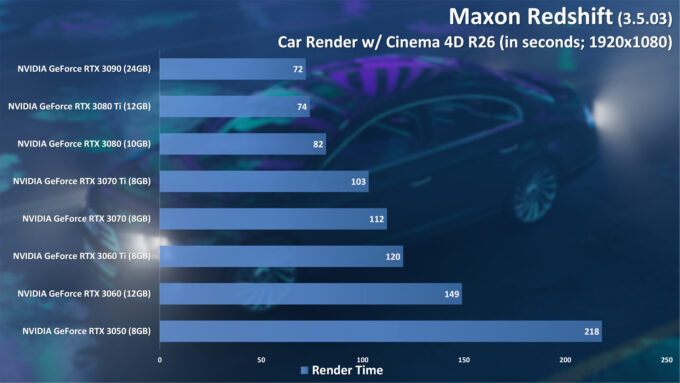

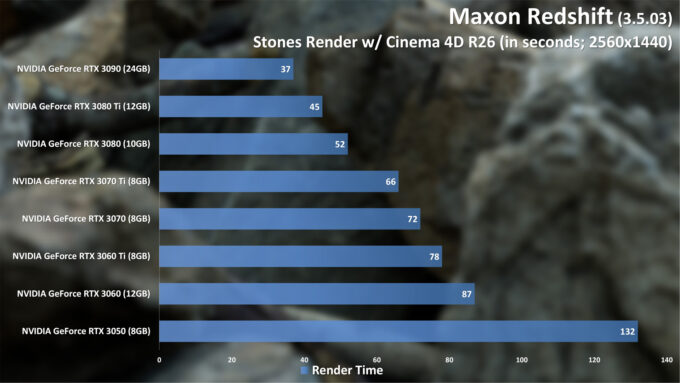

In the past, we used Autodesk’s 3ds Max to benchmark a couple of Redshift projects, but for this one, we’ve migrated to Cinema 4D (for a couple of reasons). As benchmarkers, not designers, we’re always at the mercy of testing projects folks put online, and perhaps not surprisingly, there are many more released for C4D than for 3ds Max. Because of this, we not only have better projects to test with, but more of them, as well.

To generate the test results below, we took advantage of a few projects that you yourself can go and snag. Both the Cyberpunk and Car scenes come from the same source: an official Redshift tutorial. The Stones project can be found right inside of C4D’s own asset manager, while the Terrain project comes from professional designer Bihhel. Thanks to everyone who created these projects, and especially for offering them up for free.

Alright, enough preamble, let’s take a further look at performance:

All of these performance graphs paint a similar picture, and because there’s only a single vendor involved, the order of the GPUs is just what we’d expect. While scaling is similar, though, it’s not identical from test to test. Interestingly, the Cyberpunk and Stones scenes showed healthy gains for the RTX 3090 over the 3080 Ti, with much more modest advantages in the other two.

While you can certainly take good advantage of Redshift with slower GPUs, it’s clear that you’ll want to go that route only if you really need to. The RTX 3050 should be considered a last resort sort of GPU, because if you’re buying new, you really should spend a wee bit extra in order to reduce every single one of your render times until you upgrade again. That said, you can still get by – it’s just not ideal for the sake of efficiency.

Even the RTX 3060 is a bit of an odd duck, because while it sports a huge 12GB frame buffer, its lower overall bandwidth won’t allow it to shine as much as it could. To our knowledge, none of these tested projects will use more than 8GB of VRAM, but if you happen to design complex memory-hungry projects, you should still see a notable gain if you go with RTX 3060 over RTX 3050.

Overall, the RTX 3070 looks to continue its reign as the best bang-for-the-buck creator card. With its modest gains, it’d be hard to even suggest the 3070 Ti as a possible alternative. It costs $100 more; for $200 more, the RTX 3080 will deliver a more impressive performance boost, and increase the frame buffer size at the same time.

Redshift CPU Rendering Performance

Since the last time we took an in-depth look at Redshift performance, the engine gained CPU support, so we couldn’t help but give that a quick test. What we found was kind of expected: GPUs are still the go-to for the fastest, and most efficient performance. Those who benefit most from Redshift CPU will be those with the biggest CPUs going. Let’s break it down a little bit.

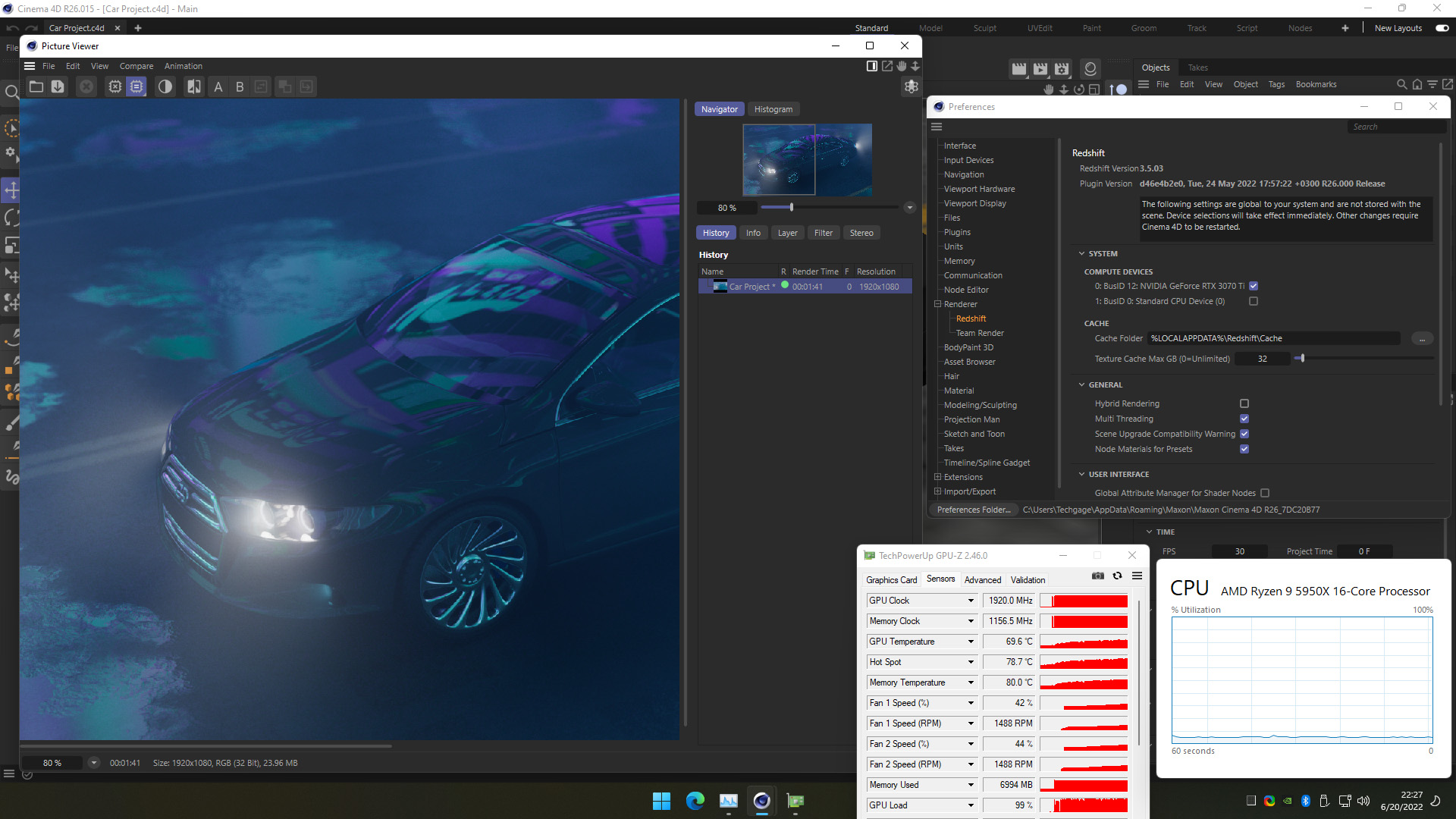

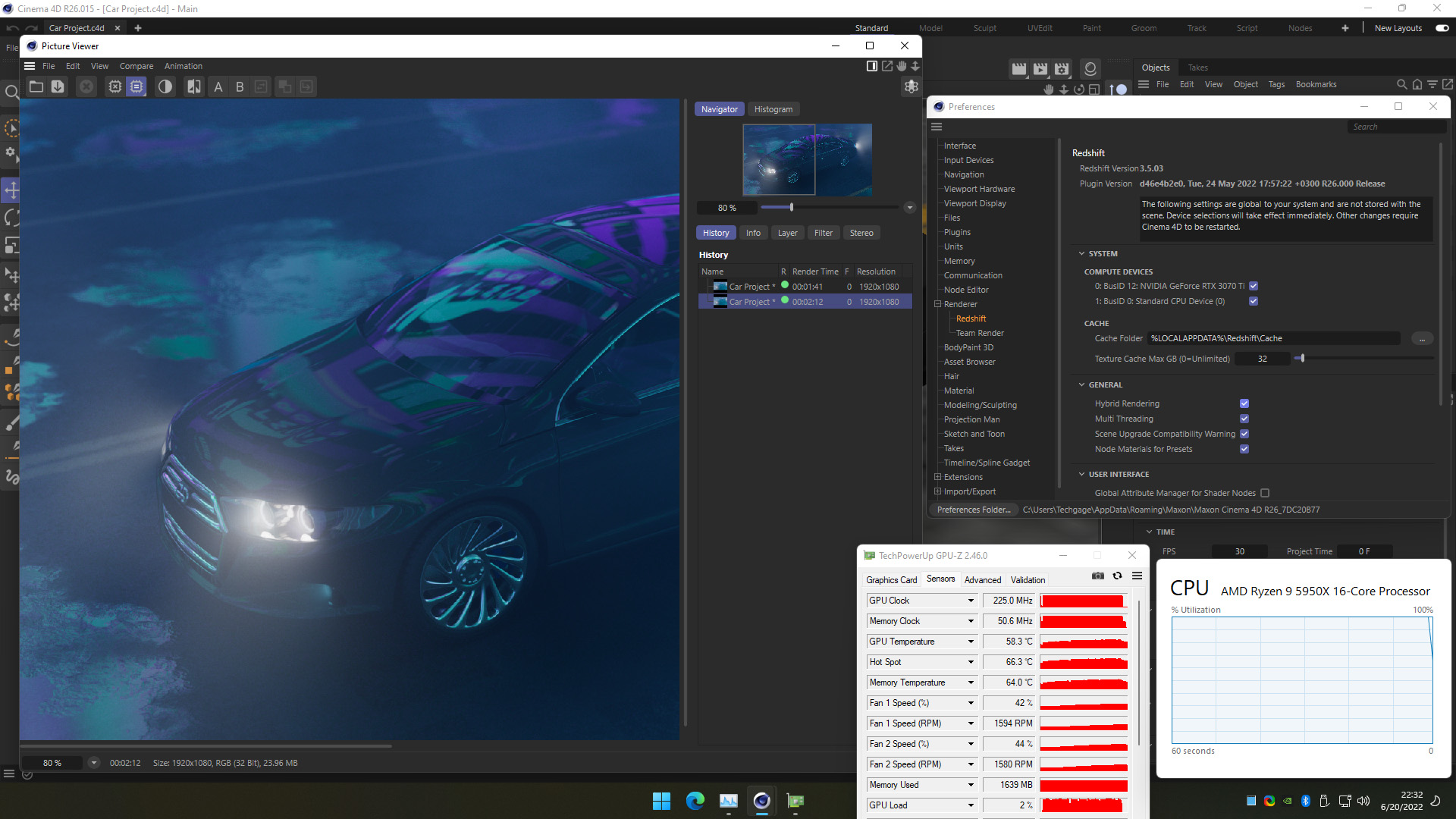

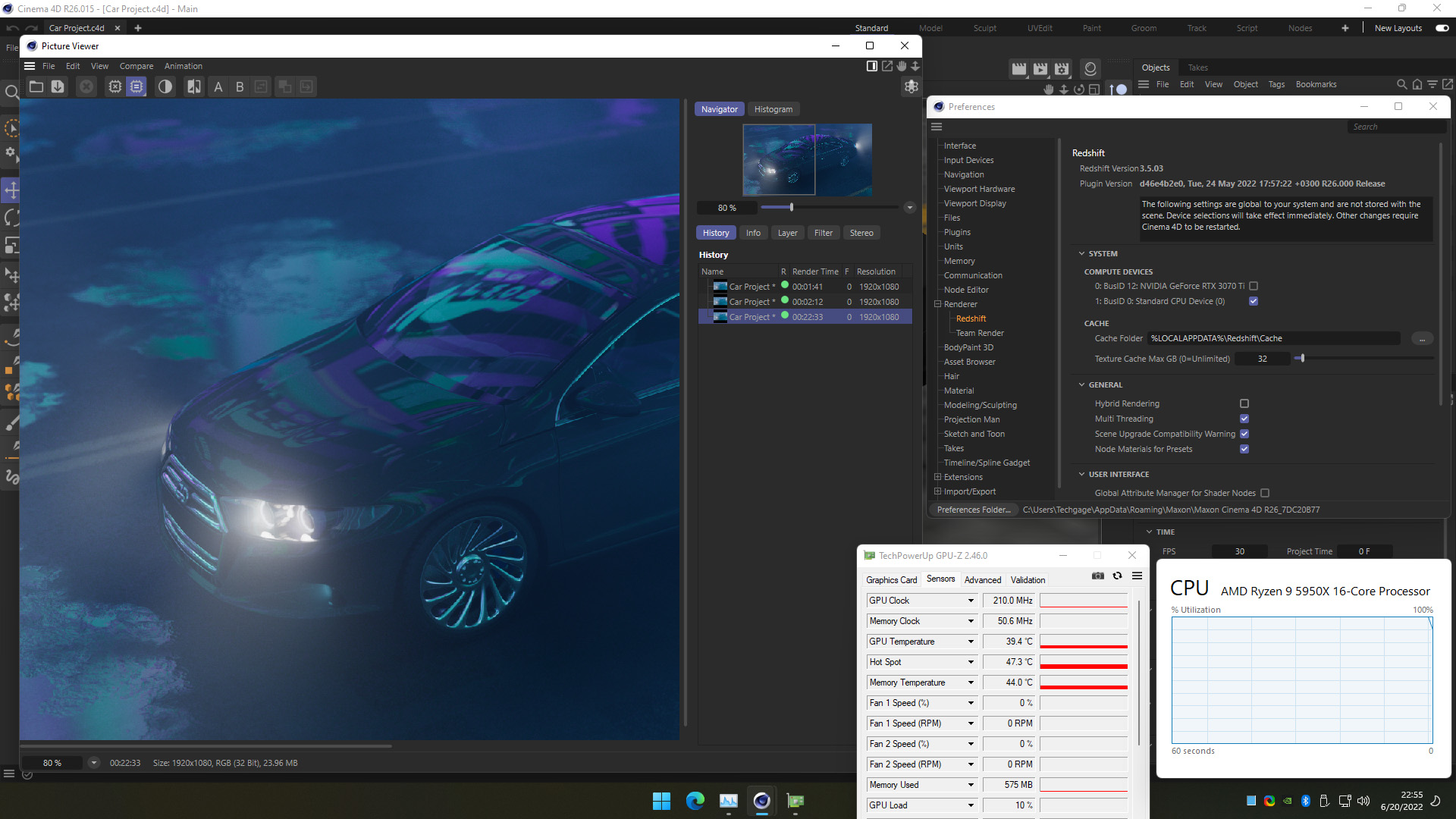

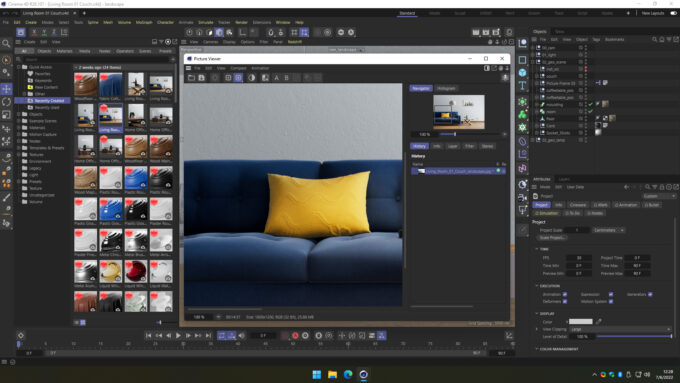

In the accompanying screenshots, you can see processor usage with Redshift while rendering the same scene three different ways. You only really need to take a look at the final image, as it includes all three render times in the picture viewer:

When rendering with only the GPU (RTX 3070 Ti), the image completes in 1m 41s. When Hybrid rendering mode is engaged – taking advantage of the CPU (16-core Ryzen 9 5950X) and GPU together – the render time actually rises to 2m 12s. This isn’t hugely surprising, as CPU+GPU rendering is hard to perfect, since both processors handle the render in very different ways. When we render with only the CPU, the render takes a staggering 22m 33s.

It could be that there are ways to optimize a project so that the CPU will complement the GPU better, but this type of performance scaling doesn’t seem too unusual. For comparison’s sake, we loaded up Blender, and ran the same general tests:

| | Blender | Redshift |
|---|---|---|
| CUDA GPU | 226 s | N/A |
| CUDA GPU+CPU | 220 s | N/A |
| OptiX GPU | 77 s | 101 s |
| OptiX GPU+CPU | 84 s | 132 s |
| CPU | 690 s | 1,353 s |

Notes: GPU: NVIDIA GeForce RTX 3070 Ti; CPU: AMD Ryzen 9 5950X (16-core)

As you can see, Blender (Cycles) and Redshift have a lot in common when it comes to rendering to the GPU, the CPU, or both together. With OptiX in Blender, adding the CPU to the mix hurts performance in much the same way, and rendering to only the CPU takes roughly 9x longer than rendering to only the GPU (about 13x in Redshift).
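For anyone who wants to check the scaling for themselves, this short Python sketch turns the OptiX and CPU rows of the table into slowdown factors relative to GPU-only rendering. The `slowdown` helper is our own naming; the render times are copied from the table.

```python
# Render times in seconds, copied from the Blender vs. Redshift table
# (RTX 3070 Ti and 16-core Ryzen 9 5950X).
TIMES = {
    "Blender": {"OptiX GPU": 77, "OptiX GPU+CPU": 84, "CPU": 690},
    "Redshift": {"OptiX GPU": 101, "OptiX GPU+CPU": 132, "CPU": 1353},
}


def slowdown(renderer, mode, baseline="OptiX GPU"):
    """How many times longer `mode` takes than `baseline` for one renderer."""
    return TIMES[renderer][mode] / TIMES[renderer][baseline]


for renderer in TIMES:
    hybrid = slowdown(renderer, "OptiX GPU+CPU")
    cpu_only = slowdown(renderer, "CPU")
    print(f"{renderer}: hybrid {hybrid:.2f}x, CPU only {cpu_only:.2f}x the GPU-only time")
```

In both renderers the hybrid factor lands above 1.0, meaning the CPU slowed the render down rather than helping, and the CPU-only factor is far larger again.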

Final Thoughts

With the help of the five performance graphs above, you should now have an easier time figuring out which GPU solution you should be going after. Thankfully, the graphics card drought seems to be completely over by this point, so you should have no problem finding any one of these tested models on store shelves.

It should be noted that NVIDIA released its newest top-end GPU, the RTX 3090 Ti, a couple of months ago. If you’re a user who wants to splurge on the best, you’ll probably want to seek that card out over the non-Ti we tested, assuming you can get the Ti for the same price or at only a modest premium.

For those who crave lots of horsepower, but are not able to splurge on a top-end GPU, there’s always the option of purchasing two smaller GPUs and combining their forces. We’d like to test this for ourselves at some point, but based on testing in the past, it shouldn’t be difficult to pair two smaller cards that match the higher-end options – ignoring the frame buffer size and memory bandwidth boosts you will see with the higher-end parts.

Similarly, if you already have a last-gen NVIDIA GPU, and are planning to upgrade to an Ampere card, you may want to consider saving the old card, and letting it help out in your renders. Both Turing and Ampere should still work efficiently together, and deliver even faster render results than your new card alone. Of course, all of this assumes that (a) your PC has proper airflow for two GPUs, and (b) your power supply can handle both.
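As a rough way to sanity-check the power supply side of a dual-GPU plan, here is a small Python sketch that adds up the TDP figures from our Ampere lineup table. The 200W allowance for the rest of the system and the 80% loading margin are illustrative assumptions of ours, not measurements, and TDP is not the same thing as peak draw, so treat a passing result as a starting point only.

```python
# TDP in watts, copied from the Ampere lineup table.
GPU_TDP_W = {
    "RTX 3090 Ti": 450, "RTX 3090": 350, "RTX 3080 Ti": 350,
    "RTX 3080": 320, "RTX 3070 Ti": 290, "RTX 3070": 220,
    "RTX 3060 Ti": 200, "RTX 3060": 170, "RTX 3050": 130,
}


def fits_power_budget(gpus, psu_watts, rest_of_system_watts=200, margin=0.8):
    """Check whether the summed TDPs fit within a derated PSU rating.

    rest_of_system_watts and margin are hypothetical defaults chosen for
    illustration; adjust them for your own CPU, drives, and fans.
    """
    total = sum(GPU_TDP_W[name] for name in gpus) + rest_of_system_watts
    return total <= psu_watts * margin


# Example with an 850W unit, the same rating as the PSU in our test system.
print(fits_power_budget(["RTX 3070", "RTX 3060 Ti"], psu_watts=850))
print(fits_power_budget(["RTX 3090", "RTX 3090"], psu_watts=850))
```

Under these assumptions, the RTX 3070 plus RTX 3060 Ti pairing fits within an 850W unit, while two RTX 3090s do not.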

Should you be confused over anything or want further advice, feel free to hit up our comments section below.

We would have loved to have been able to include AMD Radeon performance here, but since that official launch is a little ways off, we’ve had to hold off on that for now. That said, we’re still eager to jump on full-blown Radeon testing once we’re able to do so. Depending on time, that may result in an entirely new article, or an update to this one. Either way, stay tuned if you’re interested to learn more about Radeon and Redshift.
