NVIDIA GPU Performance In Arnold, Redshift, Octane, V-Ray & Dimension

We recently explored GPU performance in RealityCapture and KeyShot, two applications that share the trait of requiring NVIDIA GPUs to run. With more results in-hand, we’re now going to explore performance from five other renderers that also require NVIDIA: Arnold, Redshift, Octane, V-Ray, and Adobe Dimension.

We recently published a performance look at both Capturing Reality’s RealityCapture photogrammetry tool, as well as the major update to Luxion’s popular design and rendering tool, KeyShot. Both share the distinction of requiring NVIDIA’s CUDA to run, a trait that still seems common after all these years. That’s unfortunate for AMD and Intel GPU users, so we hope things change in time.

There has been a lot of benchmarking going on here over the past couple of weeks in preparation for upcoming content, including the aforementioned pieces. We’ve almost finished retesting all of our NVIDIA GPUs with our latest workstation suite, but have to wait until after CES to jump on AMD’s cards and get some fresh numbers posted in what will likely become a Quadro RTX 6000 review (since we’re due).

To get some more juicy render numbers up before CES, we wanted to take advantage of the completed NVIDIA data we have, and focus on the other tests in our suite that work only on NVIDIA. In our minds, there isn’t enough performance data from any one of these applications to warrant a standalone article, so we’re combining them all into one here. We plan to expand our testing on each of these renderers in time.

At the moment, to our knowledge, none of the renderers featured here has support for non-NVIDIA GPUs planned. The exception is OTOY’s Octane, which will use Vulkan sometime in the future to enable support for AMD and Intel GPUs on Windows. We wrote the other day that the company will soon release the first preview of Octane X for macOS, which will deliver on the same goal of AMD/Intel GPU support. On macOS, CUDA remains limited to those running an older version of the OS, since Apple killed support in later versions.

Some of these tests include support for NVIDIA’s OptiX ray tracing and denoising acceleration through its RTX series’ RT and Tensor cores. The lone exception is Redshift, but that’s because we’re testing the current stable version, 2.6. Version 3.0, with full OptiX support, is coming, and we’ll definitely be digging into testing that soon enough.

For this article, we’re taking a look at straightforward rendering performance. All are real-world workloads except for OctaneBench, which has scaled well enough over time that we’re confident in trusting it. Still, we’d love to test a real Octane RTX implementation sometime.

Here’s a look at the PC used during testing:

| Techgage Workstation Test System | |
| --- | --- |
| Processor | Intel Core i9-10980XE (18-core; 3.0GHz) |
| Motherboard | ASUS ROG STRIX X299-E GAMING |
| Memory | G.SKILL Flare X (F4-3200C14-8GFX) 4x8GB; DDR4-3200 14-14-14 |
| Graphics | NVIDIA TITAN RTX (24GB, GeForce 441.66)<br>NVIDIA TITAN Xp (12GB, GeForce 441.66)<br>NVIDIA GeForce RTX 2080 Ti (11GB, GeForce 441.66)<br>NVIDIA GeForce RTX 2080 SUPER (8GB, GeForce 441.66)<br>NVIDIA GeForce RTX 2070 SUPER (8GB, GeForce 441.66)<br>NVIDIA GeForce RTX 2060 SUPER (8GB, GeForce 441.66)<br>NVIDIA GeForce RTX 2060 (6GB, GeForce 441.66)<br>NVIDIA GeForce GTX 1080 Ti (11GB, GeForce 441.66)<br>NVIDIA GeForce GTX 1660 Ti (6GB, GeForce 441.66)<br>NVIDIA Quadro RTX 6000 (24GB, Quadro 441.66)<br>NVIDIA Quadro RTX 4000 (8GB, Quadro 441.66)<br>NVIDIA Quadro P2000 (5GB, Quadro 441.66) |
| Audio | Onboard |
| Storage | Kingston KC1000 960GB M.2 SSD |
| Power Supply | Corsair 80 Plus Gold AX1200 |
| Chassis | Corsair Carbide 600C Inverted Full-Tower |
| Cooling | NZXT Kraken X62 AIO Liquid Cooler |
| Et cetera | Windows 10 Pro build 18363 (1909) |

Throughout most of our benchmarking, three runs is standard fare for our tests, but many renderers are exceptions, due to their ridiculously stable performance. Four of the five tests in this article fit that bill – you could run them over and over and rarely see more than a 1% or 2% maximum performance delta from the previous run. Adobe Dimension is that one oddball among this lineup, but we’ll save talking about that for when we get to its performance later in the page.
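For readers who want to apply the same stability check to their own runs, here is a minimal Python sketch of the idea. It is not our test harness: the function names, the 2% threshold default, and the sample timings are all assumptions made for illustration.

```python
def max_run_delta_pct(results):
    """Largest change between consecutive runs, as a percentage of the previous run."""
    if len(results) < 2:
        raise ValueError("need at least two runs to compare")
    return max(
        abs(curr - prev) / prev * 100.0
        for prev, curr in zip(results, results[1:])
    )


def is_stable(results, threshold_pct=2.0):
    """True when no run differs from the one before it by more than threshold_pct."""
    return max_run_delta_pct(results) <= threshold_pct


# Hypothetical render times in seconds; these are NOT measurements from this article.
example_runs = [100.0, 101.0, 100.5]
print(f"max run-to-run delta: {max_run_delta_pct(example_runs):.2f}%")
print("stable" if is_stable(example_runs) else "rerun recommended")
```

If a renderer regularly fails a check like this, averaging more runs is the safer route.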

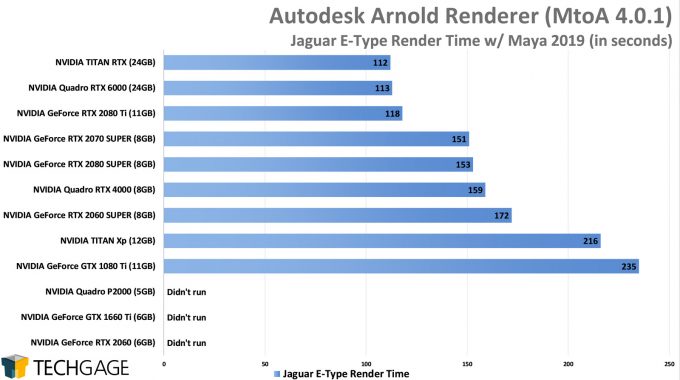

Arnold

The fact that three GPUs couldn’t finish either of their renders here is a good place to start. It’s obvious that a healthy framebuffer matters a lot with GPU rendering, and that’s the reason we’ve been suggesting going no lower than 8GB for design work. That’s one thing to note; another is the fact that NVIDIA’s RTX series speeds things up a lot, provided you have the memory.

Despite having RT cores, the RTX 2060 struggled in our Arnold renders here, again due to what we suspect is a VRAM issue, given that the other low-VRAM chips suffered just the same. But when framebuffer doesn’t matter, such as in the match-up between the TITAN Xp and TITAN RTX, we can see massive gains from one generation to the next. In the battle of GTX 1080 Ti vs RTX 2080 Ti, the latter cuts the end render time in half.

With Arnold, you want RTX, and also 8GB. At the top-end, your best value would be with the RTX 2080 Ti, while those with seriously complex projects would want to consider the much larger framebuffer of the TITAN RTX or Quadro RTX 6000. The 2060S looks to provide a great all-around value.
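To put generational comparisons like the ones above into numbers, render times can be converted into a speedup factor and a percentage of time saved. The sketch below is only an illustration: the helper names are made up, and the two timings are hypothetical values chosen to mirror a "render time cut in half" result, not figures from our charts.

```python
def speedup(old_seconds, new_seconds):
    """How many times faster the new GPU finishes the same render."""
    if old_seconds <= 0 or new_seconds <= 0:
        raise ValueError("render times must be positive")
    return old_seconds / new_seconds


def time_saved_pct(old_seconds, new_seconds):
    """Share of the old render time that the new GPU eliminates, in percent."""
    if old_seconds <= 0 or new_seconds <= 0:
        raise ValueError("render times must be positive")
    return (1.0 - new_seconds / old_seconds) * 100.0


# Hypothetical timings (seconds) for an older and a newer card on one scene.
old_gpu_time = 240.0
new_gpu_time = 120.0

print(f"speedup: {speedup(old_gpu_time, new_gpu_time):.2f}x")
print(f"time saved: {time_saved_pct(old_gpu_time, new_gpu_time):.0f}%")
```

Halving the render time is the same thing as a 2x speedup, which is worth keeping in mind when comparing charts that report time against ones that report a score.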

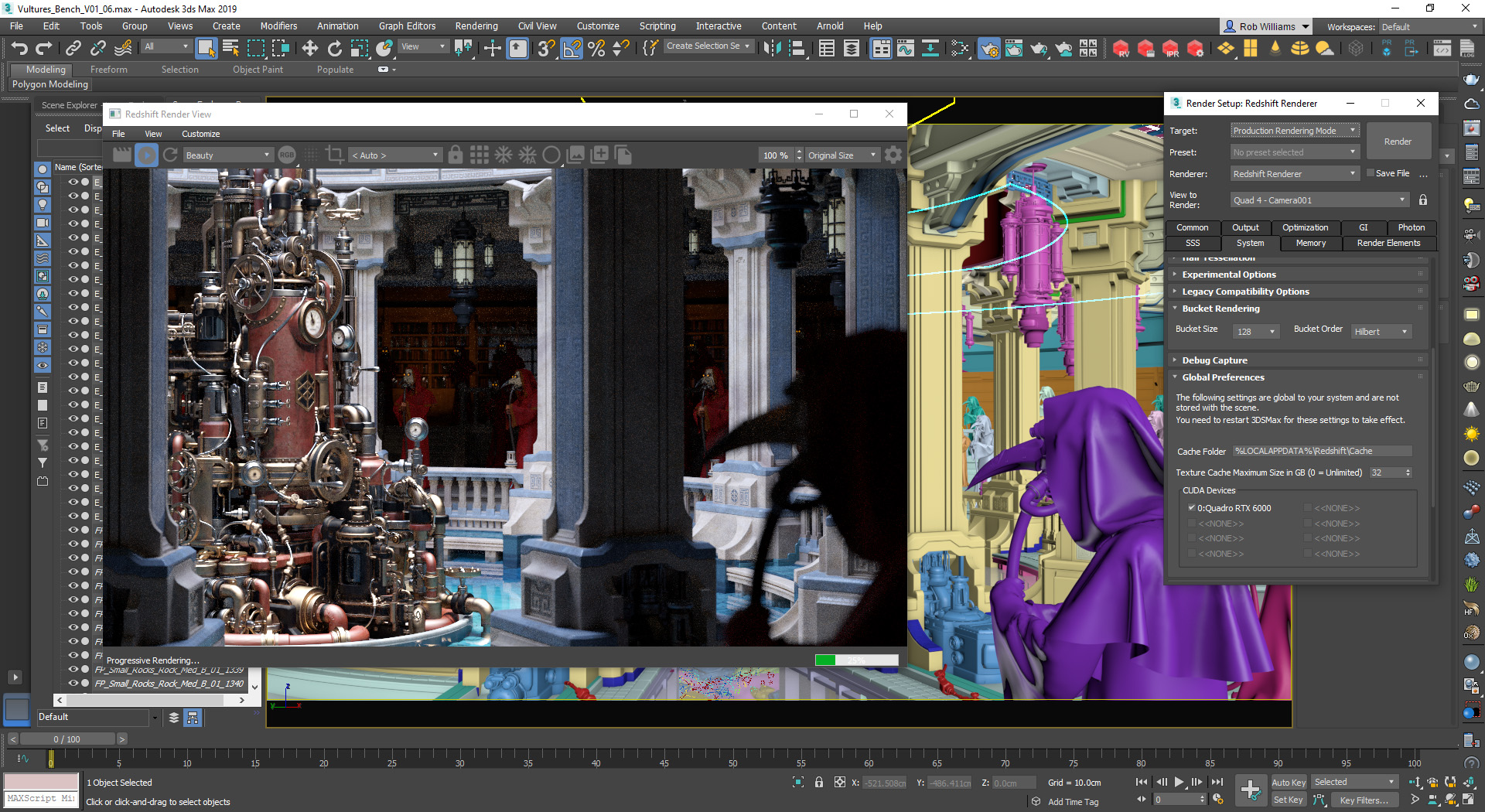

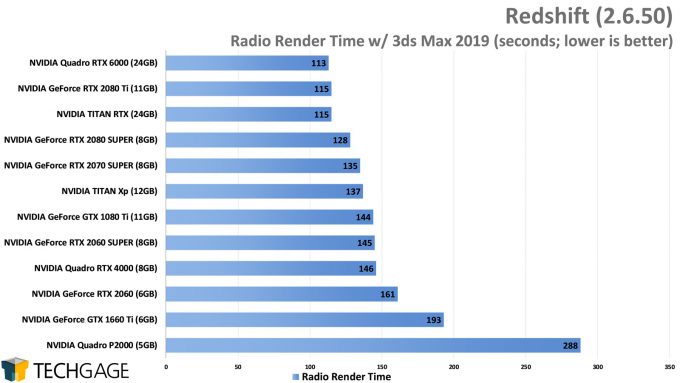

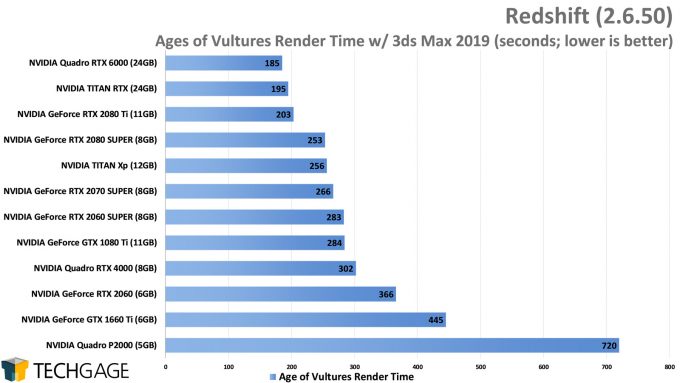

Redshift

Redshift has version 3.0 coming, and we’re planning to take a look at it as soon as we can. For now, we’re going to stick to the battle-tested Redshift 2.6, in particular, its recent .50 release. Overall, all of the GPUs scale quite nicely here, with even the last-gen NVIDIA Pascal GPUs delivering great performance in comparison to the newer Turing RTXs.

We mentioned memory being a big potential limitation earlier, and further proof of that drops here by way of the Quadro P2000. Remember when 5GB would have felt like a really healthy amount of VRAM? Today, 8GB should be considered the minimum, which fortunately opens up three main options in the GeForce line, and an affordable Quadro RTX 4000 option on the workstation side.
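If you’re unsure how your own hardware stacks up against that 8GB floor, the NVIDIA driver’s `nvidia-smi` tool can report each GPU’s total memory. Below is a rough Python sketch that parses that output and flags cards under the minimum. The function names are our own invention for illustration, the sample text is hand-written rather than captured output, and rounding to the nearest GB is an assumption made because reported totals can land slightly under the nominal capacity.

```python
import subprocess

MIN_VRAM_GB = 8  # the minimum suggested in the text above


def parse_gpu_memory(csv_text):
    """Parse 'name, memory_in_MiB' lines into (name, nominal_GB) tuples."""
    gpus = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue
        name, mem_mib = line.rsplit(",", 1)
        # Assumption: round to the nearest GB to recover the nominal capacity.
        gpus.append((name.strip(), round(int(mem_mib) / 1024)))
    return gpus


def below_minimum(gpus, min_gb=MIN_VRAM_GB):
    """Names of GPUs whose nominal VRAM is under min_gb."""
    return [name for name, gb in gpus if gb < min_gb]


def query_local_gpus():
    """Ask nvidia-smi for installed GPUs; requires the NVIDIA driver to be present."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_memory(result.stdout)


# Hand-written sample in the same shape as the nvidia-smi output (illustrative only).
SAMPLE = """Quadro P2000, 5120
GeForce RTX 2060, 6144
GeForce RTX 2060 SUPER, 8192
"""

for name in below_minimum(parse_gpu_memory(SAMPLE)):
    print(f"{name}: under {MIN_VRAM_GB}GB, may fail on heavier scenes")
```

On a machine with an NVIDIA card installed, swapping `parse_gpu_memory(SAMPLE)` for `query_local_gpus()` should check the real hardware instead.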

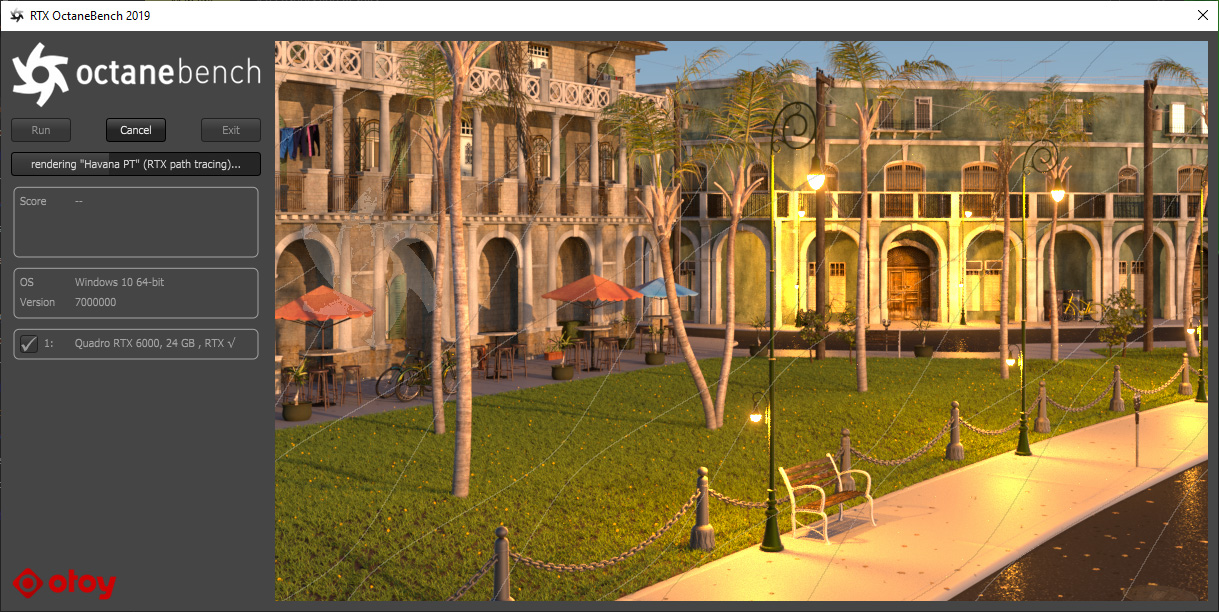

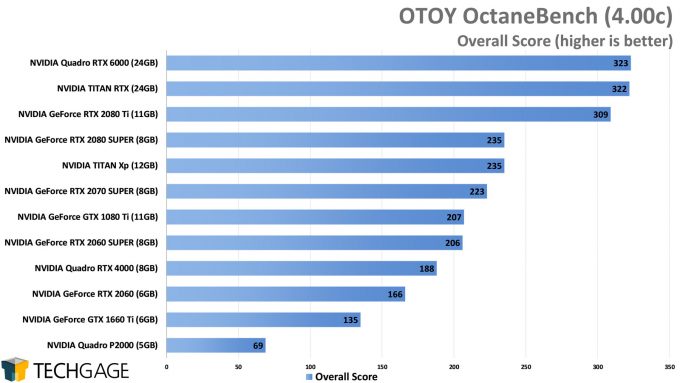

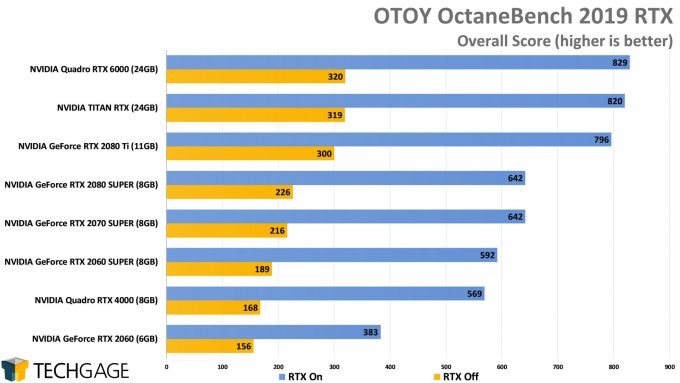

OTOY Octane

OTOY has a sickness, and that’s that it never wants to stop improving on Octane’s feature set, or its performance. The original OctaneBench uses the regular CUDA cores to render its image, while the RTX version released last year engages the hardware’s RT cores. As you can see, enabling RTX capabilities doesn’t just enhance performance, it brings it to a new level.

Since we haven’t yet tested Octane in an actual design suite with an actual project, we can’t state how closely this performance correlates with real-world gains. The previous scaling has seemed to be bang-on, though, so we truly hope to see the RTX gains here carry over into the real world. Octane 2020 is going to be released in a few months, and we’re not entirely sure if this RTX benchmark represents the latest code, but we’d imagine it comes close. Since we’re addicted to benchmarking, we’ll update our numbers as soon as an updated build releases.

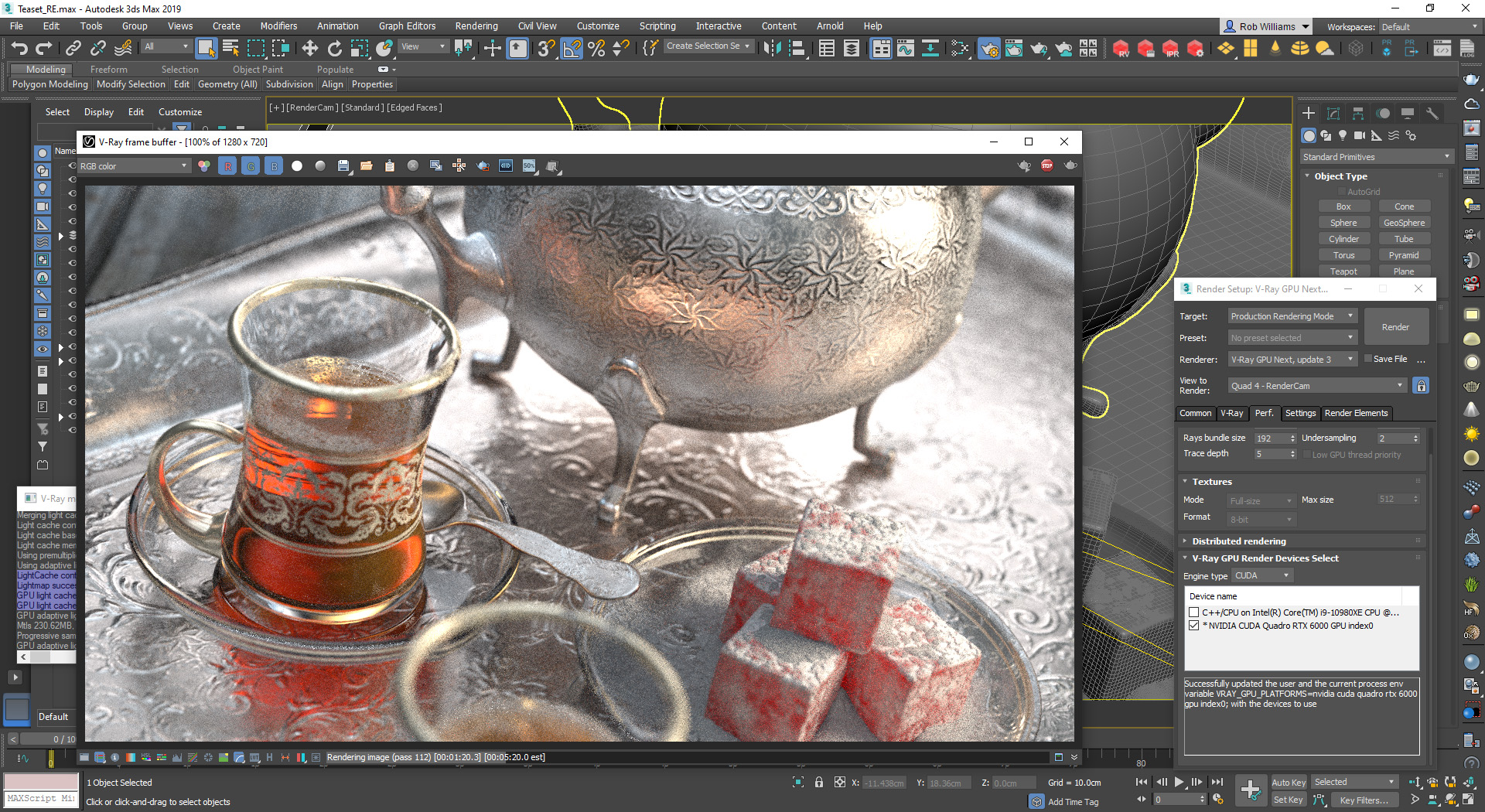

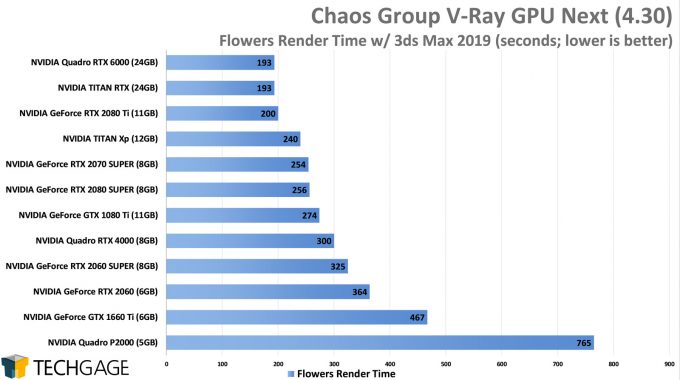

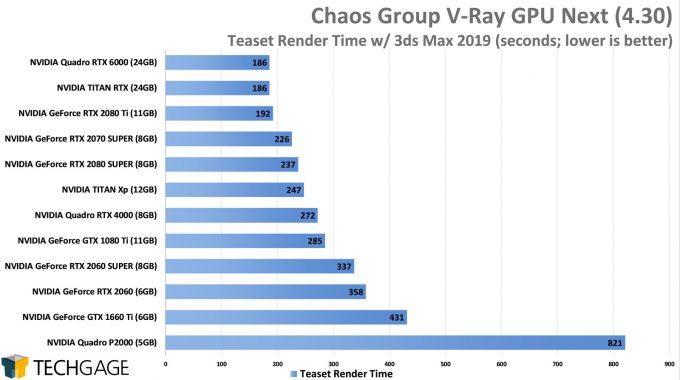

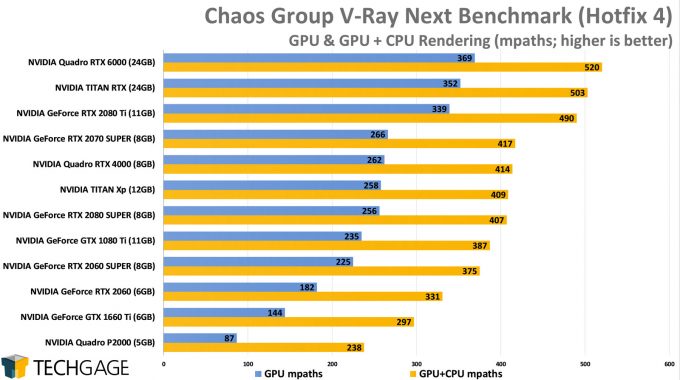

Chaos Group V-Ray

V-Ray is one of the oldest, and definitely one of the most respected renderers out there. While it’s spent most of its life focusing on the CPU for rendering, recent years have opened up access to NVIDIA GPUs. Chaos Group became one of the earliest supporters of NVIDIA’s OptiX technologies. We remember V-Ray being one of the first places we saw AI denoising hit consumers.

On the CPU side, the renderer seems to favor Intel CPUs a bit more than AMD, as we’ve seen in the past – although that’s just from a core count standpoint, not an overall chip value standpoint. For GPU, the scaling seems almost ideal. You get what you pay for when moving up to a bigger model, although based on the RTX benchmark, going with one of those supported GPUs seems like a no-brainer at this point.

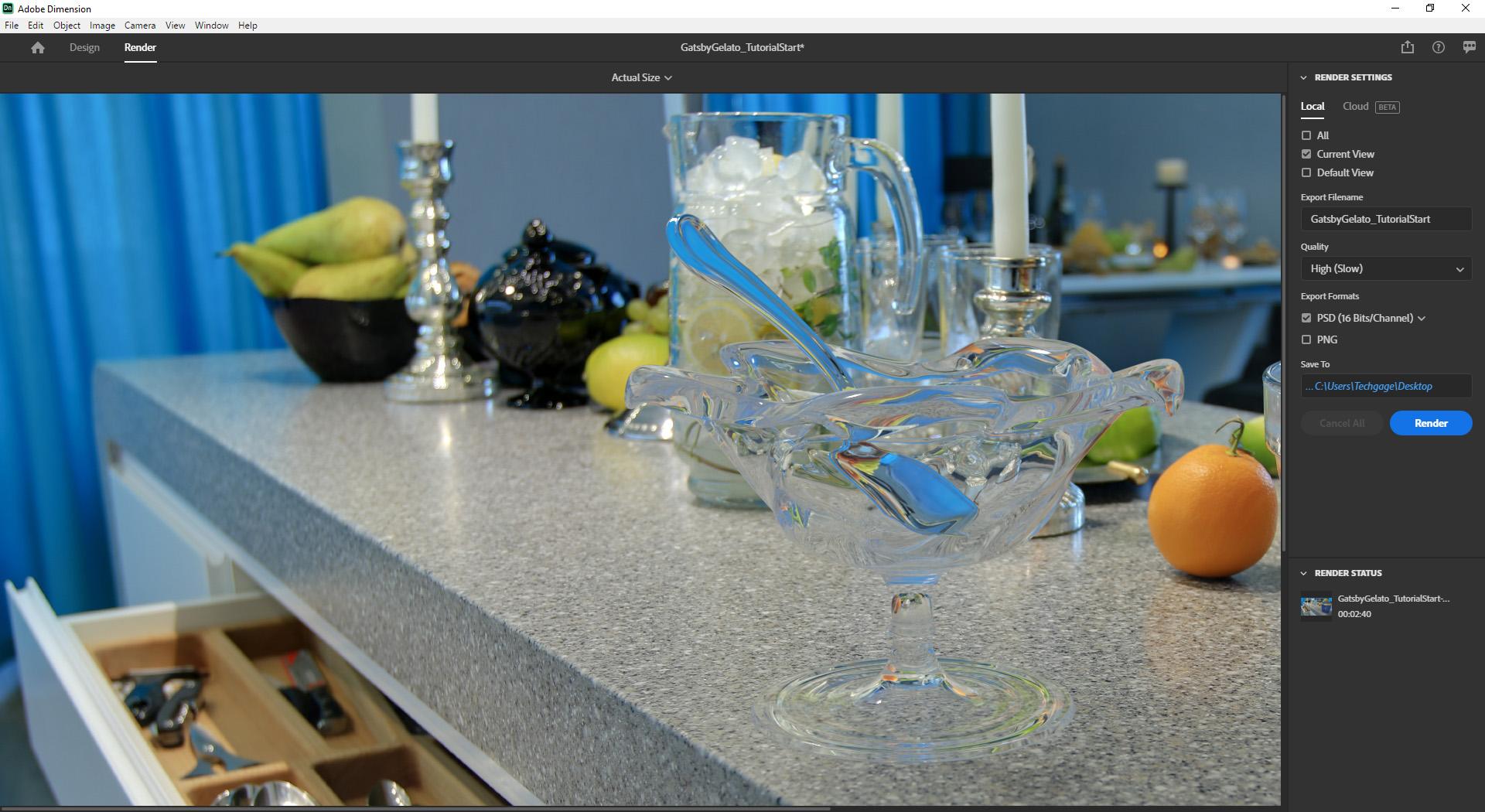

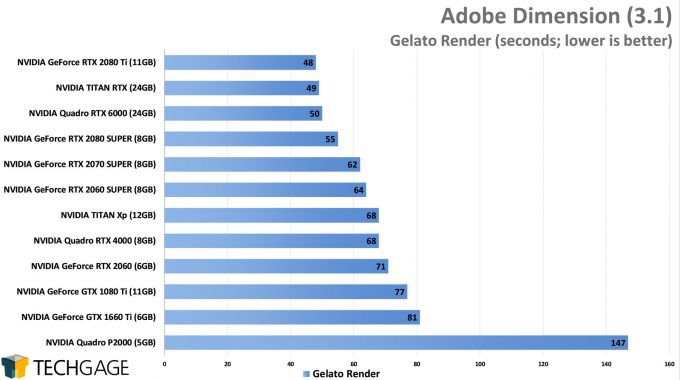

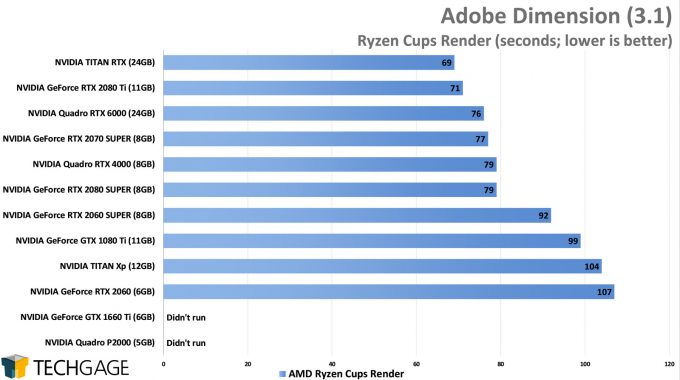

Adobe Dimension

Adobe Dimension is a bit of an oddball in this lineup, though not because it makes for a poor GPU benchmark. When Dimension 3.0 was released, it clearly changed a lot of the mechanics in the back-end, as we haven’t yet found a way to keep using it as a CPU-only benchmark and deliver truly scalable results. We need to look into it more when we have time, but for now, it looks like Dimension has shifted from our CPU suite right on over to our workstation GPU one.

With our two projects in-hand, some GPUs struggle quite a bit, just as we saw in Arnold. Given the two models we see sitting at the bottom, it seems safe to say that this is more proof that an 8GB GPU should be your minimum target. That said, the 6GB RTX 2060 actually did manage to get through its renders without error, so it could be that RTX’s acceleration is paying off there. It’s interesting to note that the 2060 SUPER beats out the last-gen top dogs, the GTX 1080 Ti and TITAN Xp.

It’s unlikely to be the same situation here, but in our past deep learning testing, we found that GPUs equipped with Tensor cores are efficient enough to reduce the amount of memory needed at any given time; e.g., certain high-end workloads would croak on the 12GB TITAN Xp, but not the Volta-based 12GB TITAN V. Nonetheless, it does seem clear that GTX is just not a good path to take for Dimension, when the lower-end RTXs beat out last-gen’s top GTX offerings.

Final Thoughts

As mentioned before, we decided to post this article because we had almost all of our NVIDIA GPU testing done, and it made sense to tackle the CUDA-only tests here. After CES, whatever leftover tests need to be run on NVIDIA will be done, and then AMD’s cards will go through the gauntlet, and we’ll post some fresh overall proviz numbers.

Whenever we post content like this, someone inevitably asks why we didn’t include AMD, or better: why we even bothered posting it if AMD isn’t supported. We’re obviously in the business of trying to provide relevant benchmarks to our readers, and while it’s unfortunate that so many solutions are locked to NVIDIA, there is always hope that some will begin to open up their code and invite competitors on in. OTOY is working on its solution to this with Octane, but we don’t know about the others.

That all said, in these particular workloads, AMD would struggle even if it were supported. With RT and Tensor cores on tap, NVIDIA’s RTX series is seriously powerful for design work when implemented properly. In solutions like Blender, you must enable OptiX acceleration separately, whereas in Arnold, for example, RT cores are used by default. That’s what we’d call a perfect implementation. We have a feeling that once AMD releases GPUs with a similar feature set, some developers might feel more compelled to broaden their support.
