Blender 3.1 – How Fast Are GPUs in Cycles X, Eevee & Viewport?

We’re taking a performance look at Blender’s latest version, 3.1, with the help of a huge collection of current-gen GPUs. As before, we’re going to be taking a look at rendering performance for both Cycles and Eevee, as well as viewport performance with Solid, Wireframe, and Material Preview modes.

Get the latest GPU rendering benchmark results in our more up-to-date Blender 3.6 performance article.

It’s almost hard to believe, but Blender 3.0 was released a full six months ago. With it came a vastly overhauled Cycles engine, the introduction of HIP (Heterogeneous Interface for Portability) for AMD’s Radeon graphics cards, the arrival of the much-anticipated asset browser, a batch of additional geometry nodes, and more.

In addition to everything Blender 3.0 brought to the table, it effectively laid the groundwork for some major updates to come. We touched on this last fall, when an official blog post explained that every aspect of Blender would be revisited, but that no change would ever be made that alienates the existing user base. If a feature you love didn’t get much of an update in the current version of Blender, it’s bound to be tackled in a later one.

At this point, Blender 3.1 has been out for a couple of months, so this article should have come a lot sooner. Truth be told, though, the performance we evaluate was largely unchanged from 3.0 to 3.1, so there’s not much this article could tell you that the previous 3.0 one couldn’t. That said, we do have two GPUs in the rankings here that weren’t available at 3.0’s launch: AMD’s Radeon RX 6500 XT and NVIDIA’s GeForce RTX 3050.

What’s New In Blender 3.1?

Most of the major features in 3.1 were covered in our launch news post, but let’s recap some of them here. Perhaps the most notable improvement in 3.1 is the introduction of an Apple Metal backend for Cycles. Its inclusion means that Macs with either Apple’s own M1 graphics or AMD’s Radeon graphics can be used for GPU rendering. While we haven’t tested rendering on the M1 ourselves, we’d imagine that Radeon will be the ideal route on Apple.

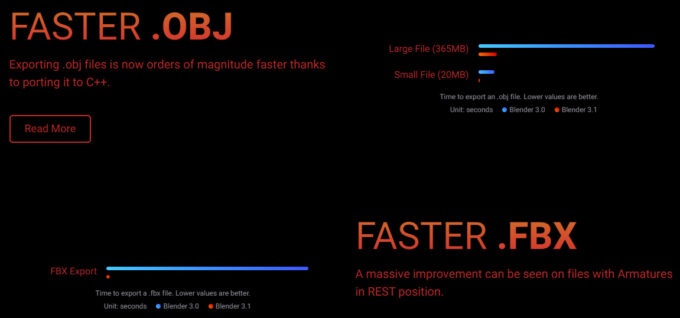

In a tool like Blender, performance obviously matters a lot for viewport interactions and rendering, but there are many other areas that can be fine-tuned, and 3.1 helps prove it. This version greatly improves export times to both OBJ and FBX. Simply saying the exporting is faster is one thing, but Blender’s official results are worth checking out to grasp the scale of the improvement.

The built-in image editor has also been greatly improved, allowing huge images to be manipulated without obscene lag. One example Blender gives uses an image that weighs in at 3 gigapixels, so even in areas of performance we don’t test (or that are difficult to test), there have been some major improvements.

It’s also worth noting that the asset browser introduced in 3.0 has seen improvements in 3.1, including performance gains thanks to the introduction of indexing.

If you haven’t played around with Blender’s asset browser yet and want to give it a quick go, head on over to Blender’s demo files page to check some projects out. The project above, which includes Sprite Fright’s lead character, Ellie, is a perfect way to understand how the asset browser can work. While playing the scene, you can double-click any of the assets to instantly change Ellie’s facial expression or pose, and it’s all quite seamless.

As always, if you want the real nitty gritty on all that’s new in 3.1, you should head on over to the official release notes page. We’ve really just scratched the surface here, so it’s worth checking that page out.

AMD & NVIDIA GPU Lineups, Our Test Methodologies

As mentioned above, the performance angles we regularly test in Blender haven’t seen notable changes in 3.1. We have noticed, though, that in most cases, performance has become slightly worse, but not to a worrying degree. Whatever is lost in performance may be made up for in quality. Any change at all highlights the fact that Blender’s developers are continually eking as much as possible out of features like Cycles.

Since our last deep-dive, we’ve added the AMD Radeon RX 6500 XT and NVIDIA GeForce RTX 3050 to the mix. We don’t have any of AMD’s just-released RX 6X50 XT cards on hand to include, nor NVIDIA’s GeForce RTX 3090 Ti, but since they are speed-bumped SKUs rather than a new architecture, it wouldn’t be difficult to surmise where any of them would fit into the forthcoming graphs.

AMD’s Radeon Creator & Gaming GPU Lineup

| GPU | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | Price |
|---|---|---|---|---|---|---|---|
| RX 6950 XT | 5,120 | 2,310 | 23.6 TFLOPS | 16 GB¹ | 576 GB/s | 335W | $1,099 |
| RX 6900 XT | 5,120 | 2,250 | 23 TFLOPS | 16 GB¹ | 512 GB/s | 300W | $999 |
| RX 6800 XT | 4,608 | 2,250 | 20.7 TFLOPS | 16 GB¹ | 512 GB/s | 300W | $649 |
| RX 6800 | 3,840 | 2,105 | 16.2 TFLOPS | 16 GB¹ | 512 GB/s | 250W | $579 |
| RX 6750 XT | 2,560 | 2,600 | 13.3 TFLOPS | 12 GB¹ | 432 GB/s | 250W | $549 |
| RX 6700 XT | 2,560 | 2,581 | 13.2 TFLOPS | 12 GB¹ | 384 GB/s | 230W | $479 |
| RX 6650 XT | 2,048 | 2,635 | 10.8 TFLOPS | 8 GB¹ | 280 GB/s | 180W | $399 |
| RX 6600 XT | 2,048 | 2,589 | 10.6 TFLOPS | 8 GB¹ | 256 GB/s | 160W | $379 |
| RX 6600 | 1,792 | 2,491 | 8.9 TFLOPS | 8 GB¹ | 224 GB/s | 132W | $329 |
| RX 6500 XT | 1,024 | 2,815 | 5.77 TFLOPS | 4 GB¹ | 144 GB/s | 107W | $199 |
| RX 6400 | 768 | 2,321 | 3.57 TFLOPS | 4 GB¹ | 128 GB/s | 53W | $159 |

Notes: ¹ GDDR6. Architecture: RX 6000 = RDNA2.
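The Peak FP32 column can be reproduced from the two columns before it. Spec sheets typically count each shader core as completing one fused multiply-add (two floating-point operations) per clock, so theoretical peak is cores × 2 × boost clock. A minimal Python sketch, assuming that two-FLOPs-per-clock convention (the table itself doesn’t state it):

```python
def peak_fp32_tflops(cores: int, boost_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each core completes one fused multiply-add (2 FLOPs) per clock.
    """
    flops_per_clock = 2
    # cores * FLOPs/clock * MHz gives MFLOPS; dividing by 1e6 gives TFLOPS
    return cores * flops_per_clock * boost_mhz / 1_000_000


if __name__ == "__main__":
    # Cores and boost clocks taken from the AMD table above
    for name, cores, mhz in [
        ("RX 6950 XT", 5120, 2310),
        ("RX 6700 XT", 2560, 2581),
        ("RX 6500 XT", 1024, 2815),
    ]:
        print(f"{name}: {peak_fp32_tflops(cores, mhz):.2f} TFLOPS")
```

The results line up with the table to within rounding. Keep in mind this is a theoretical ceiling: it says nothing about how efficiently a given architecture turns that throughput into Blender performance.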

In the grand scheme of creation or gaming, it’s not too difficult to look at AMD’s current Radeon lineup and pick one that strikes us as the best bang-for-the-buck, but sadly, as we’ll see in this article and in our handful of previous Blender deep-dives, AMD’s Blender performance is not where it needs to be. If you put a $400 Radeon up against a $400 GeForce in Blender, the latter is going to win almost every time. We’ve received some ire in the past for this take, but you don’t even need to listen to us: just look at the performance graphs.

Still, if we had a highly-detailed gun render pointed in our direction, we’d have to say that the RX 6700 XT is a good target, because it lands right in the middle of the RDNA2 lineup in terms of performance and memory bandwidth, not to mention memory capacity. The 6750 XT would be even better, if you can swing it, since any gain in memory bandwidth will be appreciated.

NVIDIA’s GeForce Gaming & Creator GPU Lineup

| GPU | Cores | Boost MHz | Peak FP32 | Memory | Bandwidth | TDP | SRP |
|---|---|---|---|---|---|---|---|
| RTX 3090 Ti | 10,752 | 1,860 | 40 TFLOPS | 24GB¹ | 1,008 GB/s | 450W | $1,999 |
| RTX 3090 | 10,496 | 1,700 | 35.6 TFLOPS | 24GB¹ | 936 GB/s | 350W | $1,499 |
| RTX 3080 Ti | 10,240 | 1,670 | 34.1 TFLOPS | 12GB¹ | 912 GB/s | 350W | $1,199 |
| RTX 3080 | 8,704 | 1,710 | 29.7 TFLOPS | 10GB¹ | 760 GB/s | 320W | $699 |
| RTX 3070 Ti | 6,144 | 1,770 | 21.7 TFLOPS | 8GB¹ | 608 GB/s | 290W | $599 |
| RTX 3070 | 5,888 | 1,730 | 20.4 TFLOPS | 8GB² | 448 GB/s | 220W | $499 |
| RTX 3060 Ti | 4,864 | 1,670 | 16.2 TFLOPS | 8GB² | 448 GB/s | 200W | $399 |
| RTX 3060 | 3,584 | 1,780 | 12.7 TFLOPS | 12GB² | 360 GB/s | 170W | $329 |
| RTX 3050 | 2,560 | 1,780 | 9.0 TFLOPS | 8GB² | 224 GB/s | 130W | $249 |

Notes: ¹ GDDR6X; ² GDDR6. Architecture: RTX 3000 = Ampere.

On the NVIDIA side, one GPU that will immediately stand out to anyone looking for great value is the GeForce RTX 3060, as it costs just $329 and sports a 12GB frame buffer. Our test results should help steer you one way or the other, though: while that GPU does have plenty of memory, it offers less memory bandwidth than the options higher up the ladder, so if your projects don’t actually use more than 8GB, you’re not really gaining much at all.
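Bandwidth is where the RTX 3060 gives ground, and the reason is simple arithmetic: peak memory bandwidth is the memory bus width (in bits) multiplied by the per-pin data rate (in Gbps), divided by eight to convert bits to bytes. The bus widths and data rates below are published spec-sheet values that don’t appear in the table above, so treat them as an assumption of this sketch:

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width x per-pin data rate / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # Assumed bus widths (bits) and data rates (Gbps); not taken from the table
    cards = {
        "RTX 3060": (192, 15.0),  # GDDR6
        "RTX 3070": (256, 14.0),  # GDDR6
        "RTX 3080": (320, 19.0),  # GDDR6X
    }
    for name, (bus, rate) in cards.items():
        print(f"{name}: {memory_bandwidth_gbs(bus, rate):.0f} GB/s")
```

Those inputs reproduce the table’s 360, 448, and 760 GB/s figures. The RTX 3060’s narrower 192-bit bus is why its larger 12GB frame buffer comes with less bandwidth than the 8GB cards above it.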

To us, the RTX 3070 remains the best bang-for-the-buck in the current-gen GeForce lineup, which is why we use that GPU as the pairing in all of our CPU-related workstation content.

Because market pricing can fluctuate greatly, it’s always worth scouring for better deals on higher-end models. You never know: you may just get lucky and land a nice performance uplift for a modest premium.

Here’s a quick look at the test PC used for our Blender 3.1 benchmarking:

Techgage Workstation Test System

| Component | Details |
|---|---|
| Processor | AMD Ryzen 9 5950X (16-core; 3.4GHz) |
| Motherboard | ASRock X570 TAICHI (EFI: P4.60 08/03/2021) |
| Memory | Corsair VENGEANCE (CMT64GX4M4Z3600C16) 16GB x4; operates at DDR4-3600 16-18-18 (1.35V) |
| AMD Graphics | Radeon RX 6900 XT (16GB), RX 6800 XT (16GB), RX 6800 (16GB), RX 6700 XT (12GB), RX 6600 XT (8GB), RX 6600 (8GB), RX 6500 XT (4GB); all on Adrenalin 22.2.3 |
| NVIDIA Graphics | GeForce RTX 3090 (24GB), RTX 3080 Ti (12GB), RTX 3080 (10GB), RTX 3070 Ti (8GB), RTX 3070 (8GB), RTX 3060 Ti (8GB), RTX 3060 (12GB), RTX 3050 (8GB); all on GeForce 511.79 |
| Audio | Onboard |
| Storage | AMD OS: Samsung 500GB SSD (SATA); NVIDIA OS: Samsung 500GB SSD (SATA) |
| Power Supply | Corsair RM850X |
| Chassis | Fractal Design Define C Mid-Tower |
| Cooling | Corsair Hydro H100i PRO RGB 240mm AIO |
| Et cetera | Windows 10 Pro build 19044 (21H2); AMD chipset driver 3.10.08.506 |


All of the benchmarking conducted for this article was completed using updated software, including the graphics and chipset drivers. The one exception to the “updated software” rule is that, for the time being, we’re sticking with Windows 10 as the OS, as Windows 11 has so far felt like a chore to use and benchmark with. Do note, though, that we’d expect the performance results in this article to be little different, if not entirely the same, in Windows 11.

Here are some other general guidelines we follow:

- Disruptive services are disabled (e.g., Search, Cortana, User Account Control, Defender).

- Overlays and / or other extras are not installed with the graphics driver.

- Vsync is disabled at the driver level.

- OSes are never transplanted from one machine to another.

- We validate system configurations before kicking off any test run.

- Testing doesn’t begin until the PC is idle (i.e., it holds a steady minimum wattage).

- All tests are repeated until there is a high degree of confidence in the results.
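The last guideline is worth borrowing if you benchmark your own system. The exact stopping rule behind “a high degree of confidence” isn’t spelled out above, so what follows is a hypothetical one: keep re-running a test until the coefficient of variation (standard deviation divided by mean) of the collected times falls to a threshold, with a cap on total runs. The `repeat_until_stable` name, the 2% threshold, and the run limits are illustrative assumptions, not necessarily how our own runs are judged:

```python
import statistics
from typing import Callable, List


def repeat_until_stable(
    run_benchmark: Callable[[], float],
    min_runs: int = 3,
    max_runs: int = 10,
    cv_threshold: float = 0.02,
) -> List[float]:
    """Re-run a benchmark until its results agree closely (hypothetical rule).

    Stops once the coefficient of variation (stdev / mean) of all collected
    times is at or below cv_threshold, or when max_runs is reached.
    """
    times: List[float] = []
    while len(times) < max_runs:
        times.append(run_benchmark())
        if len(times) >= min_runs:
            cv = statistics.stdev(times) / statistics.mean(times)
            if cv <= cv_threshold:
                break
    return times


if __name__ == "__main__":
    # Stand-in for a real render: returns canned times in seconds
    canned = iter([61.8, 60.4, 60.9, 60.7])
    results = repeat_until_stable(lambda: next(canned))
    print(len(results), statistics.median(results))
```

A tighter threshold buys more confidence at the cost of more runs; the `max_runs` cap keeps a genuinely noisy test from looping forever.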

For this article, we’re once again benchmarking with the BMW, Classroom, and Still Life projects, as well as the Blender 3.0 official project, Sprite Fright.

Sprite Fright is no doubt the beefiest project to be found on Blender’s demo files page, requiring upwards of 20GB of system memory after it’s opened. Since projects like BMW and Classroom are pretty simple in the grand scheme, we’re happy to be able to now include a comprehensive project like this to test with. Sprite Fright isn’t just a demo project, but a Blender Studio movie, which you can check out here.

Please note that if you wish to compare your performance against ours, it’s important to make sure that you apply the same tweaks to a project as we have done:

- BMW: Changed output resolution to 2560×1440 @ 100%.

- Classroom: Changed output resolution to 2560×1440, changed tile size to 256×256.

- Still Life: Disabled denoise and noise threshold, changed tile size to 256×256.

- Sprite Fright: Disabled denoise and noise threshold.

- Red Autumn Forest & Splash Fox: As-downloaded.
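To apply these tweaks from a script instead of by hand, they map onto a handful of Blender 3.x Python API properties: `render.resolution_x`, `render.resolution_y`, and `render.resolution_percentage` for output size, and `cycles.use_denoising` and `cycles.use_adaptive_sampling` for denoising and the noise threshold. The sketch below is a hypothetical helper (the `tweak_expr` name and project keys are our own) that builds a string for Blender’s `--python-expr` command-line option. It assumes “noise threshold” refers to the checkbox that toggles adaptive sampling, and it leaves tile size out, since 3.0+ projects don’t need it touched:

```python
# Hypothetical helper: turns the per-project tweaks listed above into a
# one-line expression for Blender's --python-expr option (Blender 3.x bpy names).
RES_1440P = [
    "s.render.resolution_x = 2560",
    "s.render.resolution_y = 1440",
]
NO_DENOISE = [
    "s.cycles.use_denoising = False",
    # The "Noise Threshold" checkbox corresponds to adaptive sampling
    "s.cycles.use_adaptive_sampling = False",
]

# Tile-size changes are deliberately omitted for Blender 3.0+.
PROJECT_TWEAKS = {
    "bmw": RES_1440P + ["s.render.resolution_percentage = 100"],
    "classroom": RES_1440P,
    "still_life": NO_DENOISE,
    "sprite_fright": NO_DENOISE,
    "red_autumn_forest": [],  # as-downloaded
    "splash_fox": [],  # as-downloaded
}


def tweak_expr(project: str) -> str:
    """Return a single-line bpy expression that applies one project's tweaks."""
    statements = ["import bpy", "s = bpy.context.scene", *PROJECT_TWEAKS[project]]
    return "; ".join(statements)


if __name__ == "__main__":
    print(tweak_expr("bmw"))
```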

We’ve received some complaints about adjusting these projects rather than using the as-downloaded versions, but it’s all done for a reason. In some cases, projects (like BMW) simply render too quickly, which means that any potential overhead could eat away at the scaling. In other cases, the as-downloaded projects may have the opposite problem and take far too long to render. Note that if you open any one of these official projects in 3.0+, you won’t actually have to touch the tile size, since the overhauled Cycles no longer depends on small tiles for GPU performance.

It’s also worth noting that all of our testing is conducted in an automated manner via the command line, which means results could be slightly different from what you would see inside the Blender UI itself. If you’re intent on comparing your results to ours accurately and need help with the CLI aspect, please feel free to comment and let us know.
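For anyone wanting to try the CLI route, here’s a minimal sketch of building and timing a headless render from Python. It uses standard Blender command-line options: `-b` for background mode, `-E` to pick the engine, `--python-expr` to run a setup expression, `-f` to render a frame, and `--cycles-device` (placed after `--`) to choose the Cycles backend. The function names, the example file name, and the assumption that `blender` is on your PATH are ours; this is not necessarily how our own automation works:

```python
import subprocess
import time
from typing import List, Optional


def build_render_cmd(
    blend_file: str,
    engine: str = "CYCLES",
    device: str = "OPTIX",
    frame: int = 1,
    python_expr: Optional[str] = None,
    blender: str = "blender",
) -> List[str]:
    """Assemble a headless Blender render command.

    Blender processes arguments in order, so the engine and any setup
    expression must come before -f, which triggers the render.
    """
    cmd = [blender, "-b", blend_file, "-E", engine]
    if python_expr:
        cmd += ["--python-expr", python_expr]
    cmd += ["-f", str(frame)]
    if engine == "CYCLES":
        # Arguments after "--" are read by Cycles, not by Blender itself
        cmd += ["--", "--cycles-device", device]
    return cmd


def timed_render(cmd: List[str]) -> float:
    """Run the command and return wall-clock seconds, startup included."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True, capture_output=True)
    return time.perf_counter() - start


if __name__ == "__main__":
    # Hypothetical file name; swap in the project you want to render
    print(" ".join(build_render_cmd("bmw27_gpu.blend", device="HIP")))
```

Timing the whole process this way includes Blender’s startup and scene loading, which is exactly the kind of fixed overhead that can distort results for a scene that renders in only a few seconds.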

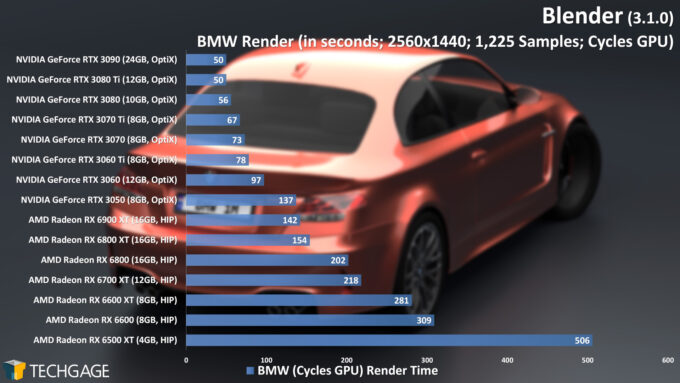

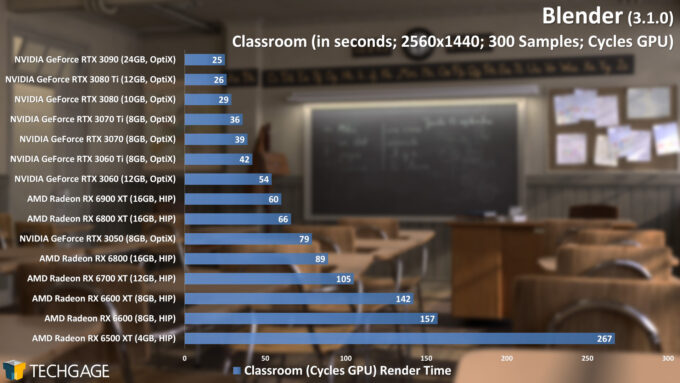

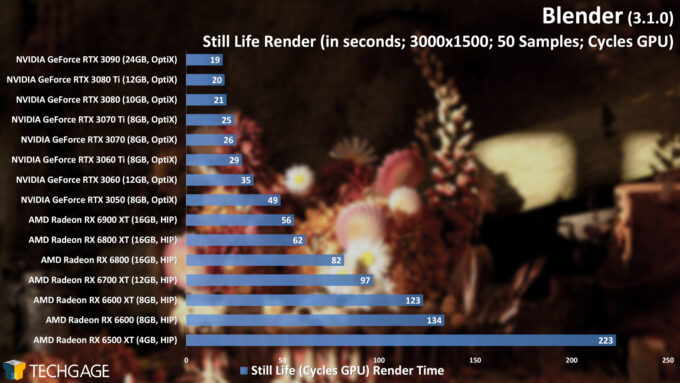

Cycles GPU: AMD HIP & NVIDIA OptiX Rendering

Regular readers of our Blender performance deep-dives may notice a change with these first graphs. Ever since OptiX, NVIDIA’s accelerated ray tracing API, arrived in Blender 2.81, we’ve kept things fair by separating it from the normal CUDA vs. OpenCL/HIP pack. In the beginning, OptiX wasn’t feature complete, so using it as a primary result would have been unfair. Fast-forward to today, and OptiX is much more feature complete, so it now seems fair to make it our primary NVIDIA result.

Focusing on OptiX instead of CUDA makes things easier for both sides of this equation: we have fewer graphs to produce, and you get a clearer picture of the benefits OptiX brings to NVIDIA’s GeForces. Quite simply, if you’ve been using CUDA all this time and have an RTX graphics card, you should open Blender’s Preferences and switch over to OptiX under the System tab.
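Making that switch by hand is a one-time job, but it can also be scripted, which is handy for automated, command-line testing. A minimal sketch, assuming Blender 3.x’s Cycles add-on preferences (`compute_device_type`, `get_devices()`, and a `devices` collection whose entries expose `type` and `use`) plus the scene-level `cycles.device` setting. The `use_gpu_backend` wrapper is our own hypothetical helper, written against plain objects so the logic can be exercised outside Blender:

```python
from types import SimpleNamespace


def use_gpu_backend(cycles_prefs, scene, backend: str = "OPTIX") -> int:
    """Point Cycles at a GPU backend such as "OPTIX", "CUDA", or "HIP".

    Enables every device of that backend type, disables the rest (including
    the CPU), and sets the scene to render on the GPU. Returns how many
    devices were enabled.
    """
    cycles_prefs.compute_device_type = backend
    cycles_prefs.get_devices()  # refresh the device list for the chosen backend
    enabled = 0
    for dev in cycles_prefs.devices:
        dev.use = dev.type == backend
        if dev.use:
            enabled += 1
    scene.cycles.device = "GPU"
    return enabled


if __name__ == "__main__":
    # Inside Blender you would instead pass:
    #   bpy.context.preferences.addons["cycles"].preferences, bpy.context.scene
    # These stand-in objects let the logic run outside Blender.
    devices = [SimpleNamespace(type="OPTIX", use=False), SimpleNamespace(type="CPU", use=True)]
    prefs = SimpleNamespace(compute_device_type="CUDA", devices=devices, get_devices=lambda: None)
    scene = SimpleNamespace(cycles=SimpleNamespace(device="CPU"))
    print(use_gpu_backend(prefs, scene), scene.cycles.device)
```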

Another thing worth noting is that AMD’s own RDNA2-based Radeons support hardware-accelerated ray tracing, but Blender doesn’t currently take advantage of it. That will surely change at some point down the road, but we get the sneaking suspicion AMD may wait until its next-gen launch to do so. AMD’s own Radeon ProRender does currently support accelerated RT, but as we’ve seen in our testing, that doesn’t change the fact that NVIDIA still comes out ahead in many match-ups.

These charts reinforce what we said above about the strength of NVIDIA’s OptiX. NVIDIA clearly knew what it was doing when it decided to integrate its RT cores into its GPUs. If AMD doesn’t change enough next-gen, then the lead is only going to be extended further.

We hate to see AMD lag behind here, but it’s the reality of things. When RDNA1 was launched, we were under the assumption that accelerated ray tracing wouldn’t even come along for AMD until RDNA3, but we were wrong. That said, we’re sure AMD would have wanted an even stronger solution in RDNA2, so we are really hopeful that RDNA3 will successfully shake things up again.

All of that said, if we were again to choose a best bang-for-the-buck GPU from these results, it’d be the same choice we made earlier: NVIDIA’s GeForce RTX 3070. That GPU offers a nice bump in performance over the 3060 Ti. If you have the budget for it, the RTX 3080 would be a no-brainer, as no step above it delivers as large a leap in performance as the one from 3070 to 3080.

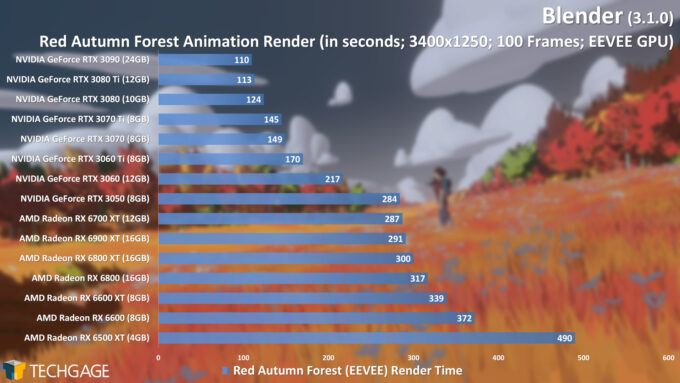

Eevee GPU: AMD & NVIDIA Rendering

While Cycles remains the most popular render engine among Blender users, Eevee is rapidly growing in popularity, and for good reason. It’s a real-time rasterization engine built for speed, and it can deliver incredibly detailed scenes right inside the viewport. It renders PBR materials just like Cycles, and even taps into the same shader nodes, meaning many people should be able to switch over to Eevee pretty easily.

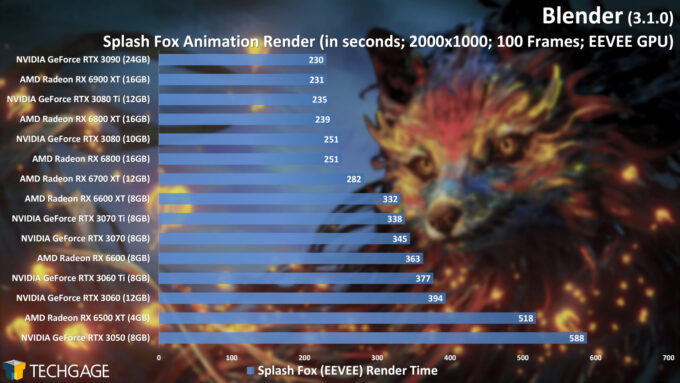

Unlike Cycles, which can also render on the CPU, Eevee is designed entirely around GPU rendering, and its performance is really impressive. Even detailed scenes can render a frame in mere seconds, making Eevee a perfect candidate for animation renders, and we test two of those here.

Whereas all four of our Cycles projects behave similarly enough, these two Eevee projects shake things up nicely. There’s a reason for this: the Red Autumn Forest project relies on the CPU very little, meaning the GPU spends most of the time doing the work. Splash Fox is different, as it’s a more complex project that involves the CPU more in between each frame render.

Ultimately, NVIDIA seems to clean house when the CPU isn’t relied upon much, while AMD manages to catch up when it is.
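One way to reason about that split is to treat each frame as a CPU portion followed by a GPU portion, so a faster GPU only shortens part of the frame (essentially Amdahl’s law). This is a simplified model that assumes the two portions never overlap, and the timings below are made-up round numbers, not measurements from either project:

```python
def frame_time(cpu_s: float, gpu_s: float, gpu_speedup: float = 1.0) -> float:
    """Seconds per frame when the CPU and GPU portions run back to back."""
    return cpu_s + gpu_s / gpu_speedup


def overall_speedup(cpu_s: float, gpu_s: float, gpu_speedup: float) -> float:
    """Whole-frame speedup when only the GPU portion gets faster (Amdahl's law)."""
    return frame_time(cpu_s, gpu_s) / frame_time(cpu_s, gpu_s, gpu_speedup)


if __name__ == "__main__":
    # Hypothetical 1-second frames, NOT measured: one mostly GPU work
    # (Red Autumn Forest-like), one CPU-heavier (Splash Fox-like).
    gpu_heavy = overall_speedup(cpu_s=0.1, gpu_s=0.9, gpu_speedup=1.5)
    cpu_heavy = overall_speedup(cpu_s=0.6, gpu_s=0.4, gpu_speedup=1.5)
    print(f"GPU-heavy: {gpu_heavy:.2f}x faster; CPU-heavy: {cpu_heavy:.2f}x faster")
```

With the same 1.5× faster GPU, the GPU-heavy frame gets about 1.43× faster overall while the CPU-heavy one gets only about 1.15× faster, which is the kind of compression that lets a slower GPU close the gap when the CPU is doing more of the work.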

We also feel compelled to highlight an odd result here, in which the RX 6700 XT outperforms the RX 6900 XT, RX 6800 XT, and RX 6800 in the Red Autumn Forest test. This is an anomaly, but one that’s repeatable. You wouldn’t expect odd scaling issues like this to crop up, but they do on occasion. We saw the same behavior in our Blender 3.0 performance look.

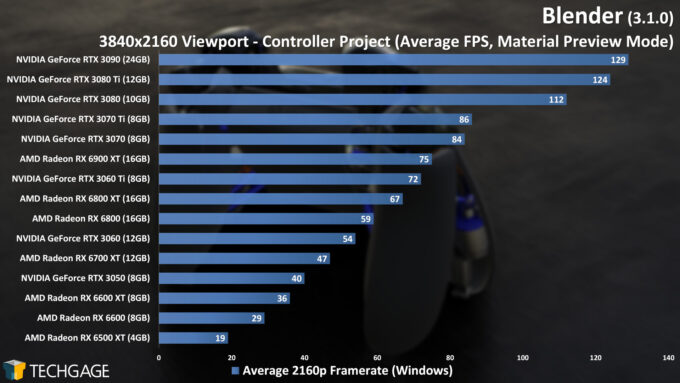

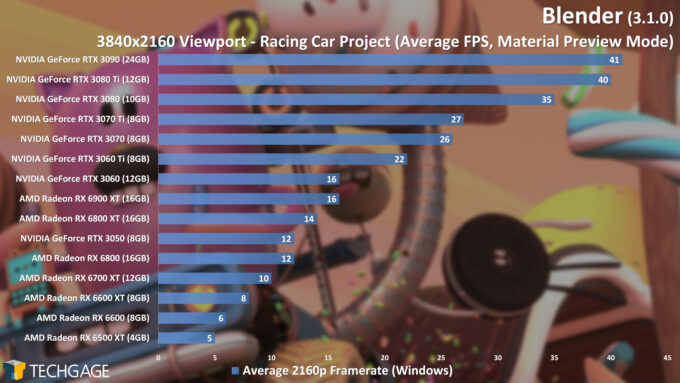

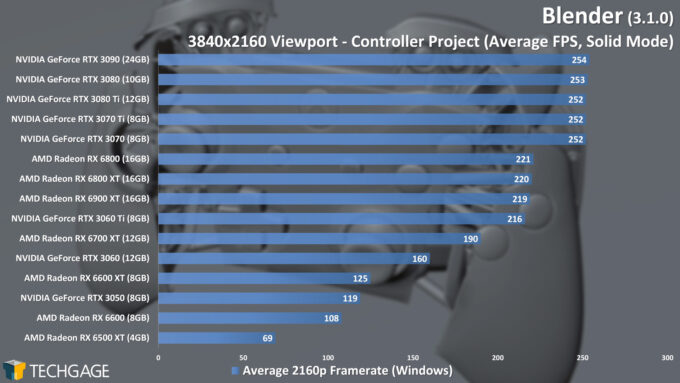

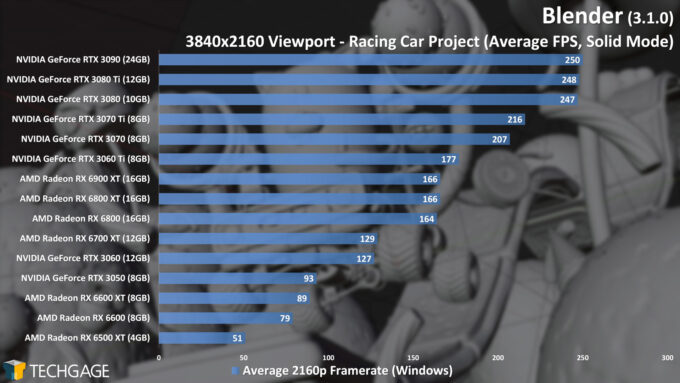

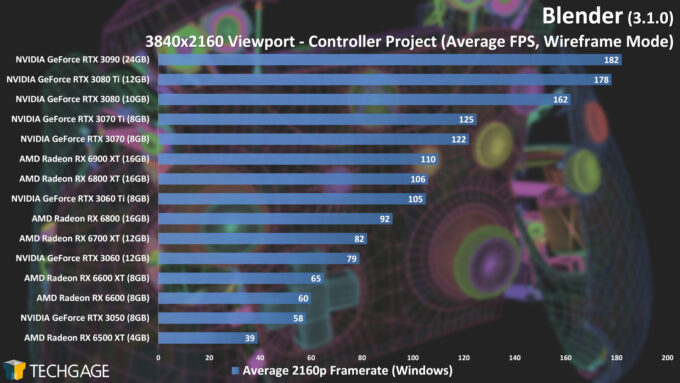

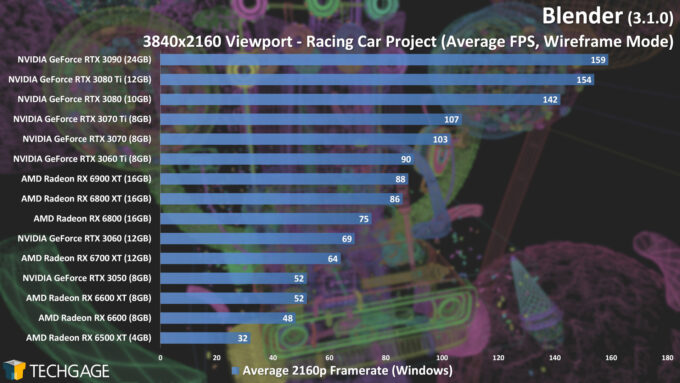

Viewport: Material Preview, Solid & Wireframe

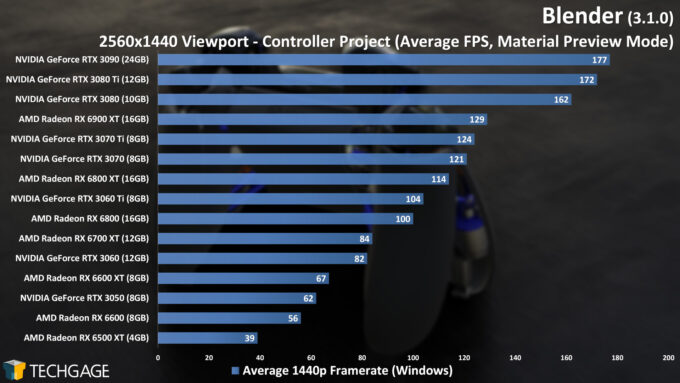

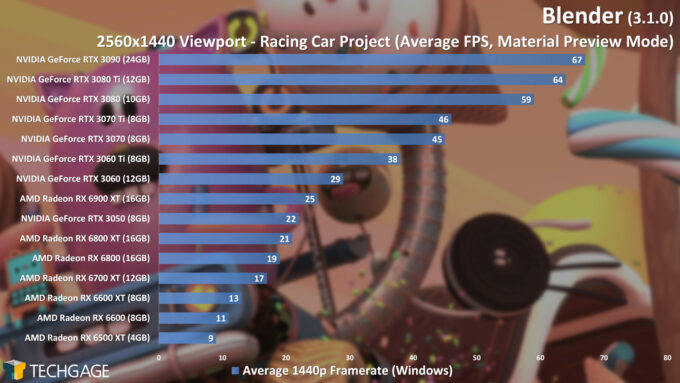

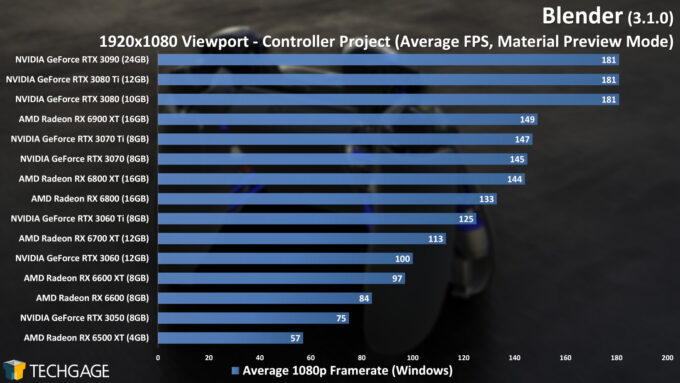

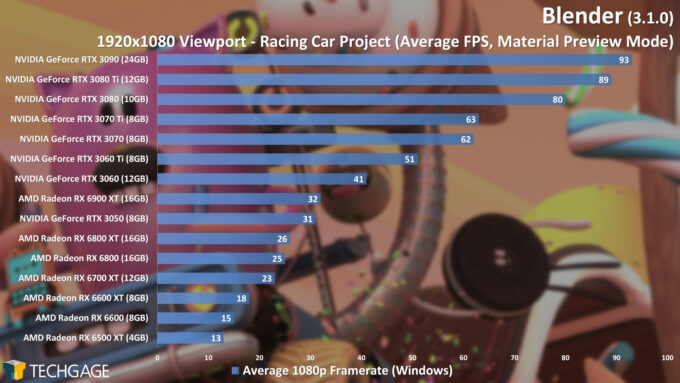

Rendering performance is important, but so is the performance found inside of the viewport – that big box where you will spend most of your time working. For our testing here, we’re taking two projects of differing complexities, and testing the average frame rate at 1080p, 1440p, and 4K across the three primary modes (Material Preview, Solid, and Wireframe).

Let’s start with the most grueling of them all, Material Preview, at 4K:

The complexity differences between these two projects can be easily seen in the resulting frame rates: Controller effectively delivers triple the frame rate. It should be emphasized, though, that since this is just a viewport and not a fast-paced game, you don’t need 60 FPS for a decent experience. We’d consider 20-30 FPS to be a minimum, and fortunately, with a project like Controller, that’s hit on virtually every one of the GPUs tested here.
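For reference, here’s a common way to derive an average frame rate from a capture, and what a 20-30 FPS floor means in frame time. Average FPS is total frames divided by total seconds; simply averaging per-frame FPS values would overweight the fast frames and hide stutters. This is a generic sketch with hypothetical frame times, not necessarily how our viewport numbers were captured:

```python
from typing import Sequence


def average_fps(frame_times_ms: Sequence[float]) -> float:
    """Average FPS over a capture: total frames divided by total seconds."""
    total_seconds = sum(frame_times_ms) / 1000
    return len(frame_times_ms) / total_seconds


def frame_budget_ms(target_fps: float) -> float:
    """Longest a frame can take, on average, while still hitting target_fps."""
    return 1000 / target_fps


if __name__ == "__main__":
    # Hypothetical capture: three 20 ms frames and one 100 ms hitch
    capture = [20.0, 20.0, 20.0, 100.0]
    naive = sum(1000 / t for t in capture) / len(capture)  # mean of per-frame FPS
    print(f"average: {average_fps(capture):.1f} FPS vs naive: {naive:.1f} FPS")
    for fps in (20, 30):
        print(f"{fps} FPS budget: {frame_budget_ms(fps):.1f} ms per frame")
```

In this made-up capture, the true average is 25 FPS, while naively averaging per-frame rates would report 40 FPS. A 20-30 FPS floor translates to a budget of roughly 33-50 ms per frame.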

With the overall lack of detail, it’d be easy to believe that Wireframe mode would be the easiest on a GPU, but in actuality, that honor goes to Solid. Both projects here soar to 250 FPS on the top cards, hinting that at some point, Solid mode becomes CPU-bound rather than GPU-bound. Either way, every single GPU here delivers ample performance in this mode, so it barely requires consideration.

Wireframe is a bit different, simply because it reveals a lot of complexity that Solid doesn’t:

Overall, every single one of these GPUs provides enough performance in Wireframe mode to get you by, but obviously, the faster the GPU, the smoother camera rotations will be. It’s also quite clear that a lower-end GPU like the RX 6500 XT really isn’t going to feel that great for Blender use – it’s definitely worth aiming for a mid-range option if you can swing it, because it will just make your designing life a whole lot better.

For those interested in lower resolution Material Preview testing, here are the 1440p and 1080p results:

Wrapping Up

Considering that the performance element of Blender 3.1 hasn’t changed much from 3.0, it almost feels challenging to come up with an interesting closer here. As before, we consider NVIDIA’s GeForce RTX 3070 to be the best bang-for-the-buck card, but if you are actually dealing with a restrictive budget, even the RTX 3050 is going to deliver solid performance thanks to its OptiX capabilities.

For Eevee use, much remains the same, although AMD’s strengths do become more apparent there. Even so, it’s hard to ignore the fact that NVIDIA outperforms AMD’s equally priced parts overall in Cycles, Eevee, and the viewport. We’d love to say that Blender 3.2 might spice things up, but we’re not going to jump the gun.

While further AMD driver optimizations could well improve overall performance, we’re hoping that an overhauled RDNA3 will make Radeons as competitive in Blender as they are in gaming and some other creator workloads (e.g., Adobe Premiere Pro and MAGIX VEGAS Pro).

On the AMD front, we do know that Blender 3.2 is going to restore Radeon support in Linux, although as with Windows, full support only covers RDNA and RDNA2. With this restored Linux functionality, 3.2 might be a good time for us to revisit the penguin OS for some additional testing, as we did for our Blender 2.92 performance look.

If you want a sneak peek at what Blender 3.2 will bring, you can check out the in-progress release notes. Until the next Blender performance look, happy designing!
