Tech News

The State of OnLive’s Internet-Streamed Gaming

During last spring’s Game Developers Conference, a company by the name of OnLive took the stage and intrigued many with promises of high-end gaming on low-end PCs, or on no PC at all. The service was touted as a cloud service that would let you play the latest games with little more than a broadband Internet connection, using either the service’s PC client or a module that you hook up to your TV.

The service, to my knowledge, still doesn’t have a set launch date, but it’s been in beta ever since the announcement, and because it’s a closed beta, not much information has trickled out since then. But, PC Perspective found its way into the closed beta, and since no NDA was flashed in front of it, it’s published a great look at the service from where it stands today.

One of the biggest issues people worried about when OnLive was first announced was latency, and it’s easy to understand why. On our desktop PCs, mouse latency is well under 1ms, but online play tends to hover around 50ms at a minimum, so input lag becomes an obvious issue. According to Ryan’s tests, it’s a problem on a per-title basis. Fast-paced game? It’s a problem. Slower-paced? It’s sufficient.

To be fair, Ryan was further from the data center than is preferred, but even so, it sounds like latency is going to be problematic for a lot of people. The article notes that there’s going to be a WiFi feature added later, and to be honest… I couldn’t imagine adding even more latency like that on purpose. Not unless you actually enjoy lag, I suppose.

The other potential issue is that games played through OnLive don’t look nearly as good as they do on a regular PC with the game installed locally. It’s hard to explain, but through OnLive, the graphics look kind of hazy, as if there’s a filter of some sort over top of them. On the PC, lines are smooth and colors rich… just an overall much better experience. Still, we’re likely early in OnLive’s life, and I’m sure there’s a reason it’s still in a closed beta, so I’m hopeful we’ll see some real improvements before the service goes “Live”.

Having never played Burnout: Paradise on the PC before, I was a bit surprised to find how much BETTER it looked than what I was just playing using OnLive. For my testing, I set up the local game to run at the highest possible image quality settings (8xAA, etc) at 1280×720 – the same resolution that OnLive uses. Because we are running it at such a low resolution, I thought it was fair to assume that any GPU of $90 or more today would allow you to run at these maximum settings.

| Source: PC Perspective |

No Half-Life 2: Episode Three in 2010?

Who’s with me in thinking that episodic content should only be done when it’s certain that the “episodes” will be delivered in a timely manner? I’m not sure who first started the idea of releasing games in bite-sized chunks, but so far, it seems to have worked out for few. It could be argued that it’s worked out for companies, and that’s probably true, but for gamers… it’s at times been frustrating.

Whenever I hear talk of game episodes, I think back to SiN Episodes: Emergence. Following the original SiN game, this was essentially “SiN 2”, but planned for release in increments in order to prolong the gamer’s interest. This isn’t much different from how popular TV dramas and series work, but could you imagine waiting six months minimum for the follow-up? With many episodic games, that’s what happens.

Or in the case of SiN Episodes, the entire project was deemed too ambitious, and it was canceled after just the first episode, likely due to lackluster sales (I’m unsure of what they were). It was upsetting, though, because I thoroughly enjoyed the first one and played through it twice, and to think… the original goal was to release nine of them! It’s amazing just how ambitious these developers were. If the original goal had been three, they might have actually stuck around and completed it.

So why am I ranting like an old man? Because the rumor-mill has it that Half-Life 2: Episode Three is not going to see a 2010 release. No 2010 release?! For fans of the series, this is yet another blow. Sure, Valve has kept gamers busy with titles like Portal, Left 4 Dead and Team Fortress 2, but where’s the love for Half-Life 2? As far as I remember, Valve’s original goal for the three episodes was to space them 1 to 1.5 years apart. Even that seems reasonable, but bear in mind that Episode Two was released on October 10, 2007.

Am I the only one that’s just a wee bit miffed at the fact that there may be literally 3.25 years at a minimum between the release of Episode Two and Three? Valve stated back in 2008 that Episode Three was going to be more ambitious, but even so. There were 16 months between One and Two, so to picture 39 months at minimum between Two and Three seems a little ridiculous.
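
If you want to check my math, here’s a quick Python sketch. It assumes that “no 2010 release” means January 2011 is the earliest Episode Three could possibly ship; that’s my assumption, not anything Valve has said. The October 2007 date for Episode Two and the 16-month gap between One and Two come from above.

```python
def months_between(start_year: int, start_month: int, end_year: int, end_month: int) -> int:
    """Whole calendar months from one (year, month) to another."""
    return (end_year - start_year) * 12 + (end_month - start_month)


# Episode Two shipped in October 2007, per the post.
EP2 = (2007, 10)
# Assumption: with no 2010 release, January 2011 is the earliest possible month.
EP3_EARLIEST = (2011, 1)
# Gap between Episode One and Episode Two, as stated in the post.
EP1_TO_EP2_MONTHS = 16

gap = months_between(*EP2, *EP3_EARLIEST)
print(gap, gap / 12)                          # 39 3.25
print(f"{gap / EP1_TO_EP2_MONTHS:.1f}x longer than One to Two")
```

In other words, even the best case is roughly two and a half times the wait we had between One and Two.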

The amazing thing is that as I look through the screenshots from my review of Episode Two over two years ago, the memories of the entire thing are still very vivid. I guess that means that Episode Three will be worth the wait… whenever it gets here.

The last Half-Life entry, Half-Life 2: Episode Two, arrived in October 2007. Since then, Valve has described Episode Three–the final entry in the Half-Life 2: Episode trilogy–as “a more ambitious project” and teased “the next time you play as Gordon will be longer than the distance between Half-Life 2 to Episode One, and Episode One to Episode Two,” later telling Shacknews “Freeman’s not done…stay tuned for more.”

| Source: Shacknews |

Report: Kids Spend 8 Hours on Media Daily

At 26, I’m not what I’d consider old, but as I was growing up, I remember my mom limiting the amount of time I could spend sitting in front of a screen, whether it be a TV or a computer. Which sucked, because you have no idea how addicted I was to Blockout on our 286! Looking back, though, I think it was a good move to limit my time in front of a TV, because it forced me to do something else, possibly educational, which can obviously lead to greater rewards than finding out the plot of a TV show.

I’m not entirely sure just how unique my mom was in limiting daily TV/computer use, but after taking a look at a newly-published report, I’m starting to wonder if the days of kids having any real limits at all are gone. Believe it or not, the average kid spends nearly 8 hours (7h 38m) per day taking in some form of media, and with multi-tasking, that number is bumped up to just under 11 hours (10h 45m). Note that these numbers don’t include media use for things like homework or studying.

Think about that for a second… 8 hours (not counting the multi-tasking) is 1/3 of the day. Another 1/3 is dedicated to sleeping, or maybe 1/6 given how many kids today like to stay up half the night text messaging! That’s quite a bit of time, to say the least. I’m not necessarily saying it’s a bad thing, but 1/3 of every day being spent on media… it seems quite stark.
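
For the curious, here’s the same math done with the report’s exact figures, as a small Python sketch. The only thing it assumes is a plain 24-hour day.

```python
def share_of_day(hours: int, minutes: int) -> float:
    """Fraction of a 24-hour day taken up by the given hours and minutes."""
    return (hours * 60 + minutes) / (24 * 60)


media_only = share_of_day(7, 38)     # 458 of 1,440 minutes
multitasking = share_of_day(10, 45)  # 645 of 1,440 minutes, counting overlapping media
print(f"{media_only:.1%} {multitasking:.1%}")  # 31.8% 44.8%
```

So the rounded “1/3 of the day” is, if anything, slightly generous for the single-tasking figure, while the multi-tasking figure is closer to half the day.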

Of the total, music seems to top the list for most time spent on something, and I can’t see much wrong with this. Going to school, sitting on a bus, waiting around… why not listen to music? In second place is TV, with computers and video games in a close third. Time spent reading (you know, books and stuff) has, not surprisingly, gone down, but just a wee bit.

Social networking was the most popular computer use accounting for an average of 22 minutes a day. The average was 29 minutes among 11- to 14-year-olds and 26 minutes for teens 15 to 18. “In a typical day,” the study pointed out, “40% of young people will go to a social networking site, and those who do visit these sites will spend an average of almost an hour a day (:54) there.” Fifty-three percent of 15- to 18-year-olds use social-networking sites.

| Source: Safe and Secure Blog |

YouTube Allows Viewing Videos with HTML5

Are you excited for HTML5? Alright, I admit that’s a bit of a foolish question, given that most people who would be excited for a new HTML spec are either developers or serious Web enthusiasts. But for the rest of us, refreshed HTML specs mean good things. The biggest is new features that developers and regular users alike will enjoy taking advantage of.

In HTML5, one of the most noticeable additions will be the <video></video> tag, which as you can imagine, will embed a video into a page. It’s of course not going to be quite that easy, as you’ll also have to specify the source, width and height, its codec, whether it autobuffers, and so forth. But, believe it or not, this is a fair bit easier than embedding a Flash file. Speaking of, what about Flash?
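
To make that concrete, here’s a minimal sketch in Python that builds such markup as a string. The file name, dimensions and codec string are placeholders I picked for illustration, and I’ve used `preload` where the post mentions autobuffering, since later drafts of the spec replaced the `autobuffer` attribute with `preload`. Treat it as a rough illustration, not YouTube’s actual markup.

```python
from html import escape


def video_markup(src: str, mime: str, codecs: str, width: int, height: int) -> str:
    """Build a minimal HTML5 <video> element with one <source> child.

    The codec list travels inside the type attribute, e.g.
    video/mp4; codecs="avc1.42E01E, mp4a.40.2", so the attribute is
    single-quoted to let the inner double quotes stay as they are.
    """
    if "'" in mime or "'" in codecs:
        raise ValueError("single quotes would break the single-quoted type attribute")
    type_attr = f'{mime}; codecs="{codecs}"'
    return (
        f'<video width="{width}" height="{height}" controls preload="auto">\n'
        f"  <source src=\"{escape(src)}\" type='{escape(type_attr, quote=False)}'>\n"
        "  Your browser does not support the video element.\n"
        "</video>"
    )


if __name__ == "__main__":
    # Hypothetical file name and a common H.264 Baseline + AAC codec string.
    print(video_markup("clip.mp4", "video/mp4", "avc1.42E01E, mp4a.40.2", 1280, 720))
```

A page can also list more than one `<source>`, and the browser plays the first one it supports.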

The reason people are getting excited about <video> is that it could potentially replace Flash for this usage. Go to YouTube, or pretty much any other video site on the planet, and it’s Adobe’s Flash that’s powering it. But because so many people are tired of Flash’s performance, or how it can bog down a browser (I had this happen to me in the worst way last night), HTML5’s <video> is looking bright.

What better place than YouTube to let people test out such functionality? Although the HTML5 spec isn’t finalized, many browsers already support it up to its latest revision. However, for YouTube’s HTML5 videos, you’ll need to be using Google Chrome, Apple Safari 4 or Internet Explorer with Chrome Frame installed. No Firefox, Opera, or others, at this time.

To test out HTML5 video, you can go to http://www.youtube.com/html5 and click “Join the HTML5 Beta”. Then, if you visit a video that doesn’t have any sort of advertisement, citations, or anything else, you can hopefully watch the video without issue. One video this works with is one I uploaded the other day for our NVIDIA Fermi deep-dive.

In my quick tests, the video quality I saw was nowhere near as refined as it was with Adobe’s Flash, but I’m hoping that’s more of a codec issue, or something to do with how the video is rendered. At Mark Pilgrim’s site for HTML5 (thanks to our elite coder Ben for pointing this page out), there’s a video at the bottom of the page that’s embedded with HTML5, and it looks a lot better than what I saw on YouTube. Either way, HTML5’s <video> is no doubt going to become more commonplace in the future, and I can’t wait.

The Games that Kill Development Studios

Want a good laugh? Just think back to all of those published reports that laid out an imminent death for gaming as we knew it, either for game consoles or PCs. Of course, we all know that gaming is at its healthiest point right now (although, sales were down a bit in 2009), and there’s a huge number of titles to choose from. Just look at Modern Warfare 2, which recently broke through the $1B revenue barrier. Gaming is dead? Hah!

But to be fair, the gaming industry does see a lot of death in some form or another, whether it be a character death inside of a game, or a more serious death… the closing down of a development studio. Over the years, some quality developers have closed shop, and it’s not always an entire studio that goes. Sometimes it’s a single key person.

If a development studio shuts down, or a project lead gets the boot, chances are it had to do with an underwhelming game. This has happened far too often in the past, and even recently, we’ve seen some top-rate developers and studios make some major changes. Develop Online helps us put everything into perspective with a look at some of the biggest shutdowns the industry has seen, and man, this isn’t my kind of trip down memory lane.

The most recent development studio shake-up would likely be 3D Realms, which, for over ten years, failed to deliver its most hyped game, Duke Nukem Forever. The studio still exists, but its future is unknown. And then there’s Enter the Matrix, developed by Shiny and published by Atari. I hate to admit that I own this game for the Nintendo GameCube, but I can vouch for its truly horrible and unexciting gameplay, not to mention its buggy design.

Probably my favorite mention on this list would be Daikatana, which remains a game I can’t bear to bash. I was so hyped for it back in the day that even when I got my hands on a copy, I think the hype warped my brain, because I ended up having a lot of fun with it. Sure, it had issues, and because of those issues, the game received an average rating of 53. Ouch.

Daikatana was originally planned to be released in time for Christmas 1997. It was based on the Quake engine, and early advertising consisted of a blood red poster with the words “John Romero is about to make you his bitch”. But when id Software showcased the much-improved Quake II engine, Ion Storm realised that it would need to upgrade. The switchover, and a range of design challenges, led to delay after delay. Daikatana was finally released in June 2000, over two and a half years late.

| Source: Develop Online |

For a More Secure Browser, Go with Internet Explorer?

Ten years ago, choosing a Web browser to use on a daily basis wasn’t that difficult. For most people, Internet Explorer wasn’t just a choice, it was a given, because who knew that other options existed? Well, of course they did, but back then, they were few, with Netscape Navigator being one of the main choices for those who wanted to break tradition and spice things up a bit.

The situation today couldn’t be more different. Although there are still only a handful of browsers that tend to dominate the charts, there are literally dozens to choose from that could be worthy of your use. According to our most recent Web stats, Mozilla’s Firefox has a dominating lead with 49.72% of the share, followed by Internet Explorer at 30.46%. Google’s Chrome is beginning to make some real headway with 10.01%, and both Opera and Apple’s Safari follow it at about 4% each.

Back when we first started using Google Analytics to monitor our site stats, in May 2006, Internet Explorer was the top browser, with a 47.69% share. Firefox was trailing not far behind, though, at 44.68%. What’s the reason behind such a major shift, with IE diving in the charts, and Firefox growing? I think it’s obvious… more and more people are both learning of the risks of sticking with IE, and also learning of alternatives that are available.

I’ll be the first to say that Internet Explorer is more secure than ever (at least, if we’re talking about 8), but let’s be real… it’s also been behind some of the biggest Windows issues history has ever known, especially IE6 on Windows XP. So it’s rather funny that a Microsoft exec in the UK recently told TechRadar that “The net effect of switching [from IE] is that you will end up on less secure browser”.

You can draw your own conclusions from that, but for me, I think I’ll stick with Firefox, which happens to be open source, and is usually patched ultra-fast whenever vulnerabilities are found.

With France and Germany both advising a move away from Internet Explorer, things are far from rosy for Microsoft’s browser, and although the vulnerability has only been used against IE6, the company has not ruled out that something similar could be used against the later versions. With Microsoft not prepared to give details of how soon a fix will be released, and advising people to leave the appalling IE6 and its successor for the latest version – IE8 – Microsoft’s UK security chief Cliff Evans insists that a non-Microsoft browser is the worse option.

| Source: TechRadar |

Bugatti’s Veyron is Expensive, But Just How Expensive?

Ferrari. Lamborghini. Porsche. These are just a few go-to names that people tend to mention when talking about sports cars, but for the car enthusiast, most would argue that the best cars aren’t built in some mega-factory, but rather by builders who prefer to keep things as small as possible. The reason is usually simple. People who build just one or a few cars at a time tend to be pretty passionate, and really, really care about both quality and design.

One example of this is Bugatti. Sure, it’s now owned by Volkswagen, but the heart of the original company remains intact, and that came to light when the ultra-luxurious Veyron was released in 2005. This car took everything the company gained from its nearly 100 years of operation and built what was the fastest supercar on the planet, with incredible mechanics and design. But, what makes it super?

For starters, it’s equipped with a quad-turbocharged W16 engine producing 1,001 horsepower, and with its design, the beast is able to top out at over 250 MPH. That’s so fast that chances are you don’t live anywhere near a track where a speed like that is even possible. Of course, there’s more than just performance that makes this car, but there’s too much to mention in a simple news post. One spec worth noting, though, is the price tag: at least $1,500,000.

Alright, the Veyron is expensive… no one is surprised. But just how expensive? You know… for the long haul? Believe it or not, it’s so expensive to drive that one owner who wanted to let loose on a track several hundred miles away found it was actually cheaper to send his car there and take his private jet instead. Damn, now that’s saying something.

After taking a look at some numbers, it’s not too hard to understand the reason behind this. For routine service on the Veyron, it will set the owner back about £12,866 ($20,922 USD). Compare that to £1,680 for the even rarer Ferrari Enzo. Oh, and the tires, which Bugatti recommends changing often (if you use the car to its potential, this is understandable)? £23,500 for a set of four.
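
To put those numbers side by side, here’s a quick Python sketch using only the figures quoted above. The exchange rate isn’t stated anywhere; it’s simply what the £12,866 / $20,922 pair implies.

```python
# Figures quoted in the post (GBP unless noted).
veyron_service_gbp = 12_866
enzo_service_gbp = 1_680
veyron_tires_gbp = 23_500
veyron_service_usd = 20_922

print(f"{veyron_service_gbp / enzo_service_gbp:.1f}x an Enzo service")        # 7.7x
print(f"{veyron_tires_gbp / veyron_service_gbp:.1f}x a Veyron service")       # 1.8x
print(f"{veyron_service_usd / veyron_service_gbp:.3f} USD per GBP implied")   # 1.626
```

So one Veyron service costs about as much as seven or eight Enzo services, and a set of tires costs nearly twice the service itself.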

Yes, for the price of replacing a Veyron’s set of tires, you could have bought a brand-new modest BMW. Now that’s what I call expensive.

Most of us probably won’t be too disposed to sympathising with Bugatti Veyron owners over the maintenance costs they’ll face when running one of these magnificent machines – you practically have to be a billionaire to afford one – but you can understand why anyone might wince at some of the bills that Volkswagen’s finest can run up. A routine service, for instance, costs £12,866 or the price of a middling Polo, whereas an annual service for a Ferrari Enzo is £1680, which seems like a bargain by contrast.

| Source: Autocar |

Apple Dominates the Mobile App Market in 2009

The term “cell phone” used to mean one thing… a portable phone that used cellular towers to place a call. Today, the term almost seems defunct, because today’s phones do much, much more than place a simple call. Strangely enough, today’s phones seem to do that one thing worse than phones from ten years ago, and if there’s a reason for it, it’s because the models we have today are designed to do more than ever.

At first, smartphones and the like came pre-equipped with various software packages, and while that’s all fine and good, it leaves little room for customization. Then more robust models came out, and consumers were able to add their own applications at their leisure for the first time. I believe the ability to add apps to a phone had existed for a while, but it really took off when Apple released the App Store as part of iTunes, for use with its iPods and iPhones.

To say that it “took off” would be an understatement, because even though the App Store has only been available for just over a year-and-a-half, it hit a staggering two billion downloads this past September, and at the rate it’s going, it’s set to hit 3 billion soon, and 4.5 billion by the end of the year. Of course, there are other app stores available for non-iPod/iPhone devices, so just how successful are those?

Well, take a look at this number: 99.4%. That’s the share of 2009’s mobile app sales that belonged to Apple and its App Store. That’s a bit of a domination, right? So much so that it seems pointless to even explore the competitors’ numbers, but simple math would suggest they’re low (I am one of those who hopes that changes in the future, though).

Thanks to these 2.5 billion apps on the App Store, a total of $4.2 billion in revenue has been brought in, and at the rate things are going, that number could rise to, get this, $29.5B by the end of 2013. Just think about it… that’s just for mobile apps, not the hardware!

Apple first opened the App Store in July 2008, along with the launch of the iPhone 3G and the release of iPhone OS 2.0. Sales were brisk, with 300 million apps sold by December. After the holidays, that number had jumped to 500 million. Earlier this month, Apple announced that sales had topped 3 billion; that means iPhone users downloaded 2.5 billion apps in 2009 alone. Gartner’s figures show another 16 million apps that could come from other platform’s recently opened app stores, giving Apple at least 99.4 percent of all mobile apps sold for the year.
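
The 99.4% figure falls straight out of those two numbers. Here’s a minimal Python sketch that treats Gartner’s 16 million as the total for everyone else; since that figure is an estimate of what “could come” from other stores, Apple’s real share is at least this high.

```python
def market_share(leader: float, others: float) -> float:
    """Leader's fraction of the combined total."""
    return leader / (leader + others)


apple_2009 = 2.5e9   # App Store downloads in 2009, per the quoted figures
others_2009 = 16e6   # Gartner's figure for all other app stores combined
print(f"{market_share(apple_2009, others_2009):.1%}")  # 99.4%
```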

| Source: Ars Technica |

Could Gigabyte’s H55M-USB3 be the Ultimate H55 Board?

As I mentioned in our news last Friday, we paid a visit to Gigabyte’s suite at the Venetian in Las Vegas a little over a week ago, and just as we expected, there were many, many motherboards on display. They spanned many different chipsets, but the one the company was obviously most interested in talking about was its H55 line-up, which, as you likely know by now, is required to take full advantage of Clarkdale with its integrated graphics. If you still haven’t read our Clarkdale article, you can do so here.

Currently, Gigabyte offers three H55 models to choose from, the H55M-USB3, H55M-UD2H and also the H55M-S2H. The model that the company was most keen to show off was the H55M-USB3, which you can see running in the photograph below. As the name suggests, it supports USB 3.0, and it’s currently the only one of the three to do so, which is why Gigabyte is making it ultra-clear with the model name.

Of the company’s H55 boards, the H55M-USB3 could be considered the flagship, with all the bells and whistles. Unlike the other H55 boards we’ve taken a look at up to this point, this one features DisplayPort support, along with the outputs we’d expect, DVI and HDMI. Other advantages over the H55 models we’ve looked at include additional SATA ports (7 onboard, 1 eSATA), two full-length PCI-E x16 slots for CrossFire X, and for those who refuse to let go, floppy and IDE connectors.

Things like overclocking are hard to predict without having a hands-on test with the board, but if the ASUS P7H55D-M EVO we took a look at during our Clarkdale launch article is anything to go by, H55 is hardly going to be limiting in that regard. If you’ll recall, we hit a 4.33GHz stable clock on ASUS’ board, so I’m hoping to see the same thing on Gigabyte’s H55M-USB3 when I receive a sample in the coming week.

In addition to all that’s mentioned above, this board features the wide range of other Gigabyte motherboard technologies we’ve come to expect, such as Ultra Durable 3, Smart 6, DES2 power management, 3x USB power boost (for USB 3.0), DualBIOS and more. Also, though Intel’s H55 chipset doesn’t support AHCI or RAID by default, Gigabyte adds in the support with the help of a JMicron controller, so that you won’t have to go without.

I am not certain of the board’s pricing at this time, but I think it’s safe to say that we could expect it to be around the $120 mark. Stay tuned to the site as I hope to have our full review of the board posted in the weeks ahead.

| Source: Gigabyte GA-H55M-USB3 Product Page |

Windows 7 Gains Ground on Steam’s Hardware Survey

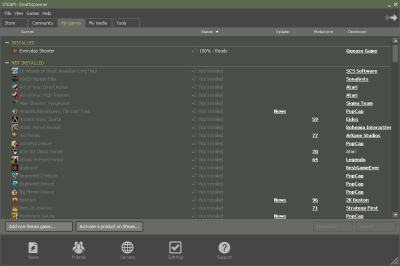

It’s been a while since I last took a look at Steam’s hardware survey results, but in recent weeks, more than one person has told me I need to get on it, so here we are. I was pushed towards checking it out because a lot has changed in the gaming landscape over the past few months, and believe it or not, Windows 7 is looking good in overall usage.

To no one’s surprise, I’m sure, Windows XP is still the dominant version, with a 44.77% overall share, while Windows Vista (32-bit) comes in second with 20.71%. Windows 7 is growing, though, and currently sits at 15.61%, which is rather substantial when you consider that the OS only came out towards the end of October, and this survey covers anyone who runs Steam and has taken part. Also interesting is that this figure is for the 64-bit version alone… the 32-bit version accounts for another 7.45%, for a combined share of 23.06%.

Operating systems aside, Valve’s survey also found that quad-core adoption has been growing, with a 13.31% increase over the past 18 months. To be honest, I would’ve expected that number to be a bit higher, but it’s understandable, since many people, the majority in fact, don’t upgrade quite so often. Currently, Intel holds 68.97% of the CPU share, with AMD accounting for the remaining 31.03%.

The most common DirectX 10 GPU for users who can take advantage of it (Vista/7) is the HD 4800 series from ATI. But those running DirectX 10 GPUs without the ability to take full advantage of them are even more common, with NVIDIA’s GeForce 8800 accounting for 13.64%. But, again, the HD 4800 doesn’t fall too far behind, with 12.88%. Overall, all of these results are quite interesting, especially with Windows 7. It’s just too bad that so many people are still sticking with the 32-bit version in lieu of x64!
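
Here’s the Windows 7 math as a tiny Python sketch. I’ve stored the shares in hundredths of a percent so the addition stays exact; that’s just a choice to sidestep floating-point rounding, and the figures themselves are the survey numbers quoted here.

```python
# Survey shares in hundredths of a percent (4477 means 44.77%).
shares = {
    "Windows XP": 4477,
    "Windows Vista (32-bit)": 2071,
    "Windows 7 (64-bit)": 1561,
    "Windows 7 (32-bit)": 745,
}

win7 = shares["Windows 7 (64-bit)"] + shares["Windows 7 (32-bit)"]
print(f"{win7 / 100:.2f}%")                     # 23.06%
print(win7 > shares["Windows Vista (32-bit)"])  # True
```

Put another way, counting both editions, Windows 7 already outnumbers 32-bit Vista among Steam users.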

Every few months we run a hardware survey on Steam. If you participate, the survey collects data about what kinds of computer hardware and system software you’re using, and the results get sent to Steam. The survey is incredibly helpful for us as game developers in that it ensures that we’re making good decisions about what kinds of technology investments to make, and also gives people a way to compare their own current hardware setup to that of the community as a whole.

| Source: Steam Powered Survey Results |

Modern Warfare 2 Hits $1B… What Have We Learned?

Love or hate the series, there’s little denying that Infinity Ward has done right with Call of Duty. Each major addition to the series has sold really, really well, and with the latest iteration, Modern Warfare 2, the company achieved an incredible feat… it’s sold over $1 billion worth of product. It can be assumed that this number includes things other than the game as well, such as official strategy guides and other related products.

The interesting thing, I find, is that Modern Warfare 2 has been filled with various forms of controversy since its launch, yet it still managed to hit such a staggering number of sales. Ars Technica takes a look at this very thing, and looks back to find out what we can learn, or have learned, from the fact that despite the downs, Modern Warfare 2 still managed to fill the company’s pockets.

One point mentioned here, and one I agree with, is that “PC gaming doesn’t matter”. It’s hugely unfortunate, but the gamers have spoken, with the vast majority of the sales belonging to the console versions (which still blows me away, since I could never imagine actually preferring an FPS with a gamepad). As mentioned in our news before the launch, PC gamers were getting shafted, and it looks like it didn’t matter. Those who were miffed with the issues with the PC version didn’t care enough about the cause to refuse a purchase, so… we can’t exactly expect much of an improvement in the future.

The other points mentioned here are that pricing matters, and that the gaming press can be shoved around. The only way sites could publish a launch review was to go straight to Activision to play the game under close watch. This even broke the policies of some websites, yet they went anyway. That’s the equivalent of giving someone a talk show, and then taking it back seven months later!

The majority of gamers will experience the game on consoles, and PC gamers don’t need things like a console for tweaking the game or support for mods. Infinity Ward and Activision locked down the PC version of Modern Warfare 2 to make sure it was played how and when they want. New content is coming for the game, but let’s hope that the Microsoft exclusivity doesn’t extend to the PC.

| Source: Ars Technica |

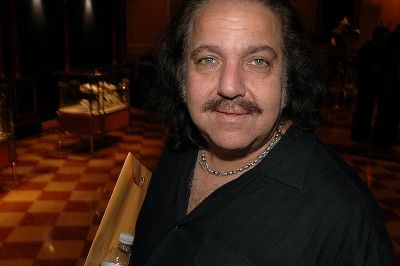

Why Does Ron Jeremy Hate Technology?

Arguably, one of the most famous people to ever star in a pornographic movie isn’t someone you’re likely to immediately think of. Sure, there are the Jesse Janes and the Jenna Jamesons, but it seems no matter how old you are, and regardless of whether you watch such films yourself, you’ve likely heard of Ron Jeremy. Given that he’s starred in over 2,000 pornographic films, along with some mainstream movies, it’s hard not to know of him.

But why am I bringing him up on a tech website? Well, like most years, Jeremy appeared at the annual Adult Entertainment Expo, which just so happens to take place at the same time as CES. This year, though, he appeared at CES itself, and publicly explained why he hates technology, the Internet in particular, and why the porn industry has little to do with the reasons many children are growing up with major issues.

The reasons behind his hatred of the Internet boil down to a couple of things, namely that it allows crooks to succeed and become millionaires, and that it allows pretty well anyone to download any type of content they want for free, also known as piracy. Another reason he hates the Internet is easier to understand. Jeremy has had his identity stolen twice, and although we’re not sure how it happened, having it happen at all would be enough to make anyone hate the Internet just a wee bit more.

Jeremy also goes on to say that pornography isn’t as big a concern for kids as violent video games are. I can agree with this to an extent, although I think anything can influence anyone; when it comes down to it, being violent to someone else is one of the worst things you can do. Still, if a child gets their hands on pornography, it can’t really be considered a good thing. But Jeremy does say something I can wholeheartedly agree with:

“Parents can block this stuff and need to stop blaming porn for a bad case of parenting. Parents should watch what their kids are doing online and take some responsibility. Don’t blame us. We have disclaimers, age notifications and software blockers. We are doing our bit.”

He first attacked the internet, stating, “The internet has allowed a lot of crooks, thieves and squatters to become millionaires. Normally, they wouldn’t get a job washing dishes. I have a lot of problems with the internet and with identity theft. It has happened to me twice with my bank account, so I am not a big fan. People can download stuff for free these days, so why the heck are they going to buy it? The only ones making money out of porn are the novelty companies. I just hate the internet in general.”

| Source: DailyTech |

Gigabyte Shows Off its Graphics Card Line-up

At last week’s CES, I met up with our good friends at Gigabyte to see all of what was new from the company for Q1, and as you could expect, there were many, many motherboards, and likewise, many graphics cards. I’ll focus on the GPU side of things here, and as you can see below, the company had a rather modest collection to show off. Gigabyte has been a little quiet with graphics cards for a while, but over the past year, it has begun to devote more of a focus to its line-up, however, so we should be able to expect a wide gamut of models in the future.

Because the company doesn’t want to deliver a card that simply looks like most of the others on the market, it has delved deep into its idea bucket to find out what gamers and overclockers are looking for. For both of these crowds, Gigabyte recently launched its “Super Overclock” series, which essentially amounts to heavily overclocked models. We took a look at the company’s GTX 260 SO a few months ago and were left very impressed.

During our meeting, Gigabyte reiterated why its Super Overclock cards are so special, and the main reason is something called the “GPU Gauntlet”. This is essentially an advanced sorting process, with only the highest-binned GPUs making their way into Super Overclock cards. That would explain why Gigabyte’s SO cards are the highest-clocked on the market, at least up to this point.

So far, the company has focused on NVIDIA parts for its SO series, but it has ATI cards coming up, including the HD 5870, which you can see in the front in the image below, second from the right. Also on display was the company’s GeForce GT 240, which you can see in the back to the right, sporting an interesting silver-techy cooler that our Gigabyte rep nicknamed the “Transformers cooler”. On the opposite end of the table was another GPU with a strange black cooler, which she nicknamed… “Batman”. One look at them both and you can really understand why.

In addition to all of the models featured here, Gigabyte also talked about the other enhancements it makes to its GPU line-up, in particular its Ultra Durable VGA design, which I’m sure most of our visitors are familiar with by now. Essentially, these cards feature 2oz copper PCB layers like the company’s motherboards, premium memory, Japanese solid capacitors, low RDS(on) MOSFETs and ferrite core chokes. If you own a Gigabyte motherboard, these will all sound very familiar.

After taking a look at Gigabyte’s GTX 260 Super Overclock and walking away so impressed, I hope to get one of its upcoming Radeon SO cards in, as I can’t help but be curious to see just how far the company is going to push these already very powerful GPUs.

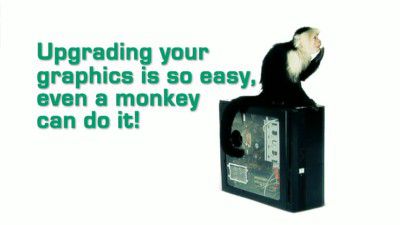

Who Knew Upgrading a GPU Was So Easy?

I’m going to jump to conclusions as usual and assume that most people visiting our site know how to install a graphics card. In fact, I’m sure most know how to build a computer from scratch. Though the layman often considers it difficult, building a PC is actually quite simple. I like to compare it to building something out of Lego. It’s fairly obvious where most pieces fit, with the potential complications being things like the ATX chassis connectors or installing the CPU cooler.

But, believe me, it’s easy. It’s not only me saying this, though, but AMD as well. The company is taking it just one step further, and claims that installing a GPU is so easy… even a monkey can do it. Yes, you read that right.

The company got a cute monkey, Louie, to not only take the side panel off of the machine, but to install a brand-new single-slot GPU. You know he did good, because he smiles afterwards, and he even boots up the machine and installs the Catalyst drivers. The monkey then wastes no time at all in loading up some movies and Tom Clancy’s H.A.W.X.

Though I don’t particularly agree with it, one comment in the video’s comment thread that struck me as funny came from YouAreThick: “ATI – You shouldn’t be using monkeys for advertising, Louie would obviously be put to better use working on your appalling drivers.”

| Source: AMD Unprocessed YouTube Video |

OCZ Teases Portable USB 3.0 Solid-State Drive

As I have mentioned in our news a couple of times over the past week, USB 3.0 is a fast-growing technology, and the vast number of equipped products either on the show floor or in one of the many hotel suites around Vegas proved to me that we should all be expecting a quick upswing of adoption in the months to come.

USB 3.0 can be used for many things, but most commonly it will be used for storage devices, at least right now. These could include external enclosures where you throw your own hard drive inside, external enclosures that have a fixed amount of storage straight from the vendor, flash drives, and also SSDs. Yes… SSDs via USB, with no AC adapter requirement.

This in particular is the kind of USB 3.0 product I’m looking forward to testing in the near future, because the idea of carrying around 100GB+ in my pocket, plugging it into any USB 3.0 port and getting speeds similar to a regular hard drive excites me. Of little surprise, OCZ is soon to release such a drive.

The product isn’t named yet, so for now the company is calling it the “USB 3.0 Portable Solid State Drive”. Under its hood are a Symwave controller and MLC chips, similar to those used in current consumer SSDs. When it’s plugged into a PC, you will see speeds of well over 100MB/s in both directions. I am not sure of the exact figures, because I forgot to photograph the monitor, but either way, it was fast. At the speeds I saw, it will outpace most mechanical hard drives.

Need some real performance details? OCZ says that with a typical USB 2.0 drive, a Blu-ray movie (25GB) could be copied in 13.9 minutes. With its USB 3.0 drive, that time drops to 70 seconds. Likewise, for a 1TB backup (this is theoretical since these things won’t see that kind of density for quite a while), USB 2.0 would take 9.7 hours, while the USB 3.0 drive could finish the transfer in 47 minutes.
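OCZ’s two examples can be turned into implied average throughput with simple arithmetic. The sketch below assumes decimal units (1 GB = 1,000 MB, 1 TB = 1,000,000 MB) and that each quoted time covers the whole transfer; neither assumption comes from OCZ, and the `throughput_mb_s` helper is purely illustrative.

```python
# Implied average throughput behind OCZ's quoted copy times.
# Assumption: decimal units (1 GB = 1,000 MB, 1 TB = 1,000,000 MB).

def throughput_mb_s(size_mb: float, seconds: float) -> float:
    """Average transfer rate in MB/s for size_mb copied in `seconds`."""
    return size_mb / seconds

# (size in MB, time in seconds), taken from OCZ's examples
claims = {
    "25GB Blu-ray, USB 2.0 drive": (25_000, 13.9 * 60),
    "25GB Blu-ray, OCZ USB 3.0 SSD": (25_000, 70),
    "1TB backup, USB 2.0 drive": (1_000_000, 9.7 * 3600),
    "1TB backup, OCZ USB 3.0 SSD": (1_000_000, 47 * 60),
}

for label, (size_mb, seconds) in claims.items():
    print(f"{label}: ~{throughput_mb_s(size_mb, seconds):.0f} MB/s")
```

Both scenarios work out to roughly 29 to 30 MB/s over USB 2.0 and roughly 355 MB/s for the USB 3.0 drive, so OCZ’s two figures are consistent with each other and imply close to a 12x speed-up. These are OCZ’s claims, not measured results.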

Because the drive uses the same kind of MLC flash found in consumer SSDs rather than spinning platters, speed degradation shouldn’t be as noticeable, so copying a huge folder shouldn’t see the transfer rate drop partway through, which is far different from how it is on a mechanical hard drive. You can expect to see OCZ’s portable drive sometime this spring, with pricing to be decided then.

CES 2010: It’s Almost Old News!

The past week has been super-long, so I’m glad that I can finally say that CES 2010 is a wrap! The entire event was good overall, although it seemed to be quite a bit smaller than last year, which I wasn’t really expecting (I expected an upturn). It could still be the economy, or the fact that CEA (the folks who handle CES) charges insane rates, but either way, I’m hoping to see a larger event next year.

I do have to admit one thing… I was completely off the mark when I thought I’d have lots of time to get to a PC and write content, hence our overall lack of CES-related content. From the early morning to the late night, I was out of the hotel and away from Internet access, so it was virtually impossible for me to get to posting. By the time I got back to the hotel after a long day, I was wiped.

The lesson? Bring a mobile wireless card with me next year. I wish this thing called “WiMax” would finally catch on over here, since it would make things a whole lot easier! That aside, the event overall was quite good, and I stumbled on numerous products that I found to be pretty cool. Most of these I haven’t talked about yet, but I will over the next few days. If time permits, I’d also like to do a “Best of” article once again, as I have a couple of outstanding products in mind that deserve such recognition.

Later today, I’ll be making a post in the forums with a slew of photos that I took while in Vegas, so you can see everything I saw, or at least what was of interest. A lot of photos I wish had come out didn’t, so I won’t be posting as many as I’d like, but I’ll still get some good ones in there. Shooting photos in a seriously dark club or restaurant is tough.

As I write this, I’m still in Vegas, and I’ll be home mid-week. Shortly after I get back, I’ll be posting a review of a new graphics card (no names!), and over the next few weeks, as always, we’ll continue to deliver a barrage of quality content for you to eat up (it won’t make you fat, I promise).

That picture above was taken from a suite in the Palms hotel, which is where Gigabyte and Patriot’s joint party was held. That suite easily offered the best view I’ve ever seen of Vegas!

Thermaltake Shows Off USB 3.0 BlacX Hard Drive Dock

Of little surprise, I’m sure, USB 3.0 devices were all over CES this year. So much so that it was just plain difficult to keep track of them all. You can expect us to post reviews of many of them in the months to come, from flash-based drives to external enclosures, as there’s going to be an obvious demand for information on which products rock, and which could be better.

I’m willing to bet that one of the better ones is Thermaltake’s BlacX hard drive dock. Rather than an enclosure, the BlacX is a dock that lets you plug in a 2.5″ or 3.5″ S-ATA drive and immediately read and write to it on your PC. It’s like a thumb drive, but this method gives you far more storage to work with, and with the help of USB 3.0, you get far improved performance as well.

Thermaltake had the latest dock on display, and set it beside the older USB 2.0 version. Each dock had a Patriot 128GB SSD plugged in, and Thermaltake was giving real-world performance examples to prove just how worthy USB 3.0 is. When copying the same folder to each drive, the process took just over 2.5 times longer on the USB 2.0 dock.

That’s not quite the 10x speed-up you might expect, given that’s the improvement we were promised, but like most theoretical limits, real-world performance depends on a lot of factors. In this case, the folder contained many files, which slowed things down. The USB 3.0 connection managed about 30MB/s, while the USB 2.0 connection was stuck at around 12MB/s.
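To put the demo in context, the sketch below compares the observed ratio with the ratio of the two buses’ nominal signaling rates (480 Mbit/s for USB 2.0 Hi-Speed, 5 Gbit/s for USB 3.0 SuperSpeed), which is where the roughly 10x figure comes from. Dividing bits by eight ignores encoding and protocol overhead, so the “share of nominal rate” numbers are rough comparisons under that simplification, not measurements.

```python
# Compare Thermaltake's demo figures against the nominal USB signaling rates.
# Simplification: bits / 8 = bytes; encoding and protocol overhead are ignored.

USB2_NOMINAL_MBIT_S = 480    # USB 2.0 Hi-Speed signaling rate
USB3_NOMINAL_MBIT_S = 5000   # USB 3.0 SuperSpeed signaling rate

observed_mb_s = {"USB 2.0": 12.0, "USB 3.0": 30.0}  # from the show-floor demo

theoretical_ratio = USB3_NOMINAL_MBIT_S / USB2_NOMINAL_MBIT_S
observed_ratio = observed_mb_s["USB 3.0"] / observed_mb_s["USB 2.0"]

# Fraction of each bus's nominal rate (converted to MB/s) that the demo used
usb2_share = observed_mb_s["USB 2.0"] / (USB2_NOMINAL_MBIT_S / 8)
usb3_share = observed_mb_s["USB 3.0"] / (USB3_NOMINAL_MBIT_S / 8)

print(f"Nominal speed-up:  {theoretical_ratio:.1f}x")
print(f"Observed speed-up: {observed_ratio:.1f}x")
print(f"Share of nominal rate used: USB 2.0 {usb2_share:.0%}, USB 3.0 {usb3_share:.1%}")
```

The many-file workload used a far smaller slice of the USB 3.0 link than of the USB 2.0 one, which fits the explanation that the files themselves, rather than the bus, were the bottleneck.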

The BlacX USB 3.0 will become available within the next few months, and the cost shouldn’t be much different from the USB 2.0 model, at around $35.

OCZ Shows Off Modular PCI-E Solid-State Disk, Z-Drive p88

Mentioning the name “OCZ” a couple of years ago would have spawned thoughts of the many different kits of memory that the company offered. Today, the company’s major focus has shifted towards SSDs, and this was evident from the fact that not a single memory module was on display at its Aria suite at CES. It’s no surprise, either, given the sheer popularity of SSDs in the marketplace.

We’re all familiar with OCZ’s PCI-E solid-state disks, the Z-Drive, but on display was a soon-to-be-released model that’s completely unlike the others. Designed for those with huge storage performance needs, the Z-Drive p88 is a customizable PCI-E solution that allows the user to physically change the card from RAID 0 to non-RAID, each offering its own set of benefits.

As you can see in the image above, the card consists of many modules that look like SO-DIMMs but actually carry the same flash chips we’ve come to see in all other SSDs. Here, though, the modules are user-replaceable, in case one happens to go bad or for storage upgrade purposes. You can purchase the card with a single rack of modules, and also add on a daughter card which turns it into a RAID 0 configuration.

When you take the RAID 0 route, the speed improves dramatically, topping out at 1,300MB/s read and 1,200MB/s write. It gets even better, because the “e” versions of these drives are faster still, with up to 1,400MB/s read and 1,500MB/s write. Yes, the write is indeed faster than the read in this case. When launched later this month, the p88 will be offered in densities of 512GB, 1TB and 2TB. The price tag? It’s not finalized, but expect it to soar up towards $8,000 for the 2TB model.
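The speed-up from a RAID 0 configuration comes from striping: consecutive chunks of data are placed on different members, so a large transfer is serviced by several modules in parallel. The sketch below illustrates generic RAID 0 block mapping only; the member count, stripe size and the `locate` helper are hypothetical, since OCZ hasn’t detailed how the p88 stripes data across its modules.

```python
# Generic RAID 0 striping sketch: logical blocks rotate across members in
# fixed-size stripe units, so a sequential read keeps every member busy.
# Hypothetical parameters; not OCZ's actual layout.
from collections import Counter

def locate(block: int, members: int, stripe_blocks: int) -> tuple[int, int]:
    """Map a logical block number to (member index, block offset on that member)."""
    stripe = block // stripe_blocks        # which stripe unit holds the block
    member = stripe % members              # stripe units rotate across members
    row = stripe // members                # complete rows of stripe units before it
    offset = row * stripe_blocks + block % stripe_blocks
    return member, offset

# A 64-block sequential read over 4 members with 4-block stripe units
reads = Counter(locate(b, members=4, stripe_blocks=4)[0] for b in range(64))
print(dict(reads))  # {0: 16, 1: 16, 2: 16, 3: 16}
```

Each member ends up serving an equal share of the read, which is why striped throughput can approach the sum of the members’ individual speeds; the trade-off is that losing any one member loses the whole array.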

CoolIT Shows Off Ultra-Thin Omni Liquid GPU Cooler

CoolIT has long been known as a company that provides innovative and effective cooling for the enthusiast, and at CES, the company showed off four new products that aim to bring even better cooling performance to all of the components inside our PCs. The company even managed to snag four CES Innovation awards for these products, so congrats to them for an impressive showing!

Of the products shown, the one that attracted my attention the most was the Omni A.L.C. GPU cooler, which uses CoolIT’s liquid-cooling technology to keep your GPU as cool as can be, even during overclocking. There are two reasons the Omni is interesting. The first is that once you buy the system, it’s good to last a while, regardless of whether you upgrade your GPU or not.

The cooler itself is a long metal plate, designed specifically for a certain graphics card. You slide that onto your GPU and secure it, and then mount the large radiator to the top of your chassis. Though it’s liquid-based cooling, I don’t believe it requires a pump, or at least I don’t remember seeing one. The product also doesn’t include fans, so you are free to use your own.

Because CoolIT will create new custom plates for all of the high-end cards, when you upgrade to the latest model you will just need to swap out the plate, not the rest of the system. How much these plates would cost, I’m entirely unsure. Currently, the Omni isn’t set to go on sale at retail; CoolIT is instead looking for partnerships to see it bundled with a graphics card or used in a PC by a system builder. I’m hoping that it will become available to DIYers when it’s released.

One of the biggest benefits I see here is the space it frees up in your chassis. Sure, you need a huge rad, but that stays at the top of your chassis, and you free up tons of room in the central part of your PC. Take a look at this picture to see what I mean. These are dual-GPU graphics cards, but they’re as thin as, if not thinner than, a card with a regular single-slot cooler!

| Source: CoolIT Omni GPU Cooler |

EVGA’s W555 Dual-Socket Motherboard Redefines High-End

Just over a week ago, EVGA sent out a teaser image on its Twitter feed that showed off an upcoming motherboard like no other. The first major feature is its size, which is too large for even the most common full-tower chassis out there, and the second is the fact that it includes two CPU sockets and twice the number of DIMM slots compared to a typical Nehalem-based motherboard.

No surprise, EVGA had the board on display at its private suite in the luxurious Wynn hotel, and I have to say… this is easily one of the most jaw-dropping products I’ve seen at the event, for a couple of different reasons. When I first saw this board, I immediately thought of not only “Skulltrail 2”, but a Skulltrail 2 that might actually be affordable to the enthusiast.

Well, I’m not getting that second wish, but this could indeed be considered a Skulltrail 2 in some regards, as it’s enthusiast-targeted and includes support for two CPUs, which, thanks to the chipset, have to be Intel Xeons, not Core i7s. Along with the two CPU sockets come twice the number of DIMM slots, with support for up to 48GB of DDR3 memory.

EVGA has quickly been rising in the ranks where overclocking is concerned, so it’s no surprise that the company wants to go all out on such a high-end product. In addition to the two 8-pin motherboard connectors, there are also two 6-pin PCI-E power connectors to be used for increased power delivery, which may actually be needed for extreme overclocks – especially when Gulftown arrives.

When overclocking four or more GPUs, the power to the PCI-E slots is going to be a bit lacking, and for that reason, EVGA includes yet another 6-pin PCI-E power port directly above the slots. The company’s recent GTX 285 Classified cards feature 3x 6-pin power connectors, so just picture the number of such connectors that could possibly be used here!

About a year ago, EVGA brought on legendary overclocker Peter “Shamino” Tan to help design the company’s motherboards, primarily on the overclocking side, and he’s put a huge focus on this board in particular in recent months. You can expect it to bring both overclocking and high performance to a new level when the board is released within the next few months, in all its $600+ glory.

Logitech’s Lapdesk N700 Adds Comfort to Laptop Computing

As one of the largest makers of peripherals in the world, Logitech usually unveils some pretty cool products at CES. This year was a little different, though, as the company surprisingly had only one product to show off on the show floor. Yes, just one. The situation was similar to last year, when there were also few, and of those few, most were upgrades.

Either way, the one product that was shown off is pretty interesting, despite being a notebook dock of sorts. The original name for our mobile PCs was “laptops”, and I think it’s easy to understand why the “lap” moniker was dropped fairly quickly. It sucked to use a PC while being scalded… it’s not difficult to figure out that heat was a problem.

As a result of this issue, laptop coolers have become all the rage, and even companies like Cooler Master are dedicating entire lines to them. But Logitech’s is a bit different, because it’s not just a cooler, but a tray that allows you to use the laptop on, get this… your lap, and that also improves the sound capabilities of your notebook.

To make the Lapdesk as comfortable as possible, the entire bottom is essentially a cushion, which I believe is removable. It’s also not entirely flat, but rather curved, so that no matter what position you are sitting in, the notebook will be raised up a little bit to increase comfort further.

On both sides are small speakers, which utilize your notebook’s sound card to deliver far better audio than what your tinny notebook speakers could provide (assuming you don’t have a notebook that has better-than-normal speakers). Oh, and there’s of course the fact that the Lapdesk cools your notebook, with user-adjustable fan speeds.

I’m unsure of a launch date, but it should be soon. When it does launch, it will sell for a suggested price of $79.99.

| Source: Logitech Lapdesk N700 |

Seagate Releases BlackArmor PS 110 USB 3.0 External Drive

With USB 3.0’s launch occurring mere months ago, it was to be expected that equipped devices would be seen all over CES, and so far, that’s proven true. At the “CES Unveiled” press event, I stopped by Seagate’s booth to see what was new, and lo and behold… a USB 3.0 external hard drive. That in itself seems simple, but there’s a bit more to this drive that makes it well worth a look.

First is the fact that this 2.5″ drive is 7200 RPM, so it’s actually able to push speeds that make choosing the USB 3.0 connection beneficial. Second, if you’re using a notebook that doesn’t offer a 3.0 connection (aka: all of them up to now), Seagate includes an ExpressCard right in the box that opens up the support… talk about one heck of a nice feature.

To top it all off, the BlackArmor PS 110 drive includes Acronis’ fantastic backup software, which helps ensure your data is safe, both in the backup respect and the grubby-hands respect. You have the option to encrypt all of your data with 256-bit AES, which means your data is very, very secure.

One last feature which doesn’t seem to be a big deal, but might be for some, is that this 2.5″ drive is a bit shorter than most. Seagate redesigned the internals of the enclosure itself in order to compress the innards around the drive as much as possible. In a quick comparison, I’d guess that Seagate shortened it by at least 0.5″. Not a major difference, but nice nonetheless.

Unlike so many products at CES, the BlackArmor PS 110 is actually available now, and for $179.99. That’s a bit higher than most other 2.5″ drives of the 500GB density, but the inclusion of Acronis’ backup software and the USB 3.0 ExpressCard helps make up for it.

Techgage Goes to CES 2010

Whew, it’s hard to believe, but yet another CES is upon us! With all the trade shows we attend each year, they seem to simply fly by, but CES is always one that’s easy to look forward to. We don’t only get to check out the latest products on the market, but also meet up with old friends, eat some good food and perhaps partake in copious amounts of alcohol (after content is written, of course!)

I admit that I haven’t been keeping up on CES-related news lately, as I’ve been knee-deep in preparing content, but I have a good feeling this year is going to be a good one. Last year, people considered CES to be the smallest ever, but this year, it doesn’t seem like the economy is doing much to hurt the event, as we’ve received more e-mails from companies this year than ever before.

At the show, Intel will unveil its Westmere-based CPUs, while AMD is likely to remain low-key, though it may announce its newest mid-range graphics card (I’m not sure if there’s an embargo, so that’s all I’ll say). During the show, we’ll be meeting with all of the companies we deal with on a regular basis, among others, so I suspect I’ll see some amazing new products there. Of course, I’m not totally gluttonous with my information… I’ll share as much as I possibly can.

Like previous years, I’ll keep our news section updated with information from the show, whether it be new products, technologies or something off the beaten path. I might also do a “diary” forum post as I did with Computex, where I keep everyone in the loop regarding what I’m doing while at the event, so you can see first-hand what it’s like to go to such a conference.

In addition to our news coverage, I’ll likely write a one-pager article for companies that have a lot of interesting products to show off, and also will keep a static article at the top of the page which will include all of the URLs to our coverage. As I write this, my mind’s a blur thanks to a hectic weekend, so what you should take away from this is this: visit the site often this week. We’ll have lots of great stuff for you, CES-related and not!

As for that picture above… no, it’s not CES. But, it’s definitely Vegas!

Intel’s Westmere IGP vs. NVIDIA’s GF 9300

With Intel’s Clarkdale processor set for launch very shortly, there’s a lot of speculation as to the performance of its integrated GPU. To recap, Clarkdale is a Westmere-based processor built on a 32nm node, and it includes an IGP in the same package. The CPU and GPU are separate dies (the GPU die is built on 45nm), but both sit right beside each other on the same substrate.

Intel has had a notorious history when it comes to its integrated GPUs, and for what I consider to be good reasons. I have never enjoyed using the company’s IGPs, as I’ve found them to require a lot more work than I want to put in. When I want to use a GPU, I like to install a driver and know that it will work, even if performance will be lacking. It’s no fun trying to run a 3D application and not have it run at all.

But with the GPU located so close to the CPU, there’s a chance that it will perform a lot better than if it were located in the Northbridge, or as a single chip elsewhere on the board. According to tech site OCWorkbench, that seems to be true, but it still falls short when compared to NVIDIA’s popular GF 9300.

What’s interesting, though, is that the test was done on a Pentium G6950, which has its IGP clocked at 533MHz. Since the Pentium is the lowest-end processor in the Clarkdale line-up, it’s not unreasonable to believe that the mainstream models, such as Core i3 and Core i5, would have much higher-clocked GPUs, which could end up beating out NVIDIA’s GF 9300.

Personally, though, I don’t consider an IGP to make a “gaming” processor, because the performance is just far too lacking. What Intel stresses most about its Westmere IGP is the ability to run “mainstream” titles, such as The Sims 3, and to handle HD content. Given that Intel’s recent chipsets have handled HD content fine, there’s no reason to believe Westmere would be any different. Of course, we’ll have a definitive answer at next week’s launch, so stay tuned.

| Source: OCWorkbench |
