Tech News

Are Girls Bigger Hardcore Gamers than Men?

For as long as there has been gaming, there has been “hardcore” gaming. No, this doesn’t refer to triple-X titles, but rather to those who devote a lot of time to a particular game, or genre, or gaming in general, while also being quite skilled overall. Devoting that much time pretty much lends itself to natural improvement, though, so it’s hard to have one without the other.

Are you hardcore? Well, you might be if you’re a female, if a recent sample group is to be believed. In total, 7,000 Everquest II players were questioned about their play habits, and though it might sound a bit hard to believe, females averaged 29 hours of playtime per week, while males averaged 25 hours. Likewise, the more hardcore of the hardcore saw girls playing an average of 57 hours per week, and males, 51 hours per week.

I have a couple of issues with this survey, primarily because I don’t consider MMOs to be that “hardcore”, in the same sense that chess isn’t hardcore, and also because it focused on a single game, which might actually appeal more to female players for whatever reason. But, it’s an interesting result regardless. So often, women aren’t considered to be gamers, and the common stereotype is that it’s men who spend long hours fragging away.

My gut feeling tells me that for FPS games, the results would be quite a bit different, and it’d be nice to see a similar poll done with one of the top games, such as Counter-Strike: Source, or Left 4 Dead. On the casual gaming side, it’d also be interesting to see the results, although today, thanks to games found on popular social networking sites, it seems like everyone takes part in some casual game (not me, and proud of it!).

And female gamers spent, on average, far more hours than their male counterparts. Top female players logged 57 hours a week, while top male players only logged 51 hours a week. And on average, girls logged 29 hours a week versus 25 hours for males. Age isn’t the only thing women tend to lie about, according to the survey. More women than men responded that they lied to their friends and loved ones about how much time they played.

| Source: DailyTech |

NVIDIA’s Fermi Launch Delayed Until March

This news can be filed under “rumor”, because nothing is verified, but the word that’s spreading like wildfire is that NVIDIA’s first Fermi-based graphics cards are going to be delayed until March. As you may recall, Fermi was originally supposed to launch late this year, but was rumored to have been pushed back until January. As it stands, it’s being pushed back even further, which I’m sure makes ATI all the happier.

Interestingly enough, Fermi shares similar goals with Intel’s Larrabee, a chip that was also delayed earlier this month. Could Fermi’s delay be related? It’s hard to say, because if that were the case, then it would mean that NVIDIA was more interested in simply getting something out the door than in having a truly revolutionary product.

More likely would be other causes, that could range from efficiency issues to problems with producing the die on a 40nm node. You may recall that this past fall, NVIDIA showed off a Fermi card that was touted as being the real thing, but was actually a mock-up, which led everyone to believe that there were issues somewhere. But just last month, the company teased with an image of a Fermi-based card running Unigine’s DirectX 11 demo, although even that couldn’t be verified as true since it’s just a photo.

The one thing that’s certain is that the major beneficiary of this delay is AMD, whose ATI cards are still market-leading in terms of performance, and by a rather sizable margin, even when pricing is taken into consideration. With Fermi’s launch pushed to March, AMD has two more months to soak up the success, and now that its HD 5800 series cards are finally starting to see ample supply, it’ll definitely be reaping the rewards in the months to come.

A multi-quarter delay in the launch of a flagship product is always bad news under any circumstances, but this development strikes NVIDIA at a particularly awkward time. Earlier this year, the company bowed to the inevitable and halted development of its chipsets for non-Atom x86 platforms. This move was due in part to lack of a DMI bus license, and in part to the fact that the GPU’s impending move onto the CPU die means that integrated graphics processors are a losing battle in the long-term if you aren’t also in the x86 CPU business.

| Source: Ars Technica |

Linus Torvalds Turns 40

Yesterday, Linus Torvalds, creator of the Linux kernel, turned 40. While birthdays for the most part aren’t too important to anyone other than the person celebrating, this one is special for a couple of reasons. It was 19 years ago that Linus took all of his Christmas and birthday money, and spent it on a PC in order to focus on programming and create some cool things.

The computer was a Sinclair QL, first released in 1984 and discontinued a mere two years later. The processor was based on Motorola’s 68008, clocked at 7.5MHz, and the basic model featured 128KB of memory. In lieu of floppy disks, the QL used a proprietary format called the Microdrive, which had cartridges capable of holding 85KB of data. The interesting thing I find is that the storage on a cartridge comes close to matching the system memory, and the same could be said today, where a DVD holds 4.7GB of data, and many home PCs have 4GB of memory.

But I digress. According to Linux Journal, Linus purchased this computer knowing it had a few limitations, because it had multi-tasking abilities, which believe it or not, were more of a luxury back then. But it was this system that kick-started his programming, and today, the Linux kernel is used in close to 90% of the world’s supercomputers, on many desktops and netbooks, and it’s even starting to see some growth in the mobile device segment.

It would be interesting to picture just what Linus was thinking about when he purchased that computer, and follow-up machines. Did he realize he was going to create something that would affect the world so greatly? It’s hard to imagine that anyone could predict something like that, but I for one am very glad things worked out as they have. Huge thanks, Linus!

By mid-summer -91, “Linux” was able to read the disk (joyful moment), and eventually had a small and stupid disk driver and a simple buffer cache. So I started out trying to make a filesystem, and used the Minix fs for simple practical reasons: that way I already had a file layout I could test things on. After some more programming (talk about glossing things over), I had a very simple UNIX that had some of the basic functionalities of the real thing: I could run small test-programs under it.

| Source: Linux Journal |

UK ISP Piracy Surcharge Could Force 40,000 Households Offline

Over the past couple of years, media companies have been battling piracy in many different ways, but at the end of the day, their measures all but fail, and prove to be nothing more than an inconvenience to those who run into the temporary roadblock. Despite draconian measures like DRM, sales all-around are up, and it’s for good reason. On the movie side, there have been some stellar titles out there, and for music, the same can be said.

But despite that, companies involved in the music and movie industry are infinitely greedy, and no amount of money would ever appease them. And since piracy exists, what better situation to take advantage of than that? Earlier this year, it was announced that a music industry-backed measure would be taken to help recoup money lost by piracy, by inflating the bills of all Internet customers.

This of course is ridiculous. The main reason is that all those people who don’t ever pirate anything would have to front the extra money, and as a whole, we all know that the “one download = one lost sale” theory is all but a pipe dream. According to UK Government Ministers, the end cost to consumers per year could be upwards of £500 million, an average of £25 per year per Internet customer.

An exec from one of the UK’s leading ISPs, BT, stated that if these changes go into effect, then it could render 40,000 users Internet-less. BT offers Internet packages for as low as £15.65 and as high as £24.46, so it’s easy to understand why exactly a £25 surcharge is so ridiculous… it’s essentially like adding another month onto the year, bill-wise. I really hope to see this plan shot down before it’s put into action. If it gets passed, I have little doubt we’ll see the same thing creep up on these shores, and others.
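
For anyone who wants to check the math, here is a quick back-of-the-envelope sketch in Python. The figures are the ones quoted above; the function names are made up for illustration, and treating the BT prices as monthly package costs is an assumption on my part.

```python
# Back-of-the-envelope check of the surcharge figures quoted above.
# Function names are illustrative; the BT prices are assumed to be monthly.

TOTAL_COST_GBP = 500_000_000      # upper estimate of the yearly cost to consumers
SURCHARGE_PER_CUSTOMER_GBP = 25   # average yearly surcharge per Internet customer


def implied_customers(total_cost: float, per_customer: float) -> float:
    """Number of customers implied by spreading total_cost evenly."""
    return total_cost / per_customer


def surcharge_in_months(surcharge: float, monthly_price: float) -> float:
    """How many months of service the yearly surcharge is equivalent to."""
    return surcharge / monthly_price


if __name__ == "__main__":
    customers = implied_customers(TOTAL_COST_GBP, SURCHARGE_PER_CUSTOMER_GBP)
    print(f"Implied customers: {customers:,.0f}")
    for price in (15.65, 24.46):
        months = surcharge_in_months(SURCHARGE_PER_CUSTOMER_GBP, price)
        print(f"At £{price:.2f}/month, £25 is about {months:.2f} extra months")
```

Under those assumptions, the surcharge works out to roughly one extra month on the priciest package and more than a month and a half on the cheapest one.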

Jeremy Hunt, the Shadow Culture Secretary, said that it is “grossly unfair” for the government to force all broadband customers to foot the bill, and noted that forcing tens of thousands offline will go against government targets of increasing Internet take-up among the most disadvantaged communities. “We are confident that those costs will be a mere fraction of the stratospheric sums suggested by some ISPs,” a BPI spokesman told The Times, adding, “..and negligibly small when set against their vast annual revenues.”

| Source: TorrentFreak |

Microsoft Told to Stop Selling Office Before January 11

It’s the giving season, and we saw a major example of that last week when the FTC slapped Intel with a barrage of accusations. This week, it’s Microsoft on the receiving end, with the gift-giver being the US Court of Appeals. Earlier this year, XML specialist company i4i won a suit against Microsoft for infringing on one of their XML technologies, which resulted in a quick halting of Office sales, but not for long.

This recent event is going to be a bit trickier to get through, because it’s not the first appeal Microsoft has lost, so it needs to figure out a way to end this charade fast. As it stands, the US Court of Appeals has stated that as of January 11, Microsoft will be unable to sell the infringing products, which include only Excel and Word, as far as I’m aware.

What’s likely to happen is that Microsoft will either change the functionality (which is a bit hard to understand from a non-developer standpoint), and re-release the software package, then subsequently remove the previous functionality from already-installed versions with the next patch. Or, Microsoft and i4i could negotiate something and leave things be.

It’s important to note that i4i isn’t the usual scum company looking to make a quick buck, as it is responsible for the algorithms used for the specific handling of XML data (the x at the end of recent Office document extensions denotes XML support), but I’m not quite sure how the code got to be used in the first place. As far as other solutions are concerned, OpenOffice.org users have nothing to worry about… probably because the suite doesn’t support the latest documents, except in a read-only manner.

Microsoft says it’s moving quickly to prepare versions of Office 2007 and Word 2007 that don’t have the “little-used” XML features for sale by January 11, and that the Office 2010 beta “does not contain the technology covered by the injunction,” which can be read in a number of ways. It’s also considering an appeal, so we’ll see what happens next.

| Source: Engadget |

Epic to Release Unreal Engine 3 to Mobile Platforms

In a news post I made yesterday, I touched on the fact that mobile devices are becoming more popular by the day, and if you look back to just a few years ago, you’ll see that the landscape has changed dramatically. Today, mobile devices are being used for a lot more than just talking or texting to someone. Just take a look at the sheer number of apps available for various platforms… it’s amazing.

The most popular alternate use for a mobile device, at least where sales are concerned, is gaming. I admit that I abhor gaming on a device that’s not 100% designed around it, and that includes the iPhone, but I’m so far in the minority, that I’d be harder to pick out than a “Where’s Waldo?”. Gaming on mobile devices, like the mobile lifestyle in general, is more popular than ever, and it’s going nowhere anytime soon.

It’s not just the hardware vendors who are working to increase capabilities on these devices, but developers as well. Let’s face it… 3D shooters don’t always work so well on a mobile device, and they certainly aren’t the easiest on the eyes. But, Epic Games is looking to change that by porting the Unreal Engine 3 to mobile platforms. No joke. It seems ridiculous, but it’s working, and it looks amazing (that’s a direct screenshot below).

Anand Shimpi met up with Epic Games VP Mark Rein last week, and he was given a hands-on demonstration of where things currently stand. The demo is just that, a demo, with no real depth, but rather acts as a tech showcase. There’s a small 15s video that shows just how smooth the gameplay is, though, and I have to say, it’s impressive. This is all done on an iPhone 3GS, which goes to show just how powerful that device’s hardware is. Unfortunately, due to the fact that UE3 mobile requires OpenGL ES 2.0, any iPhone older than the 3GS is incompatible.

I only have one thing to say about all this… “Incredible”. To think that we’re still early in this mobile gaming thing… just imagine where things will stand 3 – 5 years from now. I admit, though, that as much as I respect mobile devices, I’d love to see the same kind of engine ported over to an actual portable game console, such as the next PSP or Nintendo DS. Of course, it wouldn’t surprise me if we did see something like this on the very next major launches.

Mark said they planned to make this available to licensees at some point in the near future. That’s great for end users because it means that any Unreal Engine licensee can now start playing around with making iPhone games based on the same technology. Unfortunately the recently announced, free to the public, Unreal Development Kit (UDK) is Windows only – the iPhone version isn’t included. I’d guess that at some point Epic will change that, it just makes too much sense. Doing so would enable a whole new class of iPhone game development using an extremely polished engine.

| Source: AnandTech |

FCC Investigates Verizon’s Early-Termination Fees

Without question, one of the biggest advances in technology over the past decade or so that affects a great number of people is in the mobile space. Today, it seems like the majority of people out there own a cell phone, and many who do, own one in place of their home phone. It’s no wonder, either, when pricing and minute allowances make it possible. But, there is one major downside to cell phones, and as far as I’m aware, there isn’t a single telco without the issue.

Of course, I’m talking about the ETF, or early-termination fee. This is a fee that’s incurred whenever you opt out of your contract before it’s completed, and it’s never modest. Up until a month ago, Verizon’s ETFs were priced at $175, which seems truly ridiculous, especially for those who’ve completed at least half of their contract. What did it change to, then? Well, it doubled, so it sits at $350. As far as I know, the ETF is only this high on “high-end” cell phones, like the Curve, Droid, Dare and so forth.

Any way you look at it, the fees are incredibly high, especially given the monthly cell phone plans we all pay, which again, are overpriced. The FCC apparently agrees, because it’s intervened and is asking Verizon the hard questions. The biggest issue isn’t that an ETF exists, but the fact that it’s so high, and also that it never changes, regardless of when you opt out (even if you cancel a month before the contract ends, the fee is the same).

Verizon has a variety of excuses it’s using, and the most common one is that it has to get back the cost of the device, and in most cases, it loses money, even after an ETF, because the handsets are so expensive. I have a hard time believing this, because if companies are buying these handsets in vast quantities as they are, they’d be getting incredible deals, not paying anywhere near the retail price.

This isn’t the only problem the FCC is investigating, but it’s one that could help consumers a great deal in the future. I understand an ETF, but I don’t understand why it doesn’t go down as you go through your contract. If that changes, consumers will win. And if you still don’t like the ETF at all, then don’t get the expensive phones… it’s that simple, really.

The new ETFs comes with a new pro-rating system—ten dollars sliced off the fee each month—that still leaves quitters paying over 100 bucks, even if they terminate at the very end of the two-year deal. Klobuchar sent a letter to Verizon president and CEO Lowell C. McAdam calling the hike “anti-consumer and anti-competitive,” and asked the FCC to probe the telco about the move.
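
The pro-rating described in that quote is simple enough to sketch in a few lines of Python. This is only an illustration under stated assumptions: a $350 starting fee that drops by $10 for each completed month, with a made-up function name. Verizon’s actual billing rules (for example, whether the first month counts) aren’t spelled out here.

```python
# Sketch of a pro-rated early-termination fee (ETF).
# Assumes a $350 starting fee that drops $10 per completed month,
# as described in the quote above; the function name is illustrative.

def prorated_etf(months_completed: int,
                 starting_fee: int = 350,
                 monthly_reduction: int = 10) -> int:
    """Fee owed when cancelling after `months_completed` full months."""
    if months_completed < 0:
        raise ValueError("months_completed cannot be negative")
    # The fee never drops below zero, however long the contract runs.
    return max(starting_fee - monthly_reduction * months_completed, 0)


if __name__ == "__main__":
    for month in (0, 12, 23):
        print(f"Cancel after {month:2d} months: ${prorated_etf(month)}")
```

Under these assumptions, someone cancelling after 23 months of a 24-month contract would still owe $120, which lines up with the “over 100 bucks” figure in the quote.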

| Source: Ars Technica |

DFI Rumored to Cease its Consumer Motherboard Production

Well, this is disappointing. According to rumors, DFI, long praised for its innovation and quality, may be shuttering its enthusiast part production in January, and instead will focus solely on the industrial PC business. This might be just a rumor for now, but given various circumstances hovering around DFI over the past couple of years, it wouldn’t come as a major surprise to me if it happened.

Though it’s not related, beginning back in 2006-ish, the PR situation at DFI was messy. It was hard to get in contact with someone, because by the time someone saw your e-mail, you’d find out that the person you were trying to contact left the company. In a single year, I remember dealing with four different press representatives at the company, and it left a bad taste in my mouth, not to mention uncertainty in the company as a whole.

After all, if DFI didn’t want its boards taken a look at, just how much focus does its enthusiast side have? If you think back to the past couple of years, how many DFI motherboard reviews have you seen? Now think back to 2004 – 2005, or even earlier. At that time, you couldn’t visit a tech site without a review, but today, DFI seems to have majorly shifted its focus. But despite that, it’s continually been innovating, and in many ways, it rarely gets the recognition it deserves.

Charlie at SemiAccurate says that one of the reasons for the removal of DFI’s enthusiast segment is that the company tends to get the shaft when it comes to chipset allocation. It’s hard to take that as a surprise, given that the bigger motherboard vendors out there totally dominate, and if you’re a company with a hundred thousand chipsets, you’re likely to sell to your best customers. It’s unfortunate, because it hurts the “little guy”.

That banter aside, let’s hope this rumor proves to be nothing more, or that whatever may be broken can be fixed so that DFI can get on with its business. It was unfortunate to see abit shutter its doors earlier this year, and it’d be just as unfortunate to see DFI go the same route.

You would think that the chipset vendors would have realized that any firm doing innovative things was worth supporting, but that doesn’t seem to have been the case. If all this is true, get your DFI boards while you can. Lets hope someone comes in at the last minute and changes things to prevent another Abit. DFI was one of the last interesting small players out there, and if these rumors are true it will be a shame to see it go away.

| Source: SemiAccurate |

Rob as a Guest on The Tech Report’s Latest Podcast

Late last week, I woke up to a phone call (I sleep in late, but for the sake of not looking like a slacker, I’m not going to admit how late), and about 20 minutes later, I was recording a podcast with our friends at The Tech Report. To say I was unprepared would be a gross understatement, but it was still great fun. I always enjoy discussing tech with Scott, so to put it to podcast form for others to hear was certainly interesting.

Before I jumped into the conversation, James Cameron’s latest blockbuster, Avatar was discussed. After I joined, subjects were tackled such as the Radeon HD 5800 series availability, Flash GPU acceleration, netbook v. ultra-portable notebooks and enough Intel talk to overwhelm your brain. You can check it out here or by clicking the picture below. Big thanks to Scott and his crew for having me on!

Reading or Watching the News… Which is Better?

This subject is one I’ve been juggling around for a while, and I’m wondering if I’m one of the last people on earth who would rather read a news article than watch it. The reason is that over the past year, I’ve noticed an obvious surge of video being posted in lieu of an actual news article, and I have to admit… the entire idea doesn’t enthrall me.

The reason behind my anti-video attitude is twofold. The main reason is that most of the time while I’m on the PC, working or not, I’ll be listening to music. The last thing I want to do is mute it just to be able to listen to a news item. Secondly, I prefer reading, because I find I garner more details about the story that way, and in most cases, it’s also faster. Plus, there’s no need to wait up to 30 seconds before being able to actually read it, as would be the case with most videos.

At the time of writing, and ultimately the reason I decided to pose this overall question, there’s a news site with three articles that pique my interest… all being video. Instead of clicking on any of them, I click on none, and just convince myself that the news either isn’t important, or isn’t interesting. For me, this “issue” raises a question. Are the huge media outlets lazy? After all, it’s much easier to just paste an already-recorded video to the site than to write a news post. Or, do the majority of people genuinely prefer video over text? Am I in the minority here?

Even as I write this, I feel like I am. After all, video on the Internet is far from being a fad, and it’s grown at an alarming rate. Plus, while I seemingly refuse to watch video on the Web, that’s not entirely the case. I regularly watch video at sites like YouTube, for example. But in that case, I go to sites like that for the video… I don’t expect text. On a news site, it’s vice versa.

In talking to a few friends and family members over the past few months about it, I know I’m not alone, but I am still wondering if I and everyone I know who shares a similar opinion are in a small minority. My question is this. Would you rather watch news video or read a real article? Or do you even care?

Adobe and Firefox Top Buggiest Software List

According to statistics compiled by vulnerability management firm Qualys, Adobe’s software products and Mozilla’s Firefox took the top two spots in a list of the buggiest software. The information comes from the National Vulnerability Database, a US government-run repository that tracks important vulnerabilities in hundreds of popular software products.

It’s important to note that while both Adobe’s products and Firefox top this list, it doesn’t necessarily mean that they’re the most vulnerable software on the market, but rather that they have the most reported holes. Firefox in particular, due to its open-sourced nature, has holes patched up fairly quickly, so it can be argued that despite having a record number of vulnerabilities, it’s also incredibly secure.

On the opposite end of the stick, Adobe’s software is not open-sourced, so vulnerabilities are completely left up to the company to both acknowledge (unless an exploiter spots it first) and repair. Given the sheer number of times my installed Adobe software asks me to update, I’d have to assume that the company is fairly quick when it comes to issuing patches, but it’s hard to say for certain.

What is known for certain is that in the span of a single year, Adobe’s vulnerabilities sky-rocketed – from 14 in 2008, to 45 this year. Any way you look at it, that’s a major boost. It also shows that software crackers/attackers have been making a gradual shift from exploiting operating systems to applications. As Microsoft’s number dropped from 44 holes in 2008 to 41 this year, it helps back up the theory. As a whole, though, all of this information emphasizes the need to keep your software up to date, regardless of what it is… but especially if it’s a popular application.

Research from F-Secure earlier this year provides further evidence that holes in Adobe applications are being targeted more than Microsoft apps. During the first three months of 2009, F-Secure discovered 663 targeted attack files, the most popular type being PDFs at nearly 50 percent, followed by Microsoft Word at nearly 40 percent, Excel at 7 percent, and PowerPoint at 4.5 percent.

| Source: InSecurity Complex |

Ubuntu 10.04 to Integrate Social Networking into System Panel

A couple of weeks ago, I reported on an upcoming feature in KDE 4, the popular Linux desktop environment. It’s called “Tabbed Applications”, and acts just as it sounds. A simple description would be the “combining of multiple applications to a single window”. Today, I’m still unsure if I’d personally ever take advantage of such a feature, but it seems likely that it’s one that would eventually branch out into other OSes, because it could have use for many people.

An article I noticed over the weekend introduced me to arguably even more interesting plans in the Linux world, or at least with Ubuntu specifically. And once again, I have to wonder if it’s something we’ll begin to see spread out into other OSes in the near-future. “Social Networking Menu”… do I even need to explain? Probably not, but I’ll do so anyway.

Beginning in Ubuntu 10.04, the developers are going to continue building off of an idea that was introduced in a recent version of the distro, which is the integration of social features in the main system panel. In today’s Ubuntu, if you minimize Pidgin, for example, it won’t be found as an icon in the system tray, but rather nestled in a separate menu for messaging. The refinement of this idea would integrate a new applet called “Me Menu” to support services like Facebook and Twitter, among others.

Picture, for example, being logged into your Facebook account without ever opening a Web browser. You’d be able to control it all from this applet, and either chat to friends online, or post to your wall. The same idea could be added for other popular Web services, of which there are obviously many. Whether or not such a feature would come directly pre-configured with specific services is unknown, but it would seem unlikely to me, since promotion of commercial applications or Web services in general isn’t something I remember ever seeing in a Linux OS. However it works, we can imagine it’d be easy to configure, and I have to admit… I’m intrigued.

Much like the current presence menu, it will offer tight integration with the Empathy instant messaging client, allowing users to control their status and availability settings. It will also integrate with the Gwibber microblogging client to make it possible for users to post status messages to Twitter, Identi.ca, Facebook, and other services directly through a textbox in the Me Menu.

| Source: Ars Technica |

TG Roundup: HD 5770’s in CrossFireX, HDD Encryption, Virtualization for Free

Given the amount of content we’ve posted since our last roundup, I’m a bit behind on things here. But, as I always say… well, never mind, I don’t often say anything worth repeating! So, let’s get right to it. At the end of November, we posted a review of a board that helped finish off three different P55 motherboard reviews within a two week span, and unfortunately, we didn’t save the best for last. Intel’s DP55WG isn’t a bad board, but for the price, I was expecting a lot more.

But this past week, we took a look at ASUS’ P7P55D-E PRO, also known as the first sub-$200 P55 offering from the company, which offers full S-ATA 3.0 and USB 3.0 performance. And thanks to the inclusion of a PLX chip, your primary PCI-E 16x slot won’t have degraded performance, as is common on some other solutions. In addition to that though, the board is feature-packed, overclocks like a dream, and is well worth its price-tag of ~$189.

From a components standpoint, we also posted our look at AMD’s latest Athlon II models, the X2 240e and X3 435. Both proved to be quite the good contenders, delivering great performance for their price-point (sub-$100). What was cool to see was that the 240e beat out Intel’s more expensive Pentium E5200 in almost every test. AMD works hard to dominate the budget CPU market, and it’s doing a great job at it.

On the GPU side of things, I decided to pit two Radeon HD 5770’s together for some CrossFireX action, and I’m glad I did, because the results surprised me. When you picture CrossFireX, or multi-GPU in general, you never expect to see it outperform a higher-end card that costs twice as much, but that’s what happened here. In most of our tests, the CrossFireX HD 5770 configuration either matched or slightly surpassed the performance of the HD 5870. The best part of course, is that taking this route saves you money, and unlike the HD 5800 series, it’s a lot easier to equip yourself with two of these cards.

Continuing our Radeon HD 5770 theme, we took a look at Sapphire’s Vapor-X model earlier this week, and as expected, it was an impressive card. Not only does it run with lower temperatures, but thanks to its improved chokes, it also uses less power than the reference card, despite the +10MHz to the Core clock. The card also happens to include a voucher for a free copy of Dirt 2, so there’s not too much to dislike here, if anything.

Here at Techgage, we don’t often post content that focuses on security, more specifically encryption, but in the past two weeks, we broke from our mold to deliver a look at two products that share similar goals. The first was Kingston’s 16GB DataTraveler Locker+, a thumb drive that actually requires you to enter a password in order to access data. Unlike some solutions, the entire drive here is encrypted, making it an ideal solution for those who don’t want to take chances with their sensitive information.

The other encryption product was from Addonics. Called the CipherChain, this internal hardware solution goes in between your hard drive and the motherboard, and it will essentially encrypt all of the data on the fly. The best part is that this happens without much slowdown, and there are absolutely no drivers that need to be installed on the PC, so it doesn’t matter which OS you use. Even better, no password is needed, but rather a key that plugs into the device.

We wrap up our round-up with a quick look at two different software articles. The first was published last Friday, and is focused around a completely free virtualization solution, Sun’s VirtualBox. If you are new to virtualization, or aren’t quite sure what it is, I highly recommend reading through. Or better yet, first check out our Introduction to Consumer Virtualization, and then read our newer article.

Lastly, just today I published an article that looks through ten different tips and tricks I’ve discovered in the time I’ve been using KDE 4. I still love the desktop environment, and use it daily, and with these tips, it’s made even more enjoyable. Even if you’re not a KDE user, or Linux user in general, check it out. You might be impressed by just how cool some aspects of Linux are!

|

Discuss: Comment Thread

|

Work-in-Progress: Updating our CPU Test Suite

Ever have one of those sudden realizations that something you meant to do six months ago still isn’t done? Well that’s me right now, and it has to do with our proposed CPU test suite upgrade. It’s still a work-in-progress, and one that I hope will be completed soon, but once again I want to open the floor for reader recommendations, or at least for thoughts on our current choices.

It’s not that our current methodologies and suite are poor, but I like to keep things fresh and revise our test selections from time to time. This time around, a great reason to upgrade is the introduction of Windows 7, which we’ve already begun using as a base in some of our content (namely, P55 motherboards).

For the most part, the applications and scenarios we test with in our current CPU content aren’t going to change too much. Rather than dropping or replacing a bunch, we’ll be adding to the pile, for an even broader overview of performance, and to see where one architecture may excel or be lacking. So with that, we’ll be retaining Autodesk’s 3ds Max (upgraded to version 2010) and also Cinebench R10 and POV-Ray. In addition, we’ll also be adding Autodesk’s Maya 2010, where we’ll be taking advantage of the very comprehensive SPECapc benchmark.

For Adobe Lightroom, we’ll be upgrading to 2.5 (unfortunately, it doesn’t look like 3.0 will be made available until well after our suite is completed), and also adding in some similar batch processes with ACDSee Pro 3. Also on the media side, we’ve revamped our test with TMPGEnc Xpress. We’ve dropped using DivX AVI as our main test codec, and will begin using MP4 and WMV instead. In all of my tests, both formats are of a much higher quality, and they’re both very taxing on any CPU.

SiSoftware recently released its 2010 version of SANDRA, so we’ll begin testing with that right away. The biggest feature there that I want to make use of is the encryption test, as it’s designed to take advantage of the brand-new instruction set found on Intel’s upcoming Westmere processors, AES-NI. It’s going to be very interesting to see just what kind of difference those instructions will make for applications that can take advantage of them.
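
As a rough illustration of how software can tell whether a CPU offers AES-NI at all, here is a minimal Python sketch. It assumes a Linux machine, where the kernel lists x86 feature flags in `/proc/cpuinfo` and reports AES-NI as the `aes` flag. The function name `has_aes_ni` is made up for this example, and none of it reflects how SANDRA itself detects the feature.

```python
def has_aes_ni(cpuinfo_text: str) -> bool:
    """Return True if any x86 'flags' line in the given cpuinfo text lists 'aes'."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only look at the 'flags' lines, and match 'aes' as a whole word.
        if sep and key.strip() == "flags" and "aes" in value.split():
            return True
    return False


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo", encoding="utf-8") as handle:
            print("AES-NI available:", has_aes_ni(handle.read()))
    except OSError:
        print("Could not read /proc/cpuinfo (not a Linux system?)")
```

On a processor without the feature, the flag simply won’t appear, and encryption software has to fall back to its regular code path.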

Another entry to our test suite will be CPU2006, an industry-standard benchmark from SPEC that’s used to gauge the overall worth of a PC in various scenarios, from computing advanced algorithms, to compressing video, to compiling an application and a lot more. This is easily the largest benchmark we could ever run on our machine, and in our initial tests, it takes 12 hours to complete on a Core i7-975 Extreme Edition. No, I’m not kidding. The default of the application is to run three iterations, however, so I’m likely to back that down to just one, as in my many experiences, I’ve found the results from individual tests to be incredibly reliable. If the entire suite took 12 hours on a top-of-the-line CPU, I cringe when I imagine how long it would take on a dual-core…

In addition to all this, we’ll be revising our game selection as well, since the games we’ve been using for a while are obviously out of date (especially Half-Life 2, although it still -is- a decent measure for CPU performance). There are some other benchmarks I have in mind, but I won’t talk too much about them right now since I’m really not sure how they will play out.

So with that, I’d like to again send out a request for input from you guys. Do you think we should include another type of benchmark, or scenario? With Westmere right around the corner (beginning of January), it’s highly unlikely that I’ll be able to put a new suite into place for our launch article, but who knows…

|

Discuss: Comment Thread

|

Dell’s Online Store Hit with Changed Prices

As a boss, one of the biggest hurdles you may ever have to face is the letting go of a single employee, or many. In the tech world, and well, in any business in general, layoffs are inevitable, and when they happen, it’s seriously unfortunate. It means hard workers are out of a job, and are unable to easily feed their families, pay their rent and generally take care of the necessities of life. So I’m sure it was with angst that about 700 Malay Dell employees found out they were getting the boot.

Dell currently employs about 4,500 people in Malaysia, so 700 is about 16% of its workforce there. Some have speculated about the reason for the layoffs, and the general consensus is that Dell hasn’t been blowing our minds with new products as it once did. But the global economy is sure to be at fault as well, and who knows, perhaps netbooks have a lot to do with it. It’s hard to tell, at least right now.

For those 700 to-be-laid-off employees, one or more may have decided to have a little bit of fun before leaving, as many prices of products on Dell websites were changed to outrageous figures, both on the low and high-end. Of course, no one can say for certain that this was the result of a disgruntled employee, but the timing is impeccable. So what could you have potentially bought if you were quick enough?

Well, for modest peripherals, a USB laser mouse was being sold for $3,999.99, while an Inspiron AC adapter was listed at $710 (not a far stretch from the regular price, though). Need a 120GB SATA hard drive for your notebook? Look no further than the $21,000 option. On the opposite end of the spectrum, there were many processors marked down to unbelievable prices, such as an X3110 Xeon for $16.99, L5430 Xeon for $12.99 and 5060 Xeon for $10.99. Apparently, thousands of orders went through, but I highly doubt that they will be processed. It’s still funny, though.

Maybe it’s a coincidence, but Dell’s recent round of layoffs seems to have sparked a flurry of price mistakes on Dell.com. According to the Wall Street Journal, Dell has plans to lay off 16 percent of its 4,500 strong workforce in Malaysia — that means that 700 people will be out of a job by the end of June 2010. Interestingly enough, around the same time the layoffs were announced, numerous price mistakes started hitting Dell’s website.

| Source: DailyTech |

Discuss: Comment Thread

|

Cherrypal Releases “Africa”, $99 Notebook

The search for ultra-affordable yet competent notebooks has been on-going for years, and as time passes, we continue to see numerous potential models. Some of these do indeed hit the market, such as the OLPC and Classmate, while some are still works-in-progress. But even as these new notebooks get released, there never seems to be a lack of complaint, and people want to see more, for even less.

It came as a surprise to the entire industry the other day when a company called Cherrypal released a 7″ notebook that costs only $99, called the “Africa”. According to Cherrypal, it’s “small, slow, sufficient”, so there’s no secret behind the fact that it’s going to be slow, but again, you’d expect nothing else. A slow PC is a lot better than no PC, after all, especially if you’re a young child in a developing country who’s never had the chance to even use one before.

The Africa might be one small notebook, but it still has a little bit of weight to it, at 2.64lbs (this is obviously light compared to most other notebook models, however). It features a 7″ 800×600 display, and is measured at 213.5mm x 141.8 mm x 30.8 mm. It features a 400MHz XBurst processor by a Chinese company called Ingenic Semiconductor. Little is known about the chip, but it’s rumored to be based on the ARM core.

Other specs include 2GB NAND flash and 256MB of memory, along with three USB ports, a memory card reader and 86-key keyboard. Overall, it’s small, and simple, but for $99, it’s quite a feat as far as I’m concerned. It runs either Windows CE or Linux, and it’s available for order now, should you want one. Cherrypal also offers another small notebook, the “Bing” (no relation to Microsoft’s search engine) that has beefier specs, and a $389 price tag.

“We buy access inventory and package it up; that’s why we are able to offer such a low price,” Seybold said. “In other words, we use XBurst and similar inexpensive processors in order to stay below the $100 mark, we reserve the right to make changes on the fly, that’s why we didn’t go into great detail on our site. There are a number of customers who will get a much more powerful system than advertised. Yes we take orders and we started shipping last week. Naturally our margin on the “Africa” is very thin but we are not losing money either.”

| Source: PC Magazine |

Discuss: Comment Thread

|

31% of Windows 7 Issues Related to Installation?

Yesterday, I posted a brief report about Windows 7 usage from our readers, and the results were quite impressive. As it stands today, almost 30% of our Windows readers use Windows 7, and for an OS that’s been out for just over two months, that’s quite a feat. I also mentioned that Windows 7 suffers far fewer issues than Vista did at launch, and while that’s true, it appears that a fair number of issues still remain.

One issue that came to light shortly after launch was related to the actual installation. Even some in our forums have complained of such issues, but I didn’t realize until now that it was just so widespread. According to a report, a staggering 31% of issues with Windows 7 were related to the installation. As I’ve installed Windows 7 successfully at least 30 times since launch, I’m amazed at this figure because I’ve yet to run into such an issue.

I don’t think a precise explanation for the issue exists, but I believe the causes to be varied, related to hard drive controllers, graphics cards and so forth. Aside from install issues, 26% experienced missing applets or components, which again, is far, far too high of a figure. Aero’s inability to function accounted for another 14%, but I’m willing to bet almost all of those cases are related to not having a proper graphics driver installed. Also, to be clear, these percentages are of 100,000 people who sought help with iYogi, so they may not be entirely definitive, but they’re definitely worth a look.

There are a slew of other problems reported, but all are relatively minor in severity compared to these other ones. It’s interesting that install issues account for such a large portion of user complaints, though, given that the OS went through such an exhaustive beta-testing phase. You would have imagined that such issues would have been found long ago, but I guess the fact is, the vast majority of consumers don’t beta test, enthusiasts do.

| Source: Ars Technica |

Discuss: Comment Thread

|

FTC Investigates Intel’s Alleged Anti-Competitive Practices

Intel’s blue logo couldn’t be more appropriate for the company this year, as it has had one hassle to deal with after another. In the latest case, none other than the FTC is speaking up against the company’s anti-competitive tactics, which, according to the agency, have “stifled innovation and harmed consumers”. Well, I’m not sure about the latter, because “harmed” seems like a strange word to use in a case like this, but whatever the result, Intel sure can’t shake this off quickly enough.

The FTC isn’t being too subtle in its accusations, either, stating, “Intel has engaged in a deliberate campaign to hamstring competitive threats to its monopoly”. Overall, the FTC believes that Intel has been deceptive, and unfair to both its competition and the end consumer, and it wants Intel to remedy all of the damage done. Of course, the remedy would likely result in even more money being given to AMD, but we’ll have to wait and see.

Intel has been quick to respond to the FTC’s accusations, stating that “Intel has competed fairly and lawfully. Its actions have benefitted consumers. The highly competitive microprocessor industry, of which Intel is a key part, has kept innovation robust and prices declining at a faster rate than any other industry. The FTC’s case is misguided. It is based largely on claims that the FTC added at the last minute and has not investigated. In addition, it is explicitly not based on existing law but is instead intended to make new rules for regulating business conduct. These new rules would harm consumers by reducing innovation and raising prices.”

Intel goes on to say that the issue should have been settled long ago, but the FTC insisted on unprecedented remedies, all of which could result in making it “impossible” for Intel to conduct business. Even further, Intel also states that this case will cost American taxpayers tens of millions of dollars to pursue, and that the issue hasn’t even been “fully investigated”. Intel wraps up its press release stating that it has invested $7 billion into its US manufacturing operations and has over 40,000 employees domestically.

There’s a lot of back and forth going on here, and from an outsider’s standpoint, it’s hard to see who’s right and who’s wrong. When Intel settled with AMD last month, I figured that would be the last we heard of it, but not so. It’ll be interesting to see where this goes, and see whether or not it results in Intel coughing up even more money.

The FTC’s administrative complaint charges that Intel carried out its anticompetitive campaign using threats and rewards aimed at the world’s largest computer manufacturers, including Dell, Hewlett-Packard, and IBM, to coerce them not to buy rival computer CPU chips. Intel also used this practice, known as exclusive or restrictive dealing, to prevent computer makers from marketing any machines with non-Intel computer chips.

| Source: FTC Press Release |

Discuss: Comment Thread

|

Kaspersky Lab Utilizes NVIDIA’s CUDA for Faster Threat Identification

I’ve said this for quite a while, and still stick to it, that where GPGPU (general purpose GPU) is concerned, things seem to be slow to catch on. We’ve seen real benefits of the technology in the past, as the hugely parallel design of a graphics processor can storm through complex computations with ease. In many cases, the process can be accomplished much faster on a GPU than a CPU.

But up to now, most of what we’ve seen on the consumer side has been related to video encoding or enhancement and minor image manipulation, and most often, even those two are limited in what they allow you to do. But there have also been some other interesting uses, such as cracking passwords. That led me to believe that we could see other similar uses for the home user. Not with cracking, but at least the ability to speed up things like virus scanners, malware-detectors and so forth.

According to a freshly-issued release from Russian-based Kaspersky Lab, it has begun using NVIDIA Tesla GPUs in order to detect new viruses more quickly, which involves identifying unknown files and then scanning through them to detect what kind of virus they could contain, or what kind of threat they pose. Compared to an Intel Core 2 Duo 2.6GHz, Kaspersky Lab says that the process completes up to 360 times faster on the Tesla GPU. That’s impressive.

As it stands today, this technology benefits Kaspersky Lab internally, not the end consumer, but since the company has seen gains stark enough in its lab to actually adopt the technology, it seems likely that bringing it over to the consumer side for even faster scanning could be beneficial as well. Of course, the largest bottleneck on the consumer side is storage, not the processing power, but with the advent of SSDs, taking advantage of GPGPU could likely make a noticeable difference in a lot of scenarios.

The use of Tesla S1070 by the similarity-defining services has significantly boosted the rate of identification of unknown files, thus making for a quicker response to new threats and providing users with even faster and more complete protection. During internal testing, the Tesla S1070 demonstrated a 360-fold increase in the speed of the similarity-defining algorithm when compared to the popular Intel Core 2 Duo central processor running at a clock speed of 2.6 GHz.

| Source: Kaspersky Lab Press Release |

Discuss: Comment Thread

|

Corsair Releases 24GB Dominator Memory Kit

In our forums last week, I posed a simple question. For your next PC build or upgrade, how much memory will you be installing? If you’re taking the Core i7 route, then you’re likely to choose either 6GB or 12GB, and for everything else, 4GB seems to be the most common option, with 8GB being the choice for most higher-end enthusiasts. But just how much memory is overkill for most people? If you’re using your computer for the more casual reasons, even if it includes gaming, having a ton of memory isn’t going to help you out too much.

If I had to pick a “sweet spot” for various architectures, I’d say that in the high-end scheme of things, 12GB is fine for Core i7, and 8GB for everything else, because while it may be overkill for many people, it really allows for some breathing room. If you aren’t what you’d consider to be a power user, then half of each figure is fine, as the extra memory is truly beneficial mostly with intense multi-tasking, or when performing tasks that happen to be total RAM hogs.

But what if even 12GB isn’t enough? As hard as it may be to believe, there’s a market out there for those who want insane amounts of memory, and for those, Corsair has just released a 24GB Dominator kit, for Core i7 platforms. Yes, that’s a total of six 4GB modules. Because of this higher density, the enthusiast frequencies and timings we’re used to seeing just aren’t possible at this point in time, so what you’ll see here are DDR3-1333 frequencies and 9-9-9-27 timings.

Though those are lower values in today’s scheme of things, if you’re actually pushing your PC to use so much memory, I highly doubt that the lower specs are going to make any difference at all, because at that point, it’s the breathing room you need, not the ultimate speed. But it also shows that if you go with such a kit and don’t need it, you’re going to have a lot more capacity to work with, but slower speeds, so for the vast majority of us, a 12GB or lower kit is going to make way more sense. You can still drool about the idea of 24GB in your PC, though.

“Corsair’s 24GB Dominator memory kit is perfect for high-performance computing applications, including computational research, HD digital content creation, working with multiple virtual machines, and other data-intensive applications,” said John Beekley, VP of Technical Marketing at Corsair. “The latest multi-core Intel and AMD CPUs, combined with sophisticated graphics processors from Nvidia and AMD, are capable of performing incredible workloads. Corsair’s 24GB Dominator memory kit enables the large number of concurrent threads and substantial datasets required by these applications.”

| Source: Corsair Press Release |

Discuss: Comment Thread

|

Techgage Readers Continue to Adopt Windows 7

About ten or so days ago, I posted some initial statistics regarding our readers’ Windows 7 usage, and since we’re two weeks into December, I feel like I have enough solid data to portray just how popular the latest OS is. If you’ll recall, in the first three days of the month, Windows 7 accounted for 23.80% of all our Windows users. Has anything changed? Well yes, but not for the worse.

To put things into perspective, of all our readers, 87.86% used a Windows OS between December 1 – 14. Of those, 28.23% were using Windows 7, 49.44% for XP, and 19.91% for Vista. The other 2.42% used various other versions, with the most popular being Server 2003, at 1.22%. So as you can see, Windows 7 usage hasn’t gone down, but rather up, so the growth still continues to be seen.
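
Because the version figures are shares of our Windows visitors rather than of all readers, it can help to convert them. Here’s a quick Python sketch that uses only the numbers quoted above; the variable names are just for illustration.

```python
# Share of all readers on any Windows OS, December 1-14 (from the post).
windows_share = 87.86

# Shares *within* Windows visitors (from the post).
within_windows = {
    "Windows 7": 28.23,
    "Windows XP": 49.44,
    "Windows Vista": 19.91,
    "Other Windows": 2.42,
}

# The within-Windows shares should account for every Windows visitor.
assert abs(sum(within_windows.values()) - 100.0) < 0.01

# Convert each figure to a share of all readers.
of_all_readers = {
    name: round(windows_share * pct / 100, 2)
    for name, pct in within_windows.items()
}

for name, pct in of_all_readers.items():
    print(f"{name}: {pct}% of all readers")
```

By that math, Windows 7 works out to roughly 24.8% of all readers, not just of those running Windows.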

For alternative OSes, Linux is the second most popular on the site with 6.14% of our total visits, while Mac OS X sits in third with 4.89%. All other visits belong to mobile devices, the most popular being the iPhone, with Android and BlackBerry mixed in as well. Unfortunately, Google doesn’t distinguish between Linux distros, but of our Mac OS X readers, 47.30% were using Snow Leopard, with 36.17% on Leopard.

While we’re on a stats kick, how about some browser usage information? Mozilla’s Firefox has been in our top spot for a while, and that hasn’t changed, with 51.39% usage. Internet Explorer has experienced a rough decline this year, with a current usage of 30.37%. Google’s Chrome is growing in popularity fast, and that’s reflected in our stats, with its usage share of 8.99%. The fourth spot belongs to Opera, with 4.26%, with Safari taking the fifth spot with 3.70%.

I’m a bit of a statsaholic, so to take a deeper look at the trends throughout the year, I’d like to write a one-page article showing the real growth and declines with graphs, since it’s far easier to get the fuller picture that way. I think I’ll put something like this together in mid-January, after CES is all over and done with. So stay tuned, the results should be interesting!

|

Discuss: Comment Thread

|

UAC Pop-up with No Password Box?

Since its launch, Windows 7 has received a fair amount of praise and is being enjoyed by a rather significant number of users, and after what Vista brought to the table, it’s great to see. But, as refined as the OS may be, there are still a number of issues with the OS that remain, and one of them I experienced first-hand last night. I’ll explain how it happens here, and also the fix.

During the initial OS setup process, you’ll create a primary account, and that same account will become the default administrator account. So, if an application needs to be installed, you just have to click “Yes” to allow it and that’s that. If you set up a second account, you can choose either Administrator or Limited, with the latter being highly recommended for reasons too broad to mention in this small post.

So where does the issue come in? Well, I was setting up a fresh copy of Windows 7 on my mother’s PC, which is shared with my brother (the second account was to be his). Because my mother preferred her account to automatically log on when the PC is booted up, I went ahead and did that (using the netplwiz tool). I then rebooted the PC, and all was good, until I went to install an application.

Because I didn’t enable the true administrator account, I was essentially stuck in a bad position. If you attempt to do anything that requires administrator permissions, a box like the one below will pop up, but what’s different is that a password box will not be there. This is directly related to the fact that the administrator account was not enabled. A simple mistake, but a pain in the rear to fix.

To prevent this issue from happening, or to fix it, you’ll have to go into the Start menu and right-click “Computer”, and then “Manage”. From there, go to the “Local Users and Groups” menu and then into “Users”. From there, you’ll see all of the user accounts on the PC, including Administrator. Double-click that one, and uncheck “Account is disabled”. This is all it takes to either prevent or fix that issue. Not too tough, huh?
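
The same setting can also be flipped from a command prompt. Below is a minimal sketch, assuming an elevated prompt and that the built-in account carries its default English name, “Administrator”. The Python wrapper and its function names are hypothetical; only the `net user ... /active:yes` command it builds is a standard Windows tool.

```python
import platform
import subprocess


def build_enable_admin_command(account: str = "Administrator") -> list[str]:
    """Build the 'net user' command that marks a local account as active."""
    return ["net", "user", account, "/active:yes"]


def enable_admin(dry_run: bool = True) -> list[str]:
    """Print the command; only run it when dry_run is False and we are on Windows."""
    command = build_enable_admin_command()
    print(" ".join(command))
    if not dry_run and platform.system() == "Windows":
        # Must be launched from an elevated (administrator) prompt to succeed.
        subprocess.run(command, check=True)
    return command


if __name__ == "__main__":
    enable_admin(dry_run=True)
```

The dry run is the default on purpose, so nothing on the machine changes until you explicitly ask for it.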

If you got stuck the same way I did, then you’ll have to boot into Safe Mode (using F8 immediately before Windows begins booting). If you’re being automatically logged into a user account, then you’ll just have to log out, and log straight into the Administrator account. Unfortunately, the old Ctrl+Alt+Delete login trick in Windows XP doesn’t exist in Windows 7, which is a shame, since it would allow you to access the real Administrator account without having to go into Safe Mode. Oh well, at least there’s a fix that doesn’t require a format!

|

Discuss: Comment Thread

|

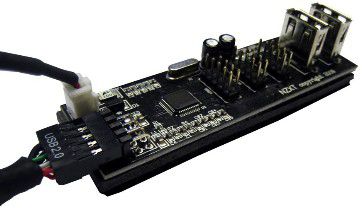

NZXT Releases IU01 Internal USB Expansion Module

Over the past few years, NZXT has become a Techgage favorite, as it has consistently developed some great products, many of which we’ve reviewed. Although best known for its chassis, NZXT produces much more than that. Of these, power supplies are fairly popular, not to mention some of the “coolest” fan controllers out there. Then there are gaming peripherals. Yes, NZXT seems to be all over the map, but as long as the released products live up to the name, there’s not much wrong with that.

I admit that I’m not much of a DIY-er, but every so often a product that caters to those people stands out, and in the most recent case, it’s a simple USB expansion product. USB expansion isn’t at all new, but NZXT has developed a product with a twist. First, it’s an internal product, not external. What it requires from your machine is A) a connection to the power supply and B) one of your chassis’ internal USB cables (typically blue).

It may seem weird to take a USB connector in order to offer expansion, but it’s necessary, and unimportant, because in return, the device will open up three new internal USB connectors, along with two regular USB ports, for a total of 8 additional USB ports to the PC (it’s actually six, since installing this essentially overtakes two). You might wonder why on earth an internal product would have regular USB ports, and the reason is for things like Bluetooth or WiFi modules, where installing it internally is fine. Doing so would free up one of the USB ports in your front panel or in the back of the motherboard.
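
The 8-versus-6 arithmetic is easier to follow written out. Here’s a small Python sketch, assuming each internal USB connector carries two ports, as standard motherboard USB 2.0 headers do (NZXT’s numbers imply it, but the release doesn’t spell it out). The names are just for illustration.

```python
PORTS_PER_INTERNAL_CONNECTOR = 2  # assumption: standard two-port USB 2.0 header

new_internal_connectors = 3  # opened up by the IU01
new_regular_ports = 2        # ordinary USB ports on the module
connectors_consumed = 1      # the IU01 takes over one existing internal connector

ports_provided = (
    new_internal_connectors * PORTS_PER_INTERNAL_CONNECTOR + new_regular_ports
)
ports_consumed = connectors_consumed * PORTS_PER_INTERNAL_CONNECTOR
net_gain = ports_provided - ports_consumed

print(f"Provided: {ports_provided}, consumed: {ports_consumed}, net gain: {net_gain}")
```

So the module provides eight ports, but because it occupies a connector worth two, the PC ends up six ports ahead.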

When I first saw this product, I couldn’t help but think about the lack of need, because so many motherboards today ship with far more USB ports than most people need. But there’s another twist. Because this connects directly to your power supply, it’s going to be able to power whatever you plug into it, and if you’ve ever worked on an older PC, or perhaps experienced the issue yourself, USB bandwidth and power can become a real problem when you have a lot of peripherals plugged in. This is the very problem and reason why I recently built a friend a new PC.

So as I look at it, this product seems better suited for older PCs, but if you are a USB-aholic, installing it into a newer PC is definitely an option. Concerned about the price? There’s really no need, as it’s set to retail for an easy-to-stomach $20. I’d expect availability relatively soon at e-tailers like Newegg.

“We strive to make products that provide enthusiasts options for enhanced expansion and control” said Johnny Hou, Chief Designer at NZXT. “Motherboards typically feature only 2 internal USB ports which tend to be occupied by a media card reader and the case’s USB cable. The IU01 provides a total of 8 additional USB ports expanding the users’ multimedia connectivity and ensures peripherals have sufficient power with the direct connection to the PSU.”

| Source: NZXT IU01 USB Expansion |

Discuss: Comment Thread

|

Contest Reminder: Nero 9 Reloaded & Dirt 2

Our Nero 9 Reloaded contest has been going on for almost a week, and it has one more week to go. If you haven’t entered yet, I only have one question… WHY? Alright, I know it’s hard to get uber-excited about a piece of software, but I made it so insanely easy for you to enter, so no excuse you have is going to be good enough for me! Already own a copy of Nero, or don’t want it? How about entering and giving the copy away as a gift?

To enter, you just need to answer three quick questions, which Nero will use to better understand what its customers and non-customers look for in such an application. As a long-time user of Nero’s products, I can vouch for the quality, and believe it to be the best in its field. Don’t believe me? Just download the demo, and you can see for yourself, and then enter our contest for a free copy.

That contest concludes next Tuesday, so you still have time to linger on your decision to enter, but another contest we’re holding for a copy of Dirt 2 ends tomorrow night, so if you want a chance at winning a free copy of North America’s first DirectX 11 title, you’ll want to head on over to our related forum thread and make a post. That’s it. How many contests allow you to enter simply by posting something in a thread? Not too many, that’s how many.

We don’t hold too many contests, I admit, but I’m planning on things being a lot different in the new year. I have another contest queued up for the beginning of January, which should have been posted at least a month ago (story of my life, lately!), and as we’ve had many large companies express interest in contests to us over the past couple of months, I believe we’ll have enough fuel to have at least one good contest a month. Like these two current contests, any upcoming contests won’t be too much of a pain to enter. I believe contests should be fun to enter, not a chore, and I hope that’s reflected in whatever contests we have coming up.

So, GO ENTER. For each person reading this who doesn’t enter, I’ll shed a tear. Don’t make me dehydrate!

| Source: Nero 9 Reloaded |

Discuss: Comment Thread

|

Ten “Must-Have” Google Chrome Extensions

After waiting for more than a year, Mac OS X and Linux users last week finally had a version of Google’s Chrome Web browser to take advantage of. At the same time, Google also unveiled a new “Extensions” feature, which is just as it sounds, and is not much different than what you’re likely already familiar with from other browsers. This feature, like the release of the browser for those operating systems, was just as long-awaited, and it kicked things off in style.

Despite the fact that Extensions is a brand-new feature, developers had been hard at work for the past few months in order to hit the release date, and as a result, over 300 extensions are available for installation. The folks at Download Squad wasted no time in testing out a bunch of them, and have come up with the ten “must-have” extensions for anyone who uses Google Chrome as their main browser.

One of their recommendations is “Web of Trust”, which is also available for other browsers. I haven’t ever touched this one before, but after seeing what it does, I’m almost tempted. It’s essentially a grading system for websites, and if you run the toolbar, you can rate a site’s trustworthiness, privacy, reliability and child safety. I think it’s meant more for e-tailers and the like, but it’s a good way to see if the site you just wound up on is trusted overall or not. Fortunately, we were graded well. See? I told you we could be trusted!

Another interesting extension is “Shareaholic”, and I’m sure just by the name, you can tell what it does. See something on the Web you want to share? Click the icon in the browser, then the service you want to post it to, and voila, it’s pretty much just that easy. “LastPass” is another useful extension, and it aims to be the definitive password manager, and gets huge kudos from the site. Of course, you’d imagine that Chrome would come with something like this from the get-go, but that’s what makes the browser so great… it’s not bloated, which is probably why it’s so freakin’ fast.

LastPass is the password manager — no other tool or add-on even comes close to LastPass in its functionality or usability. You can import password databases from almost every other similar service, and the developers say that it picks up more password fields (AJAX forms for example) than any other password-scanning tool. LastPass has other neat bits too, like the ability to store secure notes and generate secure passwords. This is one of those vital extensions that every security-aware user should download.

| Source: DownloadSquad |

Discuss: Comment Thread

|