Tech News

Source Code for Multiple Atari 7800 Games Released

It’s not often that a cool piece of video gaming history becomes available to the public, but that’s just what happened last week with the release of the source code for multiple Atari 7800 games… the source code! For current or up-and-coming game developers, this is a real treat, because such code offers insight into how games were written over 25 years ago. Dig Dug, Robotron: 2084 and Ms. Pac-Man are among those included.

The code has been released “unofficially”, as it wasn’t Atari themselves who released it. They don’t seem to have much of a problem with it though. Just don’t go trying to rename it and sell it as your own… I hear the 25-color game market is quite hot right now!

In case you’re not familiar with the Atari 7800, don’t worry… many aren’t. Instead, the Atari 2600 seems to be the most remembered console from that era, and for good reason. It did many things right, while consoles such as the Atari 5200 did many things wrong. That console did so much wrong, in fact, that the 7800 was essentially the successor, and was designed to right what was wronged, such as with the gamepads and 2600 backwards-compatibility.

Either way, what matters is that the code is available for a few classics, so hopefully the trend will continue and we’ll see even more come out over time. (I can’t help but feel like I need to take a look at the code for Custer’s Revenge, which is possibly NSFW. I know, I know… I can’t help it.)

During those times nobody would have imagined in their wildest dreams the games that Atari’s developers floated into the gaming thirsty market and instantly swept across continental boundaries. But things changed soon after that and a company once regarded as one of the most successful gaming console manufacturers and developers faded away in the pages of our technology’s hall-of-fame.

| Source: ProgrammerFish |

Current Overclocked CPU World Records

With overclocking seemingly more popular than ever, maybe you’ve caught the bug and have begun pushing your own machine to the limits? Given the room here is too warm even in the winter, it’s the last thing I’m thinking of doing right now, but I still can’t help but look around the web and remain in awe of some of the accomplishments others have made. While I tend to be far more impressed with stable overclocks (on air or water), it’s hard to not be impressed by what LN2 or even dry ice can accomplish.

In checking to see if I had the latest version of CPU-Z on hand, I decided to check out the records page and see if some world-records had been broken lately, and to my surprise, some have been. Although nothing is sure to touch old-school records like duck’s 8.18GHz P4 631 overclock (due to current architectures, not talent) for a while, it’s overall performance that matters, and that’s where today’s top overclocks really shine.

It took some time to see it happen, but it looks like coolaler managed to hit the elusive 6GHz mark on a Core i7-975 Extreme Edition, just one month ago. His raw clock was 6061.09MHz, and he used a 195MHz bus and scary-high 31x multiplier to get the job done (it was even done with a full 6GB of RAM installed!).

I am not sure how I missed this, but it looks like AMD’s Phenom II X4 processors have also hit a major milestone: 7GHz. This happened back in April, so I’m a little embarrassed to have missed it, but it was hit by three members of LimitTeam, who saw a raw frequency of 7127.85MHz, utilizing a 250MHz bus. Very, very impressive.
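For anyone wondering how the bus and multiplier figures relate to the final clock, here is a quick Python sketch. It assumes the usual relationship (core clock = bus clock times multiplier); the function names are made up for illustration and aren't taken from CPU-Z or any other tool.

```python
def core_clock_mhz(bus_mhz: float, multiplier: float) -> float:
    """Core clock is the bus (base) clock multiplied by the CPU multiplier."""
    return bus_mhz * multiplier


def implied_bus_mhz(core_mhz: float, multiplier: float) -> float:
    """Work backwards from a reported core clock to the bus clock."""
    return core_mhz / multiplier


def implied_multiplier(core_mhz: float, bus_mhz: float) -> float:
    """Work backwards from a reported core clock to the multiplier."""
    return core_mhz / bus_mhz


if __name__ == "__main__":
    # Core i7-975 figures from the post: 195MHz bus, 31x multiplier.
    print("Nominal i7 clock (MHz):", core_clock_mhz(195, 31))
    # The reported 6061.09MHz implies a bus slightly above the rounded 195MHz.
    print("Implied i7 bus (MHz):", round(implied_bus_mhz(6061.09, 31), 2))
    # Phenom II X4 figures from the post: 7127.85MHz on a 250MHz bus.
    print("Implied Phenom II multiplier:", round(implied_multiplier(7127.85, 250), 2))
```

The nominal 195MHz bus at 31x lands a little under the reported clock, which suggests the quoted bus speed is a rounded figure.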

With Intel finally having seen a 6GHz overclock hit on their Core i7 processor, they’re probably anxious to finally see Lynnfield launch so extreme overclockers can get down and dirty with pushing it to the limit. Something tells me it’s going to overclock a wee bit better than i7, thanks primarily to the loss of the QPI bus, but we’ll see.

| Source: CPU-Z World Records |

Fusion-io ioDrive vs. Intel X25-M in RAID 0

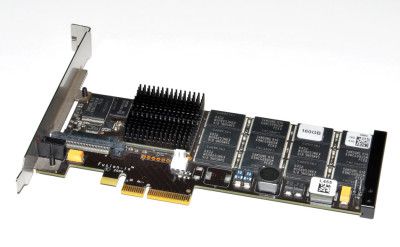

Fusion-io is a name that popped up last year out of nowhere, and from how things are looking, their name is going to remain in the limelight for quite some time. This is of course thanks to their ultra-fast solid-state storage solutions, such as the 160GB ioDrive that our friends at HotHardware had the privilege to test. This is a drive that has more pros than cons, but sadly, one con in particular is rather major… the $7,200 price tag.

Of course, this isn’t a storage solution designed for regular users, nor even the highest-end enthusiasts. Rather, these extreme speeds are perfectly suited to database work, or even hardcore workstation use (project rendering). With such a high price though, the question must be asked… how can the same performance be achieved at a more reasonable price?

In an attempt to get that done, HotHardware took four Intel X25-M SSDs, already known for being the best on the market, and put them together in RAID 0, essentially combining their performance. Theoretically, such a setup will beat Fusion-io’s drive in the read test, but fall short in the write. With those ideas in mind, the results shown aren’t too surprising, but what’s great to note is that the performance from even two Intel drives in RAID 0 is absolutely incredible. Not to mention the price tag is a lot easier to swallow as well…
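To give a rough idea of why RAID 0 "combines" drive performance, here is a small Python sketch of how striping spreads consecutive blocks across the drives so they can work in parallel. The stripe size and the function name are assumptions picked for illustration; this isn't how HotHardware's controller was configured, just the general idea.

```python
def locate_block(logical_block: int, num_drives: int, stripe_blocks: int) -> tuple[int, int]:
    """Map a logical block number to (drive index, block offset on that drive).

    Blocks are grouped into stripes of `stripe_blocks` blocks, and the
    stripes are dealt out to the drives round-robin.
    """
    stripe_index = logical_block // stripe_blocks   # which stripe the block falls in
    drive = stripe_index % num_drives               # round-robin across drives
    row = stripe_index // num_drives                # how many full passes came before
    offset = row * stripe_blocks + logical_block % stripe_blocks
    return drive, offset


if __name__ == "__main__":
    # Four drives (as in the HotHardware test), hypothetical stripe of 2 blocks.
    for block in range(10):
        drive, offset = locate_block(block, num_drives=4, stripe_blocks=2)
        print(f"logical block {block} -> drive {drive}, offset {offset}")
```

Because neighboring stripes sit on different drives, a large sequential read can keep all four busy at once, which is where the speed-up comes from. The flip side is that losing any one drive loses the whole array.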

Credit: HotHardware |

To look at the card itself you’ll note its pure simplicity and elegance. There are but a few passive components along with a bunch of Samsung SLC NAND Flash and Fusion-io’s proprietary ioDrive controller. The 160GB card we tested above is a half-height PCI Express X4 implementation. From a silicon content standpoint it’s a model of efficiency, though the PCB itself is a 16 layer board, which is definitely a complex quagmire of routing for all those NAND chips.

| Source: HotHardware |

Are Notebook Coolers Worth the Cash?

As you could probably imagine, companies approach us often regarding review ideas for products of all stripes, from motherboards to graphics cards to chassis to whatever else is technology-related. Over the course of the past year, though, there’s one product type that really seems to have caught on, and a week doesn’t go by when we’re not thrown some review ideas for them. What could I be talking about?

As the picture no doubt gave away, I’m talking about notebook coolers. On Friday, I was asked once again if we’d ever take a look at that particular company’s coolers, or at the very least a roundup, and as usual, I said we’d like to in the future, but time won’t allow it right now. And while time is quite limited lately (while we focus on our most important content), I can’t help but ponder the idea a little bit, because many vendors are beginning to produce notebook coolers, and where Cooler Master is concerned, they even dedicated an entire line to all-things notebook (Choiix).

I’ve only dabbled in notebook cooling a few times before, but each time I did, I was left underwhelmed. While I do think some notebook coolers look nice, I don’t think many of them have the ability to cool your particular notebook that well. After all, notebook designs aren’t universal, so it’s understandably difficult for a vendor to create one that’s going to take care of the majority out there.

After thinking about it for a while, I began to wonder… even if notebook coolers were effective for a given model, how many people actually use them? I’ve had notebooks in the past that have run warm, but even then, I’ve never thought about picking up a product to help cool them down. Generally, if you’re using a notebook on a desk, the heat isn’t going to be so extreme that you simply couldn’t use it, and where cooling would prove most useful (in bed), the coolers themselves are too hard and have rough edges, so they aren’t exactly comfortable (and they could tear up your sheets – not in a good way!).

While I don’t have an immediate need for such a product, and don’t quite understand why they’re popular (or so I’m told by various companies), I’d like to ask you guys what you think. Do you use a notebook cooler? Would you if you knew it would genuinely improve temperatures? Have your own experiences with one? Let your voice be heard in our related thread! If you’re not yet a member of our forums, the sign-up is quick and painless, I promise!

MacBook Pro 13″ Can’t Display Millions of Colors – Or Can it?

Although I appreciate a quality display when I see one, I’m far from being a guru on the subject, so I’m absolutely stumped regarding recent news that the MacBook Pro 13″ model can’t display millions of colors as promised, but rather only a few hundred thousand. In a blog post made by icon designer Louie Mantia, he claims that though he believed the 13″ MacBook Pro would feature an 8-bit panel like the larger models, it in fact comes equipped with only a 6-bit panel.
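The "millions" versus "a few hundred thousand" gap falls straight out of the bit depth. Here is a quick Python sketch of the arithmetic, assuming the usual three color channels (red, green, blue) per pixel; the function name is just for illustration.

```python
def displayable_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors a panel can natively show.

    Each channel has 2**bits_per_channel levels, and the channels combine
    independently, so the total is 2 ** (bits_per_channel * channels).
    """
    return 2 ** (bits_per_channel * channels)


if __name__ == "__main__":
    print("6-bit panel:", displayable_colors(6))   # 262144
    print("8-bit panel:", displayable_colors(8))   # 16777216
```

So a 6-bit panel tops out at 262,144 native colors, while an 8-bit panel reaches roughly 16.7 million.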

Louie sold his 17″ last-gen MacBook Pro for something a little more portable, but was burned with this realization. After putting in some extensive effort to get a response from Apple, it couldn’t be done, so whether or not he found a dud, or if all such notebooks include a 6-bit panel, is unknown. To cloud the situation even further, professional photographer Rob Galbraith recently posted an article praising the new MacBook Pro line-up’s incredible panel quality, including the 13″ variant.

So what is it? Do all 13″ MacBook Pros include a 6-bit panel? And for that matter, do most notebook displays include 6-bit panels? Do even the larger MacBook Pros include 8-bit panels? The situation seems so utterly confusing, and in looking around the web, everyone’s opinion on the matter seems to conflict with everyone else’s. It’s not helping that Apple’s keeping so tight-lipped either, but it’s no real surprise.

Perhaps the most mind-boggling question would have to be… who on earth would want to do design work on a 13″ monitor?! Either way, if you’re in the market for a new notebook, especially the 13″ MacBook Pro, be sure to do some extensive research before a purchase to make sure you are getting what you’re expecting.

A few days later, I get a phone call from Apple, letting me know that Apple Engineering has declined to disclose this information. Excuse me? Declined? I just purchased your product and all I want is for you to verify the specs that you advertise. It should not be that difficult to do. But apparently, it is. A few years ago, a few individuals started a class-action lawsuit against Apple for advertising millions of colors with their 6bit displays. Unfortunately, they needed a “class” for a class-action lawsuit, and not enough people cared/noticed. The matter was settled out of court.

Pandora Has Saved Internet Radio (For Now)

The future of online radio has been unclear for quite some time, thanks to the greedy music industry, but it looks as though Pandora has saved the day… not only for themselves, but for Internet radio as a whole. In a new agreement, Pandora is giving up 25% (at least) of their revenue, but it does mean security until at least 2015. That gives Pandora and other online radio stations a fair amount of time to work out a more reasonable deal, or for the clueless beings overseeing the entire process to get a clue or two.

The key part in all of this is that SoundExchange agreed to a 40-50% reduction in the per-song (per listener) rates, and in return, Pandora gives up some of its revenue as mentioned above. But that’s not all. Listeners of the free service are going to be affected, but only those who use the service for more than 40 hours per month. If you happen to be one of those, you’ll have to pay about $1 for unlimited access for the rest of that month (that seems more than fair).

If you already happen to be a paid member of Pandora, then you’re going to see absolutely no change. Overall this is a huge win for Pandora and Internet radio as a whole, but it’s really too bad that these companies have to bend over backwards to cater to the music industry. It’s in no way like this with typical radio, so it’s strange that online radio gets the industry’s panties in a bunch. Either way, this is a great outcome, and we can all relax for the next while.

Oh, and if Pandora could finally come to Canada, that’d be great, too.

But Pandora also had to give up a little more. Because the rates agreed upon are still quite a bit higher than other forms of radio, the service is going to have to put limits in place for users of its free version. Apparently, this will only affect 10% of the user base, as it’s basically just anyone who uses Pandora over 40 hours per month. If a user hits that wall, it will only cost them $0.99 to go unlimited for the remainder of the month. Seems fair.

| Source: Tech Crunch |

Upgrading Ubuntu from One Major Version to Another? Simple!

Regular visitors to the site probably know by now that I’m a Linux user, and I’ve been using Gentoo as my preferred distribution for the past three years. But because Gentoo requires a fair amount of attention (even just for the install), when it comes to installing Linux on a mobile machine, there are few distros easier to get up and going than Ubuntu. This is far from being an unknown choice in the Linux world, but it’s for good reason… it’s stable, detects a plethora of hardware and runs super-fast.

The reason I particularly enjoy it on notebooks is because it detects almost everything, if not everything there is to detect, from Bluetooth to WiFi to the graphics card to the audio and whatever else. I also found out this past weekend just how easy it is to upgrade as well, from one major version to another, and it impressed me enough to actually post about it.

For whatever reason, Ubuntu 9.04 would not boot up properly on my HP dv2. Not wanting to waste time with trouble-shooting, I downloaded the 8.04 LTS version instead, and sure enough, it booted up no problem. But since I wanted to remain current, I obviously wanted to upgrade to 9.04 and hope for the best, so that’s what I did. The process is remarkably simple, and given we’re talking about a full OS upgrade here, it’s in all regards impressive.

Since I was using the LTS version (long-term support), it meant that I had to change which packages it could download (LTS versions are designed to remain that version for the life of the support), and once done, the updater within Ubuntu will pick up that a new major version is available. So, I updated first to 8.10 and then to 9.04 and the entire process was unbelievably simple. The best part is that anyone could get by with this method of updating… not only Linux users. We’re definitely on the right track here.
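The stepping-stone nature of the upgrade (8.04 to 8.10, then 8.10 to 9.04) can be modeled in a few lines of Python. This is only a toy illustration of the hop-by-hop path described above, with a hard-coded release list and a made-up function name; it is not part of Ubuntu's updater.

```python
# Releases in order, limited to the ones mentioned in this post.
RELEASES = ["8.04", "8.10", "9.04"]


def upgrade_path(current: str, target: str) -> list[str]:
    """Return the releases to install, in order, to get from current to target.

    Models the one-release-at-a-time upgrade described above.
    """
    start = RELEASES.index(current)
    end = RELEASES.index(target)
    if end < start:
        raise ValueError("Downgrades are not supported in this sketch")
    return RELEASES[start + 1 : end + 1]


if __name__ == "__main__":
    print(upgrade_path("8.04", "9.04"))
```

In other words, going from the 8.04 LTS release to 9.04 meant two full upgrades back to back rather than one big jump.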

For some bizarre reason, my audio card stopped working after the upgrade, but I’ll deal with that another day. One thing after another, it seems!

Google Announces Chrome OS

It looks like we can finally put another long-running rumor to rest, because Google has made it official that they’ve been working on their own OS, which currently goes by the name of Chrome OS. Like their browser, Chrome OS is going to be completely open source later this year, allowing developers to edit, tweak, and if you are like me, remove a line from the source code absolutely required for compiling because you don’t know what you’re doing.

When Chrome OS is launched, it will first target netbooks, and the focus will be on the Internet. The company has a goal to have a machine boot up within seconds, and essentially also allow you to check your e-mail within seconds. The OS isn’t going to focus on desktop applications, either, but rather cater to the cloud and utilize web-based apps. For a netbook, that sounds like a great idea, but I’m not so sure how it will fare if it’s ever ported to a desktop (although many will disagree with me).

The OS is, not surprisingly, going to be based on the Linux kernel. It will however utilize its own window manager, which we can expect will likely mimic the Chrome web browser to some degree (not a bad thing). It’ll run on both x86 and ARM processors, and given the latter, we can also expect the OS to cater to various MIDs as well. Though that might be the case, Google stresses that this project is completely separate from Android, although both projects may overlap at some point.

The release is slated for next year, but it won’t be until early next year that we get a better idea of exact timing. The company is currently working with netbook vendors to release their products with Chrome OS pre-installed though, so it’s only a matter of time before we see just how great adoption will be.

Speed, simplicity and security are the key aspects of Google Chrome OS. We’re designing the OS to be fast and lightweight, to start up and get you onto the web in a few seconds. The user interface is minimal to stay out of your way, and most of the user experience takes place on the web. And as we did for the Google Chrome browser, we are going back to the basics and completely redesigning the underlying security architecture of the OS so that users don’t have to deal with viruses, malware and security updates. It should just work.

| Source: Official Google Blog |

New Contests from NVIDIA and Intel

We know you guys love to win stuff, so your ears are sure to perk up with the knowledge of two sweet contests being held by NVIDIA and Intel. The two aren’t related, but both offer up some great prizes. First is NVIDIA with their contest that aims to have gamers saying, “WoW”… can you even guess what game this is centered around? Purchase a qualifying GPU from a qualifying e-tailer between July 1 and August 1, and you’ll have the chance to enter.

There only seems to be one prize, but it’s an all-expenses paid trip to Blizzcon for you and a friend, so not a bad one at all. If you enter and don’t win, you’ll still get a free 30-day trial of WoW Classic out of the deal (because we all know a trial for that game is hard to come by, right?). The good news is that the contest is open to many different countries including of course USA, but also Canada, France, UK, Germany, Netherlands, Finland, Norway and also India. You can find out more at the contest site.

Intel arguably has the more interesting contest though, at least if the $80K in prizes is anything to go by. The company has teamed up with Destructoid for “Friday.Night.Fights”, where you compete to top their leaderboards for the chance to win some truly incredible hardware (even PCs valued at over $5K!). If you aren’t the best gamer out there, don’t worry… luck can also win you a prize here. You can access the contest site at the bottom of this post!

It begins! Register and compete to win some breathtaking gaming computers from Cyberpower and random prizes just for participating! The first game we’re playing is Quake Live. Here’s an extensive guide on how to pair up. The top 6 players after 2 weeks will move to a private semi-final mini-ladder tournament hosted by Destructoid Staff where we’ll give away the computer prizes, live!

| Source: Friday.Night.Fights. |

The Joys of Updating Our Test Suites… Part Thrice

Guess what’s tiring? If you said, “Doing a complete overhaul on all of Techgage’s methodologies and test suites”, you’d be absolutely correct! It feels like forever that I’ve been working on things around here, but we’re inching ever closer to completion, and I can’t wait. As mentioned before, our GPU scheme of things is all wrapped-up, and we’re in the process now of benchmarking our entire gamut of cards, so you can expect a review or two within the next few weeks with our fresh results.

As it stands right now, we’re eagerly working on revising our CPU test suite, and that’s proving to be a lot more complicated than originally anticipated. There’s obviously more to consider where CPUs are concerned, so we’re testing out various scenarios and applications to get an idea of what makes most sense to include. The slowest part is proving to be getting prompt responses from all the companies we’re contacting, but that’s the nature of the business!

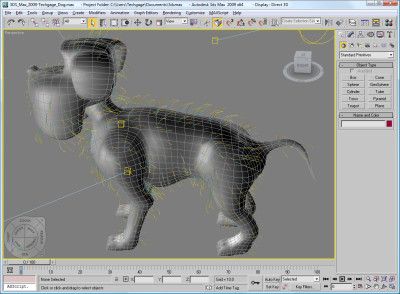

If there’s one thing you can expect to see in our upcoming CPU suite iteration, it’s a beefed-up number of tests. I don’t believe we’re lacking in that regard as it stands, and I certainly don’t want to go the opposite direction where we have too many results, but I do want to make sure we offer up the most robust set of results out there, to cater to not only the regular consumer or enthusiast, but also the professional. So in addition to tests such as 3ds Max 2010, we’re also going to add in Maya 2009. There are other professional and workstation apps I’m considering, but I won’t talk about those just yet.

Given that Linux is more popular than ever (and a decent number of our visitors are using the OS), I’d like to also introduce the OS again into our CPU content, and also possibly our motherboard content (not for performance, but rather compatibility). More on that later though, as we’re still looking to see which benchmarks would make the most sense with that OS (you can be sure one test would be application compilation).

Oh, and that machine in the photo above? That’s our revised GPU testing machine. Because the six titles we use for our testing total 55GB, we’ve opted to stick to a speedy Seagate Barracuda 500GB hard drive, while for memory, we’re using Corsair’s 3x2GB DDR3-1333 7-7-7 kit. Huge thanks to Gigabyte for supplying us with a brand-new motherboard for the cause, their EX58-EXTREME, and also Corsair for their HX1000W power supply. Other components include Intel’s Core i7-975 Extreme Edition and Thermalright’s Ultra-120 CPU cooler. And yes, that’s a house fan in the background, and no, it’s not part of our active cooling!

Mixing Your Mouth with Simple Technology

I don’t talk too often about music or music artists in our news section, but this is one of those times when there’s no reason not to. Not because the music is technologically advanced, but rather because of the exact opposite. Beatboxing is the art of creating music with nothing other than your mouth. Most often, created sounds come in the form of drum beats and other rhythms, and some pull it off so well that it’s hard to believe that no instruments or computers were involved.

Back in April, a friend linked me to a YouTube video by beatboxer Dub FX, for a song called Flow. Fifteen or so watches later, I knew I had to pick up the album, which I did a few weeks ago. I was a little skeptical, but I have to say, it’s very, very impressive, and I’m having a hard time putting it down. Call me naive, but in some parts I actually have a hard time accepting the fact that it’s beatboxed beats I’m listening to, and nothing else.

To create more robust music, Dub FX (and other beatboxers) use loop pedals to play back the music they’ve created, then sing over it. Other techniques are used to create even more robust music, but ultimately, the source music is from the mouth and nothing else. You can check out the video above to get a sample of Dub FX’s music, and also check out his official site.

I’m sure there are thousands of beatboxers worthy of mention, but another group in particular I find to be quite amazing (despite the musical genre not really being to my tastes) is Naturally 7. This is a seven-part R&B band, and again, all the music is created with the mouth. Want to be impressed? Check out the video.

Dub Fx the street-loop-beatboxer grew up in St Kilda / Melbourne / Australia performing in various bands before hitting the world-wide road. Dub Fx uses Roland BOSS effect & loop pedals to create sounds which when layered creates a live musical construct. Predominantly Dub Fx can be found busking through Europe with his Girlfriend the ‘Flower Fairy’ who also performs along side.

| Source: Dub FX Official Site |

China Bans Virtual Goods Selling

Some say that PC gaming is dying, but that’s hardly the case. What seems to have happened over the course of the past ten years is a shift in the type of games people play. MMORPG titles are more popular than ever, and with World of Warcraft exceeding 10,000,000 subscribers at the current time, it doesn’t look like the trend is going to die off anytime soon.

If you are one of the millions that currently subscribe to an MMO (*raises hand*), you likely know just how much of a problem gold-selling is, and perhaps you’ve even partaken of some of this forbidden fruit yourself. I’d like to think that the majority of people who play an MMORPG would agree that gold-selling is a problem, and it’s for what I believe to be obvious reasons. Gold-selling means more bots and a skewed in-game economy… it’s that simple. But as hard as the game companies try to stop it, their attempts ultimately all but fail.

But I read with great excitement the other day that the Chinese government has declared that virtual currency and services cannot be traded or sold. This is huge news, as China has the vast majority of gold-sellers. Will such a ruling actually thwart the practice? Here’s to hoping it will, but it’d be awfully naive to believe that the problem is simply going to vanish. For something like this to remain truly effective, the entire world would have to create laws around it, and that’s obviously not going to happen (and it shouldn’t given all the more important things going on). Oh well, it’s at least a start… now to see if we’ll actually notice a difference.

The Chinese government estimates that trade in virtual currency exceeded several billion yuan last year, a figure that it claims has been growing at a rate of 20% annually. One billion yuan is currently equal to about $146 million. The ruling is likely to affect many of the more than 300 million Internet users in China, as well as those in other countries involved in virtual currency trading. In the context of online role playing games like World of Warcraft, virtual currency trading is often called gold farming.

| Source: InformationWeek |

VirtualBox 3.0 Final Released

Boy, it feels like it was just a few weeks ago that the VirtualBox 3.0 beta was released. Oh, right… it was. This release set a new record for software moving from the beta to final release stage, but apparently the software was “virtually” finished… the developers simply wanted to have beta-testing go on for a few weeks before shipping it as final. So, if you’re a VirtualBox user, you’ll want to make sure you give this one a download.

As I mentioned in the news post a few weeks ago, 3.0 brings two major additions: Guest SMP with up to 32 CPUs and also the introduction of 3D support (OpenGL 2.0 for Windows, Linux, Solaris and Direct3D 8/9 for Windows). As I mentioned in a forum thread, I didn’t have the best of luck when testing out the beta, and for the most part, nothing has really changed with the final. Hopefully your experiences will prove better than mine.

In looking around the web, it seems that some people have even been able to get Aero to work, although I’ve yet to see a screenshot showing that to be the case. Ryan Paul at Ars Technica did show a screenshot of the 3D in action using Ubuntu, however, and it works well enough to even allow Compiz to function. For all we know right now, the 3D aspect might work better on certain cards, but I’m not so sure. In the screenshot below, you can see Windows Vista 32-bit running under the 3.0 final on Gentoo Linux; I can’t get Aero to work, nor do I have more than one CPU core available (I specified two and three, and neither worked).
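For those who prefer the command line to the GUI, the guest CPU count and 3D acceleration can also be set with `VBoxManage modifyvm`. The Python sketch below only builds the argument list (it doesn't run anything), and it rests on a few assumptions worth checking against the manual for your version: that multi-CPU guests want the I/O APIC and hardware virtualization switched on, and that these flags are spelled the same in your release. The VM name "Vista32" and the helper function are made up for illustration, and I can't say whether this would have cured the single-core problem described above.

```python
def modifyvm_command(vm_name: str, cpus: int, enable_3d: bool = True) -> list[str]:
    """Build (but do not run) a VBoxManage command for a multi-CPU guest."""
    cmd = [
        "VBoxManage", "modifyvm", vm_name,
        "--cpus", str(cpus),   # number of virtual CPUs for the guest
        "--ioapic", "on",      # assumption: needed for more than one CPU
        "--hwvirtex", "on",    # assumption: guest SMP relies on VT-x/AMD-V
    ]
    if enable_3d:
        cmd += ["--accelerate3d", "on"]  # experimental 3D acceleration
    return cmd


if __name__ == "__main__":
    # "Vista32" is a hypothetical VM name; substitute your own.
    print(" ".join(modifyvm_command("Vista32", cpus=2)))
```

To actually apply the settings, the list could be handed to `subprocess.run` while the VM is powered off.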

I’ll have to give VB 3.0 a try on other OSes and see if my luck improves. Either way, if you’ve ever wanted to run Linux under your Windows or vice versa, or even run a different Linux distro (or another OS) under your preferred OS, give the latest version a download… you’re not likely to regret it.

VirtualBox 3.0, the newest version of Sun’s high-performance, cross-platform virtualization software, is now freely available for download. The new version can handle heavyweight, server-class workloads like database and Web applications, as well as desktop workloads on client or server systems. It can support virtual SMP (symmetric multiple processing) systems with as many as 32 virtual CPUs in one virtual machine and delivers enhanced graphic support for desktop-class workloads.

| Source: VirtualBox 3.0 |

Top Music Inventions from the Past 50 Years

If there’s one thing many of us can relate to, it’d be our love for music, whether it’s listening or playing. If you share a similar passion as I do for listening to whatever genre strikes your fancy, could you pick just one particular music-related invention that you’d consider to be the most important ever to be unveiled?

I’d personally vote for the MP3 file format, because although it wasn’t the first digital music format to hit the market, it was the one that really kicked off some amazing things to come. Such things include MP3 players (iPod, Zune, Sansa, etc.), digital music sharing/purchasing and overall, the ease of handling such files. Imagine if today we still had to deal with our hard-copy CDs just to listen to the music… insane!

Online tech magazine T3 picks their top ten music inventions, and not surprisingly, the MP3 format (and even Napster) is on there, but their first is still a good one… Sony’s Walkman. If you were alive in the 90’s and enjoyed music, chances are you owned one of these bulky players that are quite humorous to look at today. I’m not a fan of ultra-small MP3 players such as the iPod Nano, but I sure prefer it over a book-sized player!

Without a shadow of a doubt, Sony’s portable music box altered the way we think about and enjoy music forever, and such an achievement has got us thinking about all the other innovations in music that should be celebrated and revered with just as much gusto. Thus, we’ve compiled a list of the top ten innovations in music tech over the last 50 years. As Friedrich Nietzsche once said: “Life without music would be a mistake.”

| Source: T3 Magazine |

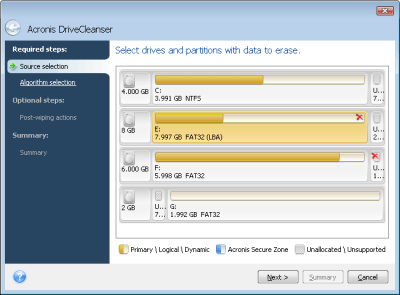

Our Labs Have Adopted Acronis True Image

As I mentioned a few weeks ago, we’re in the process of updating the entire gamut of test suites for all of our performance and non-performance-related content. Yes, it’s taking a lot longer than we could have ever anticipated, thanks in part to various things that came up (such as a four-day trip I took last week). We’re closer than ever to wrapping some up though, so you can expect to see some great things within the next few weeks.

Up to this point, we’ve always used Norton Ghost for all our hard drive backup/restore needs, but with this total test suite overhaul, I decided to test out some of the competition, to see if we could make an improvement there as well. I like Ghost, but it hasn’t proven to be the most reliable for me (sometimes I’d have to restart the restore process two or three times before it would actually work), and it doesn’t allow total drive backups (multiple partitions + MBR) that I could see. Those are two major issues for our particular needs.

Thanks to our review of Kingston’s SSD upgrade kit, I was introduced to Acronis True Image, and to my surprise, the version included allowed me to test out the simple backup/restore with my other drives. So, I tested it out with my new HP dv2, because I wanted to back up the entire drive (to retain the recovery partition), and keep the backup file handy in case I accidentally wipe that partition in the future.

Well, it worked, and the entire process was far easier to pull off than with Ghost. Both partitions along with the MBR were retained, and after wiping the target drive and restoring the backup, it was good as new. This impressed me enough to download the trial of the full-blown version, and again, the feature-set is amazing (especially for $50!). This isn’t an advertisement, but a real-world testament. I’ve only used one feature for any good deal of time, but that one alone is worth the price-tag to me.

We’re waiting on the shipment of a few copies, and once received, I’ll put it all to good use and follow up in a few weeks with my thoughts. If you’re curious about the entire feature-set, you can check out the product site.

Acronis True Image Home 2009 is an award-winning backup and recovery solution for a good reason: it protects your PC after just one click and allows you to recover from viruses, unstable software downloads, and failed hard drives. Create an exact copy of your PC and restore it from a major failure in minutes, or back up important files and recover them even faster.

| Source: Acronis True Image Home 2009 |

Discuss: Comment Thread

|

If Software Companies were Soccer Teams

It’s an odd comparison to make, but is there a single software company that immediately comes to mind that is most like your favorite sporting team? I couldn’t immediately think up one for mine, but luckily Martin Veitch at CIO News took care of it, and I’d now have to agree. He took a handful of football (soccer) teams and compared them to some of the largest software companies around, and some of the comparisons are spot-on.

I’m an Arsenal supporter (or “Gooner”, more appropriately), and he compared them to Google. Fancy footwork, nice location, cool ideas and “easy on the eye” (what?). He surmises that both have good work that goes nowhere, and that sure seems to be the case with both lately. I’ll also add that both Google and Arsenal are some of the richest in their respective fields, but that’s not all that counts, aye?

Who could Manchester United possibly be like? IBM, says Martin. Both have old money and great tradition, and “everybody ends up going there in the end, even if they don’t like them.” In some regards, that’s actually scarily true. One of my favorites is Real Madrid, who are most like Oracle. Tons of money, and they end up buying anything that moves. That’s probably a little more true for Real, though…

I dare say some other genius has already tried this schtick but in the spirit of Friday afternoon (and the neverending battle for employment preserving hits) here is Another! Stupid! Tabloid! List! of software companies that have strangely morphed into professional football (that’s *soccer*, not the boring American variety) teams.

| Source: CIO News & Part 2 |

Discuss: Comment Thread

|

Lenovo Takes Keyboard Alterations Seriously

What would a computer be without a keyboard? Useless if you ask me. But just how much thought has gone into the keyboard as we know it, and is there room for improvement? The folks at Lenovo think so, but it’s obvious that changes can’t be made without some serious thought first. How many times have you purchased a new keyboard or notebook, only to be upset by a certain placement? It’s happened to me too many times.

On their latest ThinkPad model, Lenovo moved their “Delete” key, a change that some will welcome and others will loathe. But in an article by USA Today, it’s shown that the company doesn’t take such changes lightly, and they put in massive amounts of research before committing to one. Recently, they even installed keypress tracking software on 30 employees’ computers (the employees knew) in order to see which keys were used most often, and this helped them decide which keys should and shouldn’t be touched.
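For the curious, the "which keys get used most" part of that research boils down to a simple frequency count. Here's a minimal sketch of the idea in Python; the `rank_keys` function and the sample log are hypothetical and made up for illustration, since the article doesn't publish Lenovo's tool or data.

```python
from collections import Counter


def rank_keys(key_log):
    """Return (key, count) pairs sorted from most to least pressed."""
    return Counter(key_log).most_common()


# Hypothetical sample log; Lenovo's real tracking data was not published.
sample_log = ["Delete", "Enter", "Delete", "CapsLock", "Enter", "Delete"]

ranking = rank_keys(sample_log)
for key, count in ranking:
    print(f"{key}: {count}")
```

Keys at the top of a ranking like this are the ones you'd think twice about moving, while those at the bottom are the safer candidates for a change.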

The question must be asked… are we due for a major keyboard overhaul? Now that people are able to type on ridiculously small keyboards (cell phones, PDAs, etc), it seems as though the current layouts could likely be changed for the better and no one would mind. Not to mention we still have some keys that seemingly have little purpose, such as the CAPS LOCK. I’m perfectly happy with current layouts on most boards, and I’m not opposed to change, but I’m really not sure what major changes any company could make that most everyone would be pleased with. That’s a tough one.

Push-back from consumers hasn’t stopped companies from testing and even manufacturing keyboards with unconventional designs over the years, in some cases demonstrating that people could learn to type faster than on standard QWERTY keyboards, so-called because of the arrangement of the top row of letters. During Hardy’s time at IBM, researchers came up with ball-shaped one-handed keyboards that he said were faster than standard ones.

| Source: USA Today |

Discuss: Comment Thread

|

PC Gaming Through the Ages

A few weeks ago, we posted an article that took a look at GOG.com (Good Old Games), a service that sells, as the name suggests, old titles. Some are older than others, so it’s fun to look through their collection to see how graphics have improved over time. Depending on how old you are and how long you’ve been using a computer, you may have jumped into things more recently when SVGA or better was available, and if so, you’ve missed a lot.

If you’re an old-school PC gamer, and I mean old-school, then you may have even played games that lacked color, also known as monochrome. I played few that way, because I quickly made the move to CGA when I was able. CGA was painful in many regards, because the game developers had two color palettes to work with, and both were headache-inducing. But hey, at least it was color.

Then the shift was made to EGA, which was pretty-well on par with 8-bit consoles (16 colors), then VGA (256 colors), then SVGA (65,536 colors) and beyond. The sky’s the limit nowadays, but it’s great to take a look at the history of PC gaming to see just how far we’ve come. We could very well still be using EGA or VGA… and we wouldn’t even know of anything better. Imagine that! Tech Radar has a nice article discussing these color limitations and a lot more, which I’ve linked below. I recommend reading, especially if you’ve never had the privilege (is it a privilege?!) of experiencing this history.
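Those color counts map neatly onto bits per pixel, since each extra bit doubles the number of colors a pixel can take. Here's a quick Python sketch that works it out from the counts given above; the `bits_per_pixel` helper is my own illustrative name, not something from the Tech Radar article.

```python
def bits_per_pixel(colors: int) -> int:
    """Smallest number of bits that can index `colors` distinct values."""
    return (colors - 1).bit_length()


# Color counts as given in this news item.
STANDARDS = {"EGA": 16, "VGA": 256, "SVGA": 65_536}

depths = {name: bits_per_pixel(count) for name, count in STANDARDS.items()}
for name, bits in depths.items():
    print(f"{name}: {STANDARDS[name]:,} colors = {bits} bits per pixel")
```

So each step up in this list (4, 8, then 16 bits) means a far bigger palette for roughly double the per-pixel storage.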

What separated this era of gaming from the likes of Crysis (if you ignore roughly 15 quantum leaps in various areas of technology) was that tricks such as these were essential. Elite used wireframe 3D graphics on most platforms because that was as much as they could hope to handle. Most games assumed the player would understand that principle, although some built it into the fiction of the game.

| Source: Tech Radar |

Discuss: Comment Thread

|

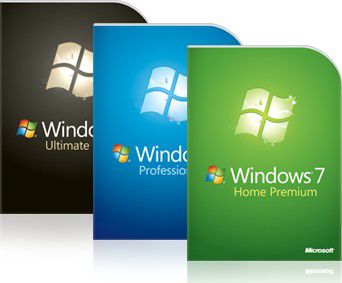

Windows 7 RTM Set for July 13, Family Pack a Possibility

We’ve been so immersed in Windows 7 news for the past year, it’s going to be great when the freaking OS is finally released! Who’s with me?! As we discovered early last month, that will happen on October 22, so the wait isn’t going to be too long from here on out. It now also appears that the RTM (release-to-manufacturing) will be distributed beginning on July 13 to TechNet subscribers (doesn’t seem like the public will have legal access to this one, but I could be wrong).

Also, as a reminder of what we spoke about two weeks ago, Microsoft is currently selling all Windows 7 editions for half-price until July 11. You need to act fast, because a deal like this might not come again anytime soon (and maybe not at all… even OEM prices aren’t quite this good usually).

Another interesting thing to note is that a Family Pack might be en route, but even then the pricing is unlikely to hold a candle to this current half-price sale. Some keen individual noticed a note at the end of the Windows 7 EULA that states, “If you are a ‘Qualified Family Pack User’, you may install one copy of the software marked as ‘Family Pack’ on three computers in your household for use by people who reside there.”

It hasn’t been made official yet, but it’s pretty hard to misinterpret that.

The main purpose of the RTM build will be to give hardware manufacturers more time to tweak their drivers with a working “final” version of Windows 7. The release of the build will coincide with the kickoff of Microsoft’s Worldwide Partner Conference (WWPC) set to kick off in New Orleans. Reportedly TechNet, MSDN, and a few other partner connections will all get the RTM build on July 13.

| Source: DailyTech |

Discuss: Comment Thread

|

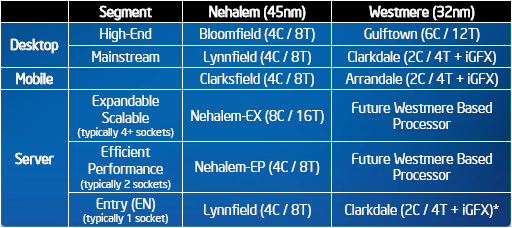

Intel’s Clarkdale to Launch During Q4?

It looks as though we’ll be seeing Intel’s Clarkdale a lot sooner than we expected, as recent roadmap revisions have indicated it will show up during Q4 2009, a full quarter earlier than originally planned. Will this affect the launch of Lynnfield? It’s really hard to say, but probably not. Intel still has plenty of i7 stock, so it’d be unlikely they’d launch Lynnfield sooner than needed.

As a reminder, Clarkdale is the mainstream desktop variant of Intel’s 32nm Westmere, and will also be the first to feature an integrated graphics processor. These dual-cores will feature four threads, and will hopefully give us some real excitement. Will Westmere render all other integrated graphics solutions obsolete? Let’s hope… we’re in bad need of some shake-ups!

Clarkdale isn’t going to come and disappear fast. No, Intel expects that during Q4, Clarkdale will account for a staggering 10% of their shipments, so let’s just hope demand can keep up! The scary thing? Core i7 shipments are set to account for only 1%, while Lynnfield will hog 2%. It’s the older generation that still proves the winner though, with Core 2 Quad processors expected to account for 9%, the Core 2 Duo E8000/E7000 series for 35% and the Pentium E5000/E6000 series for 31%.
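To put those shares in perspective, here's a small Python sketch that totals the DigiTimes figures reported in this item and splits them into newer and older parts. The percentages come straight from the report; grouping Clarkdale, Core i7 and Lynnfield together as the "new generation" is my own assumption for illustration.

```python
# Expected Q4 OEM desktop CPU shipment shares (percent), per DigiTimes.
SHARES = {
    "Clarkdale (32nm)": 10,
    "Core i7 (45nm)": 1,
    "Lynnfield": 2,
    "Core 2 Quad": 9,
    "Core 2 Duo E8000/E7000": 35,
    "Pentium E5000/E6000": 31,
    "Celeron E3000 + Atom": 8,
    "Pentium E2000 + Celeron 400 (65nm)": 4,
}

# Assumption: these three count as the "new generation" parts.
NEW_GENERATION = {"Clarkdale (32nm)", "Core i7 (45nm)", "Lynnfield"}

total = sum(SHARES.values())
new_share = sum(pct for name, pct in SHARES.items() if name in NEW_GENERATION)
old_share = total - new_share

print(f"Total: {total}%")
print(f"New generation: {new_share}%")
print(f"Older parts: {old_share}%")
```

Under that grouping, the newer chips make up only a small slice of the quarter, with Clarkdale alone being most of it.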

The company’s 32nm Clarkdale CPUs will account for 10% of Intel’s total OEM desktop CPU shipments in the fourth quarter, while 45nm Core i7 processors will account for 1%, Lynnfield-based processor 2%, Core 2 Quad processors 9%, Core 2 Duo E8000/E7000 series processors 35%, Pentium E5000/E6000 series 31%, Celeron E3000 and Atom series together 8%, and 65nm-based Pentium E2000 and Celeron 400 together 4%.

| Source: DigiTimes |

Discuss: Comment Thread

|

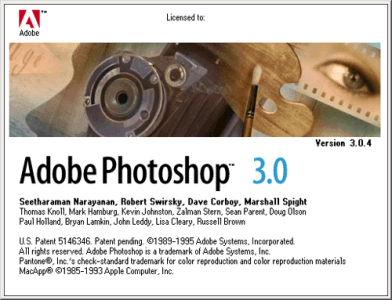

A Look at Adobe Photoshop Through the Years

Whether you’re a digital artist or the furthest thing from, chances are great that you’ve at one time found yourself using Adobe Photoshop. Its roots go back to 1987, when Thomas Knoll created a monochrome picture viewer called “Display”. He soon realized the potential of a full-fledged image manipulation program, and Photoshop was born. Version 1.0 was first released in 1990, and exclusively for the Mac (and who knows, maybe this is one of the reasons Macs seem to be the choice of many graphics professionals).

With over 20 years under its belt, you could say that the application has changed quite drastically over the years. To help give a visual tour of the application from the past to present, blog Hongkiat has posted an in-depth look at various aspects of the application through the ages, with everything from the application icons, to the start-up screen, to the toolbox and of course the UI itself.

This is one application that continues to get better as the years pass, and that’s interesting. I’m no PS guru, but each time a new version comes out, I wonder what the heck could be made better, but Adobe continues to release features we didn’t even know we needed. I also can’t believe it’s already been six years since CS1 came out… I remember it like it was yesterday!

Adobe Photoshop has always been one of the greatest (if not the best) software when it comes to manipulating and editing image. It all started off in 1987 with a Mac application call Display, created by Thomas Knoll. With almost two decades worth of changes and improvements, you almost can’t imagine how the first version of Photoshop would look like by looking at the Photoshop you have on your desktop.

| Source: Hongkiat |

Discuss: Comment Thread

|

Windows 7 Pricing Announced (Plus a Half-Price Sale!)

The moment we’ve all (okay, not ALL) been waiting for… Windows 7 pricing! Some of it is cool, some of it is ridiculous (I’ll get into that in a minute). For full versions (non-upgrades), Home Premium is $199.99 ($40 less than Home Premium for Vista), Professional is $299.99 and Ultimate is $319.99. For upgrades, Home Premium will set you back $119.99, while Professional will sell for $199.99 and Ultimate for $219.99.

My first complaint would have to be for the minor pricing differences between Professional and Ultimate. Seriously, $20? All that’s going to do is confuse people, because just how much more could people get for that $20? To be fair, they ARE designed for two different crowds, but you’d still imagine the pricing would scale a wee bit more than this.

My second complaint would be the upgrade price. Yes, it’s a revamped OS, but Vista just came out two and a half years ago, so expecting people to pay between $119.99 and $219.99 seems a little harsh, especially given there doesn’t seem to be a family pack option (like Apple have so cleverly implemented).

Still want 7 but find these prices too high? Our good friend madstork91 pointed out something I missed… a half-price sale that begins this Friday. Yes, to help drum up interest for the new OS, and adoption, Microsoft will be selling each version (upgrade and full) for 50% off. THAT makes things quite a bit easier to stomach. This is one of those rare times when it will actually pay off to be an early adopter!
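How good is the deal, exactly? Here's a rough Python sketch of the arithmetic, assuming the pre-order prices in Microsoft's announcement quoted in this item ($49.99 and $99.99) are measured against the upgrade prices listed above. That pairing is my assumption; the announcement itself doesn't spell out which list price the discount is based on.

```python
# Upgrade list prices from this news item (USD).
UPGRADE_LIST = {"Home Premium": 119.99, "Professional": 199.99}

# Pre-order prices from Microsoft's announcement (USD).
PREORDER = {"Home Premium": 49.99, "Professional": 99.99}


def discount_percent(list_price: float, sale_price: float) -> float:
    """Percentage saved when paying sale_price instead of list_price."""
    return (list_price - sale_price) / list_price * 100


discounts = {
    edition: round(discount_percent(UPGRADE_LIST[edition], PREORDER[edition]), 1)
    for edition in UPGRADE_LIST
}
for edition, pct in discounts.items():
    print(f"{edition}: {pct}% off")
```

On those assumptions, Home Premium works out to a fair bit more than half off, while Professional lands almost exactly at 50%.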

We will offer people in select markets the opportunity to pre-order Windows 7 at a more than 50% discount. In the US, this will mean you can pre-order Windows 7 Home Premium for USD $49.99 or Windows 7 Professional for USD $99.99. You can take advantage of this special offer online via select retail partners such as Best Buy or Amazon, or the online Microsoft Store (in participating markets).

| Source: Windows Team Blog |

Discuss: Comment Thread

|

Conan O’Brien Visits the Intel HQ

When I received a press release from Intel titled, “Intel Teams Up Again with Conan O’Brien”, I had to check it out right away. In just what way could Intel have anything to do with the new Tonight Show host? Well, the company is sponsoring the show throughout the season, and plans to inject some creative marketing into some episodes, which at this point will be in the form of periodic segments (and in the future, games and other things on the site).

The first segment aired the other night, and it’s been posted on the website, simply titled “A Visit to Intel”. It follows Conan as he takes a brief tour through the company to see how chips are made and what the environments are like that house them, while making time to take a walk through Intel’s own museum of technology (sign me up for that!).

The video is well worth a watch, especially if you’re a fan of Conan’s comedy and skits. I just wish it was a wee bit longer than three minutes! Oh well… there should be more en route…

Intel is inside The Tonight Show is a dedicated space for you to enjoy and interact with Intel and The Tonight Show. In the coming weeks we’ll be posting videos of featured Intel Tonight Show Moments, launching games featuring Tonight Show talent and much more! Below, Conan takes you behind the walls at Intel where 41,000 engineers are bent on turning science fiction into science fact in some of our favorite Intel Late Night Moments. Check back regularly for more intelligent comedy from Intel and The Tonight Show.

| Source: Intel is Inside… The Tonight Show |

Discuss: Comment Thread

|

The Joys of Updating Our Test Suites… Part Deux

Last week, I posted a news item regarding the various methodologies around the site that we’re currently in the process of updating, and since then, a lot has happened. We’re still a little ways off from being finished, but hey, progress is progress. That news item in particular tackled our GPU test suite, and I’m happy to report that we’ve since solidified our choices for what we’ll use going forward.

The six games include Call of Duty: World at War, Crysis Warhead, F.E.A.R. 2: Project Origin, Grand Theft Auto IV, GRID and also World in Conflict. These games were chosen for various reasons, but mostly because they’re all quite popular in today’s scheme of things, and for the most part, none of them favor either ATI or NVIDIA too heavily, so we should be good for a little while… hopefully at least until Windows 7 launches and is well underway.

So with that out of the way, next up is another important test suite… our processors! Admittedly, despite our current test suite being almost two years old, our selection has held up well, and I see very little reason for making drastic changes. What I’d rather do is add to the pile, to give an even more robust look at the performance between processors. Of the applications we plan to drop, however, SYSmark 2007 and ProShow Gold have a reason to shake in their boots.

I’m also in the beginning stages of something really cool I hope to add to our future CPU content, but it’s far too early to mention it, because at this point, it might not happen (it’s a wee bit complex). I hope it works out, though, because it’d give us an interesting spin on things. Once again though, and as always, we’re looking for comments, suggestions and whatever else you want to say towards our plans, so don’t hesitate to voice your opinion in our related thread (linked below). After all, we’re writing this content for you guys, not ourselves! Let’s work together to make the content the best it can be!

The first thing I want to drop is SYSmark 2007, because I find it rather useless. The results it delivers don’t scale as they really should, so it’s a bit misleading. I think it’s obvious that a Q9650 is far more powerful than an E8600, but SYSmark doesn’t tell us that. Plus, running the suite is a very patience-testing process. Not to mention, it requires a completely fresh install of Windows. That all on top of the fact that running it twice in a row, even with two iterations, could give differing results, and when we’re dealing with results that range between 1 – 250-ish, any variance can screw up the true scaling.

|

Discuss: Comment Thread

|

Intel and Nokia Expand Partnership to Develop Future Mobile Standard

Psst… guess what? Did you realize that mobile computing is ultra-popular? Of course you do. In fact, it might be impossible to not realize the fact. Ten years ago, cell phones were beginning to catch on, but they were far from being so popular that everyone owned one. Today, that’s exactly how things are, and between cell phones, smart phones, PDAs and other MIDs, many people own more than one product at a given time, so we’re definitely in the midst of a mobile revolution, which seems to be lasting a while.

To help continue delivering amazing mobile devices, Intel and Nokia have today announced an expanded relationship and cooperation that promises to help “shape the next era of mobile computing innovation”. To do this, the resulting partnership plans to create a completely new standard, which will of course involve Intel Architecture and select Nokia technologies. The release states that Intel is acquiring a license to Nokia’s HSPA/3G modem, although whether that’s supposed to become a part of the standard or not, I’m unsure.

The release also furthers the companies’ emphasis on open source software, and such operating systems as Moblin and Maemo, both Linux-based, are likely to become the base of these future devices. This is a good thing, and it might even enable developers to alter the OS to their own liking. After all, that’s the point of open source. As long as Windows compatibility is top-rate, I can’t see anyone being upset at having one of these at the base of their next MID or cell phone.

It might take a while before the fruits of these companies’ labor are made known, but with Intel’s developer forum taking place in just under three months, we may very well be hearing from Anand Chandrasekher, Intel’s Senior VP and GM of the Ultra Mobility Group, on all that’s being worked on between the two companies.

“This Intel and Nokia collaboration unites and focuses many of the brightest computing and communications minds in the world, and will ultimately deliver open and standards-based technologies, which history shows drive rapid innovation, adoption and consumer choice,” said Anand Chandrasekher, Intel Corporation senior vice president and general manager, Ultra Mobility Group. “With the convergence of the Internet and mobility as the team’s only barrier, I can only imagine the innovation that will come out of our unique relationship with Nokia. The possibilities are endless.”

| Source: Intel Press Release |

Discuss: Comment Thread

|