Tech News

Was HP’s dv2 Worth the Wait?

When we hit up CES 2009 this past January, there were few notebooks that really caught my eye. There was one, however, that really left an impression, and that’s the dv2 from HP. In fact, it impressed us so much that we awarded it one of our Best of CES 2009 awards. But that was then, and this is now. With the launch having occurred within the past month, does the dv2 live up to our “Best of” potential?

Well, I can’t answer that quite yet, but I did purchase this very notebook a few days ago to take along with me on our trip to Computex. As it stands, there are multiple models of the dv2, split between the US and Canada, and there’s also a base model and an upgraded model, the latter of which we had hands-on experience with at CES.

Sadly, I walked out of the store with the base model, because the upgraded model had sold out earlier that day. It’s too bad, too, since the upgraded model gives a lot more for your $200. That includes a Vista upgrade to Home Premium, a boost to the memory (4GB), double the battery life, HDMI-out and also an external ODD. Whew. I was told that upon returning from the trip, they’d allow me to upgrade, so let’s see if that holds true!

For the uninitiated, the HP dv2 is designed to be a poor-man’s (kind-of) 12″ notebook. While most notebooks of this size cost well over $1,000, the dv2 settles in at around $600 USD. It’s also the only notebook currently on the market that includes AMD’s single-core 1.6GHz Athlon Neo 64 (think of it as AMD’s Intel Atom).

What that CPU means to you is… low power, but also low performance. It lacks anything like Intel’s Hyper-Threading, and that is becoming noticeable. I’ve had some instability issues so far, and even as I sit here typing this in the Toronto airport, I’m waiting for Firefox to “uncrash” itself. Whether that’s the fault of the notebook or the browser is yet to be seen, but I’ll have no choice but to notice any such issues since this is my only PC for the next two weeks. So far, no major issues to speak of though, and for a smaller PC, the keyboard is sweet.

I’ll post a complete review of the notebook about a week after Computex, so stay tuned.

| Source: HP dv2 CES Coverage |

Computex 2009 Coverage… Coming Soon!

If there’s one geeky thing perfect for kicking off the summer, it’d have to be Computex, one of the largest trade shows in the world. Unlike most trade shows though, Computex is one that directly pertains to most of what we report on. We’re talking vendors and products of all stripes, from motherboards, graphics cards, processors and every other integral system component, on top of gadgets and other technologies. It goes without saying that Computex is always interesting.

We’ll be reporting on as much as possible during the show, so make sure you check our news section often. We’ll also have a static roundup article in our content section that will aggregate all of the news we’ve reported on so far, so that you can find out what interests you fast and go read about it. Because Taipei is on the other side of the world, news will be posted in the late evening on these shores, so please bear that in mind!

We started a thread last week (which can be accessed below) that looks to see what you hope to gain from the show, and also what you expect to see. Feel free to hit up the thread (no sign-up is necessary for such topics) and give your two cents! Also, if time permits during the show, I’ll update that very thread as often as possible with miscellaneous photos that don’t really belong in our news section.

Our overall goal is to bring Computex to you, so don’t hesitate to voice your opinions and requests!

COMPUTEX TAIPEI has become the largest computer exhibition in Asia and the second largest in the world, next to CeBIT in Germany. Each year, key global businesses come to this event to launch their new products. Since a large portion of the businesses in the world have research and deployment centers or production facilities in Taiwan, this exhibition attracts observers, analysts, and journalists of computer and information industries from all over the world to discover and report the latest technologies, developments, and trends.

| Source: Computex Official Site |

Quick Gage: What is it?

With the release of our look at the iPod classic 120GB yesterday, we also unveiled a new site feature called “Quick Gage”. Since nothing about this was mentioned before, I wanted to take a moment to explain what it is, and what purpose it serves.

The primary goal of Quick Gage is simple… to help get more content on the site. It in no way will affect our regular content, but rather act as a supplement, so that even while we’re working ardently on our more robust content, the site won’t skip a beat. After all, with the extensive research, testing and writing involved, the majority of our content requires a lot of time, so Quick Gage is sure to help us keep fresh content flowing even while we’re working away on our regular features.

Past that, Quick Gage will also allow us to get content on the site for products we’ve either purchased ourselves, or for products that we’ve received that don’t exactly warrant a full-blown review. The iPod is a perfect example of that. It’s not a new product by any means, but since I recently purchased one, a Quick Gage proved to be the perfect avenue for me to give quick details on what it is, along with issues I experienced with the product, and a little bit of opinion.

We’re still ironing out all the details, but it’s likely that Quick Gage will become a feature for both our news section and our regular content section. If the product or service we’re covering doesn’t require too much space on our pages, then it will be best suited for the news section. But on the other side of the coin, if whatever we’re covering requires a bit more of an explanation or opinion, but not enough to be considered a regular article, then it will become a one-page article.

We of course always look for suggestions on any aspect of our site, and this one is no exception. If you have ideas for Quick Gage, or happen to know of a more creative name we could be using, don’t hesitate to comment in our related thread! You don’t need to sign up on the forums in order to comment in our site-related threads. There, I just took away your one and only excuse!

| Source: Quick Gage: Apple iPod classic 120GB |

Futuremark Announces “Lords of Overclocking” Event

Is it just me, or has anyone else noticed the intense emphasis put on overclocking in the past year? Just last summer, ASUS and Gigabyte held their own overclocking championships, and Gigabyte in particular saw enough value to continue holding the same this year. In case you missed it, we covered one such event just a few weeks ago, and will cover the final in two weeks.

Recently, even MSI, a company not really well known for major overclocking, announced that it was also going to hold an overclocking championship, which took at least me by surprise. Today, Futuremark announced that they were joining in on the fun, by holding their own event as well, with the grand prize winner being sent to MSI’s overclocking final, in addition to walking away with a bunch of product.

Futuremark’s contest is a little different, because rather than have select overclockers participate, anyone can – yes, even you. The benchmark? 3DMark 2006. How do you win? Isn’t that obvious? Overclock the heck out of your machine, and come out on top for your particular region (Europe, Americas, Asia). It’s that simple. It is too bad, however, that this contest essentially locks out anyone on a budget. You’re not going to see a winner with air cooling either…

Futuremark Corporation today announced a new competition for PC overclockers everywhere. The contest will see game, DIY, and overclocking enthusiasts from Europe, Asia and the Americas competing for the “Lord of Overclocking” title for their region. With Futuremark anticipating over 200,000 entries, the online contest will be the largest overclocking competition ever staged.

| Source: Lords of Overclocking |

Microsoft Adds Internet Media Streaming to Windows 7 RC

Something that Microsoft loves to do with their latest Windows releases is to include a lot of new functionality (some people call this bloat), and sometimes, a new application or feature they bundle actually gets rid of the need to download another similar application, saving time. In the case of a new media streaming feature, the company seems to be going after Slingbox owners. But as it appears so far, Sling Media has little to worry about right now.

The goal of the feature is to simply allow you to stream content from your home PC, whether it be music, video or photos. As it stands with the current RC, this is all tied to Windows Media Player, and requires you to have a Windows Live account, which would be associated with both PCs. With everything set up properly, you should be able to stream the aforementioned content from wherever you are located… at work or in a hotel somewhere.

Your success depends on a few factors, namely the speed of your Internet connection on both sides, and also the processing power of the host. In the ideal situation, you should be able to stream any content without audible skips or video lag, but for that, your host should have a fast connection… I’d imagine at least 1Mbit/s upstream. In the case of Ina, the source article’s author, a firewalled network caused some problems, so the technology certainly isn’t foolproof at this point in time. But hey, this is a rather nice feature to have bundled, and handy if you find yourself wanting it, without paying for a Slingbox.

Set-up is not overly complex, but nor is it elegant by any means. To get the PC ready, you have to turn on Internet streaming in Windows Media Player. The other piece is associating both machines with the same Windows Live ID. (The feature may eventually support other ID providers, but for now it’s only Windows Live.) Getting up and running required downloading a Windows Live ID Assistant from the Internet, which sends you to a browser.

| Source: Beyond Binary |

Learning Someone Else’s Lesson: Offsite Backups

Today’s storage solutions are, for the most part, more stable than ever, but that doesn’t mean backing up your data shouldn’t be taken seriously. We’ve written about this in our news many times, and even wrote a few articles in the past on how to get it done quickly and efficiently. If you’re the owner of a website, even a small one, backing up is even more essential because the data not only affects you, but many others as well.

Earlier this week, one of the largest flight simulation communities, Avsim, found out just how important it is to keep offsite backups, and yes, the offsite part is key. You see, the admins did keep backups, like responsible site owners, but what wasn’t considered was what would happen if hackers broke in and also took down the backup server, which is exactly what happened. In an instant, years of work… gone.

This absolute obliteration could have been averted with offsite backups, so let this be a lesson to any website owner out there. Make sure you download nightly copies of your website (syncing also works), so that you always have the most up-to-date copy of your website handy, in case something absurd like this happens. I feel for these guys either way though… to lose an actively-built site like that has got to sting more than a little.
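For anyone wondering what a nightly copy might look like in practice, here is a minimal Python sketch. It assumes you already have a local copy of the site's files; the function name, the paths and the naming scheme are all made up for illustration, and the important part is that the destination lives somewhere other than the server hosting the site.

```python
import tarfile
from datetime import date
from pathlib import Path


def make_backup(site_dir, backup_dir, today=None):
    """Pack site_dir into a dated .tar.gz archive inside backup_dir.

    Both paths are hypothetical; backup_dir should sit on a different
    machine or drive than the site itself to count as "offsite".
    """
    site_dir = Path(site_dir)
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)

    # One archive per day, e.g. mysite-2009-05-30.tar.gz
    stamp = (today or date.today()).isoformat()
    archive_path = backup_dir / f"{site_dir.name}-{stamp}.tar.gz"

    with tarfile.open(archive_path, "w:gz") as archive:
        archive.add(site_dir, arcname=site_dir.name)
    return archive_path


# Example call (paths are placeholders):
# make_backup("/home/me/mysite", "/mnt/external/backups")
```

Run once a night from a scheduler, something like this leaves you with a dated archive for every day, so a compromised server doesn't take your only copy with it.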

The site’s founder, Tom Allensworth, said that the site would be down for the foreseeable future and was unsure if it would ever go back up. “The method of the hack makes recovery difficult, if not impossible, to recover from,” Mr Allensworth said in a statement. “AVSIM is totally offline at this time and we expect to be so for some time to come. We are not able to predict when we will be back online, if we can come back at all.”

| Source: BBC News |

Why You’ll Never See a 200Mbps Internet Connection

For those of us who use the Internet for a bit more than e-mail or browsing the web, the desire to have a fatter connection is usually hard to avoid – you know, unless you happen to have a personal OC-3 line. Virgin topped the charts last week though, when they announced deployment of their 200Mbps net connection, currently in very limited tests in Ashford, Kent, UK. The problem though, is that currently, you’d never see such speeds from a home connection.

Ars takes a look at the reasons why, and they vary from hardware limitations to technical limitations. As it stands today, most people don’t have a gigabit network, which is exactly what you’d need to handle these speeds. Otherwise, you’d be limited to a 100Mbps connection, half the potential. But even if you do have such a connection, there are other factors to weigh in… such as overall load on the community network.

Bandwidth is usually shared and split up across a neighborhood, and if someone on there had essentially the equivalent of 20 regular broadband connections, that’s bound to cause some bottlenecks. The other limitation is the servers you’re connecting to… chances are very few, if any, download servers will allow such speeds on a per-user basis. I at one time managed to download a file at 10MB/s off another server from our server, which was impressive, but that works out to 80Mbps, still well under half of 200Mbps.
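Since megabytes and megabits are easy to mix up, here is a quick Python sketch of the conversion behind that 10MB/s download. It only assumes the usual 8 bits per byte; the function name is mine.

```python
def megabytes_to_megabits(mb_per_s):
    """Convert a transfer rate from MB/s to Mbps (8 bits per byte)."""
    return mb_per_s * 8


download_mbps = megabytes_to_megabits(10)   # the 10MB/s transfer
share_of_line = download_mbps / 200          # compared to a 200Mbps line

print(f"{download_mbps} Mbps, or {share_of_line:.0%} of a 200Mbps connection")
```

In other words, a 10MB/s transfer is 80Mbps, which would fill 40 percent of a 200Mbps line.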

While impossible now, I’m sure such speeds won’t always be impossible. As for those with anything more than 10Mbps down and 1Mbps up… I’m jealous. I feel like I’m stuck in the stone age here!

Each node on a cable system is set up in a loop, with every home on that node (up to several hundred) sharing the total bandwidth. Verizon’s FiOS also shares, but it divvies up 2.4Gbps between 32 homes; DOCSIS 3.0 cable systems can share around 160Mbps with up to 400-500 homes. Even during peak periods, the line is filled with data only about 10 percent of the time, so the “oversubscription” model generally works well—but heavy use by many users will cause slowdowns, especially on the upstream link.
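To put the quoted sharing figures in perspective, here is a rough back-of-the-envelope sketch in Python of what each home would get if everyone on a node pulled data at the same moment. The even split is a simplifying assumption of mine (as the quote points out, real lines are rarely saturated), and the function name is just for illustration.

```python
def worst_case_share_mbps(total_mbps, homes):
    """Even split of a node's bandwidth if every home is active at once."""
    return total_mbps / homes


# Figures from the quoted excerpt: 2.4Gbps across 32 homes for FiOS,
# around 160Mbps across 400-500 homes for DOCSIS 3.0 cable.
fios = worst_case_share_mbps(2400, 32)
cable_low = worst_case_share_mbps(160, 500)
cable_high = worst_case_share_mbps(160, 400)

print(f"FiOS: {fios:.1f} Mbps per home")
print(f"DOCSIS 3.0: {cable_low:.2f} to {cable_high:.2f} Mbps per home")
```

That comes out to 75Mbps per home on FiOS and somewhere between 0.32Mbps and 0.40Mbps per home on cable in the absolute worst case, which is why oversubscription only works as long as most people aren't hammering the line at once.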

| Source: Ars Technica |

Extreme Overclocking with AMD’s Dragon Platform – Phenom II x4 955

AMD has sent us along a fresh “Unprocessed” video, which takes a look at extreme overclocking with their current high-end Dragon platform. The video includes their brand-new Phenom II 955 CPU (which we are still in the process of working on content for – I promise) and also Gigabyte’s MA790FXT-UD5P… another motherboard we have en route and hope to put to the test very soon.

Overclocker Chew* puts all the hardware to the test, and of course LN2 is brought into the mix, to achieve some incredible scores… and even break a few world records. For 3DMark06, he was able to hit 32,281 for dual HD 4890s, and 28,831 for dual HD 4850s. For DDR3, he hit DDR3-1636 6-6-6-16 (I want this!), and for CPU overclocking, he hit 4.5GHz on air (wow) and 6.4GHz on LN2. Not bad, not bad at all. More results can be found in the video, so keep an eye out for ’em.

Overclocking Guru Chew* relentlessly overclocks “Dragon Technology” with stock AMD Phenom II X4 955 processors on GIGABYTE GA-MA790FXT-UD5P, and recently published his results spanning air, phase, dry ice, and liquid nitrogen. He pushes DDR3 and the extraordinary north bridge of the 955 to the limit. The stock 955 has tremendous north bridge scaling potential and achieved overclocks at significantly lower voltages than prior processor models.

The achievement was accomplished on GIGABYTE GA-MA790FX-UD5P and GA-MA790FXT-UD5P, featuring “Ultra Durable 3 Technology:” the industry’s first motherboard design with 2 ounces copper layers to tremendously enhance stability and performance for overclocking. Brian was able to break 3DMark06 records for dual Radeon HD 4850’s and 4890’s in the Dual Crossfire configuration supported by these two motherboards.

Vuze Releases “Vuze to Go” Mobile BitTorrent Client

If you are an active BitTorrenter (is that even a word?) and are often on the go, perhaps you’re looking for a portable client that you can take with you, on either your thumb drive or an external hard drive. At quick glance, I’m not completely sold on the reason for this, but if you happen to have a torrent half-downloaded, and need to leave the house, yet want the finished torrent with you when it completes, then I could see it. Seems like a niche need, though.

Popular BitTorrent software Vuze (formerly Azureus) has just released what I believe to be the first-ever paid BitTorrent client, called Vuze to Go. The goal is to simply offer you the ability to torrent on the go, and it’s as easy as installing the application to a thumb drive or another USB-based storage device, and running it from there, rather than from your regular Vuze installation.

There are of course a few issues with this. First, it costs money, which dulls its appeal right away. The reason for the charge is the technology used, from Ceedo, which essentially allows Vuze to Go to run from inside a virtual environment, which gets around the issue of a PC with no Java installed (which is required by the desktop Vuze). While I personally don’t mind Vuze too much, I still find it a bit bloated (especially this version), but for those Windows users who want a free alternative, nothing sounds better than uTorrent.

In order to get it to work on systems that don’t have Java installed, Vuze to Go contains a virtual operating system in addition to the regular Vuze application. Vuze teamed up with a company called Ceedo who developed the ‘virtualization’ technology. This partnership does come with some downsides though. First of all, the Vuze to Go client costs $9.99, which is quite unusual for a BitTorrent client. Vuze’s Director of Marketing Chris Thun told TorrentFreak that they were required to charge for the new application, and that the price tag was unavoidable.

| Source: TorrentFreak |

Supermicro Launches Intel Atom-based Servers

Because we at Techgage cover desktop computing so heavily, it’s easy to overlook the server side of things, which arguably is just as large as desktop computing, if not larger. At the base of any company, large or small, there’s likely one server, or a thousand, or tens of thousands. What that means for larger companies is unbelievable power bills, and it’s no surprise… the computing power is huge.

Not all servers have got to be powerful enough to launch rockets, though. Take the latest offering from Supermicro, which instead of featuring the beefiest CPUs around, includes Intel’s basic Atom processors. I’ve heard in the past that Atom actually serves as a good base for a simple server, and a release like this from one of the largest server providers pretty much confirms that.

Supermicro’s more basic offering includes the single-core Atom 230, although a dual-core version is also available. The rackmount is smaller than 1U, with a depth of 9.8″, and given the overall power consumption and thermal output, cooling should be rather quiet compared to much larger servers. I have to admit, I absolutely love products like this. If more people used servers like this where applicable, the power saved would be immense… not only from the servers themselves, but from the cooling required to keep them operational.

Optimized for the single-core Atom 230 processor, which consumes only 4 watts of power, Supermicro’s cost-effective X7SLA-L platform supports up to four SATA ports with RAID 0, 1, 5 and 10, along with seven USB 2.0 headers, 2 GB DDR2 memory, Intel GMA 950 graphics and a Gigabit Ethernet port. For more performance-intensive applications, the high-end X7SLA-H integrates the dual-core Atom 330 processor, which consumes 8 watts of power and expands upon the features of the X7SLA-L with dual Gigabit Ethernet ports, an additional onboard Type A USB 2.0 connector and an extra internal serial port.

| Source: Supermicro, Via: TG Daily |

Celebrating 50 Years of Silicon Integrated Circuits

Where would we be today without the invention of the integrated circuit? Good question, and I’m not sure I’d like to know the answer! This year marks the 50th anniversary of the integrated circuit (IC) that we know and love today, although the original IC the Fairchild Eight came up with wasn’t exactly the first. It was, however, the first to take off commercially, and the fundamentals from that finished product are still at the base of most electronics today.

Jack Kilby, of Texas Instruments, was the first to create a working IC back in 1958, which was based on germanium, not silicon. Fairchild Semiconductor’s silicon-based IC solved numerous problems found in Kilby’s chip, and as a result, it was picked up in greater numbers. Kilby did however receive a Nobel Prize for his invention at the turn of the century.

At a recent event at the Computer History Museum, the IC was celebrated, and for good reason. What a 50 years it’s been! Both Gordon Moore and Jay Last, two of the Fairchild Eight, were present and gave speeches, and I particularly like this quote from Last, “It’s hard to believe that 50 years have passed. The reality of today is beyond our wildest imaginations of those days.” No kidding. Back then, you might have been put in a mental hospital if you accurately predicted what 2009 would be like!

The question now is “what’s next?” There are limitations to Moore’s Law, so current ICs may not be the long-term solution. But, as Moore states, “You get to the point where you can’t shrink things anymore. But that won’t stop innovation.” Well said.

He recounted the days when the eight of them, including Noyce and Hoerni, staged a mutiny at Shockley and struck out on their own, determined to find an existing company that wanted to use them to build out a semiconductor business. Moore said the men, most of whom were in their late 20s at the time, weren’t sure how to go about it, so they opened The Wall Street Journal, and circled the names of 30 companies they thought might be interested in their services. None bit.

| Source: Cutting Edge Blog |

Sirius XM Raises Subscription Rates

It was a huge surprise when it was found that Sirius could legally merge with XM, but subscribers of either service were the ones who had the right to remain really skeptical. With these two giants together, they have created a monopoly on the market in every sense of the word – I don’t think there exists a single competitor in this space. But, as long as the prices stayed where they were, there would be nothing to complain about, right?

Well, that’s not the case anymore, since Sirius XM has just announced some price hikes on all of their services. Whether this was caused by the recent drop of subscribers (over 400,000!) or not is unknown, but chances are it was. Sirius XM hasn’t been making a profit for as long as I remember (if at all), so to make money, someone has to pay, and that’s clearly going to be the customers.

Prices for the family plan have risen from $6.99 to $8.99, while the previously-free Internet version of the stations will now cost $2.99. I admit I’ve never once touched either Sirius or XM, so I’m not sure how good either service actually is, but given what I pay for my cable every month, $8.99 doesn’t seem all too bad. Of course, it’s the programming and reliability that matters, nothing more. Do you have experience with either? Let us know in our comments.

Now that Sirius XM is the only game in town, it’s nudging up fees for subscribers. Nice! The one and only satellite radio company’s boasts of its ever-increasing subscriber base are gone now, and the decline is significant. The number floating around the Internet is a loss of 400,000 subscribers. That still leaves 18.6 million, but there’s no way of knowing how many of that number are full-price-paying subscribers.

| Source: The Audiophiliac |

Purchasing an Alienware Machine Online? Be Careful

Purchasing used computer equipment online – especially complete machines – can be a little sketchy if you’re not careful. But assuming you receive the machine, and it works, you should be fine. Right? Well, apparently not if it was an Alienware desktop or notebook. According to one consumer’s account of a recent purchase, if you buy an Alienware notebook, even new, from a seller and don’t have the ownership properly transferred to you, you’ll be out of luck with regards to support and replacement parts.

The author of the blog post, Chris Paget, bought a used Alienware notebook off of eBay, and after receiving it, he decided he needed the “smart bay” accessory, used to hot-swap hard drives, which he wanted for dual-booting purposes. He quickly found out what a hassle it would be, however, as Alienware simply refused to tell him even the price for the component, since he was not the original owner.

Although I appreciate Alienware’s loyalty to their customers, it’s not safe to automatically assume that a machine is stolen because someone’s name isn’t on it. PCs are often given as gifts, and it’s typical for used equipment to get swapped around. It’s also sad that if your seller doesn’t cooperate, you’ll essentially be out of luck for purchasing replacement parts – a hard pill to swallow if Alienware happens to be the only company around with that exact part you need.

This example does raise a real concern though, since Alienware PCs tend to be hot products. Be careful when purchasing one such machine online, and look into getting the name transferred over, because you don’t want to wind up in the same situation as Chris.

Problem is, my money is apparently not good enough for Alienware. Tech support, customer service, and pre-sales support have all refused to even give me a price, let alone take my money. Why? For “security reasons” they’re not allowed to speak to anyone but the registered owner of the system, despite the fact that all I’m trying to do is purchase a (universally compatible) accessory. Nowhere else that I’ve found sells them (the CSR I spoke to suggested eBay and newegg), and Alienware apparently don’t want my money.

| Source: Chris Paget’s Blog, Via: Slashdot |

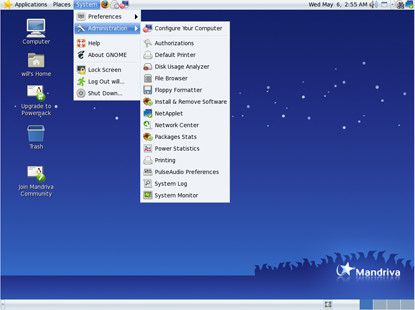

There’s More to Linux than Ubuntu!

When Ubuntu first bounced onto the Linux scene, it became a hit almost immediately, and even I’m unsure why that was the case. Although it’s built on an already-stable distribution (Debian), the clear vision and robust community must have helped propel it into super stardom, at least in the geek world. But, there was an upsetting side-effect of Ubuntu’s popularity. Many people came to believe that Ubuntu = Linux, and as a result, other quality distros got overlooked by the masses who were now interested in the alternative OS.

The situation today is a lot better than it used to be, though, and it seems that most people now understand that Ubuntu is not the only version of Linux out there. It remains easily the most popular, however. But aside from Ubuntu, what else is there? Sure, there’s Kubuntu and the like, but those are all still based on Ubuntu. What else is totally unique?

Maximum PC takes a look at seven distros other than Ubuntu to get a glimpse of what else is out there, and if you’re in the market to try out Linux but don’t know where to start, this looks to be a rather solid article to refer to. This kind of roundup isn’t unique, but this one in particular contains more information than any I’ve seen before. If I weren’t such a Gentoo fanboy, I really have no idea what distro I’d try to make my primary. They certainly all have their own pros and cons, many of which this article tackles.

Mandriva has three versions, each with different artwork and appearance. The color theme in the free edition is a light blue that is aesthetically pleasing, while the non-free editions feature a darker theme that helps to effectively differentiate them from the free version. Mandriva has been primarily a KDE-oriented distro ever since the days of MandrakeLinux, and Mandriva 2009’s use of KDE 4.2 is extremely effective and useful.

| Source: Maximum PC |

3D Realms Has Closed Shop

Of all the games currently in development, Duke Nukem Forever is one in particular that’s been made fun of time and again. How long will it take to see a release? Forever, of course. Sadly, that might actually be the case, as it has become known that the development studio behind the game, 3D Realms, has shut down, despite the fact that no “official” word has been made directly by the company.

It has indeed been confirmed by former employee Joe Siegler on the official forums, however, where he references an interview he gave just days ago in saying, “When I recorded that, I didn’t know.” So it appears that 3DR is actually… gone. As a huge fan of 3D Realms and Duke Nukem in particular, this news is really quite upsetting. It’s hard to be surprised given the insane release schedule for DNF, but that makes the news even more difficult to take.

For those who’ve been gaming for a while, and I’m talking 286/386 days, chances are you’ve played games from these guys even if you don’t realize it. The company first started out as Apogee and released such gems as Cosmo’s Cosmic Adventure, Hocus Pocus, Mystic Towers, Rise of the Triad, Commander Keen, Crystal Caves, Max Payne, Halloween Harry, Raptor and so forth. Not to mention Wolfenstein 3D and Duke Nukem I and II.

What will happen with what’s left of DNF is up in the air, and chances are good that George Broussard is working hard to figure out a way to keep the company afloat, unless that ship has already sailed. We’ll likely learn a lot more in the coming days. Hopefully it doesn’t mean the end for DNF. Many of us have been waiting a while for it (I still have a copy of a 1997 preview of the game), and it would be a shame to see it disappear.

A very reliable source close to Duke Nukem Forever developer 3D Realms today confirmed to Shacknews that the development studio has shut down. The closure came about as a result of funding issues, our source explained, with the shut down said to affect both 3D Realms and the recently resurrected Apogee. Employees of both entities have already been let go.

| Source: Shacknews |

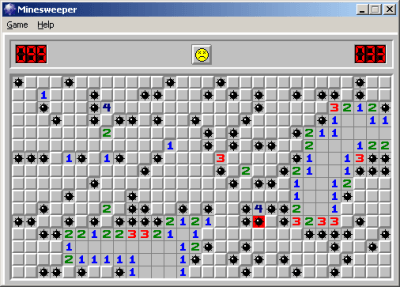

Is Minesweeper the Most Successful Game Ever?

I’ll be honest. When I picture computer gaming, the last thing I think of is Minesweeper, but its popularity cannot be denied. Given that it’s included in Windows, and has been for a while (at least since 3.x), there are few people who haven’t opened it to waste a few minutes every so often. That adds up, and chances are, if you have been using Windows for a while, you’ve sunk some real time into this unbelievably simple gem.

Minesweeper and other Windows classics are usually among the first things I check out with a new Windows version. After all, they’ve become such a common part of that particular OS that I always want to see if they’ve been updated at all. Until Vista, they really hadn’t been. There, though, the player can opt to use flowers instead of mines, thanks in part to complaints from countries where mines are a real problem.

As popular as Minesweeper has been, I do have to wonder if it’s one of the most popular games out there, statistically speaking. For me personally, Solitaire used to be my vice. Years ago, I got into the game so much that I actually started losing sleep because I couldn’t get the shuffling cards out of my head! It’s a good thing I wasn’t addicted to Freecell!

In the unlikely event you’ve never played it, the gist is that you start with an empty field (its size and number of mines determined by difficulty setting) and have to uncover squares one at a time. Underneath each is either a space, a number, or a mine. The numbers tell you how many mines are in the adjacent boxes, the mines kill you dead. To win, you have to clear the field without touching a mine. It’s a relatively simple game of deduction, but satisfying.
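The number rule described above is easy to sketch in code. The snippet below is only an illustration, not how the Windows version is implemented; the function names, the 3x4 board and the mine positions are all made up for the example. It counts, for every safe square, how many of its eight neighbours hold a mine.

```python
from typing import List, Set, Tuple


def adjacent_mine_counts(rows: int, cols: int,
                         mines: Set[Tuple[int, int]]) -> List[List[int]]:
    """Return a grid of neighbouring-mine counts; mine squares hold -1."""
    grid = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if (r, c) in mines:
                grid[r][c] = -1
                continue
            # Check the eight surrounding squares; positions outside the
            # board are simply never in the mine set.
            grid[r][c] = sum(
                (r + dr, c + dc) in mines
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
    return grid


def render(grid: List[List[int]]) -> str:
    """Draw mines as '*', empty spaces as '.', and counts as digits."""
    symbols = {-1: "*", 0: "."}
    return "\n".join(
        "".join(symbols.get(value, str(value)) for value in row)
        for row in grid
    )


# Hypothetical 3x4 field with mines in two opposite corners.
board = adjacent_mine_counts(3, 4, {(0, 0), (2, 3)})
print(render(board))
# *1..
# 1111
# ..1*
```

The "deduction" part of the game is reading those digits in reverse: a player sees only the numbers and has to work out where the `*` squares must be.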

| Source: Tech Radar |


Windows 7 to be Released in October?

According to a post over at Slashdot, the Windows 7 launch date has been leaked, although it’s hard to say right now whether anyone should lend it any credence. The date mentioned is October 23 of this year, a date that was carelessly thrown out by an Acer executive in Europe. I won’t get into the whole story, but while Oct 23 does seem like a reasonable date, there’s so much more to consider in the big picture.

Looking at things right now, Windows 7 is really shaping up to have a clean launch, and there doesn’t seem to be a real hitch holding back its development. After all, the Release Candidate has just been released, and should become available to regular consumers to test out soon. That’s a good sign. Plus, it’s been speculated that the RC/RTM phase could last about three months, and if true, that would place us at an August release date.

So where does that leave October 23? It’s hard to say. If Windows 7 seems really stable right now, is Microsoft simply playing it safe, and deliberately holding back the launch for a few months in order to make sure every possible important bug is ironed out? After all, the last thing Microsoft wants to see is another Vista launch. Heck, even the consumers don’t want to see that (unless you are a Mac or Linux user maybe, you twisted freaks!).

Oct 23 sounds credible, but this is an OS after all… anything can happen.

Asked whether this confirmed the Windows 7 release date as September 2009, he coyly remarked that ‘when it’s in store it won’t have Windows 7 pre-loaded.’ Microsoft would probably prefer that he had stopped there, but he added: ‘We won’t be actually selling [Windows 7] a day before the 23rd October.’ Then, Acer’s Managing Director for the UK helpfully clarified that while their product will ship with Windows Vista at launch, because it is on sale less than 30 days before the Windows 7 release date, it will be eligible for the ‘upgrade program’ to get a free upgrade to the new OS.

| Source: Slashdot |


OCZ Launches PCI-E-based SSD, “Z-Drive”

In the past few months, whenever the term “PCI-E SSD” was mentioned, the company ‘Fusion-io’ usually came to mind first. They were the innovators where the technology was concerned, but it was only a matter of time before others followed suit, and so far, those companies have included OCZ and Super Talent, the latter of which we talked about a few weeks ago.

Both OCZ’s and Super Talent’s drives have their perks, but OCZ’s Z-Drive easily wins any aesthetics awards (as you can see, it looks far better now than it did at CeBIT). Compared to the Super Talent drive though, the Z-Drive falls a bit behind in performance. The 1TB model boasts Read speeds of 500MB/s and Write speeds of 470MB/s. This is compared to the 1.2GB/s Read and 1.3GB/s Write of Super Talent’s RAIDDrive.
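To put those quoted figures in perspective, here is a rough back-of-the-envelope sketch. It assumes the peak speeds hold for the whole transfer, that 1GB/s means 1,000MB/s, and it uses a hypothetical 10GB file; none of this is measured data, and real-world throughput would differ.

```python
# Quoted peak sequential speeds in MB/s (1.2GB/s is treated as 1200MB/s).
DRIVES = {
    "OCZ Z-Drive 1TB": {"read": 500, "write": 470},
    "Super Talent RAIDDrive": {"read": 1200, "write": 1300},
}


def seconds_to_transfer(size_gb: float, rate_mb_s: float) -> float:
    """Ideal time to move size_gb gigabytes at a constant rate_mb_s."""
    return size_gb * 1000 / rate_mb_s


FILE_GB = 10  # hypothetical file size, chosen only for illustration

for name, speeds in DRIVES.items():
    read_s = seconds_to_transfer(FILE_GB, speeds["read"])
    write_s = seconds_to_transfer(FILE_GB, speeds["write"])
    print(f"{name}: read {read_s:.1f}s, write {write_s:.1f}s")

# How many times faster the RAIDDrive's quoted read speed is.
read_ratio = DRIVES["Super Talent RAIDDrive"]["read"] / DRIVES["OCZ Z-Drive 1TB"]["read"]
print(f"Read speed ratio: {read_ratio:.1f}x")
```

On paper, then, the RAIDDrive reads about 2.4 times faster, which fits the idea that the two products target different buyers.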

We can assume that the pricing difference between the two companies’ models is quite stark, and OCZ is catering more towards enthusiasts, whereas Super Talent is going after professionals and server environments. One thing to note also is that not many PCI-E-based SSDs can currently act as boot devices, so if you’re interested in one of these, your best bet is to have a standard S-ATA SSD as your OS drive, and use this as a supplement. Just the thought of that is amazing…

OCZ will compete against the RAIDDrive with its own Z-Drive SSD using a PCI-E 2.0 x4 slot. It will feature a combined 256MB cache managed with an onboard RAID controller. Capacities of 250GB, 500GB, and 1TB will be offered. Maximum read and write speeds vary for each model in the series, although the maximum sustained write speed will be limited to 200 MB/s for all Z-Drives. Random read and write speeds were not made available.

| Source: DailyTech |


Capcom Announces GeForce 3D Vision Support for Resident Evil 5

When NVIDIA first launched their GeForce 3D Vision at this year’s CES, no one was sure how it would take off, if at all. 3D gaming up to this point has been nothing more than a joke, and personally, I’ve never been impressed with any implementation up until I first put on the 3D Vision glasses. Even NVIDIA’s implementation isn’t perfect, but we’re on the right track, and assuming you aren’t expecting a fully immersive 3D experience, it can help make certain games much more enjoyable.

Until now, the company hasn’t had any real support from game developers willing to build their games around the technology, but today Capcom announced that the PC version of Resident Evil 5 (due later this year) will come bundled with support, although we’ll have to wait and see just how they develop the game to take better advantage of it.

We can assume that the game will look no different for those without the glasses, but there’s likely to be a mode to enable that will allow you to tell the game that you have the proper hardware. RE5, to me, is the perfect kind of title for this usage, because any more realism in a horror game is sure to get blood pumping. Hopefully when the game gets released, 120Hz monitors will be much more affordable…

Featuring a revolutionary new co-op mode of gameplay, Resident Evil 5 will let players experience fear together as terror moves out of the shadows and into the light of day. The PC version of Resident Evil 5 will feature online play for co-operative play sessions over the internet and will also take advantage of NVIDIA’s new GeForce 3D Vision technology (wireless 3D Vision glasses sold separately).

| Source: Capcom Press Release |


Common Reasons People Leave Linux

From the title alone, you can probably guess what this article is going to entail, and you’re probably going to be correct. But, it’s good to be reminded of things from time to time, because Linux does need some improvement in order to overtake alternative OSes, at least in my opinion. All too often I’ll hear complaints from people who’ve tried Linux and have gone back to Windows, and I’m certainly not the only one.

In a brief article at PC World, there’s a list of seven reasons that people decide to leave Linux, with the number one reason being the inability to run many applications that people are familiar with. That issue in particular is getting better over time, but I believe Linux will really start to take off when more commercial applications become available for the OS. Many people shun non-open-source applications, but it’s clear that there are some commercial applications that far exceed the capabilities of free alternatives.

Other common complaints include the fact that Linux didn’t pick up on some hardware, but in truth, Windows is no different. Never have I installed Windows and not had to install drivers for something, whether it be networking, audio or WiFi. There are other reasons listed in the article that are good, and I sympathize with newbie Linux users over them. Things are certainly getting better all the time though, and that’s the important thing.

There are also those who take a haughty position and project their fear onto others: “I had to type commands! Ergo Linux just isn’t ready for the ordinary person!”. Here, the individual concerned seems to be implying that the “ordinary user” (whoever that might be) suffers from an intelligence deficit and is incapable of typing commands. Is that really true? Why do we always assume that other people can’t possibly be as smart as we are?

| Source: PCWorld |


AMD Celebrates 40 Years

Today officially marks the 40th anniversary for Advanced Micro Devices, and what an exciting forty years it’s been! The company was founded on May 1, 1969, less than a year after Intel, and was formed to produce a number of “logic chips”. Believe it or not, they even at one point produced Intel-designed processors, such as the Am286 pictured below.

Their first in-house processor was the K5, a chip that featured 4.3 million transistors built on a 500nm process, 8KB+16KB Cache and a clock-rate up to 100MHz. These chips plugged into either a Socket 5 or Socket 7 motherboard, the latter of which was my first experience with frying a motherboard (hurrah for not properly soldering fan wires!).

If you want to take an exhaustive trip down AMD’s history, I recommend you check out the Wikipedia page on the company. To help celebrate things though, the company is offering up a few different contests in order to repay their fans, and the first is for you to create a quick video (under 60 seconds) with you expressing your love for AMD, and you must say in some manner, “Happy 40th Anniversary AMD”.

Prizes include all of AMD’s current high-end offerings, such as an X4 Black Edition CPU and ASUS motherboard, and also the new budget (but powerful) Athlon 7850. If you’re not camera shy, get to making a video… you may just win!

In our 40 years, users and enthusiasts the world over have been integral in the development and marketplace adoption of our product lines. We’ve always appreciated the feedback and wishes of our customers and end users, and during this anniversary year we’d like to celebrate by saying thanks. So we’re rolling out a series of contests that will take place over the coming months to give back to our dedicated fans, and let you show your AMD spirit. In total we’re giving away 80 prizes, and the winners will take home some of the most innovative products in the market today.

| Source: AMD’s 40th Anniversary |


Old PC Kicking Around? Install Linux!

If you purchase a pre-built machine today, or build your own, you can remain confident that it’s going to run any of the mainstream operating systems available today. But there are also likely many of you who have old machines hanging around that you can’t stand to get rid of, and it’s understandable. It could be memories, or simply the fact that you love having an old machine still running to brag about.

But the question now is, what can you do with the ancient box to make it somewhat useful? If it’s really old, you might not even be able to run Windows XP. If you are in such a predicament, and want an up-to-date OS, a Computerworld blog post points out that Linux is the way to go, but there are of course limits. If you are running an old machine with a lack of RAM and processor frequency, you might have to ignore fancier distros, such as Ubuntu and SUSE.

Other distros exist specifically for old hardware though, and some that don’t might even run well. For the most ancient of PCs (think <200MHz, <64MB of RAM) there’s Damn Small Linux, which is about as barebones as you can get, but is highly optimized for old hardware. The author of the article talks about running openSUSE 10.3 on his 266MHz/64MB box though, which is rather impressive. So it’s good to know that you don’t have to throw out that old box yet, and in some cases, you don’t have to suffer with a command-line-based OS either!

What about older PCs though? Linux works great on them. The oldest working server I have is one of the ones I used in 1999 to prove, for the first time, that Linux was a better file server than Windows NT. It’s a white-box with a 266MHz Pentium II and 64MBs of memory. These days it’s running openSUSE 10.3. My least-powerful Linux desktop I’m currently using is HP Pavilion 7855 PC. It was born in 2001 with a 1GHz Pentium III and 512MBs of RAM. These days I run Mint 6 on this old vet of a PC. Frankly, that’s a little too much operating system for it.

| Source: Computerworld |


What Can You Do with 4k?

Programming isn’t easy, and when it comes to designing your code to be as efficient as possible, it’s even harder. Some coders don’t care as much about their code as others though, and that can result in applications or games ending up with a larger file size. That doesn’t matter much nowadays, but it shows true skill when you have a large application with a small footprint. Take Google’s Chrome browser for example. Small footprint, but huge functionality.

But some people take things far more seriously, and where coders are concerned, serious is programming an application or game to fit inside of 4KB. Nope, that’s not a typo… 4 kilobytes… 4096 bytes. How’s that even possible, you ask? Great question, and though there are explanations around the web, I’m still confused. There’s a lot of trickery involved, with various techniques off-loaded to and executed on the CPU or GPU. What matters though, is that some of the results are amazing.

Take this one that a friend linked me to the other day. This is a video of one such 4k project which shows a fly-through of a fully-rendered mountain region, complete with high-altitude cliffs, rivers and not to mention music. As if that wasn’t enough, there are beams of light that pulse in the sky to the sound of the music. Not impressed? Did you skip the part where I said all of this is done in 4,096 bytes? That’s the equivalent of typing out the entire alphabet 157.5 times. The raw text of this post is equivalent to 2,028 bytes by itself! Not too many more and you can create something like this? Truly impressive.
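For anyone who wants to check that arithmetic, here is a quick sketch. It assumes one byte per character and a 26-letter alphabet, and it takes the 2,028-byte figure for this post at face value.

```python
DEMO_BYTES = 4 * 1024   # the 4KB budget: 4096 bytes
ALPHABET_BYTES = 26     # one byte per letter, A through Z
POST_BYTES = 2028       # size quoted above for the raw text of this post

# How many full alphabets fit in the budget, and how much of it the post uses.
alphabets = DEMO_BYTES / ALPHABET_BYTES
post_share = POST_BYTES / DEMO_BYTES

print(f"{DEMO_BYTES} bytes = {alphabets:.1f} alphabets")
print(f"This post alone would use {post_share:.1%} of the budget")
# 4096 bytes = 157.5 alphabets
# This post alone would use 49.5% of the budget
```

In other words, the text of this post would already eat about half of the space the whole demo has to work with.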

exactly one year ago i made an executable procedural graphic and video called ixaleno, which i liked. people asked when such imagery would be realtime, and i was answering “today”, as i have already seen terragen quality landscapes, completely procedural, in opengl. the point really was how good i could do _in 4 kilobytes_. i experimented and concluded i could so something similar to ixaleno without much problem, and so i stoped playing with it.
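The key word in that quote is “procedural”: the landscape is computed from formulas at run time rather than stored as data. The sketch below is not the author’s code and is nowhere near 4KB-demo quality; it is just a minimal illustration of the general idea, using a made-up integer hash and a few layers of smoothed noise to turn any (x, y) coordinate into a terrain height. All names and constants here are assumptions chosen for the example.

```python
import math


def hash01(ix: int, iy: int) -> float:
    """Deterministic pseudo-random value in [0, 1) for an integer grid point."""
    n = (ix * 374761393 + iy * 668265263) & 0xFFFFFFFF
    n = ((n ^ (n >> 13)) * 1274126177) & 0xFFFFFFFF
    return (n ^ (n >> 16)) / 2**32


def value_noise(x: float, y: float) -> float:
    """Smoothly interpolate the hashed values of the four surrounding grid points."""
    ix, iy = math.floor(x), math.floor(y)
    fx, fy = x - ix, y - iy
    # Smoothstep weights avoid visible creases at grid lines.
    sx = fx * fx * (3 - 2 * fx)
    sy = fy * fy * (3 - 2 * fy)
    top = hash01(ix, iy) + (hash01(ix + 1, iy) - hash01(ix, iy)) * sx
    bottom = hash01(ix, iy + 1) + (hash01(ix + 1, iy + 1) - hash01(ix, iy + 1)) * sx
    return top + (bottom - top) * sy


def height(x: float, y: float, octaves: int = 5) -> float:
    """Sum several noise layers: each one is twice as fine and half as strong."""
    total, amplitude, frequency, norm = 0.0, 1.0, 1.0, 0.0
    for _ in range(octaves):
        total += amplitude * value_noise(x * frequency, y * frequency)
        norm += amplitude
        amplitude *= 0.5
        frequency *= 2.0
    return total / norm  # normalised back into [0, 1)


# Draw one row of "terrain" as ASCII shades, from low ground to peaks.
shades = " .:-=+*#"
row = "".join(shades[int(height(i * 0.15, 0.5) * len(shades))] for i in range(60))
print(row)
```

A few dozen lines like these can describe an endless landscape, which hints at how a whole scene can fit where a single stored image never could.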

| Source: Pouet.net |


Why We Need Audiophiles

In the past, some people have asked me if I was an audiophile, simply because I have a good set of cans, a good audio card, and also happen to stick to lossless formats for my collection. But, I’ll be the first to admit that I’m the furthest thing from being an audiophile. Just reading a review like Rory’s is enough to reaffirm the fact that I simply know nothing when it comes to quality audio.

You might feel like you don’t need to be an audiophile or have expensive equipment to enjoy music, and you’re absolutely correct. But another good fact to realize is that even if we aren’t audiophiles, we can thank those who are, since they’re undoubtedly the reason that (affordable) audio equipment nowadays is so good, not to mention music production in general as well.

Gizmodo believes we owe a lot to audiophiles, and came to realize this after paying a visit to Michael Fremer, an editor for Stereophile magazine. Although Michael has hundreds of thousands of dollars worth of equipment in his living room, he certainly doesn’t believe that anything like that is required. In fact, he even states that folks who build a quality system for $3,000 – $5,000 are going to achieve “85” (out of a hundred) when it comes to overall audio quality. Getting those extra few percentage points and decimal places is not an inexpensive affair.

But would you believe that despite his equipment, he prefers music on vinyl? There are a few good reasons for it, and many true audiophiles feel the same way. And if you think that your 256 kbit/s audio is adequate, don’t go talking to Michael about it! Indeed, audiophiles may be the fussiest people around, but it works out in our favor in the end, that’s for sure.

We play my solid 256kbps VBR MP3 of “Heroes” off my iPod; it sounds like shit. Free of pops and crackles, yes, but completely lifeless, flat in every way. This is the detail that matters: Audiophiles are basically synesthesiacs. They “see” music in three-dimensional visual space. You close your eyes in Fremer’s chair, and you can perceive a detailed 3D matrix of sound, with each element occupying its own special space in the air. It’s crazy and I’ve never experienced anything like it.

| Source: Gizmodo |


GO OC’s North American Final to be Held Next Weekend

Just a few weeks ago, I posted a link to an online magazine devoted to both overclocking and of course, overclockers. In that post, I mentioned that the hobby of overclocking isn’t slowing down, and in fact, it seems to be growing at a very rapid pace. Proof of this is seen with sponsored overclocking events, and if there’s one company to throw their weight behind some of the biggest overclockers around, it’d be Gigabyte.

Their GO OC (Gigabyte Open Overclocking Championship) has spawned many different events so far, with the final set to be held in Taipei alongside Computex. To help gear up for that, Gigabyte, with the help of a few select sponsors, has been holding events all around the world, essentially to cherry-pick the best overclockers in the world to appear at the final.

Between March and now, Gigabyte has been wrapping up these events for finalists, with only one left, which will be held next weekend in California. We’ll be there to report from the event, where we can hope some world records will be broken. If not, there are sure to be records broken in June at Computex. For a lot more information about the event, head on over to the official site. The equipment that each overclocking team will be using is all listed there, alongside photos from previous events.

As an engineering powerhouse, GIGABYTE sees the need to bring world-ranked and the most extreme power users together on a yearly basis to further energize the OC community, and provide the most talented power users an opportunity to meet face to face, share their know-how and their point of views on how GIGABYTE strive for on engineering excellence and producing top-of-class motherboards and graphics cards catered to enthusiast crowd in the future.

| Source: Gigabyte Open Overclocking Championship |
