Tech News

Upcoming Trend in Notebooks: Ultra-Thin and Ultra-Low-Voltage

It’s been discussed numerous times in the past that netbooks have been one of the biggest innovations in mobile computing in the last while, and that doesn’t look to change right away. Given their price points (and this economy), they’re an extremely attractive option for anyone. It’s not just the price, though. The fact that they’re competent, small and tend to have great battery-life helps things also.

But what about those who want something a bit larger that has great battery-life, but don’t want to spend over $2,000? Well, they probably buy nothing, because the options are slim, or non-existent. The best 12″ – 13″ offerings on the market cost around $3,000, and it goes without saying, that’s not a realistic purchase for most people. Intel CEO Paul Otellini expects things to change later this year though, with the help of the company’s upcoming CULV mobile processors.

An acronym for “Consumer Ultra-Low-Voltage”, CULV processors are to be placed in between Intel’s bare-minimum offering, the Atom, and their regular mobile solutions. So, huge performance wouldn’t be there, but it would be more than sufficient, and with that power we’re given, battery-life should be better than what we’re seeing today. During the first quarter earnings call, Otellini said, “I think you’ll see those at very attractive price points”. Sounds good to me.

We’ll have to wait a few months before we see these processors launch, but if it means that we can get ~12″ ultra-thin notebooks with great battery-life for a reasonable price (<$1,250), it will be a huge win for consumers. Well, at least those who like to travel and not have to worry about battery-life.

During Intel’s first-quarter earnings conference call Monday afternoon, Otellini had a surprising amount to say about Intel’s upcoming consumer ultra-low-voltage (CULV) processors, designed to fit into future ultrathin laptops that are expected to be priced significantly below $1,700-and-up luxury laptops such as the Apple MacBook Air and the recently-introduced Dell Adamo. The category of upcoming CULV-based laptops has been described by some observers as the MacBook Air for the masses.

| Source: Nanotech: The Circuits Blog |

Google Loses up to $1.65 Million Per Day on YouTube

I’d be hard-pressed to believe that anyone who visits our site hasn’t also visited YouTube at least once in the past month. In fact, I’d believe that the vast majority have visited in the past week. YouTube has become one with the Internet, and even if you don’t go there of your own volition, you probably have friends who help you wind up there anyway.

So with that in mind, is it any surprise that Google actually loses upwards of $1.65 million per day on the service? Believe it or not, it looks to be the truth, and sure, “it’s Google”, but that’s still a staggering amount of money. So the question must be asked. When Google purchased the video sharing site in 2006, is this how they pictured things would be in 2009?

I’m doubtful. Apparently, they’ve yet to figure out a way to generate real revenue, and like other web services, such as Facebook, it seems like you need to first lose a lot of money to make any of it back. The uncertainty is the future of these services, and how they’ll change. Google’s not going to continue essentially giving away millions of dollars each day simply to benefit the viewers, but I also can’t guess what could be done otherwise. Paid subscriptions are one idea, but that’s another avenue of revenue that’s difficult to master. It will be interesting to see even the next year how things will play out for the monolithic web service.

The average visitor to YouTube costs Google more than a dollar ($513 million to $663 million in estimated losses divided by 375 million unique visitors). In effect, Google is paying you to enjoy YouTube videos. In return, it gets the chance to show you some advertising. But adoption of those big-ticket items (YouTube sells homepage roadblock ads at $175,000 per day and branded channels at $200,000 apiece) has been limited, and Google AdWords image advertising remains the primary revenue source for YouTube.

| Source: Internet Evolution |
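The per-visitor figure in the excerpt above is simple division, and it can be checked in a few lines of Python. This is only a rough sketch using the numbers quoted in the post; the function name and the 365-day year used to annualize the daily figure are assumptions made for illustration.

```python
# Figures quoted in the post above; the names are illustrative only.
LOSS_LOW_USD = 513_000_000      # low end of the estimated annual loss
LOSS_HIGH_USD = 663_000_000     # high end of the estimated annual loss
UNIQUE_VISITORS = 375_000_000   # unique visitors used in the excerpt
DAILY_LOSS_USD = 1_650_000      # the "$1.65 million per day" headline figure


def cost_per_visitor(annual_loss_usd: int, visitors: int) -> float:
    """Return the estimated annual loss attributed to each unique visitor."""
    return annual_loss_usd / visitors


low = cost_per_visitor(LOSS_LOW_USD, UNIQUE_VISITORS)
high = cost_per_visitor(LOSS_HIGH_USD, UNIQUE_VISITORS)

# Assumption: a 365-day year when annualizing the daily figure.
annualized = DAILY_LOSS_USD * 365

print(f"Per visitor: ${low:.2f} to ${high:.2f}")
print(f"$1.65M per day, annualized: ${annualized / 1e6:.0f} million")
```

Under those assumptions, each visitor works out to roughly $1.37 to $1.77, and the headline daily figure annualizes to about $602 million, which sits inside the quoted $513 million to $663 million range.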

Taking a Trip Through 31 Years of CPUs

I’m sure I’m not alone in thinking that sometimes, it’s a bit easy to take things for granted, when in reality, things are amazing. Take flying, for example. It’s an incredible feat to raise someone off the ground and fly them from one location to the next, but nowadays, it’s about as typical as hopping on a bus. Then there’s CPUs, and computers in general. Even just 10 years ago, things were so modest compared to today, so sometimes, it’s good to take a look at where we’ve been, to truly appreciate where we are now.

I remember when the first 1GHz processor came out… it was mind-blowing just to picture the amount of performance available. Nowadays, we’re almost reaching the same frequency in our smart phones! Times have sure changed, and to help put things in perspective, Maximum PC has posted a detailed article which takes a look at the past 31 years worth of CPUs, starting with the Intel 8086, a chip that boasted an amazing 29,000 transistors!

The picture below is one CPU that immediately caught my eye, for a few reasons. First, could you just imagine a CPU today that has an OS branding etched into its surface? Second, the same CPU became the first to implement an odd naming scheme, one that was actually used to give the customer a rough idea of where it stacked up against the competition. In this case, AMD’s chip competed with Intel’s Pentium 75, so the name was suffixed with -P75. Such things seem so silly nowadays, but little did we anticipate the other naming debacles that would occur over the years, and not just with CPUs.

The 386, which was later named 386DX to avoid confusion with a lower cost 386SX variant that would debut three years after launch, initially ran at 16MHz and, once again, would eventually double in speed to 33MHz. It also doubled the number of transistors from its predecessor to 275,000 and was Intel’s first 32-bit processor. The 386 could address up to 4GB (not MB) of memory, could switch between protected mode and real mode, and added a third ‘virtual’ mode, which allowed the execution of real mode applications that were unable to run in protected mode.

| Source: Maximum PC |
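The “4GB (not MB)” figure in the excerpt follows directly from the 386 being a 32-bit design. As a quick sketch, assuming 32-bit addresses with one byte per address (the function name is made up for illustration):

```python
ADDRESS_BITS = 32  # the excerpt calls the 386 Intel's first 32-bit processor


def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses, assuming one byte per address."""
    return 2 ** address_bits


total_bytes = addressable_bytes(ADDRESS_BITS)
total_mib = total_bytes // 2**20   # mebibytes
total_gib = total_bytes // 2**30   # gibibytes

print(f"{total_bytes:,} bytes = {total_mib:,} MiB = {total_gib} GiB")
```

Each extra address bit doubles the reachable memory, which is why a move to 32-bit addressing lands on 4GB.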

Apple’s $79 iPod shuffle Costs $21.77 to Produce

One common complaint from Apple naysayers is that the brand carries a luxury tax, and this is a point that even Microsoft is happy to point out in their most recent run of commercials. But, like other luxury items, customers believe the quality is there. The same rule can apply for things like cars, houses, watches, pens, et cetera. Where Apple is concerned, many users consider the design of the machines to be worth the cost-of-entry, in addition to the robust OS.

Many times though, if a product carries a huge price tag, you can be sure that the cost to produce it wasn’t cheap either. A Rolls Royce may cost $300,000, but the amount of manpower and materials to be put into one is staggering. There’s no 50% profit margin there. But with Apple, it’s a different story. Would you believe, for example, that their $79 third generation iPod shuffle costs a mere $21.77 to produce?

The only word I can think of is “wow”. If Apple earned $200 on a $1,000 MacBook, that would impress me, but the delta between the production costs of the shuffle and its SRP is just staggering! I’ll be the first to admit that I believe Apple’s products are over-priced, but let’s face the facts… many Apple customers are pleased with the quality, and if a company can deliver that kind of reputation while keeping huge profit margins like this, then kudos.

The smaller the component cost as a percentage of price, the higher the potential profit. This suggests the per-unit profit margin on the shuffle is higher than on other iPod models. The component cost for the first iPod touch released in 2007, for instance, amounted to about $147, or about 49% of its $299 retail price. The component cost of the third-generation iPod nano, also released in 2007, amounted to about 40% of its retail price.

| Source: Business Week |
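To put the shuffle next to the iPod touch figure from the excerpt, the same “component cost as a percentage of price” calculation can be sketched in Python. This assumes the $21.77 figure is a component cost comparable to the $147 quoted for the touch, which is how the excerpt frames it; the function name is illustrative.

```python
def component_share(component_cost: float, retail_price: float) -> float:
    """Component cost as a percentage of the retail price."""
    return component_cost / retail_price * 100


# Prices and costs as quoted in the post and excerpt above.
shuffle_share = component_share(21.77, 79.00)   # third-generation iPod shuffle
touch_share = component_share(147.00, 299.00)   # first iPod touch (2007)

print(f"iPod shuffle: {shuffle_share:.1f}% of retail price")
print(f"iPod touch:   {touch_share:.1f}% of retail price")
```

That puts the shuffle at about 27.6% against roughly 49.2% for the touch. As the excerpt says, this only indicates the potential profit, since component cost is not the whole cost of selling a product.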

Nikon Announces 12.3MP D5000 D-SLR, Includes Vari-Angle LCD

When I saw a rumor about a potential D5000 D-SLR from Nikon last week, all I could do was chuckle and ignore it. I mean… D5000? As if Nikon would slap a name like that on one of their cameras. Well, joke’s on me, because it’s true. I won’t get into the fact that seeing such a large number on the small badge is a little strange, because aside from that oddity (which happens to be my own opinion), this is actually a sweet camera.

At its $730 USD price-point, the D5000 (argh!) settles in ahead of the D60, but well behind the D90, and where competition is concerned, Canon’s EOS 500D is targeted. For that $730, you’ll receive a body equipped with a 12.3 megapixel CMOS sensor and Nikon’s much-touted EXPEED image processor. With the help of that sensor, the camera outputs images at the resolution of 4288×2848, and like the D90 and above, the D5000 can shoot in RAW+JPEG Fine – a definite plus.

Two other major features shared with the D90 are live view and the ability to capture video at 720p. A feature unique to the D5000 (among Nikon cameras) is the “vari-angle LCD”, which allows you to pull out the LCD screen on the back and swivel it around. One huge bonus of this is that you’re able to turn the screen completely around to protect it when not in use. This feature should be most useful in situations where you have to hold the camera above your head, because if you’ve ever shot pictures of a crowd while being in the crowd, you’ll know how difficult it is to achieve the perfect angle.

All-in-all, this is a great camera for the money, and it impresses me just how many features are crammed into models nowadays at these price ranges. Taking a look back just a few years at what was available and then at the D5000 today, $730 almost seems like a relative steal. My D80 isn’t looking nearly as fancy as it used to!

Nikon has unveiled the D5000 upper-entry-level DSLR and we’ve prepared a full preview of the camera and its features. Sitting between the D60 and D90, the D5000 appears to go head-to-head with Canon’s EOS 500D, aiming at upgrading DSLR owners and experienced compact users. It combines many of the features of the D90 in a slightly smaller, simpler body and includes a 12.3 MP CMOS sensor, live view, 720p movies and a 2.7 inch, 180 degree Tilt/Swivel LCD.

| Source: Digital Photography Review |
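For anyone curious how the 4288×2848 output resolution lines up with the megapixel rating, here is a quick Python check. It uses only the resolution quoted above; the variable names are illustrative.

```python
WIDTH, HEIGHT = 4288, 2848  # output resolution quoted in the post

pixels = WIDTH * HEIGHT
megapixels = pixels / 1_000_000
aspect_ratio = WIDTH / HEIGHT

print(f"{pixels:,} pixels (about {megapixels:.1f} MP)")
print(f"Aspect ratio: {aspect_ratio:.2f}:1")
```

The output images come to roughly 12.2 million pixels at close to a 3:2 aspect ratio, a little under the 12.3 MP sensor rating. One likely explanation, and this is an assumption rather than something stated in the post, is that sensor ratings tend to count some pixels that do not end up in the final image.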

Intel Issues X25-M Firmware Update, Upgrades Algorithms

Over the course of the past year or so, Solid State Disk solutions have been praised up and down for their ultimate speed, reliability, form-factor and more. Of all the SSDs on the market, one model to hog a lot of attention was Intel’s own X25-M, and for good reason. When launched, it offered incredible speeds not yet seen before (which have now been exceeded by other vendors), and where SSD storage is concerned, that happens to be fairly important.

But the mood was dampened a few months ago when PC Perspective published a report in which the editor noticed that the performance of his personal drive degraded over time, and the benchmarks proved it. The blame for this issue was assumed to reside with the algorithm used, and since fragmentation actually occurred on a sub-block level, typical software defraggers didn’t improve the situation, but rather made things even worse.

Intel followed up days after the originating article was published, and said that they were unable to replicate the issue. Well, it seems that didn’t last too long, because last night, Intel shot out an e-mail saying that the issue has been fixed, and that a new firmware update was made available for those interested. When I followed up to make sure that the problem was actually fixed and not simply reduced, I was told, “The issue reported by PCP has been fixed completely”.

To download the fix, you can go here. If you require support for whatever reason, you can refer to the support list here. I’ve flashed the X25-Ms we have here, and it was the simplest firmware update I’ve done in a while. All you need to do is burn an .ISO image to a CD, boot up and follow the on-screen instructions. Five minutes, and the problem is no more (at least we hope).

“So, if a drive is in what previously seemed to be a permanently degraded state (as discussed, we still feel this is highly unlikely for a client PC user), and a user installs the new firmware they will feel an instant improvement for any sequential operations, which will get better in time as the drive cleans itself up further. This firmware will also prevent the user from getting into such a drastic state of fragmentation, and generally help ensure the sequential write performance is as good as it can be at any moment. This change really has no significant impact on random performance.”

| Source: PC Perspective |

Intel’s Nehalem-EP Dual-Core Chip Has a Secret

During Intel’s fall 2007 Developer Forum, a few cool new technologies were unveiled, including the much-hyped CPUs built using a 45nm process. During the same period of time, AMD also had a few unique announcements of their own, including the triple-core Phenom X3s, which were to come out a few months later. I clearly recall sitting in a San Francisco hotel when I first learned of it, and my first thought was, “What the…?”

Of course that was back then. After actually using an X3 chip, you can begin to understand why they are worthwhile, especially for the money. But when AMD first made their announcement, many people scoffed at how they were created. Essentially, an X3 chip is an X4 chip that didn’t quite live up to its potential. So, one core is simply disabled and it’s packaged as a Triple-Core. Simple enough.

Back to IDF ’07. After Paul Otellini’s keynote, he held a Q&A in another room in the Moscone Center, and when questioned about AMD’s X3 chips, he said something to the effect of, “We’d rather release processors with working cores.” That certainly got the point across. What’s interesting about this now is something that AMD has caught.

When Intel released their server Nehalems a few weeks ago, they quietly unveiled the first-ever Dual-Core processor built on that microarchitecture. As AMD now points out, that Dual-Core is created from a Quad-Core, which means two cores had to be disabled. How exactly this works, I’m unsure, but given the fact that yields are a bit lower with the higher process, they may very well wind up with a fair amount of faulty chips to make into Dual-Cores. I’m quite sure we won’t be seeing a Triple-Core from Intel anytime soon, however.

There’s another oddity, though. While AMD repositions their faulty Quad-Cores as faster Tri-Cores, Intel’s Dual-Core E5502 is actually the slowest of the entire bunch. Figure that one out.

Intel publicly jeered AMD triple-core desktop processors with one core “defeatured,” yet they are apparently “defeaturing” two cores for their new datacenter darling. Perhaps no one wrote this story because the press now “get it” that this is just good, smart business in making a complete product family from a monolithic quad-core. But let’s just hope this remains a tri-lateral “agreement” honored by all involved parties: Intel, AMD, and the news media.

| Source: AMD Unprocessed |

Game Developers on Gold Selling

Aside from the obvious addictive nature, there is another real downside to any type of MMO game: gold sellers. For the uninitiated, gold sellers are companies who sell in-game currency and even accounts, for real-life cash. This is a reality that’s either loved or hated, and it’s easy to see things from the perspective of both sides. If one thing’s for certain though, it’s that gold sellers skew in-game economies, and that’s the factor that personally bugs me most about it.

Eurogamer has just wrapped up a four-part series on the investigation of gold sellers, and they’ve received perspectives on the state of things from gamers, the gold sellers themselves and also the game developers. After reading, you’ll likely have a newfound appreciation for what the game publishers have to deal with, because as it appears, this is not a simple problem to deal with.

One developer stated that the harder they push against botters, the stronger they become. He went on to say that if they keep pushing, eventually bots will be completely undetectable, making things even more difficult to deal with. Indeed, it’s a tough problem, and though some companies are doing interesting things to combat the issue, nothing seems foolproof.

I could write an essay on my personal thoughts on the matter, but to keep things simple, I’ll say that I think game developers need to revamp their thinking on how to design an MMO. Create it in such a way that gamers don’t feel the need to go purchase currency from gold sellers. That’s of course easier said than done, but I have no doubts it can be accomplished in a unique way. In my opinion, an MMO that completely lacks gold sellers will be the best one around. It’s just too bad that this seems impossible to pull off.

“Many people don’t realise that the companies selling services for World of Warcraft often target the players they’ve sold their services to,” argues the spokesperson. “Once these companies have access to an account, they will often turn around and sell the equipment and gold on the account or the actual account itself – if not immediately, then at some point down the line. In effect, players actually end up purchasing gold, items, or entire accounts stolen from other players.”

| Source: Eurogamer, Via: Slashdot |

OCZ Releases Intel XMP Notebook Modules

I doubt anyone would discredit the usefulness of a notebook PC, but they do certainly have their downsides. Performance, for one thing, never competes with the desktop, and it’s for obvious reasons. One particular area that has bugged me about this is with notebook memory. When we see DDR2-800 kits on the desktop with sweet 4-4-4-12 timings, it’s hard to look at the same frequencies with 6-6-6-12 timings on a notebook.

OCZ is working to improve the situation though, and is doing so with the release of what I believe to be the first Intel XMP-based SODIMM modules. XMP is a technology that enables slightly improved settings on a given memory set on an Intel platform. We delved into this in an interview conducted with Intel’s memory guru Chris Cox a few years ago, so we recommend you check that out if you want to know more.

Although pricing is unknown, the latest SODIMM kit from OCZ is likely to be the fastest available, thanks to the equipped DDR3-1066 speeds and 6-6-6-16 timings. By comparison, the rest of the competition is running 7-7-7-20 timings – a rather sizable difference given the frequency. This is of course available as a 4GB kit, so it sounds like they could be the perfect addition to your portable workstation! No word on availability, but it shouldn’t be too long before they hit the store shelves.

Designed to significantly increase performance levels of the entire notebook, these new modules feature Intel Extreme Memory Profiles (XMP), an exclusive set of SPD (Serial Presence Detect) settings that act as an integrated “plug and play” overclocking tool for enhanced memory performance. With a long history of providing the most innovative products to the enthusiast community, OCZ is excited to be among the first to take advantage of Intel’s interest and development of overclocking platforms extending beyond desktops to mobile gamers, enthusiasts, and professionals.

| Source: Official Product Page |

ATI Catalyst 9.4 Brings Bug Fixes, Improved OverDrive Tool

It hasn’t even been a full month since we saw the release of the Catalyst 9.3 driver, but here we are with 9.4. Given the short time-frame between each release, this one isn’t filled to the brim with new features, but there are a few things to point out. First is the revamped OverDrive utility, which AMD states will accurately determine the “best” overclocked Core and Memory frequencies for any HD 4000 series card.

We can assume that this revamped tool will be a little more accurate than previous versions, or else it wouldn’t have a reason to make it into the “what’s new” list in the driver’s PDF. Given that the HD 4000 series, especially the HD 4890, overclocks so well, it does make sense that the tool was given a little love. Of course, it’s still unlikely to appease the overclockers who don’t mind pushing their cards to their breaking point…but AMD does have to make sure they don’t go breaking things on people.

Also new in 9.4 are a few bug fixes, which include an issue with World of Warcraft, where flickering would occur when the Shadow setting was set to medium or high while using CrossFire. Other fixes include Google Sketchup’s blank screen issue, the ridding of artifacts for Age of Conan in DX10 mode and better support for HDMI connections using resolutions above 10×7.

That’s not all though, so you might want to check out the official release notes (PDF) to see what else has been altered. You can also download the latest driver from the AMD Game website here.

ATI Catalyst 9.4 includes a new ATI Overdrive auto-tune application finds over-clocked engine and memory values for ATI Overdrive supported ATI Radeon Graphics accelerators. This new added support is designed for the ATI Radeon HD 4000 Series of GPUs. We work hard to deliver the best platform solutions that consist of CPU and GPU. As the only company in the industry that can deliver both we have the unique opportunity to develop free software to optimize performance across AMD-based platforms.

| Source: AMD Blog |

Intel Celebrates 1-Year of Atom, Announces New Models

During Intel’s one-day Developer Forum in Beijing, the company celebrated the one-year anniversary of their Atom processor, while also announcing new models and other products. In a keynote given by Anand Chandrasekher, the Senior VP and General Manager of the Ultra Mobility Group, the first-ever live demonstration of upcoming Atom platform Moorestown was given, and from how it appears, this is going to be well worth waiting for.

Moorestown, a follow-up to the Menlow platform, features the huge promise of using 10x less power when in an idle state, which should do well to increase battery-life for any MID (Mobile Internet Device) – even netbooks. This improvement was brought forth thanks to new power management techniques, a new partition optimized for MID segments and of course, the use of the Hi-k manufacturing process sure doesn’t hurt either. Moorestown is still on track for a 2010 launch.

In the meantime, we’ll have two new Atom processors to fill the void, including the Z550 and Z515. The latter runs at 1.2GHz and is designed for use in smaller MIDs, while the Z550 is a speedy 2.0GHz offering, improving on the Z540 by 140MHz. Pricing was not announced, and neither were any other specs. In addition to these announcements, Anand went on to point out that additional OEMs were picking up on Atom, and notes that ultra-thin notebook designs (<1″ thick) are on their way.

Pat Gelsinger, Intel’s Senior VP and General Manager of the Digital Enterprise Group, went on to speak about the successful launches of Core i7 and Xeon 5500, and also talked about upcoming processors, such as the 32nm Westmere, which will feature a hybrid CPU/GPU design. The first mention of “Jasper Forest” was made here also. This Nehalem-based processor is designed for embedded and storage applications, so we’ll just have to wait and see what it has in store.

Finally, soon-to-be-retired Chairman Craig Barrett challenged the development community to put their expertise to good use to tackle some challenges presented in health care, economic development and the environment. His thoughts were all based on his travels to various countries (more than 30 a year!). Craig wrapped up his keynote by announcing the winners of the INSPIRE-EMPOWER challenge that he announced at last fall’s IDF. You can read a lot more about that specifically here.

Celebrating the 1-year anniversary of Intel Corporation’s introduction of its wildly popular Intel Atom processor family, Anand Chandrasekher, Intel senior vice president and general manager of the Ultra Mobility Group, introduced two new processors for Mobile Internet Devices (MIDs) and several other milestones during his keynote today at the Intel Developer Forum in Beijing.

| Source: Intel Press Release |

Acer Announces First ION-based PC, the AspireRevo

At January’s CES, NVIDIA impressed us so much with their ION platform, that we awarded it one of our Best of awards. Since that time though, the company has been rather quiet with regards to how ION was progressing, and the excitement was beginning to fade as no announcements had been made. That changes today though, with the unveiling of Acer’s AspireRevo, the first PC to use the ION platform.

Compared to a standard ATX chassis, the AspireRevo is 30x smaller, but it’s able to deliver 10x the graphical horsepower of similar Atom-based machines. The name “ION” is now more than just a name, as it’s become both a brand and the name of the included GPU… no “GeForce” here. The GPU is similar to those found in current MacBooks, however.

Despite its size, the AspireRevo features a fair amount of connectivity. For video, a VGA and HDMI port are made available, with DVI being accomplished with the help of an adapter included in the box. There’s no shortage of peripheral connectivity, as the back of the unit sports 4 USB ports and also the LAN port, while two more USB ports are found on the front and top, for a total of six. Also in the front are the audio connectors, eSATA port and also a memory card reader.

For the size and price-point, this machine is truly packed. NVIDIA stressed the fact that even though the machine draws a low amount of power compared to modest desktops, it’s powerful enough to a) run Windows Vista with Aero and b) handle high-definition playback. Users can either run HD content right off the machine itself, or use an external Blu-ray player and enjoy their movies that way.

It took a little while before we were able to see that ION was still alive and well, but with a big name like Acer backing the platform, it has a good chance of succeeding in the marketplace. Not to mention that at this price-point, adoption will be much easier for many people. For $300, you get a small PC that’s capable of regular desktop use and HD playback, includes tons of functionality and a copy of Windows Vista Home Premium. If that’s not a good value, I don’t know what is.

I’ve included the full specs of the machine and additional pictures in the thread.

Discuss: Comment Thread

Even With Windows 7, Consumers Can Downgrade to XP

When Microsoft launched Windows Vista, it became clear fairly quickly that it wasn’t going to unseat Windows XP as the dominant OS. Over two years later, that’s still the case, and nothing’s going to change. Because of how things have played out, Microsoft has extended Windows XP’s life multiple times, and it looks like things aren’t going to change at all with the launch of Windows 7.

Despite the hype that Windows 7 is receiving (and for good reason), it looks like that upon launch, customers will have the option of downgrading to Windows XP, just like they are now able to with Windows Vista. Of course, this is going to be on a per-vendor basis, and not everyone has to agree to this policy (from what I understand). The fact that these vendors could charge you for a downgrade remains a real possibility as well, since the same thing is currently happening with Vista.

I find it a little odd that this is still in their policy, however, because it should be assumed that Windows 7 is going to deliver the goods that people are looking for. If people know in advance that they can downgrade to a nine-year-old OS, it can make some a little skeptical. Either way, I guess it is good to have an option, no matter how strange it may be.

The plan, according to the spokesperson, is still to terminate all sales of Windows XP by June 2010 at the earliest. Microsoft announced this plan about a year ago. The spokesperson also declined to comment on, or reject the possibility that XP netbook customers would be given incentives to upgrade to Windows 7.

| Source: DailyTech |

Apple Completes iTunes Plus Upgrade, Introduces Variable Pricing

Last week, we talked about a rumor that said iTunes would be moving over to their variable pricing scheme on April 7, and lo and behold, it has happened. This would be about a week behind their original schedule (they originally said “by the end of March”), but you can at least now be confident that everything available through the store is available as an iTunes Plus track, meaning 256Kbit/s bitrates and no DRM.

As I’ve mentioned in the past, I’ve been a rather heavy iTunes user since the company decided to start offering DRM-free music, and so I’ve been stalking some of the changes as they’ve been happening, and some things I’ve seen happen are not pleasing. In the earlier days of purchasing music on iTunes, I accidentally bought a couple of tracks that were not iTunes Plus… about 10 total. As it stands today, I still have three DRM-infected tracks, and not one of them is available any longer.

So, it looks like I somehow got ripped-off there. In addition, those tracks aren’t the only ones mysteriously missing from the store, as over the past few months, I’ve kept a list of songs available on the service that I wanted to purchase once they went DRM-free, and rather than be DRM-free, they’re not available at all! Not good. But that all aside, the variable pricing is what most people are going to care about, and as it stands today, 30% of the top 100 tracks are $1.29 (Canadian version). The kicker? The same tracks are still available on Amazon.com’s music store for $0.99. Nice.

April 07 6:22PM PST Edit: It looks like Amazon has followed suit, as some tracks are now priced at $1.29. In their top list though, many songs are actually lower than $0.99, so it might very well work out to the consumer’s favor in the end. At least we can hope…

As promised, variable pricing has now been implemented at the iTunes music store. Already, we’re seeing most of the top 10 singles and 33 of the top 100 hitting the top price-point of $1.29 (encoded as DRM-free 256kbps AAC). Interesting as Amazon’s uncomfortably similar top 10 list has all these tracks priced at $0.99 (encoded as DRM-free 256kbps VBR MP3). A handful of tracks (nine in the top 100) do hit the higher $1.29 price further down Amazon’s list.

| Source: Engadget |

Nexuiz Continues to Get Better with Each New Release

If you consider yourself to be a hardcore PC gamer, chances are very good that you’ve played a fair share of first-person shooters, and there’s reason for that. Who doesn’t love some fast-paced action where you get (most often) to kill anything that moves? Talk about a great stress-reliever! But with the sheer number of such titles available at any given time, have you ever given thought to the free options that exist?

Take Nexuiz, for example. This game has been popular in the first-person shooter arena for a while, thanks in part to the fact that it’s both free and open source. That’s right. After fragging a few buddies, you can hop out and start modifying the code! Free games sometimes have a bad rep though, and it’s hard to disagree with the reasons. Due to the lack of commercial backing, most free FPS titles tend to be less-than-polished, and it’s too bad since many have some brilliant developers on-board.

Well, Nexuiz impressed us enough to earn itself a spot in our Top 10 Free Linux Games article in late 2006 (the game is also available for Windows and Mac OS X), and things just keep getting better. Version 2.5 was released just the other day, and I had to give it a go, and boy, was I pleased with what I saw. One huge beef I had with the game over the years was that the control never felt truly perfect… compared to a game like Quake III or something similar, the differences were clear.

I’m not sure what in particular has been changed, but the game plays like an absolute dream with 2.5, and I found the control to be spot-on with the weapons a bit improved also (it actually feels like I’m hitting someone now). The control isn’t the only thing affected though… the entire game client almost doubled in size! So to say it was an overhaul would be an understatement. If you’ve never given this deathmatch gem a try, definitely check it out. After all, it’s free and well-designed, and if fast-paced bloodshed is your bag, there’s not much to dislike.

Besides new maps, a new game-mode, and new weaponry, the game bots have also been enhanced in this first 2009 release. The bots now have better support for team working, bunny hopping, swimming, better way finding, and support for ladders. The Nexuiz bots will also use less CPU usage and maps should load faster. When it comes to performance improvements, Nexuiz 2.5 has its server/client communication completely rewritten. The benefit of rewriting and optimizing this network communication is that the consumed bandwidth has been cut in half!

| Source: Nexuiz, Via: Phoronix |


Roll-Your-Own Linux with SUSE Studio

One of the coolest things about Linux is the fact that there’s a ton of choice. In some regards, “Linux is Linux” is true, in that the backbone tends to be similar from distro to distro, but the truth is, there’s so much more choice where Linux is concerned than with any other OS. Don’t like how one distro is set up? Go for another! While some OSes look the same on everyone’s PC, having the option of different Linux distributions allows you to find one that suits you perfectly.

But what if things were taken a bit further? That’s what the SUSE project is hoping to do with their SUSE Studio roll-your-own distro website. Although the service is currently in a private alpha (sign-ups are available), it allows you to actually build your own distro, which means the ISO you burn to a DVD is one you took part in customizing.

That in itself sounds simple, but the level of customization is staggering. You first choose a “base” build, each with a different desktop environment, and from there, you can select which packages to build into the ISO, and you can even go as far as building the distro with applications not usually found in the SUSE distribution (via URLs). You can even allow it to prepare a MySQL database… insane.

Once your configuration is complete, you have the option to save as a DVD image (.img or .iso), and believe it or not… you can even save it as a virtual machine, for use with Xen or VMware. This service is designed for users who already understand Linux, but wow, the potential is incredible. I cannot wait to see this service go live…

Almost every aspect of the ISO is customizable, from the background of the boot splash, custom logo, adding users/password, running custom scripts during boot, memory size for virtual machine. It took me 5 minutes to make a custom ISO with minimum changes and another 10 minutes to build the ISO. Real life application of this service has so much potential that its amazing, not because it is unique (which its not), but how simple and fast it is to build your own custom Linux distribution.

| Source: SUSE Studio, Via: Linux Haxor |


The Overclocker – Online Magazine Dedicated to All Things Overclocking

Alright, I’m willing to go out on a limb here and take a guess that most people reading this have at one time overclocked something in their computer, whether it be the CPU, GPU or anything else. There’s just something about taking a stock-clocked product and making it go faster than it was designed to… it’s exciting. So exciting, in fact, that overclocking is much more than just a hobby for some people, and given how addictive it can be, it’s no surprise.

Thanks to a friend who linked to this in their Facebook profile the other day, I stumbled on an online-only magazine (which happens to be free) that’s dedicated to the world of overclocking, and features updates on the goings-on, profiles of the overclockers themselves, numerous interviews, event updates and even some product reviews (which focus mostly on stability and overclocking).

Though I don’t at all consider myself to be a big overclocker (I’m just big), I find it hard to look through one of these magazines and not get a little excited. Professional overclockers bring things to the next level, with LN2, dry ice and other sub-zero coolants, and if that doesn’t define hardcore, I don’t know what does. The magazines are well put together though, and if you don’t have a desire to overclock now, you might very well have one after reading through one.

At The Overclocker we aim to promote overclocking, overclockers, their communities and websites. We also want to bring them closer to the industry. Overclockers are fast becoming the most influential people in technology; they find out what breaks and make whole product lines faster and more reliable. They buy the best components, they tell everyone else what to buy and often work in very important technology jobs.

| Source: The Overclocker |


Our HD 4890 & GTX 275 Article Will be Posted Soon

As you sit there, sipping your morning coffee and browsing the web, you may notice an overwhelming amount of content surrounding ATI’s new Radeon HD 4890 and also NVIDIA’s GeForce GTX 275. Naturally, you’re going to be wondering where our content is, but don’t fret… it’s on the way. Because we received one of the cards exceptionally late (no names, but it wasn’t ATI), I felt like I had no choice but to push back the article until later today, so that’s what I’m doing.

Because both cards are going to (supposedly) retail for $249, a review of one without the other makes absolutely no sense, and since I received the second card just yesterday (no joke), I haven’t had the time up to this point to put it to a proper test. You can expect the article to be published before the day’s through though, and I promise it will be worth the wait.

As it stands right now, I can honestly say that both cards are quite exciting. They’re both excellent performers and happen to be quite a bit of fun to overclock (to say I was pleased would be an understatement). I’ll be tackling that a lot more in the article though, so I won’t spoil anything now. Stay tuned. I’m working on getting this article up as fast as humanly possible.


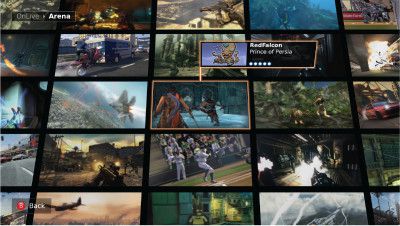

Is OnLive’s Technology Impossible to Pull Off?

“Too good to be true” is a term being thrown around quite rampantly regarding the video game service OnLive, which was first unveiled at the Game Developers Conference last week. For the uninitiated, OnLive is to be a service available for both the PC and Mac platforms, and can also be used with a stand-alone device on your TV, used for streaming video games across the Internet for play.

I won’t get too deep into what it does or how it works for the sake of space, but I recommend checking out the video on the official site which explains all. Essentially, OnLive is designed to replace a console or PC, and no matter how beefy your computer may be, this streaming service is designed to allow you to experience the latest and greatest in the video game world, with the help of your broadband connection.

Not surprisingly, there are many doubts surrounding this product, and some people are coming right out and calling the technology “impossible”. It’s easy to understand why. How can a game look as good through this service as it would on a regular console? After all, streaming video doesn’t look that great, so what makes this product any different? Well, co-founder of OnLive Steve Perlman disagrees with all the nay-sayers, and if you are quick to discredit their technology, you could very well be “ignorant”. Fair enough.

According to Steve, the reason their streaming technology is so good is because there have been “tens of thousands” of man-hours poured into it, and it’s not like any other video compression technology out there. He also says that while the typical latency between pressing a button on a gamepad and seeing the action in a game is around 80ms, OnLive will see a latency of around 35 – 40ms. Bold claims, that’s for sure. Despite the doubts I have myself, I’m looking forward to seeing it in action later this year if it launches on schedule. It should be quite interesting.

While Perlman used that term specifically in regard to a Eurogamer editorial—”Why OnLive Can’t Possibly Work”—the outlet isn’t the only one questioning whether OnLive can deliver high-definition, perceptually real-time video game experiences without a console or PC. He tells the BBC that critics have not yet used the system, nor do they understand the technology behind it.

| Source: Kotaku |


Isn’t it About Time We Say Goodbye to FAT?

There are few feelings quite like the experience when the time comes to build a new machine. Ahh, the sense of something brand-new, and not to mention the fresh circuit board smell! But while our machines may be faster than ever, and their capabilities mind-blowing, the truth is that deep-down, some of the technologies being used aren’t so new. Take the BIOS, for example. It’s been around for a while, but it’s still found on most PCs.

But there are some technologies that a few people hope to see perish, and lately, that tech seems to be the FAT filesystem. Although FAT has seen a few iterations, it first saw the light of day in 1980, when it started off as a 12-bit version, and today it sits as a 32-bit file system. And aside from the fact that the file system’s filesize limit is 4GB, it’s served us quite well over the years. But as a recent lawsuit between Microsoft and TomTom proved, it might be a good idea to say sayonara, at last.
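For anyone curious where that 4GB ceiling comes from, here is a minimal sketch. It relies on one detail not spelled out above: a FAT directory entry records each file’s size in a 32-bit unsigned field. The names `MAX_FILE_BYTES` and `fits_on_fat32` are made up for illustration.

```python
# Assumption: FAT directory entries store file size in a 32-bit unsigned
# field, so the largest size that can be recorded is 2**32 - 1 bytes.
SIZE_FIELD_BITS = 32
MAX_FILE_BYTES = 2**SIZE_FIELD_BITS - 1

GIB = 1024**3  # bytes in one binary gigabyte


def fits_on_fat32(size_bytes: int) -> bool:
    """Return True if a single file of this size can be recorded on FAT32."""
    return 0 <= size_bytes <= MAX_FILE_BYTES


print(MAX_FILE_BYTES)              # 4294967295
print(fits_on_fat32(4 * GIB - 1))  # True
print(fits_on_fat32(4 * GIB))      # False
```

In other words, the limit is one byte short of 4GB, which is why a DVD image or a large video file can refuse to copy to a FAT32 flash drive that has plenty of free space.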

The group really pushing the idea of giving up on FAT is the nonprofit Linux Foundation, and while it might be easy to discredit their claims given they’re pro-Linux, some good points are made. This lawsuit proved that, well, “Microsoft is still Microsoft”, and despite their claims for software openness, most people can see through the bull. I’m not totally against FAT, since it does work in pretty much any operating system, but an open standard for removable media sure wouldn’t be a bad thing.

“The technology at the heart of this settlement is the FAT filesystem. As acknowledged by Microsoft in the press release, this file system is easily replaced with multiple technology alternatives. The Linux Foundation is here to assist interested parties in the technical coordination of removing the FAT filesystem from products that make use of it today,” he wrote. “Microsoft does not appear to be a leopard capable of changing its spots. Maybe it’s time developers go on a diet from Microsoft and get the FAT out of their products.”

| Source: Ars Technica |


The Eee PC Gets an Optical Disc Drive

As we touched on in our news post for OCZ’s DIY Neutrino netbook the other day, this is one product-type that has really taken the world by storm. These full-featured computers are small and light, fast enough for most needs, normally include better-than-average notebook battery-life and of course, carry an easy-to-stomach price. For many people, a netbook is a perfect fit. But, there are a few obvious drawbacks, and ASUS takes care of one of the biggest ones with their upcoming Eee PC 1004DN.

What could that drawback possibly be? Well, if you own a netbook, you probably guessed it was the total lack of an optical disc drive. It might seem simple to use a PC without a disc drive, given the advent of flash drives and the like, but it becomes a chore when you want to either redo your computer, install something like Linux, or even install some other piece of software you have on disc. It can be done, but it’s time-consuming (unless you happen to have an external drive).

So, the 1004DN includes a CD/DVD burner, but the only press image that seems to be floating around is seen below. While it’s a nice shot, it conveniently doesn’t show the side, which makes it rather clear that this Eee PC isn’t going to be as thin as the others, and that’s no surprise given that was the problem all along. Compared to previous Eee PCs though, this one also adds the Atom N280 1.66GHz to the mix, in addition to 2GB of RAM. Sounds like quite a nice netbook actually, but let’s wait to see the pricing first.

ASUS says that its new 1004DN is the first netbook in the Eee range to offer an integrated Super-Multi optical drive allowing users to read and write to CDs and DVDs on the go. The machine also features an Express Card slot for expansion. The new Chicklet-style ASUS keyboard is used on the machine that puts the right shift key in the correct position and the machine uses the Super Hybrid Engine (SHE) to extend battery life.

| Source: DailyTech |


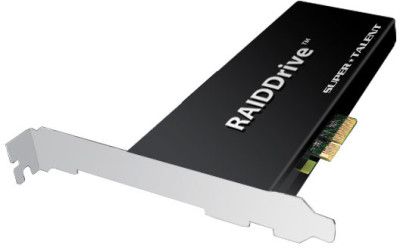

Super-Talent Announces PCI-Express SSD, “RAIDDrive”

You may recall last October when we first found out about Fusion-io and their PCI-Express-based SSD. At the time, it was hard to gauge the true worth of such a product, but it’s becoming a lot easier to do so now. Months later, the same company followed up with their ioDrive Duo, a drive that offers insane Read and Write speeds, and one heck of a price tag. They mean serious business.

Since then, OCZ also decided to join the fray, by showing off their Z-Drive solution at CeBIT last month. That drive appeared to be bulkier than Fusion-io’s solution, but it also happened to include 1TB of space, so the extra bulkiness is understandable. It apparently wasn’t only OCZ who wanted to follow suit, because Super Talent today announced their own solution, the “RAIDDrive”.

I won’t bother getting into what a boring name that is, but it does go to show that PCI-Express SSDs are here to stay, and must have some real use somewhere. Super Talent boasts 1.2GB/s Read speeds, and oddly enough, 1.3GB/s Write speeds. I don’t recall ever seeing a storage device that actually had higher Write speeds than Read speeds, but I can now check that off my list. Pricing, not surprisingly, hasn’t been released, but if you have to ask, just go buy a car instead.
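To put those speeds in context, here is a rough back-of-the-envelope sketch comparing them against the PCIe Gen 2.0 x8 interface named in Super Talent’s press release. The per-lane figures (5 GT/s with 8b/10b encoding) are the standard PCIe 2.0 numbers and are an assumption on my part; they don’t appear in the announcement, and real-world throughput will be lower once protocol overhead is counted.

```python
# Assumed PCIe 2.0 characteristics (not from the press release):
TRANSFERS_PER_SEC = 5_000_000_000  # 5 GT/s per lane
ENCODING_NUM, ENCODING_DEN = 8, 10  # 8b/10b: 8 data bits per 10 bits sent
LANES = 8                           # x8 interface, per the press release

# Usable bytes per second, per lane and for the whole link (one direction).
bytes_per_lane = TRANSFERS_PER_SEC * ENCODING_NUM // ENCODING_DEN // 8
link_bytes = bytes_per_lane * LANES

# Speeds claimed by Super Talent, in bytes per second.
claimed = {"read": 1_200_000_000, "write": 1_300_000_000}

print(f"Per lane: {bytes_per_lane / 1e6:.0f} MB/s")
print(f"x{LANES} link: {link_bytes / 1e9:.1f} GB/s")
for name, speed in claimed.items():
    print(f"{name}: {speed / link_bytes:.1%} of the link")
```

Under those assumptions, the claimed speeds use roughly a third of what the slot can theoretically carry, so the interface itself shouldn’t be the bottleneck.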

RAIDDrives support up to 2 TB of MLC or SLC Nand Flash memories, have a turbocharged DRAM Cache, and the RAIDDrive ES is fully battery backed to protect data in the event of power loss. The RAIDDrives connect through a PCIe Gen. 2.0 x8 interface and are capable of delivering sequential Read speeds of up to 1.2GB/s, sequential Write speeds of up to 1.3GB/s. More performance details will be unveiled in June. As an optional feature users will be able to configure the drives with an internal RAID5 capability to deliver an extra level of data protection for mission critical applications.

| Source: Super Talent Press Release |


Despite an ISP Shutdown, Spam Back to Ultra-High Levels

Once in a while, we hear news of something that should affect how much spam we receive, but by now, we’ve learned that that’s never the case. Whether it’s a huge spam dealer being murdered, or an entire ISP being shut down, spam will usually decrease for a while, but not for too long. According to new reports, although there was a noticeable decline in spam in November, we’re right back to original levels. Sweet.

Just how bad is it? Well, prepare to be depressed. Antispam company Postini calculates that a staggering 94% of e-mails are spam, and I don’t have a hard time agreeing there. For whatever reason, I tend to be a huge victim of spam, and I’ve estimated that between SpamAssassin’s work and what I actually receive, I’d get between 250 – 300 pieces of spam per day. That’s ridiculous. I have little doubt it’s even worse for others.

Aside from the annoyance factor of spam, I can’t help but think just how much money spam actually costs people. It would no doubt be far more than what the spammers actually earn themselves. At Techgage, we have a rather simple e-mail server, but even it deals with nearly ten thousand e-mails per day, and going by Postini’s 94% figure, 9,400 of them would be spam. That’s a lot of extra (needless) computing power. That’s just us… multiply that by all the e-mail servers in the world. I’m not even sure I’d want to know the answer to that one…

But this year, average spam volumes have increased about 1.2 percent each day. And there is evidence that spammers are now building more decentralized, peer-to-peer spamming botnets that no longer rely on the visible and vulnerable control nodes that they were using at McColo to guide their spam e-mail campaigns. “What the spammers have been using to rebuild is more technically advanced than what got taken out and is itself a more resilient technology,” Mr. Swidler said.

| Source: New York Times |


Intel’s Nehalem-EP Xeons Deliver Explosive Performance

When Intel launched their Core i7 processors last fall, we, like the rest of the editorial press, were blown away. Hit with the right workload, the Nehalem architecture blew past not only the competition, but Intel’s own Yorkfield as well. As we’d expect, things don’t change too much on the server side of things, given the fact that it’s servers that tend to excel where multi-threading is concerned. HyperThreading worked well in our tests, and it works even better in server applications.

Our friends at The Tech Report have taken the brand-new high-end Xeon CPU for a spin, the 3.2GHz W5580, and are left more than impressed. Thanks to the numerous architectural differences over Yorkfield, and improved design compared to the latest Opterons, the latest Xeon manages to clean house in every single test… well, all except the power consumption, where it draws more power at full load (to be expected from a 130W processor).

Where memory-intensive applications are concerned, the latest Xeon wins without worry. Thanks to the triple-channel memory controller, and the dropping of latency-plagued FB-DIMMs, the Xeon catches up to the Opteron and blows past it. As we found in our launch Core i7 article, complex algorithms are where the Nehalem architecture shines, and this article has the graphs to prove just how useful that is with server-based scenarios. Oh, but there’s one caveat. The $1,600 price tag. That’s simply the high-end model reviewed though. There are many more options available for you workstation/server builders working on a more modest budget.

Although we’ve seen Nehalem on the desktop, it’s even more impressive in its dual-socket server/workstation form. That’s true for several reasons, including the fact that this architecture was obviously designed with the server market in mind. This system layout translates particularly well into multi-socket systems, where its scalability is quite evident. Another reason Nehalem looks so impressive here is the simple reality that the past few generations of Xeons were handcuffed by FB-DIMMs, not only in due to added power consumption, but also in terms of memory latencies and, as a result, overall performance.

| Source: The Tech Report |


OCZ Releases Neutrino DIY Netbook

When ASUS first released the Eee PC 700 in the fall of 2007, no one could have predicted what was to come. It took no time at all before the PC took off and sold staggeringly well, and of course, we don’t have to get into all the competition that resulted. While “netbooks” have their share of downsides, they also have an overwhelming number of upsides, including battery-life, size and of course, price.

We heard for a while that OCZ was planning to also release a notebook, which was no surprise given that they have the capacity (thanks to their acquisition of Hypersonic in late 2007), but who expected it to be part of their DIY line-up? I didn’t, but it’s a nice surprise. Whether this is the first DIY netbook available, I’m unsure, but I haven’t heard of one up until now.

The Neutrino is similar to most other netbooks available, in that it comes in a 10″ form-factor, includes Intel’s 1.6GHz Atom N270 and features a 1024×600 resolution. Being that it’s DIY, the RAM, storage and operating system are left up to you. The naked model is going to retail for $269.99. With that price, you could add an inexpensive mobile hard drive and 2GB of RAM, and still come in way under the $400 price-point of most of the competition. Of course, the Windows XP license will still be up to you. More reason to try out Linux, maybe?

“There are many consumers that desire the blend of essential functionalities and an ultra compact form factor, and our new Neutrino Do-It-Yourself netbooks based on Intel Atom technology allow users to design and configure their very own solution tailored to their unique needs,” commented Alex Mei, CMO of the OCZ Technology Group. “The Neutrino DIY netbook puts the control back in the hands of consumers by allowing them to configure a feature rich netbook with their own memory, storage, and preferred OS into a reasonably priced go anywhere computing solution.”

| Source: OCZ Product Page |
