Tech News

HDMI 1.4 Standard Brings Revised Cable Badging

One of the biggest issues that exists today is confusion over technology. Most of you reading this site are not going to be tech illiterate, but we’re in the minority. Millions upon millions of regular consumers walk into brick and mortar stores on a regular basis and find themselves lost. Questions like “What kind of cable do I need?”, “Does my television even support it?”, “What kind of computer should I get for my needs?” and “Do I really need the ‘Ultimate Edition’ of Windows?” are common.

It’s important that regular consumers know what to look for when walking into a store, but because many don’t do research before doing so, they’re lost once they’re there. To help the issue, many companies over the years have tried hard to simplify their product line-ups, or provide information right on the product itself, so that people shouldn’t have to go to a sales representative and ask questions. Sometimes, these methods work decently well. Other times, they just add to the confusion.

One example of the latter is HDMI’s latest cable-branding standard. Yes, apparently there is more than one kind of HDMI cable out there, and it’s not just based on the version of HDMI. With the introduction of HDMI 1.4, the group of companies that owns the rights to the technology has unveiled what are supposed to be simpler badges to help consumers find what they need. But it’s fairly obvious that if there are five different badges, then it’s not that simple.

Of these five, three are “Standard” and two are “High Speed”. When I think of HDMI, I tend to think of “1080p”, because when high-definition content, like what Blu-ray offers, first became popular, most people used HDMI as the go-to connection. But believe it or not, the “Standard” cables top out at 720p/1080i, so for those who want 1080p, these cables just won’t do. Instead, you (and the majority of people, I’d imagine) will need “High Speed”, which fully supports 1080p/60 and 2160p/30, or anything less, like 720p.
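To make the badge logic concrete, here is a minimal sketch of how a shopper’s needs map onto the five labels. The function name `pick_cable` and its parameters are made up for illustration, and the resolution ceilings are the ones quoted above rather than figures taken from the HDMI specification.

```python
# Sketch only: labels and resolution ceilings come from the article,
# not from the HDMI specification itself.
STANDARD_MODES = {"720p", "1080i"}
HIGH_SPEED_MODES = {"1080p", "2160p"}  # High Speed also carries everything below


def pick_cable(video_mode: str, need_ethernet: bool = False,
               automotive: bool = False) -> str:
    """Return the HDMI 1.4 cable label that covers the requested video mode."""
    if video_mode in STANDARD_MODES:
        tier = "HDMI Standard"
    elif video_mode in HIGH_SPEED_MODES:
        tier = "HDMI High Speed"
    else:
        raise ValueError(f"unknown video mode: {video_mode!r}")

    if automotive:
        # The article lists a single Automotive label, in the Standard tier,
        # with no Ethernet variant.
        if need_ethernet or tier != "HDMI Standard":
            raise ValueError("no matching automotive label is listed")
        return "HDMI Standard Automotive"

    return f"{tier} with Ethernet" if need_ethernet else tier


if __name__ == "__main__":
    print(pick_cable("720p"))
    print(pick_cable("1080p", need_ethernet=True))
```

Since a High Speed cable also handles 720p and 1080i, anyone unsure could simply buy High Speed, which is part of why five badges feel like overkill.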

Up until this news, I had no idea there were different types of HDMI cables, so I’m not quite sure what exactly is required to allow 1080p and higher content, but it’s unfortunate we can’t just have one cable and one cable only. You don’t see multiple versions of Component or DisplayPort cables (except where changes are needed for length), so why complicate things with HDMI?

Five different versions of HDMI 1.4 will be available initially. Starting things off, HDMI Standard and HDMI Standard with Ethernet are the base versions of the new HDMI cables. They’re self explanatory, (if lengthy to type). HDMI Standard Automotive is the third, marking the last of the HDMI Standard series. The final two cable types are HDMI High Speed and HDMI High Speed with Ethernet. The need to add a second tier of cables to the lineup is a bit baffling, and the technical difference between Standard and High Speed HDMI cables is equally strange.

| Source: High-Def Digest |

Serious Sam HD Gets a Serious Release Today

Over eight-and-a-half years ago, a very serious game developer released an even more serious game called Serious Sam: The First Encounter. It certainly wasn’t unique because of its FPS likeness, but it was unique because the developer tossed a huge amount of humor in there and gave the player so much to kill, that you’d actually have to catch your breath every so often. It was intense.

Oddly enough, Serious Sam wasn’t the first title released by the Croatian developer, but rather it was Football Glory. Yes, I’m serious. The first Serious Sam came years later in 2001, followed by “The Second Encounter” in 2002. And of course, you probably remember Serious Sam 2, which happened to be one of the first games I ever reviewed for the site. It brought fantastic graphics (even today they’re more than acceptable), and even more humor and relentless action.

It’s been four years since that game’s release, so it’s understandable that fans of the series are anticipating Serious Sam 3, which thankfully has been in development for a couple of years, but has an unknown release date. To hold everyone over, Croteam released a hilarious video this past summer that advertised Serious Sam HD, a game that’s “more definitionier” and boasts performance of “6005 polygons per krundle.”

In the video, you see people who’ve had to play the SD version of Serious Sam, and if you don’t feel deep sorrow for them, then there’s something seriously wrong with you. If you were a fan of the original, then this game is a must-buy, if only to play through it again in all its high-definitiony goodness. It’s available today for the PC for $14.99 exclusively on Steam, with an Xbox Live Arcade version en route (the date hasn’t been announced). In celebration of the release, I’m going to be ultra-serious about everything I do for the rest of the day. Seriously.

The indie sensation Serious Sam: The First Encounter is reborn in glorious high-definition for legions of long-time fans and a whole new generation of gamers around the world. Featuring dazzling visuals and revamped design, gamers take control of the legendary Sam ‘Serious’ Stone as he is sent back through time to ancient Egypt to battle the overwhelming forces of Notorious Mental and the Sirian army.

| Source: Serious Sam HD |

PC Shipments Rise in 2009, But Revenue Declines

As if proof was ever needed that netbooks have become more than just a fad, Gartner has just released a report that tells of a nice 2.8% growth for PC shipments in 2009, while at the same time showing an 11% decline in revenue. In most any market, those kinds of results would be considered strange, but not here. Netbooks have exploded in popularity, which helps the growth, but they cost far less than a normal PC, hence the decline in revenues.

It’s an interesting result, to say the least. Even if each person out there right now purchased a desktop PC and a netbook, the PC shipment growth would of course increase, but the acceleration of revenue growth wouldn’t be the same as if netbooks were out of the picture entirely. The raw data of just how successful netbooks are might be surprising nonetheless though. Out of all the shipments in 2009, which will hover around 298.9 million, 29 million will be netbooks.

There’s no two ways about it… roughly 10% of such a market is huge, and as things are going, 2010 will finish off even better for the netbook, and the industry as a whole. While 298.9 million units shipped in 2009, Gartner expects that number to rise to 336.6 million for 2010… that’s huge. Of that, 196.4 million units are expected to be mobiles, 41 million of which will be netbooks (or other like devices).

With 162 million units shipped in 2009, mobiles account for 54.2% of total PCs shipped, and if the 2010 numbers hold true, that percentage will increase to 58.3%. Given the obvious acceptance of mobile computers, whether it be notebooks, netbooks or whatever else, these numbers aren’t too surprising. For a desktop enthusiast, the numbers can be a little depressing, but they don’t have to be. After all, it’s not unusual for people to own more than one mobile computer, but few people tend to own more than one desktop PC. Desktops, and especially high-end desktops, will hopefully be around for some time to come.
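For anyone who wants to check the percentages, the arithmetic is easy to reproduce. This is just a quick sketch using the figures quoted above (in millions of units); the `SHIPMENTS` table and `share` helper are my own names, not anything from Gartner’s report.

```python
# Figures quoted in the article, in millions of units.
SHIPMENTS = {
    2009: {"total": 298.9, "mobile": 162.0, "netbook": 29.0},
    2010: {"total": 336.6, "mobile": 196.4, "netbook": 41.0},
}


def share(part: float, total: float) -> float:
    """Percentage of `total` represented by `part`, rounded to one decimal."""
    return round(100 * part / total, 1)


def growth(old: float, new: float) -> float:
    """Percentage growth from `old` to `new`, rounded to one decimal."""
    return round(100 * (new - old) / old, 1)


if __name__ == "__main__":
    for year, units in SHIPMENTS.items():
        print(year,
              "mobile share:", share(units["mobile"], units["total"]),
              "netbook share:", share(units["netbook"], units["total"]))
    print("forecast unit growth 2009 -> 2010:",
          growth(SHIPMENTS[2009]["total"], SHIPMENTS[2010]["total"]))
```

Run as-is, this gives mobile shares of 54.2% and 58.3%, netbook shares of 9.7% and 12.2%, and forecast unit growth of 12.6% from 2009 to 2010.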

“Mobile PC shipments continued to get a significant boost from mini-notebooks,” said Mr. Shiffler. “We’ve raised our near-term forecast for mini-notebooks in response, but we have also narrowed our scenarios for them. Mini-notebooks are facing increased competition from other low-cost mobile PCs, as well as alternative mobile devices. They are rapidly finding their level in the market, and we expect their growth to noticeably slow as early as next year.”

| Source: Gartner Press Release |

Intel’s Initial “Westmere” Processor Models Leaked

Want in on some juicy details about Intel’s upcoming Clarkdale (Westmere) processors? Well, wait no longer, thanks to a German e-tailer leaking most of the information people care about, including model names, frequencies, TDPs and even pricing. As a quick refresher, Westmere will be Intel’s first, and also the industry’s first, 32nm consumer processor architecture. That alone makes them special, but along with that also comes an integrated graphics chip, snuggled up right beside the CPU on the same substrate.

This isn’t quite a “fusion” design, as the CPU and GPU are separate dies, but both are located side-by-side in the same package, resulting in less room being taken on the motherboard. The first Westmere chips will come in the form of Clarkdale, the desktop PC variant. All Clarkdale chips will be dual-core, and for all we know right now, models featuring an IGP may never move up to quad-core ranks. The reason is space, so if it did happen, we wouldn’t likely see it until 22nm. Fortunately, most people who are concerned with quad-cores are not concerned with integrated graphics, so this issue should affect almost no one. For the performance-hounds, the six-core Gulftown, based on Westmere, will be the next chip to look forward to.

At the low-end, Intel will be kicking off Core i3 with two models, the i3-530 and i3-540. What earns them their Core i3 moniker is the lack of the ever-popular Turbo feature, which raises a processor’s clock speed under load when thermal and power headroom allow. These two chips will be clocked at 2.93GHz and 3.06GHz, respectively, have a TDP of just 73W, and retail for $125.95 and $146.56.

On the Core i5 side, the company will be launching four models: Core i5-650 ($195), i5-660 ($213), i5-661 ($213) and i5-670 ($306). The latter is what Intel would consider its highest-end offering, thanks to its 3.46GHz clock speed, but the i5-661 will be the more appealing option for those craving the best graphics performance from an integrated chip, as the GPU is boosted from 733MHz to 900MHz. The rest of the line-up is also clocked at 733MHz, so the i5-661 will offer the best graphics performance out of all the launch Westmere chips. Of course, what matters is the performance in general, and that’s yet to be seen.

Coming to the i5 family of products, these processors support Hyper-Threading and you should see four threads in Task Manager. Depending on a model, Turbo will accelerate one core between 3.46 and 3.73 GHz, with the only odd model being i5-661. 661 carries almost identical specs if it wasn’t for the clock – integrated graphics works at 900 MHz and we would expect Intel to push this unit hard, proving that 900MHz clock is sufficient for acceptable 3D performance.

| Source: Bright Side of News* |

Smoking Around Apple Computers May Void Warranty

As tech enthusiasts, if a problem arises with our PCs, notebooks, or whatever other gadgets we might be using, it’s automatic to attempt a fix ourselves before going through the dreaded process of getting someone else to. After all, who on this earth would enjoy calling up tech support to talk to someone who has less tech knowledge than they do? Even worse is trying to convince someone over the phone that your PC or product does need to be repaired, and that it’s not just a “user error”.

But, it’s inevitable that from time to time, something will break that you can’t fix, so the only option is to get an official dealer to take a look at it. Though it’s an inconvenience, it’s better than nothing… unless you’re a smoker. As it turns out, Apple considers smoking to potentially degrade your desktop, mobile or whatever else, and as a result, it’s been refusing service on products where smoking around the product is evident. It doesn’t matter if you have the best Apple care plan available… Apple can refuse to even touch the thing.

I’m sure a lot of people will disagree with me here (probably all smokers), but I’m having a hard time disagreeing with Apple’s policy. I don’t believe that an authoritative study has been done to find out the effects that chemicals from smoking can have on electronics, but knowing what they can do to a human body, I have little doubt that there’s potential for degradation of components over time.

That’s not entirely the reason Apple is refusing smoke-ridden machines, though. It comes down to the fact that it’s unhealthy to handle PCs that have been smoked around for a long period of time, and the company considers it a hazard to its employees. Again, I can’t disagree there. I’ve seen a lot of computers that reside in houses where one or more people smoke, and they’re truly disgusting. I don’t believe that simply handling a smoke-caked computer is going to cause nearly as much damage as second-hand smoke would, but it’s disgusting nonetheless. The same could be said about PCs where food is dropped in between the keys, though, so it’s tough to decide what’s “too disgusting”.

What do you guys think about this? Is Apple in the right here, or the wrong? Should other companies follow suit? Or is it truly unacceptable and a huge insult to those who smoke? It should be mentioned that nowhere in Apple’s warranty policy does it mention that smoking can void the warranty, and I have a good feeling it wouldn’t, because the idea is a little foolish. It would essentially mean that no one could smoke in their own house, and that’s going to sound a little extreme to a lot of people. But on the other hand, if there’s a good reason for refusing smoke-caked computers, then it might be an accepted policy.

Today, April, 28, 2008, the Apple store called and informed me that due to the computer having been used in a house where there was smoking, that has voided the warranty and they refuse to work on the machine, due to “health risks of second hand smoke”. Not only is this faulty science, attributing non smoking residue to second hand smoke, on Chad’s part, no where in your applecare terms of service can I find anything mentioning being used in a smoking environment as voiding the warranty.

| Source: The Consumerist |

When Black Friday Deals Are Not Deals at All

If I had to choose just one activity I could freely give up and not have to do ever again, I’d ponder over “doing the dishes”, but I’d more realistically choose “shopping”. Shopping on occasion can be enjoyable, but as the days pass, I’m finding it harder and harder to ever find a minuscule gleam of joy from it. So, it’s a chore. I’m sure a lot of people agree with me, but most of those same people wouldn’t think twice about waking up ultra-early to take advantage of Black Friday deals, which is humorous as it offers the worst shopping experience ever.

As great as Black Friday deals can be, I’m still not willing to get up and inconvenience myself to save even a decent amount on an item. Most often, if you pay close enough attention, you can get great deals throughout the year on pretty much anything, so is it really worth being trampled over to save a couple of bucks? According to some reports, it’s looking like it’s going to be even less worth it this holiday season, as retailers are becoming just a bit sneakier.

I mentioned in our news a few weeks ago that retailers might be shooting themselves in the foot with current pricing, and it’s for the same reason that Black Friday this year is likely to be considered a flop compared to previous events. Because of the economy, stores have been offering great prices on a wide-range of merchandise for a while, so just how low could they possibly go for Black Friday?

There’s also the sneakiness factor that I mentioned above. It seems that some retailers are offering incredible deals in their fliers, but the fine print will reveal that they’ll have very low inventory. As an example, Sears will be offering a nice Samsung 40″ 1080p television for $599. That sounds great, until you realize that they’re likely to have only three on-hand. This is of course done to get people in the store, and if the item’s sold, the company rides on the fact that the person might buy something else in its place. Apparently this is a common practice, so if you are looking for the best Black Friday deals this year, make sure you read the fine print, because you might be wasting your time. Unless you are super dedicated of course.

I have to directly quote the author of the news article, because I couldn’t agree more: “Why can’t some of them use Black Friday as the first day of their new authenticity? It just might engender a little loyalty and a little trust. You know, for those other 364 days of the year.”

Why don’t stores offer a couple of truthful ads? Something like this: “Look, we’ve got three Samsung 40-inchers for $599.99. We won’t make any money on them. But we’re advertising them so that you can get excited. We promise there will be three of them and we’ll sell them to the first person who comes in and guesses the middle name of our handsome salesman, Brad. We think that’s fairer than having y’all fight, bite and claw outside our front door. Life is random. So are our deals.”

| Source: Technically Incorrect |

Top 20 Gadgets of 2000 – 2009

Top lists are far from rare, and as we’re getting close to 2010, I’m sure there will be countless more to look through in the next month or two (who knows, perhaps we might even have one). One posted at the website for Paste Magazine caught my eye, as it shows off the staff’s choices for the top 20 gadgets from the year 2000 to present. I don’t entirely agree with all of them, but overall it’s a solid list.

In the list’s 20th spot is the Bluetooth earpiece, which surprised me. I’ve always considered these to be instant douchebag creators, but the site mentions that while that could be the case, it’s also surely been responsible for many saved lives over the years, so how can you trash it? Interestingly, many of the choices on the list have to do with audio of some sort, whether it be music or real discussion.

As you’d expect, there are a fair number of USB devices, including the USB flash drive, which I’d have to agree is a great choice. I’d place it much higher than #18, though. If you have a flash drive, and you probably do, just imagine not having one for a second, and see how great that idea sounds. This form of storage was slow to catch on, thanks in part to the early-adopter pricing, but today, I know almost no one who’s without one.

Most of the other winners aren’t that surprising, and it’s not going to shock a single person on earth that the iPod landed in first place. Other notable mentions include the Blackberry, Slingbox, iPhone and Amazon Kindle. I thought hard about what my favorite gadget from 2000 or later would be, and I quickly realized that I’m not much of a “gadget guy”. Although I don’t use it as often as I’d like, I might have to pick Sony’s PSP. It’s mobile, has stellar graphics and tons of capabilities, and the list is growing all the time. The only real downside is battery-life… I can’t stand it!

Travelers need no longer preserve their novels’ final chapters for the plane ride home. The online superstore Amazon introduced its peculiar literary instrument in 2007, compacting the book and the bookstore into a single, grayscale device. The Kindle married an unlikely couple: literature and the electronic. It will remain one of the few gadgets to be never criticized for its brain-melting capabilities. And best of all, thanks to digital ink, it reads just like paper.

| Source: Paste Magazine |

Apple Patents Forced Advertising

As a website owner, I’m well-aware of just how important advertising is to both the livelihood of staff members, and to the health of the site itself. There are some types of ads I don’t quite agree with, which is why you don’t see them here on our site. There’s one kind of ad in particular that bugs me… “interstitials”, which preface an entire website or article with a full-page ad (usually featuring a “skip this ad” button).

But, even with that kind of advertising, I can understand the need, and despite it being an inconvenience, if the site’s worth it, then no problem. Plus, the fact that they can be skipped makes the issue a little bit easier to deal with. But what if you couldn’t skip them? Or any other type of advertisement for that matter? According to a patent filed by Apple last year, it looks like it could become a reality, although we’re not sure how soon.

The patent seems to target mobile computers, or where Apple’s concerned, probably handhelds, like the iPhone or iPod touch. The idea is this. An ad is displayed on your screen, and you have to prove to the device that you saw it before you can continue. There isn’t a simple “Skip this Ad” button, or if there is, it will be located on different parts of the screen with each ad, so you can’t simply guess where it will be.

There’s also the possibility that the ad will ask you a skill-testing question, with the answer being related to the ad. In this case, you actually would have to watch the entire ad if you wanted to gain control back over your device. It sounds insane, but the way things are going, it wouldn’t surprise me in the least if this became a reality soon. Depending on how it’s utilized, I might not be entirely against it.

If these ads mean that the device is free, then some people (not me) might prefer to take that route. After all, it’s easier to stomach the cost of a device via your time rather than your hard-earned cash. Plus, although I don’t think this will happen, at least soon, there’s also the possibility that this ad-type could be expanded to other areas of computing as well, such as desktop operating systems. What do you guys think about this? Would you deal with these types of ads to get a free, or cheaper device?

…forget about having a third-party developer providing you with an AppleScript to bypass this. Unless the advertisement “counts,” you’ll be locked out of using the device until you can prove you’ve paid attention. Apple even provides a sample menu bar, which will be haunting my dreams thanks to its Lucida Grande font and obvious Mac integration. This menu allows for the user to “preload” the timer of how long they can use their device without interruption — by watching multiple advertisements in advance.

| Source: PC World |

Proposed UK Internet Law Bordering on Asinine

For the most part, I’m not too pleased about the Internet situation we have in Canada, or at least in most of it. There’s a total lack of ISPs, so price-gouging goes on, making ISP services in most of the US look fantastic. After hearing about some new Internet law that’s on track to become instated within the next year or two in the UK… I’m starting to not mind my ISP so much. We all know of the hassle that the RIAA and MPAA have caused Internet-surfers over here, but compared to this new law in the UK, they look like pussycats.

The first major issue is the “three-strikes” rule, and it is just as it sounds. If you’re caught downloading “illegal” content three times, not only are you barred from using the Internet at your home, but your entire family will be as well. No surprise, there are also fines, but could you have ever guessed that they go up to £50,000? Are they serious? That amount of money could buy a seriously sweet Porsche… how on earth could this even be imagined, much less pushed through?

ISPs don’t escape this new law either. They would be required to monitor traffic going in and out of a home in an attempt to detect illegal activity. If the ISP refuses to partake in this procedure, it can be fined £250,000. It gets even worse, but I think the full article at bottom should be read to get all of the details. There is absolutely nothing good about this new law, and people have real reason to be wary.

Petitions don’t often accomplish the goal they set out to achieve, but because this is such an important issue, any Brit should head here and sign with their name and reason for opposing. No one can simply say, “I don’t pirate, so I have nothing to worry about.”, because that’s not the issue. You can have your Internet service cut off, and can be fined, without hard evidence. And even then, it’s not too difficult for someone else to use your Internet connection to download whatever they want. If this law is put in place, net security will become more important than ever.

The real meat is in the story we broke yesterday: Peter Mandelson, the unelected Business Secretary, would have to power to make up as many new penalties and enforcement systems as he likes. And he says he’s planning to appoint private militias financed by rightsholder groups who will have the power to kick you off the internet, spy on your use of the network, demand the removal of files or the blocking of websites, and Mandelson will have the power to invent any penalty, including jail time, for any transgression he deems you are guilty of.

| Source: Boing Boing |

Google Previews Chrome OS

In the world of software, new products of all stripes come out all the time, and rarely is a launch that exciting. But when the launch involves a brand-new operating system, and one that’s being developed by none other than Google, people start to pay attention. Yes, I’m of course talking about Chrome OS, an operating system that was only announced this past summer, and hasn’t been able to escape daily mention in the news ever since.

Google unveiled the OS for the first time at a low-key press conference yesterday, and it looks almost just as we’d expect. The entire OS is essentially a robust browser, with many tabs found at the top to access various parts of the system, or for various website tabs you might have open. It’s important to note that this isn’t a desktop OS, and it’s absolutely not meant to be, so such a simplified design might prove to be fine for most people running it on a netbook or similar device.

Think that might change? Don’t count on it. Apparently, Google is going to have rather tight hardware standards, so it’s not going to work without issue on everything. Interestingly, it’s not going to support typical HDDs, but rather SSDs. It will support x86 and ARM CPUs, however, which means it will support many mobile devices currently on the market. I wouldn’t count on these restrictions as being a bad thing, because Google has released the entire OS as open-source, so there’s little doubt that modified versions will come along for use on other platforms.

I’m quite interested to see just where Chrome OS is going to go, because Linux-based OSes for netbooks and the like have been done before, and people always seem to flock back to a Windows-based OS. But given the popularity of Google’s online applications, this Linux OS in particular might appeal to a much larger crowd of people, and might just put Google on a path to OS superstardom.

Interested in giving the OS a try for yourself? There’s actually a torrent available, with a VMware image, and since VMware Player is completely free, as tackled in our news the other day, you have everything you need to see what it’s made of. Note that this should be treated as nothing more than a preview, though, because that’s what it is. The final version still isn’t due until sometime late next year.

As far as going to market, Google’s not talking details until the targeted launch at the end of next year, but Chrome OS won’t run on just anything — there’ll be specific reference hardware. For example, Chrome OS won’t work with standard hard drives, just SSDs, but Google is supporting both x86 and ARM CPUs. That also means you won’t be able to just download Chrome OS and go, you’ll have to buy a Chrome OS device approved by Google.

| Source: Engadget |

Adobe Releases GPU Accelerated Flash Plugin Beta

There was a rather significant release earlier this week that I ended up forgetting about, but it’s worth mentioning now even if I’m a few days late (whoops!). Adobe released a beta of its long-awaited Flash 10.1 plugin. Before you say, “Who cares?!”, realize that this is the version that brings GPU acceleration into things. That’s right… the latest version (in beta) will allow you to run Flash videos accelerated on the GPU rather than the CPU.

Right now, the acceleration is limited to H.264 encoded videos, but that’s hardly an issue given just how popular that codec is. The GPU acceleration isn’t just for desktops either, but also notebooks, and it works across all three graphics card producers. Yup, even Intel has added the support via its latest driver. There’s a catch though, and it applies to all three graphics card vendors. The card you have must be relatively recent in order for the acceleration to work.

For ATI cards, both the desktop and mobile parts need to be part of the HD 4xxx family, or higher, while on the integrated side, HD 3xxx and higher is supported. NVIDIA supports pretty much every GPU that’s been released since the 8000-series, including ION. For Intel, graphics chips part of the 4 series chipset family are supported. If you want to check the full list, or get additional details, you can download the official release notes (100KB PDF).

To make sure that the GPU acceleration works, be sure to download the latest possible driver from your vendor. ATI’s latest, and stable, Catalyst 9.11 is fine, but for NVIDIA you’ll need to download the beta 195.55 driver. For Intel, version 15.16.2.1986 or later is required. To take a look at some test videos while learning something about the new Flash, you can go here. The image I’ve posted below can be clicked to go to an H.264 YouTube HD video, in true 720p. Other 720p Flash videos are likely to also work fine.
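If you’d rather check a driver version programmatically than by eye, a rough sketch follows. The minimum versions are the ones listed above; the `MIN_DRIVER` table and both function names are hypothetical, and how you actually read the installed version off your system is left out, since that differs per vendor and OS.

```python
# Minimum driver versions quoted in the article for Flash 10.1 beta
# GPU acceleration. The dictionary keys are my own shorthand.
MIN_DRIVER = {
    "ati": "9.11",             # Catalyst
    "nvidia": "195.55",        # beta driver
    "intel": "15.16.2.1986",
}


def parse_version(text: str) -> tuple:
    """Turn a dotted version string like '15.16.2.1986' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def driver_is_new_enough(vendor: str, installed: str) -> bool:
    """Compare an installed driver version against the quoted minimum."""
    minimum = MIN_DRIVER[vendor.lower()]
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    print(driver_is_new_enough("ati", "9.9"))        # older Catalyst release
    print(driver_is_new_enough("nvidia", "195.55"))  # exactly the minimum
```

Comparing tuples of integers matters here: a plain string or decimal comparison would rank Catalyst 9.9 above 9.11, which is backwards.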

I haven’t had the chance to test out the beta plugin yet, but I’d like to soon. I was hoping my “netbook”, the AMD-driven HP dv2 would be supported, but as it was built using an already-outdated GPU when I bought it this past spring, I’m out of luck. As far as I’m concerned, though, netbooks are the largest beneficiary of this feature. It’s frustrating to run a YouTube HD video only to have it lag like no tomorrow. I’m very interested to know if the latest drivers and plugin solve this issue, so if you test it out for yourself, be sure to relay your thoughts in our thread!

This public prerelease is an opportunity for developers to test and provide early feedback to Adobe on new features, enhancements, and compatibility with previously authored content. Consumers can try the beta release of Flash Player 10.1 to preview hardware acceleration of video on supported Windows PCs and x86-based netbooks. You can also help make Flash Player better by visiting all of your favorite sites, making sure they work the same or better than with the current player. We definitely want your feedback to help improve the final version, expected to ship in the first half of 2010.

| Source: Adobe Flash Player 10.1 Pre-Release |

ASUS Launches G51J 3D Notebook, Featuring NVIDIA’s 3D Vision

Think that NVIDIA’s 3D Vision is nothing more than a fad? According to a press conference held earlier this week by the company, that couldn’t be further from the truth, and it has a good handful of reasons to back up its claims. Unfortunately, a lot of what was discussed at the conference can’t be repeated until a later date, but the most interesting tidbit can be… mobile 3D Vision. That’s right, there exists a notebook out there that boasts such support. Is it a surprise that it’s from ASUS?

ASUS touts the G51J 3D as being a “3D Gaming Notebook”, for a few reasons. The first is that bundled in the box is NVIDIA’s 3D Vision kit, which includes both the wireless shutter glasses and receiver. Worried that 3D Vision requires a 120Hz display, something that’s never been seen on a notebook? Don’t worry, ASUS has taken care of that, and I believe that makes the G51J one of the first, if not the first, consumer notebook to feature a 120Hz display.

The question of just how useful 3D Vision is on a notebook has been debated, and in truth, it is quite hard to sell someone on the technology, especially since very few games natively support it. But at the press conference earlier this week, NVIDIA gave news of upcoming support from various game developers, so the support is indeed growing, and when a company like ASUS vouches for a technology like this, you know there’s real potential.

It’s important to note that for a game to look “cool” with 3D Vision, it doesn’t have to be natively supported. NVIDIA has recently been demoing Left 4 Dead 2 to show off the technology, for example, and that’s one game that doesn’t list support. When the first Left 4 Dead came out, I also tested it with 3D Vision, and though it took a few minutes to get used to, I did find that it added to the experience overall.

In addition to the 120Hz display and 3D Vision inclusion, the G51J 3D includes NVIDIA’s fast GeForce GTX 260M 1GB graphics card, Intel’s Core i7-720QM processor (1.6GHz w/Turbo up to 2.8GHz), 4GB of DDR3-1066, up to 1TB (dual drives) of HDD storage, a Blu-ray ODD, a 15.6-inch display (1366×768) and a whole lot more. The suggested retail price is $1,699.99, and we should be able to expect availability on sites like Newegg very shortly.

As the first notebook capable of producing realistic 3D visuals in games and videos, the new ASUS Republic of Gamers (ROG) G51J 3D is designed to deliver a truly immersive gaming and multimedia experience to gamers everywhere. Equipped with NVIDIA 3D Vision and bundled with specially designed 3D glasses, the ASUS G51J 3D – which sports an NVIDIA GeForce GTX 260M with 1GB DDR3 video memory – delivers adrenaline-pumping, edge-of-your-seat visuals anytime, anywhere.

| Source: ASUS Press Release |

Discuss: Comment Thread

|

AMD Posts Final 40th Anniversary Contest, NVIDIA Holding Charity Auction

Both AMD and NVIDIA shot over some information regarding events that each is holding, one being a contest, the other being an auction. Both are equally interesting, though. On the AMD front, the company is wrapping up its 40th anniversary celebrations by offering up some game consoles that use its technology, Microsoft’s Xbox 360 and Nintendo’s Wii. The company’s giving out 10 of each, and to win, it couldn’t be much easier.

You’ll first have to go to AMD’s official Facebook page and become a fan (original idea, I know), and then you’ll have to go to the “Giveaway” tab to complete the online form. It’s not a survey, but simply AMD’s way of collecting information (which would be needed to award the prize). You will be automatically opted into AMD’s marketing list, but the form states that you can unsubscribe at any time.

NVIDIA’s event might not be quite as exciting, but it’s far more important. It’s an eBay auction for a gaming PC valued at over $10,000, with 100% of the profits going to the Silicon Valley chapter of the Leukemia & Lymphoma Society. The PC is, as you’d imagine, jaw-dropping. It features Intel’s latest and greatest Core i7-975 Extreme Edition CPU, 12GB of Crucial RAM, 2x NVIDIA GeForce GTX 295 (four GPUs!), an ASUS Rampage II GENE motherboard, not one, but two Crucial 256GB SSDs, two Western Digital Caviar Black 1TB drives and… *catches breath*.

Alright, so there’s a lot of stuff here, including water-cooling, peripherals, the full-blown Ultimate version of Windows 7, a fleet of new games, a monitor along with NVIDIA’s 3D Vision and so much more. What makes the PC all the more interesting, though, is that it’s custom all over. It features a Danger Den box chassis that’s designed with superb airflow and water-cooling in mind, and it’s been lovingly caressed by the folks at Smooth Creations – you won’t see this paint job anywhere else.

Since the machine is valued at over $10,000, it wouldn’t be surprising to see the auction hit close to that, or even well over. When it comes to auctions like these, they’re impossible to predict. If you’re looking to go all out on a new PC, though, this looks to be a great way to do it. The best incentive might be the fact that the collective product is 100% tax deductible. Not only would you be supporting an important cause, but you wouldn’t have to pay taxes on it. Talk about a win/win!

|

Discuss: Comment Thread

|

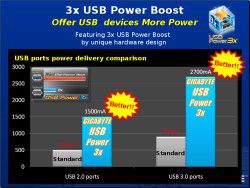

Gigabyte’s “333” Motherboards Bring S-ATA 3.0, USB 3.0 & 3x USB Power

Last week, Gigabyte held a press conference along with Marvell and Seagate to tout features of each company’s new products. On the Seagate side, there was of course the Barracuda XT, the company’s first drive to take advantage of the S-ATA 3.0 (6Gbit/s) spec. From Marvell came the SE9128 on-board chip, which is responsible for enabling S-ATA 3.0 on current Gigabyte motherboards that offer the support.

To coincide with the launch of both S-ATA 3.0 and USB 3.0, Gigabyte has relaunched a couple motherboards as revisions. To know if a board features these two technologies, you can simply look for the “A” addition in the model name. For example, the P55-UD6 becomes the P55A-UD6, and so on. Gigabyte calls the added technology on these boards the “333 Onboard Acceleration”, where the “A” in “Acceleration” is the reason for the A in the model name.

The last of the three represents “USB Power 3x”, which Gigabyte states is a unique feature at the current time. The 3x figure is literal, as in the slide below, you can see that the power output is indeed triple. The reason the company added this was because it will help negate the requirement of a power adapter for certain peripherals, such as external hard drives. It’s really hard at this point to understand just how unique this particular feature is, but once such high-powered USB 3.0 devices hit the market, testing will need to be done. You can be sure that if this is indeed as useful as Gigabyte says it is, then other companies won’t take long to follow.


Along with mentions of these technologies, Gigabyte included some light performance data as well, to help us gain a basic understanding of the improvements that can be seen. On the USB 3.0 front, and seen below, a massive 25GB HD movie would take only 70 seconds to transfer onto a perfect USB 3.0 storage device, down from 13.9 minutes on USB 2.0. Note that this figure is either theoretical or based on the best possible speeds out there, because 25GB in 70 seconds works out to 357MB/s, which current consumer SSDs can’t even manage.
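The arithmetic behind those figures is easy to check. The short sketch below assumes decimal units (1GB = 1,000MB), which is what the 357MB/s figure implies; the function name is just for illustration.

```python
def throughput_mb_per_s(size_gb: float, seconds: float) -> float:
    """Average throughput in MB/s, assuming decimal units (1 GB = 1000 MB)."""
    return size_gb * 1000 / seconds


# Figures quoted from Gigabyte's slide: a 25GB file, 70 seconds vs. 13.9 minutes.
usb3 = throughput_mb_per_s(25, 70)
usb2 = throughput_mb_per_s(25, 13.9 * 60)
speedup = usb3 / usb2

print(f"USB 3.0: {usb3:.0f} MB/s, USB 2.0: {usb2:.0f} MB/s, speedup: {speedup:.1f}x")
```

The USB 2.0 figure works out to roughly 30MB/s, so the slide is claiming a speedup of a little under 12x.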

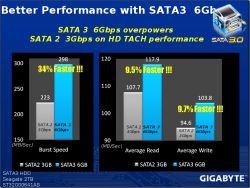

Of course, the presentation wouldn’t be complete without some S-ATA 3.0 benchmark results, and those are as we expected: “nice”. The differences between S-ATA 2.0 and 3.0 aren’t staggering on current-gen devices, but things should improve when even faster SSDs get out there, or HDDs with lower latencies. In a comparison of similar drives, the S-ATA 2.0 drive hit a burst speed of 223MB/s, while the S-ATA 3.0 drive hit 298MB/s. We also see boosts of 9.5% on the average read and 9.7% on the average write. Again, not major, but certainly not worthy of complaint, either.
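To put the burst numbers in context, here is a rough sketch of the interface ceilings. It assumes the 8b/10b line encoding that S-ATA uses, where every data byte costs 10 bits on the wire; the helper names are made up for this example.

```python
def sata_payload_mb_per_s(line_rate_gbit: float) -> float:
    """Usable bandwidth in MB/s, assuming 8b/10b encoding (10 line bits per data byte)."""
    return line_rate_gbit * 1000 / 10


def percent_gain(old: float, new: float) -> float:
    """Relative improvement of new over old, as a percentage."""
    return (new - old) / old * 100


sata2_ceiling = sata_payload_mb_per_s(3.0)  # S-ATA 2.0, 3Gbit/s line rate
sata3_ceiling = sata_payload_mb_per_s(6.0)  # S-ATA 3.0, 6Gbit/s line rate
burst_gain = percent_gain(223, 298)         # burst figures from Gigabyte's slide

print(f"Ceilings: {sata2_ceiling:.0f} vs {sata3_ceiling:.0f} MB/s")
print(f"Burst gain: {burst_gain:.1f}%")
```

The burst result is the only one that improves by about a third; the average read and write gains quoted above are far smaller, which fits the point that current drives, not the interface, are the limit.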

Motherboard vendor support for both S-ATA 3.0 and USB 3.0 has been great so far, so now it’s just a matter of waiting for the respective devices to launch so we can take full advantage of what’s given to us. It’s kind of a weird situation to be in, because both of these technologies are so fast, that the products to take full advantage just don’t seem to exist, at least not on the consumer side. Hopefully that means that USB 3.0 will enjoy a full life like USB 2.0 had.

| Source: Gigabyte 333 Onboard Acceleration |

Discuss: Comment Thread

|

Intel Divulges Information on TRIM for Linux

This past Monday, I made a news post that explained my upcoming plans to install an SSD in my personal PC, to help get a better understanding of the real-world benefits of using one on a day-to-day basis. Simply put, I’m tired of boasting about how great the SSD revolution is without actually using one outside of our benchmarking machines. Once I’m prepared to do the upgrade, the drive to be installed will be Kingston’s SSDNow M-series 80GB, based on Intel’s X25-M G2.

Since that post, I got in contact with Intel who clarified a few of the points surrounding the issue of using TRIM under Linux. Sure, this is a topic that bores most people, but as a full-time user of the OS, I care about it quite a bit, and I’m sure a lot of others out there are in a similar situation as me. And as frustrating as I’ve found tracking down the information to be, I’m hoping this investigative sleuthing will affect a lot more than just me.

Intel made a couple of points that cleared up a lot, starting off with the fact that TRIM is indeed alive and well in Linux, and it comes down to having the right software installed to take advantage of it. I was pointed to a PDF that explains how the command can be executed (section 7.10.3.2), and that’s all that’s needed for a software engineer to implement the feature, whether it be someone in charge of a distro, a piece of software, or a file system.

I was also told that it wasn’t only ext4 that currently supported TRIM, but Btrfs, GFS2 and XFS do as well, with more to be added later if the file system developers decide to add it (hopefully, they will). Past the file system, there are two things that need to be in place for TRIM to work: a TRIM-aware Linux kernel or an application capable of passing the command (like hdparm) and of course, also an SSD that supports it.

Intel also stated that it’s been working with Red Hat and upstream Linux developers to provide guidance on supporting the feature in their (and other) operating systems for its particular SSD. TRIM in general isn’t SSD-specific, however, so if the support is there, then any distro to natively support it should do so with any TRIM-capable drive. Sadly, Intel couldn’t state when the fruits of this guidance would be seen, but I’m hoping it won’t be too long before something pops up.

There’s still just one thing I’m a little confused about. How can we use TRIM now? After all, even Intel said that it’s supported, so where is it? Well, there’s hdparm (a Linux utility for reading and setting drive parameters, which can also run basic benchmarks), but when looking at the help file, this message made me a little wary: “For Solid State Drives (SSDs). EXCEPTIONALLY DANGEROUS. DO NOT USE THIS FLAG!!”. Can you blame me?
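For a safer first step than hdparm’s TRIM flag, a read-only check is possible on kernels that expose a `queue/discard_max_bytes` attribute under `/sys/block`. That attribute is an assumption here: as far as I know it only appears in kernels newer than the ones discussed in this post. The sketch below never issues a TRIM command; it only reads that file, and the device name `sda` is a placeholder.

```python
from pathlib import Path


def discard_supported(discard_max_bytes: str) -> bool:
    """Interpret the contents of a discard_max_bytes file; 0 means no discard support."""
    return int(discard_max_bytes.strip()) > 0


def device_supports_discard(device: str, sysfs_root: str = "/sys/block") -> bool:
    """Return True if the block device advertises discard (TRIM) support via sysfs."""
    path = Path(sysfs_root) / device / "queue" / "discard_max_bytes"
    try:
        return discard_supported(path.read_text())
    except (OSError, ValueError):
        # Attribute missing (older kernel, no such device) or unreadable contents.
        return False


if __name__ == "__main__":
    # "sda" is a hypothetical device name; substitute the SSD being tested.
    print(device_supports_discard("sda"))
```

A `False` result here doesn’t prove the drive lacks TRIM. It may only mean the kernel or the driver in use doesn’t report it.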

I think a project is in order. In the next week or two, I’d like to install Kingston’s SSD not in my personal machine, but in the benchmarking machine as a secondary drive. I’ll install Gentoo Linux on the primary SSD or HDD, so as to make it easy to wipe the drive entirely should I need to during testing. Benchmarking the clean SSD, dirtying the heck out of it, and then running a benchmark again should be a good enough method of seeing whether or not TRIM works. I’m hoping that’s not going to be easier said than done.

|

Discuss: Comment Thread

|

NVIDIA Teases with Photo of GF100 Running DirectX 11

Hot on the heels of ATI’s launch of the dual-GPU Radeon HD 5970, an NVIDIA employee posted a fun little image on his Facebook that shows off a GF100 card, also known as the first card to use the company’s Fermi core. That’s not what’s important. What is, is that the card is seen running the Unigine DirectX 11 benchmark, with all its tessellation goodness intact (you can tell tessellation is active by the spikes on the dragon). This is a good sign that the final silicon is right around the corner.

But… no matter how much NVIDIA tries to prove a point, there’s always a minor issue that causes the skeptics to question the validity of it all, and to be honest, I can’t blame them. The first problem is that this is a picture, not video, so there’s no real proof that it’s running at all. The second issue is that in the image, there’s another motherboard on a riser, with an audio card conspicuously installed, blocking out the view of the graphics card that could be running behind it.

Whether the picture is legit or not is up to you, but despite the things that work against NVIDIA in the photo, I’m going to believe that it is. At this point in time, I just can’t see NVIDIA putting out a “faked” photo after the issue with the mock card at Siggraph. Plus, we are at a point where Fermi cards are right around the corner, with a hopeful launch next month, or at the latest, very early 2010.

Aside from the benchmark being run, we can see that the GF100 card requires just two PCI-E 6-pin power connectors, which is nice to see given this is going to be NVIDIA’s highest-end offering for a while. ATI’s highest-end single-GPU, the Radeon HD 5870, is no different in this regard, but given that Fermi is supposed to be quite the power-hungry beast, seeing that we’re going to be able to forgo an 8-pin power connector is nice.
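The connector layout puts an upper bound on what the card may draw. As a quick sketch, assuming the usual PCI Express limits of 75W from the slot, 75W per 6-pin connector and 150W per 8-pin connector (these are specification ceilings, not measurements of this card):

```python
SLOT_W = 75  # assumed limit for a x16 graphics slot
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}  # assumed per-connector limits


def max_board_power(connectors: list[str]) -> int:
    """Upper bound on board power in watts for a given set of auxiliary connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


print(max_board_power(["6-pin", "6-pin"]))  # the layout seen in the photo
print(max_board_power(["6-pin", "8-pin"]))  # what swapping in one 8-pin would allow
```

Two 6-pin connectors cap the card at 225W in-spec, against 300W for a 6-pin plus 8-pin layout.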

| Source: NVIDIA Teaser Shot |

Discuss: Comment Thread

|

VMware Player 3 Allows Creation of VMs, Adds 3D Support

Last month, VMware released updated versions of its popular Workstation and Fusion virtualization software, for the PC and Mac, respectively. Of the new and updated features, we had full support for Windows 7, improved 3D rendering and a whole lot more. Seeing just what Fusion 3 packed in for Mac users, for an easy-to-swallow price of $80, I was a bit perturbed given the fact that the only solution for PC users is the $189 Workstation version.

I sent VMware’s Sr. Product Marketing Manager of the Desktop Business Unit, Michael Paiko, a couple of questions last week, including one asking why PC users don’t get a less expensive version of Workstation, like Mac users do. The response surprised me: “VMware Player 3 has the ability to create virtual machines and it includes user friendly features such as easy install, seamless desktop integration (Unity), multi-monitor support, and support for Windows 7 with Aero Graphics.”

Anyone who’s used VMware Player in the past would know that creation of virtual machines just wasn’t possible. It’s called “Player” for a reason, after all. I was a bit of a skeptic, but sure enough, after a download I saw that it was indeed possible to create virtual machines now, which puts Player on the same playing field as VirtualBox, which has allowed the same thing since its creation. Does VMware Player hold back important features to encourage upgrades to Workstation?

Not that I can see. The fact that Michael stated that Player supported Windows Aero gave me hope that it might support other 3D as well, and as seen in the screenshot below, that’s exactly the case. Google Earth ran surprisingly well, and even my modest MMORPG ran decently through VMware. You can also see that the VM is using four threads of the CPU, which is another new feature (up from two). Overall, I am quite impressed with my experience with the application so far.

VMware also states that Player is a far better solution than “Windows XP Mode” in Windows 7, because it has greater capabilities, and added features, such as 3D support. Now here’s the real kicker. I was complaining that PC users didn’t have an affordable version of VMware like Mac users do, but with Player 3, I’ve been proven wrong. The difference now is that the Mac version is $80, and the PC version is $0. There might be some features Fusion has that Player doesn’t, and we plan on investigating that in the near future, as our beloved Senior Editor and Mac fan Brett Thomas will be taking a hard look at Fusion 3, while I’ll see all of what Player is made of.

|

Discuss: Comment Thread

|

OCZ Unleashes Colossus 3.5″ SSD

At Computex this past June, OCZ unveiled an SSD unlike most others. Rather than stick to a simple 2.5″ frame, or toss a whack of chips onto a PCI-E card, the company’s “Colossus” drive is a 3.5″ solution that packs in more than one SSD and RAIDs them together, for insane speeds and densities. Since then, the drive has suffered multiple delays, but according to a newly-issued press release, they can be in owners’ hands very shortly.

The Colossus comes in four flavors: 120GB, 250GB, 500GB and 1TB. Regardless of the version you pick up, you’ll be able to enjoy top speeds of 260MB/s read and write. Each varies slightly in its IOPS performance and sustained writes, with the best choice for overall speed being the 250GB model, which has a sustained write of 220MB/s and performance rating of 16,100 IOPS (4k random).

To hit such speeds, OCZ has implemented a dual controller design, which essentially turns multiple internal drives into a RAID 0 configuration. Because of this, it would be imperative to make regular backups of important data, because if one of the internal drives fails (this would be ridiculously rare), the data would be truly lost. The company’s choice does boost the performance though, and it’s exceptionally drool-worthy.
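The RAID 0 trade-off can be put in numbers. The sketch below assumes each internal drive fails independently. The 99% per-drive survival figure and the count of two drives are placeholders picked for illustration, not OCZ specifications.

```python
def raid0_survival(per_drive_survival: float, drives: int) -> float:
    """Chance a RAID 0 array keeps its data: every member drive must survive."""
    return per_drive_survival ** drives


# Hypothetical inputs: 99% per-drive survival over some period, two internal drives.
single = raid0_survival(0.99, 1)
striped = raid0_survival(0.99, 2)

print(f"Single drive loss risk: {1 - single:.2%}")
print(f"Two-drive RAID 0 loss risk: {1 - striped:.2%}")
```

With those placeholder inputs the risk of data loss roughly doubles, which is why the backup advice matters more for a striped drive than for a single SSD.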

The Colossus drives are shipping to e-tailers now, and should be shipping to regular consumers within the next couple of weeks. Curious about pricing? If so, then these are probably not for you. They’re currently available for pre-order at ZipZoomfly, with the 120GB selling for $437.99, the 250GB for $826.99 and the 500GB for $1,530.99. It’s probably safe to say that we won’t be seeing the 1TB version readily available until these prices go down just a wee bit.

“The new Colossus Series is designed to boost desktop and workstation performance and is for high power users that put a premium on speed, reliability and maximum storage capacity,” said Eugene Chang, VP of Product Management at the OCZ Technology Group. “The Colossus core-architecture is also available to enterprise clients with locked BOMs (build of materials) and customized firmware to match their unique applications.”

| Source: OCZ Press Release |

Discuss: Comment Thread

|

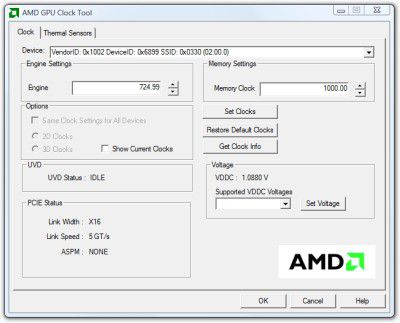

AMD’s GPU Clock Tool Simple, but Effective

In our review of Sapphire’s Radeon HD 5870 Vapor-X last week, I had a major complaint in our overclocking section. For some reason, on certain cards, the “Overdrive” overclocking tool bundled with ATI’s Catalyst Control Center is far too limiting. That card, for example, is clocked at 850MHz Core and 1200MHz Memory, and the tool only allowed a maximum boost of up to 900MHz Core and 1300MHz Memory. That’s not horrible, but as overclockers, we obviously want a little more breathing room than this.

What’s a little bizarre is that this limit can vary depending on the GPU itself. In our review of ASUS’ Radeon HD 5850 posted yesterday, I was surprised to see limits that spanned 475MHz beyond the reference Core clock, and 400MHz beyond the reference Memory clock. Compared to the limits we saw on the HD 5870, there are essentially no limits here. So that might be a little odd, but I found a work-around that makes everything better, regardless of which GPU you own.

AMD itself actually puts out a tool called “AMD GPU Clock Tool”, although not so officially. It’s not available on the company’s website at all, but rather can be found on various tech sites, such as techPowerUp. I’m uncertain why this is, but it could be that AMD doesn’t want people to download it off its website and kill its cards, though that would seem like strange reasoning. As you can see below though, it’s a simple tool, with the major benefit of expanded maximum clocks.

So where did AMD’s GPU Clock Tool get me? As mentioned in the review of Sapphire’s Vapor-X card, our top overclock was what the Catalyst Control Center limited us to, 900MHz/1300MHz. With this tool, though, I managed to push the card to 923MHz/1313MHz stable. Sure, that’s not a stark difference, but it is a difference nonetheless. If we had control over the voltage as well, I have little doubt that the card could be pushed even further.
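To see how much extra headroom that really is, here is a small sketch that expresses each overclock as a percentage over the card’s stock clocks. All clock values come from the text above; the helper name is just for this example.

```python
STOCK = {"core": 850, "memory": 1200}       # Vapor-X stock clocks in MHz
CCC_LIMIT = {"core": 900, "memory": 1300}   # Catalyst Control Center ceiling
CLOCK_TOOL = {"core": 923, "memory": 1313}  # stable result with AMD GPU Clock Tool


def gain_pct(stock_mhz: int, overclock_mhz: int) -> float:
    """Overclock expressed as a percentage increase over the stock clock."""
    return (overclock_mhz - stock_mhz) / stock_mhz * 100


for domain in ("core", "memory"):
    ccc = gain_pct(STOCK[domain], CCC_LIMIT[domain])
    tool = gain_pct(STOCK[domain], CLOCK_TOOL[domain])
    print(f"{domain}: {ccc:.1f}% via CCC, {tool:.1f}% via Clock Tool")
```

On the core, that moves the overclock from about 5.9% to about 8.6% over stock, while the memory gain is closer to one percentage point.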

| Source: AMD GPU Clock Tool |

Discuss: Comment Thread

|

Setting Up Wireless in Ubuntu is Hit or Miss

Earlier this month, I made a news post discussing some fresh changes unveiled with the latest version of Ubuntu, 9.04 (also referred to as Jaunty Jackalope). I’ve been using the latest OS on my notebook a fair bit since then, and I have to say, this is easily the best version of Ubuntu I’ve used to date, and it may well be the best version of an easy-to-deploy Linux that I’ve used to date as well. It installed easily, and has been working like a charm since.

Because my “netbook” doesn’t have an ODD, I installed the OS using a thumb drive. That process in itself is a little complicated, but another option would have been to install it from within Windows. But regardless, on the first boot, everything was working just fine, except for one thing… the wireless. This actually surprised me, because from what I recall, Ubuntu 8.04 detected the wireless just fine on the initial boot.

If I have a major gripe against Ubuntu, it’s the fact that minor things can change from release to release like this. Ubuntu 8.04, for example, booted up just fine on the dv2. Ubuntu 8.10 did not (in all fairness though, this could have been due to the thumb drive method I used, even though it’s the same for all releases). Then again, with Ubuntu 9.04, it boots up just fine, but the wireless doesn’t work. Luckily enough though, getting the wireless to function was unbelievably easy.

After I booted up with the thumb drive, I let the desktop sit for a minute, at which point a pop-up came up and told me I needed to enable a driver in order to use the wireless. Ahh, so there it is. The reason the wireless doesn’t work, is due to the proprietary nature of the driver. Why it worked just fine in an earlier release, though, I have no idea. Either way, after I chose to enable the “Broadcom STA wireless driver” and clicked “Activate”, I was online within two minutes (it had to download and install the driver via my wired connection).

That’s all fine and good. I was able to browse the Web while the install took place (the install took exactly 12 minutes, and resulted in 2.6GB being used on the HDD). Once the install was done, I rebooted and went into my newly-installed desktop environment. No surprise, my wireless didn’t work (changes to the Live CD are not reflected in the final install, and rightfully so). This time, though, simply plugging in the LAN cable and downloading the driver didn’t work, because Ubuntu didn’t come out and tell me like before that I needed to enable the driver.

After plugging in the LAN cable, and updating the entire OS through the built-in updater, something clicked, and I was able to see that a proprietary driver needed to be enabled. Once again, two minutes later, I was online via my wireless. The process wasn’t entirely as smooth as I had hoped, but it’s still a lot better than it could have been. As 2Tired2Tango mentioned in our forums not long ago, sometimes Ubuntu won’t even pick up the wireless driver at all, but this might be limited to Atheros. Either way, Ubuntu 9.04 impresses me quite a bit, and it’s reinvigorated my Linux spirit to some degree. It’s been quite a while since I’ve last touched OpenSUSE, Fedora and others, so something tells me I’m going to have to do that soon…

|

Discuss: Comment Thread

|

Personal SSD Usage: TRIM and Linux

SSDs might have been out and about for a couple of years, but up until now, I’ve never used one in my personal machine. I’ve of course fiddled with them on our benchmarking machines, but as for putting one to a more realistic, and long-term use, I haven’t. That’s going to soon change, though, as I have picked up one of Kingston’s latest 80GB drives, based on Intel’s X25-M G2. That means that this drive supports TRIM, and that’s pretty much exactly what I was waiting for before committing to an SSD.

Under the hood, Kingston’s G2 drives are a spitting image of Intel’s own, except for the sticker. So, like Intel’s G2 80GB, this drive features a 250MB/s read speed, and also a 70MB/s write speed. The write speed is lacking, it goes without saying, but as I have mentioned many times before, Intel’s SSDs excel where random reads and writes are concerned, making it a no-brainer choice for those who aren’t entirely concerned with sequential write speeds.

Installing the G2 drive, or any other that supports TRIM, in Windows is rather simple. As long as you’re running Windows 7, TRIM will work if the SSD supports it. For previous versions of Windows, a tool needs to be run on occasion to get the job done. It’s a less elegant solution, but at least there’s an option at all. For me, I have a challenge ahead. As many of you I’m sure are aware, I don’t run Windows as my primary OS, but rather Linux (Gentoo to be specific). So the next few months should prove interesting.

The situation of TRIM on Linux is difficult to understand right now. Officially, TRIM support was added to the Linux kernel last fall (2.6.28), but how it’s activated, or compiled in, I’m not sure. There’s also the question of whether TRIM in Linux is filesystem-specific. So far, the only trace I see of TRIM support is usually linked to ext4, but once again, I am not sure if it’s built in natively, or requires additional steps.

I’ve been asking around, and getting an answer to that has proven rather difficult. I plan to continue following up with various developers and companies to get a final answer, but unlike Windows 7, where the TRIM support is clear-cut, it’s not so much in Linux. Part of this could be the fact that TRIM is called something different wherever you look, so it’s likely easy to overlook it, even if you are staring right at it.

I’m hoping to learn a lot more in the coming weeks, especially before I install the drive. I have no real reason for holding off, except time, so I’m willing to wait to understand the situation better, so I know how to build the rig back up. Of course, if anyone out there in the Interwebs knows anything more of the TRIM situation in Linux, please comment in our thread and let me know. I should also stress that on a new SSD, especially Intel’s, TRIM isn’t that important. This is more of a major curiosity of mine, and since I’m such a performance hound, having TRIM functionality is definitely an interest.

|

Discuss: Comment Thread

|

Installing DirectX 11 Under Windows Vista

Microsoft has good reason to be pleased with its launch of Windows 7, because for one, it was fairly smooth, unlike Vista’s, and two, consumers are actually quite pleased with it. For gamers, a major technology came pre-installed with Windows 7, that of course being DirectX 11. Although it was once rumored to be a Windows 7 exclusive, that was put to rest shortly after the OS’s launch. Don’t expect to install it on XP, though… this is Vista and 7 only.

At this point in time, it’s difficult to install DirectX 11 if you don’t know where to look, because Microsoft has not made an installer available for public consumption at the usual sites, such as the official DirectX site. Rather, if you install DirectX from most sources, you’ll get either 9 or 10, not 11. The skeptic in me says that Microsoft is complicating the process on purpose in order to sell more Windows 7 copies, but I could be wrong. Either way, the proper installer is still too hard to track down.

So how’s the job done? You need to download what Microsoft calls a “Platform Update”, which includes a variety of updates, not only DirectX 11. Looking at the page for the download, you’ll notice mention of “Windows Graphics”, and further mention of DirectX 11. You have two options here. You could either download the entire Platform Update, or single out the DirectX 11 update. I can’t recommend one over the other, but I personally chose the former just because I like keeping things up to date as much as possible.

For those of you interested exclusively in DirectX 11, you can download the update here. For the rest of you, it appears that the Platform Update is now available through Windows Update, although it wasn’t a few weeks ago when I took care of it. If it’s not in your Windows Update for whatever reason, then the easiest thing to do would be to just grab the single DirectX 11 (and others if you want them) download, as Microsoft doesn’t seem to be offering the full-blown Platform Update as a single executable anymore.

There’s little reason to fuss over DirectX 11 at the current time, but if you have an ATI 5000 series card, the update won’t hurt. Dirt 2 is the first game queued up for launch to take full advantage of it, and if you want to give a good benchmark a go, you could always play around with the Unigine “Heaven” benchmark, which we talked about a few weeks ago.

The Windows Graphics, Imaging, and XPS Library enables developers to take advantage of the advancements in modern graphics technologies for gaming, multimedia, imaging, and printing applications. The new features include:

* Updates to DirectX to support hardware acceleration for 2D, 3D, and text-based scenarios
* DirectCompute for hardware-accelerated parallel computing scenarios
* XPS Library for document printing scenarios

| Source: Microsoft Platform Update |

Discuss: Comment Thread

|

Lucid Hydra Performance is Promising

If there’s one product that both AMD and NVIDIA would share an opinion on, it’s Lucid’s Hydra. This is a chip that’s set out to essentially replace CrossFireX and SLI, if all goes according to plan. Rather than handle multiple GPUs with alternate-frame rendering, Lucid’s Hydra shares the load in a way that the company won’t reveal – probably for good reason. The question, of course, is whether the company’s technology is worthy of belonging in any of our machines. Is it?

Well, it’s far too early to answer that, of course, as the product isn’t officially released, but our friends at The Tech Report have taken a trip down to Lucid’s HQ to give the product a test in its lab. Benchmarking this way is never ideal, for obvious reasons, but it’s better than nothing. After his exhaustive look within the time constraints, Scott was left impressed in some regards, but not entirely wowed in others. There’s more work to be done before the product’s final launch, it goes without saying.

In its current form, the scaling works well in some cases, but not well in others. For example, in F.E.A.R. 2, a single HD 4890 card proved faster than an HD 4890 + HD 4770, which makes no sense, as more power should equal more performance. This could be due to improper load balancing between a fast and slower card, but I’m hoping to see Lucid iron out this particular issue before the first iteration gets released. Not all combos suffer like this, however, as a GTX 260 + HD 4890 combination hit almost 25% more performance over a single GTX 260 (but it should be more like 50% at least).

Despite the issues, this technology has a ton of potential, and it’s rather incredible to see what this small company has pulled off thus far. It’s already received a bit of funding, and even Intel seems to be backing it, so with a little more time, and perhaps a little more money, Lucid could become a real competitor to CrossFireX and SLI. And who knows… it may even render those useless. For the time being, though, and based on the initial performance, neither AMD nor NVIDIA has much to stress over.

To execute Lucid’s load-balancing algorithms, the Hydra chip also includes a 300MHz RISC core based on the Tensilica Diamond architecture, complete with 64K of instruction memory and 32K of data memory, both on-chip. The chip itself is manufactured by TSMC on a 65-nm fabrication process, and Lucid rates its power draw (presumably peak) at a relatively modest 6W.

| Source: The Tech Report |


Microsoft Disconnects 600,000 Xbox Live Gamers

When the argument over PC gaming’s demise arises, one of the most commonly cited issues is piracy. The fact is, piracy on the PC is easy, and nobody can deny that. Upon release, and sometimes even before, full games are available on various networks along with cracks to bypass their protections, and the same goes for applications and other software. On consoles, though, piracy is a lot more difficult. But that doesn’t stop many people from taking part.

I knew that piracy on the Xbox 360 was rather common, but I had no idea just how common. Hot on the heels of the Modern Warfare 2 release, Microsoft has banned some 600,000 gamers from its Xbox Live network. That means, no official online gameplay for those using modded consoles. Currently, Xbox Live has some 20,000,000 subscribers, so 600,000 seems like nothing more than a drop in the bucket, but 3% is rather significant from some angles.
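For anyone who wants to check that percentage, the arithmetic is simple enough to sketch. Both inputs are the rounded figures quoted above ("some 600,000" and "some 20,000,000"), so the result is approximate, and the variable names are just illustrative.

```python
# Rounded figures from the paragraph above, not exact counts.
banned_accounts = 600_000
live_subscribers = 20_000_000

# Share of the subscriber base affected by the ban wave.
banned_share = banned_accounts / live_subscribers
print(f"{banned_share:.1%} of subscribers")
```

That works out to roughly three in every hundred subscribers.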

I admit I’m not that familiar with Xbox 360 modding, and I’m not sure if the only reason people mod their console is to pirate, but judging by Microsoft’s comments, that seems to be the case. It’s quoted as saying, “All consumers should know that piracy is illegal and that modifying their Xbox 360 console to play pirated discs violates the Xbox Live terms of use, will void their warranty and result in a ban from Xbox Live.” Well, there’s not much room for confusion there.

How Microsoft detects modded consoles, I’m unsure, but given that the console must handshake with its servers, you’d imagine it wouldn’t be too difficult. So the moral of the story is this… if you want to play on Xbox Live, don’t mod your Xbox. This isn’t the first time Microsoft has had a huge sweep of bans, and I’m sure it won’t be the last. If you want to mod your Xbox, it’d probably be wise to purchase a second console, while keeping the first one “clean”.

But many gamers modify their consoles by installing new chips or software that allows them to run unofficial – but not always illegal – programs and games. However, some chips are specifically designed to play pirated games. Microsoft has not said how it was able to determine which gamers to disconnect. “We do not reveal specifics, but can say that all consoles have been verified to have violated the terms of use,” the firm said in a statement.

| Source: BBC News |


ASUS Gives Tribute to the Mona Lisa

Think that working at some of the largest companies in the world means all work and no play? Well, ASUS proves that once in a while, fun does come into the scheme of things, with a tribute to Leonardo da Vinci’s legendary painting, the Mona Lisa. ASUS’ recreation is found in the main hall of its Taiwan HQ, and unlike the real Mona Lisa, it’s much larger than a person, and no painter ever came near it.

Rather, this Mona Lisa is made up of thousands of computer chips and motherboard bits. Up close, the “painting” looks like nothing more than a strange collection of computer parts, but as you step back, the image becomes much clearer. Sure, it’s not the best-looking representation of the Mona Lisa ever created, but it might just be one of the most unusual.

| Source: Wired |
