Tech News

Intel Unveils 48-Core “Single-Chip Cloud Computer” Processor

In case it isn’t obvious enough, both AMD and Intel believe that multi-core is the future of computing, and it’s hard to disagree. It’s easier for both companies to add cores than it is to build faster ones, and in a multi-threaded world, the result is a chip that may be slower in single-threaded operations but is king at multi-threaded work, and more powerful overall than anything else out there.

Unfortunately, this “multi-thread” scheme of things is taking longer to catch on than anyone could have hoped, but with AMD, Intel and others pushing multi-core so hard, the shift is going to happen sooner rather than later. About three years ago, Intel released information on a proof-of-concept 80-core processor, which at the time was simply mind-blowing. This week, the company announced a 48-core processor, and while that seems lackluster next to the 80-core, you can be assured that this one is far more refined and capable.

All 48 cores are based on IA (Intel Architecture), and as a result, each is fully programmable. Energy efficiency is key here, and depending on performance needs, the chip is able to scale between 25W and 125W on the same silicon, which, as Intel points out, is equivalent to just two household light bulbs at the high end. Intel calls the CPU a “Single-Chip Cloud Computer” because, with such a large group of CPU cores working together, it works similarly to how major web services and supercomputers operate.

Each core on the chip is of course not going to be as powerful as our desktop chips today, but that’s the reason there are so many of them on a single chip. Working together, the performance could blow away anything we’re using today. The entire chip is split up into different sections, or “tiles” (24 tiles of 2 cores each). Sets of these tiles can run at independent voltage levels, and frequencies can be scaled on a tile-by-tile basis.

Thanks to “routers” that are placed at each interconnect (24 in total), the cores can all talk to each other with a huge 256GB/s of bandwidth. For increased memory efficiency, the chip includes a total of four independent memory controllers, with support for up to 64GB of DDR3. It’s a fast chip, with even better capabilities. When we’ll see these chips come to market is unknown, but Intel is accepting applications from those who want to become part of the research program behind it all. If you’re a developer, or simply interested in knowing more, a lot more information can be found here.
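To put the quoted figures side by side, here is a small back-of-the-envelope sketch. The class and method names are made up for illustration, the even split of memory across controllers is an assumption, and the per-core power figure is only a rough ceiling, since it ignores whatever the routers and memory controllers draw.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SccLayout:
    """Figures quoted in the article; the names here are illustrative only."""
    tiles: int = 24
    cores_per_tile: int = 2
    memory_controllers: int = 4
    max_memory_gb: int = 64
    min_power_w: float = 25.0
    max_power_w: float = 125.0

    @property
    def cores(self) -> int:
        # 24 tiles with 2 cores each
        return self.tiles * self.cores_per_tile

    def memory_per_controller_gb(self) -> float:
        # Assumption: the 64GB maximum is split evenly across controllers
        return self.max_memory_gb / self.memory_controllers

    def power_per_core_w(self) -> tuple:
        # Rough ceiling: divides whole-chip power by core count
        return (self.min_power_w / self.cores, self.max_power_w / self.cores)


if __name__ == "__main__":
    layout = SccLayout()
    low, high = layout.power_per_core_w()
    print(f"{layout.cores} cores, "
          f"{layout.memory_per_controller_gb():.0f}GB per memory controller, "
          f"{low:.2f}W to {high:.2f}W per core")
```

Even at the 125W end, that works out to only a few watts per core, which is the point Intel is making with the light bulb comparison.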

“With a chip like this, you could imagine a cloud datacenter of the future which will be an order of magnitude more energy efficient than what exists today, saving significant resources on space and power costs,” said Justin Rattner, head of Intel Labs and Intel’s Chief Technology Officer. “Over time, I expect these advanced concepts to find their way into mainstream devices, just as advanced automotive technology such as electronic engine control, air bags and anti-lock braking eventually found their way into all cars.”

| Source: Intel Press Release |

Inching Closer to Solving TRIM on Linux

It’s been exactly two weeks since I last reported on my investigations into TRIM for Linux, so now seems like a great time to update you all on my progress. Unfortunately, because I’ve been focusing so much on our regular content, I haven’t been able to dedicate as much time to testing things out as I’d like, but I have made a few strides and feel like I should have a complete answer to the situation soon.

The first problem I had to tackle seems simple. I had to figure out how to benchmark a storage device under Linux and get reliable results out of it. In Windows, there’s a variety of applications to use, such as HD Tune, HD Tach, PCMark Vantage and so forth, but there’s little choice under Linux, and of what there is, it’s all command-line-based. After fiddling around for hours on end, I settled on iozone. I chose it because it’s one of the easiest to use, and also one of the most configurable. Plus, it gave me reliable results, which is of obvious importance.

To benchmark a drive, I have iozone create a 16GB file to work with (the recommended value is ‘system RAM * 2’), and allow it to use data chunks ranging between 4KB and 1MB. The exact syntax I’m using is below:

localhost rwilliams # iozone -a -f /mnt/ssdtest/iozone -i0 -i1 -y 4K -q 1M -s 16G
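For anyone who wants to adapt that command to a different machine, here is a minimal Python sketch that rebuilds the same argument list from the amount of system RAM. The function name is hypothetical, and the 8GB figure in the example is an assumption inferred from the 16GB file size and the “RAM * 2” rule. As far as the flags go: `-a` is iozone’s automatic mode, `-f` names the test file, `-i0` and `-i1` select the write/rewrite and read/re-read tests, `-y` and `-q` set the minimum and maximum record sizes, and `-s` sets the file size.

```python
def build_iozone_command(test_file, ram_gb, min_record="4K", max_record="1M"):
    """Return an iozone argument list mirroring the command quoted above.

    ram_gb is the amount of system RAM in GB; the test file is sized at
    twice that, following the rule of thumb mentioned in the text.
    """
    file_size_gb = ram_gb * 2
    return [
        "iozone",
        "-a",                  # automatic mode
        "-f", test_file,       # file to run the tests against
        "-i0",                 # test 0: write / rewrite
        "-i1",                 # test 1: read / re-read
        "-y", min_record,      # smallest record (chunk) size
        "-q", max_record,      # largest record (chunk) size
        "-s", f"{file_size_gb}G",
    ]


if __name__ == "__main__":
    # Assumed 8GB of RAM, which yields the 16G file size used above
    print(" ".join(build_iozone_command("/mnt/ssdtest/iozone", ram_gb=8)))
```

The list form can be handed straight to `subprocess.run()` if you would rather launch the benchmark from a script than type it out each time.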

On a 100% clean Kingston SSDNow M Series 80GB (G1, no TRIM support) formatted as ext4, the 4K write speed was 86.55MB/s, while the read speed was 229.70MB/s. Given that these are close to the rated specs of the drive (officially, it’s 250MB/s read and 70MB/s write, but Intel’s drives have always written at higher-than-rated speeds), I feel confident in the results. So with the benchmarking solution squared away, the next chore was to figure out how to see if TRIM was indeed working or not. That of course required figuring out a scheme to properly “dirty” a drive.

In our look at Intel’s SSD Toolbox and TRIM solution back in October, I mentioned that due to Intel’s excellent algorithms in place, its drives are very complicated to dirty. As I found out over the past few days, the real issue was me, and not thinking about the mechanics of it all enough. To dirty the drive before, I simply allowed Iometer to create a file as large as the drive itself, and then re-ran the same test over and over until I was satisfied. Looking back, I can’t help but wonder what I was thinking, because when looking at the reason drives get dirtied, I could have solved my own predicament.

To effectively dirty an SSD, you need files, and lots of them. To make things easier with testing, I’ve been using the drives in my own machine, and because of this, my personal files were handy. So, with the 80GB G1 installed, I wrote a quick script to copy over my entire /documents/ folder (~74GB, 43,000 files), delete it, and then copy over three other folders (~64GB, 149,982 files), and then delete them. Rinse and repeat just once, and the result is one heck of a dirtied drive.
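The copy-and-delete routine described above can be sketched roughly as follows. This is not the actual script used for the testing, just a minimal Python approximation of the same idea; the function name, the `batches` structure and the paths in the usage note are all hypothetical.

```python
import shutil
from pathlib import Path


def dirty_drive(batches, target_root, passes=2):
    """Copy each batch of folders onto the target drive, then delete it.

    batches is a list of lists of source folders; every folder in a batch
    is copied before the whole batch is removed. Returns the number of
    files written across all passes.
    """
    target_root = Path(target_root)
    files_written = 0
    for _ in range(passes):
        for batch in batches:
            copied = []
            for src in map(Path, batch):
                dest = target_root / src.name
                shutil.copytree(src, dest)   # fill the drive with real files
                copied.append(dest)
                files_written += sum(1 for p in dest.rglob("*") if p.is_file())
            for dest in copied:
                shutil.rmtree(dest)          # "empty" the drive again
    return files_written
```

A call along the lines of `dirty_drive([["/home/user/documents"], ["/data/a", "/data/b", "/data/c"]], "/mnt/ssdtest")` would mimic the two-batch, two-pass run described above. Be careful where you point it, since it deletes whatever it copied to the target.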

While the performance beforehand was roughly 230MB/s read and 86MB/s write, the results after the dirtying were 198.73MB/s read and 23.33MB/s write. Yes, that was on an “emptied” drive. The way I dirtied the drive may be a tad unrealistic, as I truly barraged the SSD with a total of almost 400,000 files, but as long as I had a dirtied drive, I was pleased. Now that I understand both the mechanics of benchmarking the drive, and also dirtying it, I’m going to install the 160GB G2 drive today and begin testing for TRIM. As it stands, I have no idea whatsoever if it’s going to work right out of the box, and deep-down, I don’t expect it to. Given where I am in testing now, though, I expect to have a real answer sometime next week, so stay tuned.
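To put those numbers in perspective, here is a quick sketch of the arithmetic, using only the figures quoted in this post. The helper name is made up for illustration.

```python
def percent_drop(before, after):
    """Percentage by which throughput fell from `before` to `after`."""
    return (before - after) / before * 100


# Clean vs. dirtied results (MB/s) for the 80GB G1, as quoted above
clean = {"read": 229.70, "write": 86.55}
dirty = {"read": 198.73, "write": 23.33}

# Two passes, each copying ~43,000 files and then 149,982 more
files_per_pass = 43_000 + 149_982
total_files = files_per_pass * 2

if __name__ == "__main__":
    for op in ("read", "write"):
        print(f"{op}: {percent_drop(clean[op], dirty[op]):.1f}% slower")
    print(f"files written: {total_files:,}")
```

In other words, reads lost roughly 13% while writes lost roughly 73%, after about 386,000 files had been written and deleted.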

Psystar Settles with Apple, Allowed to Sell “Open” Computers

As 2009 draws to a close, I’d have to say that of all the big tech news stories to happen over the past year, the back and forth between Psystar and Apple has got to be one of the most interesting. It all started back in April when the company announced that its Mac clone machines were shipping, and proof of hard product came just a week later. Of course, this is Apple we’re dealing with, and it’s not going to just sit idly by while some small runt of a company clones its machines. So, it was no surprise to anyone that the company sued Psystar.

There’s been a lot that’s happened since the summer, but in the end, Apple came out victorious and Psystar was found to have been violating its trademarks. The most recent happening, though, is a mutual agreement to drop all pending suits, and for Psystar to agree to stop selling its PCs with Mac OS X pre-installed. The work-around is that Psystar is still allowed to sell the same machines, but sans the OS, and let its customers do the dirty work once they receive them.

It seems a little bizarre that even this is no big deal as far as Apple is concerned, because the entire idea around Psystar is that its PCs will have Mac OS X installed on them. So whether or not the OS is pre-installed, the end-goal is the same… customers are going to get PCs from Psystar, and put Mac OS X on there, probably with the company’s own tools. So that in itself is odd, but at least it means Psystar can still exist… albeit in a slightly different way than originally intended.

This deal opens up the doors for other companies who want to take after Psystar, as it wouldn’t make sense to allow just one company to do this and none other. It’ll be interesting to see if that happens, because it seems likely to me that if this method of delivering a Mac clone grows in popularity, Apple is going to take another hard look at things and try to kill it all off. I simply can’t see Apple standing by if something like this grows. Of course, is there even a real demand for faux Macs? That’s my question.

It’s an interesting deal, because it looks like it wouldn’t necessarily stop Psystar from selling its Mac clones. Instead, the company would be limited to selling its “Open” line of computers without OS X preinstalled, and that responsibility would lie instead with customers. Apparently that’s a compromise Apple is willing to live with, and with good reason, since the Mac maker would have to go after many other clone makers if it wasn’t.

| Source: Salon |

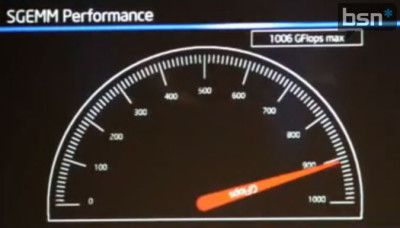

Intel’s Larrabee Computational Performance Beats Out Competition

When Intel first announced its plans to launch its own discrete graphics card, Larrabee, it seemed like everyone had an opinion. If you talked to a company such as AMD or NVIDIA, laughter, doubt, and more laughter was sure to come about. The problem, of course, is that Intel has never been known for graphics, and its integrated solutions get more flak than praise, so to picture the world’s largest CPU vendor building a quality GPU… it seemed unlikely.

The doubt surrounding Larrabee was even more pronounced this past September, during the company’s annual Developer Forum, held in San Francisco. There, Intel showed off a real-time ray-tracing demo, and for the most part, no one was impressed. The demo was based on Enemy Territory, an older game built on an even older engine. So needless to say, hopes were dampened even further after this lackluster “preview”.

But hope is far from lost, as Intel has proven at the recent Supercomputing 09 (SC09) conference, held in Portland, Oregon. There, the company showed off Larrabee’s compute performance, which hit 825 GFLOPS. During the showing, the engineers tweaked what they needed to, and the result broke the 1 TFLOPS barrier. But here’s the kicker. This was achieved with a real benchmark that people in the HPC community rely on heavily, SGEMM. What did NVIDIA’s GeForce GTX 285 score in the same test? 425 GFLOPS. Yes, less than half of Larrabee’s tuned result.

This is in all regards impressive, because if Intel’s card surpasses the competition by such a large degree where complex mathematics is concerned, then AMD and NVIDIA might actually have something to be concerned over. Unfortunately, such high computational performance doesn’t equal likewise impressive gaming performance, so we’re still going to have to wait a while before we can see how it stacks up there. But, this is still a good sign. A very good sign.

As you can see for yourself, Larrabee is finally starting to produce some positive results. Even though the company had silicon for over a year and a half, the performance simply wasn’t there and naturally, whenever a development hits a snag – you either give up or give it all you’ve got. After hearing that the “champions of Intel” moved from the CPU development into the Larrabee project, we can now say that Intel will deliver Larrabee at the price the company is ready to pay for.

| Source: Bright Side of News* |

Car and Driver Announces “10Best” for 2010

Each year, the well-respected Car and Driver magazine holds a “10Best” competition, where the editors get together and discuss which models deserve to be in the top ten for the year, based on various criteria. The cars don’t have to be ultra-expensive in order to place, but they do have to deliver the goods for their price range and come off as exciting cars in general. This year, the magazine raised the price cap from $71,000 to $80,000, as it believes that $80K and higher is the revised point where you will begin to see diminishing returns.

The top 10 list this year is surprisingly not much different than last year’s, which could be considered “boring” or a good thing, depending on your perspective. The repeats this year include two models from Honda, the Accord and Fit. I’ll agree on the Accord’s placing, as for one thing, it’s straight-out a great-looking car, and looks much more expensive than it is. I’m not entirely enthralled over the back-end of recent Accords, but I have no major complaints. The Fit, on the other hand… I don’t think I’ll ever be a fan of the new-age “youthful” designs.

Also making a comeback is the BMW 3-series, in particular the 328i sedan. As much as I’m a Bimmer fan, I’m not much into sedans, but it’s hard to beat this one. It looks good, delivers 30+ MPG on the highway and has a reasonable 230HP engine to push you around all day. The magazine also mentions the M3, which is a great upgrade, as the engine becomes a 414HP V8 monster. I get chills just thinking about pushing this one to the red.

Other repeat entrants include the Cadillac CTS/CTS-V, Mazda MX-5 Miata (I can’t believe it, either), Porsche Boxster / Cayman and also Volkswagen’s GTI. The newbies to the group include the Mazda 3 (this should have been disqualified if for no other reason than its ridiculous front-end), Audi S4 and also Ford’s Fusion Hybrid, which oddly enough, happens to be the most technologically advanced car on the list. Just don’t tell the Cayman that.

There are faster cars, and ones with more horsepower, but the 3-series has earned a long list of comparison-test victories and now a 19th-consecutive 10Best appearance because of the instant confidence it imparts to the driver, making pushing a little harder completely comfortable. It’s the extraordinary precision and response of the perfectly weighted steering, the smooth predictability of the unwavering chassis, the optional sport seats that adjust and embrace in all the right ways, the slick six-speed manual transmissions, the firm but never harsh ride. In short, it’s the car we’d like to drive every day.

| Source: Car and Driver |

Intel Issues X25-M G2 Firmware Update

In late October, Intel released both a new firmware upgrade and SSD Toolbox, which enabled TRIM support on its G2 X25-M solid-state disks. Due to a data corruption bug, however, the firmware was pulled off its support site within days, and those who didn’t already successfully upgrade to the TRIM-enabled firmware had to wait. Well, that wait is done with, as the company has finally released the fixed version.

If you have an X25-M G2 (34nm), you can grab the firmware update here. If you’ve already updated with the original firmware, it’s still recommended that you upgrade, simply because you don’t want to risk running into the data corruption bug down the road. Although the firmware update isn’t designed to erase data, you should always back up before running it, just in case.

Unfortunately, Intel hasn’t posted an updated version of its SSD Toolbox, and I haven’t heard back on when that might happen. It was also pulled days after being posted, not due to the data corruption bug, but because it ended up clearing out restore points in Windows Vista and 7. For those running 7, TRIM will work automatically, but for those with Vista, you’ll have to wait until Intel re-releases the software in order to take advantage of the command.

| Source: Intel X25-M G2 Firmware Update |

Dirt 2 Due Out this Week, DirectX 11 Goodies Included

Where PC graphics are concerned, this week is a big one, because the first game on these shores capable of taking advantage of DirectX 11 technologies will be here, Dirt 2. The game was initially set for launch last month, but has since been delayed multiple times. Today was supposed to be the official launch, and even though AMD issued a press release stating that as being the case, it’s still not available anywhere. Steam’s website shows the date as being the 4th, and Amazon.com shows the 8th, so there’s confusion somewhere. I’m willing to believe Steam’s date, though, as it wouldn’t surprise me to see a pre-release there, followed by a store release elsewhere.

The game, which bears the full name of “Colin McRae: Dirt 2”, is the follow-up to the original that enjoyed great success. Though there are numerous racing titles out there, few take the racers off-road, and that’s what Dirt is all about. This title is the first since McRae’s passing in 2007, but he’s more than just a name on the box, as he’s featured in the gameplay, along with fellow off-road legends Travis Pastrana and Ken Block.

What makes this game special for PC gamers, though, is that it supports all the DirectX 11 goodies we had hoped would be included, and more. The game makes use of tessellation (the most commonly touted feature of DX11), and also introduces Shader Model 5.0 into the mix, for much more realistic lighting and shadows, without the supposed performance hit. Other graphical features include improved water effects, detailed and animated crowds (I am fussy when it comes to this, so I can’t wait to see it) and more realistic physics, such as with flowing flags and cloth.

HDR (high-dynamic range) is a feature that first became popular a few years ago, but has since been used in numerous PC and console titles, in order to give a more “realistic” (that can be debated) lighting result, whether outdoors, or indoors with the sunlight shining through a window. AMD notes in its press release that this improved HDR has twice the color depth of what was possible with DirectX 9, and that the special effects can be rendered at up to 4x the resolution.

Of course, there’s much more to Dirt 2 than just the graphics, but given that this is the first DirectX 11 game available on these shores (S.T.A.L.K.E.R.: Call of Pripyat was first, but it’s currently only available in Russia), it’s worth noting the eye candy. We’ll be giving the game a good test later this week when it becomes unlocked on Steam, and it’s my hope to get a chance to review it. I admit I’m not a major fan of off-road racing games, but given the DirectX 11 support, this one intrigues me greatly.

With a wealth of image quality and performance enhancements supported only on new Direct X 11-capable ATI Radeon graphics cards, DiRT 2, the thrilling sequel to the award-winning Codemasters off-road racer, offers players a more realistic, immersive and exhilarating experience than ever before, and is the latest example of the benefits of AMD’s close working relationship with today’s leading game developers.

| Source: AMD Press Release |

VirtualBox 3.1 Released, Introduces “Teleportation” and Improved Snapshots

This past summer, Sun released a new major version of its popular virtualization software, VirtualBox. For fans of the tool, 3.0 brought a slew of notable features, including support for 3D graphics, an increase in the allowed number of virtual CPUs, and a barrage of bug fixes and improved support for a wide range of guest OSes. The first major update to the latest version is 3.1, just released yesterday. It arguably brings even more to the table than 3.0 did, so it’s definitely worth an upgrade.

One new feature is called “Teleportation”, also known as live migration, and though it doesn’t have much use from a desktop perspective, it’s going to be heavily used in workstation/server environments. It’s an interesting feature, and one I am looking forward to testing out, because although the time when a live migration needs to be done at home is rare, I have run into a few circumstances where the feature would have been nice to have.

The software’s ability to handle snapshots has been much improved as well, with the limitation of one snapshot being lifted. You can now create multiple snapshots of a VM if you like, and to take things one step further, you can even create branched snapshots (snapshots that are a fork of another). This, like Teleportation, isn’t going to be used all too often in a desktop environment, but the feature is again going to be much appreciated by developers, and possibly also server admins.

In 3.0, VirtualBox introduced improved 3D support, and in 3.1, it’s 2D that gets some attention. The latest version has the ability to access the host’s graphics hardware to accelerate certain aspects of video. In this case, it seems limited to overlay stretching and color conversion, but that could no doubt be expanded in the future. There are many more new features to be had, such as the ability to change your networking device while a VM is running, the introduction of EFI and more.

If you’re a VirtualBox user, 3.1 is well worth taking the time to upgrade for. For those who’ve never used VirtualBox, or might have not even dabbled in virtualization, I recommend you check it out, as it’s a completely free application. I wrote an article a few months ago that tackled an introduction to virtualization, so definitely give it a read if your curiosity is piqued! I will also be taking a hard look at 3.1 in the coming weeks, so stay tuned for an article to be posted sometime this month.

VirtualBox is a powerful x86 and AMD64/Intel64 virtualization product for enterprise as well as home use. Not only is VirtualBox an extremely feature rich, high performance product for enterprise customers, it is also the only professional solution that is freely available as Open Source Software under the terms of the GNU General Public License (GPL). Presently, VirtualBox runs on Windows, Linux, Macintosh and OpenSolaris hosts and supports a large number of guest operating systems including but not limited to Windows (NT 4.0, 2000, XP, Server 2003, Vista, Windows 7), DOS/Windows 3.x, Linux (2.4 and 2.6), Solaris and OpenSolaris, and OpenBSD.

| Source: VirtualBox |

Linux Mint 8 Released

The world of Linux is a fun one to be part of, because when it comes down to it, the sheer amount of choice, from applications to distributions, is unparalleled. For desktop Linux, some of the most popular options are Ubuntu, Fedora, openSUSE and a couple of others, but according to the top chart at DistroWatch, the third most popular version of Linux over the past six months was Linux Mint, and chances are, if you’re a Linux enthusiast, you know all about this one.

Linux Mint is the most popular distro based on Ubuntu, so that in itself means it has a solid base. But Mint takes things a bit further by doing more to make the OS feel like a desktop OS, rather than a work OS, by customizing the UI, including its own set of tools to make administration a simple task, among other interesting things that I won’t touch on here. For the most part, though, Mint is for those who want the robust security, and repositories of Ubuntu, but want something a bit different, with a fresh coat of paint.

I have been meaning to take a good look at Mint for the past few months, and until now, I still had an ISO on my PC for Linux Mint 6, which seems mighty foolish now that Linux Mint 8 has just been released. There are a few major changes to this new version, including an upgrade to the boot-loader, which is now GRUB 2. Because of this change, it’s no longer possible to install Mint through Windows, and I’m not sure whether that ability will return, as I don’t know exactly how GRUB 2 differs from older versions.

In addition to the “Main” edition, which includes everything you need to get your desktop up and running, there’s a new “Universal” LiveDVD edition which includes a slew of languages, but no codec support and no restricted formats. This is to assure that there are no legal issues in any country (the developers make it easy to install them, however… it’s just a menu item). Of course, Linux Mint is based on the latest Ubuntu codebase, 9.10, so that means it supports more hardware than ever, and also includes a new installer. I’m downloading this one, and I am going to give it a go soon (at least, well before Linux Mint 10 comes along!).

The 8th release of Linux Mint comes with numerous bug fixes and a lot of improvements. In particular Linux Mint 8 comes with support for OEM installs, a brand new Upload Manager, the menu now allows you to configure custom places, the update manager now lets you define packages for which you don’t want to receive updates, the software manager now features multiple installation/removal of software and many of the tools’ graphical interfaces were enhanced.

| Source: Linux Mint |

What if Coca Cola Cans Lost their Color?

More than ever, doing your part to help (or rather, not hurt) the environment is important, and that’s becoming clearer as the days pass. It isn’t just individuals making sure they recycle, cut down on pollution and take other earth-saving measures; large companies are doing so as well. And there’s no reason not to, because cutting down on waste usually ends up saving money in the long run.

One trend I’ve noticed a lot lately is that music packaging is being cut down significantly. Many bands aren’t releasing the typical CDs with a plastic case, but rather a thin paper case, normally with a thin booklet. Out of the last ten albums I’ve purchased, at least half were like this. Two weeks ago, when I purchased Fight Club on Blu-ray, it was the same deal. There’s no paper inside the case at all, but rather just a recycling symbol to let you know the reason for it. Ironically, the case had a second “case”, or an outer layer of thick cardboard, which is actually worse than simply including a piece of paper inside, but I won’t nitpick.

Companies are now becoming a little more innovative in what they are doing to help the environment, and in the end, some of those measures are going to affect us as well. Some are better than others, but all help the environment, which is only a good thing (it’s unfortunate that it never affects the price, however). One potential idea that I saw the other day was more of a “what if?” scenario. What if Coca Cola, the world’s largest cola distributor, released its cans without paint, and instead embossed the logo and information to distinguish it from the others?

Coca Cola sells over 100 million cans of Coke, Diet Coke and Coke Zero per day, and as you’d imagine, that’s not only a lot of aluminum, it’s tons and tons of paint. If Coca Cola were to remove the paint, just imagine the effect that it would have on the industry. Other companies might follow, or there would be a real push to figure out a less harmful way of creating the color (it’s no doubt possible). It’s an interesting idea, and it’s one I can’t exactly consider outlandish, although picturing all Coke cans being like this is a little odd. But, stranger things have happened!

I assume the consumption only increases through time, but let’s take the daily 2007 numbers from Global INForM Cases Sales database: The total number of Coca-Cola cans sold per day worldwide is 67,873,309. Diet Coke and Coke Zero sold 35,387,241, while My Coke sold 103,260,550. Yes, that’s all per day. So using only classic Coca-Cola’s daily sales figures, that means 24,773,757,785 are sold every year. Twenty-four billion cans.
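The figures in that quote are easy to sanity-check. The sketch below uses only the numbers quoted above; the variable names are mine, and treating a year as 365 days is an assumption that happens to reproduce the quoted yearly total.

```python
# Daily 2007 figures as quoted above
classic_per_day = 67_873_309
diet_and_zero_per_day = 35_387_241

# Classic plus Diet Coke / Coke Zero, per day
combined_per_day = classic_per_day + diet_and_zero_per_day

# Classic Coca-Cola only, over an assumed 365-day year
classic_per_year = classic_per_day * 365

if __name__ == "__main__":
    print(f"combined per day: {combined_per_day:,}")
    print(f"classic per year: {classic_per_year:,}")
```

The yearly figure comes out to the quoted 24,773,757,785, and the combined daily figure lands on 103,260,550, which lines up with the “over 100 million cans per day” mentioned earlier.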

| Source: Gizmodo |

Apple Releases iTunes LP SDK

A couple of months ago, Apple released a new format for music on its iTunes music store, called iTunes LP. Like the albums that LP denotes, iTunes LP was designed to give people more than just the music to go along with their purchase, such as unique video, lyrics, photos and so forth. In a sense, purchasing an iTunes LP album would be similar to buying the real thing from the store, except that it’s all digital.

Soon after Apple launched the format, rumors were fierce that the company charged an arm and a leg for the privilege of making one – a rumor that Apple fiercely shot down. Late last week, the company proved that it was just a rumor, by releasing the full SDK, called “TuneKit”, to allow musicians and record companies the ability to make their own iTunes LPs. Included are full instructions and guidelines, and to create one, you’ll have to understand HTML, CSS and JavaScript.

Part of the SDK is pre-made templates, so for those who don’t want to bother with an elaborate design (artwork aside), it’s as simple as dropping files into place, and packaging it all together to send along for Apple’s approval. One thing I’m not sure about is whether or not anyone can make an iTunes LP and send it to friends to use. This would apply to indie bands, and those without labels at all. I haven’t seen mention of it, though, so Apple might be keeping it exclusive.

The release of this SDK is important to the success of the format, although it’s hard to know just how much of a success it’s been up to this point. I’m a pro-music-store kind of guy, so no digital package is ever going to take away the lustre of going right to the store to pick up the latest album I want, but I really have nothing against this format. As long as pricing stays reasonable, the included extras might be worth it for some people.

The packages use standard HTML, CSS, and JavaScript to create the user interface and interaction, so any competent Web developer can put together an iTunes LP or Extra as long as the supporting content conforms to Apple’s standards. However, Apple is also providing a JavaScript framework called TuneKit that it developed while making the first iTunes LPs. Handy templates for iTunes LPs and iTunes Extras are available as well, making it a matter of dropping in “your own metadata, artwork, audio files, and video files.”

| Source: Ars Technica |

ASUS’ P55 Boards Don’t Degrade PCI-E Performance for S-ATA/USB 3.0

In last week’s review of Gigabyte’s P55A-UD4P, I raised a concern about using either S-ATA 3.0 or USB 3.0 devices and still achieving the best graphics performance possible. The problem is that because these devices share the PCI-E bus, and because P55 has few PCI-E lanes to begin with, the primary graphics slot will have degraded performance.

All you need to do is install a S-ATA 6Gbit/s drive, or a USB 3.0 device, and the primary PCI-E slot is pushed down to 8x speed, which may affect gaming performance on some GPUs. I’m of the belief that the performance hit would be next to nothing for most GPUs out there, but I do believe it could be an issue with dual-GPU cards, which require a lot more bandwidth than single-GPU offerings.
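For a sense of scale, here is a rough sketch of what the drop from 16x to 8x means in raw bandwidth. It assumes the primary slot is PCI-E 2.0, which moves about 500MB/s per lane in each direction (5GT/s with 8b/10b encoding); the constant and function names are hypothetical, and this is theoretical bandwidth, not a measure of real-world gaming performance.

```python
# Assumption: PCI-E 2.0, roughly 500MB/s per lane, per direction
PCIE2_MB_PER_LANE = 500


def slot_bandwidth_gb(lanes, mb_per_lane=PCIE2_MB_PER_LANE):
    """Theoretical one-way bandwidth of a PCI-E slot in GB/s."""
    return lanes * mb_per_lane / 1000


if __name__ == "__main__":
    for lanes in (16, 8):
        print(f"{lanes}x: {slot_bandwidth_gb(lanes):.1f}GB/s per direction")
```

Halving the lanes halves the ceiling, from about 8GB/s to about 4GB/s each way. Whether a given GPU ever gets close to that ceiling is exactly what needs testing.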

As it stands today, all of Gigabyte’s P55 offerings have this issue, but its X58 boards do not, because there is an ample supply of PCI-E lanes to begin with. I touched base with ASUS to see if its P55 boards suffered the same kind of PCI-E degradation, and the simple answer was, “no”. To get around any potential issue, ASUS uses what’s called a PLX chip, which takes PCI-E lanes from the PCH to create a PCI-E 2.0 lane that gets split in half, with half of the available bandwidth going to S-ATA 3.0 and the other half to USB 3.0.

Adding a PLX chip has its upsides and downsides, but the only real downside I foresee is the added cost to the board. At this point in time, Gigabyte offers a few P55 boards that go as low as $130, while the least-expensive ASUS P55 board I could find retailed for over $200. Those boards from ASUS would of course be far more feature-robust than Gigabyte’s $130 boards, but it is a little hard to look away when you are trying to set yourself up with a new build with both S-ATA 3.0 and USB 3.0 for the best price possible.

One other thing I mentioned in last week’s review of Gigabyte’s board is that I’d like to test out the effects of the degraded PCI-E slot, to see if there is a reason for concern or not. After all, if all you are using is a modest GPU, it might not need the full performance that the slot ordinarily offers, and this might be the reason Gigabyte seems so nonchalant about the entire matter. I still believe dual-GPU cards could cause an issue, but that’s something else that will be tested once I get my hands on what I need.


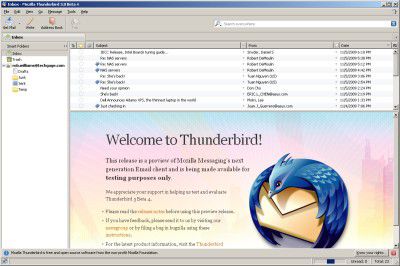

Top 10 Cross-Platform Applications

As we slowly inch towards the new year, I can’t help but think about the sheer number of “Top xx” lists I’ve looked at throughout 2009. There were many this year, some good and some “meh”, and when I think of the good ones, I tend to think of Lifehacker, since I almost always learn of something new when looking at one of its top lists, even on a subject I thought I had mastered.

So, the title “Top 10 Cross-Platform Apps” caught my attention, and though I expected to see 10 programs I use on a regular basis, I was surprised to see a couple listed that I’ve never heard of, and some that I forgot about, but would like to revisit again. The reason this top-list is worthwhile though, is that it doesn’t matter what OS you’re running… these apps work in Windows, Mac OS X and Linux just fine.

As the screenshot below suggests, one item on their list is Thunderbird, and that’s one I can personally vouch for as well, as I’ve been using it myself for just over five years. It has its downsides, but the upsides make up for those, and more. I don’t use Thunderbird just because the choices are limited in Linux; I use it because it’s reliable, isn’t bloated, and works well. The same can mostly be said about another entry on the site’s list, the popular IM client Pidgin.

Other entries on the list of course include Firefox, with other familiar faces (for me) being 7-zip and VLC Media Player. Select applications I haven’t heard of include KeePass, an interesting method of having all your passwords handy no matter where you are, Miro, a cool video player that handles RSS and various popular services, Dropbox, an app that allows you to access the same folder anywhere, and Buddi, a personal finance organizer.

TrueCrypt is a multi-platform security tool for encrypting and protecting files, folders, or entire drives. The software behind it is open source, and so likely to be supported and developed beyond its current version and platforms. It’s only on Windows, Mac, and Linux at the moment (though that’s no small feat), but it can be made to run as a portable app, and its encryption standards—AES, Serpent, and Twofish—are supported by many other encryption apps that can work with it.

| Source: Lifehacker |


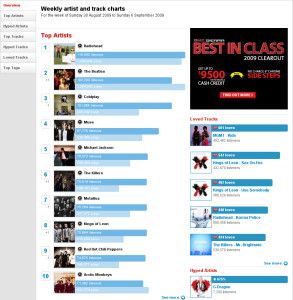

Last.fm: Behind the Popular Music Service

As I’ve mentioned in our news in the past, I’m a big fan of Last.fm, a site that not only keeps track of music I listen to, but also allows me to discover artists I’m not at all familiar with, based off recommendations that the site gives me. Because the site knows what I listen to, it can give better informed recommendations, and I can honestly say that I’ve discovered many artists thanks to the service, and I expect to discover many more going forward.

Web services like this might seem simple on the front-end, but if you’re familiar with how web services and servers work, you’re probably well aware that nothing is simple, and at Last.fm, there are a lot of mechanics at work. Wired found this out by interviewing the head of web development, Matthew Ogle. Details of the back-end aren’t the only thing discussed; topics such as how the site pays artists are also tackled.

The technical bits are arguably the more interesting, though. Since the site launched in 2003, it’s streamed over 275,000 years’ worth of music off its own servers, which is mind-boggling to think about. Delivering all that data of course requires powerhouse machines, but I found it surprising to find out that the company utilizes solid state disks (SSDs) quite heavily. Matthew didn’t just mention SSDs, but even got specific about it: “The SSDs are made by Intel.”

Unfortunately, Matthew didn’t go into precise detail about how much music the service holds, but he does say that they have “pretty much the largest library online”, and that even if the song isn’t on the servers, then there’s still likely some information about the song somewhere on the site. Last.fm is like the Wikipedia for music. If a band you know has a song out there, it’s likely listed on the site.

In addition to what’s mentioned above, Matthew goes into much more detail about the rest of the business at Last.fm, so if you are interested in the site, or the future of the service, this interview is a recommended read.

“We have pretty strong relationships with most of the majors and they’ve definitely been interested at one time or another in pulling specific data to, for example, judge what an artist’s next single should be. In aggregate, a lot of our data is very useful to them and we’re obviously very careful about that. We are hardcore geeks — died in the wool Unix nerds if you go back into our history — so as a result our data is under lock and key.

| Source: Crave UK |


TG Roundup: Radeon HD 5000 Series, Modern Warfare 2, Travel Router

To help continue the tradition of shilling our own content, let’s take another gander at our content of the past two weeks, shall we? If there’s one thing I’m pleased with, it’s that we caught up to ATI’s current HD 5000 releases and have posted reviews for the HD 5750, HD 5850 and also the HD 5870, and yes, it’s about time. We’re still behind on the HD 5970, but that was just released last week, so cut us some slack!

The most impressive of ATI’s first four cards of the HD 5000 series is of course the HD 5870 1GB. We took a look at Sapphire’s Vapor-X model, which pre-overclocks both the GPU core and Memory clock by just a smidgen. That’s not what makes the card interesting, though, but rather it’s the superb air-cooler which proved itself to be highly efficient. For any gamer who both has a real passion for gaming, and the $400 to spare, the HD 5870 is hands-down, the best choice in graphics today.

Not everyone wants to spend $400 for a graphics card though, and it’s understandable. The next best thing would be HD 5850, and we took a look at ASUS’ offering. That card proved to be quite the contender, and beat out NVIDIA’s closest competitor in almost all tests (and costs $70 less!). The performance was so good, that it’d be outright impossible to not recommend it for someone willing to spend near $300, but not more. You sure wouldn’t be disappointed.

The HD 5750 is currently the lowest-end offering of the HD 5000 series, and from that fact alone, I didn’t expect too much out of it performance-wise. It did prove itself to be a capable card, but it didn’t perform quite as well as I thought it would, when compared to NVIDIA’s slightly lower-priced GTS 250. It’s a tough one, but read the review if you are considering the card, since by the end, you should have a clear idea of what you want, and need.

What good is a graphics card without a game? We posted a review just last Friday of Infinity Ward’s latest offering, Call of Duty: Modern Warfare 2, and if you’re not one of the near 10 million who pre-ordered or purchased the game at launch, it’s well worth a read. Given the controversy that surrounded the game well before launch, it’s a hard game to sum up in one or two sentences. Despite all the downsides of the game, though, I still have to give it… well… one and a half thumbs up. I’m not that easy.

Looking for a P55-based motherboard? Well, we have tested two recently that are worth a look, ASUS’ P7P55D Pro and Gigabyte’s P55A-UD4P. Both have their pros and cons, but both prove to be ample overclockers, allowing for 4GHz speeds on our Core i7-870 with absolute ease. Both also have a robust feature-set, and a similar price, so it’s hard to outright recommend one or the other. If USB 3.0 and S-ATA 3.0 are in the cards, then the Gigabyte board is the right option for you, but don’t run out and purchase it until you read our review, as we have a few words of warning.

Finally, there was one product that I didn’t think much of when I received it, but by the end, I was stoked. Of all things that I could be talking about, I’m talking about… a router. A travel router, at that. Sure, it sounds mundane, but TRENDnet’s TEW-654TR kicks some serious ass. Not only is it unbelievably small, but it offers the user three different modes (including one where it acts as a wireless card, for PCs without wireless access), along with a slew of features… and all for under $60. It’s sure hard to beat that!

We have some great content in the queue for the next couple of weeks, but I won’t talk about any of it right now, except to say that some of it involves cool products, and some of it might involve a how-to. I’ll also add that we’ve been working on getting some contests up that I’ve been meaning to iron out the details for, and I promise, some cool product is just begging to be won!

SiSoftware Releases SANDRA 2010

There are few pieces of software that have remained in our testing since the beginning of the site, and one of those few is SiSoftware’s SANDRA. The reason we like it is because it’s robust, and it’s been a trusted diagnostic / benchmarking tool for many years. Even before I became interested in computer hardware and most of what I write about today, I used SANDRA, so that’s saying something. Because we use it religiously in our testing, a new release is big news, and 2010 is no exception.

There are two major new features with 2010, and both are features which we’ve been waiting for and discussing with SiSoftware for quite some time: Virtualization and improved GPGPU support. The most interesting of the two for most people visiting this site would likely be the latter, which puts all recent GPUs to good use, and not in the gaming sense. SiSoftware added support for not only ATI’s STREAM and NVIDIA’s CUDA, but OpenCL and DirectCompute as well.

In the coming few weeks, I’m going to evaluate these new GPGPU tests and see if the information would be relevant enough for inclusion in our reviews. The performance of each GPGPU technology will vary, so it’s hard to settle on just one, but for the sake of being fair, OpenCL seems like the best bet, since it doesn’t favor a particular side, and is cross-platform. The results delivered are also in MPixel/s, so it’s kind of difficult at this point to gauge the overall use.

The other feature, Virtualization, is one I’m going to also look into, as we’re in the process of revising our CPU methodology, and adding a VM-related benchmark is something I’ve wanted for quite a while. It’s nearly impossible to properly benchmark virtualization technologies without multiple servers and a dedicated host, so I’m hoping this is the next best thing.

Other features of SANDRA 2010 include support for the AES-NI instruction set, which will prove useful for our upcoming Westmere review, and even AVX, an instruction set that’s not due to become available to end-consumers until Intel’s “Sandy Bridge” architecture late next year. Talk about being ahead of the curve! You can read the rest about the latest release at the link below, and to download, head right here.
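For the curious, whether a given CPU exposes these instruction sets can be checked without a benchmarking suite. The sketch below is a hypothetical helper of our own, not anything from SANDRA: it assumes a Linux system on x86, where `/proc/cpuinfo` carries a `flags` line and AES-NI and AVX show up as the `aes` and `avx` flags.

```python
# Minimal sketch: look for the "aes" (AES-NI) and "avx" CPU flags.
# Assumption: Linux on x86, where /proc/cpuinfo contains a "flags" line.
# cpu_flags() is a hypothetical helper written for this example.
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set:
    """Return the set of CPU feature flags found in /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # The line looks like "flags : fpu vme ... aes ... avx"
            return set(line.split(":", 1)[1].split())
    return set()  # no flags line (non-Linux or non-x86 system)


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    text = path.read_text() if path.exists() else ""
    flags = cpu_flags(text)
    for name in ("aes", "avx"):
        print(f"{name}: {'yes' if name in flags else 'no'}")
```

On hardware that predates either instruction set, both checks would simply come back "no".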

Those running Windows 7 and Windows Vista will notice improved graphics and GPGPU support, including DirectX 11 Compute Shader/DirectCompute, OpenCL (GPU and CPU), as well as OpenGL graphics. In addition, Windows 7 users will also benefit from sensor information (GPS, temperature, brightness, voltage and fan), (multi)-touch, native support for SSD drives and SideShow devices. With each release, we continue to add support and compatibility for the latest hardware, architectures and operating systems, and this is no exception. SiSoftware continues to work with hardware vendors to ensure the best support for new emerging hardware.

| Source: SiSoftware Press Release |


Google Bans Scam Ads from its Advertising Network

As I mentioned in our news earlier this week, there’s a lot of advertising I can tolerate, but there’s also a lot of advertising that repulses me. One such form of advertising I can’t stand is scams, and I’m sure the only people against me on that are the scammers themselves. But what makes the scams even worse is that the ads they use for the purpose are likewise repulsive, and downright disgusting.

Think about it for a moment… what type of ad have you been seeing a lot more of lately, even on the biggest mainstream news sites around? Well, if you happen to stumble on similar sites as I do, then you’ve probably noticed promises of whiter teeth, without the need for a dentist visit or some other hardcore technique. There’s nothing wrong with wanting whiter teeth, of course, but I’m still stumped as to how such ads could attract even the most desperate person.

The reason the ads were “repulsive” was because the images used were the furthest thing from flattering, and they obviously used the worst case scenario for each malady. Yellow teeth would have been minor, but I remember seeing mouths with missing teeth, black teeth… just lunch-churning imagery to be caught off guard with. It appears that they must have done fairly well, since they’ve lasted so long, but as of last week, Google has taken a stance and de-listed the perpetrators from its search engine.

I’m never one to jump to praise Google, but it has to be done here, because aside from the ads themselves, this was proven to be a scam. People would believe that they were getting a free trial of something, and instead be signed up for an expensive monthly subscription of some related medication or product. In one of the most ironic twists I’ve ever seen, one of the companies that was banned from Google, and openly admitted to misleading consumers, is now suing Google, Microsoft and Yahoo! over not de-listing a copycat site that infringed upon its name, which opened up after the initial de-listings. This is like a drug dealer going to the cops to out someone for stealing their dope. Pure ridiculousness.

When Dazzlesmile and its parent company Optimal Health Science decided to become squeaky clean and ceased their dealings with Epic, Epic allegedly continued to run the ad campaigns, according to the suit. But the new ads were funded by a Canadian named Jesse Willms, who allegedly operated infringing websites including DazzleSmilePro.com and DazzleSmilePure.com — both of which have since been removed (see screenshot). Dazzlesmile LLC is also suing Epic, Willms and AtLast, which filled the orders, for trademark infringement among other things.

| Source: Wired |


LessLoss Blackbody Interacts with Audio Gear Circuitry for Improved Sound

The world of audio is a complicated one. You may know that you have a keen sense of hearing, and can pinpoint the most minuscule tones of a song, but if you want to discuss it with a fellow audiophile, there’s unlikely to be a simple agreement on things. There’s also those who think that they know it all when it comes to audio, but don’t. Then of course there’s those who love audio, and don’t know the first thing about it, and that group includes me. Lastly, there’s the group of people who are so mind-bogglingly rich, that they’ll buy any piece of audio equipment, because they’re told that it’s amazing.

When I think of extreme high-end audio, I think of cables that are sold the world over. For most people, a $5 – $100 cable is going to suffice, so it’s hard to imagine spending well over $1,000 on one. The question that’s constantly brought up in a case like this is whether or not there’s any type of distinguishable difference between the two. Remember the Pear incident two years ago? An organization promised a $1 million prize if anyone could tell the difference between Pear’s and regular cables, and when a capable audiophile was found, Pear Cable backed out, for whatever reason.

Although I’m willing to bet that the vast majority of ultra-high-end audio equipment is good for nothing more than for a pat on the back for the owner, I’m sure there’s some equipment that’s actually not snake oil, and has a real use. When it comes to LessLoss’ Blackbody, I’m quick to jump on an assumption that it’s little more than a cool-looking door stop, and until we see a credible review of the product, that’s how it shall remain.

You see, the Blackbody isn’t a speaker, though it looks like one. Rather, it’s a device (I’m not sure if it’s powered or not, the article doesn’t say) that targets the interaction of your audio equipment’s circuitry with “ambient electromagnetic phenomena” and modifies its interplay. In short, it affects the circuitry inside your audio equipment, and because of its particle interaction, it’s touted as being able to pass through metal, plastic, wood and other surfaces. Somehow, by fiddling with the very properties of the circuitry, the sound in the room improves.

I won’t go on, but what I should mention is that the device sells for $959, and it’s recommended that you purchase three if you have a proper audio setup and want the best results. You can read a lot more at the site’s product page below, but prepare to laugh or cry. It depends on your personality.

From the front side of the Blackbody, the coverage angle is 35 degrees going outwards from the middle of the star pattern. The more proximate the Blackbody’s coverage area is to your gear’s inner circuitry, the more effective it will be: proximity and angle of coverage should coincide with as much inner circuitry as possible. A quick look inside your gear can help you get an idea of where the circuits are located within your gear. If possible, several Blackbodys should be used in tandem to maximize coverage and effectiveness.

| Source: LessLoss Blackbody |


The Black Friday Roundup Roundup

I admit, I am never too impressed by Black Friday, even if some of the deals can’t be beat. I’m the kind of person who generally can’t stand going to the mall on a normal day, so battling hundreds of rabid shoppers in the wee hours of the morning sure doesn’t do it for me. But, if you have a bit more strength than I do, and plan on sacrificing your sleep tomorrow to score some sweet deals, then you might appreciate taking a look at some of the roundups posted around the Web. Yes, this is our roundup roundup.

I’ve scoured the Web to find some of the best roundups out there, and while not exactly a roundup per se, the first stop you might want to make is Black Friday Ads, a site dedicated to supplying you with quick links to Black Friday deals from a variety of stores, including Sears, Best Buy, Newegg and a lot more. The second place to hit up would have to be Gizmodo, because their staff states it’s the “only list you need“, and I’m not about to disagree. It’s huge.

Gizmodo’s roundup might be a bit on the definitive side, but if you still haven’t seen something that strikes your fancy, there are a slew of smaller roundups around the Web, such as the one at DailyTech, focusing more on computers and TVs. i4u also has one that doesn’t quite single out particular deals, but links to some of the best out there. There are a few “best of” Black Friday roundups as well, from CrunchGear and CNET.

Engadget also has a spread-out “roundup”, but it’s for the dedicated since it’s hard to find the gems (there’s no actual list). For some of the biggest online e-tailers, you could just go straight to the site to see all of the deals, as none make them hard to find. At a quick look, I could easily find Black Friday deals at Amazon.com, Newegg.com, Walmart (you need to put in your zip code), and for the gaming-afflicted, there’s GameStop’s.

Alright, so there are a lot of deals out there, and while a lot of sales are going on today, the biggest ones don’t happen until tomorrow morning, so you can calmly begin your planning now. If I can offer one word of advice… be careful out there, and remember, money isn’t everything. If a situation at a store gets dangerous, get out of there. We don’t need another one of these events!


Volunteers Leaving Wikipedia at Alarming Rate

When I stand back and picture what I do on the Internet, I’m kind of shocked that it’s not much. I regularly visit certain news sites, such as CNN and Digg, sports sites, like Soccernet and ESPN.com and past that, I can’t think of any other site I visit on a regular basis, which is a bit sad if you ask me. Oh, right… I forgot all about Wikipedia. I am not sure about you guys, but this is a site I end up visiting every single day, and I can’t see that changing.

It doesn’t matter what it is… if I need a bit of info, I don’t go to a search engine (unless it’s technical), but to Wikipedia. If I want to remember who scored the winning touchdown of a previous Super Bowl, find out the brake horsepower of the original Miura, check up on my favorite bands to see if a new album is en route, find the actor from a movie or read up on some remote country that most people haven’t even heard of, I go to Wikipedia.

Heck, Wikipedia in itself could be considered a news site, because if big news breaks, you can expect the respective Wikipedia page to be updated before you even get there, and that, to me, is incredible. Because I rely on the site so often, it’s kind of disheartening to learn that there’s a noticeable trend of volunteers leaving, due to distaste with the article approval process, and other site politics. How bad is it? In the first quarter of 2008, 4,900 people left the site. This year, that number rose to 49,000.

Because Wikipedia is a non-profit organization, and has few employees, anyone who edits or adds a page to the site is a volunteer. The biggest issue is that on occasion, someone will put work into a page, upload it, and someone else will take it down because they don’t believe it fits the site’s standards. But, if the writer feels it deserves to be there, then they have to debate it out, and that’s obviously going to lessen the overall appeal of the site, you’d imagine.

I’ve written and updated certain Wikipedia pages in the past (mostly music), and I know that it’s not that much fun. So with that, I appreciate what all these volunteers do, and hate to see the trend of them leaving continue. It raises an alarm on the future of the site, because without volunteers, Wikipedia wouldn’t have much reason to exist – at least for up-to-date information. There are a billion solutions to the problem, but likewise, there are a billion things that could go horribly wrong with any of them. I think Wikipedia is going to be safe for quite a while, but something clearly needs to be done.

Research reveals that the volunteers who create the pages, check facts and adapt the site are abandoning the site in unprecedented numbers. Every month tens of thousands of Wikipedia’s editors are going “dead” – no longer actively contributing and updating the site – without a similar number of new contributors taking their place. Some argue that Wikipedia’s troubles represent a new phase for the internet. Maybe, as some believe, the website has become part of the establishment that it was supposed to change.

| Source: Times Online |


Buffalo Launches “First” USB 3.0 External Hard Drive

Yesterday, I posted news about OCZ’s upcoming USB 3.0-based SSD drives, and there, I mentioned that USB 3.0 external storage didn’t quite exist yet at the consumer level. Well, I’ve put my foot in my mouth, because I stumbled on a release that occurred just two days ago that proves me wrong. Buffalo, known for its storage and networking products, released a USB 3.0 external hard drive called the “DriveStation”.

The press release issued by the company is a little misleading, as it states that the device is capable of “delivering transfer rates up to 625 MB per second”, which might be true of the interface, but no one is going to see that kind of speed from the device until something major happens in the storage market. Even the fastest consumer SSDs on the market barely hit half of the rated 625 MB/s. Unfortunately, the press release doesn’t state the real speeds at all.

The product page does, however, and it’s just what we’d expect: “up to 130MB/s”. That’s of course the typical speed we’d expect to see from our standard mechanical desktop drives, and it’s also the same speed we’d see if we were using an eSATA external enclosure. So what’s the benefit of USB 3.0? From what I can tell, a power adapter isn’t required, as the USB port itself provides enough juice. I can’t verify that for certain, but I’ve been unable to find contradicting information anywhere.
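For anyone wondering where the 625 MB/s figure comes from, it appears to be nothing more than the 5Gbit/s USB 3.0 signalling rate divided by eight. The short Python sketch below works through that arithmetic; the 8b/10b encoding step is our own addition rather than something stated in the press release, and the function names are hypothetical.

```python
# Where "625 MB per second" comes from, and how far a 130MB/s drive is from it.
# Assumption: USB 3.0 signals at 5 Gbit/s and uses 8b/10b line encoding,
# so only 8 of every 10 bits on the wire carry data.

USB3_LINE_RATE_BITS = 5_000_000_000  # 5 Gbit/s


def raw_mb_s(bits_per_sec: int) -> float:
    """Convert a bit rate to MB/s (1 MB = 10**6 bytes), no overhead removed."""
    return bits_per_sec / 8 / 1_000_000


def usable_mb_s(bits_per_sec: int) -> float:
    """Same conversion after removing 8b/10b encoding overhead."""
    return raw_mb_s(bits_per_sec * 8 // 10)


def fraction_used(drive_mb_s: float, link_mb_s: float) -> float:
    """Share of the link a drive with the given speed would occupy."""
    return drive_mb_s / link_mb_s


if __name__ == "__main__":
    raw = raw_mb_s(USB3_LINE_RATE_BITS)
    print(f"raw line rate: {raw:.0f} MB/s")
    print(f"after 8b/10b:  {usable_mb_s(USB3_LINE_RATE_BITS):.0f} MB/s")
    print(f"130MB/s drive: {fraction_used(130, raw):.1%} of the raw rate")
```

In other words, the headline number describes the bus, not the drive sitting behind it.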

Earlier this month, it became known that Intel wasn’t planning to include native support for either USB 3.0 or S-ATA 3.0 in its chipset until 2011, and that shocked a lot of people, especially since Intel is a premium supporter of such technologies. Shortly after that post, I questioned an Intel employee about the lack of support, and the response simply came down to the lack of a real need right now. What we’re seeing from either technology at this point in time are rather minor gains in the grand scheme, so in that regard, there doesn’t seem to be much of a reason to run out and pick up a motherboard with support for either, or both. But on the other hand, with companies like OCZ pushing ultra-fast storage on USB 3.0, it may experience a quicker adoption than we might think.

“Innovation has always been Buffalo Technology’s core value and the new DriveStation HD-HXU3 combines both USB 3.0 performance, and the latest hard disk technology to give consumers the fastest, and most reliable solution on the market,” said Ralph Spagnola, vice president of sales at Buffalo Technology. “The DriveStation HD-HXU3 delivers on Buffalo’s ongoing commitment in delivering high quality, robust storage solutions to meet the high demands of today’s consumers.”

| Source: Buffalo Press Release |


ASUS Releases X58-based P6X58D Premium Featuring USB & S-ATA 3.0

With all the talk going around about USB 3.0 and S-ATA 3.0, you’d imagine that the first adopters would be those with high-end PCs. After all, nothing says bleeding-edge like a technology you can barely take advantage of! But that hasn’t been the case at all, as it seems motherboard manufacturers have been much more interested in delivering both technologies to mainstream audiences first, and the idea must’ve been universal, as up until just last week, all such boards have been based around Intel’s P55 chipset.

I believe Gigabyte was the first out of the gate with its X58A-UD7 (I discussed Gigabyte’s implementation of both technologies last week), but ASUS didn’t take too long to launch a competing board, and has done so with its high-end P6X58D Premium. While Gigabyte’s implementation of USB 3.0 on its P55 boards degrades the primary PCI-E slot speed to 8x, ASUS doesn’t seem to have that problem, either with its P55-based P7P55D-E Premium, or the P6X58D. To be fair, though, no X58 board will suffer that problem, as the chipset has enough PCI-E lanes to satisfy the hungry appetite of USB 3.0 without affecting the PCI-E speeds.

As a “Premium” model, the P6X58D offers everything you’d expect a high-end board to. For gaming enthusiasts, there’s support for 3-way CrossFireX and SLI, while for overclockers, there’s a 16+2 phase power design, in addition to a robust heatsink configuration. Being an X58 board, it also offers support for triple-channel and up to 24 GB of RAM, with speeds up to 2000MHz (support varies from part to part… it’s not guaranteed), and of course, it also supports Intel’s upcoming hexa-core Gulftown processors.

In addition to the other usual slew of components, ASUS’ P6X58D board also includes numerous other technologies, such as TurboV, MemOK!, EPU, ExpressGate, and also DTS “Surround Sensation”. Being a higher-end board, the $309 price tag should surprise no one. It’s unfortunate that we haven’t seen more affordable options for USB / S-ATA 3.0 in the X58 space, but being that X58 is high-end itself, it’s not much of a surprise at the same time.

Supporting next-generation Serial ATA (SATA) storage interface, this motherboard delivers up to 6.0Gbps data transfer rates. Additionally, get enhanced scalability, faster data retrieval, double the bandwidth of current bus systems. Experience ultra-fast data transfers at 4.8Gbps with USB 3.0–the latest connectivity standard. Built to connect easily with next-generation components and peripherals, USB 3.0 transfers data 10X faster and is also backward compatible with USB 2.0 components.

| Source: ASUS Product Page |


Steam’s “Early Holiday” Sale Offers Great Games for Cheap

Black Friday is right around the corner, and there’s sure to be great deals all over the place, both online and off. Retailers and e-tailers all over are getting in on the fun, but the one to catch my attention is one that Valve is holding, through its Steam platform. Beginning today, and running through until Monday, it’ll be offering a collection of games each day at nicely discounted prices. Each deal will last just 24 hours, so acting fast is the way to go.

Currently, games such as Batman: Arkham Asylum, Champions Online, Far Cry 2 and Fallen Earth are priced at 50% off, with the newly-released Dragon Age seeing a nice 25% drop in price. The indie Osmos has an incredible 80% off, to settle in at $2, while Race Driver: GRID is 75% off to sell for $7.50. If you want to get a lot of games taken care of all at once, you can purchase the LucasArts pack, which includes 16 titles for $50 (60% off), or THQ’s pack, which includes 18 titles, for $50 as well (50% off).

If none of the games offered interest you (how could they not?!), you can purchase any as a gift. Remember, the deals change every single day, up to Monday, so if a game you’ve been eyeing isn’t available today, it may be tomorrow.

| Source: Steam Holiday Sale |


OCZ to Deliver USB 3.0 SSDs in 2010

S-ATA 3.0 and USB 3.0 are brand-new technologies, but both are readily available for the end-consumer to take advantage of right now. Of course, having the ability to use either technology is only part of the story; there also needs to be actual product that takes advantage of them. The releases up to now have been slow, with most storage solutions being high-end offerings, but OCZ issued a release yesterday that boasts its support for USB 3.0 (S-ATA 3.0 is also in its cards, though).

The company has partnered with Symwave, a fabless semiconductor company specializing in the design of SoCs (system-on-a-chip), which include USB 3.0 controllers, to deliver the company’s first USB 3.0-based SSDs. No images are available at this time, which is the reason I’m showing off the company’s Vertex SSD below in its place. There’s no performance information available in the press release, as it looks like both companies are going to hold off until CES to reveal all of the details.

Because USB 3.0 far exceeds the bandwidth limitations of USB 2.0, such storage devices would revitalize the meaning of mobile data. USB 2.0 thumb drives typically top out at around 30MB/s read and 25MB/s write, and that’s for the lower densities. With USB 3.0 SSDs, the speeds delivered would be on par with actually having the SSD installed in your machine, and consumer SSDs on the market today wouldn’t be able to saturate the USB 3.0 bus (5Gbit/s), so the future is looking good.
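To put rough numbers on that headroom, here is a minimal sketch of the link arithmetic. The 480Mbit/s and 5Gbit/s signalling rates and USB 3.0's 8b/10b encoding come from the USB specifications; the 250MB/s SSD figure is purely an assumed value for illustration, not something from OCZ's release, and protocol overhead is ignored entirely.

```python
# Back-of-the-envelope link ceilings. Signalling rates and the 8b/10b
# encoding are from the USB specifications; protocol overhead is ignored,
# so real-world throughput will be lower than these figures.

def link_ceiling_mb_s(signal_mbit, data_bits=1, line_bits=1):
    """Upper bound on payload rate in MB/s for a given signalling rate."""
    return signal_mbit * data_bits / line_bits / 8

usb2 = link_ceiling_mb_s(480)          # USB 2.0 High-Speed
usb3 = link_ceiling_mb_s(5000, 8, 10)  # USB 3.0 SuperSpeed, 8b/10b encoded

# ASSUMPTION: a hypothetical SSD sequential read speed, for illustration only.
ASSUMED_SSD_MB_S = 250

def bottleneck(drive_mb_s, link_mb_s):
    """Return which side limits the transfer: the 'link' or the 'drive'."""
    return "link" if drive_mb_s > link_mb_s else "drive"

print(f"USB 2.0 ceiling: {usb2:.0f} MB/s, limited by the {bottleneck(ASSUMED_SSD_MB_S, usb2)}")
print(f"USB 3.0 ceiling: {usb3:.0f} MB/s, limited by the {bottleneck(ASSUMED_SSD_MB_S, usb3)}")
```

Under those assumptions, the bus is the limit on USB 2.0 and the drive is the limit on USB 3.0, which is the whole appeal.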

It’s unknown when OCZ will be bringing such drives to market, but you could likely expect launch models to be roughly on par with the densities we see today on high-end thumb drives: 32GB, 64GB or higher. Depending on how long the launch takes from this point, though, pricing might be much improved by then, so it might be common to see drives with a much higher density but without a break-the-bank price tag. Either way, I can’t wait to get a glimpse of, or even test out, one of these babies.

“Thanks to Symwave’s industry leading USB 3.0 storage controller, our external SSD device delivers 10x the transfer rate of USB 2.0 at 5Gb/s, as well as several ‘green’ improvements including superior power management and lower CPU utilization,” said Eugene Chang, Vice President of Product Management at the OCZ Technology Group. “We are determined to be at the forefront of the market by offering products with unparalleled performance, reliability, and design to unleash the potential of flash-based storage.”

| Source: OCZ Press Release |


Intel’s Gulftown Reviewed Months Prior to Launch

Ahh, the leaks just keep coming! Yesterday, I posted about a leak that came out of Germany which involved Intel’s entire upcoming Clarkdale line-up, seven models in all. Today’s leak is a little more robust than a simple list of model names and expected information, though. Believe it or not, it’s a full-blown review of Intel’s upcoming Gulftown processor, the six-core beast built upon the company’s Westmere micro-architecture.

Because Westmere is a derivative of Nehalem (making it part of Intel’s “Tick” phase), performance from a six-core processor can be predicted in a lot of ways. Simple math will tell you that Gulftown, with six cores to the quad-core’s four, should prove up to 50% faster in applications that can take advantage of the entire CPU, although in some cases, the improved architecture of Westmere might boost the performance just a wee bit more, while also reducing power consumption and temperatures.

The 50% figure holds true throughout a lot of the synthetic benchmarks in PCLab’s review. Most of SANDRA’s results show a 48 – 50% improvement, while most of the real-world benchmarks don’t show anything so stark, as would be expected. Even Cinebench, a tool Intel itself uses to prove its processors’ worth, can’t take full advantage of the six-core CPU, showing just a 33% improvement over an equally-clocked Core i7 quad-core.
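For a quick way to compare those results against the best case, here is a minimal sketch that turns each reported gain into a "scaling efficiency". The function names are my own, and the math assumes equal clocks and nothing else changing between the two chips, which isn't strictly true given Gulftown's larger L3 cache.

```python
def ideal_gain(old_cores, new_cores):
    """Best-case throughput gain at equal clocks, as a fraction (0.5 = 50%)."""
    return new_cores / old_cores - 1

def scaling_efficiency(observed_gain, old_cores=4, new_cores=6):
    """Share of the ideal gain that a benchmark actually delivered."""
    return observed_gain / ideal_gain(old_cores, new_cores)

# Gains reported in PCLab's review, as quoted in this post.
reported = {"SANDRA (low end)": 0.48, "SANDRA (high end)": 0.50, "Cinebench": 0.33}

for name, gain in reported.items():
    print(f"{name}: {gain:.0%} gain, {scaling_efficiency(gain):.0%} of ideal")
```

By that measure, SANDRA lands within a few percent of perfect scaling, while Cinebench captures roughly two-thirds of what the extra cores could offer.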

In tests such as video encoding, though, we are seeing rather nice gains. There’s a 43% increase in performance where x264 is concerned, which is quite impressive. Likewise, there’s a 46% gain in POV-Ray. As is mostly expected, the biggest gains are seen with true workstation apps, not the consumer apps. Still, it’s nice to know that Gulftown is coming along, and I can’t wait to take it for a spin myself in the months to come. But before that, it will be Westmere, the chip I have to admit I’ve been looking forward to even more.
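Another way to read these numbers is through Amdahl’s law, which says the speedup on n cores is 1 / ((1 - p) + p / n), where p is the fraction of the work that runs in parallel. The sketch below inverts that to estimate p from a four-to-six-core gain. Treat it as a rough illustration only: it assumes the gain comes from core count alone, ignoring Hyper-Threading, the larger L3 cache, memory bandwidth and any Westmere tweaks, and the function names are mine.

```python
def amdahl_speedup(p, cores):
    """Amdahl's law: speedup over one core for parallel fraction p."""
    return 1.0 / ((1.0 - p) + p / cores)

def parallel_fraction(ratio, old_cores=4, new_cores=6):
    """Estimate p from the speedup ratio seen when going old_cores -> new_cores.

    Derived by solving amdahl_speedup(p, new) / amdahl_speedup(p, old) = ratio.
    ASSUMPTION: core count is the only thing that changed between the chips.
    """
    a_old = 1.0 - 1.0 / old_cores
    a_new = 1.0 - 1.0 / new_cores
    return (ratio - 1.0) / (ratio * a_new - a_old)

# Gains quoted in this post, expressed as six-core / four-core ratios.
for name, ratio in {"Cinebench": 1.33, "x264": 1.43, "POV-Ray": 1.46}.items():
    print(f"{name}: about {parallel_fraction(ratio):.1%} parallel")
```

Under that simplified model, even Cinebench’s “just” 33% gain implies that over 90% of its work is parallel, which shows how quickly a small serial portion eats into multi-core scaling.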

The capacity ratio of the L3 cache to the number of cores remains the same: Bloomfield has 4 cores and 8 MB of L3, Gulftown has 6 cores and 12MB of L3. The L1D, L1C and L2 cache capacity remains unchanged. Also unchanged is the processor’s “make-up” – in addition to six cores in a single block of silicon there is a block of L3 cache, a triple-channel memory controller and two QPI links. One of them is used to communicate with the IOH system and with the rest of the system (X58 desktop chipset or 5520 server chipset).

| Source: PCLab |
