Tech News

Lucid’s Hydra Chip to Have Dual Functionality

It’s been a little while since we last heard from the folks at Lucid, but it appears that things are still going well, and it shouldn’t be too long before we see real product on the market. For those unaware, Lucid first announced its product last fall during the Intel Developer Forum. Called the “Hydra Engine”, it is a hardware-based solution that aims to improve upon the methods used by both CrossFireX and SLI.

Rory took an exhaustive look at the technology when it was announced, so I highly recommend you check out that article to learn a lot more about what’s in store. Lars-Göran at Fudzilla managed to catch up with Lucid’s Senior Product Marketing Manager the other night in Taiwan, and learned that the technology is still on track, and that the one chip will ultimately be capable of two different tasks.

The first, which has been implemented already, is to have the chip act as a PCI-Express controller, allowing ultra-fast transfers between multiple graphics cards. This is not the consumer variant, though; it’s designed for workstations. The other is to have it act in “graphics performance mode”, where it would replace the functionality of CrossFireX and SLI. Lucid believes that its solution is better, and from what Rory and I saw at IDF last year, we think that it may very well be. It will still be a little while before we can get our hands on actual product, but if Lucid can do all that the company claims, it should be worth the wait.

We also found out that the interface on the ELSA solution that connects to a workstation or server is using an external PCI Express x1 connection, although as this is only used for transferring data to be computed and already computed data between the CPU and the Tesla cards, this is meant to be more than enough for the intended usage scenarios. We’re not sure how this works with other solutions, such as using Quadro cards.

| Source: Fudzilla |

Sometimes, Free Videos are Better than Paid Versions

Over the course of the past month, I’ve kind of jumped on the digital music bandwagon, which, for the most part, means iTunes exclusively (since the Zune Marketplace is not available in Canada for whatever reason). As I’ve mentioned multiple times in the past, I love purchasing music at the store and ripping it myself, but if all I’m looking for is a single track from a particular artist or album, it’s a lot more economical to pay a dollar to download it. As soon as DRM was dropped, Apple earned me as a customer, and I’m sure I’m not the only one who has begun using the store as a result of this move.

This post isn’t about my continuing support for iTunes, though, but rather the laughable way in which some people get ripped off without even realizing it. Take the popular YouTube video J**z In My Pants (viewer warning). In all regards, it’s hilarious, which is why I watched it nearly ten times. So what if a person wanted to purchase the video? Luckily, iTunes has it, and for only $1.49. But that’s where the “rip-off” process begins.

See, while YouTube offers the video at an 854×479 resolution (yes, it’s actually that bizarre), the iTunes version is 640×352, with barely more than half the pixels. Doesn’t that seem a bit odd? Paying for lesser quality? Sure, you can have the video on your own PC, but guess what? Downloading videos off of YouTube couldn’t be easier, and there are many programs out there that can do it (even legit applications like Nero offer that ability).
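
To put numbers on that comparison, here’s a tiny Python sketch that multiplies out the two resolutions quoted above (the function name is just for illustration). It shows the paid download carries roughly 55% of the free stream’s pixels.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a width x height video frame."""
    return width * height


youtube = pixel_count(854, 479)  # YouTube's stream: 409,066 pixels
itunes = pixel_count(640, 352)   # the $1.49 iTunes download: 225,280 pixels

# Fraction of YouTube's pixel count that the paid version delivers.
print(f"iTunes/YouTube pixel ratio: {itunes / youtube:.2f}")  # prints 0.55
```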

Thanks to a cool how-to I came across yesterday, I found out how easy it is to save the videos in Linux. You simply go to the temp folder, grab the cached file, and then add a file extension. From there, you can do whatever you want with it, whether you want to use it as is, or re-encode it for a media device. Here’s another plus, though. If you are saving an HD video, the resolution is actually 1280×720 (720p), roughly four times the pixel count of what’s available on iTunes.
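
The temp-folder trick is easy to script. The Python sketch below is only an illustration: it assumes the browser plugin leaves each cached video in /tmp as a plain file whose name begins with “Flash”, and that appending “.flv” is enough for a player to recognize it. Both the prefix and the extension are assumptions you may need to adjust (an HD stream may use a different container), and the function name is hypothetical.

```python
import shutil
from pathlib import Path


def save_cached_videos(cache_dir, dest_dir, prefix="Flash", extension=".flv"):
    """Copy cached video files out of a temp folder and give them an extension.

    Assumptions (adjust for your setup): the plugin keeps each in-progress
    video as a plain file in `cache_dir` whose name starts with `prefix`,
    and the file plays once `extension` is appended to its name.
    """
    cache, dest = Path(cache_dir), Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    saved = []
    for item in sorted(cache.glob(prefix + "*")):
        if not item.is_file():
            continue  # skip directories that happen to match the prefix
        target = dest / (item.name + extension)
        shutil.copy2(item, target)  # copy, so the browser's cache is untouched
        saved.append(target)
    return saved
```

Calling `save_cached_videos("/tmp", Path.home() / "Videos")` would copy any matching files into a Videos folder. Copying rather than moving leaves the cache alone in case the plugin is still using the file.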

It can be argued that downloading YouTube videos isn’t a noble practice, but for all intents and purposes, it seems to be fair game. YouTube says you “can’t” download videos, but that’s only because it doesn’t give you the ability. Once a video is cached in your browser, there’s nothing stopping you from going and grabbing it (the process in Windows is probably a little different, but I assume Mac OS X would be identical). So why exactly would someone want to pay $1.49 for a low-resolution offering, when they could get a 720p version for free?

Simply put, content providers really need to start catering more to those who are actually paying money for these things. If YouTube offers a 720p version, then your $1.49 at iTunes should give you at least the same, or even a version with an improved bitrate. I realize I’m in the minority here, and most people won’t care, but if prices scaled a little more reasonably, I’d expect to see increased revenue all around. There’s no way I’m the only one who has clued into this.

Five Incredible Home Cinema Setups

In our previous posting, we talked about Thermaltake’s new “luxury” offerings, but I’m willing to bet that despite the luxury tag, the products could still be purchased by anyone who has a keen desire to own one. Luxury, to me, is a status that should be awarded to products that are actually difficult to acquire, and much more expensive than the norm, whether it be a rumbling Ferrari, an exquisite Blancpain watch, a freshly-pressed Armani suit, a lock of Britney Spears’ hair, or… how about, a home cinema setup?

Digital Trends takes a look at a few impressive home setups, and if you are at all a movie buff, you might want to look away if you are known to spontaneously drool. It’s one thing to purchase a huge TV, a sweet sound system and other killer hardware, but it’s another to completely deck out a room to actually look like a small theater, and if you have the cash, it would be hard to resist such a thing.

Some examples here are simply astonishing. Most are complete with plush seating, a massive projector, intricate attention to room styling, and even a concession stand. Some even include a mannequin server! One interesting design (seen below) even splits the seating into two sections – one being the regular seating, and the other being at a “bar” directly behind it. I think “dream theaters” is a very appropriate term here.

Some people have houses as big as this theater, which is a sprawling 2,600 square feet and measures 28 by 40 feet with 12-foot ceilings. “I believe the homeowner envisioned that allocating a large amount of space for this theater would pay dividends in regard to the use his family would get from it,” says Bob Gullo, president of Electronics Design Group, Inc, in Piscataway, NJ. It’s no surprise then, that the theater also cost as much to build as some people spend on their entire abode and took 548 hours to build.

| Source: Digital Trends |

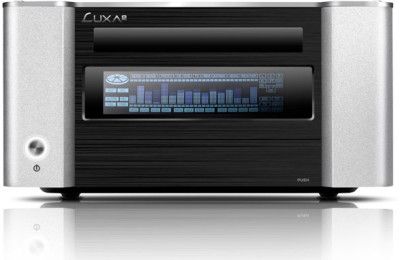

Thermaltake Launches “Luxury” LUXA2 Division

If you’ve ever built your own computer before, chances are good that you’ve either considered, or purchased, Thermaltake parts. We’ve also taken a look at quite a few of their products over the course of the few years we’ve been online, including their Spedo Advance full-tower, which we just posted a review for last week. For the most part though, Thermaltake caters to those of us who are interested in “bling”… who want products that aren’t boring, or generic. They’ve always done well in their delivery of that.

With their new LUXA2 division, which becomes official today, the company aims at a slightly different crowd… those who like great designs, keen style, a high-class touch, or, as their website shows, people who happen to have an entertainment system out in the middle of the woods.

To coincide with the new division’s launch, Thermaltake has unveiled four different HTPC chassis, five of which are available soon, and also various notebook accessories, including a lift and a few different coolers. They even offer up a “mobile holder”… a contraption that holds your phone and allows you to position it in different ways. Strange idea. Future products include even more of what they offer now, plus “data connect” and “handy accessory”, although we’ll have to wait and see what both of those mean.

LUXA2, a Division of Thermaltake, was created in 2009, with characteristics of simplicity, luxury, and unique lifestyle. The core design theory of LUXA2 start from simple shapes blending with luxury elements, which create the unique lifestyle for different segments within the society such as Creative Pro, Mobile Blogger, Leisure Seeker, Hip Newbie and Entertainment junkie.

| Source: LUXA2 |

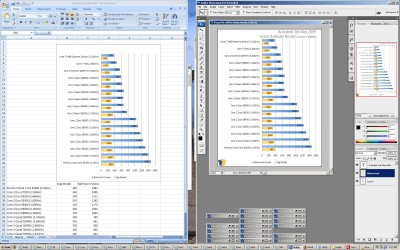

Upcoming OCZ Vertex SSDs Boast Excellent Write Speeds

As I’ve mentioned in previous SSD-related news posts, as the months pass, the drives become larger and much faster, and that holds as true right now as it did a year ago. Heck, even a few months ago. Recall when Intel released their ultra-fast 80GB X25-M. At $600, it offered 80GB, 250MB/s Read and 70MB/s Write. All-around impressive. But today, you can get a 120GB OCZ offering featuring 230MB/s Read and a staggering 160MB/s Write… for around $300. That’s, without question, impressive.
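
The gap is even starker per gigabyte. Here’s a quick Python calculation using the rounded prices quoted above (so treat the results as approximate); the function name is just for illustration.

```python
def cost_per_gb(price_usd: float, capacity_gb: float) -> float:
    """Dollars paid per gigabyte of capacity."""
    return price_usd / capacity_gb


intel_x25m = cost_per_gb(600, 80)   # $600 for 80GB
ocz_120gb = cost_per_gb(300, 120)   # around $300 for 120GB

print(f"Intel X25-M: ${intel_x25m:.2f}/GB")  # $7.50/GB
print(f"OCZ 120GB:   ${ocz_120gb:.2f}/GB")   # $2.50/GB
print(f"The X25-M costs {intel_x25m / ocz_120gb:.0f}x as much per GB")  # 3x
```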

The best part is that things are constantly improving, and by the time S-ATA 6Gbps rolls around, we’re likely to see storage solutions that far exceed what we’re dealing with today. As DailyTech reports, it won’t be long before we see yet another upgrade from OCZ, in the form of its Vertex.

Although the specs haven’t been finalized, internal testing at OCZ has revealed a top-end Write speed of 240MB/s, with the Read speed holding steady at 250MB/s. Obviously, some fancy RAID controller action must be going on, but even so. My only complaint about the previously-mentioned X25-M was its lackluster Write speed (it’s slower than most mechanical drives), but here we have drives well above 200MB/s. Insane. Just imagine what the next six months will bring!

The Vertex series, which uses an Indilinx Barefoot SSD controller, was originally specified at 200MB/s sequential read and 160MB/s sequential write. However, OCZ’s internal tests show up to 250MB/s sequential read and 240MB/s sequential write speeds. These tests were conducted on an empty drive, and will not officially be presented to consumers. However, it gives an indication of how fast the final drives will be and allows some results to be inferred.

| Source: DailyTech |

Mz CPU Accelerator Helps Boost Application Performance

If you are at all a multi-tasker, chances are good that you’ve found yourself in a situation where the application you most need to run smoothly is lagging severely, keeping you from getting things done in a timely fashion. This can happen even on a beefy multi-core machine, because for the most part, the OS doesn’t care which application is hogging all of the resources.

Anyone who uses Windows (or any OS, for that matter) can adjust settings for a particular application, such as which cores to devote to it, but all of that information is wiped as soon as you close the application or reboot. One application looks to take the hassle out of that, though, and make life a bit easier. It’s called “Mz CPU Accelerator”, and its main purpose is to make sure that whatever application is in the foreground receives the bulk of the system resources, so that you don’t have to sit there and twiddle your thumbs.

I haven’t used the application yet, but I like its goals. Aside from having the application take control and handle things automatically, you have the ability to fine-tune a little bit, and even exclude certain applications from seeing any favoritism. Best of all, the program can save the settings for the next boot, so your system can always remain “accelerated”. The application is free, so even if it doesn’t work out as well for you as you had hoped, you lose nothing.

Mz CPU Accelerator is an application that automatically changes the priority of the foreground window, by allocating more CPU power to the currently active application (program-game). Automatically de-allocates CPU priority when a new active application is selected. Also, new version contains an option that manages the cores of your processor!

| Source: Mz Tweak, Via: Download Squad |
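
Mz’s site only summarizes the behavior, so what follows is a hypothetical Python sketch of the policy described above, not the tool’s actual code. The program in the foreground gets a boosted priority unless it’s on the exclusion list, and everything else, including a program that just lost focus, falls back to normal. The program names and the two priority labels are made up for illustration. Actually applying a priority on Windows would go through an OS call such as the Win32 SetPriorityClass function, which this sketch leaves out.

```python
from typing import Dict, Iterable, Optional

HIGH, NORMAL = "high", "normal"  # illustrative labels, not Windows constants


def plan_priorities(running: Iterable[str], foreground: Optional[str],
                    excluded: Iterable[str] = ()) -> Dict[str, str]:
    """Decide a priority for every running program.

    The foreground program is boosted unless it is excluded; every other
    program, including one that just lost focus, goes back to normal.
    """
    skip = set(excluded)
    return {
        name: HIGH if name == foreground and name not in skip else NORMAL
        for name in running
    }


# Re-run whenever the foreground window changes.
apps = ["game.exe", "browser.exe", "backup.exe"]
print(plan_priorities(apps, "game.exe"))     # game.exe boosted
print(plan_priorities(apps, "browser.exe"))  # boost moves to browser.exe
```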

Nintendo Continues to Dominate Console Hardware and Software Sales

Back in December, we posted about Nintendo’s impressive showing this past November. Although the economy has seen better days, both Nintendo and companies like Apple continue to thrive, and it looks like that’s not going to change for a while. According to NPD reports, although video game sales (including consoles) continued to fall in January, Nintendo easily remains the leader, both in hardware and software.

The Wii was the leading console, no surprise, selling 679,200 units, while the Nintendo DS came in second, with 510,800 units. I can’t help but be continually impressed by numbers like these. Both the Wii and DS have been out for a while, but despite that, they still sell an insane number of units each month. The Wii came out in fall of 2006, well over two years ago, and it can still manage to sell close to 700,000 units in a single month. That’s impressive.

By comparison, Microsoft came in third from a console standpoint, with their Xbox 360, which sold 309,000 units. Sony’s products took fourth, fifth and sixth place, with the PS3 selling 203,200, the PSP selling 172,300 and the PlayStation 2 (yes, it’s still sold) selling 101,200. Even that number is impressive, given the original PS2 launched in 2000!

Nintendo games topped the software sales charts as well. First place went to the Nintendo Wii Fit with 777,000 units sold. Wii Play placed second with 415,000 units sold and Mario Kart Wii was third with 292,000 units sold. Gamasutra’s Matt Matthews thoroughly analyzed the January numbers declaring Nintendo platforms made up 52% of all industry sales which is up from 33% when compared to the same time last year.

| Source: DailyTech |

iMagic OS: Commercial Linux Distro Gone Wrong

The term “commercial Linux distribution” might sound a little odd at first, given that Linux is well-known for being a completely free operating system, but I do feel that in some cases, a commercial offering can be a good thing. In fact, it was a commercial distribution that helped me find my way to Linux in the first place, when I picked up Caldera OpenLinux 2.4 sometime in 2000.

Back then, I purchased a boxed copy of Linux for a reason. I appreciated the fact that I received a manual (or in the case of Caldera, I think it was a book), and also premium support, should I have needed it. Today, though, certain Linux distros are so evolved that in most cases, free versions are superior to any commercial product. Take Ubuntu, for example. It’s free, and its community is huge, so if you ever run into an issue, you are sure to receive an answer to your question fairly quickly.

I digress, though, because this post isn’t about a solid commercial product, but rather a lackluster one. “iMagic OS” is a distro based on Ubuntu that is customized to feel more at home for those who are familiar with either OS X or Windows. Sadly, that isn’t much of an accomplishment, since the distro appears to use a slightly customized version of KDE for Windows users, and GNOME with a dock for OS X users.

The first problem is the price: $80 for the Pro version, which is truly ridiculous given it’s not even a boxed product. The second comes when you add something to your cart. Once you do that, you have to promise them that you’ll install the OS on no more than three computers. Seriously? How about downloading Ubuntu instead and installing it on as many PCs as you like? I’m keen on that idea, personally.

For the most part, this distro is the result of taking Ubuntu and customizing it… something that many, many people have done before (which is why there seem to be a hundred variants of Ubuntu available). The biggest features of iMagic seem to be features that are available on any other distro, and though the developers claim to include proprietary software, like “magicOffice”, it appears from the screenshots that it’s simply a customized version of OpenOffice. I could be wrong though, but after looking through the site, I’m doubtful.

The moral of the story? Don’t fall for cheap commercial Linux offerings. If you want to go the retail route, look for OpenSUSE or even Ubuntu. At least there, you’ll see premium support, nice packaging, and an OS that’s known to be rock-stable.

It has a license agreement that will make you blush. Not once but twice, it mentions explicitly that you can only install iMagic OS on no more than 3 computers. You have to agree to these terms in order to purchase this OS. According to its Wikipedia entry, “It features a registration system that when violated, prevents installation of the OS, as well as new software and withdraws updates and support.”

| Source: LinuxHaxor |

Microsoft to Open Retail Stores

Microsoft last week announced their plan to take a route made successful by Apple and open up their own chain of retail stores. From what I understand, locations haven’t yet been announced, but you could expect that initial stores will be placed in the same cities where Apple’s stores thrive so well, such as New York and San Francisco. Can Microsoft pull off what Apple has succeeded at so well? Only time will tell.

As with locations, Microsoft hasn’t given specific information regarding what will be found within its retail stores, but you can expect that the vast majority of it will be Microsoft’s own software and products, while the rest will be accessories for said products. So aside from being able to pick up a copy of Windows 7, you’ll also be able to pick up the latest Xbox 360 title, Visual Studio, and probably a new case for your Zune (I’m assuming).

We won’t know how well such a chain of stores will fare in the marketplace until they open, but I’m looking forward to seeing the result. Speculation has already begun though, on blogs all around the web. One in particular at PC World came up with a somewhat humorous top ten list of features that you can expect to see at the new stores, including the ones quoted below.

| Credit: Robert Scoble |

- Instead of a “Genius Bar” (as Apple provides), Microsoft will offer an Excuse Bar. It will be staffed by Microsofties trained in the art of evading questions, directing you to complicated and obscure fixes, and explaining it’s a problem with the hardware, not a software bug.
- Fashioned after Microsoft’s User Account Control (UAC) in Vista, sales personnel will ask you whether you’re positive you want to purchase something at least twice.

| Source: PC World |

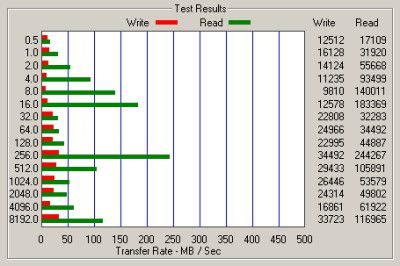

SSDs Suffer Performance Degradation Over Time

We’ve talked a lot about SSDs in our news section before, and for good reason. They’re small, fast and desirable in almost every way. But, like most things that are “too good to be true”, there are a few caveats – namely price and fragmentation. Our friends at PC Perspective decided to thoroughly investigate the latter issue, and I have to admit, the results are very interesting. Simply put, while SSDs can offer incredible performance at first, their performance can degrade fast.

Due to how SSDs are designed, they’re bound to become fragmented, and the issue is amplified on models with small capacities, such as 80GB or lower. Fragmentation might sound like a simple issue, but the effect it has on performance is huge, and the problem goes beyond something that a defragmenter could fix (most often, defragmenting will make things even worse). In some of the site’s examples, the write speed degraded to around 25MB/s, from the normal 70-80MB/s. That’s massive. Surprisingly enough, though, the issue can actually be remedied…

… but with yet another downside. In order to restore the drive to its original performance state, you’d have no choice but to format the entire thing. If the drive stores your main OS, you can likely trick it in a few ways to improve performance, but you’ll never see the performance you did after the first OS install. The article goes into depth as to why, and how to remedy it, but in truth, it really doesn’t put SSDs in a terrific light. Larger SSDs would put the issue off for a little while, but that would all depend on how fast you fill them up. Hopefully OSes and SSD memory controllers (or their firmware) will become smarter sooner rather than later, to avoid such performance degradation in future models.

Once internal fragmentation reached an arbitrary threshold (somewhere around 40 MB/sec average write speed), the drive would seem to just give up on ‘adapting’ its way back to solid performance. In absence of the mechanism that normally tries to get the drive back to 100%, large writes do little to help, and small writes only compound the issue by causing further fragmentation.

| Source: PC Perspective |

Huge List of Windows 7 Tips & Tricks

Where “top lists” are concerned, there’s no shortage, but sadly, the really good ones are a little more rare. Like the one I posted about earlier, one posted last week at Tech Radar is one of the better ones, with a staggering 50 tips and tricks for Windows 7 users. Even if you think you know Windows (and most of you probably do), you’ll no doubt learn something new from this article.

Of these tips, many refer to features I didn’t even know existed in Windows 7, including one I’d expect to get a lot of respect called “Problem Steps Recorder”. One of the biggest causes of frustration is trying to help someone with their computer over the phone or Internet, and not really knowing what the issue is since the person can’t explain things properly.

PSR is designed to follow the user’s steps, capturing keystrokes, screenshots and so on, and then bundle them all together in an archive for someone else to review. So rather than trying to picture what problem the user is experiencing, you’ll be able to review the hard evidence. This is a great addition to the OS, and one I’m sure is going to come in handy for many people.

Other notable features include the ability to burn ISOs using built-in tools (finally!), improved family control, an RSS-based wallpaper, the ability to customize how System Restore functions and much, much more. The best part? These tips are based on the beta alone. By the time the RTM version hits, we’re bound to see such lists become even more robust.

The Windows 7 Media Centre now comes with an option to play your “favourite music”, which by default creates a changing list of songs based on your ratings, how often you play them, and when they were added (it’s assumed you’ll prefer songs you’ve added in the last 30 days). If this doesn’t work then you can tweak how Media Centre decides what a “favourite” tune is: click Tasks > Settings > Music > Favourite Music and configure the program to suit your needs.

| Source: Tech Radar |
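
To make the “favourite music” rules concrete, here’s a hypothetical Python filter built from the three signals Tech Radar lists: rating, play count, and being added in the last 30 days. Only the 30-day window comes from the tip; the rating and play-count thresholds, and the idea that any single signal is enough, are assumptions, so this is not how Media Centre actually scores songs.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class Song:
    title: str
    rating: int       # 0-5 stars
    play_count: int
    added: date


def is_favourite(song: Song, today: date, min_rating: int = 4,
                 min_plays: int = 10, recent_days: int = 30) -> bool:
    """Hypothetical rule: any one signal qualifies a song as a favourite."""
    recently_added = today - song.added <= timedelta(days=recent_days)
    return (song.rating >= min_rating
            or song.play_count >= min_plays
            or recently_added)


def favourite_music(library: List[Song], today: date) -> List[str]:
    """Titles that pass the hypothetical favourite rule, in library order."""
    return [s.title for s in library if is_favourite(s, today)]
```

Raising `min_rating` or `min_plays`, or shrinking `recent_days`, makes the list stricter.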

Fun Facts: Techgage’s Browser and OS Usage Statistics

It’s been a while since I last took a hard look into our stats software to see where the browser/OS war on our website stands, so I figured now was a great time to do so. Although we won’t reveal actual traffic information, what we’ll show here is usage information for both web browsers and operating systems. For the most part, this information is of no real importance, so just take it for its fun-factor, rather than a gauge of the entire Internet’s usage.

During 2008, the leading web browser used to visit the site was Firefox, which isn’t too much of a surprise. However, at 51.28% usage, it isn’t exactly dominating. The second spot belonged to Internet Explorer, which captured 38.98%, while Opera took third with 4.57%. Apple’s Safari and Google’s Chrome took a combined 3.97%, while the rest of the usage was taken by more obscure browsers, such as Konqueror and Netscape.

As it appears, not too much has changed this year so far. Since January 1, Firefox has retained its number one spot with 51.79%, while IE suffered a slight decline to 35.80%. Opera kept hold of an almost equal share, with 4.38%. Chrome and Safari both experienced increases though, with the former at 4.09% and latter at 2.97%. Of the Safari usage, the vast majority belonged to Mac users, while Windows and the iPhone were basically even.

How about operating systems? Again, this is no surprise, but Windows took the lead by an extremely large margin, with 89.58% usage. Linux comes in second with 5.31%, while Mac OS X takes third with 4.39%. All other usage comes mostly from mobile browsers, such as those on the iPhone and iPod. Oh, and for those of you curious, of our Windows users, 63.42% were running XP, while 32.16% were running Vista.
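
The “rest” in each breakdown isn’t spelled out, but it’s simply what’s left after subtracting the listed shares from 100%. A small Python check using the figures above shows obscure browsers at 1.20% for 2008 and 0.97% so far this year, other operating systems at 0.72%, and Windows versions other than XP and Vista at 4.42% of Windows visitors.

```python
from typing import Dict


def remainder(shares: Dict[str, float]) -> float:
    """Percentage not covered by the listed shares, rounded to 2 places."""
    return round(100 - sum(shares.values()), 2)


browsers_2008 = {"Firefox": 51.28, "Internet Explorer": 38.98,
                 "Opera": 4.57, "Safari + Chrome": 3.97}
browsers_2009 = {"Firefox": 51.79, "Internet Explorer": 35.80,
                 "Opera": 4.38, "Chrome": 4.09, "Safari": 2.97}
operating_systems = {"Windows": 89.58, "Linux": 5.31, "Mac OS X": 4.39}
windows_versions = {"XP": 63.42, "Vista": 32.16}  # share of Windows users

print(remainder(browsers_2008))      # 1.2  -> obscure browsers in 2008
print(remainder(browsers_2009))      # 0.97 -> obscure browsers since Jan 1
print(remainder(operating_systems))  # 0.72 -> mostly mobile browsers
print(remainder(windows_versions))   # 4.42 -> other Windows versions
```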

The year has basically just begun, and the way things are looking, these stats may change quite a bit before the year’s through. It’s likely that Internet Explorer 8 will land later this year, and with the Mac’s usage growing steadily, we may see a stark increase there as well. It’s going to be an interesting year where OSes and browsers are concerned.

Top 10 First-Person Shooter Cliches

Where the word “cliche” is concerned, the definition might as well include discussion of first-person shooter titles. It’s one of those words that I don’t hear very often, but when I do, most often the person will be talking about an FPS game. What is it about that genre that makes cliches so noticeable? Well, it could be that certain gameplay elements have been done to death, over and over, in countless FPS titles.

It’s tough for a developer to create a totally unique gaming experience, that much I understand. But, a lot can be improved when taking a look at lists like the “Top 10 FPS Cliches” that was posted at IGN last week. For the most part, I have to agree with almost everything listed, and really, I didn’t even realize just how overused some of these gameplay elements were until I saw them listed.

For example, when was the last time you played an FPS that didn’t have some “mega” weapon that could be acquired near the end of the game? Or one that contained barrels you couldn’t explode? Or crates!? Or the ability to hold a ridiculous amount of gear/ammo without being weighed down? Although some FPS games have done well to stray off the beaten path, there’s definitely room for improvement. If developers took a look at this list and tried to create a game with none of what’s listed here, we’d no doubt wind up with some pretty interesting results.

Alright. I’ll exclude exploding barrels. I won’t complain if they stay.

Whatever this evil villain’s plan is, it apparently can’t be pulled off without a tremendous quantity of explosive liquids. Fortunately for us, this vast supply of highly volatile fluids is so important to his operation that he wants to know where it is at all times. With that in mind, he’s had the stuff put into bright red barrels that are stacked neatly in plain view.

| Source: IGN |

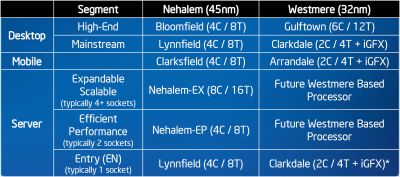

Intel’s Gulftown to Use Native Hexa-Core Design

Earlier this week, we posted an article that took a look at Intel’s current CPU roadmap, which included desktop, mobile and server products. In case you haven’t taken the time to look through, I recommend you do, since there are some extremely interesting products in the pipeline. This includes the launch of the Dual-Core Westmere chips later this year, which will feature both a CPU and GPU on the same substrate.

One area of confusion, though, surrounded Gulftown, a Hexa-Core enthusiast chip that offers up 12 threads of operation. The question was how such a chip would be built, because six is an unusual number where cores are concerned. Not to mention that Core i7 featured a native Quad-Core design. It would no doubt be an odd choice to backtrack and build Gulftown as a multi-die package like Core 2 Quad, which placed two Dual-Core dies side by side.

The concern stemmed from the fact that Westmere lacks certain features that Core i7 can boast about, such as a QPI bus and a triple-channel memory controller. After discussing the design with Intel, though, we found out that, much like Dunnington, Gulftown will be a native design similar to Core i7, just built from Westmere cores rather than Nehalem cores. Simple explanation, really.

One of the biggest reasons to look forward to Gulftown is the fact that it’s still compatible with the X58 chipset, which is a rather unique thing to see happen. Deep down though, the chip will include a similar design, so a BIOS update for your X58 motherboard should be able to fix whatever needs fixing. As discussed in our article discussion thread though, when Gulftown gets released, USB 3.0 and SATA 6 Gbit/s will be available (or should be), and those two will be difficult to ignore.

| Source: Intel Reveals Westmere 32nm Roadmap |

Silverlight Plugin for Linux, Moonlight, Sees Version 1.0

As a Linux user, I can state that it’s at times frustrating to have to wait so long to see a technology become available for the OS, namely, web-based technologies. Adobe’s Flash technology, for example, took a while to mature on the platform, especially if you ran a 64-bit OS, and it really wasn’t until recently that I found myself actually pleased with their offering.

But while Flash might be one of the most popular plugins on the web, it’s not the only one. Microsoft’s Silverlight technology has been doing a fair job of playing catch-up, and for the most part, I’ve been pleased with what I’ve seen with the technology on Windows. Well, the wait is somewhat over for those who want Silverlight functionality in their Linux OS, but there are a few caveats.

The first is that this “final” 1.0 version supports only Silverlight 1.0, and since many sites that are running Silverlight are running 2.0, that still leaves us in the dark. However, with development so active, we should be seeing 2.0 support very, very soon, with an alpha supposedly planned for next month. Also, once the plugin is installed, not all 1.0 content will work if special codecs are required. Luckily, to install those, you will be prompted and just be required to click “Install”. Fairly simple overall.

While the plugin leaves a little to be desired, I have to give kudos to Miguel de Icaza and his team for their dedication to this project since its beginning in 2007. It’s come a long way, and hopefully we’ll be able to see much success with it in the months ahead.

Microsoft’s proprietary multimedia codecs, which are used for streaming video content, are not bundled with the plugin. When the user visits a web page that requires the codecs, Moonlight will launch a codec installation utility that can automatically download the codec binaries from Microsoft and install them on the user’s system.

| Source: Ars Technica |

Discuss: Comment Thread


Intel Decides to Include SLI Support on the DX58SO

When we posted our Core i7 preview back in November, one complaint we had with regard to Intel’s own DX58SO motherboard was its lack of NVIDIA SLI support. The reason was laid out in an article we posted a few months prior: NVIDIA would charge manufacturers on a per-board basis to include the feature, reportedly tacking $5 onto any board that supports it.

Since these two companies put little effort into getting along, Intel saw no reason to cave in to NVIDIA’s offer to include SLI on its board, and for the most part, NVIDIA didn’t seem very interested in selling it the capability, either. For those looking to build a high-end i7 machine, that ruled out Intel’s “Smackover” board unless you had CrossFireX in mind. That changes today, however.

For whatever reason, Intel has decided to add SLI support to the DX58SO, and the change is retroactive: anyone who already owns the board can download the latest BIOS to enable it. Given how many boards have already been sold, I’m unsure how the licensing works. Intel likely had to pay a flat fee for all of the boards sold so far, and will pay NVIDIA whatever it charges for all forthcoming sales. It’s unlikely that the board’s current price will increase due to this change, however, since it’s already priced a little high given its feature-set.

All motherboard manufacturers must pay the required hefty fees and submit boards through the certification process at NVIDIA’s Santa Clara Certification Lab. There are unique ‘cookies’ given to each vendor to enable SLI usage. NVIDIA branding and logos must be prominently displayed as a condition of SLI licensing.

| Source: DailyTech |

Discuss: Comment Thread


Would You Purchase an Xbox Live Gamertag?

Own an Xbox 360? Subscribe to Xbox Live? Have a cool gamertag that wasn’t forced onto you because a thousand variations of the same nickname already existed? Well, Xbox Live user “Hitman” sure does, and he realizes it, so much so that he’s working on selling it. Seriously. Hard to blame him, though. People will pay for virtually anything nowadays, as long as it piques their interest.

In the case of the name “Hitman”, it’s wanted by virtually everyone (except me). It’s one of those names that seems to scream “elite” and “top dog”, something of vital importance. I admit, I think choosing such a popular name as your own moniker is a little foolish, only because it’s far from unique or personal. If I had to count how many times I’ve seen the name “Legolas” in an MMORPG…

But I digress. I might not have any interest in the name, but it’s clear that many people do, and I for one am curious to see how well the eBay auction performs. It does raise a question, though: doesn’t Microsoft have some policy against this type of action? If not, we might very well see some other Live old-schoolers going down the same route.

Update: Looks like Microsoft might have had an issue with the eBay auction after all… it’s now been removed.


Graziano said he thought there might be some big money in selling Hitman, particularly because he said he once saw an article on a video game site that estimated “Hitman” was the most commonly used term in Xbox Live gamertags. Indeed, he said that when he plays, he commonly encounters comments like, “Wow, so you’re the original Hitman?” and, “How’d you get that screen name?”

| Source: CNET Gaming and Culture Blog |

Discuss: Comment Thread


Getting the Most Out of an SSD

With so much solid-state disk talk all over the place, countless myths are floating about as well. Thanks to these, many people have the wrong idea about SSDs and how owning one will make their lives better. Information Week takes a look at a few common myths and facts to help people choose the right SSD, if any, and I agree with most of their points.

One claim that stood out to me was that an SSD will make a system boot faster than a mechanical drive, and that’s definitely true. What most people don’t take into consideration, though, is that the BIOS POST process takes up most of the time when booting up, and if you’re running a bloated Windows installation, it’s going to take a while to load regardless of the storage solution. Launching individual applications is no doubt much faster, though, and that’s where the most noticeable gains usually are.

Another interesting claim is that an SSD will prolong your battery life, which is also true. I can’t say that I’ve been truly impressed with the gains I’ve experienced in the past, though, as they’ve been pretty negligible. It really seems to depend on what you’re doing: if you’re watching a movie that’s running off the drive, your CPU usage is going to go up (SSDs have a much higher CPU usage than mechanical drives), so in some cases, battery life might even be worse. This is one issue in particular I’m looking to study more deeply in the future.
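Why the battery gains tend to be small comes down to simple arithmetic: runtime is roughly battery capacity divided by the whole system’s average power draw, and storage is only one slice of that draw. The Python sketch below illustrates this under loudly stated assumptions: the function names are hypothetical, and every wattage and capacity in it is a made-up placeholder, not a measurement from Information Week or from my own testing.

```python
# Illustrative only: all capacities and wattages are hypothetical
# placeholders, not measured figures.


def runtime_hours(battery_wh: float, draw_watts: float) -> float:
    """Estimated runtime: battery capacity (Wh) divided by average draw (W)."""
    return battery_wh / draw_watts


def storage_swap_gain(battery_wh: float, rest_of_system_w: float,
                      hdd_w: float, ssd_w: float) -> float:
    """Fractional runtime gain from swapping an HDD for an SSD,
    holding the draw of every other component constant."""
    before = runtime_hours(battery_wh, rest_of_system_w + hdd_w)
    after = runtime_hours(battery_wh, rest_of_system_w + ssd_w)
    return after / before - 1.0


if __name__ == "__main__":
    # Hypothetical notebook: 50 Wh battery, 15 W for screen/CPU/memory/GPU,
    # 2 W for a mechanical drive versus 1 W for an SSD.
    gain = storage_swap_gain(50.0, 15.0, 2.0, 1.0)
    print(f"runtime gain: {gain:.1%}")
```

Because the same saved watt is divided by a larger total as the rest of the system draws more, a brighter screen or a busier CPU shrinks the relative gain further, which matches the point that the drive is a minor power consumer.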

All in all, it’s a good article, and one that can be passed along to friends or family who are bugging you about SSDs. Any chance to pass off a link rather than explain the entire technology is a win/win in my book.

Many pundits treat a hard drive as the only component that uses electrical power. Au contraire! It is, in fact, a minor component, overshadowed by the power draw of your screen, CPU, memory, and GPU. The brighter your screen, the more intense your calculations or your display rendering, the more acutely do those four components suck your battery dry. And if you have an optical drive, let’s not forget all the spinning it does while you’re watching Twilight.

| Source: Information Week |

Discuss: Comment Thread


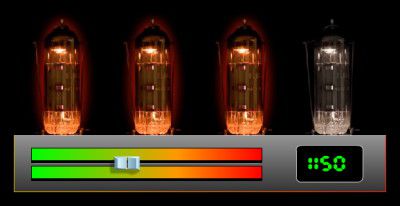

EVGA’s GPU Voltage Tuner Tool Released

Overclocking. A hobby for some, a life for others. In the past, I took overclocking seriously to a certain degree, but to become truly successful at it, you have no choice but to devote a lot of time and energy to studying up, and sometimes even to modding whatever product you’re trying to overclock. That’s been especially true with motherboards in the past, and it’s not uncommon to see extra wires soldered from one point to another in order to get the upper hand in a benchmarking run.

This isn’t that extreme, but to offer even more control to its customers, EVGA has released its long-awaited “GPU Voltage Tuner”, which does just what it sounds like. With CPU overclocking, the option to increase the voltage can be found right in the BIOS… it’s far from complicated. With GPU overclocking, the process can be much more complex, since voltage control has never been easily available.

With this new tool, which exclusively supports EVGA’s own GTX 200-series cards, the user is able to increase the voltage in what seems to be three or four steps total. As it stands right now, the red area, as seen in the picture below, is not yet enabled, and it’s not hard to guess why. Raising the voltage will increase heat, so it’s imperative to make sure you have superb airflow. If your card dies while using this tool, it doesn’t look like the warranty will cover it, so be careful out there!
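The heat warning follows from a standard first-order rule of thumb: a chip’s dynamic power scales roughly with the square of its voltage times its clock speed (P ≈ C·V²·f, with C the switched capacitance). The minimal Python sketch below applies that approximation. The function name is hypothetical, the voltages and clocks are made-up examples rather than EVGA Voltage Tuner settings, and the model ignores leakage power, which also rises with voltage and temperature, so the real increase can be larger.

```python
# First-order CMOS dynamic-power model: P ~ C * V^2 * f.
# Leakage power is ignored, so this underestimates the total increase.


def relative_dynamic_power(v_stock: float, v_new: float,
                           f_stock_mhz: float, f_new_mhz: float) -> float:
    """Ratio of new to stock dynamic power, with capacitance held constant."""
    if min(v_stock, v_new, f_stock_mhz, f_new_mhz) <= 0:
        raise ValueError("voltages and clocks must be positive")
    return (v_new / v_stock) ** 2 * (f_new_mhz / f_stock_mhz)


if __name__ == "__main__":
    # Hypothetical: a 10% voltage bump combined with a 10% core overclock.
    ratio = relative_dynamic_power(1.00, 1.10, 600.0, 660.0)
    print(f"dynamic power rises by about {ratio - 1:.0%}")
```

The squared voltage term is why a modest voltage bump costs so much more heat than the same percentage increase in clock speed alone.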

This utility allows you to set a custom voltage level for your GTX 295, 280 or 260 graphics card. (see below for full supported list) Using this utility may allow you to increase your clockspeeds beyond what was capable before, and when coupled with the EVGA Precision Overclocking Utility, it is now easier than ever to get the most from your card. With these features and more it is clear why EVGA is the performance leader!

| Source: EVGA GPU Voltage Tuner |

Discuss: Comment Thread


Windows XP’s Mainstream Support Ends Soon

We’ve been hearing about the supposed death of Windows XP for quite a while, but up to this point, not much has happened to prove that the OS is really on its way out. That is, until now: Microsoft will end mainstream support for the OS within the next three months. From that point forward, XP will be on “extended support”, which will benefit companies rather than end users.

The humorous thing in all of this, though, is that Windows XP really isn’t ready to die, because its usage is still sky-high even though Vista has now been out for just over two years. According to our analytics software, 63.55% of our Windows visitors in January were using XP, while 32.28% ran Vista. These numbers aren’t unlike those seen elsewhere on the web, which makes things even more interesting.

XP will become the first OS to have its plug pulled while still in the so-called prime of its life. I admit that I’ve come to enjoy Vista a lot more since its launch two years ago, but XP is still one heck of a stable OS. On top of that, this will also be the first time that Microsoft has pulled the plug on an OS that’s still being sold. Netbook vendors have been wise to include XP on their lower-powered devices for obvious reasons, but after April, support for the OS will shift from Microsoft over to the vendor.

My question is, once April hits, will people decide that the time is right to finally upgrade to Vista, or will they wait for Windows 7? I have a gut feeling that the answer is no for Vista, but things might begin to change a little with 7, especially since it’s capable of running just fine on netbooks as well.

“If you’re buying a netbook with XP, you have to accept that XP is not in mainstream support,” Cherry added. Not that that should matter much. “XP is well known by this point,” Cherry argued. “A significant number of its problems have been identified and resolved, so the chances aren’t great that there would be some new major issue.”

| Source: PCWorld |

Discuss: Comment Thread


AMD Launches Five AM3-based Processors

AMD today announced five new AM3-based processors that we’ve been expecting to see for a few months now. These new chips follow up the company’s Phenom II launch last month, which featured two Quad-Cores, the X4 940 and X4 920. Today’s releases include three more Quads and two Tri-Cores, all of which are priced very competitively against Intel’s offerings.

The top model of the new releases is the X4 910, which at 2.6GHz settles right below the X4 920 at 2.8GHz. Like its bigger brothers, the 910 features 6MB of L3 Cache. Also introduced today is the 800 series, including the X4 810 and X4 805. Both models are Quad-Cores, but feature slightly less cache than the 900 series, at 4MB of L3 Cache. These models are clocked at 2.6GHz and 2.5GHz, respectively.

On the Tri-Core side of things, we have the X3 720 Black Edition, which AMD promises will be one heck of an overclocker (they expect people to have even better luck overclocking this chip than the X4 940). Given that this chip clocks in at 2.8GHz, it’s going to be plenty fast even without overclocking. The X3 710, at 2.6GHz, becomes AMD’s current lowest-end AM3 offering. Despite the missing core, these Tri-Cores still include 6MB of L3 Cache like the 900 series, so they may even outperform the 800-series Quads in certain scenarios.

One of the biggest new features of the AM3 platform is DDR3 support, and although it really wasn’t needed (the differences are mostly moot for most people), it’s nice to have the option. The best part is that AM3 processors still natively support DDR2 as well, and can also be used in AM2+ motherboards. So, if you don’t want to upgrade to an AM3 motherboard right now (and it seems like you shouldn’t), you can still pick up a new chip and use it in your current motherboard. AM2 boards might work in some cases, but you’d want to do some reading up first to make sure.

We’re running a little behind here, but you can expect our review of the X4 810 and X3 720 BE later this week.

AMD (NYSE: AMD) extended the value and lifespan of its heralded Dragon platform technology today with five new additions to its AMD Phenom II processor family, including the industry’s only 45nm triple-core processors and three new AMD Phenom II quad-core processors. These AMD Phenom II processors deliver choice and lay the foundation for memory transition; they fit in either AM2+ or AM3 sockets and support DDR2 or next generation DDR3 memory technology. AMD continues to enhance the Dragon platform technology value to OEM and channel partners as well as Do-It-Yourself (DIY) consumers who build and customize their own PCs.

| Source: AMD Press Release |

Discuss: Comment Thread


Virtualization is for More than Just IT Departments

Given my position here at Techgage, I tend to be familiar with many aspects of technology, but there are some areas I don’t pay much attention to, for various reasons. Take virtualization, for example. It’s a technology that’s been around for decades (IBM was virtualizing its mainframes back in the 1960s), but it’s not something I’ve ever looked that deeply into. I tried Xen when it was new and thought it was “neat”, but I never thought much more about it, especially with regard to how the general technology could benefit me. Like many others, I thought virtualization mainly had a place in an IT environment, but that’s really not the case.

In the previous news post, I mentioned that I use Linux as my main OS. As I’m sure fellow Linux users can relate, that can at times be inconvenient. Personally, I need Windows for both site and non-site reasons, such as running Office 2007 for our review graphs or iTunes to purchase music. Rebooting out of Linux and into Windows is the furthest thing from convenient.

At some point last week, I realized that I’d put off virtualization for far too long, and decided to give it a try. For those unaware, virtualization (or rather, full hardware virtualization) is a technique that allows you to run a complete operating system from within your current operating system. So, if you run Windows, you’d be able to run another version of Windows (or the same one, if you choose), Linux, or even Solaris… and others (even DOS!).

That idea tempted me for obvious reasons. Being able to use Windows from within Linux would mean I’d never have to reboot, unless I wanted to get some hardcore gaming going. The first application I tested was VirtualBox, which appears to be the most popular free solution, and for good reason. Within an hour, I had working installs of both Windows XP and Windows 7, and both ran well… I was surprised. I’m also giving VMware Workstation a try, and I have to say, that program is even more impressive. With it, 3D graphics are supported to a good degree, even allowing me to play my favorite MMORPG with only extremely minor side-effects.

To make a long story shorter, I can honestly say that I’m now a huge fan of virtualization, especially now that I realize just how reliable and fast it can be. On this Quad-Core machine with 8GB of RAM, I can run a Windows XP environment at near-full speed (as you can see in the screenshot above). Even with that much open, I had no problem working at a normal pace. The downsides are few, and again, I’m really impressed by how well it all works. I haven’t had a single serious issue with VMware or VirtualBox since I began toying with them, and I’ve tested three versions of Windows and three Linux distributions so far.

Due to this newfound passion, you can expect to read some virtualization-related content on the site in the weeks to come, probably starting with an introduction to the technology that takes a far more in-depth look at what it is and how it works. There’s only so much space in a news post, and I’ve already blown past the limit I keep in my head. If you want to tinker around with VMs as well, remember that you can do so for free with VirtualBox. I found VMware to be a bit more stable and much more robust, but it’s not free, so it might not be worth it to everyone (it does allow 3D, whereas VirtualBox really doesn’t).
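Before trying VirtualBox or VMware Workstation on a Linux host, it can be worth checking whether the CPU advertises hardware-assisted virtualization. On Linux, the `flags` lines in `/proc/cpuinfo` list `vmx` for Intel VT-x and `svm` for AMD-V. The Python sketch below is a minimal check under that assumption; the function name is hypothetical, a listed flag does not guarantee the feature is enabled in the BIOS, and whether a given VirtualBox or VMware release needs these extensions depends on the product version and the guest OS.

```python
# Reports whether a Linux host's CPU advertises hardware-assisted
# virtualization: "vmx" = Intel VT-x, "svm" = AMD-V.
from pathlib import Path

VIRT_FLAGS = {"vmx", "svm"}


def virtualization_extensions(cpuinfo_text: str) -> set:
    """Return the subset of {"vmx", "svm"} found on any 'flags' line."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only the plain "flags" key; ignore e.g. "vmx flags" on newer kernels.
        if sep and key.strip() == "flags":
            found.update(VIRT_FLAGS & set(value.split()))
    return found


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        exts = virtualization_extensions(cpuinfo.read_text())
        print("hardware virtualization flags:", ", ".join(sorted(exts)) or "none")
    else:
        print("/proc/cpuinfo not found; this check only works on Linux")
```

Passing the file’s text in as a string, rather than reading the file inside the parser, keeps the check easy to test against sample output from other machines.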


Discuss: Comment Thread


What Linux Content Should We Write?

Over the weekend, I decided to do a little maintenance on the site, and at the same time, I checked out some of our categories to see where we need to push a little more focus. Some categories need more love than others, but when I peeked inside our list of Linux content, I was pretty much shocked. The last real Linux article we had was in August of 2007, one that took a deep look at how to take full advantage of rsync in order to properly back up your machine. The information there is still completely relevant, so it’s definitely still worth checking out.

One reason I found our lack of recent Linux content a little strange is that I run Linux as my main OS, and have for just about three years. So, why I haven’t thought much about writing Linux content in recent months is beyond me, but I’d like that to change. There was a lot to write about back then, and there’s a lot more to write about now. To add to that, we have a lot of visitors running Linux, so it’s clear we need to publish more relevant content for those folks.

So, my simple question is this. If you are a Linux user, or a wannabe Linux user, what type of content would you most like to see on our site? How-tos? Top (5, 10) lists? Distribution reviews? In the past, we’ve published varied content, and every single article performed quite well, so there seems to be great interest across the board. One of the most fun articles I had to write was on game emulation under Linux, probably because it forced me to game a little bit. That article is also still worth checking out if you want to open up vast gaming opportunities, or simply want to go back and play a classic console game you grew up with.

If you have any ideas, please feel free to post them in our related thread (found below), and we’ll definitely consider all recommendations. Time availability will definitely come into play here, but I’ve enjoyed writing Linux content in the past, so I expect that fitting it into my schedule won’t be too difficult. I’ve been working sporadically on one piece of content that I hope will be posted in the next month, although I won’t talk much about it now. It should be a good one, though, and should perfectly complement what has become our number one piece of content of all time (and yes, it’s Linux-related!).


Discuss: Comment Thread


Texas “Hackers” Start Something, Hoosiers and Illinois Scream “Me Too!”

When road signs in Texas warned of Nazi zombies last week, we all collectively had a good laugh. When “hackers” pulled the same stunt a few days later in my beloved home state of Indiana, I could barely muster the energy to defend the copycat “crime”. With all due respect, Zombies pose a much greater threat to humanity than 10K hard drives. That said, we did get this gem from a passing motorist: “It’s kind of crazy. I’m totally confused. I’m kind of expecting… dinosaurs to run down the road, or something.” Totally. This comment made me cringe.

In what seems to be a “me too” prank-a-thon, hackers (and I use this term loosely) again warned of the coming zombie apocalypse, this time in Illinois yesterday, by, you guessed it, changing a road sign to read “DAILY LANE CLOSURES DUE TO ZOMBIES”. So, to map this out: the Zombies have somehow migrated north from Texas all the way to Illinois, and Raptors are running loose in central Indiana. I expect the two groups to meet somewhere around Terre Haute, Indiana. If this happens, I say we just give them the entire city.

Is this even funny anymore? These road signs are notoriously low-hanging fruit when it comes to civil disobedience. Then again, if this is what the folks of a rather affluent area of northern Indianapolis choose to do with their time, so be it. It’s far better than my choice of recreation.

Who am I kidding? This is totally awesome. Totally.

“Oh. By the way, this is VERY illegal. You have been warned.” – Me

Steve Wozniak Joins Fusion-io as Chief Scientist

This past fall, we first found out about Fusion-io, a company that aimed to essentially put an SSD on a PCI-E card. As we found out soon after, it definitely has some bragging rights. Given the incredible bandwidth of the PCI-E bus, the top-end speeds were nothing short of amazing, with the only real downside being the very high price (around $3,000).
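The “incredible bandwidth” point is easy to check with a little arithmetic. First- and second-generation PCI Express signal at 2.5 and 5 GT/s per lane and use 8b/10b encoding, so every 10 bits on the wire carry one byte of data; 3 Gb/s SATA uses the same encoding. The minimal Python sketch below computes the resulting one-direction data rates. The function names are hypothetical, and since the post doesn’t say which link width Fusion-io’s card uses, any lane count you plug in is an assumption. Packet and protocol overhead are ignored, so real throughput is somewhat lower.

```python
# One-direction data rate of PCIe 1.x/2.0 links and a SATA port,
# both of which use 8b/10b encoding (10 line bits per data byte).
# Packet/protocol overhead is ignored.

GIGATRANSFERS_PER_SEC = {1: 2.5, 2: 5.0}  # per lane, by PCIe generation
VALID_WIDTHS = (1, 2, 4, 8, 12, 16, 32)


def pcie_bandwidth_mb_s(generation: int, lanes: int) -> float:
    """Megabytes per second in one direction for a PCIe 1.x/2.0 link."""
    if generation not in GIGATRANSFERS_PER_SEC:
        raise ValueError("only PCIe 1.x and 2.0 (8b/10b encoding) are modeled")
    if lanes not in VALID_WIDTHS:
        raise ValueError("unsupported link width")
    bytes_per_lane = GIGATRANSFERS_PER_SEC[generation] * 1e9 / 10
    return bytes_per_lane * lanes / 1e6


def sata_bandwidth_mb_s(gbit_s: float) -> float:
    """SATA line rate (Gb/s) converted to MB/s after 8b/10b encoding."""
    return gbit_s * 1e9 / 10 / 1e6


if __name__ == "__main__":
    for lanes in (1, 4, 8):
        print(f"PCIe 1.x x{lanes}: {pcie_bandwidth_mb_s(1, lanes):.0f} MB/s")
    print(f"SATA 3 Gb/s: {sata_bandwidth_mb_s(3.0):.0f} MB/s")
```

For instance, a PCIe 1.x x4 link works out to 1000 MB/s per direction, against 300 MB/s for a 3 Gb/s SATA port, which is why a drive that sits directly on the PCI-E bus can post such high top-end numbers.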

Well, if you doubted the company, you might want to take another look. It was just announced that none other than Steve Wozniak has joined the company as its chief scientist. This might seem like an odd choice for a hardware company, since Steve has dealt with a lot more software than hardware as of late, but he’s had his share of hardware experience in his day. He’s a tinkerer at heart, no question.

It’s also interesting to note that Wozniak isn’t new to the company, as he’s been on its advisory board for the past few months. His choice to stay on stems from his belief that the company “is in the right place at the right time with the right technology”. Well, one thing’s for sure: the product has huge potential. Now, let’s just fast-forward to the future where we can all have one of these in our desktop PCs…

“With the revolutionary technological advances being made by Fusion-io, the company is in the right place at the right time with the right technology and ready to direct the history of technology into the 21st century and beyond,” said Wozniak. “The technology marketplace has not seen such capacity for innovation and radical transformation since the mainframe computer was replaced by the home computer. Fusion-io’s technology is extremely useful to many different applications and almost all of the world’s servers.”